Article by | Zheng Wen

Editor | Zhou Changxian

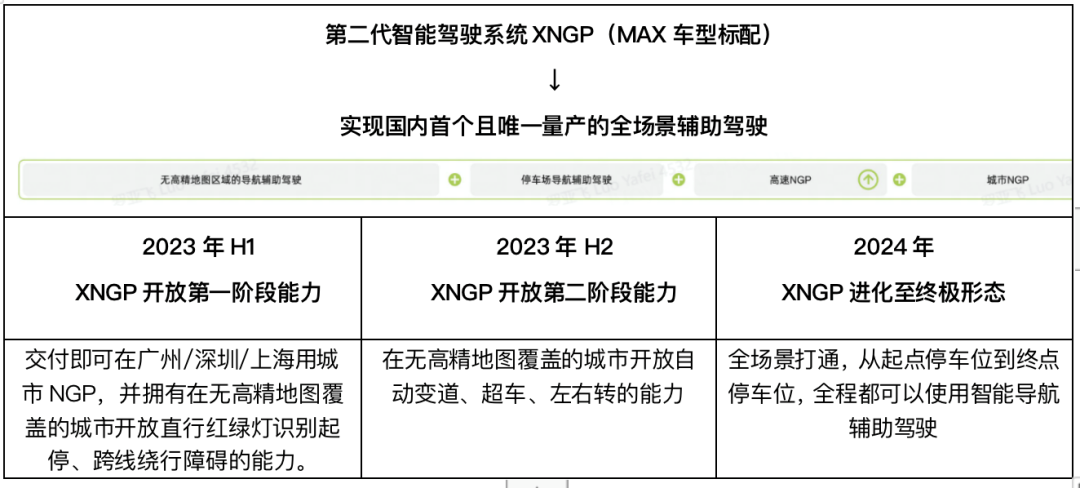

In October last year, at the annual “1024 XPeng Motors Technology Day”, He Xiaopeng introduced the XNGP Intelligent Driving Assistance System. With a brand-new technical architecture, XNGP covers city NGP and enhanced LCC, comprehensively improving capabilities in positioning, perception, prediction, and control.

Once navigation is started on a standard map, the system can provide assisted driving from the parking space at the origin to the parking space at the destination. This covers exiting a parking space, driving on city roads and highways/expressways, entering internal roads and parking lots, and finally parking in a designated spot.

In summary, the XNGP Intelligent Driving Assistance System is XPeng Motors' next-generation smart driving system, succeeding XPILOT. It is intended to provide full-scenario intelligent assisted driving and to be the final product form before autonomous driving is realized.

Wu Xinzhou, VP of Autonomous Driving at XPeng Motors, explained this “ultimate product form”: “For XNGP, in terms of software and functionality, we hope it can start providing users with an L4-like experience in some scenarios and eventually in all scenarios. That is, no intervention required.”

Of course, a vision alone is not enough. The team's phased goals must be quantified, with indicators refined to a very fine level of detail.

This is especially important when XPeng Motors is undergoing a significant organizational restructuring and facing a market decline, as new progress is needed to boost market confidence. In Q1 of this year, XPeng Motors did not perform well in the market, selling only 18,000 new vehicles. As a company that has always emphasized intelligence, XPeng must have a highly detailed strategy.

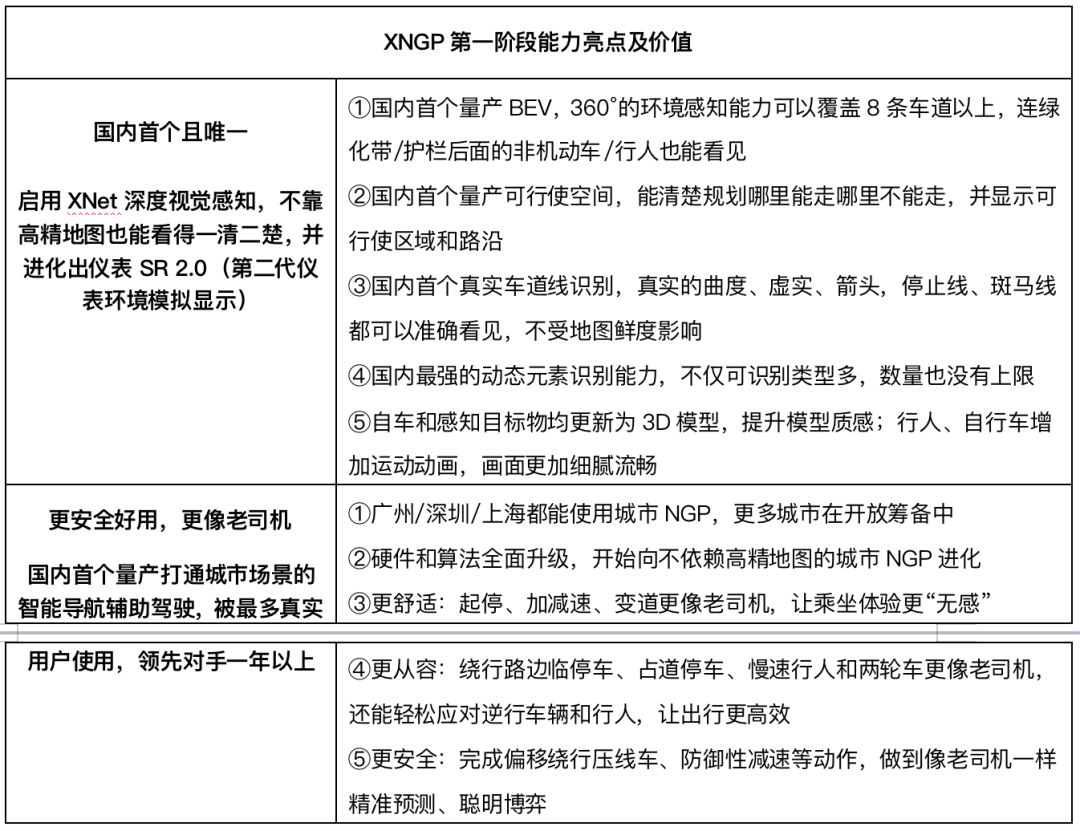

Before the first half of 2023, XNGP-equipped G9 Max models will be delivered with functions such as highway NGP, memory parking, LCC, and intelligent parking. The system will also have traffic light recognition and straight-through intersection capability in all map-less cities nationwide; city NGP will cover Guangzhou, Shenzhen, and Shanghai.

In the second half of 2023, lane changing, overtaking, and left/right-turning capabilities will be opened in most map-less cities (some high-level capabilities will be made available in phases according to user numbers and testing progress). In 2024, complete full-scenario connectivity will be achieved, realizing intelligent navigation-assisted driving capabilities from parking space to parking space.

Activate XNet Visual Perception

“Our first principle is that everything can pause, but testing cannot, as software iterations must continue.” Wu Xinzhou said that even during the difficult development period of the pandemic, the team worked hard to keep progress on track.

He revealed that after the “1024 Technology Day”, over 300 software versions were iterated, including human-machine interaction and SR (Smart Assisted Driving Environment Simulation Display), “trying our best to release the version we developed in March in the best possible state.”

At the end of March this year, Wu Xinzhou’s team fulfilled their first-phase promise.

Starting on the evening of March 31, the first-phase capabilities were rolled out in batches to G9 and P7i users, and from the following morning most users could receive the software update. For users of the higher-trim P5 P edition, city NGP continued to be offered and was extended to Shanghai in addition to Guangzhou and Shenzhen.

XPeng’s XNGP hardware system comprises 31 perception components (33 if the advanced driver-assistance map and the inertial measurement unit, or IMU, are included), paired with dual Nvidia Orin-X chips. The hardware includes 7 driving assistance cameras, 4 surround-view cameras, 1 DMS sensor, 2 LiDAR units, 5 millimeter-wave radars, and 12 ultrasonic sensors.
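The component count can be checked with simple arithmetic. The short Python sketch below only restates the figures quoted above; the dictionary labels are illustrative names, not XPeng terminology.

```python
# Component counts as listed in the article; the labels are illustrative.
perception_components = {
    "driving assistance cameras": 7,
    "surround-view cameras": 4,
    "DMS sensor": 1,
    "LiDAR units": 2,
    "millimeter-wave radars": 5,
    "ultrasonic sensors": 12,
}

# Sum of the listed perception components.
total = sum(perception_components.values())

# The article counts two more items when the driver-assistance map
# and the IMU are included.
total_with_map_and_imu = total + 2

print(total, total_with_map_and_imu)
```

The listed items add up to the 31 components stated in the article, and to 33 once the map and the IMU are counted.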

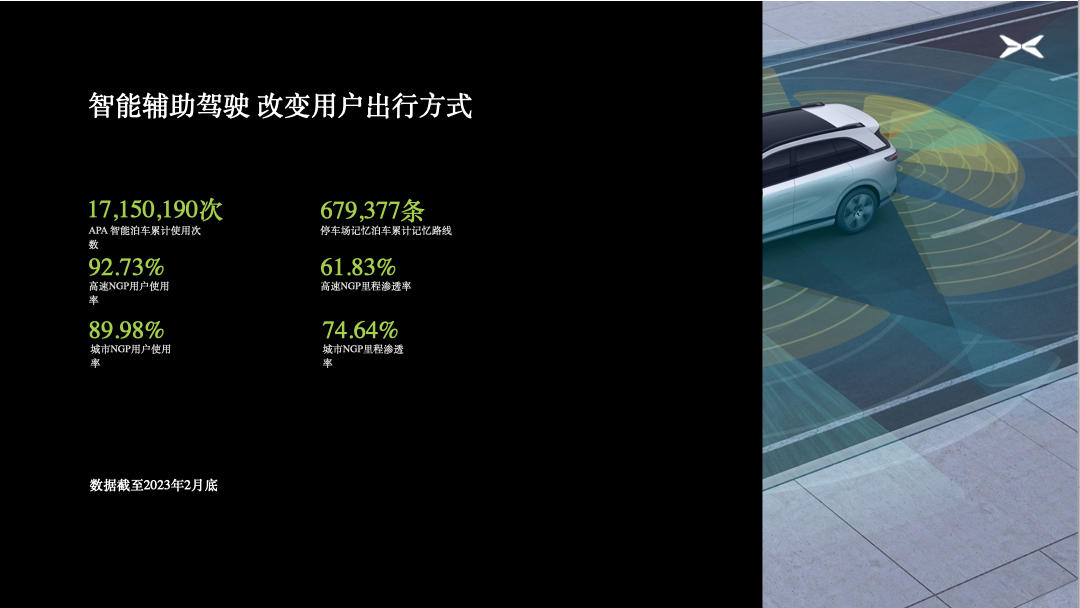

AutocarMax tested the XNGP’s interim achievements and found that overall traffic efficiency didn’t noticeably decline. Some driving behaviors resembled those of experienced drivers, such as forcing through yellow lights and consecutive lane-changing.

However, there were instances where zero intervention was not achieved, including solid line lane changes (due to consecutive changes near the broken line end), failure to distinguish between manual and ETC exits at the toll booth, sudden braking with merging traffic on the right, and following slow non-motorized vehicles on narrow roads with no markings.

Wu Xinzhou shared that, in terms of the number of interventions, internal test results showed that this generation performs ten to twenty times better than the previous one.

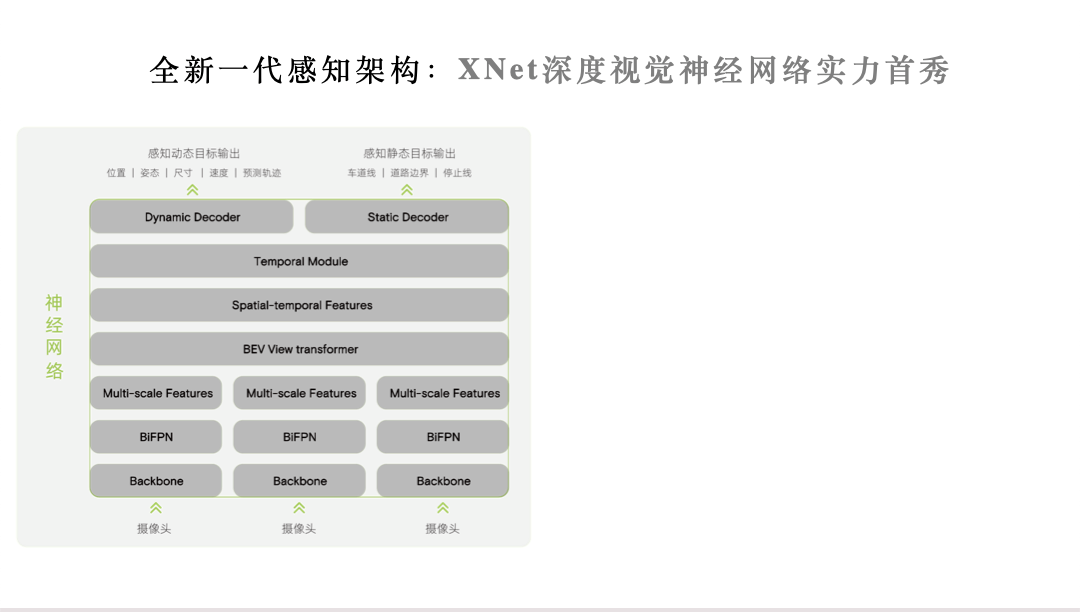

Worth mentioning are the cleanly rendered lane markings, stop lines, and zebra crossings visible on SR, as well as various geometric outputs similar to map objects. The credit for the evolved instrument cluster display, SR 2.0, belongs to the XNet neural network, which is the most notable change in this phase of achievements. The XNet deep vision neural network is the first mass-produced BEV perception architecture for domestic vehicles.

BEV (bird’s-eye-view) perception performs 3D object detection in a bird’s-eye view using cameras mounted at multiple angles. A neural network transforms image information from image space into BEV space and adds a temporal dimension.
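To give a feel for what moving from image space to BEV space means, here is a minimal Python sketch of the classical flat-ground projection (inverse perspective mapping). It is not XNet: XNet learns the transformation with a neural network and fuses frames over time, neither of which is shown here. The camera parameters, grid size, and function names are all hypothetical values chosen for illustration.

```python
from typing import Optional, Tuple

# Hypothetical pinhole-camera parameters, chosen only for illustration.
FX, FY = 1024.0, 1024.0   # focal lengths in pixels
CX, CY = 640.0, 360.0     # principal point in pixels
CAMERA_HEIGHT_M = 1.5     # camera height above a flat road, in meters


def pixel_to_ground(u: float, v: float) -> Optional[Tuple[float, float]]:
    """Map an image pixel to (lateral, forward) meters on a flat ground plane.

    Assumes a forward-facing camera with zero pitch and roll. Returns None
    for pixels on or above the horizon, whose rays never reach the ground.
    """
    ray_x = (u - CX) / FX   # ray direction, x to the right
    ray_y = (v - CY) / FY   # ray direction, y pointing down
    if ray_y <= 0:
        return None
    scale = CAMERA_HEIGHT_M / ray_y   # forward distance to the ground hit
    return (scale * ray_x, scale)


def ground_to_bev_cell(lateral_m: float, forward_m: float,
                       cell_m: float = 0.5,
                       grid_width: int = 80) -> Tuple[int, int]:
    """Convert ground coordinates to (row, col) in a BEV grid.

    Column grid_width // 2 lies directly ahead of the camera; rows count
    cells forward from the camera.
    """
    col = int(lateral_m // cell_m) + grid_width // 2
    row = int(forward_m // cell_m)
    return (row, col)


point = pixel_to_ground(896.0, 488.0)
if point is not None:
    print(point, ground_to_bev_cell(*point))
```

A purely geometric projection like this breaks down on slopes and for objects above the road surface, which is one reason production systems learn the image-to-BEV mapping instead.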

Traditional CNN-based perception architecture provides vehicles with a series of spatial information frames, which rely on high-precision maps for continuity.

However, high-precision maps face challenges such as qualification audits and re-audits, extensive mapping and surveying, frequent road information updates, and heavy policy restrictions on sensitive data, all of which hinder their practical application.

Consequently, companies have begun to explore solutions independent of high-precision maps. Tesla popularized the BEV perception architecture, and now the global industry is heading in this direction. By incorporating the time dimension and building its own spatiotemporal representation, BEV perception sees a continuous world, which serves as the foundation of mapless driving solutions.

“XNet enables us to truly break through the limitations of high-precision maps, or at least reduce our dependence on them,” said Wu Xinzhou. “This is why, in the second half of the year, we can launch XNGP in dozens of mapless cities.”

In fact, the current capabilities of XNet are just the beginning. At present, XNet can recover the geometry (length, width, height, and relative position) of static road objects such as the ground, guardrails, traffic lights, trees, and buildings. However, further effort is needed to understand semantics, such as slowing down for zebra crossings and detouring around a line of traffic cones.

Wu Xinzhou explained, “The core is to use it in relatively complex processes, such as lane change and turns. It requires semantic understanding, including the interconnectivity between different road segments.”

Wu Xinzhou is also very confident about the future capabilities of XNet.

“It is to truly transform the results of visual networks into the high-precision maps we are familiar with in daily life.” He said that, so far, he has not seen any insurmountable issues.

Hard indicators for “Commercialization”

Inside XPeng’s intelligent driving team, there has always been tension around turning engineering products into commercial goods. For smart driving products, translating abstract technology into practical and commercially viable merchandise is far harder than most researchers expect.

“Urban intelligent driving” is the top-priority scenario. Data shows that city roads account for 71% of users’ total driving mileage and up to 90% of their driving time. Meanwhile, only 25% of users drive on highways daily, while 100% drive on city roads.

Urban NGP is hundreds of times more difficult than highway scenarios. The complexity of urban traffic, the high number of participants, the diversity of city roads, and the differences in road conditions between cities all pose enormous challenges to implementing autonomous driving in urban settings.

“We have always said that implementing assisted driving in the city is not about willingness, but capability. We believe that it will take time to gradually improve. However, from an assisted driving perspective, we think it will quickly approach a score of 80, even 85,” Wu Xinzhou said.

Undoubtedly, as the number of cities increases, so does the amount of engineering work.

Taking traffic lights as an example, Shijiazhuang has heart-shaped lights, while Tianjin has progress bar-like lights… Wu Xinzhou also shared an interesting case: during the initial trial in Shanghai, numerous red lanterns on the streets posed a significant challenge for traffic light detection. Such situations demand higher generalization capabilities and present engineering challenges that need to be addressed.

“I expect that initially, there will be a 5% or even 10% difference between each new city we implement our technology in. However, as we expand to five, ten cities, this difference will gradually converge.”

Next, Wu Xinzhou’s team has several milestones to conquer, including map-less navigation, point-to-point, and the continuous evolution towards full autonomy.

Additionally, there is the challenge of cost reduction. Wu revealed that He Xiaopeng has called for a 50% cost reduction in the intelligent driving segment: “The boss said it must be done.”

“Each of these functionalities presents its own difficulties. However, we are particularly confident because our R&D system has reached a steady state, and the level of cooperation between our various teams is exceptionally smooth.” Wu Xinzhou emphasized earnestly, “So, although the workload is large, it’s not difficult.”

Side note:

In early April, Yi Chen, a professor in the Department of Electrical and Computer Engineering at Duke University, reposted a statement from a seasoned industry practitioner on his Weibo:

Imagine AI as a child: Western AI education follows an elite path, with families investing heavily in education from birth until the child obtains a Ph.D. Upon graduation, the AI immediately amazes everyone with its powerful capabilities.

Chinese AI, on the other hand, follows a utilitarian education route characterized by survival skills. At the age of 15, the AI is already pressured to start making money for the family, focusing on marketable skills.

Take Google’s AlphaGo, Boston Dynamics’ robot dogs, or ChatGPT as examples. All three share similar characteristics: 1) Quietly burning money for many years; 2) Revealing astounding achievements; 3) Earning money through technology infrastructure, with no direct profit model visible.

In contrast, look at China: In 2014, I joined Baidu’s DuMi team (the precursor to Xiaodu). As soon as the robot mastered basic conversation, it began seeking profitable applications, leading to Xiaodu AI and its derived home appliances. In 2018, after joining Alibaba’s DAMO Academy, the conversation robots transitioned to Ali Xiaomi customer service robots as soon as they could speak. Initially, self-driving technology had only learned to navigate open roads at low speeds, recognizing routes and obstacles, and soon began to explore autonomous vehicle deliveries. In 2022, I joined ByteDance, where NLP-driven robot customer services were being developed. I’ve spent a decade in AI at the top three Chinese companies; however, at each company, it’s around two years before the technology and products become subordinated to business goals.

Recently, ChatGPT gained significant attention, with many consulting companies asking me to give talks. They don’t focus on China’s current technical advancements or the key differences between China and the US; instead, their primary concern is how AI can make money and which business opportunities exist.

Nowadays, Chinese AI practitioners face a dilemma with their bosses and investors, akin to a young person from a village eager to pursue higher education. At every turn, they encounter people questioning the value of their ambitions: “What’s the point of spending so much money on a Ph.D. if it doesn’t make money? It’s better to be like Niu Erwa, who built a new house in just three years by working in a factory!”

When asked, “Why does Western AI seem stronger than ours?” my public response, guided by professional pride and company loyalty, is “Chinese AI focuses more on business applications and commercialization capabilities.”

However, in the quiet of the night, my inner voice confesses, “One’s fate is sealed in the womb, and robots cannot escape this either.”

Objectively speaking, this passage may be emotionally charged, but it highlights some critical issues.

It vividly presents the stark contrast between the developmental paths of AI in China and abroad. The market is indeed the primary driving force behind technological advancements, and emerging technologies cannot avoid market-driven challenges. However, overreaching for fast results may ultimately backfire.

To some extent, the challenges encountered by China’s AI development mirror the struggle to implement advanced driver-assistance systems.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.