AI Technology Promotes the Development of the Autonomous Driving Industry

Tesla’s supercomputer center has nearly 20,000 GPUs, which immediately improves the efficiency of autonomous driving training and maximizes the development efficiency of autonomous driving systems.

NIO’s Intelligent Computing Center has increased the inference speed by six times and saved 24% of resources; model development efficiency has improved by 20 times, helping to shorten the time-to-market of autonomous driving vehicles.

Continental Group’s high-performance computing cluster has shortened the development cycle from weeks to hours, making it possible to deliver autonomous driving within its mid-term commercial plans; shorter machine learning training times have accelerated the pace at which new technologies enter the market.

…

It is now beyond dispute that companies with long-term plans in autonomous driving, whether new players in the industry, traditional brands, or technology suppliers, are all building their own supercomputer centers to secure stable computing resources, shorten development cycles, and accelerate the launch of autonomous driving products.

Conversely, without a supercomputer center, the training of autonomous driving systems slows down significantly, and the gap between autonomous driving companies becomes even more apparent.

Recently, NVIDIA and IDC jointly released “Reality + Simulation, Enabling Autonomous Driving with Supercomputing Power”. The white paper reviews the current state of autonomous driving development, explores the business needs and challenges in the development process, and analyzes how automakers and technology suppliers can meet the computing power needs of autonomous driving development by building supercomputer centers, enabling efficient development and deployment.

The following text analyzes and interprets “Reality + Simulation, Enabling Autonomous Driving with Supercomputing Power”, lifting the veil on supercomputing power for autonomous driving.

How can we reduce the massive costs of time, people, and resources involved in the automotive industry? With a large amount of data, most problems can be solved through data-driven software algorithm upgrades, reducing the time, manpower, and material costs of research and development.

As stated in the white paper, the early development of autonomous driving systems depends on large amounts of road environment data, which are used to form algorithms spanning perception, decision-making, planning, and control. Even after early development, data must be fed in continuously to keep training and verifying the algorithms, so that they can iterate and autonomous driving can be deployed sooner. Training autonomous driving algorithms requires a high level of computing power within a limited amount of time. This computing power is needed not only to run, update, and iterate models, but also to support the construction and rendering of scenarios in simulation testing.

1.1 AI Supercomputing Center Provides Computing Power Support for Autonomous Driving System Training

To train and verify autonomous driving systems using data, computing power is required. Computing power directly affects development efficiency and determines the product’s time to market.

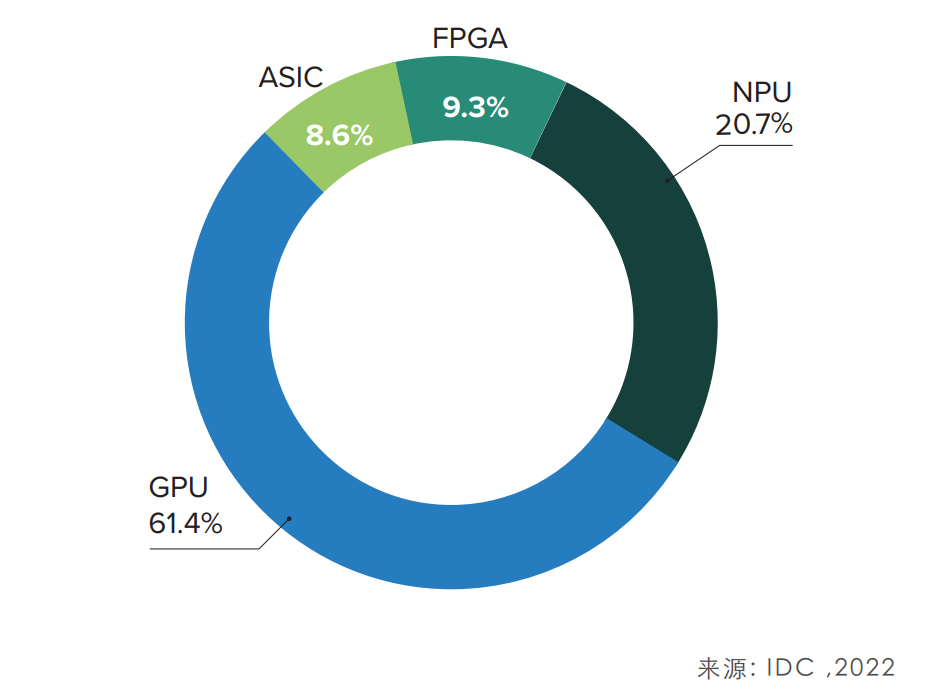

The white paper points out that the data center carries the enormous computing power required for training autonomous driving systems, providing important hardware infrastructure to support artificial intelligence computing. The underlying hardware technology path includes GPU, ASIC, FPGA, and NPU. Through research, IDC found that the hardware infrastructure for training autonomous driving algorithms in the automotive industry is mainly based on GPUs, supplemented by others.

In addition, the development of GPUs has led to a large number of matching software and services, including development tools and platforms, greatly reducing the time and cost for developers to deploy hardware facilities in testing and optimization, enabling end-users in the automotive industry to quickly deploy computing power.

Many readers may wonder what GPUs and matching software and services are used in the industry’s data centers. Here, we introduce the NVIDIA DGX SuperPOD integrated solution.

To meet the computing power demands of AI models and help companies build AI data centers, NVIDIA launched the DGX SuperPOD cloud-native supercomputer in April 2021, providing users with a one-stop AI data center solution for large-scale AI computing needs.

SuperPOD is a reference architecture that supports rapid expansion from a small scale and continual software optimization, and its “turnkey” delivery removes much of the complexity of building a data center. It helps autonomous driving customers deal with challenging AI and high-performance computing (HPC) workloads and enables them to focus more time and effort on algorithm and software iteration rather than on building data centers.

The integrated solution includes 20 DGX GPU servers, high-speed storage, Mellanox InfiniBand (IB) networking, software, and scheduling platforms, together with services provided directly by NVIDIA, such as installation and deployment, tuning, pre-training, and project technical customer managers.

1.2 Digital Twin Technology Enhances Simulation Testing

It is well known that there are two ways to collect real vehicle data: one is to rely on test cars, and the other is to rely on production cars that transmit data. However, for companies that are just beginning to develop autonomous driving, both methods are difficult, because they do not yet have enough test vehicles for data collection or production cars that can transmit data.

When there are not enough vehicles for real-world testing, and not all potential corner cases can be tested because of the cost and safety limits of real-world testing, virtual simulation can address part of the cost and scenario-diversity requirements. Simulating long-tail scenarios at scale requires sufficient computing power from data centers. Mapping simulated scenes back to reality also requires enormous computing power.

Digital twin technology can enhance the authenticity of virtual environments in simulation testing. First, in mapping the simulation layer to the real layer, rendering technology brings the rendered pixels closer to physical reality, and computing power is used to simulate terrain, environment, weather, and even lighting so that the rendered pixels remain consistent with reality. Second, a physics simulation engine makes physical phenomena in the virtual environment obey the physical laws of real-world scenarios, ensuring the accuracy of the physical attributes of objects in the virtual world.

So, what tools do autonomous driving companies use for simulation testing? One reference point is NVIDIA DRIVE Sim. It is built on Omniverse (a platform built by NVIDIA for the metaverse) and has the following features:

Cloud-native

Cloud-native means it is built for an enterprise’s internal cloud: it runs on a large-scale cluster in the data center, with data-center-level management, task distribution, and result aggregation.

Scenario-based

It is built around scenarios and continuously generates random scenarios to uncover potential problems in autonomous driving.

Scalability

As development volume grows and more corner cases emerge, the demand for cluster capacity increases, so the platform needs to support deployments ranging from workstations to data centers.

Three-stage launch

The software is launched in three stages: first, synthetic data generation; second, software-in-the-loop simulation; and finally, hardware-in-the-loop simulation, covering the end-to-end needs of customers.

Advantages of DRIVE Sim: first, it is fast, because a large amount of synthetic data is available and testing can start even before any data has been collected; second, it is accurate, because scenes are labeled by machine and can be viewed from an omniscient (“God’s-eye”) perspective; third, it is diverse and can simulate various weather conditions such as rain, fog, and snow; fourth, it is low-cost, because synthesizing simulated data greatly reduces data acquisition costs.
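To make the scenario-based idea more concrete, the following is a minimal Python sketch of randomly sampling simulation scenarios. It does not use the DRIVE Sim or Omniverse APIs; the `Scenario` fields, value ranges, and function names are all hypothetical and chosen only for illustration. The weather values follow the conditions mentioned above, with “clear” added as an assumption.

```python
import random
from dataclasses import dataclass
from typing import List

# Weather conditions mentioned in the article, plus "clear" (an assumption).
WEATHER = ["clear", "rainy", "foggy", "snowy"]


@dataclass(frozen=True)
class Scenario:
    """A hypothetical scenario description; not a DRIVE Sim data type."""
    weather: str
    time_of_day_h: float   # hour of day, 0.0 to 24.0 (illustrative)
    num_vehicles: int      # surrounding vehicles (illustrative range)
    num_pedestrians: int   # pedestrians in the scene (illustrative range)


def sample_scenario(rng: random.Random) -> Scenario:
    """Draw one random scenario from the illustrative parameter ranges."""
    return Scenario(
        weather=rng.choice(WEATHER),
        time_of_day_h=round(rng.uniform(0.0, 24.0), 1),
        num_vehicles=rng.randint(0, 30),
        num_pedestrians=rng.randint(0, 15),
    )


def generate_scenarios(n: int, seed: int = 0) -> List[Scenario]:
    """Generate n scenarios; a fixed seed makes the batch reproducible."""
    rng = random.Random(seed)
    return [sample_scenario(rng) for _ in range(n)]


if __name__ == "__main__":
    for scenario in generate_scenarios(3, seed=42):
        print(scenario)
```

Seeding the generator is a deliberate choice in this sketch: when a randomly generated scenario exposes a problem, the same scenario can be regenerated and replayed after the algorithm is updated.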

Status of the Autonomous Driving Enterprise Supercomputing Center

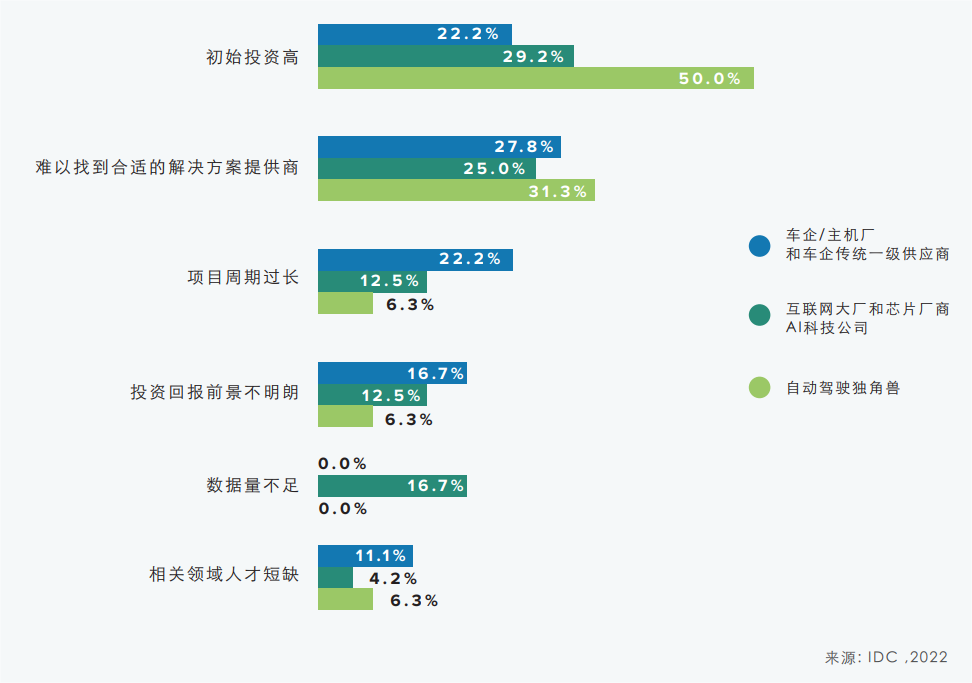

Computing power for autonomous driving algorithms requires continuous investment. Through research, IDC found that the most common problem in the industry when building AI computing centers is that the initial investment is too high, which is particularly difficult for unicorns in the autonomous driving industry. Another common problem is the difficulty of finding suitable solution providers. In addition, long project cycles are a common problem for car companies and traditional tier-one suppliers.

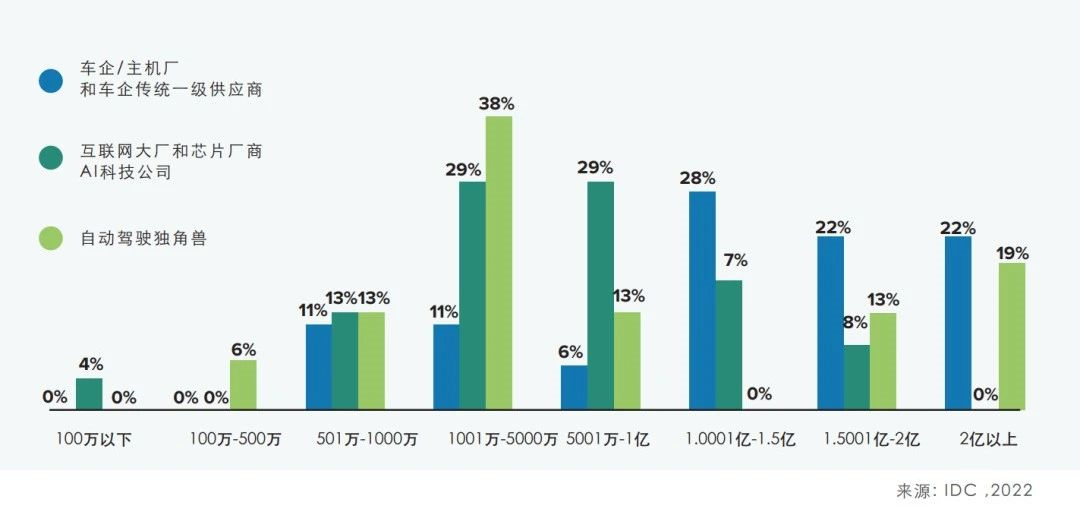

Regarding investment, both OEMs and Tier 1 suppliers generally budget more than 100 million yuan for building AI computing centers, and over 20% of them invest more than 200 million yuan. Tech companies typically invest tens of millions of yuan, with some cases exceeding 100 million yuan. Investment in AI computing centers puts significant financial pressure on development teams across the industry.

Regarding the initial investment in building AI computing centers, NVIDIA believes that the initial cost is relatively high, but the marginal cost will converge as the scale of the center expands. On the other hand, other alternatives may have lower initial investment barriers, but the marginal cost will gradually become uncontrollable in the later stages. In this case, autonomous driving developers need to balance their own investments based on long-term plans in the autonomous driving field, in order to make reasonable plans.
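The trade-off described above can be illustrated with a simple break-even calculation. The sketch below assumes a linear cost model (a fixed initial cost plus a constant cost per unit of compute), which is a simplification: the white paper only states that one option has a higher initial cost with converging marginal cost and the other a lower initial cost with growing marginal cost. All figures and function names are hypothetical.

```python
def total_cost(fixed: float, per_unit: float, units: float) -> float:
    """Total cost under a linear model: initial cost plus usage cost."""
    return fixed + per_unit * units


def break_even_units(fixed_own: float, unit_own: float,
                     fixed_alt: float, unit_alt: float) -> float:
    """Usage level at which a self-built center and an alternative cost the same.

    Assumes the self-built center has the higher fixed cost and the lower
    per-unit cost; otherwise no meaningful break-even point exists.
    """
    if unit_alt <= unit_own:
        raise ValueError("alternative must have a higher per-unit cost")
    return (fixed_own - fixed_alt) / (unit_alt - unit_own)


if __name__ == "__main__":
    # Hypothetical figures in millions of yuan; not taken from the white paper.
    fixed_own, unit_own = 100.0, 0.5   # self-built center
    fixed_alt, unit_alt = 10.0, 2.0    # lower-barrier alternative

    units = break_even_units(fixed_own, unit_own, fixed_alt, unit_alt)
    print(f"break-even at {units} units of compute")
    print("own:", total_cost(fixed_own, unit_own, units))
    print("alt:", total_cost(fixed_alt, unit_alt, units))
```

Under these made-up numbers, the self-built center becomes cheaper once usage passes the break-even point, which is why the expected scale of long-term compute demand matters when choosing between the two.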

2.2 Finding a Suitable Solution Provider for Building AI Computing Centers

Regarding this issue, the white paper pointed out that the building and operation process of AI computing centers is complex, and requires a high level of technical expertise. It is necessary to simultaneously consider different parts such as GPU clusters, storage, high-speed networks, software scheduling, and data center management. Each of these parts involves a large number of components, which not only increases design complexity, but also has independent delivery cycles, leading to significant uncertainty in deployment time. This presents a significant challenge for teams without building experience. At the same time, the operation of the computing center requires guidance from an experienced team to maintain maximum operational efficiency.

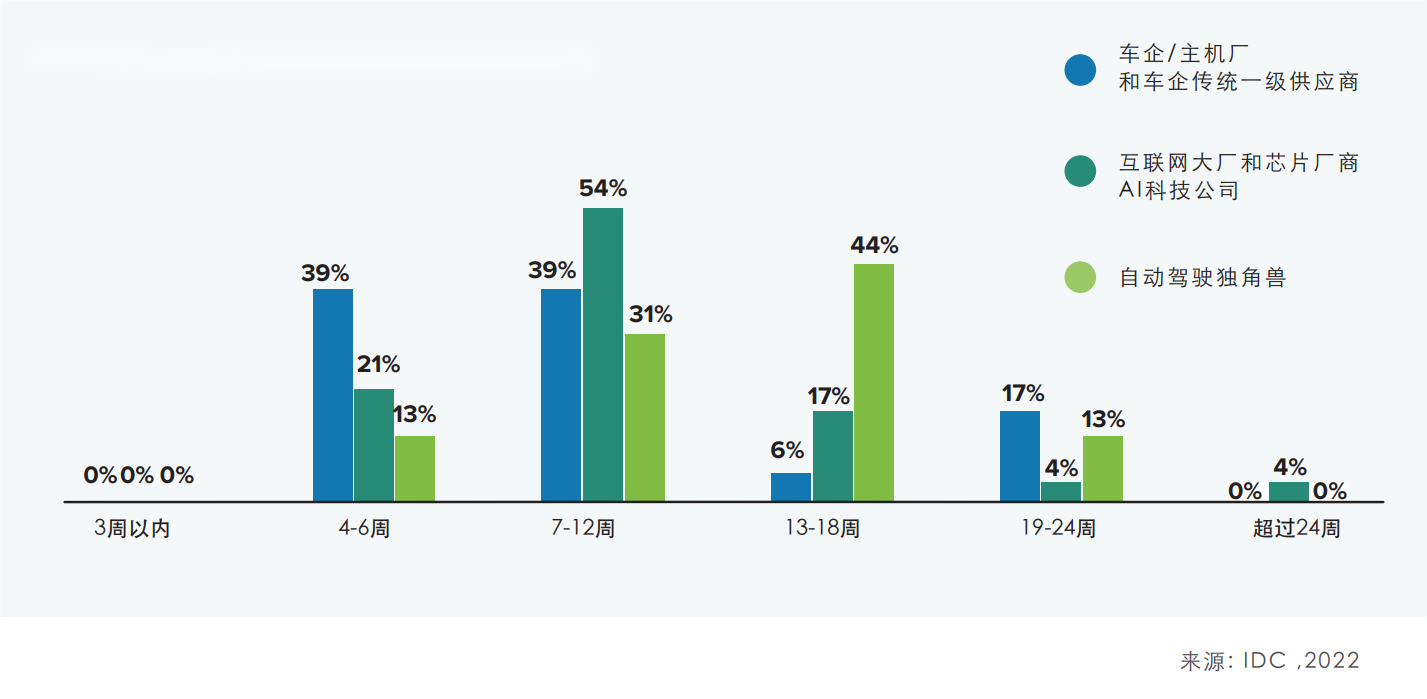

2.3 The Long Project Cycle of Building AI Computing Centers

Currently, building an AI computing center generally takes more than one month, and in most cases close to three months, leaving considerable room for optimization. NVIDIA believes that a mature solution can shorten the construction time, helping autonomous driving products reach the market first and giving them a strong first-mover advantage.

## 5 Suggestions from IDC to the Industry

To help computing resources support the development of autonomous driving systems more effectively, IDC suggests:

- The selection of data center chips and network construction involves specialized knowledge in the IT field, and companies need to build up the relevant knowledge.

- Data center solution providers should launch an integrated full-stack AI solution, providing not only devices but also construction services and after-sales service.

- A long-term plan for computing power should be developed based on the available computing power solutions, the computing power requirements of the company’s own autonomous driving solutions, and capital investment.

- After the supercomputing center is built, the stability of its computing power becomes an issue. Computing power operators need a proactive plan for handling emergencies, rather than reacting passively to them.

- Industry development depends on the progress of the industry ecosystem. To support cooperation among all parties in the ecosystem, computing power providers need to provide an open platform that facilitates collaboration.

In the development of autonomous driving technology, computing power has become one of the key forces driving development efficiency and the rapid deployment of autonomous driving products. Carmakers and technology providers with long-term plans in autonomous driving need to develop a long-term plan for computing power and consider hardware, network, software, and service factors together, in order to build a supercomputing center that suits them, shorten development time, reduce costs and risks, and accelerate the deployment of autonomous driving products.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.