Author: Zhu Yulong

A few days ago, Nissan widely promoted its next-generation ProPilot demo vehicle fitted with Luminar LiDAR, which is quite interesting. Unlike the other two major Japanese carmakers, Nissan is developing its own advanced AD/ADAS technology, iterating on the existing ProPilot 2.0 by adding Luminar LiDAR. Starting from Nissan's situation, I will also touch on the different approaches taken by the three Japanese carmakers.

- Nissan

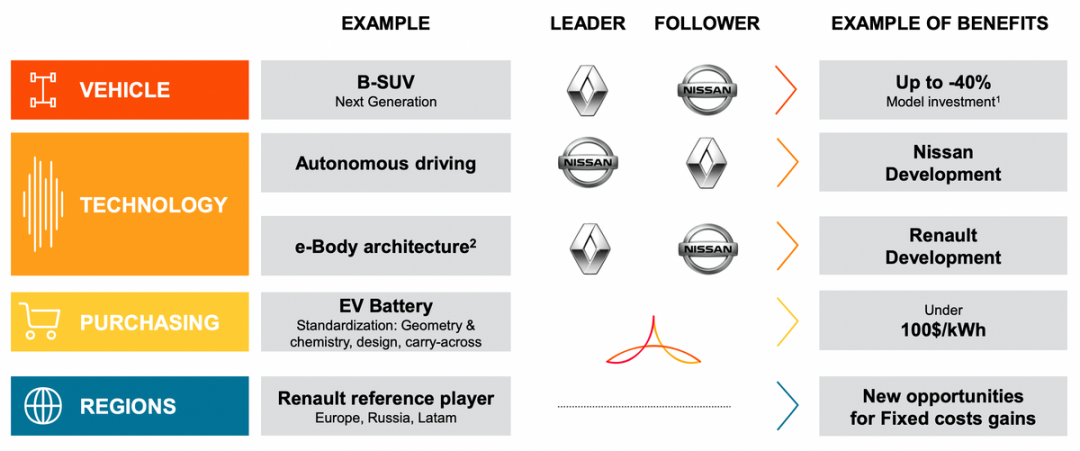

Nissan still plays an important role in the Renault-Nissan-Mitsubishi (RNM) Alliance. The Alliance, which appeared at Qualcomm's autonomous-driving chip launch event, previously worked with Waymo, but that cooperation did not move forward particularly quickly. Now that Renault is beginning to sell most of its Nissan shares, the two companies have divided the work: Nissan and Renault are developing the HPC hardware and software for automated driving within the EE architecture, with Renault leading the EE architecture and Nissan leading automated driving.

- Toyota

Toyota's main bet is now Woven Planet Group, with its investments in Aurora and Pony.ai as backups. Toyota's in-house team focuses on L2+ development as a whole. In fact, Toyota will need quite a long time to sort out its cabin. The business of pouring huge capital into the "soul" of the car may depend more on US research teams.

- Honda

The development of the Honda Legend looks more like a last stand by a Japanese automated-driving team: it is hard to keep up on both cost control and the pace of control-system iteration. Honda therefore remains fully invested in Cruise alongside GM, relying on the two companies' combined capital to support an independent automated-driving company.

Overall, if RNM wants to catch up with the leading companies in driver-assistance technology, the key is sustained investment. Yet Renault (which has lost its assets and sales in Russia) and Nissan are both currently bleeding heavily in financial terms.

Note: As a homebody living in Shanghai, I have been stuck at home for the past two months. Still, I hope readers can enjoy this five-day Labor Day holiday and have the freedom to choose not to stay at home. Happy holidays to all readers! You have the right to go out and have fun!

Development of Nissan's driver-assistance system

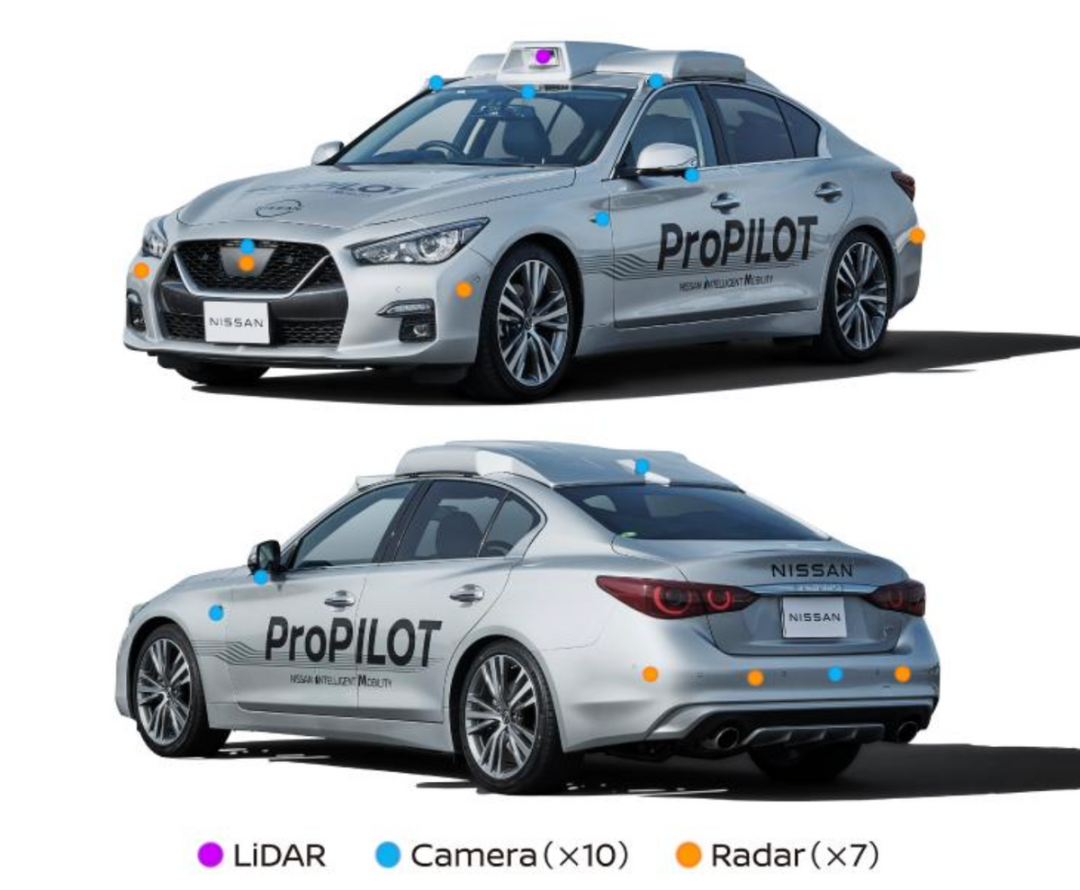

Nissan's previous ProPilot relied on vision algorithms from an outside supplier, and Nissan's own work focused mainly on integration. This time, however, Nissan has integrated Luminar LiDAR into the perception configuration of the next-generation ProPilot demo vehicle:

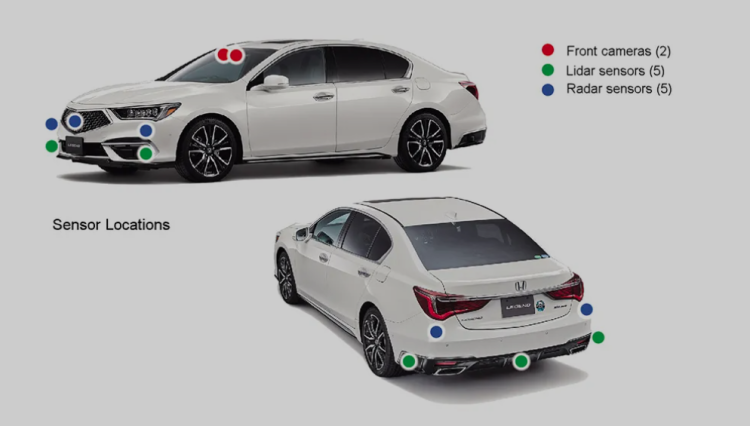

(1) Ten cameras

This installation method resembles the common practice of mounting perception kits on L4 vehicles, which currently looks a bit like fitting a roof sign to a taxi. For comparison, ProPilot 2.0 is equipped with eight cameras (three front-facing cameras, four AVM cameras, and one DMS camera). The demo vehicle's cameras are arranged as follows:

- One front-facing camera covers a wide field of view in front of the vehicle.

- Two front-facing cameras are mounted on the left and right sides of the roof (repositioned).

- Two rear-facing cameras are mounted on the left and right fenders (newly added).

- One rear-facing camera is mounted on top of the antenna (newly added).

Together, these six cameras cover a 360-degree view around the vehicle, three more than before.

In addition, four fisheye cameras provide 360-degree surround coverage (the number is unchanged), mounted at the front, the rear, and on the left and right side mirrors (the mirror units may be integrated as part of adopting a camera monitor system, or CMS).

(2) Seven millimeter-wave radars (an increase of two from the original)

Judging from the layout, there is one long-range radar, four short-range radars at the four corners of the vehicle, and two long-range radars newly added at the rear. The purpose of the two rear radars is unclear; they may be used to measure the speed of vehicles approaching from behind during lane changes.

(3) One LiDAR (newly added)

The LiDAR is a Luminar unit mounted at the front of the roof.

In addition, there are:

- One high-precision map module

- Nine ultrasonic sensors, with no noticeable improvement in functionality.
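The sensor counts above are spread across several lists, so a minimal Python sketch that records the demo vehicle's suite as data and derives the before/after totals can make the arithmetic easier to check. `SensorGroup` and `totals` are hypothetical names used only for illustration. The per-group counts come from the text above and the "added" figures follow its "newly added" notes; the ultrasonic count is assumed unchanged because the article reports no improvement, and the map module is left out because it is not a sensor.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorGroup:
    kind: str       # "camera", "fisheye", "radar", "lidar" or "ultrasonic"
    position: str   # mounting position as described in the article
    count: int      # units on the demo vehicle
    added: int = 0  # units described as newly added versus ProPilot 2.0


# Hypothetical encoding of the demo vehicle's suite, taken from the lists above.
DEMO_SUITE = [
    SensorGroup("camera", "front, wide field of view", 1),
    SensorGroup("camera", "front, left/right roof (repositioned)", 2),
    SensorGroup("camera", "rear, left/right fenders", 2, added=2),
    SensorGroup("camera", "rear, top of antenna", 1, added=1),
    SensorGroup("fisheye", "front, rear and side mirrors", 4),
    SensorGroup("radar", "long-range", 1),
    SensorGroup("radar", "short-range, four corners", 4),
    SensorGroup("radar", "long-range, rear", 2, added=2),
    SensorGroup("lidar", "Luminar, front of roof", 1, added=1),
    SensorGroup("ultrasonic", "around the body (assumed unchanged)", 9),
]


def totals(suite):
    """Return (current, previous) unit counts per sensor kind."""
    current, previous = Counter(), Counter()
    for group in suite:
        current[group.kind] += group.count
        # Previous count = current count minus the units the article calls new.
        previous[group.kind] += group.count - group.added
    return current, previous


if __name__ == "__main__":
    now, before = totals(DEMO_SUITE)
    print("total cameras:", now["camera"] + now["fisheye"])
    for kind in sorted(now):
        print(f"{kind}: {before[kind]} -> {now[kind]}")
```

Run as a script, this reports ten cameras in total (six perception cameras plus four fisheye), radars going from five to seven, and LiDAR going from zero to one, which matches the figures in the headings above.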

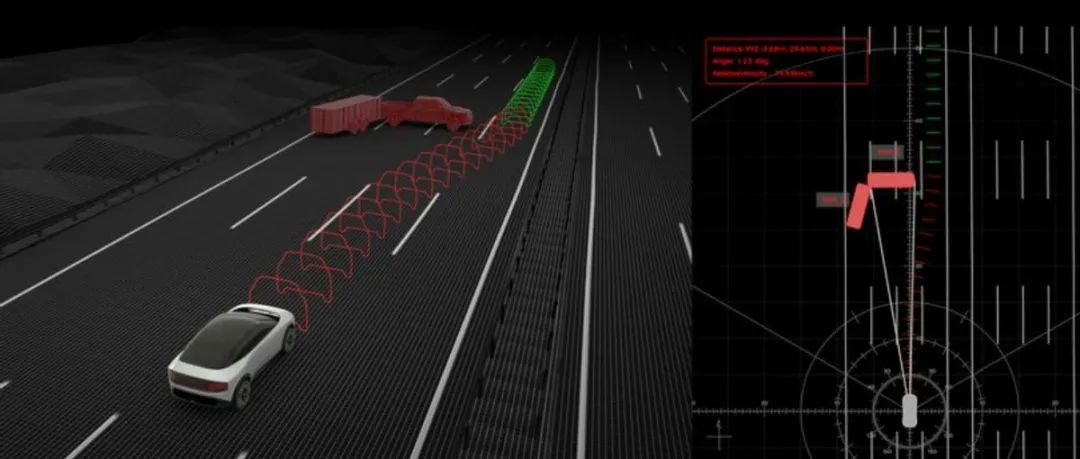

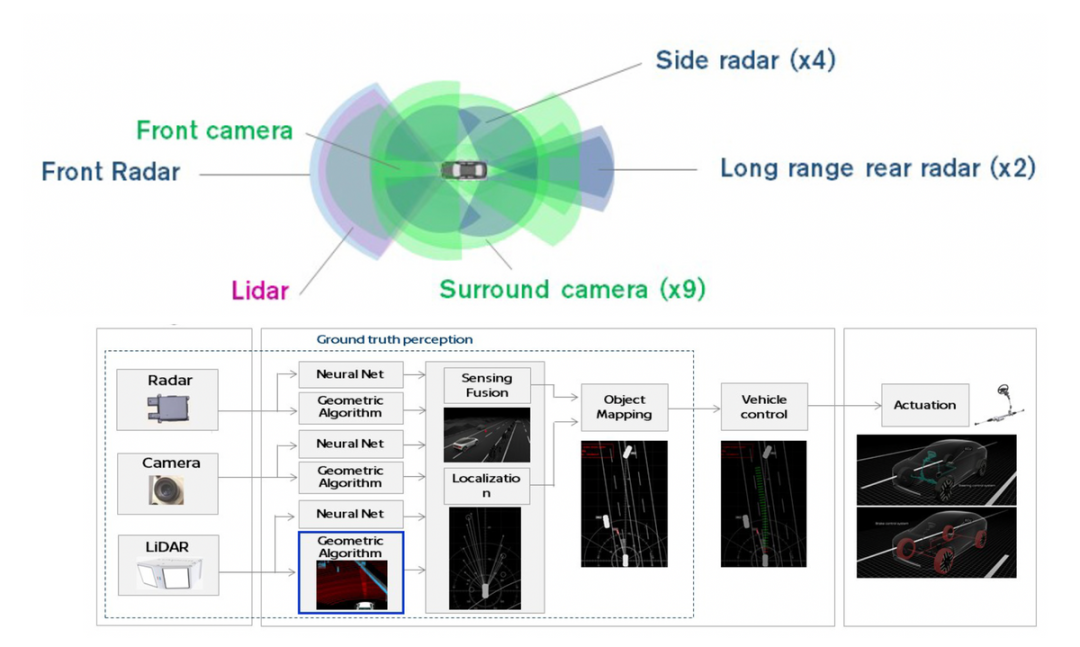

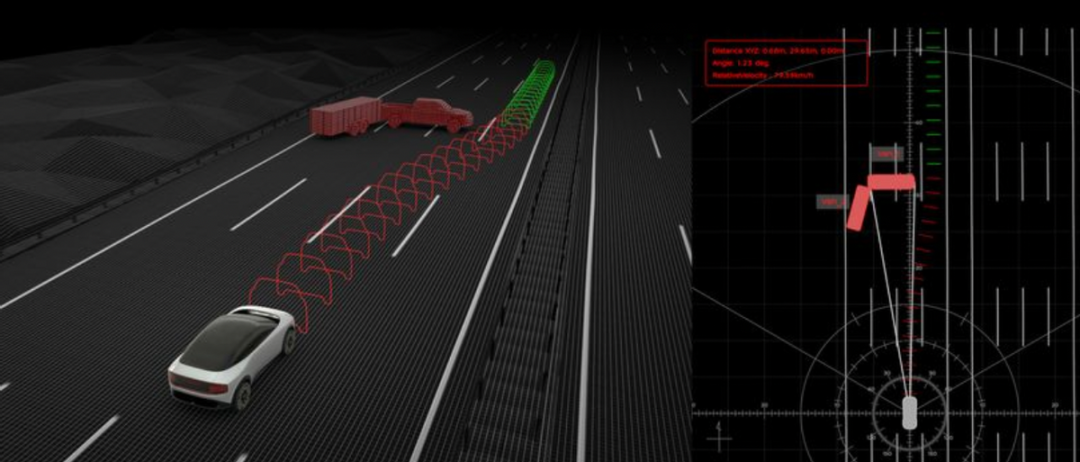

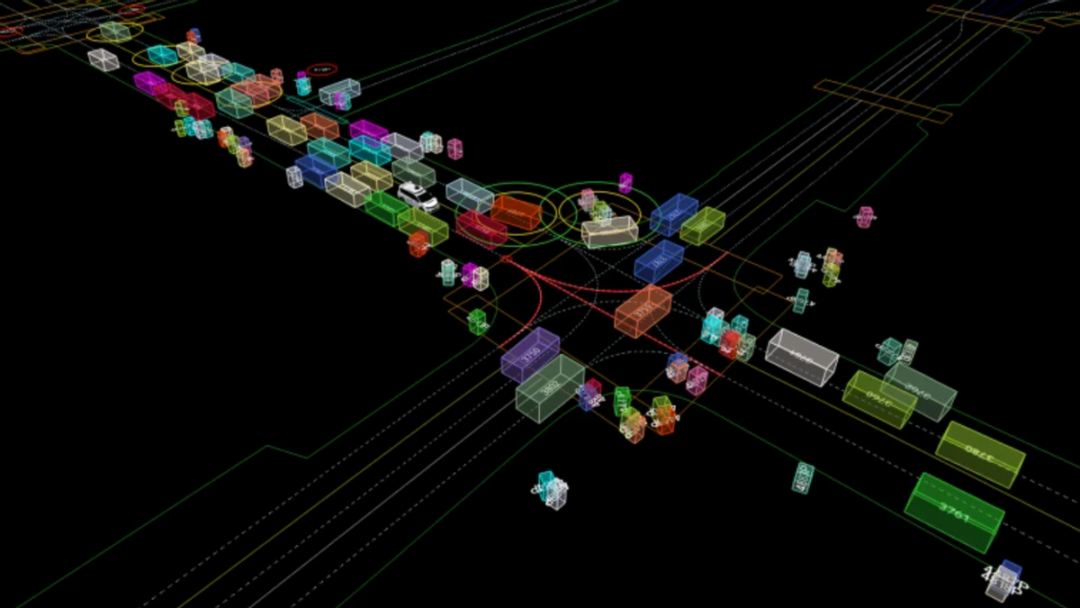

The diagram below gives a clearer picture: Nissan surrounds the vehicle with a ring of cameras and fuses radar, camera, and LiDAR data in its algorithms to achieve "ground truth perception." Nissan has not yet disclosed how the computing platform is implemented; it may adopt Qualcomm's Snapdragon Ride in the future.

Note: Qualcomm and Renault announced their cooperation first; given the need for a common hardware platform across the Alliance, Nissan may already have made its choice.

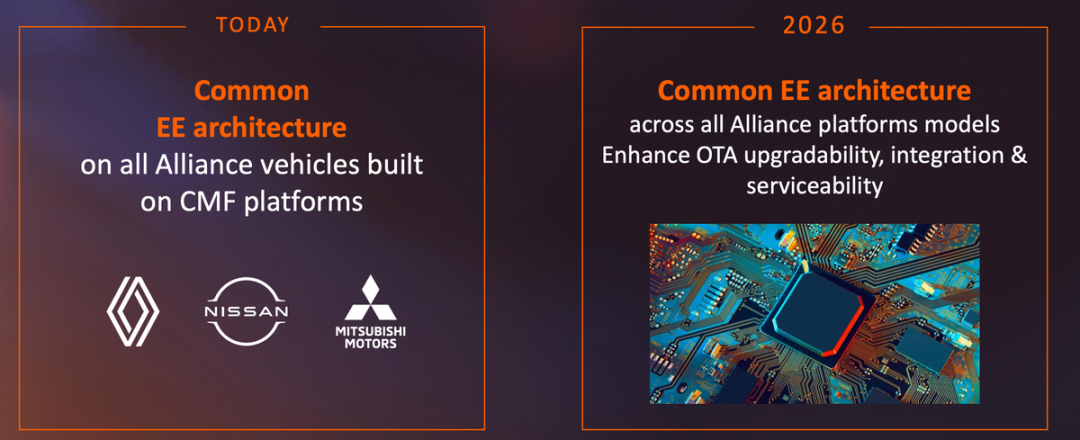

The RNM Alliance is moving to a unified EE architecture: from 2026, the next generation of RNM vehicles will share a common electronic architecture. Designed this way, RNM's vehicle design as a whole will face huge challenges, and I honestly do not understand how this will be solved.

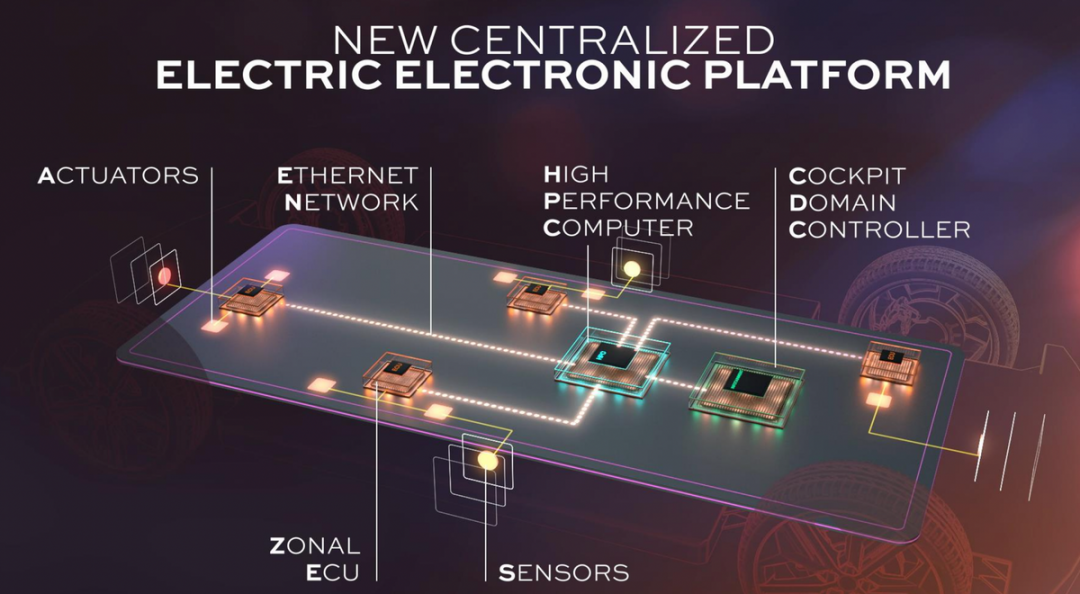

In other words, from 2026 the Alliance will adopt a centralized electrical and electronic architecture, a cost-saving measure for joint development.

Japanese Companies’ Strategies for Self-Driving Cars in the US

(1) Toyota

With Toyota's $550 million acquisition of Lyft's self-driving division, Woven Planet's development path is also changing. For Toyota, having large numbers of vehicles on the road collect diverse driving data is of great practical value to the North American team developing autonomous driving systems. By combining data collected with Woven's existing lidar setup and camera data from Lyft's operating vehicles (fitted with cameras for data collection), Woven can help improve the high-definition maps used for autonomous vehicle navigation. The camera data covers intersections, cyclists, pedestrians, and video recorded by Lyft drivers. Using 3D computer vision combined with machine learning, Woven can automatically identify traffic objects in the camera images.

(2) Honda

The Legend project carries more publicity value than anything else. With Honda beginning to make major investments, especially by strengthening its cooperation with General Motors, the two companies have in effect formed a substantive advanced-technology alliance.

Summary: In a sense, Japan has entered a new generation of technological dependence on the United States in intelligent vehicles. Apart from Nissan, the Japanese automotive industry may rely heavily on technology developed in the US. And in a few years, if Apple releases its software suite for cars, it could rapidly change the game.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.