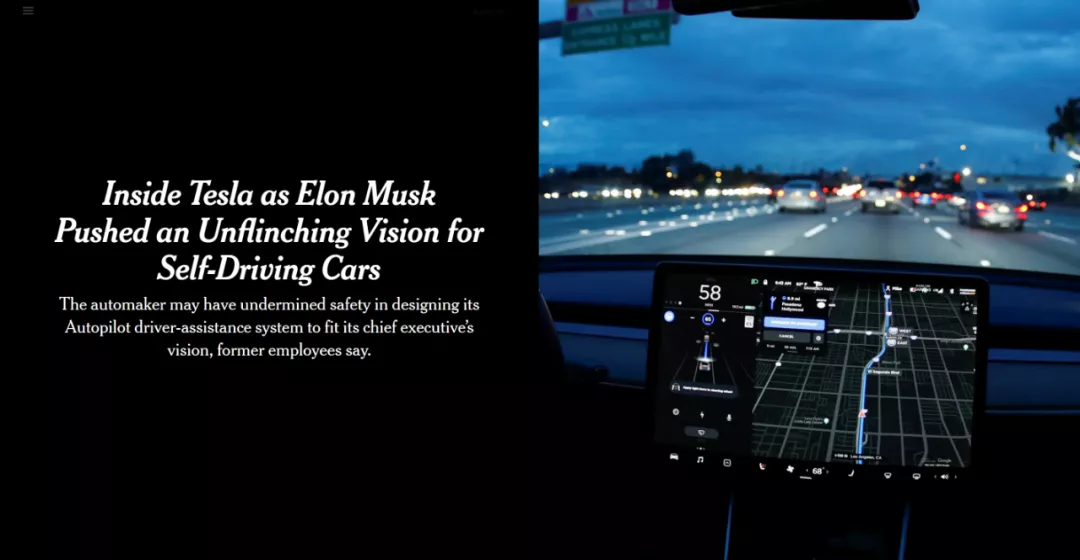

On December 6 at 5 a.m., The New York Times published an article titled “Inside Tesla as Elon Musk Pushed an Unflinching Vision for Self-Driving Cars,” which once again took aim at Tesla’s pure vision strategy.

Indeed, we can no longer ignore Tesla’s pure vision strategy, especially now that Tesla has officially removed the millimeter-wave radar from models built at its US factory and has rolled out the FSD Beta public test to owners across the United States based on their “safety rating.” Tesla has also gradually removed the descriptions of millimeter-wave radar from its official Chinese and European websites.

We need to face Tesla’s pure vision strategy squarely.

The timing of Tesla’s radar removal invites suspicion: the chip shortage across the entire automotive industry forced production cuts and stoppages throughout 2021.

In May 2021, Model 3/Y vehicles built at Tesla’s Fremont factory in California stopped being equipped with millimeter-wave radar. Two quarters later, Li Auto and XPeng Motors in China both had to introduce new sales policies because of the limited supply of millimeter-wave radar chips: vehicles were delivered first and fitted with radar afterward.

It would be logical to conclude that Tesla rushed a pure vision solution into production models because of the shortage of millimeter-wave radar chips. However, that conclusion is hard to square with what Tesla’s Senior Director of AI, Andrej Karpathy, revealed in two of his talks.

The most important information came out at Tesla’s AI Day on August 20. At that event, which was aimed at recruiting industry professionals, Andrej described in detail how Tesla’s Autopilot deep neural network evolved during the engineering process. You may find it hard to believe that Tesla ultimately removed the millimeter-wave radar because it was dissatisfied with its visual perception, but that is indeed the case.

In September 2019, Tesla officially launched the Smart Summon feature. Using the 8 cameras around the car, Tesla Autopilot can identify obstacles in every direction without relying on lane lines or high-precision maps, and plan its own route to drive to you from up to 65 meters away.

As you might expect, this feature was very difficult to develop. Going from 0 to 1 alone required a major restructuring of the Autopilot department. During that process, then Autopilot Vice President Stuart Bowers, Perception Head Drew Steedly, Planning and Control Head Frank Havlak, and Simulation Head Ben Goldtein resigned one after another, and Elon Musk took over the Autopilot team.

Unexpectedly, optimizing the experience from 1 to 10 proved even harder, and the algorithm work involved ultimately led to the “dismissal” of the radar.

At Tesla AI Day, we learned about the dilemma Autopilot faced at the time.

In Smart Summon, Autopilot’s primary task is to identify and predict the curbs of different parking lots. For this, Tesla developed a vector space tool called the Occupancy Tracker: the images captured by all 8 cameras (individual images rather than time-series video) were stitched together and projected into the Occupancy Tracker.

This brought two major problems. First, the Occupancy Tracker was written in C++, and iterating on it and tuning its parameters required a great deal of complex manual programming. This may be one of the reasons the core Autopilot team members mentioned above left their positions.

Second, and perhaps more fatal, manual programming runs counter to Tesla’s underlying development logic of gradually replacing all hand-written rules with deep neural networks. On top of that, the image-stitching Occupancy Tracker performed poorly, and not marginally so but drastically.

Once on the open road, lane predictions from stitched images deviated significantly. Lane markings that look accurate in the 2D images can end up far off when projected into 3D vector space, because no 2D pixel can accurately predict its own depth, so it cannot be placed correctly in the 3D vector space.
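To see why per-pixel depth is so fragile, here is a minimal sketch under loudly stated assumptions: a flat road, a level pinhole camera, and made-up parameters (a 1000-pixel focal length and a 1.5 m mounting height) that are not Tesla’s. The helper names `ground_distance` and `depth_error_from_pixel_error` are hypothetical. Under these assumptions a ground point at distance Z appears f·h/Z pixels below the horizon row, so a one-pixel error barely matters nearby but moves a distant lane marking by meters.

```python
def ground_distance(dv_pixels: float, focal_px: float, cam_height_m: float) -> float:
    """Distance along a flat road to a point imaged dv_pixels below the horizon row.

    Assumes a level (zero-pitch) pinhole camera mounted cam_height_m above the road.
    """
    if dv_pixels <= 0:
        raise ValueError("point must lie below the horizon row")
    return focal_px * cam_height_m / dv_pixels


def depth_error_from_pixel_error(true_dist_m: float, focal_px: float,
                                 cam_height_m: float, pixel_error: float = 1.0) -> float:
    """How far the estimate moves if the point is detected pixel_error rows too high."""
    dv = focal_px * cam_height_m / true_dist_m  # exact row offset of the true point
    return ground_distance(dv - pixel_error, focal_px, cam_height_m) - true_dist_m


if __name__ == "__main__":
    F_PX, H_M = 1000.0, 1.5  # hypothetical camera parameters, not Tesla's
    for dist in (10.0, 30.0, 60.0):
        err = depth_error_from_pixel_error(dist, F_PX, H_M)
        print(f"true distance {dist:5.1f} m -> a 1-pixel error shifts it by {err:.2f} m")
```

Under this model the error per pixel grows roughly with the square of distance (about Z²/(f·h)), which is one way to see how predictions that look fine in a 2D image can drift badly once lifted into 3D.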

For image-based object detection, suppose a semi-trailer passes by the car and five of the eight cameras capture it. Fusing these five cameras’ predictions becomes extremely difficult, because the input is essentially individual still images rather than continuous video.

This made Tesla realize that the Occupancy Tracker was heading in the wrong direction and that the entire algorithm needed an overhaul. All images (i.e., video) captured by the cameras around the car are now fed, as sequences of frames, into a single deep neural network (later known as the Bird’s-Eye View Net).

This resolves the complex manual programming problem mentioned earlier, and perception performance improves as the deep neural network trains. However, accurately projecting features from the camera video into vector space remains difficult. Andrej illustrated this with the same lane markings seen from different camera perspectives in vector space.

Keep the title in mind: at this point, Tesla is still struggling with the challenges of visual perception, and the millimeter-wave radar, which is about to take the stage, has not yet come up. Let’s continue.

Tesla inserted a Transformer layer, the model Google introduced in June 2017 in the paper “Attention Is All You Need.” The Transformer’s multi-head attention mechanism relates different positions in a sequence to compute a representation of that sequence, which resolves the correspondence between the image inputs and the vector space output.
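As a rough illustration of the mechanism, the sketch below implements plain scaled dot-product attention split across several heads, with bird’s-eye-view (BEV) query vectors attending over features pooled from all cameras. It is a generic textbook sketch, not Tesla’s network: the names `bev_queries` and `image_features` are hypothetical, and the learned query/key/value and output projections, positional encodings, and batching that a real Transformer layer uses are deliberately left out.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(query: Vector, keys: List[Vector],
                                 values: List[Vector]) -> Tuple[Vector, Vector]:
    """Attend from one query to every key; return the weighted sum of values and the weights."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    out = [0.0] * len(values[0])
    for w, value in zip(weights, values):
        for i, x in enumerate(value):
            out[i] += w * x
    return out, weights


def multi_head_cross_attention(bev_queries: List[Vector], image_features: List[Vector],
                               num_heads: int) -> List[Vector]:
    """Each BEV query attends over all camera features, independently per head.

    Simplification: the embedding is split into num_heads equal slices and the
    image features serve as both keys and values (no learned projections).
    """
    dim = len(bev_queries[0])
    if dim % num_heads != 0:
        raise ValueError("embedding size must be divisible by num_heads")
    head_dim = dim // num_heads
    outputs = []
    for query in bev_queries:
        concatenated: Vector = []
        for h in range(num_heads):
            lo, hi = h * head_dim, (h + 1) * head_dim
            keys = [feat[lo:hi] for feat in image_features]
            head_out, _ = scaled_dot_product_attention(query[lo:hi], keys, keys)
            concatenated.extend(head_out)  # concatenate the per-head outputs
        outputs.append(concatenated)
    return outputs


if __name__ == "__main__":
    # Two hypothetical camera features; the BEV query resembles the first one in head 0.
    features = [[4.0, 0.0, 1.0, 1.0], [0.0, 4.0, 1.0, 1.0]]
    print(multi_head_cross_attention([[4.0, 0.0, 0.0, 0.0]], features, num_heads=2))
```

The point of the attention weights is that each output location chooses which camera features to draw on from the data itself, instead of relying on a fixed, hand-written stitching rule.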

Engineering the Transformer into production brought Tesla many challenges (as Andrej emphasized) that are easy to describe but hard to overcome. Nevertheless, the new BEV Net + Transformer model greatly improved mapping performance in vector space. In fact, at this point the millimeter-wave radar’s position had already begun to waver, even though Tesla clearly had not set out to target it.

With the video input and vector-space mapping problems solved, the earlier difficulties of fusing object detections and predictions across multiple cameras disappeared. However, when the algorithm was tested on public roads, Tesla quickly discovered a new problem.

Simply put, compared with human drivers, Autopilot lacked the ability to “remember.” For example, when we are driving and notice that the car in front of us is getting closer, we perceive that it is slowing down, moving slower than we are, or stopped, and we make new driving decisions accordingly. But the ability to “notice that the car in front of us is getting closer” depends on our “memory” of the past distance between that car and ours.
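The “memory” argument can be made concrete with a small sketch: keep a short history of measured gaps to the car ahead and fit a least-squares slope, which gives the rate at which the gap is changing. The function names, the sample values, and the straight-road step of adding our own speed to get the lead car’s speed are illustrative assumptions, not Tesla’s implementation. A single frame, by contrast, contains no rate information at all.

```python
from typing import List, Tuple


def gap_rate(history: List[Tuple[float, float]]) -> float:
    """Least-squares slope of gap vs. time, in m/s (negative means the gap is shrinking).

    history holds (timestamp_s, gap_m) samples remembered from earlier frames.
    """
    if len(history) < 2:
        raise ValueError("need at least two remembered samples to estimate a rate")
    n = len(history)
    t_mean = sum(t for t, _ in history) / n
    d_mean = sum(d for _, d in history) / n
    num = sum((t - t_mean) * (d - d_mean) for t, d in history)
    den = sum((t - t_mean) ** 2 for t, _ in history)
    if den == 0:
        raise ValueError("samples must have distinct timestamps")
    return num / den


def lead_car_speed(ego_speed_mps: float, history: List[Tuple[float, float]]) -> float:
    """Lead car speed = our speed + rate of change of the gap (straight road assumed)."""
    return ego_speed_mps + gap_rate(history)


if __name__ == "__main__":
    samples = [(0.0, 30.0), (0.5, 29.0), (1.0, 28.0)]  # hypothetical gap measurements
    print(gap_rate(samples))              # -2.0: the gap is shrinking at 2 m/s
    print(lead_car_speed(20.0, samples))  # 18.0: the lead car is slower than us
```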

Beyond that, much of the information vital to driving, such as road signs announcing upcoming turns and other drivers’ actions like using turn signals, depends heavily on “memory.” To address this, Tesla introduced the Feature Queue module, which includes a time-based queue and a space-based queue.

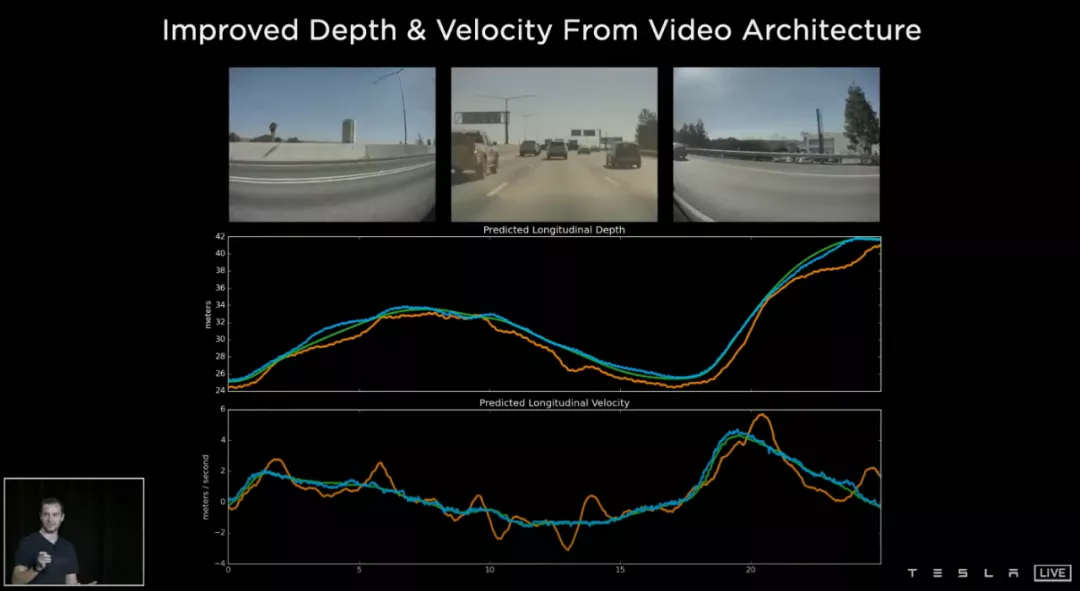

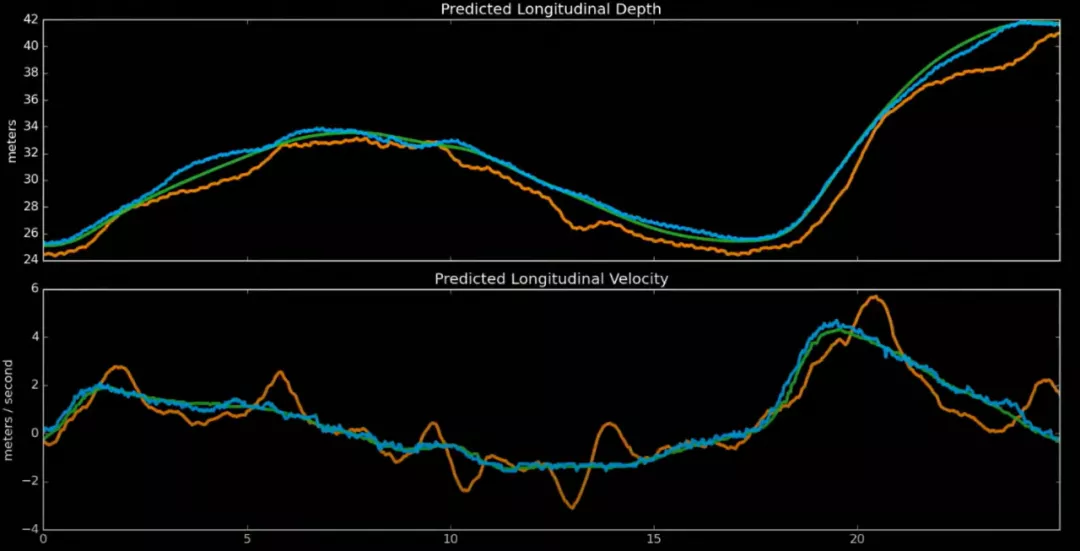

The time-based queue takes a new entry every 27 milliseconds, while the space-based queue takes one every 1 meter traveled. This gives the Autopilot algorithm the ability to “remember.” More importantly, it lets Tesla obtain the speed, acceleration, and distance of obstacles around the vehicle using cameras alone.
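A minimal sketch of those two push rules, assuming a simple design in which each queue is a fixed-length buffer: the time-based queue accepts a feature once at least 27 ms have passed since its last entry, and the space-based queue once the car has traveled at least 1 m. The class name, the queue length of 16, and the odometer input are hypothetical; the real module stores neural-network features, which are reduced to an opaque `feature` object here.

```python
from collections import deque
from typing import Any, Deque, Optional, Tuple


class FeatureQueue:
    """Hypothetical sketch of a time-based plus space-based feature memory."""

    def __init__(self, maxlen: int = 16, time_interval_s: float = 0.027,
                 space_interval_m: float = 1.0) -> None:
        # deque(maxlen=...) silently drops the oldest entry when full.
        self.time_queue: Deque[Tuple[float, Any]] = deque(maxlen=maxlen)
        self.space_queue: Deque[Tuple[float, Any]] = deque(maxlen=maxlen)
        self.time_interval_s = time_interval_s
        self.space_interval_m = space_interval_m
        self._last_time_push: Optional[float] = None
        self._last_space_push: Optional[float] = None

    def update(self, t_s: float, odometer_m: float, feature: Any) -> None:
        """Offer the current frame's feature to both queues."""
        if (self._last_time_push is None
                or t_s - self._last_time_push >= self.time_interval_s):
            self.time_queue.append((t_s, feature))
            self._last_time_push = t_s
        if (self._last_space_push is None
                or odometer_m - self._last_space_push >= self.space_interval_m):
            self.space_queue.append((odometer_m, feature))
            self._last_space_push = odometer_m


if __name__ == "__main__":
    q = FeatureQueue()
    # Car waiting at a light: 1 s of frames every 10 ms, odometer not moving.
    for i in range(100):
        q.update(t_s=i * 0.01, odometer_m=0.0, feature=f"frame{i}")
    print(len(q.time_queue), len(q.space_queue))  # 16 1
```

While the car is stationary, the time queue keeps cycling through fresh frames, but the space queue stops filling, so whatever it recorded on the approach (here `frame0`) is not pushed out.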

In the accompanying slide, the upper chart shows depth (distance prediction) and the lower one shows velocity prediction.

The green curve is the output of the millimeter-wave radar, and the yellow curve is the prediction of a neural network that takes single 2D images as input; it deviates considerably from the radar output. The blue curve is the latest prediction of a neural network that takes video as input, and it is highly consistent with the radar, overlapping it completely in many stretches.

Finally, we come to millimeter-wave radar. Active safety functions built on millimeter-wave radar, such as Adaptive Cruise Control (ACC) and Forward Collision Warning (FCW), share a core principle: the radar emits electromagnetic waves and receives their reflections to obtain the relative velocity, distance, angle, and direction of surrounding obstacles.
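The two basic measurements behind that principle fit in a few lines. Range comes from the round-trip delay of the echo, R = c·τ/2, and radial (relative) velocity from the Doppler shift, v = f_d·c/(2·f_c). The sketch below assumes a 77 GHz carrier, a common automotive radar band, and treats a positive Doppler shift as an approaching target; angle estimation, which needs multiple receive antennas, is left out. The function names are illustrative.

```python
SPEED_OF_LIGHT_MPS = 299_792_458.0


def range_from_delay(round_trip_s: float) -> float:
    """Target range in meters: the wave travels out and back, hence the divide by 2."""
    return SPEED_OF_LIGHT_MPS * round_trip_s / 2.0


def radial_velocity_from_doppler(doppler_hz: float, carrier_hz: float = 77e9) -> float:
    """Closing speed in m/s from the Doppler shift (positive = target approaching)."""
    return doppler_hz * SPEED_OF_LIGHT_MPS / (2.0 * carrier_hz)


def doppler_from_radial_velocity(velocity_mps: float, carrier_hz: float = 77e9) -> float:
    """Inverse of the above: the Doppler shift in Hz produced by a given closing speed."""
    return 2.0 * velocity_mps * carrier_hz / SPEED_OF_LIGHT_MPS


if __name__ == "__main__":
    print(f"{range_from_delay(400e-9):.2f} m")  # echo after 400 ns -> about 60 m
    shift = doppler_from_radial_velocity(10.0)
    print(f"10 m/s closing speed -> {shift:.0f} Hz Doppler shift at 77 GHz")
```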

In other words, millimeter-wave radar obtains information similar to what pure vision provides, but through a completely different method. This is the textbook notion of heterogeneous redundancy. So when Autopilot is running, wouldn’t it be better to have the radar and cameras back each other up and cross-check each other?
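One textbook way to let two heterogeneous sensors “double-check each other” is to fuse their estimates with inverse-variance weighting and to flag a fault when they disagree by more than a few combined standard deviations. The sketch below does this for a single distance; the standard deviations and the 3-sigma gate are hypothetical values, and nothing here is claimed to be how Tesla fused radar and vision.

```python
import math
from typing import Tuple


def fuse_with_gate(radar_m: float, radar_sigma: float, vision_m: float, vision_sigma: float,
                   gate_sigmas: float = 3.0) -> Tuple[float, bool]:
    """Return (fused estimate, consistent?) for two independent measurements of one distance."""
    w_radar = 1.0 / radar_sigma ** 2   # weight = inverse variance
    w_vision = 1.0 / vision_sigma ** 2
    fused = (w_radar * radar_m + w_vision * vision_m) / (w_radar + w_vision)
    # Normalized disagreement: the difference divided by its combined standard deviation.
    combined_sigma = math.sqrt(radar_sigma ** 2 + vision_sigma ** 2)
    consistent = abs(radar_m - vision_m) / combined_sigma <= gate_sigmas
    return fused, consistent


if __name__ == "__main__":
    print(fuse_with_gate(50.0, 1.0, 52.0, 1.0))  # (51.0, True): the sensors agree
    print(fuse_with_gate(50.0, 1.0, 60.0, 1.0))  # (55.0, False): disagreement flagged
```

With these formulas, a sensor whose variance is much larger than the other’s contributes almost nothing to the fused value, while the gate still lets its outliers raise disagreement flags.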

However, in his talk at CVPR on June 20, Andrej put it in his gentle and elegant manner:

“We are able to start removing some of the other sensors because they are just becoming these trashes that you start to not really need at all.”

That is because, ever since Tesla began using its self-developed camera and millimeter-wave radar perception algorithms in October 2016, the Autopilot experience has been persistently troubled by the radar.

Andrej explained this more specifically in later talks. Simply put, millimeter-wave radar is prone to false alarms: manhole covers, bridges above the roadway, intersections, and poorly tracked oncoming vehicles can all make the radar report obstacles that are really just noise.

In practice, this shows up as the intermittent phantom braking Tesla owners experience while Autopilot is running. Many owners blamed it on poor camera perception, but it is actually the fault of the millimeter-wave radar.

# Andrej’s Opinion

Andrej argues that when the information density of sensor A is more than 100 times that of sensor B, sensor B becomes a hindrance, and what it really contributes is noise.

This is consistent with Elon’s earlier assessment of millimeter-wave radar: sensors are, in essence, bitstreams. A camera delivers several orders of magnitude more bits per second than radar or lidar, so radar must meaningfully improve the signal-to-noise ratio of the bitstream to be worth integrating. As vision processing improves, cameras will leave current radar far behind.
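The “bitstream” comparison is simple arithmetic. The sketch below computes raw bit rates from a handful of parameters; every number in the example is a hypothetical placeholder chosen for round arithmetic, not a specification of Tesla’s cameras or of any particular radar. Raw bits are also only an upper bound on useful information, not a measure of it.

```python
import math


def camera_bits_per_second(num_cameras: int, width_px: int, height_px: int,
                           bits_per_px: int, fps: float) -> float:
    """Raw (uncompressed) sensor bit rate for a set of identical cameras."""
    return num_cameras * width_px * height_px * bits_per_px * fps


def radar_bits_per_second(detections_per_cycle: int, bits_per_detection: int,
                          cycles_per_s: float) -> float:
    """Bit rate of a radar that reports a list of detections each measurement cycle."""
    return detections_per_cycle * bits_per_detection * cycles_per_s


if __name__ == "__main__":
    cam = camera_bits_per_second(8, 1280, 960, 12, 36)  # hypothetical camera setup
    radar = radar_bits_per_second(64, 128, 20)           # hypothetical radar output
    ratio = cam / radar
    print(f"camera {cam:.3e} bit/s, radar {radar:.3e} bit/s")
    print(f"ratio {ratio:,.0f}x, i.e. about {math.log10(ratio):.1f} orders of magnitude")
```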

From Elon’s first public mention, in March 2020, of rewriting Autopilot’s core underlying code and moving to 3D annotation, to Tesla’s official removal of millimeter-wave radar in May 2021, the entire engineering cycle took 14 months.

In my view, this technical evolution in engineering is also very interesting. The camera arrived later, yet with expert guidance at the key moments and exceptional talent of its own, it has displaced radar in the spirit of the line from “The Three-Body Problem”: “I destroy you, and it has nothing to do with you.”

For autonomous driving, a first-principles judgment about which technical path to take may decide the outcome of the larger battle.

Oh, by the way, I almost forgot a question car owners care about: now that the official Chinese and European websites have removed their pages on millimeter-wave radar, are Model 3/Y vehicles produced at Tesla’s Shanghai Gigafactory still equipped with it?

Answer: As of now, Model 3/Y vehicles produced at Tesla’s Shanghai Gigafactory are still equipped with millimeter-wave radar, but this is expected to change as Tesla’s technology progresses.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.