Urban navigation-assisted driving is about to welcome a formidable contender: Polestar.

On October 17, Polestar officially released an uncut, single-take video of its mass-production model completing a navigation-assisted drive on the roads of Shanghai. At the same time, it unveiled for the first time its Occupancy Network (OCC) technology, co-developed with Baidu.

Compared with the urban navigation-assisted driving solutions rolled out by players like Huawei and Xpeng, the solution Polestar showcased differs in one significant way: it is based on ‘pure vision’, so LiDAR is no longer part of the standard configuration.

In fact, what Polestar presents is the country’s first BEV+Transformer ‘pure vision’ advanced smart driving solution. In an industry where mass-produced urban navigation-assisted driving relies on LiDAR, Polestar has chosen to pioneer a new technical path and benchmark itself against Tesla overseas.

The Polestar 01 officially goes on sale on October 27, about a week from now. If the smart driving solution Polestar demonstrated does reach mass production, Polestar will be the only manufacturer in the country to adopt pure vision for advanced smart driving.

Furthermore, we’ve learned that all of the Polestar 01’s advanced intelligent functions will be usable immediately upon delivery. In other words, the features ship at launch, which is impressively fast.

What’s special about Polestar’s pure visual route?

At present, for any automaker committed to mass-producing autonomous driving, navigation-assisted driving on city roads is a core scenario for demonstrating its intelligent driving capabilities.

But the key is the actual performance.

Judging from the official video, Polestar’s urban navigation-assisted driving shows a relatively high degree of product completeness. To elaborate:

- Route. Starting from the Polestar store in Lujiazui, Shanghai, the car passed through typical urban sections such as the Lujiazui CBD, the Bund, and the Nanpu Bridge, covering 15.8 kilometers and 36 traffic lights with zero takeovers.

- Scenarios. The sections shown in the video covered urban surface roads, tunnels, and complex overpasses, all of which are technically demanding for urban navigation-assisted driving.

- Perception. Judging from the perception information displayed on the instrument panel once navigation-assisted driving is enabled, the vehicle can quickly and accurately identify traffic lights, lane lines, vehicles, pedestrians, and pylons.

- Driving. On sharply curved sections such as the Nanpu Bridge, the vehicle stays in its lane without difficulty; when merging, taking ramps, and avoiding other vehicles, it shows a relatively steady driving style.

- Lane changing. In the video, the vehicle completed a total of 15 lane changes in a style that is not overly aggressive and puts safety first. However, once the system deems a change safe, it acts decisively, much as an experienced human driver would.

In general, given that it relies on pure vision, the usability of the city navigation-assisted driving Polestar demonstrated exceeded our expectations. This is particularly evident on complex roads such as those in downtown Shanghai, where its perception, handling, and ability to get through different scenarios have given us some confidence in applying the pure vision route to city navigation-assisted driving.

In perception especially, the pure vision route Polestar has shown removes the strong reliance on LiDAR sensors.

Freeing itself from LiDAR means that Polestar needs to invest more in improving the efficiency and capabilities of its BEV+Transformer algorithm framework and in raising the upper limit of visual perception.

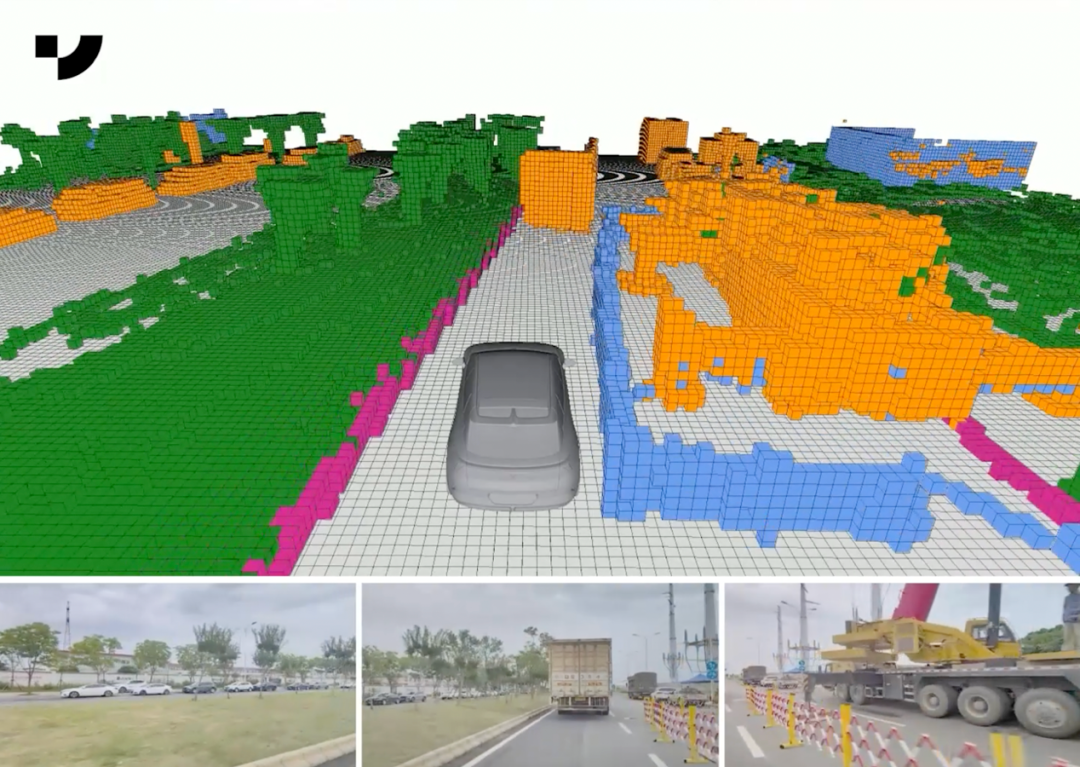

In practice, the OCC network technology featured in Polestar’s demonstration helps the vehicle better reconstruct 3D scenes and obtain three-dimensional structural information at a resolution higher than that of LiDAR point clouds. It can also reduce missed and false detections and compensate for vision’s lack of height information, thereby raising the upper limit of perception and significantly improving generalization.
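To make the idea of an occupancy representation concrete, here is a toy sketch. It is not the OCC network described in this article, whose internals have not been published; a real occupancy network predicts occupancy from camera images with a neural network. The sketch only illustrates the output format such a network targets: space is divided into small cubes (voxels), and each cube is marked occupied or free. The function names `voxelize` and `column_height`, the 0.5 m voxel size, and the sample points are all hypothetical.

```python
import math
from typing import Iterable, Set, Tuple

Voxel = Tuple[int, int, int]  # integer (x, y, z) index of one cube in the grid


def voxelize(points: Iterable[Tuple[float, float, float]],
             voxel_size: float = 0.5) -> Set[Voxel]:
    """Map 3D points (in metres) to the set of occupied voxel indices.

    Hypothetical helper: it stands in for the network output, which would
    normally be predicted from images rather than built from known points.
    """
    occupied: Set[Voxel] = set()
    for x, y, z in points:
        occupied.add((math.floor(x / voxel_size),
                      math.floor(y / voxel_size),
                      math.floor(z / voxel_size)))
    return occupied


def column_height(occupied: Set[Voxel], ix: int, iy: int,
                  voxel_size: float = 0.5) -> float:
    """Height (metres) of the top of the highest occupied voxel above
    ground cell (ix, iy), or 0.0 if that column is empty."""
    tops = [z + 1 for (x, y, z) in occupied if x == ix and y == iy]
    return max(tops) * voxel_size if tops else 0.0


# An irregular obstacle sampled at three heights about 10 m ahead.
obstacle = [(10.2, 1.1, 0.1), (10.2, 1.1, 0.6), (10.3, 1.2, 1.4)]
grid = voxelize(obstacle)
print(sorted(grid))
print(column_height(grid, 20, 2))
```

Because each cell only records whether space is occupied, the obstacle does not need to belong to a known object class to be represented, and the stack of occupied cells in a column carries the height information that a flat top-down view lacks.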

Precisely because of this, Polestar’s approach can compensate for the perceptual shortcomings caused by the absence of LiDAR and meet visual perception needs across different lighting and road conditions.

This is not an easy task, but for now, Polestar is highly confident.

It is currently unclear which smart driving solution Polestar will finally adopt when the car launches. However, its promotional material suggests that the smart driving functions on the Polestar 01 will be “ready to use” at release. In other words, users will be able to use city navigation-assisted driving in designated cities as soon as they receive the Polestar 01, without waiting for subsequent OTA upgrades.

In terms of delivery speed, Polestar’s city navigation-assisted driving leads the domestic market. Across the entire industry, the speed at which Polestar is bringing mass-produced intelligent driving to customers is unprecedented.

This means that, in the pace of bringing urban intelligent driving to mass production, Polestar has overtaken many automakers and solution providers that started earlier. It stands ready to compete with top-tier domestic players like Huawei and Xpeng, which have already rolled out city navigation-assisted driving in specific cities. Moreover, the latecomer is aiming for a place in the “top three” of advanced intelligent driving in China.

Where Does Polestar’s Confidence to Challenge Tesla Come From?

The pure vision solution Polestar released this time is, technically, the closest approach to Tesla’s.

In terms of computing power, Polestar’s solution is equipped with two Nvidia DRIVE Orin chips (254 TOPS each), delivering up to 508 TOPS of AI compute, a configuration frequently adopted by domestic players, including Xpeng, for city navigation-assisted driving.

From the perspective of the algorithm framework, Polestar’s solution adopts the BEV+Transformer perception framework widely used in the industry, coupled with the OCC occupancy network technology co-developed by Polestar and Baidu. This enables recognition of irregular obstacles and further enhances Polestar’s pure vision perception capability.

So why has Polestar chosen to announce its new pure vision route on the eve of the launch of its first model? The key reason is the breakthrough in core capabilities brought about by AI.

As far as we understand, Polestar adopted a hybrid “vision-centric + LiDAR” advanced intelligent driving solution during early development, in the 1.0 stage. The vision and LiDAR paths were independent of each other and complementary.

But by the 2.0 stage, Polestar found that the pure vision solution based on BEV+Transformer was maturing. Meanwhile, backed by the OCC occupancy network technology, its 3D perception of the surrounding environment had improved greatly in both quality and efficiency. Polestar therefore began to consider whether to keep the LiDAR, and gradually reduced its reliance on high-precision maps.

However, CEO Xia Yiping told us during the exchange session about another core consideration behind the company’s exploration of the pure vision route: compared with the point cloud information captured by LiDAR, the image information captured by cameras offers more room for data mining.

Specifically, image data makes it easier for the company to integrate large models and end-to-end capabilities, forming a data-driven closed loop and providing a more efficient channel for subsequent perception improvements.

In other words, although LiDAR did give 3D perception a helpful boost in the early stages, Xia Yiping believes that, in both the near and the long term, the capabilities of the pure vision route have been proven with the joint support of the BEV+Transformer algorithm framework and OCC occupancy network technology. Its potential will continue to grow with the support of frontier technologies such as large AI models and end-to-end approaches.

In the interview, Xia Yiping stressed that the real key to choosing the pure vision route is confidence in the technology’s current capabilities and its subsequent iterations. It is, he said, a long-term consideration.

On this point, Wang Liang, chairman of the technical committee of Baidu’s autonomous driving business unit, also told us that when he first entered the autonomous driving industry, he had to face the dispute between the LiDAR and pure vision routes.

But from the very beginning, he realized that LiDAR was not necessarily the ultimate solution, and that poorly used LiDAR could even “cause trouble”. He therefore expects that autonomous driving will follow the principle of “keeping it simple” in choosing sensors, and that the number of sensors will decrease.

Interestingly, apart from benchmarking its technical route against Tesla, Polestar has already shown a stronger ability than Tesla to deliver in mass production. During an interview, Xia Yiping told us that, compared with Tesla, Polestar has more local advantages in mass production, including data and mapping. He added that even if Tesla’s FSD were available in China, Polestar would still be confident competing in the same arena.

The paradigm of autonomous driving mass production may have already changed

For the entire smart driving industry, the urban navigation-assisted driving solution Polestar has demonstrated, together with its ‘delivery at launch’ strategy, matters in two ways.

On one hand, through its ability to launch and deliver quickly, Polestar has joined competitors like Huawei and Xpeng in the contest with Tesla across the ocean. They have also begun to compete with Tesla directly on technology, even though Tesla’s FSD has yet to arrive in China. And the latecomer, Polestar, has shown notable competitiveness, supported by underlying advantages such as data volume and map data.

On the other hand, the pure vision smart driving capability Polestar demonstrated this time follows, and to some extent confirms, a new trend in how the smart driving industry is bringing products to market.

Namely: while technology remains vital to the development of smart driving, cost also needs to be considered.

Currently, from the standpoint of mass-produced vehicles, continuing to stack expensive sensors and processors is looking less and less like the only viable approach. It is difficult to deliver a user experience whose value matches the hardware investment.

So turning smart driving from a technology into a product that can actually be deployed is the most challenging yet crucial task.

In fact, throughout the development of smart driving, the auto industry has been exploring a path to a wider consumer base along several dimensions, including technology, the market, and policy. Now, in 2023, advanced features like urban navigation-assisted driving have finally started to reach consumers as mainstream mass-produced models launch, and the whole industry at last sees an opportunity for smart driving to take off at scale.

What the entire industry needs to do now is keep iterating and optimizing software and algorithms through different approaches, while ensuring that perception capability and computing power meet requirements, in order to deliver a better experience.

More importantly, with the rise of large models in AI and the exploration and application of cutting-edge techniques such as end-to-end approaches across the autonomous driving industry, AI’s deeper involvement has given automakers and solution providers more ways to deploy at scale. This has made the industry even more ‘flourishing’.

It could be said that, under this trend, trailblazers like Tesla and latecomers like Polestar are, through their choice of technical route, prompting the industry to rethink whether ‘advanced driver assistance necessarily needs LiDAR’. It’s worth noting that, according to the information we have gathered, beyond Tesla and Polestar, some emerging carmakers are beginning to explore the possibility of eliminating LiDAR in urban scenarios. From this perspective, the solution deployed by Polestar points to where the trend is heading.

In simple terms: the paradigm for mass deployment of autonomous driving has changed.

In any case, for the progress of autonomous driving in China, the arrival of Polestar is good news, both for its technical route and for its participation in mass production. As for whether the experience can win over users and the market, we look forward to seeing the answer in 42Mark’s review after the launch of the Polestar 01.

This article is a translation by AI of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.