By 2023, active safety features such as AEB (Automatic Emergency Braking) are becoming standard on SUVs priced above 300,000 yuan. Sensors such as LiDAR, millimeter-wave radar and cameras perceive the vehicle's surroundings and trigger AEB in emergencies.

The conditions that trigger AEB vary greatly from vehicle to vehicle, but the true goal of every AEB system is the same: to act at the critical moment.

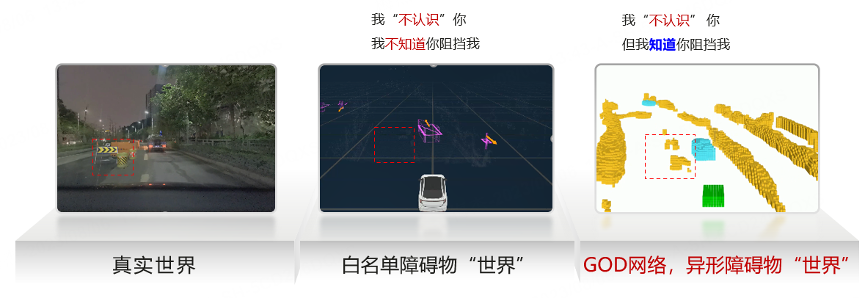

However, regardless of sensor type, the perception system interprets what it senses using a “whitelist” approach to obstacle detection: objects that are not on the whitelist are ignored.

That means fallen trees, overturned vehicles, falling rocks and similar objects are simply overlooked. If you happen to be distracted while driving, the AEB system may not be able to help you.

To recognize objects outside the whitelist, a GOD (General Obstacle Detection) network is needed to perceive a more complete picture of the world.

We recently had a sneak preview of the Avatr 11's forthcoming GAEB at a closed test site in Shanghai. Compared with traditional AEB trigger conditions, it is a clear step forward. We also tested five of the hottest new energy vehicles on the market alongside it, six cars in total. Which performed best?

Daytime: A Collective “Accident”

In a standard AEB test, the obstacle is typically a vehicle or a person.

In the Euro NCAP protocols, for example, the target vehicle reflects radar signals from all 360 degrees and, visually, has clear vehicle features.

Objects that are not on the AEB whitelist and do not reflect radar signals, however, are ignored outright. An image Avatr showed us illustrates this well: in a whitelist-based view of the world, only whitelisted obstacles are clearly identified.

Even within the whitelist there are edge cases. For people wearing hats or people with larger builds, the system may decide that they match its “person” category too poorly and ignore them entirely.
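To make this failure mode concrete, here is a minimal Python sketch of whitelist-based filtering. The class list, the `match_score` field and the 0.6 threshold are hypothetical illustrations, not any manufacturer's actual logic: an object only becomes a braking target if its class is whitelisted and it matches that class closely enough.

```python
from dataclasses import dataclass

# Hypothetical whitelist of classes a conventional AEB stack reacts to.
WHITELIST = {"car", "truck", "pedestrian", "cyclist"}


@dataclass
class Detection:
    label: str          # best-matching class from the classifier
    match_score: float  # how closely the object matches that class (0..1)
    distance_m: float   # distance ahead of the ego vehicle


def whitelist_targets(detections, min_score=0.6):
    """Keep only detections a whitelist-based AEB would brake for."""
    return [
        d for d in detections
        if d.label in WHITELIST and d.match_score >= min_score
    ]


scene = [
    Detection("car", 0.92, 45.0),
    Detection("pedestrian", 0.41, 30.0),  # e.g. person in a hat: weak match, dropped
    Detection("unknown", 0.88, 25.0),     # e.g. fallen tree: not whitelisted, dropped
]
print([d.label for d in whitelist_targets(scene)])  # ['car']
```

Both the unusual pedestrian and the unknown object are discarded before any braking decision is made, which is exactly the blind spot described above.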

With a GOD network, there is essentially no whitelist, and irregular obstacles finally appear in the perception system's view of the world.

For an AEB system, then, having a GOD network means the vehicle perceives many more elements when “observing” the world.

Our test featured six models that represent the “best” in assisted driving. The Avatr 11 carries the most LiDAR units of any domestic mass-produced vehicle and has Huawei's backing. The NIO ES7 offers the highest assisted-driving AI computing power among mass-produced vehicles, at 1016 TOPS. The Xpeng G9 has two forward-facing LiDARs and a potent 508 TOPS of computing power. The Tesla Model Y has long been considered a benchmark for assisted driving. LI has become the largest customer of LiDAR suppliers and leads the new car makers in sales by a wide margin. The ZEEKR 001's assisted driving runs on Mobileye's highest-spec mass-produced hardware.

We simulated urban driving conditions, starting at 40 km/h and raising the speed to find the highest speed at which each vehicle's AEB could bring it to a stop within the available track length.
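To see why the top passing speed matters, here is a minimal sketch of the standard stopping-distance estimate: distance covered during system latency plus braking distance under constant deceleration. The 8 m/s² deceleration and 0.3 s latency defaults are our assumptions for illustration; the test did not report either value.

```python
def stopping_distance_m(speed_kmh, decel_mps2=8.0, latency_s=0.3):
    """Distance from the moment the system reacts to standstill.

    Assumes a fixed latency followed by constant deceleration:
        d = v * t_latency + v^2 / (2 * a)
    """
    v = speed_kmh / 3.6  # km/h -> m/s
    return v * latency_s + v * v / (2.0 * decel_mps2)


for kmh in (40, 60, 70, 80):
    print(f"{kmh} km/h: {stopping_distance_m(kmh):.1f} m")
```

Because the braking term grows with the square of speed, going from 40 to 80 km/h quadruples the braking distance under these assumptions, so a fixed-length track quickly becomes the limiting factor.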

After all this explanation, you can probably guess the results. Vehicles without a GOD network did not trigger AEB for traffic cones, cardboard boxes, water-filled barriers and other objects that lack radar-reflective surfaces and are not whitelisted, even when they perceived an obstacle directly ahead.

The newly updated Avatr 11 with its GOD network, by contrast, can accurately identify objects larger than 50 cm × 50 cm and trigger GAEB without driver intervention.
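The 50 cm × 50 cm figure suggests a size gate rather than a class check. The sketch below is our own assumption of how class-agnostic gating could work, not Huawei's implementation; the `Obstacle` fields, the 1.2 m corridor half-width and gating on width and height are all hypothetical. Any object large enough that overlaps the ego vehicle's path becomes a braking target, whatever it is.

```python
from dataclasses import dataclass


@dataclass
class Obstacle:
    x_m: float       # distance ahead of the ego vehicle
    y_m: float       # lateral offset of the object's centre from the lane centre
    width_m: float
    height_m: float
    # Note: no class label at all.


def braking_targets(obstacles, corridor_half_width_m=1.2, min_size_m=0.5):
    """Class-agnostic gating: anything big enough inside the ego path counts."""
    return [
        o for o in obstacles
        if o.x_m > 0
        # the object's footprint overlaps the driving corridor
        and abs(o.y_m) - o.width_m / 2 <= corridor_half_width_m
        and o.width_m >= min_size_m
        and o.height_m >= min_size_m
    ]


scene = [
    Obstacle(40.0, 0.2, 0.6, 0.6),  # carton in the lane: kept
    Obstacle(20.0, 0.0, 0.3, 0.2),  # small debris below the size gate: dropped
    Obstacle(15.0, 4.0, 1.5, 0.8),  # barrier on the shoulder: dropped
]
targets = braking_targets(scene)
print([o.x_m for o in targets])  # [40.0]
```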

Even at the maximum test speed of 70 km/h, the Avatr 11 identified cones, cardboard boxes and water-filled barriers in the middle of the road well in advance and came to a stop.

From inside the car, a GAEB activation on the Avatr 11 unfolds roughly as follows:

- The driver does not touch the throttle, brakes or steering.

- The vehicle perceives obstacles from about 70 meters away and displays them accurately on the instrument screen.

- The vehicle decides when to activate GAEB based on the required braking distance, first applying light braking.

- If the driver still does not brake or steer, the vehicle brakes at full force until it comes to a complete stop, and the emergency brake warning lights come on.

- Afterward, the vehicle creeps forward, and the driver can intervene to bring it back to a complete stop.
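The staged response above can be sketched as a simple decision based on time-to-collision (TTC = distance / closing speed). This is a minimal illustration under our own assumptions: the stage names, the 1.8 s and 1.0 s thresholds and the rule of standing down when the driver acts are hypothetical, not calibrated values from Avatr or Huawei.

```python
from enum import Enum


class Stage(Enum):
    MONITOR = "monitor"
    PARTIAL_BRAKE = "partial brake"  # light braking first
    FULL_BRAKE = "full brake"        # full braking, warning lights on


def gaeb_stage(distance_m, closing_speed_mps, driver_acting,
               partial_ttc_s=1.8, full_ttc_s=1.0):
    """Pick a braking stage from time-to-collision (illustrative thresholds)."""
    if driver_acting or closing_speed_mps <= 0:
        return Stage.MONITOR  # driver is braking/steering, or not closing in
    ttc = distance_m / closing_speed_mps
    if ttc <= full_ttc_s:
        return Stage.FULL_BRAKE
    if ttc <= partial_ttc_s:
        return Stage.PARTIAL_BRAKE
    return Stage.MONITOR


# At about 70 km/h (19.44 m/s) the stage escalates as the gap closes.
for gap in (70.0, 30.0, 15.0):
    print(gap, gaeb_stage(gap, 19.44, driver_acting=False).value)
```

Escalating from light to full braking gives an attentive driver a chance to take over before the system commits to a hard stop.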

The other cars did not perform as well. To begin with, none of them stopped even at 40 km/h. The Tesla Model Y, NIO ES7 and Xpeng G9 did identify the cones in front of them, but they did not stop.

Tesla's ultrasonic sensors were also quite sensitive, detecting the water-filled barrier ahead at speeds of up to 40 km/h. However, they only issued a warning when the barrier was 1 meter away, far too close to brake in time.
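A rough calculation shows why a 1-meter warning comes too late. Assuming a hard-braking deceleration of about 8 m/s² (our assumption; the test did not report deceleration values):

$$
v = \frac{40\ \text{km/h}}{3.6} \approx 11.1\ \text{m/s}, \qquad
t_{1\,\text{m}} = \frac{1\ \text{m}}{11.1\ \text{m/s}} \approx 0.09\ \text{s}, \qquad
d_{\text{brake}} = \frac{v^2}{2a} \approx \frac{11.1^2}{2 \times 8} \approx 7.7\ \text{m}
$$

At 40 km/h the car covers that last meter in under a tenth of a second, while stopping needs roughly 7.7 m even before any reaction time is added.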

The LI L9 and ZEEKR 001 performed worse still, apparently ignoring every water-filled barrier, cone and carton ahead. These obstacles never appeared on screen, even after multiple tests.

Can the Avatr 11 Stop in Time at Night and in Backlit Conditions?

At night, the high beams of oncoming vehicles can temporarily blind human drivers, which poses significant risks. A perception system that detects danger earlier could help drivers avoid such hazardous situations.

Since none of the Tesla Model Y, NIO ES7, Xpeng G9, LI L9 or ZEEKR 001 could stop for cones, cartons or water-filled barriers in daylight, they were automatically excluded from the nighttime tests.

The Avatr 11 performed well, although its results differed from the daytime tests depending on the obstacle.

With a traffic cone as the obstacle, the Avatr 11 activated GAEB and came to a stop at speeds of up to 60 km/h, slightly worse than in daylight. In several runs at 70 km/h it detected the cone and began braking, but it failed the test because it ran out of braking distance.

With a carton as the obstacle, the Avatr 11 performed the same as in daylight, triggering GAEB and stopping at speeds of up to 70 km/h.

With a water-filled barrier as the obstacle, the Avatr 11 did even better, triggering GAEB and stopping without issue at speeds of up to 80 km/h.

In Conclusion

By 2023, Transformer + BEV (bird's-eye view) approaches have become the route taken by the key players in autonomous driving. Meanwhile, the Occupancy Network that Tesla presented at its 2022 AI Day has become a buzzword in perception algorithms, a sign that BEV alone is far from sufficient.
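The core idea behind occupancy-style perception fits in a few lines: instead of asking “which class is this?”, the system asks “is this space occupied?”. The toy example below uses a hand-written 2D bird's-eye-view grid; the cell size, lane columns and function name are hypothetical, and real occupancy networks such as Tesla's predict 3D occupancy from sensor data with neural networks rather than receiving a ready-made grid.

```python
def nearest_occupied_ahead(grid, cell_size_m, lane_cols):
    """Distance (m) to the near edge of the first occupied cell in the ego lane.

    grid[row][col] is True if that cell is occupied; row 0 is nearest the car.
    No object class is involved: occupancy alone decides. Returns None if clear.
    """
    for row_idx, row in enumerate(grid):
        if any(row[c] for c in lane_cols):
            return row_idx * cell_size_m
    return None


# Hypothetical 5 x 5 grid with 2 m cells; the ego lane spans columns 1..3.
grid = [
    [False, False, False, False, False],
    [False, False, False, False, True],   # object on the shoulder: outside the lane
    [False, False, False, False, False],
    [False, False, True,  False, False],  # unidentified debris in the lane
    [False, False, False, False, False],
]
print(nearest_occupied_ahead(grid, cell_size_m=2.0, lane_cols=range(1, 4)))  # 6.0
```

The debris is found even though nothing ever labels it, which is why occupancy-style perception pairs naturally with general obstacle detection.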

As a result, common obstacles that the human eye recognizes easily, such as overturned vehicles, fallen trees, road debris and falling rocks, no longer have to be ignored by driver-assistance systems.

The recently launched AITO M5 Intelligent Driving Edition already comes with a fused-perception BEV network and a GOD network. The upcoming GAEB update for the Avatr 11 will take Huawei's ADS driver-assistance system to the next level.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.