On Saturday, 22nd July, Dr. Luo Heng, head of BPU algorithms at Horizon, visited the garage office and held an in-person exchange with more than twenty users.

The discussion centered on how computing architectures are evolving for advanced intelligent driving and on Horizon's product lineup, covering chip and algorithm capabilities, data collection and processing, and the commercialization of Horizon's chips. Below are some of the key points Dr. Luo shared:

Software 2.0 Era

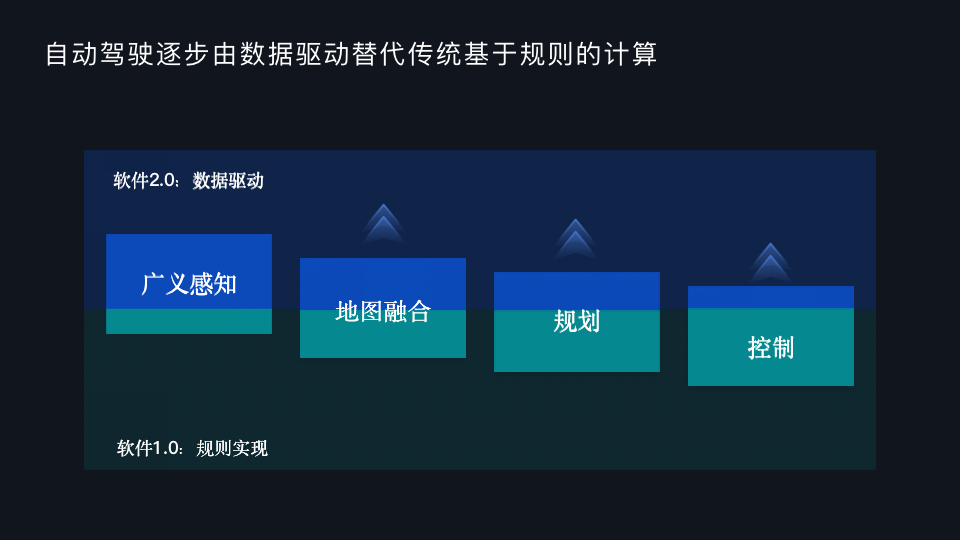

Whereas Software 1.0 is built on hand-written rules, Software 2.0 is data-driven, and this evolution is expected to continue. By using automation to tackle complexity and by constantly learning from and handling complex scenarios, algorithms and models can be iterated continuously, bringing the industry ever closer to the ultimate goal of autonomous driving.

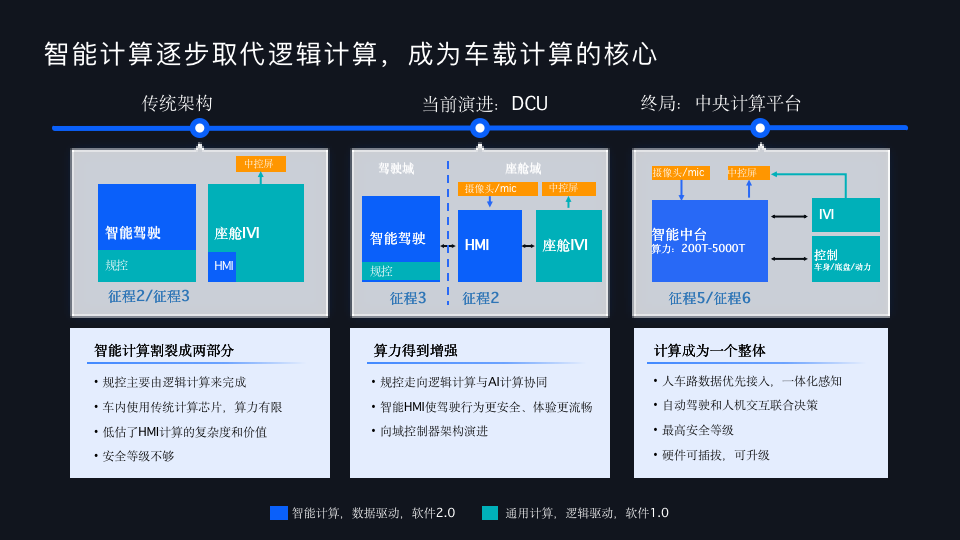

Vehicle architecture has evolved from traditional distributed systems to domain control and is likely to move toward a central computing platform in the future. Vehicles increasingly resemble computers, and increasingly integrated end-to-end systems are one of the best approaches to autonomous driving.

This generational shift in software also reshapes computing architecture. So how do we build a computing architecture suited to Software 2.0? In our view, it requires co-designing software and hardware so that computing power matches what the software actually needs. That way, the architecture can prioritize and serve the most important workloads among diverse and complex algorithms.

A fully realized autonomous driving system will probably combine the vehicle (edge) side and the cloud: the vehicle performs real-time inference, the problem cases it encounters are uploaded to the cloud, and the cloud evolves the system over a longer time horizon.
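This edge-cloud split is often described as a data closed loop. The sketch below is only an illustration under our own assumptions, not Horizon's software: the vehicle acts on every frame in real time and flags frames that look like corner cases (here, low model confidence or a driver takeover), and the cloud periodically absorbs the flagged frames into a training pool. All names (`Frame`, `UploadQueue`, `run_on_vehicle`, `cloud_round`) and the 0.6 threshold are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Frame:
    frame_id: int
    confidence: float  # hypothetical: perception model's confidence for this frame
    takeover: bool     # hypothetical: did the driver take over around this frame?


@dataclass
class UploadQueue:
    """Hypothetical on-vehicle buffer of frames flagged for the cloud."""
    frames: List[Frame] = field(default_factory=list)


def run_on_vehicle(frames: List[Frame], queue: UploadQueue,
                   conf_threshold: float = 0.6) -> List[int]:
    """Edge side: handle every frame in real time and flag likely corner cases."""
    handled = []
    for f in frames:
        handled.append(f.frame_id)  # stand-in for the real-time driving output
        if f.confidence < conf_threshold or f.takeover:
            queue.frames.append(f)
    return handled


def cloud_round(queue: UploadQueue, training_set: List[Frame]) -> int:
    """Cloud side: absorb flagged frames into the training pool.

    Labeling, retraining and redeployment happen offline and are not modeled.
    """
    n = len(queue.frames)
    training_set.extend(queue.frames)
    queue.frames.clear()
    return n


frames = [Frame(0, 0.95, False), Frame(1, 0.40, False), Frame(2, 0.90, True)]
queue, training_set = UploadQueue(), []
run_on_vehicle(frames, queue)
print(cloud_round(queue, training_set))  # 2 (frames 1 and 2 were flagged)
```

The trigger rule is the part a real system would tune most heavily; a simple threshold is used here only because it keeps the edge-side logic cheap enough to run alongside real-time inference.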

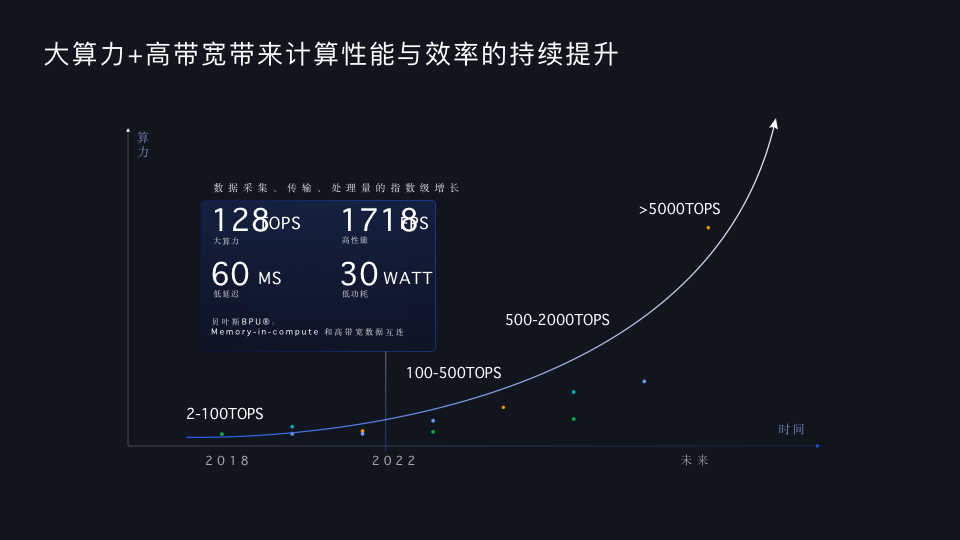

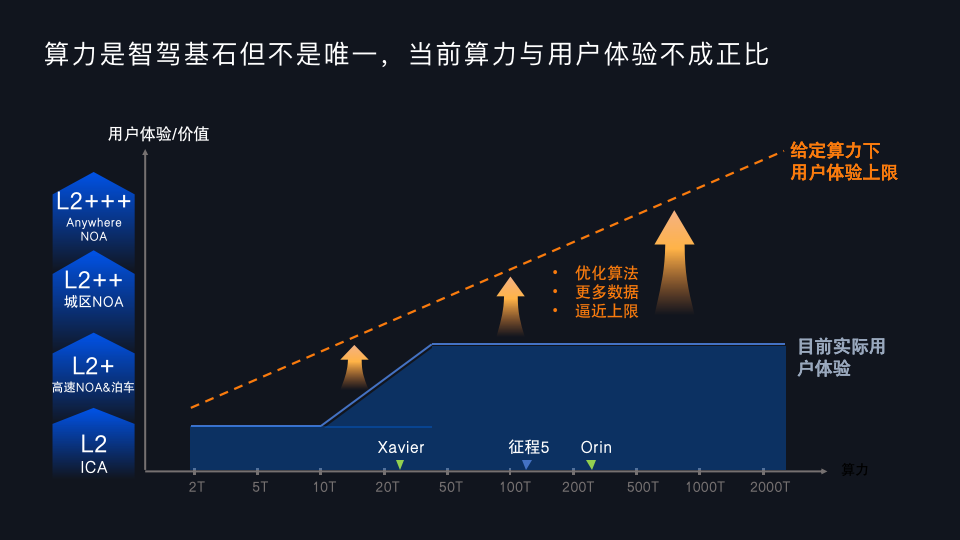

Computational Power and Bandwidth

On the vehicle side, computing power and bandwidth go hand in hand: the more computation is performed, the more data must be moved. This is especially true in the shift from CNNs to Transformers. Tesla has noticeably strengthened this aspect in its next-generation product plans, with bandwidth increasing roughly fivefold at the same computing power.

Early autonomous driving systems made it clear that image recognition and object recognition alone are far from enough. Recognition and decision-making must be carried out in 3D space across the video streams from multiple cameras.

On Journey 5, we have deployed some BEV (bird's-eye view) and Transformer algorithms. Feeds from multiple cameras enter the model simultaneously, and the data from different cameras is ultimately brought into a consistent BEV space. This enables trajectory prediction for other vehicles, prediction of the relationships between key road elements, and BEV perception of both moving and stationary objects.
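To make "consistency in a BEV space" concrete, the sketch below shows the purely geometric core of multi-camera BEV fusion under strong simplifications of our own: a flat ground plane, pinhole cameras with only a yaw rotation, nearest-pixel sampling, and plain averaging where cameras overlap. Production BEV models learn the view transform (for example with depth estimation or attention), so this is not Journey 5's method. The `Camera` fields, the scalar per-pixel features, and all camera parameters are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class Camera:
    # Pinhole intrinsics in pixels, plus a simplified extrinsic: position in the
    # vehicle frame (x forward, y left, z up) and yaw about the vertical axis.
    fx: float
    fy: float
    cx: float
    cy: float
    x: float
    y: float
    z: float
    yaw: float
    features: List[List[float]]  # hypothetical per-pixel scalar features, [v][u]

    def project(self, px: float, py: float, pz: float,
                min_depth: float = 0.1) -> Optional[tuple]:
        """Map a vehicle-frame point to an integer pixel (u, v), or None."""
        dx, dy, dz = px - self.x, py - self.y, pz - self.z
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        depth = c * dx + s * dy   # distance along the camera's optical axis
        right = s * dx - c * dy   # image u grows to the camera's right
        down = -dz                # image v grows downward
        if depth < min_depth:
            return None           # behind (or too close to) the camera
        u = self.fx * right / depth + self.cx
        v = self.fy * down / depth + self.cy
        height, width = len(self.features), len(self.features[0])
        if 0 <= u < width and 0 <= v < height:
            return int(u), int(v)
        return None


def sample_bev_point(cameras: Sequence[Camera], x: float, y: float,
                     ground_z: float = 0.0) -> Optional[float]:
    """Fuse one BEV cell: average the features of every camera that sees it."""
    values = []
    for cam in cameras:
        pix = cam.project(x, y, ground_z)
        if pix is not None:
            u, v = pix
            values.append(cam.features[v][u])
    return sum(values) / len(values) if values else None


def build_bev(cameras, xs, ys):
    """BEV grid of fused features: rows follow xs, columns follow ys."""
    return [[sample_bev_point(cameras, x, y) for y in ys] for x in xs]


def flat_image(value: float, width: int = 64, height: int = 48):
    return [[value] * width for _ in range(height)]


front = Camera(50, 50, 32, 24, 0.0, 0.0, 1.5, 0.0, flat_image(1.0))
rear = Camera(50, 50, 32, 24, 0.0, 0.0, 1.5, math.pi, flat_image(2.0))
print(build_bev([front, rear], xs=[-10, 10], ys=[-2, 0, 2]))
# [[2.0, 2.0, 2.0], [1.0, 1.0, 1.0]]
```

Projecting each BEV cell into every camera, rather than lifting every pixel out of each image, means every cell gets exactly one fused value, which is what makes the shared grid a convenient input for downstream prediction heads.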

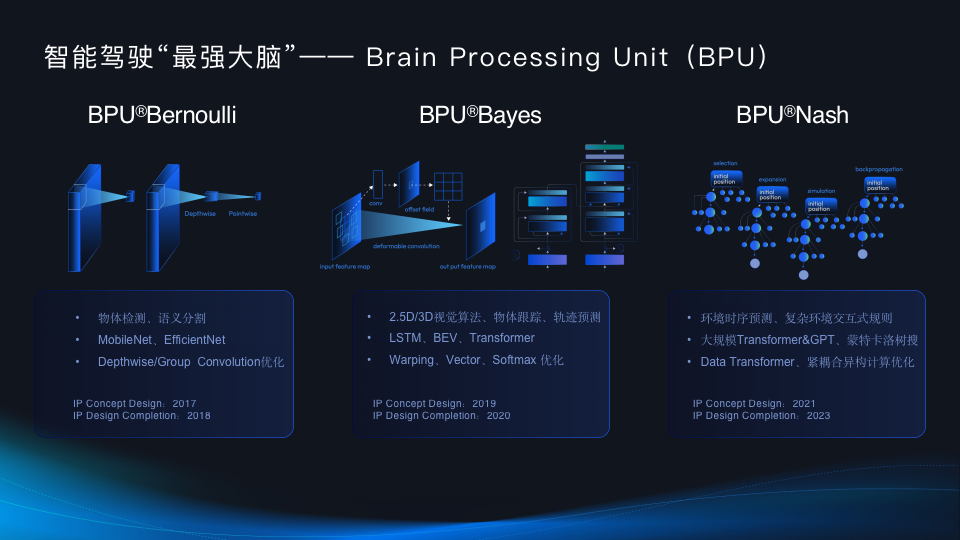

Horizon BPU Architecture

At its core, Horizon is a company that integrates hardware and software at the edge. Our BPU has gone through three generations. The first generation mainly targeted entry-level ADAS, so our algorithm optimization emphasized maximum efficiency and performance under strict power and cost constraints.

By the Bayes generation, we saw growing demand for higher-level autonomous driving, such as highway NOA, going beyond what entry-level ADAS offers. This demand brings high expectations for new computing capability, and because current software algorithm solutions still fall short of these higher-level requirements, it also strengthens the call for generality and flexibility.

For the next-generation BPU architecture, we plan to build on the current foundation and further optimize for Transformers, including continuous prediction over temporal sequences of the environment and modeling of complex interaction rules in the environment. Transformers offer more than efficiency gains: their ability to model long-range dependencies is something neither RNNs nor CNNs possess.
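A standard way to see the long-range-dependency argument is to count how many sequential layers or steps a signal must pass through to connect two positions a given distance apart. The sketch below is our own illustration of that textbook path-length comparison, assuming stride-1, undilated convolutions (dilation or pooling would shorten the CNN path) and a simple recurrent model.

```python
import math


def max_path_length(model: str, distance: int, kernel_size: int = 3) -> int:
    """Sequential layers/steps needed to connect two positions `distance` apart."""
    if distance == 0:
        return 0
    if model == "attention":
        return 1                      # every query attends to every key directly
    if model == "rnn":
        return distance               # information hops one time step at a time
    if model == "cnn":
        # each k-wide, stride-1 layer extends the receptive field by (k - 1)
        return math.ceil(distance / (kernel_size - 1))
    raise ValueError(f"unknown model type: {model!r}")


for model in ("attention", "rnn", "cnn"):
    print(model, max_path_length(model, 100))
# attention 1
# rnn 100
# cnn 50
```

A short path does not guarantee the dependency is learned, but it removes the need to stack many layers (or unroll many steps) before two distant positions can interact at all.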

Our next-generation Nash architecture is intended for the vehicle's central intelligent computing platform and is planned for Journey 6. First and foremost, the BPU's internal storage units form a tightly coupled system: these units can exchange data within the core without going through DDR.
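A rough way to see why keeping data inside the core matters is to count off-chip traffic for a chain of layers. The sketch below is a simplified model of our own, not a description of Nash: it ignores weights, assumes on-chip memory can hold every intermediate activation when tight coupling is used, and uses hypothetical activation sizes.

```python
from typing import Sequence


def ddr_traffic(activation_sizes: Sequence[int], keep_on_chip: bool) -> int:
    """Off-chip (DDR) traffic for a straight chain of layers.

    activation_sizes[0] is the network input, activation_sizes[-1] the final
    output, and the entries in between are intermediate activations. Weights
    are ignored, and on-chip memory is assumed large enough to hold every
    intermediate when keep_on_chip is True.
    """
    traffic = activation_sizes[0] + activation_sizes[-1]  # read input, write output
    if not keep_on_chip:
        # every intermediate is written out to DDR and read back by the next layer
        traffic += 2 * sum(activation_sizes[1:-1])
    return traffic


sizes_mib = [1, 4, 4, 1]  # hypothetical activation sizes in MiB
print(ddr_traffic(sizes_mib, keep_on_chip=False))  # 18
print(ddr_traffic(sizes_mib, keep_on_chip=True))   # 2
```

In this toy case most of the DDR traffic comes from round-tripping intermediates, which is the part that in-core data exchange can eliminate.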

Compared with convolutional networks, Transformers place higher demands on efficient data arrangement and on numeric precision. To meet these demands, we have built a dedicated data transformation engine that rearranges data efficiently through a hardware acceleration unit, and we support the required numeric precision through VAE and VPO.
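Much of a Transformer's precision sensitivity sits in normalization-style operations such as Softmax and LayerNorm, which the talk says are handled in floating point. The sketch below is a plain illustration of why, not Horizon's VPO implementation: Softmax needs exponentials and a division over a wide dynamic range (even in double precision, a naive version overflows on large logits), and LayerNorm needs a mean, a variance, and a reciprocal square root. The function names and the `gamma`/`beta`/`eps` defaults are our own choices.

```python
import math
from typing import List


def naive_softmax(x: List[float]) -> List[float]:
    exps = [math.exp(v) for v in x]       # overflows once any v exceeds about 709
    total = sum(exps)
    return [e / total for e in exps]


def stable_softmax(x: List[float]) -> List[float]:
    m = max(x)                            # shift so the largest term is exp(0) = 1
    exps = [math.exp(v - m) for v in x]
    total = sum(exps)
    return [e / total for e in exps]


def layer_norm(x: List[float], gamma: float = 1.0, beta: float = 0.0,
               eps: float = 1e-5) -> List[float]:
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    inv_std = 1.0 / math.sqrt(var + eps)  # needs a reciprocal square root
    return [gamma * (v - mean) * inv_std + beta for v in x]


logits = [1000.0, 999.0, 990.0]
print(stable_softmax(logits))
try:
    naive_softmax(logits)
except OverflowError:
    print("naive softmax overflowed")
print(layer_norm([1.0, 2.0, 3.0, 4.0]))
```

Integer vector units are well suited to the bulk multiply-accumulate work, while operations like these depend on exponentials, division and square roots whose inputs span a wide range, which is one reason to reserve floating-point hardware for them.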

Within VPO, we provide floating-point capability, which is applied to the most critical parts of the computation, Softmax and LayerNorm. VAE is an integer vector acceleration unit that provides a large amount of relatively cheap computing power. We have also defined multi-directional data flow: multiple TAEs within a BPU core can pass data among themselves efficiently, and multiple BPU cores can exchange data through L2M. In addition, a more refined TAE design reduces data bit flips, cutting power consumption by about 30%.

On Computational Power, Experience & Collaboration

In our interactions with OEMs and automakers, we have found that most of the problems they currently face come from the software side; hardware failures are quite rare. So, can autonomous driving be solved in the next two to three years? I believe most people have not yet seen a clear path to a solution.

As higher-level driver-assistance applications become more widespread, intelligent systems must account for both external road conditions and the state of the cabin at the same time. Ideally, a well-designed new human-machine interface would keep the vehicle safe with fast, timely responses while reducing fatigue for everyone on board.

Industry players are still not entirely clear on how to implement autonomous driving, and how to divide work and collaborate with software suppliers remains an open question that will require long-term engagement.

At Horizon, we still aim to provide an open software platform for applications. Currently, our cooperation models fall roughly into the following categories:

One mode is offering algorithm toolchains and reference models. Our AI development cloud, much like Tesla's steadily expanding cloud infrastructure, is expected to deliver efficient automated annotation, training, testing, and rapid deployment to vehicles as it collects more and more corner cases. Another mode is basic development components: by offering application development kits for intelligent driving, we aim to help customers complete development, integration, and verification efficiently.

In an intelligent driving system, the computing power a chip provides is ultimately a foundational capability. Improving the consumer experience requires chips and software to work together. The technical maturity of the chip industry is currently uneven, and we hope to keep optimizing our software algorithm architecture through more efficient, large-scale data collection to improve the user experience. The final experience of the overall system must combine capabilities from several areas, such as software algorithm solutions, computing power, and data.

For any company, shoring up its weaknesses and breaking through bottlenecks is especially important. The long-tail effect does not necessarily contribute positively to long-term returns.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.