Tesla Dojo has finally reached mass production.

According to a message posted by the official Tesla AI account in June, Dojo was due to enter mass production in July 2023, almost two years after it was first unveiled.

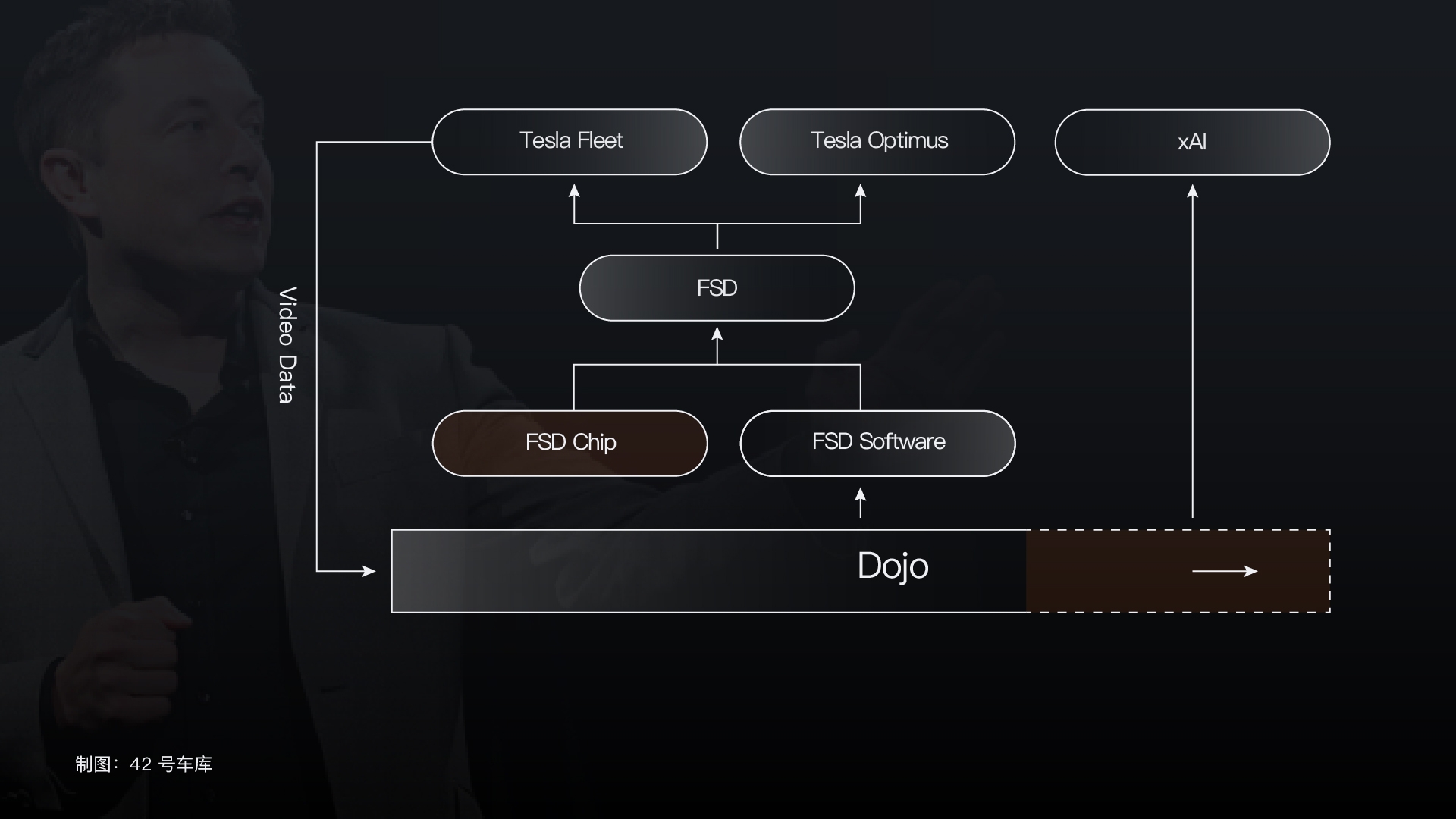

Yet, for Musk, Dojo isn’t merely a supercomputer for training autonomous driving models in the cloud anymore; it has become the computational foundation of Tesla AI’s entire business structure.

On a larger scale, Dojo serves as the embodiment of Musk’s AI ambitions.

The Technical Prowess Is World-Class

Since inception, Dojo has harbored Musk’s relentless ambition in technology.

In April 2019, at Tesla’s Autonomy Day, Musk first talked about Dojo, stating:

Tesla indeed has a significant project, which we call Dojo. It’s a super-powerful training computer aimed at being able to input massive data and train on the video level... Through the Dojo computer, a vast amount of video can be trained on a large scale without supervision.

Initially, Dojo was born to solve problems with model training for extensive video data.

The underlying premise here is: with the exponential growth of video data to be handled by Tesla due to the increase in vehicle sales and the rise in sophistication of autonomous driving functions, Tesla’s model training capabilities in the cloud have a higher demand, key among them being computational infrastructure.

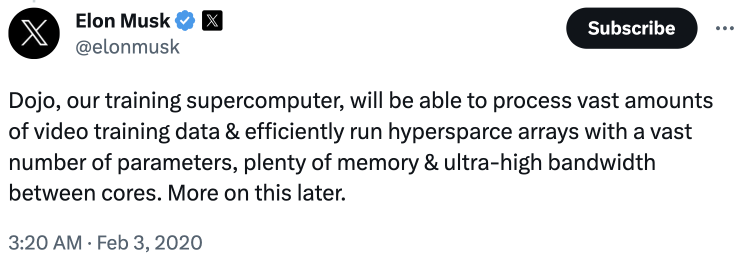

On this premise, Musk defined Dojo’s technical traits as:

Possessing massive computational power, able to process vast amounts of video training data, and efficiently handling hypersparse arrays with large parameters, robust memory, and ultra-high bandwidth.

Musk even hailed it as a “beast” (It’s a beast!).

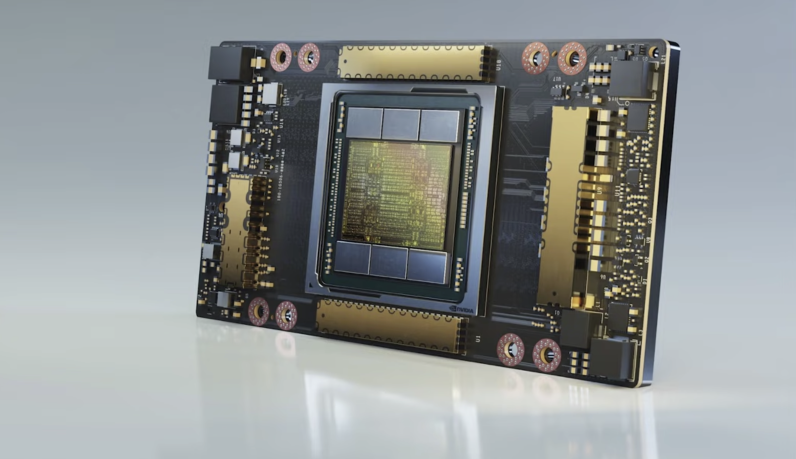

Given the information released at both 2021 and 2022 Tesla AI Day, Dojo indeed executed these technological specifications well.

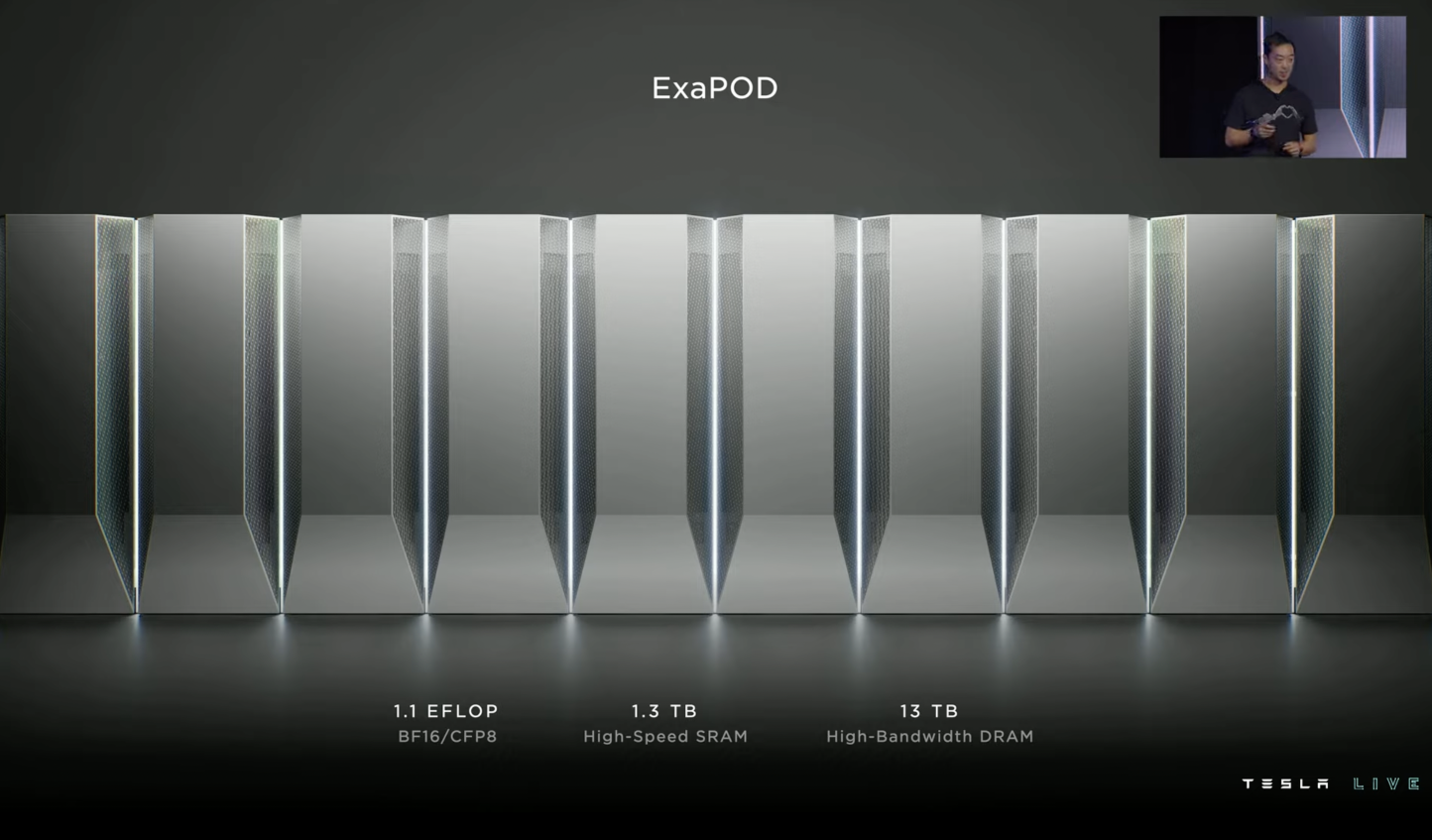

For instance, in terms of product form, the end unit for Dojo is a supercomputing cluster known as ExaPOD. It integrates 3,000 7nm-process D1 chips across 120 training tiles, and ultimately achieves:

- Up to 1.1 EFlops of peak BF16/CFP8 compute (1 EFlops is 10^18, or a billion billion, floating-point operations per second);

- 1.3 TB high-speed SRAM;

- 13 TB high-bandwidth DRAM.
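The cluster totals above can be broken down into rough per-tile and per-chip figures. The following is a minimal sketch, not an official specification: it assumes decimal units (1 EFlops = 10^18 Flops, 1 TB = 10^12 bytes) and an even split of compute and SRAM across chips, and the function name `exapod_breakdown` is purely illustrative.

```python
# Back-of-the-envelope breakdown of the ExaPOD totals quoted in the article.
# Assumptions (not from the article): decimal units, and compute/SRAM divided
# evenly across all D1 chips.

CHIPS = 3_000        # D1 chips per ExaPOD
TILES = 120          # training tiles per ExaPOD
PEAK_EFLOPS = 1.1    # peak BF16/CFP8 compute
SRAM_TB = 1.3        # total high-speed SRAM
DRAM_TB = 13         # total high-bandwidth DRAM


def exapod_breakdown():
    """Return per-tile and per-chip figures implied by the cluster totals."""
    return {
        "chips_per_tile": CHIPS // TILES,
        "tflops_per_chip": PEAK_EFLOPS * 1e18 / CHIPS / 1e12,
        "sram_mb_per_chip": SRAM_TB * 1e12 / CHIPS / 1e6,
        "dram_to_sram_ratio": DRAM_TB / SRAM_TB,
    }


if __name__ == "__main__":
    for name, value in exapod_breakdown().items():
        print(f"{name}: {value:.1f}")
```

Under these assumptions, each training tile holds 25 chips, and each chip contributes roughly 367 TFlops of peak compute and about 433 MB of SRAM, while the cluster carries ten times as much DRAM as SRAM.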

Note that the ExaPOD, as a supercomputer cluster, is not the final form of this supercomputer — theoretically, it can be scaled up to meet Tesla’s greater computational needs and thus to “pile up” higher AI computational performance.

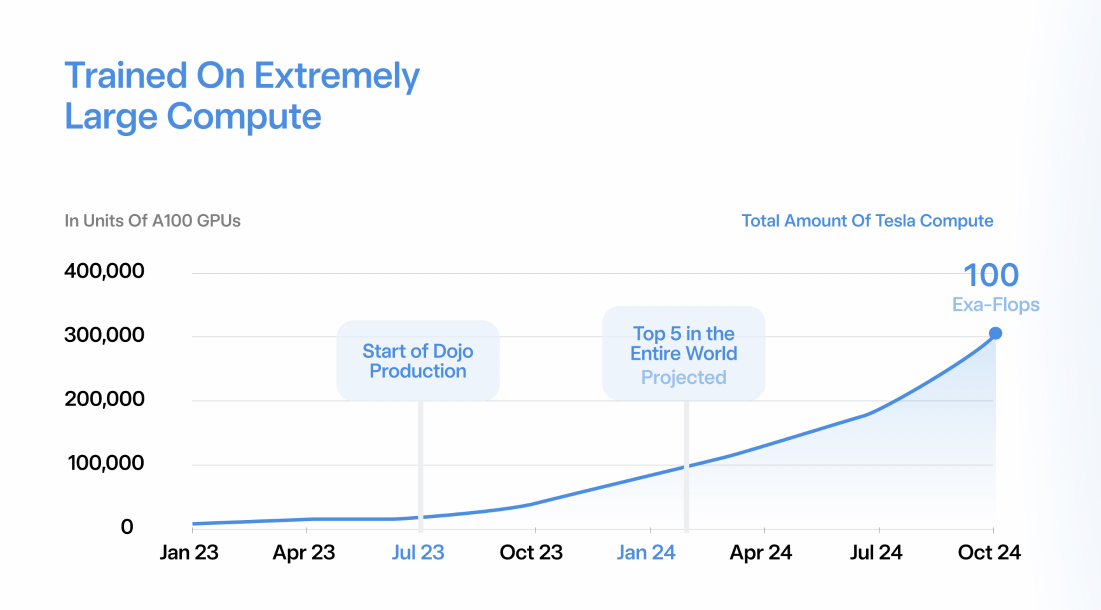

In fact, according to Tesla’s computational power development plan released in June this year, Dojo will rank in the top five globally in the first quarter of next year, and will reach 100 EFlops of computing power by October of next year.

Of course, beyond hardware, Tesla has also invested considerably in the software that runs Dojo.

In fact, adhering to the principles of software-hardware integration and full-stack in-house development, Tesla has built a dedicated full-stack software system for the Dojo project from a combination of self-developed and open-source software, including low-level drivers, a compiler engine, PyTorch plugins, and upper-layer neural network models.

Besides, in building the Dojo supercomputer, Tesla had to solve not only technical difficulties in CPU, memory, bandwidth, and software, but also the power consumption and cooling problems that come with operating it, and Elon Musk specifically emphasized that the latter is a serious challenge in itself.

This is why Elon Musk has spent several years building Dojo.

Moreover, according to what Tesla said on AI Day last year, mass production of Dojo was originally expected to begin in the first quarter of this year. It actually began only in July, a significant delay. The reason is that its cost reduction fell short of expectations.

Therefore, from a technical standpoint, Dojo undoubtedly represents a major challenge that Tesla set for itself in AI and autonomous driving. It concentrates Tesla’s many technical explorations in building supercomputer clusters.

It is worth mentioning that, even before Dojo, Tesla was already deeply invested in AI and autonomous driving: both its self-developed algorithms and its in-car FSD chips displayed a strength that amazed the industry.

With the release of Dojo, however, Tesla has become a genuine AI company with full-stack in-house development and vertical integration, spanning cloud to device, chip to algorithm, and hardware to software. Such companies are rare worldwide.

For this reason, the technical expertise Tesla demonstrates in the Dojo project is world-class.

Tesla’s Multiple Considerations for Developing Dojo In-House

Tesla’s in-house development of Dojo is not merely a matter of technology.

In fact, prior to Dojo, Tesla had already deployed a supercomputer for cloud training, but it was built on Nvidia GPUs.

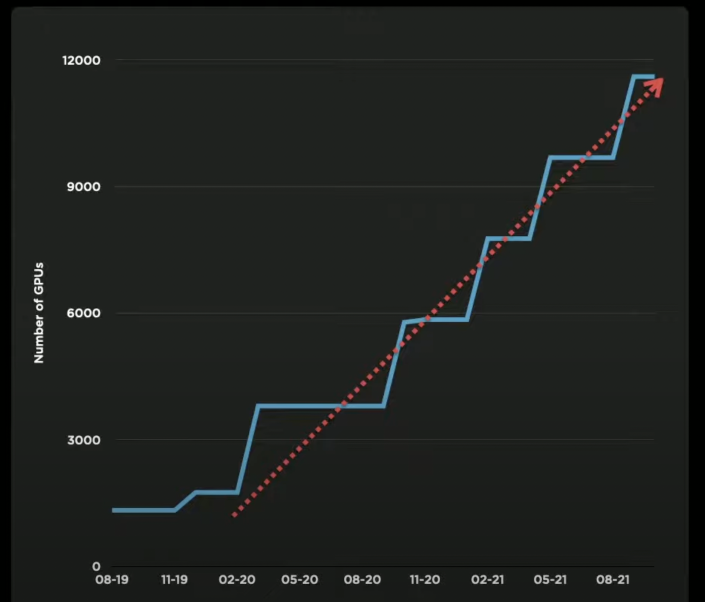

In August 2019, this supercomputer used fewer than 1,500 GPUs. Over the following two years, however, as Tesla’s data volume grew exponentially, the number of GPUs it required multiplied.

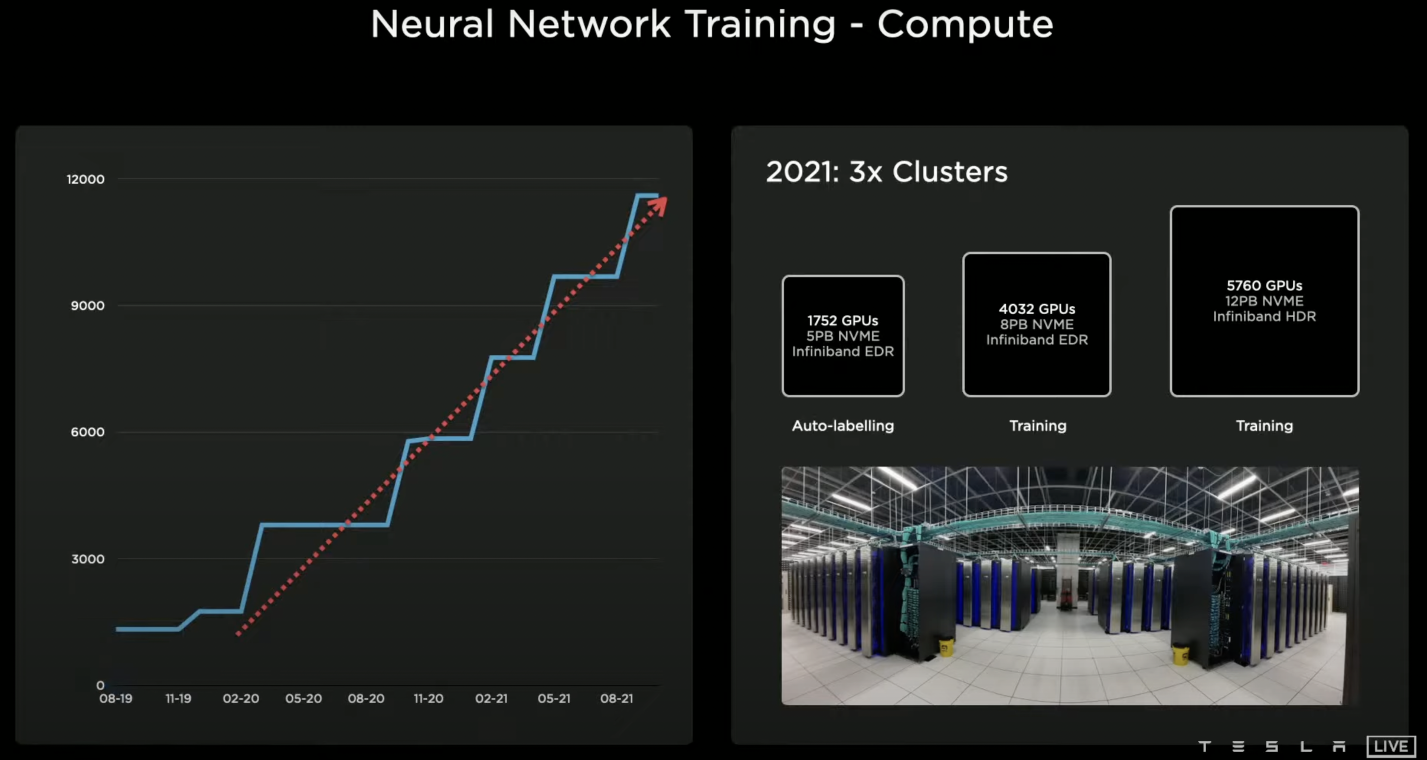

Interestingly, during that time, Tesla bought Nvidia GPUs in bulk, including the A100, which was released in 2020 and is built on TSMC’s 7 nm process. By August 2021, Tesla’s cloud supercomputer already had 11,544 GPUs, seven to eight times as many as two years earlier.

According to statements from Tesla at that time, this was the fifth-highest ranked supercomputer globally.

This supercomputer comprises three computing clusters.

At CVPR 2021, Andrej Karpathy, who was then in charge of Tesla AI, specifically introduced the largest cluster, which has 5,760 Nvidia A100 GPUs (80GB memory capacity) and delivers 1.8 EFlops of AI computing power.

Of the other two computing clusters, one is used for training, with 4,032 GPUs, and the other is used for automatic labeling, with 1,752 GPUs.
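The figures quoted for the three clusters can be cross-checked with simple arithmetic. The sketch below is only a sanity check on the numbers as reported: it treats "fewer than 1,500" as an upper bound of 1,500 GPUs for 2019, assumes decimal units, and uses the illustrative function name `cluster_summary`.

```python
# Consistency check on the GPU cluster figures quoted in the article.
# Assumption: 1 EFlops = 1e18 Flops; the 2019 GPU count is an upper bound.

CLUSTER_GPUS = {
    "largest_a100": 5_760,
    "training": 4_032,
    "auto_labeling": 1_752,
}
TOTAL_GPUS_AUG_2021 = 11_544    # total reported for August 2021
GPUS_AUG_2019_UPPER = 1_500     # "fewer than 1,500" in August 2019
LARGEST_CLUSTER_EFLOPS = 1.8    # AI compute reported for the largest cluster


def cluster_summary():
    """Sum the clusters and derive growth and per-GPU throughput."""
    total = sum(CLUSTER_GPUS.values())
    per_gpu_tflops = (
        LARGEST_CLUSTER_EFLOPS * 1e18 / CLUSTER_GPUS["largest_a100"] / 1e12
    )
    return {
        "total_gpus": total,
        "matches_reported_total": total == TOTAL_GPUS_AUG_2021,
        "min_growth_vs_2019": total / GPUS_AUG_2019_UPPER,
        "tflops_per_gpu_largest": per_gpu_tflops,
    }


if __name__ == "__main__":
    for name, value in cluster_summary().items():
        print(f"{name}: {value}")
```

The three clusters do add up to the reported 11,544 GPUs, which is at least about 7.7 times the 2019 count. The largest cluster works out to 312.5 TFlops per GPU, in line with Nvidia's published peak of 312 TFlops for dense FP16/BF16 tensor operations on the A100, which suggests the 1.8 EFlops figure is a theoretical peak rather than a measured result.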

In fact, for any company with heavy AI computing needs, buying GPUs from Nvidia to build its own computing system is entirely normal. At the time, almost every cloud computing player was buying Nvidia GPUs, including tech giants like Microsoft, Amazon, Google, Alibaba, Tencent, and Baidu, and numerous internet companies in China and the US also needed GPUs to build their own AI computing power. So it was quite natural for Tesla to buy GPUs from Nvidia at first.

So why did Tesla need to develop Dojo itself?

The first reason is to improve efficiency.

Although Nvidia’s GPUs offer formidable AI computing power, they are general-purpose, which makes them less efficient when handling a single type of task.

For Tesla’s FSD business, the supercomputers used for cloud training predominantly handle one kind of task, namely video training. By developing a dedicated chip and software to build a new computing system, Tesla can therefore achieve a significant efficiency boost.

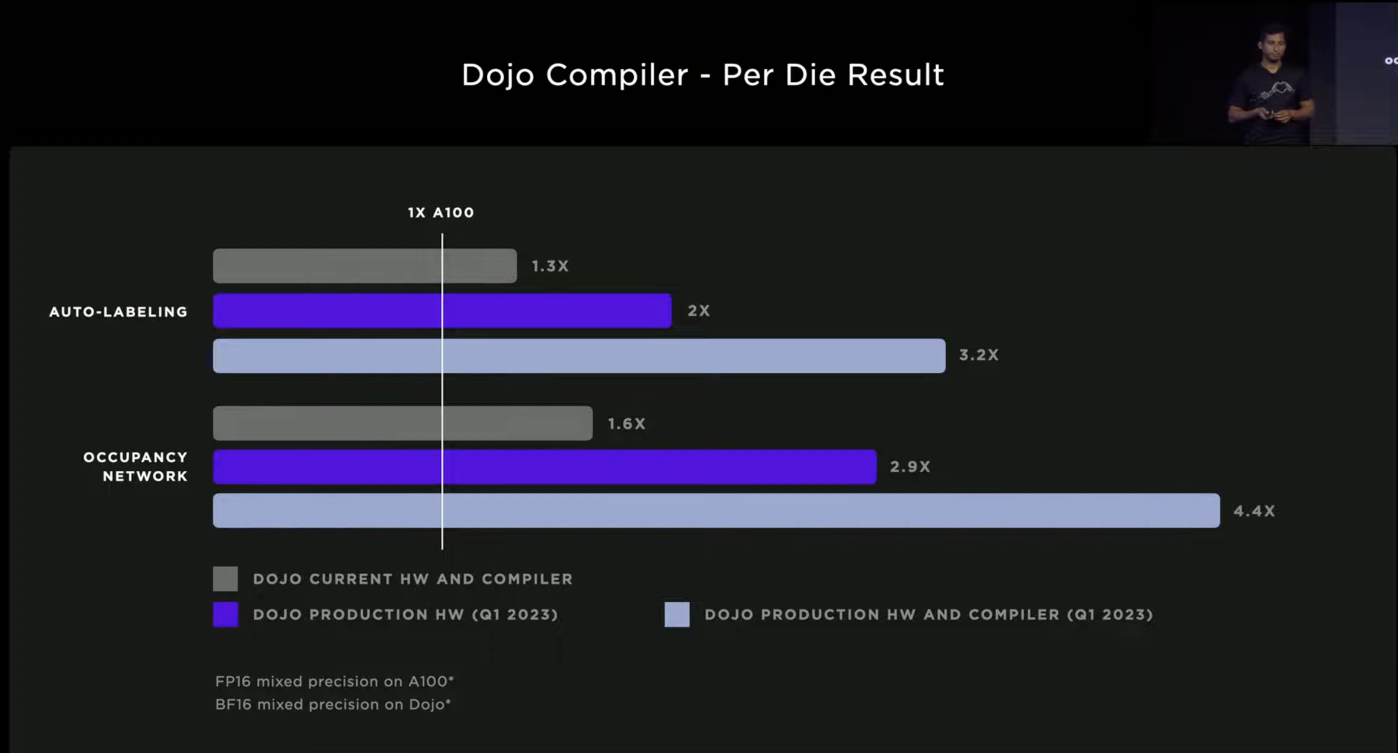

According to data released by Tesla on AI Day 2022, compared to Nvidia’s A100 GPU, each D1 chip (with Tesla’s in-house compiler) achieves 3.2 times the computational performance in auto-labeling tasks, and up to 4.4 times the computational performance in occupancy network tasks.
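To give a feel for what these per-chip ratios imply, the following sketch estimates how many D1 chips would match a given number of A100 GPUs on each task. It is a rough illustration only: it assumes throughput scales linearly with chip count (ignoring interconnect, memory, and software overheads), and the function `d1_chips_needed` and the 1,000-GPU example are hypothetical, not figures from Tesla.

```python
import math

# Per-chip speedups of D1 over A100, as quoted from Tesla AI Day 2022.
D1_SPEEDUP_VS_A100 = {
    "auto_labeling": 3.2,
    "occupancy_network": 4.4,
}


def d1_chips_needed(a100_count: int, task: str) -> int:
    """Estimate the D1 chips needed to match `a100_count` A100 GPUs on `task`.

    Assumes perfectly linear scaling, which real clusters do not achieve.
    """
    return math.ceil(a100_count / D1_SPEEDUP_VS_A100[task])


if __name__ == "__main__":
    # Hypothetical workload sized at 1,000 A100 GPUs.
    for task in D1_SPEEDUP_VS_A100:
        print(task, d1_chips_needed(1_000, task))
```

Under the linear-scaling assumption, a workload that occupies 1,000 A100 GPUs would need about 313 D1 chips for auto-labeling and about 228 for occupancy networks.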

Looking at the overall goals, Tesla’s official data shows that, at the same cost, the Dojo supercomputer delivers 4 times the performance and 1.3 times the energy efficiency, in one-fifth of the footprint.

The second reason, of course, is to reduce costs.

Some in the industry calculated that by August 2021, to build a set of supercomputers for the cloud, Tesla invested over $300 million in hardware alone, with a significant portion going to Nvidia. Musk himself commented on Twitter stating Nvidia’s GPUs are too expensive.

Moreover, Tesla’s self-development of Dojo is intended for vertical integration, lessening their dependency on Nvidia’s GPU.

In reality, after Nvidia released the A100 GPU, it quickly became sought-after across the AI industry, attracting numerous cloud computing and internet companies, Tesla among them. With A100 production capacity limited, however, the order of allocation mattered, and Tesla did not receive enough supply to meet the computational demands driven by its exponential data growth.

Interestingly, although Tesla once collaborated with Nvidia on cockpit chips and intelligent driving chips, the two parted ways, particularly on intelligent driving chips, where Tesla insisted on developing its own. Although the GPU business continues, Tesla clearly isn’t expecting any favors from Nvidia. From this perspective, Tesla’s decision to develop Dojo in-house is a significant move to maintain its strategic autonomy.

Propping up Musk’s ‘AI Empire’

From any angle, Dojo, as a hardcore tech product launched by a commercial company (especially one like Tesla, that places such a great emphasis on cost control), undoubtedly has very deliberate business considerations.

After all, as an integrated hardware and software product, Dojo has required significant investment throughout its development, from basic chip design to software adaptation to the computing cluster system.

Interestingly, during the most recent earnings call, someone asked Musk how much money was spent on the development of the Dojo project. Musk did not publicly disclose spending on the Dojo project, but stated that he will invest 1 billion dollars in the Dojo project next year because Tesla does indeed have an astonishing amount of video data to train.

Furthermore, Musk emphasized that anyone wanting to copy Tesla’s Dojo project would have to spend billions of dollars on training compute. A reasonable inference is that Tesla itself has already invested on the order of billions of dollars in the project.

So, why the huge investment given the high cost of the Dojo project?

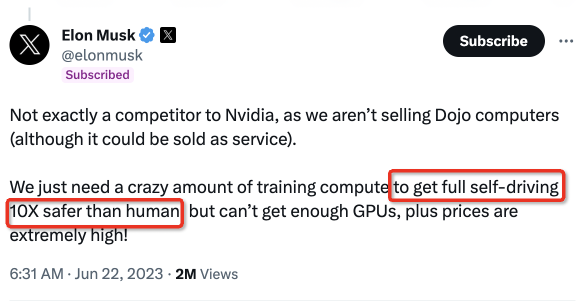

Musk’s answer: to make Full Self-Driving (FSD) safer than human driving.

During the earnings call, Musk said:

Tesla’s goal for the FSD is not just to be as good as a human, but ten times, if not hundred times better. We aim to achieve the highest possible safety. This means there’s a need for an astounding amount of video and computational demand… Hence, we intensely need it for video training. In the progress of FSD, the fundamental limitation is training; if we have more computation power for training, we would finish faster.

He also mentioned that given the incredible volume of data to process, developing their own chip is clearly the best solution.

It’s evident that the purpose of the Dojo project is to improve the safety and autonomous driving capabilities of FSD. Musk had previously stated that if FSD was strong enough, Tesla could sell cars at zero profit — such confidence clearly comes from the Dojo project’s enhancement of FSD.

It’s worth noting that as Tesla’s car sales increase, the upfront costs of the Dojo project and FSD will be spread across a larger fleet. Given that Tesla has set a goal of 20 million annual sales by 2030, it clearly has confidence in recouping costs through volume. From the outset, therefore, Dojo was fundamentally a forward-looking, long-term investment.

Naturally, as a business-savvy entrepreneur, Musk has also considered other ways to dilute the cost of the Dojo project: as early as 2020, he hinted at offering Dojo’s computing power as a subscription service, much like Amazon’s AWS.

Should this subscription model materialize, it will undeniably serve as an effective form of revenue generation for Tesla in covering the initial costs of the Dojo project.

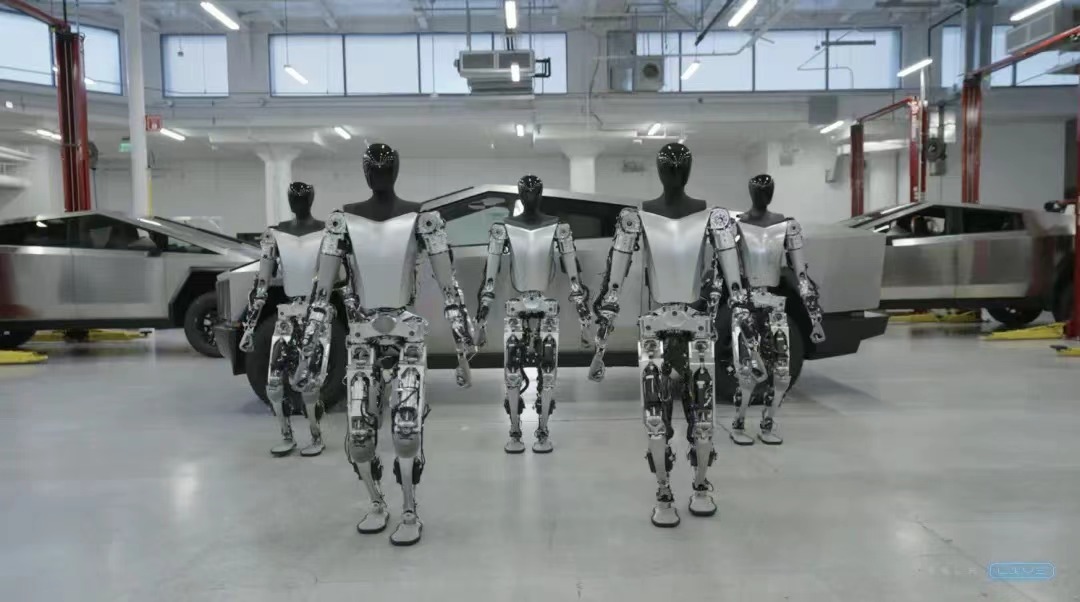

In addition, if we divert our attention from Tesla’s automotive focus and explore the wider scope of the Dojo project, we see Tesla’s humanoid robot business, Optimus, operating on the FSD software. As part of Musk’s long-term commercial vision, Optimus is a crucial part of the Tesla value system and its production quantity might even far exceed Tesla’s vehicle count.

Musk further asserted that a portion, or perhaps even all, of Tesla’s long-term value would be represented by Optimus.

From this perspective, the Dojo project not only supports Tesla’s FSD in its car business, but also caters to the potentially larger number of FSD needs in Tesla’s humanoid robot business. The broader the implementation of FSD in both car and robot businesses, the greater the potential value of the Dojo project.

This is why Musk, who places a high emphasis on cost control, is investing heavily in the Dojo project. Perhaps his audacity to do so stems from his firm belief and investment in the future he envisions.

Moreover, it’s worth noting that for Musk, the value of Dojo could even spill over beyond Tesla.

For instance, in June this year, Musk stated on Twitter:

Dojo V1 is highly optimized for volume video training, not a general-purpose AI; however, Dojo V2 will overcome such limitation.

This signifies that at some point in the future, Dojo could be used for artificial general intelligence (AGI) work in addition to supporting FSD. AGI is exactly what Musk’s newly established company xAI focuses on, and he has publicly described xAI as a “competitor to OpenAI”.

Therefore, from Musk’s perspective, the Dojo project is indeed playing the role of a bearer for Musk’s AI ambition, and it is also part of the ‘infrastructure’ of the ‘AI Empire’ built by Musk.

Interestingly, during the Q2 earnings call, Musk made it explicit that Tesla would continue to use Nvidia hardware while also pushing the Dojo project forward. He emphasized his great ‘respect’ for Jensen Huang and Nvidia and their incredible work.

At present, Tesla unquestionably relies on Nvidia, as it has many AI tasks to run on GPUs.

Nonetheless, the moment Musk decided to commit to the Dojo project signified an inevitable divergence between Tesla and Nvidia in terms of cloud computing and AI. This is because Musk is the kind of person who always likes to firmly hold his future, fate and aspirations in his own hands, and his enormous ambition in the AI field is certainly no exception.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.