One day in January 2016, Jensen Huang arrived at Tesla’s California office carrying a case and leading a team to meet with Elon Musk.

The case contained Nvidia’s first-generation self-driving computing platform, DRIVE PX. They brought it because both Nvidia and Tesla wanted to verify what it could actually do. Musk had a neural network model trained by the Autopilot team run on the platform, and the results were satisfactory.

However, as a former Nvidia engineer from China who attended the meeting recalls, Tesla’s neural network model itself was simply not up to par, trailing even the results Mobileye achieved with a purely rule-based approach.

This scene captures the halting, tentative steps self-driving technology took over the past decade: far from stunning or eye-catching.

Today, self-driving technology is undergoing a historic transformation, moving out of the concept phase and gradually reaching the mass market as real products. A range of carmakers, including US-based Tesla and China’s new EV makers, are pushing ever harder to mass-produce and commercially deploy navigation-assisted driving on city streets.

Developments are moving so fast that it is easy to lose track: step by step, how exactly did this wave of self-driving technology get to where it is today?

Two Unavoidable Breakups

Busy Andrej Karpathy

In September 2012, Andrej Karpathy, then a 25-year-old Ph.D. student at Stanford, was swamped with work.

The reason was that his advisor, the renowned computer scientist Professor Fei-Fei Li, was organizing that year’s ImageNet Large Scale Visual Recognition Challenge (the ImageNet competition for short). As one of the most closely watched events in computer vision, the competition attracted top AI teams from around the world, and her entire team was mobilized and fully committed to it throughout.

As a member of Fei-Fei Li’s team, Karpathy was naturally involved, and at 6:30 a.m. on October 5, 2012, he announced the results of the ImageNet competition on Twitter.

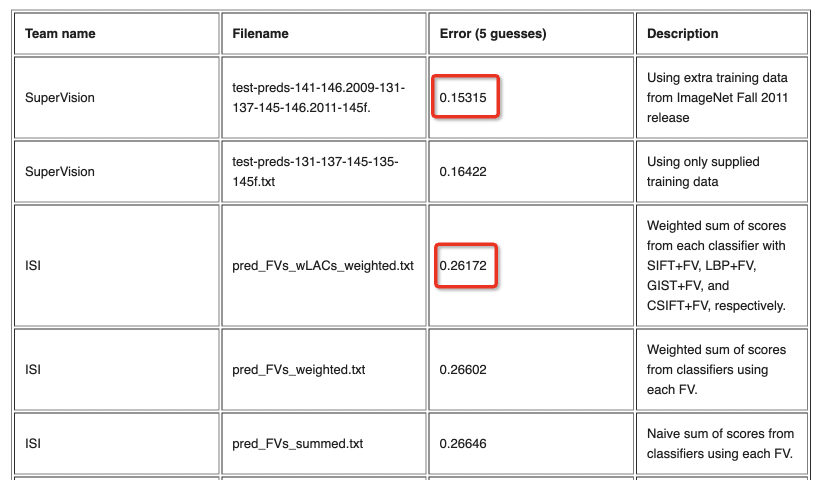

The results showed that the 2012 ImageNet competition was won by a three-person team called “SuperVision”, which achieved a top-5 error rate of just 15.3% in the image classification task, a staggering 10.8 percentage points lower than the runner-up.

Interestingly, the winning SuperVision team had a connection with Andrej Karpathy.

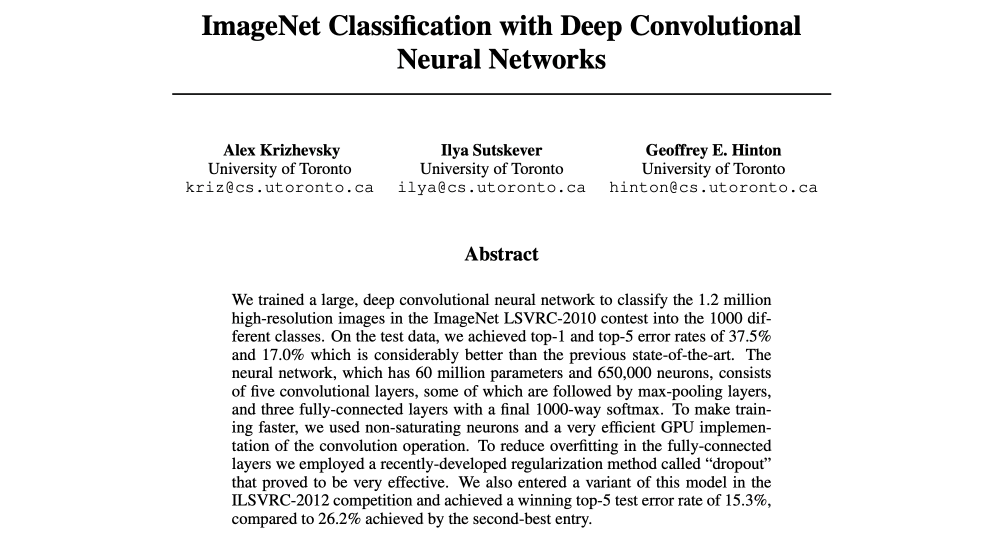

The three members of the SuperVision team were the renowned computer scientist Professor Geoffrey Hinton, known as the ‘Father of Deep Learning’, and his students Alex Krizhevsky and Ilya Sutskever (currently the Chief Scientist of OpenAI and the core figure behind GPT). All three were from the University of Toronto in Canada.

Notably, Karpathy had done his undergraduate studies at the University of Toronto, where he took Professor Geoffrey Hinton’s course on deep learning. He was therefore well acquainted with the team and naturally followed the paper they presented at the competition closely.

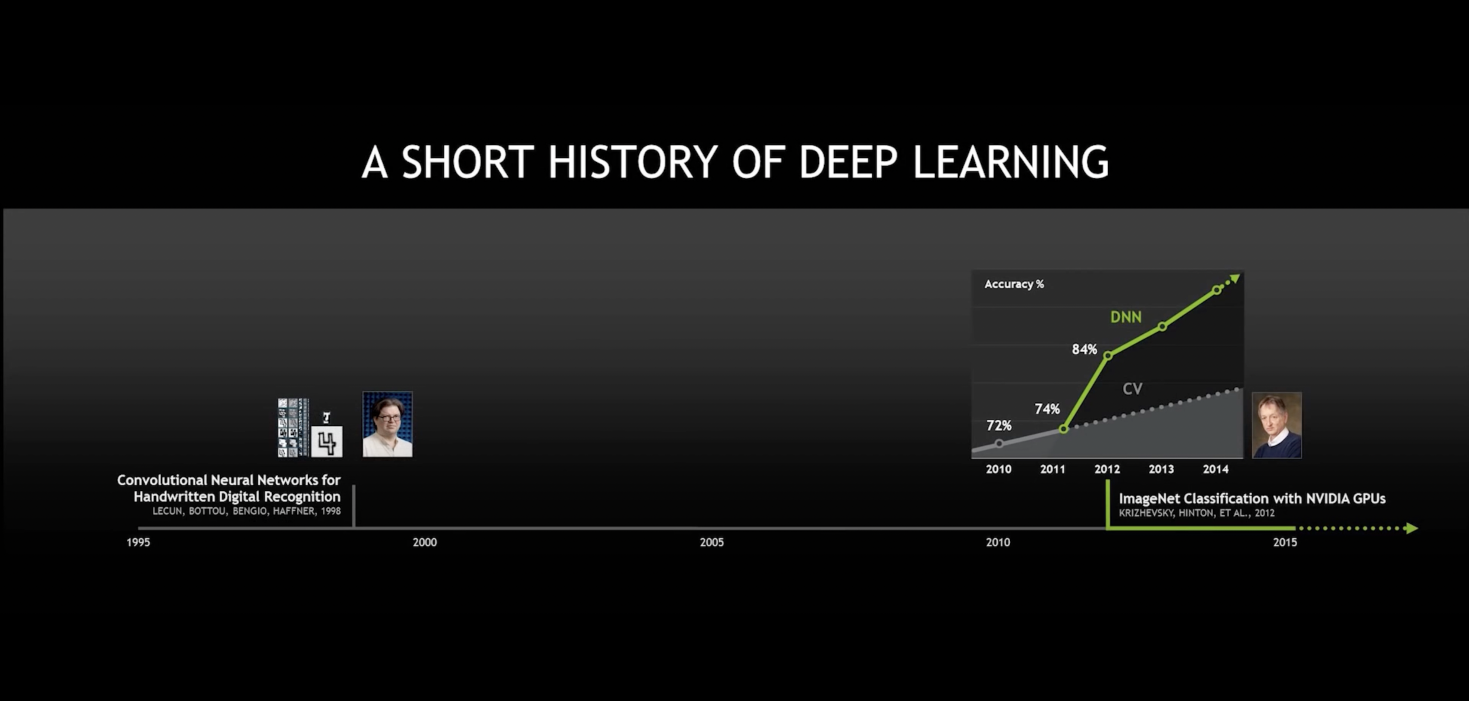

The paper, titled “ImageNet Classification with Deep Convolutional Neural Networks,” describes the large-scale deep convolutional neural network (DCNN) that the SuperVision team used in the competition. It also highlights the crucial hardware used to train this network: two Nvidia GTX 580 3GB GPUs.

This large-scale deep convolutional neural network later became widely known as AlexNet.

Karpathy already sensed the power of AlexNet at the time. On Twitter, he praised the results as an impressive demonstration of large-scale deep networks + Dropout + GPUs.

Of course, he had not yet grasped AlexNet’s profound significance as we do today: AlexNet and this paper sparked a major revolution in computer science and AI, made the convolutional neural network (CNN) the central model of computer vision for years, and ushered in a breakthrough in deep learning that spurred growth in many areas, including autonomous driving.

Notably, Yu Kai, who was at the forefront of AI development at the time and had won the ImageNet competition in 2010, did recognize the significant value of this paper and of AlexNet.

Yu Kai was directly involved: in the winter of 2012, he represented Baidu in the fierce bidding for the three-person AlexNet team. Four companies took part, Google, Baidu, Microsoft, and DeepMind, and Google ultimately won. At the time, Yu Kai did not yet realize that his long-term future would lie in autonomous driving.

Back to Andrej Karpathy.

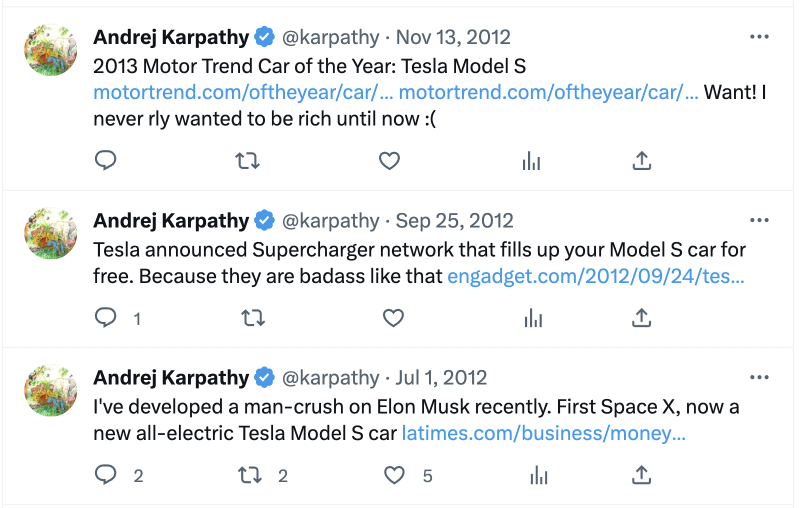

That autumn, while busy with ImageNet competition matters, he was also taken with the newly mass-produced Tesla Model S. He even posted about the Model S a few days before the competition results were announced, and he expressed his admiration for Musk on Twitter as well.

Back then, he could never have imagined that five years later he would head Tesla’s AI team, reporting directly to Musk.

Musk’s Reluctant Compromise

In 2013, when Musk decided to lead Tesla into the autonomous driving race, he found himself without a satisfactory solution and had to make a reluctant compromise.

Much of Musk’s interest in autonomous driving was influenced by Google.

In May 2013, Musk first mentioned in an interview that Tesla was considering adopting autonomous driving technology. At that time, Google’s autonomous driving car project (Google Self-Driving Car Project) had been underway for three or four years—a project Musk was no stranger to.

After all, the project was launched by the Google X Lab, led by Google co-founder Sergey Brin. Brin, a close friend of Musk’s and an early investor in Tesla, gave Musk a deep understanding of Google’s technology, and Musk had many discussions with Google’s team about autonomous driving.

At that time, Google’s self-driving car project followed a basic paradigm of algorithm + computing chip + sensor. Google developed the algorithm itself. For computing chips, it used Intel’s server-grade Xeon processors and an Arria FPGA from Altera (for machine vision). For sensors, Google opted for very expensive LiDAR.

Google’s goal was to achieve complete L4 level self-driving functionality.

Elon Musk agreed with the concept of full self-driving, seeing it as a natural and necessary extension of Tesla’s active safety approach. However, he preferred the term “Autopilot” to Google’s “self-driving”.

But Musk was unhappy with Google’s LiDAR solution, stating:

Google’s perception scheme is too expensive. It’s better to use an optical solution, such as cameras with software that can tell what’s happening at a glance… I think Tesla will build its own Autopilot system, but it will be camera-based, not LiDAR-based. However, we might work with Google on something.

In September 2013, Musk announced that Tesla would officially join the self-driving race and began recruiting self-driving engineers. He emphasised that Tesla would develop the technology in-house rather than adopt another company’s.

At the time, there were two main paths within the realm of self-driving or driver-assist technology:

- One was Google’s solution, which put expensive LiDAR sensors and chips in the car and used a proprietary algorithm to go straight for L4. This approach was extremely aggressive, typical of internet companies, and its main drawback was high cost.

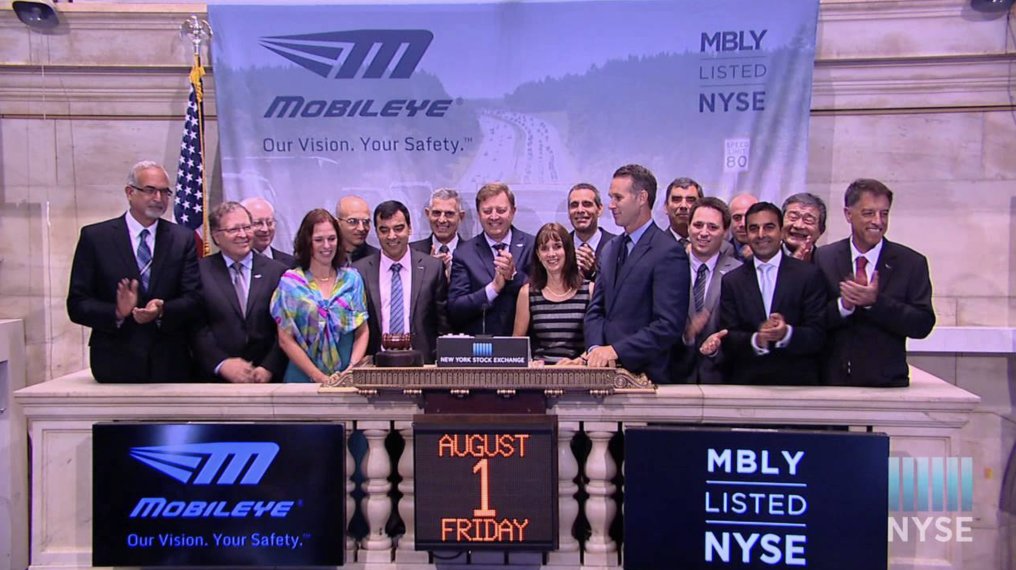

- The other came from Israel’s Mobileye, which used a cheaper camera-based solution, integrating its vision algorithms into its chips and selling them to carmakers as a package. This solution had been validated over more than ten years and took a steady, incremental approach. It had already won many automaker customers, but its downside was Mobileye’s strong desire for control, which limited automakers’ in-house algorithm development.

For Musk at the time, neither solution was satisfactory. On one hand, he was acutely aware of Tesla’s mass-production costs and could not accept the high cost of LiDAR. On the other, he wanted to deliver self-driving as soon as possible with in-house algorithms, without being held back by Mobileye’s slow pace.

After careful consideration, the highly cost-conscious Musk had no choice but to compromise and reluctantly partner with Mobileye, which held a very strong position at the time.

In October 2014, a year after announcing Tesla’s entry into autonomous driving, Musk announced that Model S cars built since September were equipped with hardware capable of supporting Autopilot: a forward long-range radar, a forward-looking camera, and twelve ultrasonic sensors covering 360 degrees. Functionally, the system could keep the car in its lane and change lanes automatically.

Musk did not name the supplier at the time; only when Mobileye later disclosed that it was supplying Tesla did everyone find out. In fact, the seeds of trouble in the partnership had already been planted.

Jensen Huang was in a hurry

Jensen Huang watched Musk’s partnership with Mobileye with anxiety.

Some background: Nvidia had long been a Tesla supplier. The 12.3-inch LCD instrument cluster and the 17-inch central infotainment touchscreen of the Model S, which entered mass production in 2012, ran on two different Nvidia Tegra chips.

Huang was anxious because he hoped Nvidia could also enter autonomous driving and become Tesla’s supplier in that field.

Around the time AlexNet won the ImageNet competition, in 2012 and 2013, several teams approached Nvidia about using GPUs for deep-learning-based computer vision. This made Huang realize that deep learning might be on the verge of an explosion, and that Nvidia’s GPUs could open up a vast market by supporting deep learning and computer vision algorithms.

According to an engineer who once worked at Nvidia, Huang was initially not keen on the autonomous driving market because he saw its profit margins as low, especially compared with the server business, whose margins ran from 60% to 70%. However, after Nvidia’s setbacks in smartphones (for example, its collaboration with Xiaomi), Huang’s focus on edge deployment led him to explore other fields, including security, robotics, and automotive.

After weighing these options, Huang concluded that, given the high power consumption of Nvidia’s chips, autonomous driving in electric vehicles was Nvidia’s best opportunity at the edge.

In November 2013, during an earnings call, Jensen Huang shared his views on the development of the automotive industry:

Today, cars should really be viewed through the lens of automation. Modern cars are connected vehicles, so digital computing matters more than ever. Our digital clusters give the automotive industry a chance to offer a modern driving experience in place of traditional mechanical gauges. In addition, thanks to GPGPU, the programmable GPUs in our processors will make all sorts of new driver-assistance features possible. With capabilities in computer vision, driver assistance, artificial intelligence, and more, we are going to make cars safer and driving more enjoyable. So, from digital clusters to infotainment systems to future driver-assistance systems, cars will carry more than one GPU.

Huang also noted that the success of Tesla’s electric vehicles was leading more automakers to follow in its footsteps and actively build mobile computing capabilities into their vehicles. He emphasized that NVIDIA had invested heavily in this area for many years and therefore expected continued success.

Finally, Huang closed with a thought-provoking phrase: “design wins.”

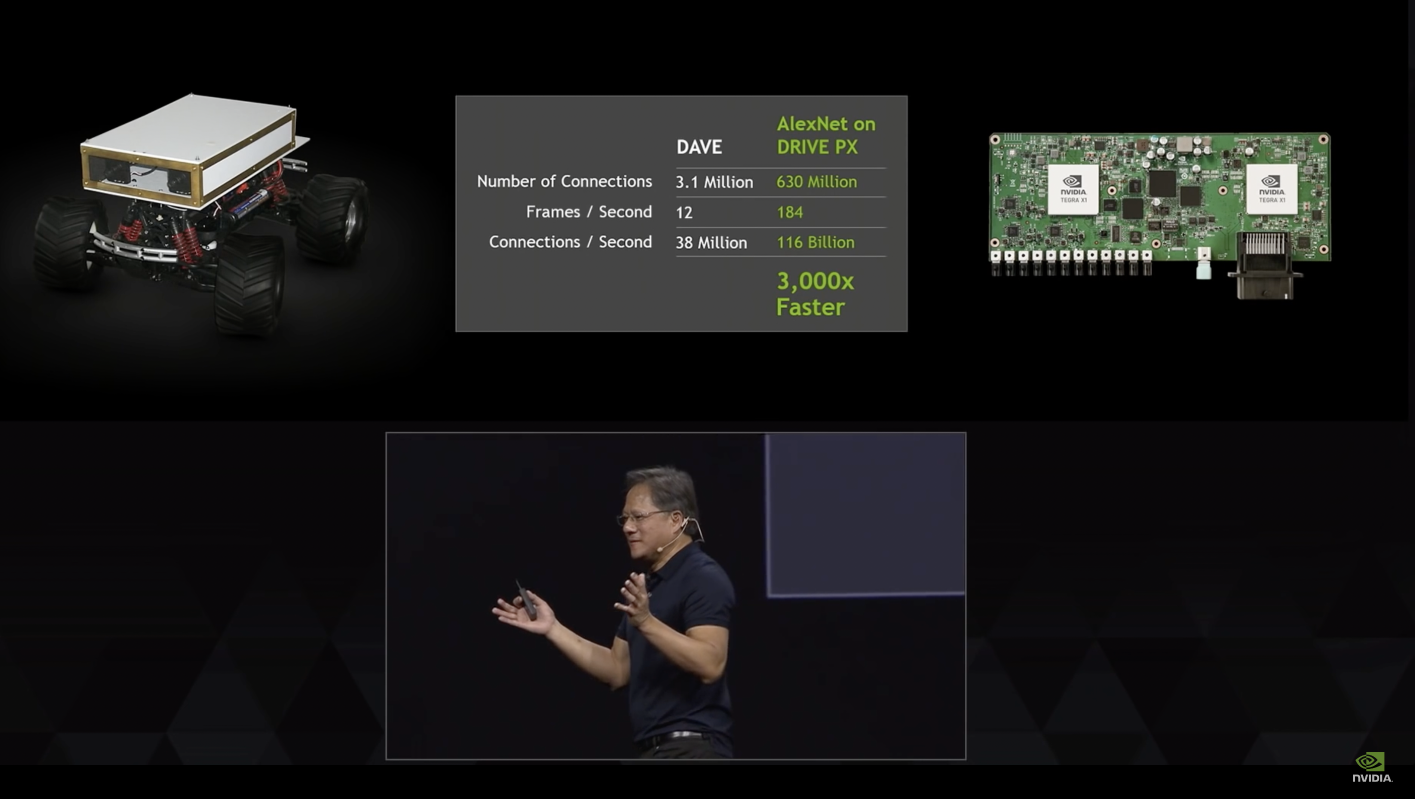

So, after more than a year of preparation, NVIDIA fired its opening shot in the self-driving industry in January 2015, introducing the DRIVE brand and two automotive computing platforms, DRIVE CX and DRIVE PX. DRIVE PX is based on the Tegra X1 chip with its Maxwell GPU, delivers over 1 TOPS of computing power, and supports computer vision and machine learning.

Interestingly, seemingly in response to Tesla, NVIDIA specifically used ‘Auto-Pilot’ in its introduction of DRIVE PX.

In addition, at GTC 2015 in March, Huang even invited Musk on stage to discuss AI and autonomous driving. Musk said that AI could be more dangerous than nuclear weapons, but that people need not worry too much about autonomous driving, since it is a narrow form of AI.

Notably, besides Musk, Huang also invited PhD candidate Andrej Karpathy to speak at GTC 2015. At that point Musk and Karpathy had not yet met, but they soon would.

Musk “Hedging His Bets”

If one were to sum up Elon Musk’s autonomous driving strategy in 2015, it would be “hedging his bets”.

The first “bet” was the uneasy alliance with Mobileye.

Unlike Mobileye’s typical customers, Tesla was eager to accelerate the development of autonomous driving. Rather than passively adopting Mobileye’s solution, Tesla built on it considerably with its own innovations in data accumulation and software algorithms, aiming to give Autopilot the ability to learn.

For instance, Tesla built a Fleet Learning function into its vehicles. Essentially, whenever Autopilot’s action differed from what the human driver actually did, the software recorded the driver’s operation and learned from it. This resembles the “shadow mode” Tesla introduced later.
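As a rough illustration of disagreement-triggered recording (a sketch under stated assumptions, not Tesla’s actual implementation), the code below compares a hypothetical predicted steering angle with the driver’s real input and keeps only the frames where the two diverge. The `Frame` fields, the 5-degree threshold, and the function name are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Frame:
    """One time step of driving data (hypothetical schema for illustration)."""
    t: float                       # timestamp in seconds
    human_steering_deg: float      # what the driver actually did
    predicted_steering_deg: float  # what the software would have done


def find_disagreements(frames: List[Frame], threshold_deg: float = 5.0) -> List[Frame]:
    """Return the frames where the software's silent prediction differs from
    the driver's action by more than threshold_deg. In this sketch, these are
    the moments worth recording and sending back for learning."""
    return [
        f for f in frames
        if abs(f.predicted_steering_deg - f.human_steering_deg) > threshold_deg
    ]


if __name__ == "__main__":
    drive = [
        Frame(t=0.0, human_steering_deg=0.0, predicted_steering_deg=1.0),
        Frame(t=1.0, human_steering_deg=0.0, predicted_steering_deg=10.0),
        Frame(t=2.0, human_steering_deg=2.0, predicted_steering_deg=5.0),
        Frame(t=3.0, human_steering_deg=0.0, predicted_steering_deg=-7.0),
    ]
    for f in find_disagreements(drive):
        print(f"disagreement at t={f.t}s")
```

Only the frames at t=1.0 s and t=3.0 s exceed the threshold, so only those would be kept. Filtering on the car before uploading is what keeps such a scheme cheap: the fleet drives constantly, but only the surprising moments are worth sending back.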

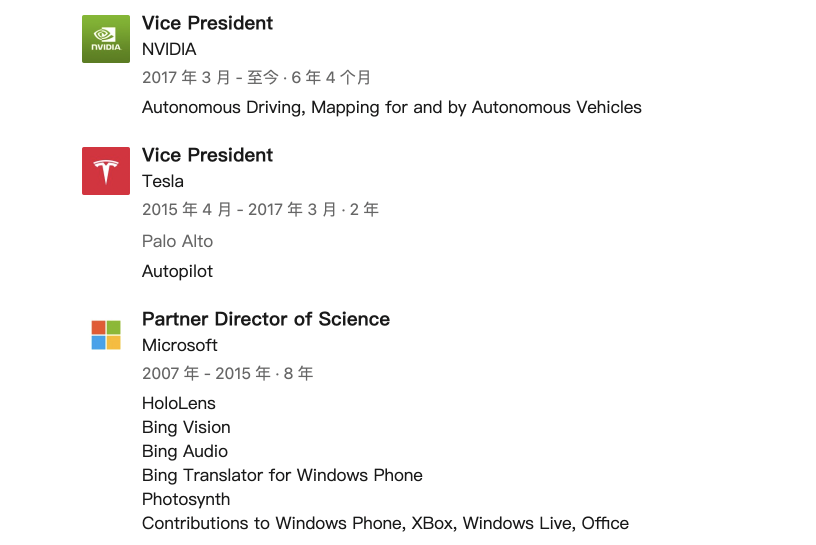

To achieve this, Musk personally recruited David Nister, a computer vision expert from Microsoft, to set up the Tesla Vision team in April 2015.

However, this move provoked fierce resistance from Mobileye. Mobileye had always operated a closed system, keeping both chip and algorithms under its control, and it opposed automakers developing their own algorithms. This led to a serious conflict with Tesla in 2015, with the powerful Mobileye demanding that Tesla halt the Tesla Vision project or lose its technical support.

At that time, Tesla was still a newcomer to autonomous driving and had no choice but to bow to Mobileye’s pressure for the time being.

The second “bet” was a secret collaboration between Tesla and NVIDIA.

According to a former NVIDIA engineer, after the falling-out with Mobileye in 2015, Musk began searching for a chip that could deliver immense computing power while letting Tesla develop its own vision algorithms. This led him to Jensen Huang of NVIDIA.

Huang was immediately intrigued by Musk’s requirements. He quickly had his engineers pair the Tegra chip with a discrete GPU and test it with Tesla, and the two sides interacted many times, continuing to explore a possible collaboration.

This set the stage for the January 2016 meeting described at the opening.


The third “bet” was an in-house chip.

After all, the Mobileye partnership had taught Musk what it was like to depend on core technology controlled by someone else.

Considering full-stack in-house development, growing computing needs, a vertically integrated business model, and the massive production and delivery volumes ahead, Musk decided to develop Tesla’s own chips. Of course, developing chips is hard and takes several years, so Tesla understood that it needed the second “bet” to cover the transition until the third one paid off.

In other words, although Tesla actively pursued cooperation with Nvidia at the time, a “breakup” with Nvidia was inevitable in the long run.

In January 2016, in the same month that Musk and Jensen Huang held a meeting in Tesla’s California office, the chip guru, Jim Keller, known as the “Silicon Hermit”, officially joined Tesla.

Kicking Mobileye to the curb, joining hands with Nvidia

In the second half of 2016, Nvidia finally got the opportunity to become Tesla’s autonomous driving chip supplier, which was a major breakthrough for Nvidia’s autonomous driving business.

Few people realized how significant the Nvidia-Tesla partnership was for the entire self-driving industry. It meant that a carmaker with autonomous driving ambitions could finally find a programmable chip on the market capable of running its own algorithms. In other words, mass-produced autonomous driving developed by carmakers now had a computing foundation.

For this opportunity, Nvidia had made ample software and hardware preparations.

For instance, at the beginning of 2016, Nvidia launched a series of software and hardware products based on its autonomous driving platform, including DRIVE PX 2, which Jensen Huang referred to as the “world’s first supercomputer for autonomous vehicles”.

At the same time, Nvidia built a complete autonomous driving technology architecture around DRIVE PX 2, spanning hardware for training in the cloud and inference in the vehicle, along with DriveWorks, a set of software tools and reference solutions.

In short, Nvidia not only boosted hardware performance substantially but also built out a broad software and tooling ecosystem, ready to offer hand-holding, end-to-end support to carmakers, Tesla included, moving into self-driving.

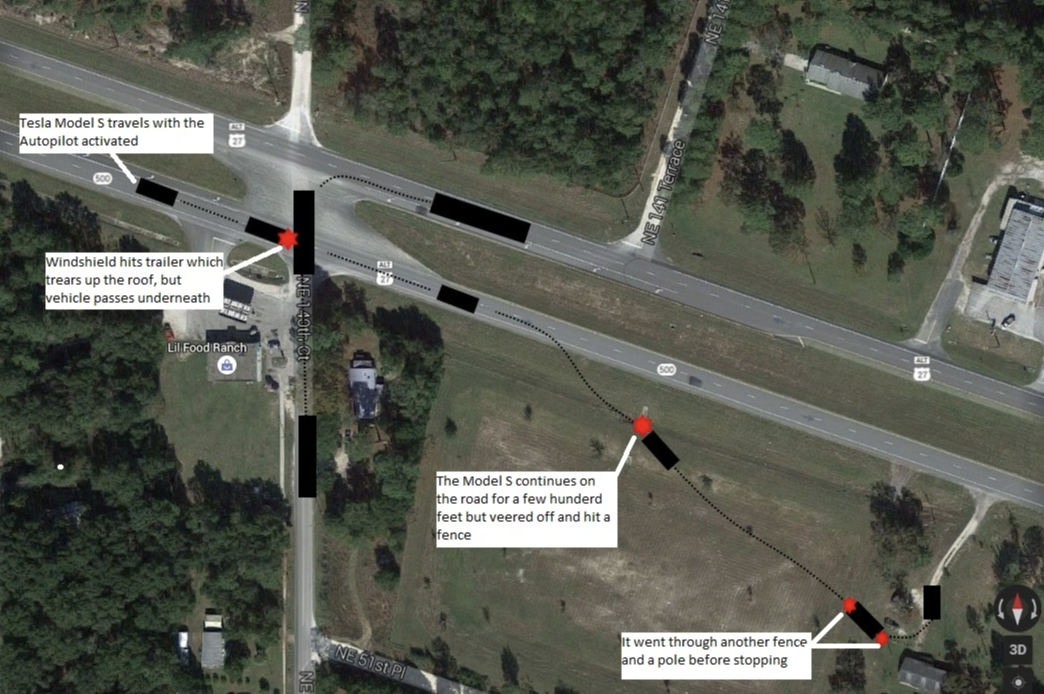

Then something unexpected happened.

In May 2016, a fatal Model S crash closely linked to Autopilot hastened the split between Tesla and Mobileye; two months later, Mobileye announced it would end its collaboration with Tesla.

Musk appeared nonchalant about the split. He said Mobileye’s technical progress was held back because it had to support hundreds of models from traditional carmakers, which created a great deal of engineering drag, whereas Tesla was focused on achieving full autonomy on a single integrated platform.

There was also another reason behind the scenes: by the time Tesla released software version 8.0 in the second half of 2016, its software had essentially pushed the hardware to its limit.

Musk stayed completely unruffled, largely thanks to his long-running secret talks with Nvidia.

Having seen Nvidia’s autonomous driving computing platform in action early on, he did not hesitate to pick Nvidia as Tesla’s new partner once the Mobileye breakup came. Yet given Tesla’s in-house chip plans, the split with Nvidia two and a half years later was destined from the start.

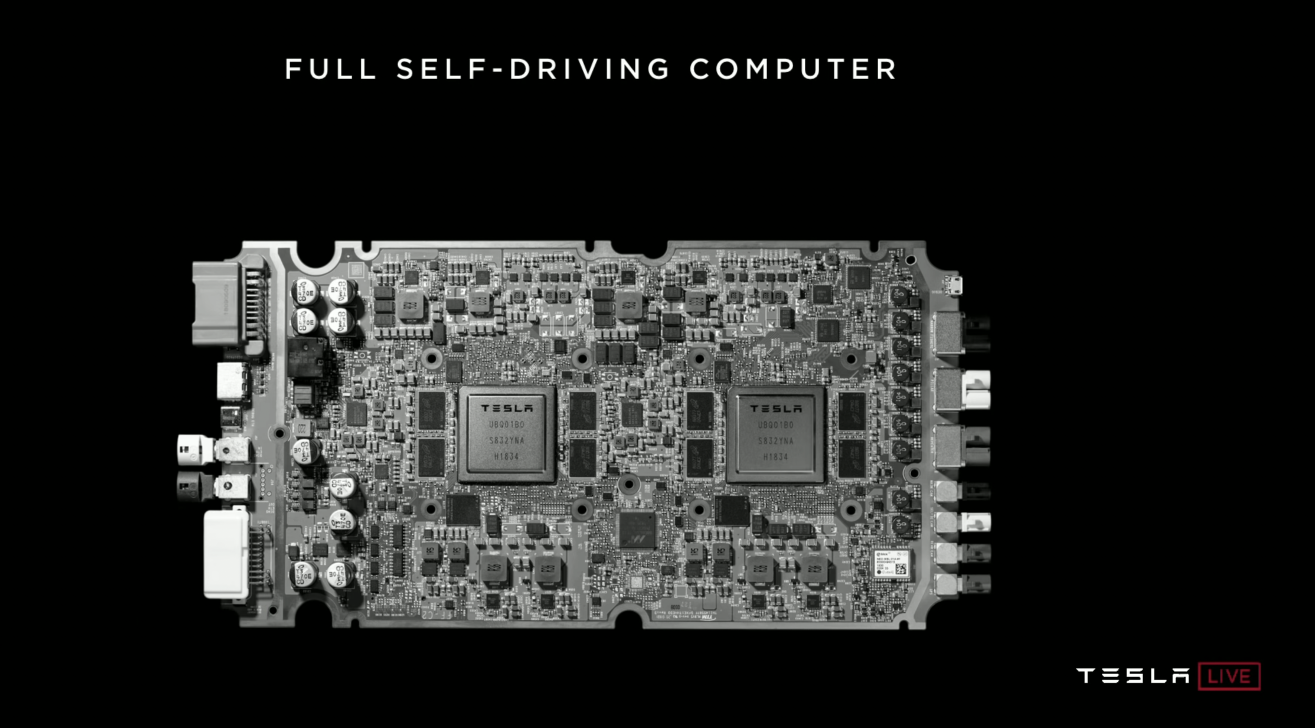

In October 2016, Tesla announced that all its production vehicles, including Model 3, would come with Full Self-Driving (FSD) capable hardware (HW2.0): 8 surround cameras, 12 ultrasonic sensors, and a forward radar.

HW2.0 also included an onboard computer, Nvidia’s DRIVE PX 2, with forty times the computing power of the previous generation, capable of running Tesla’s newly developed neural network for vision, ultrasonic, and radar fusion processing in Autopilot.

Notably, although it was also called DRIVE PX 2, the version Tesla used was customized in collaboration with Nvidia.

Because Tesla had removed every dependency on Mobileye in both hardware and software, its new Nvidia-based models temporarily lacked some basic features the older models had, such as automatic emergency braking. In other words, Tesla had the hardware foundation but still had to catch up on software and AI.

At this point, Musk needed a capable lieutenant to help him build the AI algorithms.

## When Autonomous Driving Meets Transformer

A star-studded pivotal moment

In truth, working with Musk has always been a challenge, especially for the Autopilot team.

Musk is extremely aggressive about Autopilot. He wants Tesla to achieve autonomous driving that is “safer than human driving” as soon as possible, so he sets high expectations for the team and puts it under great pressure, which at times has led to frequent turnover.

In January 2017, Tesla poached star software engineer Chris Lattner from Apple to serve as Vice President of Autopilot Software. However, he left less than six months later, saying that Tesla was not a good fit for him.

Musk then put Jim Keller, the “Silicon Hermit”, in charge of the software.

Unexpectedly, in March 2017, David Nister, the head of Tesla Vision whom Musk had recruited from Microsoft, also resigned; he later joined NVIDIA to lead its autonomous driving business.

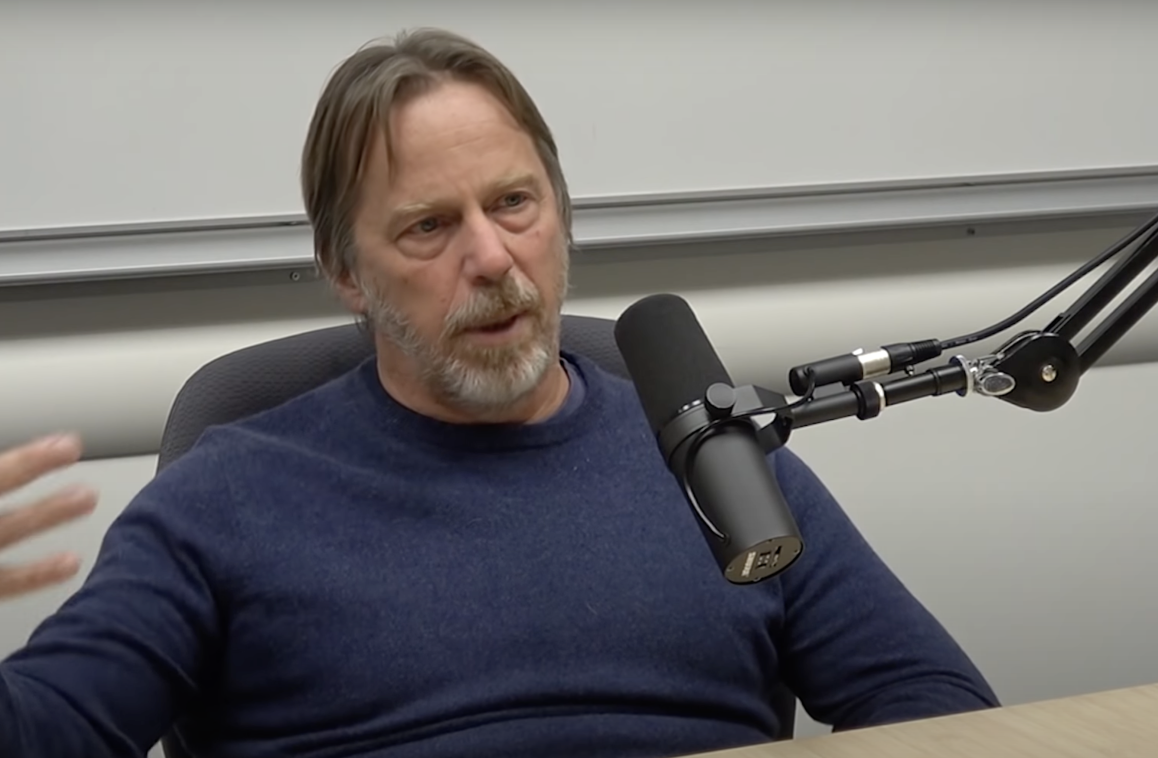

Then, in June 2017, Musk brought in 30-year-old Andrej Karpathy to lead Tesla’s Vision and AI team, reporting directly to Musk. Events would later prove this to be Tesla’s best Autopilot hire.

In fact, Andrej Karpathy was able to join Tesla thanks to Musk’s discerning eye.

As mentioned earlier, Karpathy had admired Musk since 2012, but for a long time the two had no direct contact. His first real opportunity to work closely with Musk came with the founding of OpenAI.

OpenAI was founded in late 2015 as a nonprofit AI research organization, initiated by Musk and Sam Altman out of concern that AI could become dangerous and to keep it from being monopolized by large companies like Google; Musk and Altman served as co-chairs.

Musk also brought on Ilya Sutskever, one of the authors of the AlexNet paper, as OpenAI’s Director of Research. (Sutskever joined largely because of Musk’s persistent persuasion, and he later became a key figure in GPT’s success.)

Notably, Andrej Karpathy was also among the founding members of OpenAI.

During his time at OpenAI, Karpathy continued working on model training while also advising Musk on the AI and algorithms behind Tesla’s Autopilot. Eventually he grew restless: he wanted to work on practical applications of AI and began exploring startup-like opportunities.

Just then, David Nister left for Nvidia, opening up a position that Musk asked Karpathy to fill. As Karpathy recalled in an interview, Musk asked whether he would be interested in joining Tesla to lead its computer vision and AI teams. Karpathy reflected:

Elon reached out to me at a very opportune moment. I was exploring new ventures and this opportunity seemed perfect. I was confident in my abilities to contribute. It was a defining role with tangible impact. I admire the company as well as Elon, and felt that it was a shining, pivotal moment. It strongly resonated with what I knew I ought to be doing.

After joining Tesla, Karpathy found that only two people were training deep neural networks, using CNNs for rudimentary vision tasks. Moreover, having just broken away from third-party supplier Mobileye’s hardware and software, Tesla had to rebuild its own computer vision system.

For Andrej Karpathy, it was an endeavor that was akin to starting from scratch.

Sleepless Nights over Data Issues

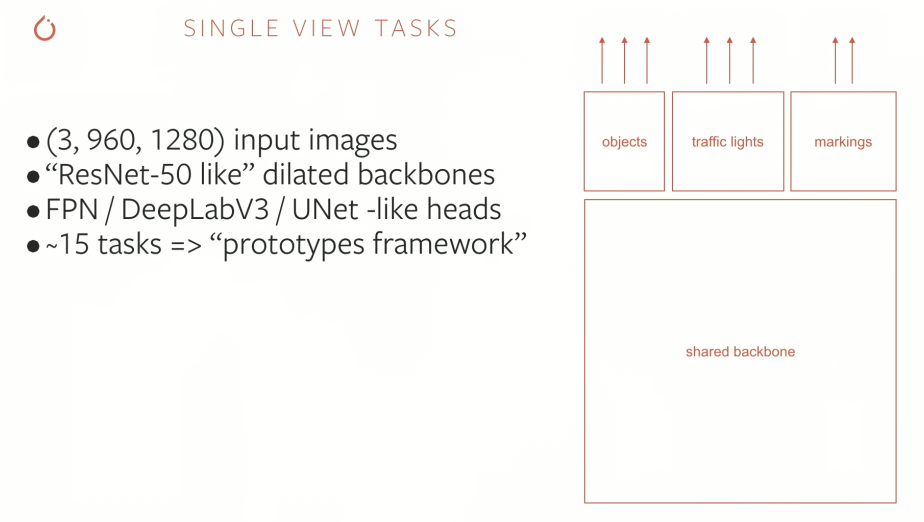

In the year or so after Karpathy arrived, his work focused on two areas: algorithms and data.

Let’s consider the aspect of algorithms first.

In November 2017, Andrej Karpathy published a piece titled “Software 2.0” on the blog platform Medium, centered on this main theme:

Under Software 2.0, code is no longer written by hand in languages like C++; instead, it is produced by training neural networks. Programming becomes a matter of collecting training data and defining training objectives, and the training process acts as a compiler that turns the dataset, the objectives, and the network architecture into a “binary”: the neural network weights and the forward pass that uses them.
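To make the contrast concrete, here is a deliberately tiny sketch of our own; it does not come from Karpathy’s essay or from Tesla’s code. It places a hand-written rule next to a perceptron whose weights are learned from labeled examples. The toy data, function names, and hyperparameters are assumptions, and a real Software 2.0 system would train a deep network with gradient descent rather than a single perceptron.

```python
from typing import List, Tuple

Example = Tuple[List[float], int]  # (feature vector, label 0 or 1)


def handwritten_rule(x: List[float]) -> int:
    """Software 1.0: a programmer spells out the decision logic explicitly."""
    return 1 if x[0] + x[1] > 1.0 else 0


def predict(w: List[float], b: float, x: List[float]) -> int:
    """Run the learned 'binary': a weighted sum followed by a threshold."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


def train_perceptron(data: List[Example], epochs: int = 100,
                     lr: float = 0.1) -> Tuple[List[float], float]:
    """Software 2.0: the 'program' is the weight vector w and bias b,
    found with the classic perceptron learning rule from labeled examples."""
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, y in data:
            err = y - predict(w, b, x)
            if err != 0:  # nudge the weights toward the correct label
                mistakes += 1
                w = [wi + lr * err * xi for wi, xi in zip(w, x)]
                b += lr * err
        if mistakes == 0:  # every example classified correctly: stop
            break
    return w, b


if __name__ == "__main__":
    # The labeled examples play the role of the specification.
    data: List[Example] = [
        ([0.0, 0.0], 0), ([0.2, 0.3], 0), ([0.1, 0.6], 0),
        ([1.0, 1.0], 1), ([0.9, 0.8], 1), ([0.6, 1.2], 1),
    ]
    w, b = train_perceptron(data)
    print("learned weights:", w, "bias:", b)
    for x, y in data:
        print(x, "label", y, "learned", predict(w, b, x), "rule", handwritten_rule(x))
```

In this sketch the dataset, not an `if` statement, defines the behavior: changing the labels and retraining changes the “program” without anyone editing the decision logic.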

Guided by this philosophy, Karpathy began leading the team to rebuild Autopilot around Software 2.0.

When Karpathy first joined Tesla, most of Autopilot’s software stack was Software 1.0, with a few CNNs handling basic visual recognition tasks. A little over a year after he joined, Software 2.0 began taking over the stack on a large scale, steadily expanding its share while that of Software 1.0 kept shrinking.

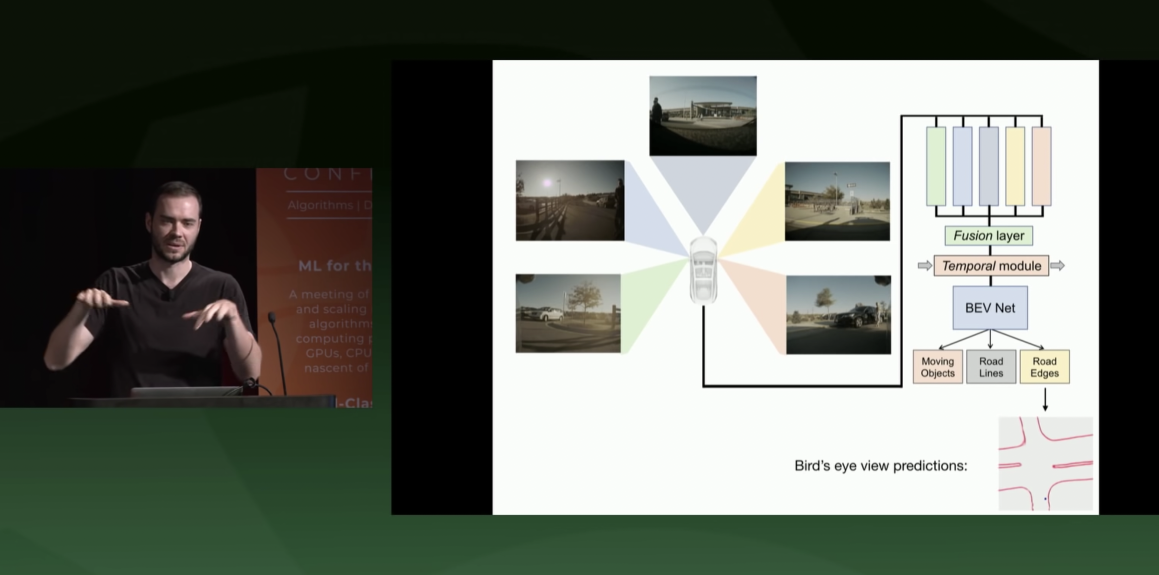

In the end, much of Karpathy’s algorithm work consisted of using neural networks to extract features at scale from the images of all 8 cameras and to perform many recognition tasks at once. This architecture kept evolving and, in the second half of 2019, was named HydraNet.
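The core idea behind a HydraNet-style design, one shared feature extractor feeding several task-specific heads, can be sketched structurally as follows. This is a hypothetical toy: the backbone and heads are trivial stand-ins for real convolutional networks, and the task names are assumptions rather than Tesla’s actual task list.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Image = List[float]     # stand-in for one camera frame
Features = List[float]  # stand-in for a shared feature map


@dataclass
class MultiTaskNet:
    """Shared backbone plus per-task heads (a HydraNet-style sketch).
    The backbone runs once per camera image, and every head reuses its output."""
    backbone: Callable[[Image], Features]
    heads: Dict[str, Callable[[Features], float]]
    backbone_calls: int = 0

    def forward(self, cameras: List[Image]) -> List[Dict[str, float]]:
        results = []
        for image in cameras:
            feats = self.backbone(image)  # expensive step, shared by all tasks
            self.backbone_calls += 1
            results.append({task: head(feats) for task, head in self.heads.items()})
        return results


def toy_backbone(image: Image) -> Features:
    # Hypothetical feature extractor: mean and max pixel value.
    return [sum(image) / len(image), max(image)]


if __name__ == "__main__":
    net = MultiTaskNet(
        backbone=toy_backbone,
        heads={  # hypothetical task heads
            "lanes": lambda f: f[0],
            "vehicles": lambda f: f[1],
            "traffic_lights": lambda f: f[1] - f[0],
        },
    )
    eight_cameras = [[float(c), float(c + 1), float(c + 2)] for c in range(8)]
    outputs = net.forward(eight_cameras)
    print(len(outputs), "camera outputs,", net.backbone_calls, "backbone passes")
```

With three heads and eight cameras, the backbone still runs only eight times. Adding a task costs one more lightweight head rather than another full network per camera, which matters on a compute-limited in-car computer.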

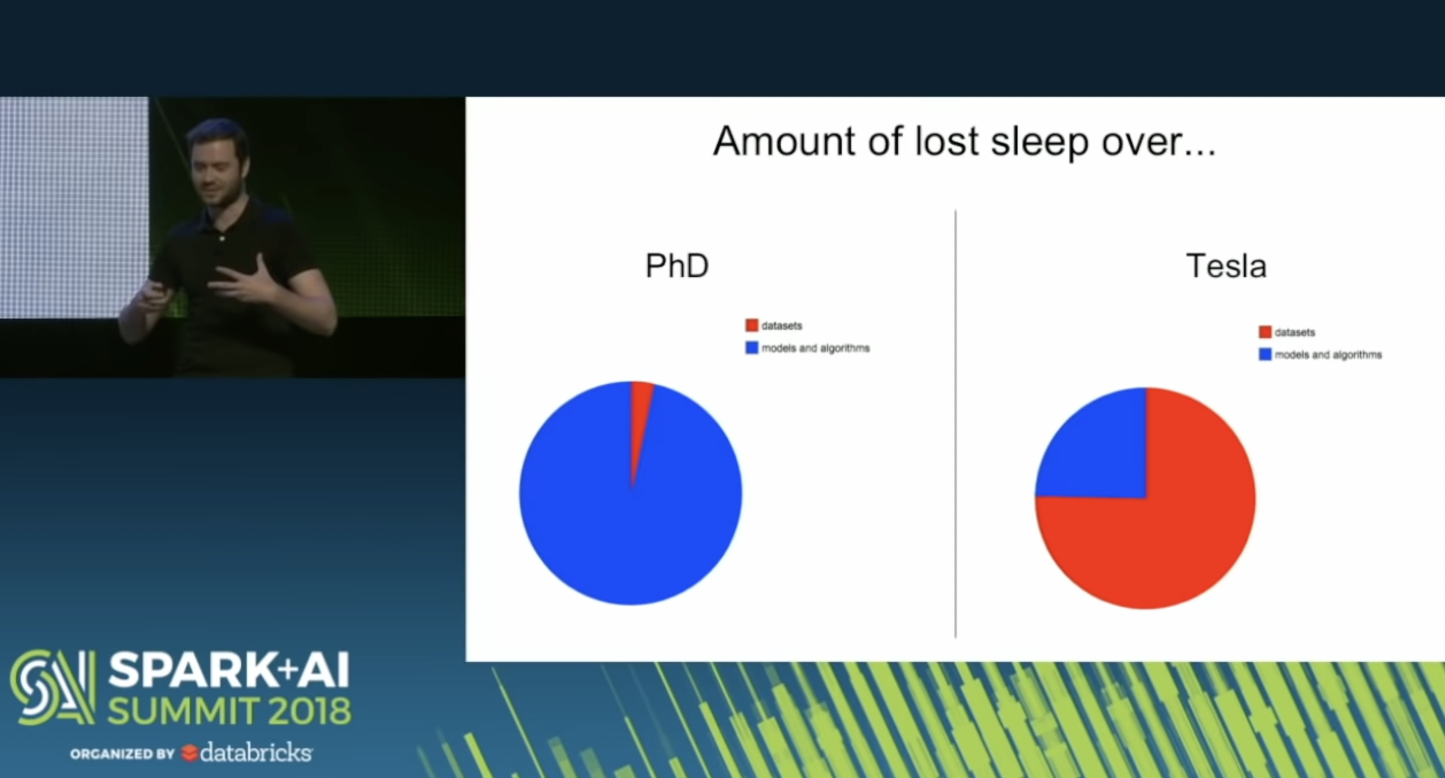

Moving on to the data level.

In fact, Andrej Karpathy found the data issue more challenging than the algorithm. Here’s a clear comparison he made:

During the course of his PhD, most of the sleepless nights were due to algorithmic problems, with a minimal part due to dataset issues. But at Tesla, 25% of the sleepless nights were due to algorithmic problems, and 75% were due to handling datasets.

Initially, the main task was data annotation. But as Tesla sold more and more cars, the volume of data became practically unlimited, so relying on expensive, limited manual annotation alone was not a workable solution.

So how did Karpathy solve this problem? His answer was the Data Engine.

> A data engine is essentially an iterative dataset-annotation system. Put simply, algorithms are trained on a carefully annotated dataset and then deployed to the vehicle fleet running in Shadow Mode. When Shadow Mode detects an anomaly (for example, the driver’s actual maneuver disagrees with the algorithm’s predicted action), the data describing that discrepancy is sent back to the cloud. Similar data is collected across the entire fleet, manually annotated, and added back into the dataset. The retrained algorithm is then redeployed for another round of data mining. This loop makes large-scale data annotation and training manageable.
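The loop described above can be sketched as a toy simulation. Everything in it is hypothetical and heavily simplified: the “model” is just the set of scenarios with enough labeled examples, “shadow mode” flags any scenario the model cannot handle yet, and annotation is a pass-through. Real systems train neural networks on sensor clips; only the shape of the feedback loop is meant to carry over.

```python
from collections import Counter
from typing import List, Set

MIN_EXAMPLES = 3  # hypothetical: labeled examples needed before a scenario is "learned"


def train(dataset: Counter) -> Set[str]:
    """Toy stand-in for training: the 'model' is the set of scenarios
    that have enough labeled examples in the dataset."""
    return {scenario for scenario, n in dataset.items() if n >= MIN_EXAMPLES}


def shadow_mode(model: Set[str], fleet_drives: List[str]) -> List[str]:
    """Deploy silently to the fleet; return clips where the model would have
    disagreed with the driver (here: scenarios it cannot handle yet)."""
    return [scenario for scenario in fleet_drives if scenario not in model]


def annotate(clips: List[str]) -> List[str]:
    """Stand-in for human labeling of the uploaded clips."""
    return list(clips)


def run_data_engine(dataset: Counter, fleet_drives: List[str], rounds: int) -> List[int]:
    """Train, deploy in shadow mode, collect disagreements, label, retrain.
    Returns the number of triggered clips in each round."""
    triggers_per_round = []
    for _ in range(rounds):
        model = train(dataset)
        clips = shadow_mode(model, fleet_drives)
        triggers_per_round.append(len(clips))
        dataset.update(annotate(clips))  # labeled clips flow back into the dataset
    return triggers_per_round


if __name__ == "__main__":
    seed = Counter({"highway": 10})  # initial hand-labeled dataset
    drives = ["highway"] * 5 + ["merge"] * 2 + ["construction_zone"]
    print(run_data_engine(seed, drives, rounds=4))  # → [3, 3, 1, 0]
```

The number of triggered clips falls round by round as rarer scenarios, such as the hypothetical `construction_zone`, accumulate enough labels: the self-reinforcing effect the data engine is meant to produce.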

Overall, Tesla’s data engine is a largely automated data annotation system that minimizes human involvement. This ever-evolving engine has become the foundational infrastructure of Tesla’s entire autonomous driving strategy.

In late October 2018, 16 months after Karpathy joined, Tesla rolled out Navigate on Autopilot (NOA) to its US customers. The feature mainly provides navigation-assisted driving on highways, a significant milestone that showcased the contribution of Karpathy and his team to Tesla’s autonomous driving.

However, highway NOA exposed a pressing problem: Tesla’s cars at the time were running short of onboard computing power. As Autopilot’s neural-network-based software stack and data processing grew more complex, the vehicles’ computers were being pushed to their limits.

The Nvidia computing platform in Tesla’s second-generation hardware (HW2.0) and the upgraded HW2.5 was powerful, but it could no longer keep up with the rising computational demands of increasingly complex neural network algorithms and data.

Fortunately, around this time, Tesla had finished developing its in-house chip, ready to replace Nvidia’s.

Nvidia Couldn’t Capture Tesla’s Heart

Facing the prospect of losing Tesla, Nvidia had been preparing on several fronts.

After successfully deploying DRIVE PX 2 at Tesla, Jensen Huang pushed hard on the next-generation product. Meanwhile, Nvidia spared no effort to widen its “circle of friends” in the automotive world. By May 2017, it had established partnerships on the DRIVE PX platform with Audi, Daimler, Volkswagen Group, Toyota, and a host of other industry giants.

According to figures presented at NVIDIA’s GTC 2017 conference, 225 companies had partnered with NVIDIA on autonomous driving solutions: not only automakers, component suppliers, internet companies, and map vendors, but also startups.

As giants like Google, Tesla, and NVIDIA moved into autonomous driving, a wave of startups followed. Many were Chinese autonomous driving startups focused on algorithms and aiming for L4 autonomy, so they needed NVIDIA’s support at the underlying hardware level.

NVIDIA welcomed these developments wholeheartedly and stepped up its focus on the Chinese market.

At the GTC China conference in September 2017, Jensen Huang said that NVIDIA’s cutting-edge deep learning and computer vision computing platforms let startups develop their own algorithms and software. NVIDIA also stated that 145 startups were already developing self-driving cars, trucks, high-precision maps, and services based on the NVIDIA DRIVE platform.

Many of these startups were Chinese companies or companies founded by Chinese entrepreneurs in the United States. They attracted and trained a large pool of talent, laying a solid foundation for carmakers to mass-produce autonomous driving in the Chinese market.

For NVIDIA, the more customers, the better.

So when Tesla publicly announced its in-house chip plan at the end of 2017, NVIDIA did not panic at all. Soon after, at CES 2018, Jensen Huang unveiled a heavyweight self-driving product: DRIVE Xavier, a brand-new autonomous driving SoC platform.

Compared with DRIVE PX 2, DRIVE Xavier integrates multiple modules into a single autonomous driving SoC, significantly improving computing performance while reducing power consumption. NVIDIA had already invested several billion dollars in its early R&D.

At the same event, NVIDIA once again expanded its “circle of friends”, for example by announcing a collaboration with Uber on autonomous robotaxis. By this point, NVIDIA DRIVE had more than 320 partners, spanning consumer vehicles, trucks, transportation services, suppliers, maps, sensors, startups, academic institutions, and more.

NVIDIA also rounded out its autonomous driving software lineup at this event, introducing an autonomous driving simulation system, an in-vehicle application platform, an AR platform, and more. With these moves, NVIDIA had built a complete autonomous driving product system spanning software and hardware, from base chips up to high-level applications.

However, even the most comprehensive system could not win back Tesla’s heart.

In August 2018, during an earnings call, Jensen Huang answered questions about Tesla’s in-house chip. He first discussed how hard it is to build autonomous driving chips and software stacks. He then called out to Musk: if the result turned out not to be what he wanted, he could give Huang a call, and Huang would be very willing to help.

Musk replied on Twitter that NVIDIA makes great hardware and that he has a lot of respect for Jensen Huang and his company. However, Tesla’s hardware requirements are very unique and need to closely match its software.

In other words, however good NVIDIA’s products were, they did not meet Tesla’s needs. Musk had clearly decided on a complete break with NVIDIA, leaving no room for reconciliation.

Finally, in April 2019, at Tesla’s Autonomy Day, the so-called “FSD Computer”, HW3, was officially unveiled. Customers who had bought the FSD software package could upgrade for free. According to Musk, it was “the most advanced computer specifically designed for the purpose of autonomous driving.”

With HW3 in place, Tesla’s biggest challenge, at the level of autonomous driving algorithms, was only just beginning.

FSD stumbles, BEV appears

2019 was a very frustrating year for Musk on the FSD project.

To get FSD finished by the end of the year, Musk put heavy pressure on the Autopilot team and reshuffled it several times. As a result, a number of senior engineers on the Autopilot software team left one after another in 2019. Stuart Bowers, the executive in charge of Autopilot software, was also forced out. Fortunately, Andrej Karpathy, Tesla’s head of AI, stayed.

In the end, FSD still slipped past its 2019 target.

So what seemingly insurmountable obstacle did FSD run into? The answer: 3D perception from visual images.

Humans rely entirely on their eyes to perceive the surrounding environment and the road while driving, and the world they see is three-dimensional. Reasoning from this, both Musk and Andrej Karpathy, who take a keen interest in biology, insisted that Tesla could use AI to achieve 3D perception from camera images alone, rather than relying on LiDAR.

Their reasons for rejecting LiDAR differed, however. Musk was driven mainly by cost. Karpathy’s choice stemmed from his confidence in the technical path of “perceiving 3D from images through AI”.

Yet inferring 3D from 2D images is a very difficult road. In October 2019, in an interview with Lex Fridman, Musk spoke specifically about the biggest challenge in developing FSD:

The toughest thing is representing physical objects accurately in vector space. From the visual input, plus some ultrasonic and radar input, you can create an accurate vector-space representation of the objects around you. Once you have an accurate vector-space representation, controlling the vehicle is relatively easy.

In the face of this problem, Andrej Karpathy turned his attention to BEV (Bird’s Eye View).

It needs to be emphasized: **in computer vision and autonomous driving, BEV is by no means a new term.** For example, a paper titled “Automatic Parking Based on a Bird’s Eye View Vision System” was published as early as 2014. It perceived the environment through four fisheye cameras, built a BEV vision system from them, and used it to achieve automatic parking.
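A common way to build a BEV image from vehicle cameras is inverse perspective mapping (IPM). It assumes the road is flat, projects each BEV grid cell onto the image, and copies the pixel that cell lands on. The sketch below is a minimal illustration under stated assumptions, not the method of the 2014 paper. It uses a single undistorted pinhole camera; fisheye images would first need undistortion. The camera is assumed to sit at a known height with zero pitch, roll, and yaw. The function names and parameters (`ground_to_pixel`, `warp_to_bev`, `fx`, `fy`, `cx`, `cy`) are hypothetical.

```python
from typing import List, Optional, Tuple

def ground_to_pixel(x_fwd: float, y_left: float, cam_height: float,
                    fx: float, fy: float, cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Project a flat-road point (vehicle frame, metres) into a pinhole camera.

    Assumption: camera at the vehicle origin, cam_height above the road,
    zero pitch/roll/yaw; camera x points right, y down, z forward.
    """
    if x_fwd <= 0:               # behind the camera: not visible
        return None
    xc = -y_left                 # vehicle "left" is camera "minus right"
    yc = cam_height              # a road point lies cam_height below the camera
    zc = x_fwd                   # depth along the optical axis
    return fx * xc / zc + cx, fy * yc / zc + cy

def warp_to_bev(image: List[List[int]], xs: List[float], ys: List[float], cam_height: float,
                fx: float, fy: float, cx: float, cy: float) -> List[List[Optional[int]]]:
    """Fill a BEV grid by nearest-neighbour sampling of the pixel each ground cell projects to."""
    h, w = len(image), len(image[0])
    bev = []
    for x in xs:                 # one BEV row per forward distance
        row = []
        for y in ys:             # one BEV column per lateral offset
            p = ground_to_pixel(x, y, cam_height, fx, fy, cx, cy)
            if p is None:
                row.append(None)
                continue
            u, v = int(round(p[0])), int(round(p[1]))
            row.append(image[v][u] if 0 <= u < w and 0 <= v < h else None)
        bev.append(row)
    return bev
```

With four calibrated cameras, the same lookup would simply be run per camera, and each BEV cell filled from whichever camera sees it.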

Karpathy made many attempts along the way to building BEV.

Initially, for instance, he used the Occupancy Tracker, built on Software 1.0 (hand-written code). It took the features HydraNet recognized in 2D images, mapped them into 3D, and then “stitched” the 3D features from the different cameras together, aligned in time, to produce a BEV-based 3D map. Because the Occupancy Tracker was only Software 1.0, it was soon replaced by BEV Net, built with Software 2.0.
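To make “stitching” concrete, here is a toy sketch, not Tesla’s Occupancy Tracker. Each camera reports ground-plane points in its own frame. A 2D rigid transform, using each camera’s mounting offset and yaw, moves those points into the vehicle frame. The points are then binned into one shared BEV grid. The camera names, mounting values, and functions are hypothetical. Temporal alignment is left out; a real system would also compensate for ego-motion between timestamps.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]          # (x forward, y left) in metres
Mount = Tuple[float, float, float]   # hypothetical camera mount: (x offset, y offset, yaw in radians)

def cam_to_vehicle(p: Point, mount: Mount) -> Point:
    """Rotate a camera-frame ground point by the camera yaw, then add the mount offset."""
    mx, my, yaw = mount
    c, s = math.cos(yaw), math.sin(yaw)
    return (mx + c * p[0] - s * p[1], my + s * p[0] + c * p[1])

def stitch_bev(detections: Dict[str, List[Point]], mounts: Dict[str, Mount],
               cell: float, half_extent: float) -> Dict[Tuple[int, int], int]:
    """Merge every camera's ground points into one BEV grid of hit counts keyed by (row, col)."""
    grid: Dict[Tuple[int, int], int] = {}
    for cam, pts in detections.items():
        for p in pts:
            x, y = cam_to_vehicle(p, mounts[cam])
            if abs(x) > half_extent or abs(y) > half_extent:
                continue                 # outside the square BEV window
            key = (math.floor((x + half_extent) / cell), math.floor((y + half_extent) / cell))
            grid[key] = grid.get(key, 0) + 1
    return grid
```

Every rule here (flat ground, fixed extrinsics, simple binning) has to be hand-written and maintained, which is the kind of Software 1.0 logic that BEV Net replaced with a learned network.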

BEV Net, developed by Karpathy with the Software 2.0 approach, is a neural network designed from a BEV perspective. Under the hood, it is built from standard neural network models such as CNNs and RNNs. In Karpathy’s public demonstration, BEV Net gave the Smart Summon function better perception than the Occupancy Tracker had.

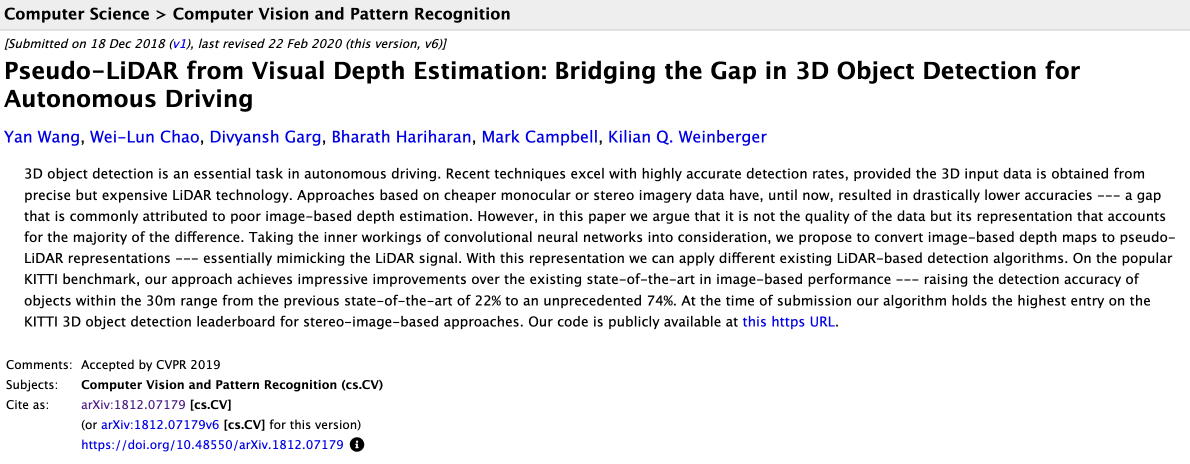

Karpathy has also spoken about the Pseudo-LiDAR approach.

Pseudo-LiDAR was first proposed in a 2018 paper titled “Pseudo-LiDAR from Visual Depth Estimation: Bridging the Gap in 3D Object Detection for Autonomous Driving”. The approach estimates a depth map from images with a convolutional neural network, then converts that depth map into a pseudo-LiDAR point cloud, in effect mimicking a LiDAR signal.
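Once a depth map exists, the geometric step of Pseudo-LiDAR is simple. Every pixel is back-projected through the pinhole camera model into a 3D point, and the resulting point cloud can then be fed to a LiDAR-style 3D detector. The sketch below shows only that back-projection. It assumes the depth map comes from a separate depth-estimation network and that the intrinsics `fx`, `fy`, `cx`, `cy` are known. Points stay in camera coordinates, and no conversion to a LiDAR sensor frame is done here. The function name is hypothetical.

```python
from typing import List, Tuple

def depth_to_pseudo_lidar(depth: List[List[float]], fx: float, fy: float,
                          cx: float, cy: float) -> List[Tuple[float, float, float]]:
    """Back-project each pixel of a depth map into a 3D point in the camera frame.

    Pinhole model: pixel (u, v) with depth z maps to
    x = (u - cx) * z / fx, y = (v - cy) * z / fy  (x right, y down, z forward).
    Pixels with non-positive depth are treated as invalid and skipped.
    """
    points = []
    for v, row in enumerate(depth):          # v: image row
        for u, z in enumerate(row):          # u: image column
            if z <= 0:
                continue
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points
```

For a depth map `[[2.0, 0.0], [4.0, 8.0]]` with `fx = fy = 2` and `cx = cy = 0`, the invalid zero-depth pixel is dropped and three points remain.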

In a public talk, Karpathy said that combining pure vision and Pseudo-LiDAR with self-supervised learning was rapidly closing the gap between 3D perception from 2D images and real-world 3D measurements. He added that Tesla’s internal results confirmed this.

Overall, Karpathy experimented with numerous neural network and machine learning approaches while developing BEV. Most of them came from academic research rather than originating at Tesla, but Karpathy consistently spotted them early and quickly put them to the test in Tesla’s real-world practice.

An autonomous driving algorithm professional told us that Tesla and Andrej Karpathy should be grateful to open-source AI. He said:

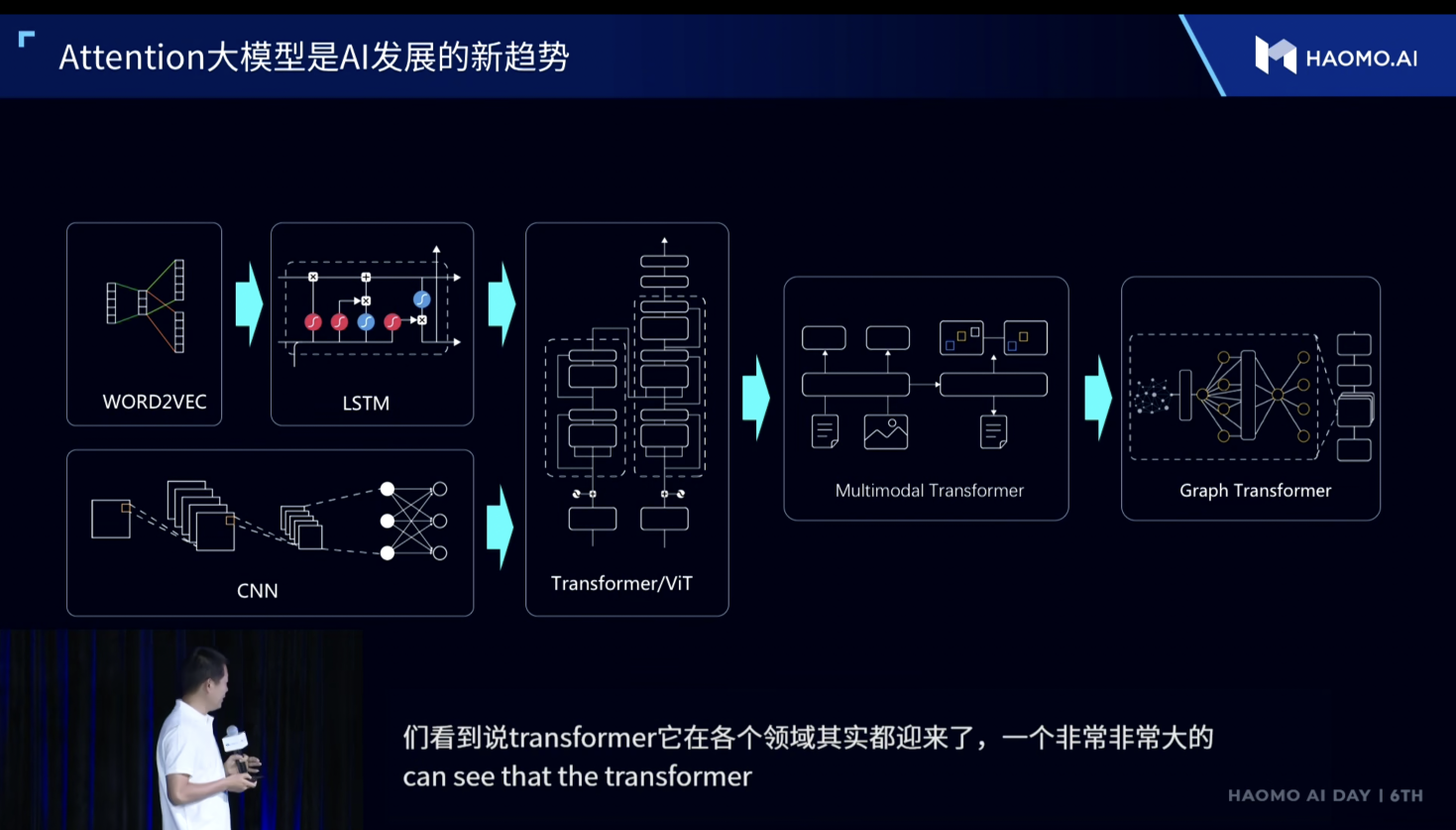

Over the past decade, AI has made significant advances. Within the broad framework of deep learning in particular, technical approaches such as CNNs, RNNs, and Transformers have emerged from academic and industrial research and been rapidly turned into industrial applications. At the same time, this is an era of open-source AI: many results are published as papers, so everyone can keep up with the latest developments.

Unfortunately, Tesla later found these methods unworkable.

Enter the Transformer!

In 2020, Tesla undertook a comprehensive rewrite of the FSD algorithm.

Why the need for a rewrite?

FSD faced a hardware constraint that could not be ignored. In the two or three years after Karpathy joined Tesla, rising vehicle sales and increasingly complex autonomous driving features meant that the volume of data Tesla had to process grew rapidly. The models inevitably became more complex. On the vehicle side, however, even after the upgrade to HW3, the FSD computer’s 144 TOPS of computing power was fixed and limited.

In other words, Tesla’s data volume was growing exponentially while on-vehicle computing power stayed fixed, so the inference algorithm running in the car had to become more capable and more efficient. The on-vehicle framework had to carry out the whole process from 2D image recognition to 3D vector-space construction, including the crucial step of producing BEV, without delay, since lives are at stake. Ideally, it also had to do this without consuming too much power.

Even the BEV Net solution described above could not meet these needs as data volume surged. It was not efficient enough to break through the hardware limits on the vehicle side.

This is why, in August 2020, Musk tweeted that Autopilot was stuck in a local maximum of labeling single-camera images that were uncorrelated in time. In effect, this was the relatively inefficient late-fusion approach often discussed in the industry.

Simply put, the previous algorithm architecture had hit the ceiling of on-vehicle computing power. That left no choice but to start from scratch and rewrite it.

How was that done?

This brings us to the second question: why introduce the Transformer when rewriting the autonomous driving architecture?

The Transformer originated in “Attention is All You Need,” a paper released by a Google research team in June 2017. For quite some time afterward, it was used mainly in natural language processing, most famously in OpenAI’s GPT (Generative Pre-trained Transformer).

Evidence eventually showed that the Transformer could excel in computer vision too. In some cases, it proved more efficient and better suited than common architectures such as CNNs, particularly when handling large volumes of data. In building Tesla’s BEV, for instance, the Transformer captured relationships between road elements better than traditional networks like CNNs, which made constructing the vector space easier.

So how did Karpathy bring the Transformer into Tesla’s autonomous driving algorithm system?

There is a striking coincidence here. In early 2020, just as FSD’s algorithm development was hitting a bottleneck, relentless exploration in academia showed that the Transformer had unexpected potential in computer vision. Karpathy was watching closely.

In May 2020, for example, Karpathy retweeted “End-to-End Object Detection with Transformers,” a paper from Facebook AI Research. The paper proposed DETR, an end-to-end object detection method built on the Transformer, which proved highly effective.

In June 2020, Karpathy shared on Twitter his vision of how GPT and autonomous driving might converge. In his view, the ultimate form of Autopilot would feed the contents of the DMV Handbook into a “large-scale multimodal GPT-10”, then give it the past 10 seconds of sensor data and let it drive accordingly.

Clearly, Karpathy was following the Transformer and GPT closely at the time and connecting them to Autopilot. It is therefore very likely that he was already trying to bring the Transformer into Autopilot’s algorithm framework, which turned out to be part of the architecture rewrite.

In August 2020, Musk wrote on Twitter: “The improvement to FSD will be a massive leap, because it is a fundamental architecture rewrite, not an incremental adjustment. I personally drive the most advanced alpha version in my own car, with almost zero interventions between home and work. Limited public release in 6 to 10 weeks.”

This suggests that the architecture rewrite was starting to pay off by then. Two months later, Tesla finally released the first FSD Beta to a small number of users in the United States.

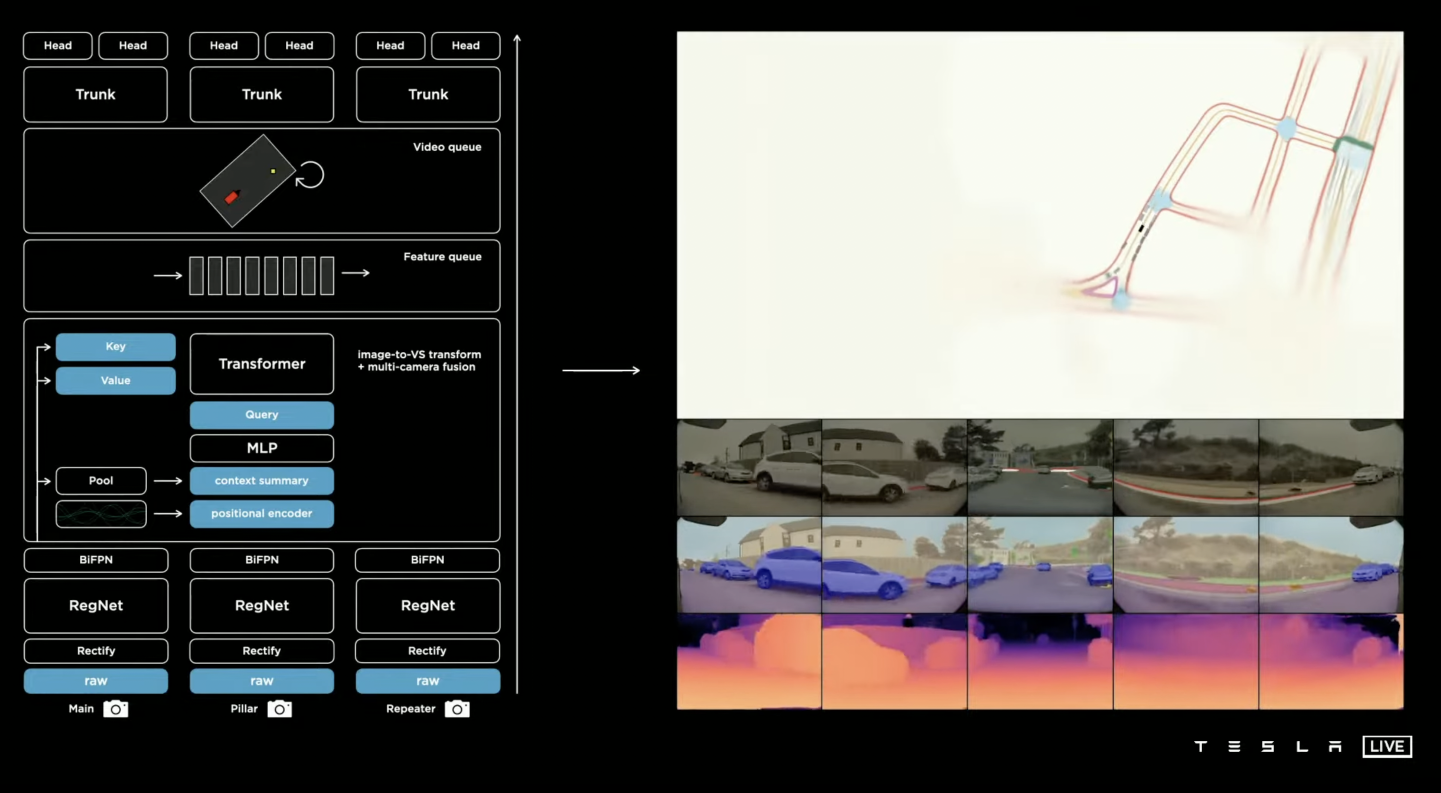

On August 19, 2021, at Tesla AI Day, Karpathy gave a systematic account of Tesla’s latest progress in self-driving perception. What drew the most industry interest was a diagram of Tesla AI’s all-new software architecture, which converts 2D images from 8 cameras into BEV. Its most significant feature was the introduction of the Transformer.
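The core of this kind of architecture is cross-attention. A grid of BEV queries “asks” the image features from all cameras which pixels matter for each BEV location, so multi-camera fusion happens inside the network rather than after per-camera detection. The sketch below is generic single-head scaled dot-product attention, softmax(QKᵀ/√d)V as defined in “Attention is All You Need,” written in plain Python. It simplifies heavily: there are no learned projection matrices, no positional encodings, and the vectors are hand-picked toys. The helper names are hypothetical, and it illustrates the mechanism only, not Tesla’s implementation.

```python
import math
from typing import Dict, List, Tuple

Vec = List[float]

def softmax(xs: Vec) -> Vec:
    m = max(xs)                                   # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(queries: List[Vec], keys: List[Vec], values: List[Vec]) -> List[Vec]:
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)                       # attention weights over all image features
        out.append([sum(wj * v[c] for wj, v in zip(w, values)) for c in range(len(values[0]))])
    return out

def bev_from_cameras(bev_queries: List[Vec],
                     cam_feats: Dict[str, List[Tuple[Vec, Vec]]]) -> List[Vec]:
    """Let every BEV query attend over (key, value) image features pooled from all cameras."""
    keys = [k for feats in cam_feats.values() for k, _ in feats]
    values = [v for feats in cam_feats.values() for _, v in feats]
    return cross_attention(bev_queries, keys, values)
```

A query aligned with the front camera’s key pulls almost entirely from the front camera’s value, while an all-zero query weights every camera equally. In a trained model, the queries, keys, and values would come from learned layers.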

An expert in the field of self-driving AI algorithms told us:

What struck me about Tesla AI Day was this. We ourselves were trying many new directions, reading almost every paper, picking out promising directions, and daring to experiment with new technologies including Transformers. Seeing how far Tesla had gotten confirmed that these directions were right. On one hand, that is the star effect of Tesla AI Day. On the other, it shows that choosing this path in the first place takes courage.

AI Day also marked the high point of Karpathy’s career at Tesla.

The Land of Promise for Autonomous Driving Mass Production

Meeting China’s New Forces in Car Manufacturing

While Musk was wrestling with the FSD algorithm, Nvidia’s autonomous driving business faced early challenges of its own. Luckily, it found the ambitious new Chinese carmakers.

This was closely tied to the commercial difficulties facing the whole autonomous driving industry at the time. From 2018 to 2019, autonomous driving startups went through a winter. As a provider of autonomous driving computing infrastructure, Nvidia could not escape the macro environment, and its stock price fell in the second half of 2018 and remained under pressure into 2019.

The L4 autonomy championed by startups seemed out of reach, but the incremental L2 route taken by automakers still held great potential. After all, deep-pocketed automakers also needed to ride the technology wave, albeit at a slower pace. NVIDIA therefore shifted to a progressive approach starting from L2, focusing on automakers with mass-production capability.

At this point, NVIDIA found that, apart from Tesla, the only automakers that met its requirements for deployment were in China.

NVIDIA had established partnerships with several international auto giants, but those companies tended to keep autonomous driving at the research stage rather than push it into mass production. They invested relatively little in it, and their software and algorithm capabilities were comparatively weak. This held back commercial deployment in the short term.

By contrast, the emerging Chinese automakers, particularly XPeng, NIO, and LI Auto, were eagerly seeking breakthroughs in vehicle intelligence at the time, especially in intelligent driving. They initially adopted Mobileye’s solutions, but as they grew they consistently stressed the importance of developing their own autonomous driving algorithms to build core competitiveness.

Fortunately, thanks to its open business model, NVIDIA did not restrict automakers from developing their own autonomous driving algorithms. It also gave them strong low-level and software support. NVIDIA’s solutions were not without drawbacks, though, high cost being one.

As a result, these emerging Chinese automakers, with their strong software and algorithm capabilities, became important partners in NVIDIA’s commercial deployment of autonomous driving. Conversely, without the computing foundation NVIDIA provided, these automakers might have found it much harder to develop their own algorithms.

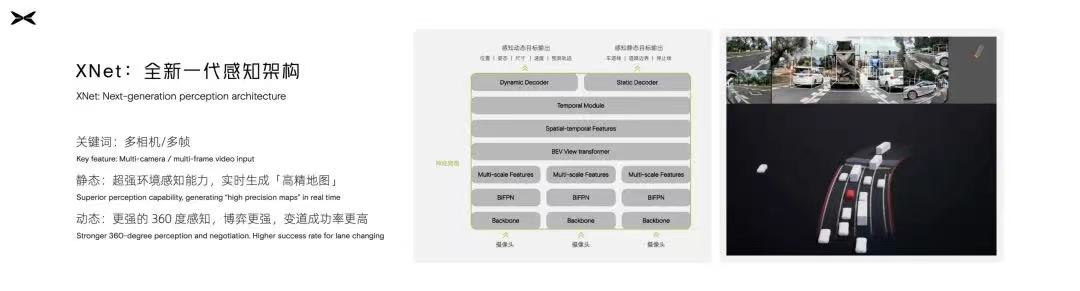

Among these emerging automakers, the one that was the most aggressive in developing its own algorithms was XPeng.

XPeng announced its collaboration with NVIDIA on the DRIVE Xavier computing platform as early as November 2018. In April 2020, after a year and a half of development, the XPeng P7, equipped with the NVIDIA DRIVE Xavier autonomous driving computing platform, finally launched.

In this project, NVIDIA provided the chips and underlying software support. XPeng, as the automaker, fully controlled the autonomous driving algorithms and data, from perception to decision-making.

Thus, after NVIDIA DRIVE PX 2 had been successfully deployed in Tesla’s cars, its successor, DRIVE Xavier, finally made it into a mass-produced car as well.

Why has Transformer+BEV become the paradigm?

Around 2020, AI and autonomous driving became part of the Chinese auto industry’s push toward digitalization and intelligence, and China’s new carmakers were emerging. Together, these made the Chinese market an increasingly important hotbed for deploying autonomous driving.

For example, in December 2019, at NVIDIA GTC China in Suzhou, Jensen Huang unveiled DRIVE Orin, the new-generation autonomous driving platform. Like Xavier, it is an SoC, and it delivers 7 times the performance of the previous Xavier SoC. In theory, it can scale from L2 to L5.

Commercially, China’s new carmakers adopted DRIVE Orin much faster than they had adopted the previous generation.

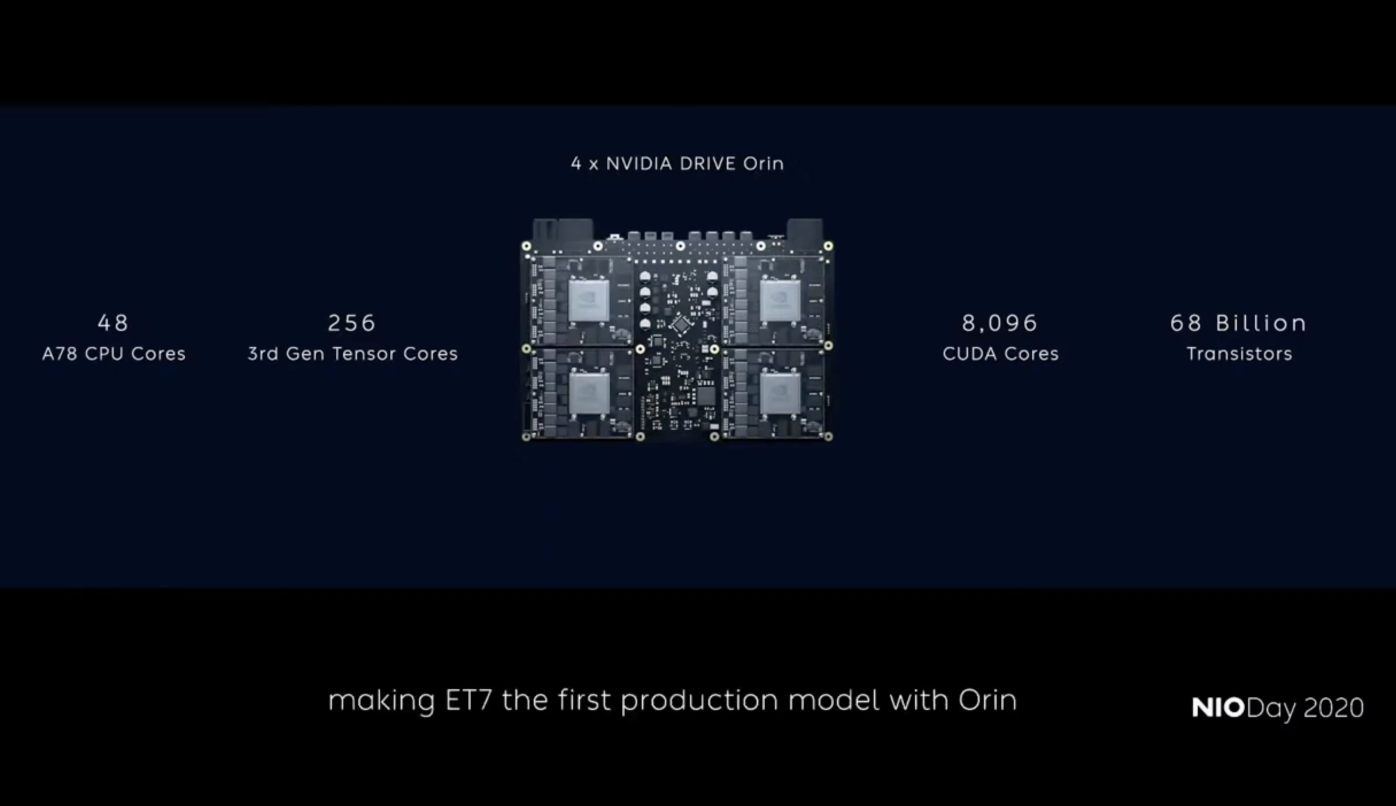

In April 2020, XPeng Motors announced that it would continue its cooperation with NVIDIA, and that its next-generation intelligent electric models would again carry NVIDIA’s AI autonomous driving computing platform. LI Auto then said it would use the DRIVE Orin chip in the full-size extended-range SUV it planned to launch in 2022. NIO went further still, announcing that its later ET7 would carry four DRIVE Orin chips for up to 1,016 TOPS of computing power.

By then, the three leading new Chinese carmakers, NIO, XPeng, and LI Auto, had all committed to projects built on NVIDIA’s DRIVE Orin chip.

While NVIDIA was building partnerships with these new carmakers, Horizon Robotics, a startup supplying autonomous driving chips, had also emerged in China. It was founded by Kai Yu, who once bid for the AlexNet team, after he left Baidu. Its chips went into production with Changan Automobile in 2020 and were later fitted to the LI ONE in 2021, providing the computing foundation for LI Auto’s highway NOA function.

Beyond startups, another player that cannot be overlooked is Chinese tech giant Huawei.

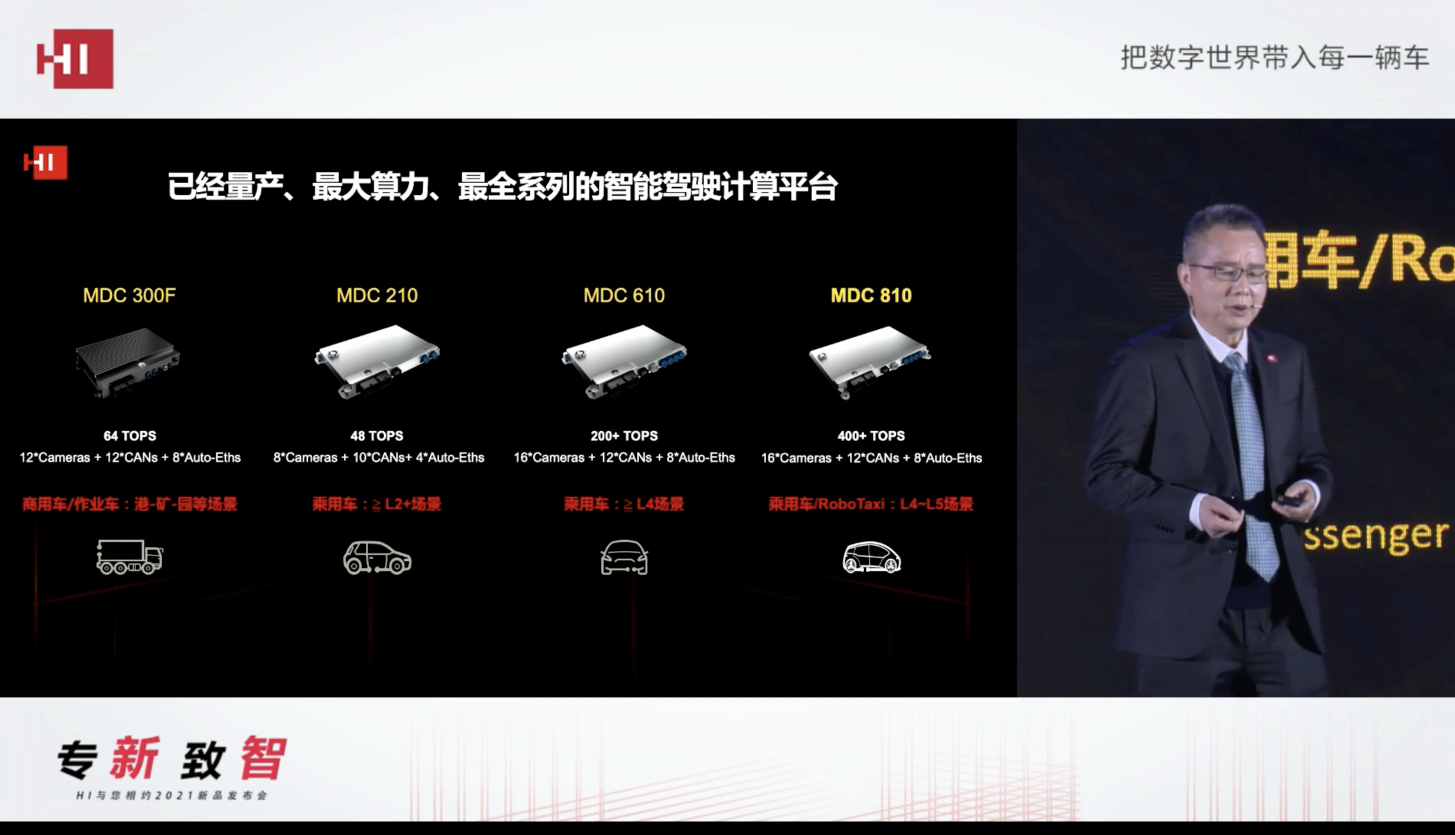

Huawei established its Intelligent Automotive Solution BU in 2019, applying its capabilities in chips, cloud computing, software, and other fields to intelligent vehicles, with intelligent driving as the top priority. Its MDC autonomous driving computing platform, built on the self-developed Ascend AI chip, became Huawei’s “ace”.

However, computing power is only the foundation. To truly tap into the power of computing, a breakthrough at the algorithmic level is needed.

Coincidentally, in the second half of 2021, these automakers’ autonomous driving teams were deep in their own algorithm research. Just then, the Transformer + BEV approach Tesla presented at AI Day 2021 began to spark discussion, attention, and imitation across the autonomous driving field.

Tesla was not the only company to notice the Transformer’s potential in computer vision and autonomous driving. Across the ocean in China, many companies had also set their sights on it. AutoX, for example, began trying to apply the Transformer to perception algorithms as early as March 2021.

On this, an autonomous driving chip industry practitioner commented:

Compared with domestic companies, what really makes Tesla powerful is its ability to engineer the most cutting-edge technologies, such as the Transformer, into products. Many technical approaches come from academia, and some are reliable while others are not. Tesla always takes these papers into industrial settings for validation as quickly as possible and puts their value to work. For other companies, validating hundreds of academic papers in practice would be costly. By doing it first, Tesla has in effect done verification work for the entire industry. That is its contribution to the industry.

Thanks to the inspiration Tesla AI Day gave the whole industry at the algorithm level, the Transformer also gained traction in China. Many automakers and algorithm companies began improving their algorithms on top of it.

Following Tesla, automakers including XPeng, LI Auto, and NIO, along with autonomous driving solution providers such as Huawei and AutoX, all rewrote their algorithm architectures. In doing so, they adopted technical paths similar to Tesla’s Transformer + BEV. Notably, thanks to the late-mover advantage, the three automakers finished their rewrites faster than Tesla had.

Once chip suppliers such as Nvidia and Horizon recognized the importance of Transformer + BEV for mass-produced autonomous driving, they also did a great deal of software-framework adaptation for the Transformer. Nvidia, for instance, developed a dedicated engine for the Transformer, and Horizon proposed an end-to-end algorithm framework based on BEV + Transformer.

As a result, the Transformer + BEV scheme has become the paradigm adopted by many car companies as they move toward mass-produced autonomous driving.

Though they have parted ways, they still meet from time to time

In 2022, as mass production became the main trend, autonomous driving kept pushing for new technological breakthroughs.

On the production side, NIO, LI Auto, and XPeng all chose the NVIDIA DRIVE Orin chip and launched key models with it in 2022. As intelligent features began to influence buyers’ purchase decisions, DRIVE Orin firmly secured the high end of the intelligent driving computing platform market.

Nevertheless, NVIDIA did not stop there.

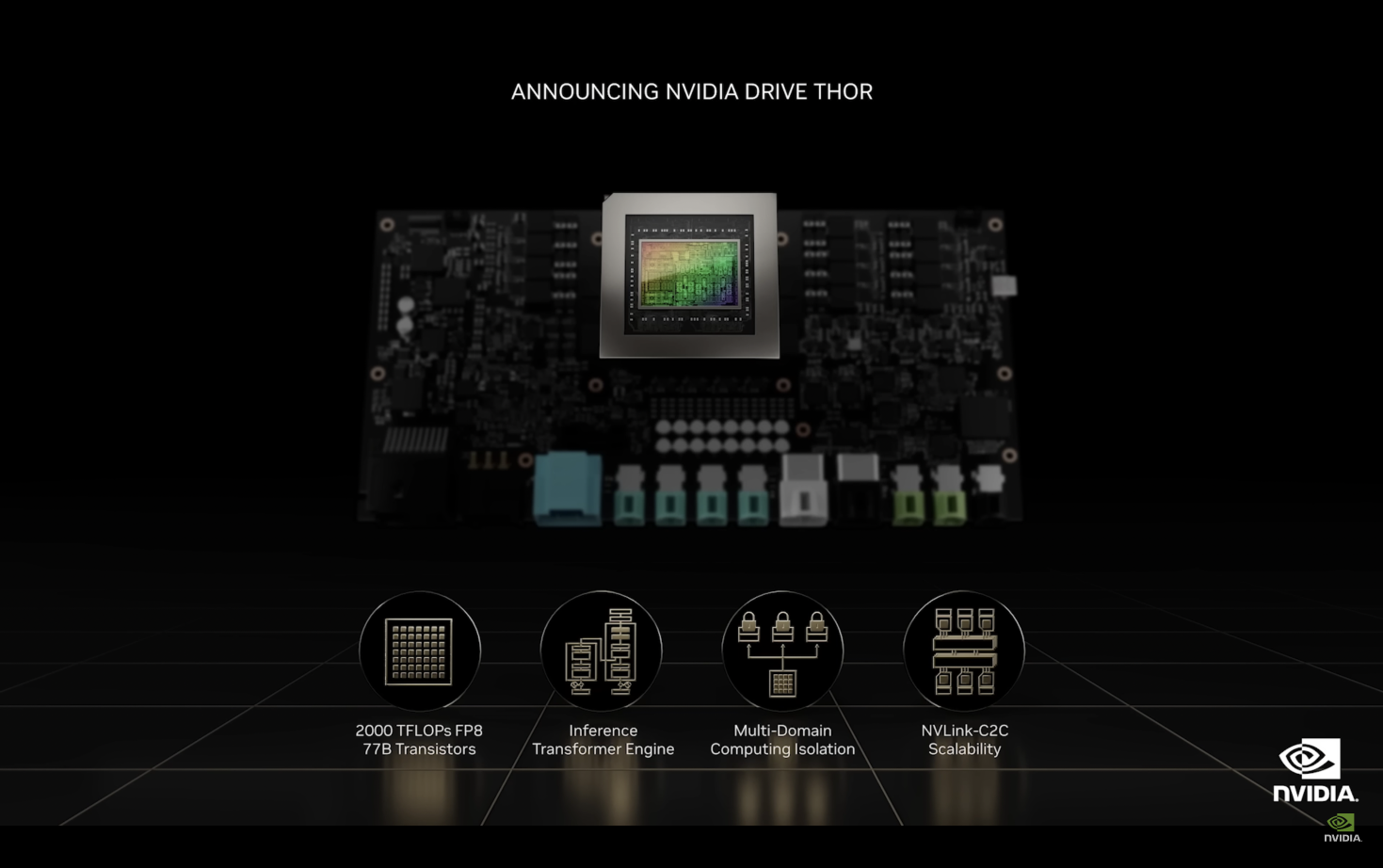

On September 20, 2022, NVIDIA released DRIVE Thor, its next-generation SoC for intelligent vehicles. It is a performance monster whose single-chip computing power can reach 2,000 TOPS. Notably, Thor adds dedicated support for Transformer models.

With the release of DRIVE Thor, NVIDIA boldly scrapped DRIVE Atlan, announced at GTC 2021, before it ever reached production. One reason was that intelligent driving algorithms in the auto industry were evolving too fast.

DRIVE Thor also reflected a bigger picture in the auto industry. Driven by electrification and intelligence, vehicle electronic and electrical architectures were changing rapidly, moving from distributed computing to domain fusion and even central computing.

Thor is therefore positioned as a car’s central computing platform, integrating all AI computing needs in an intelligent vehicle: intelligent driving, active safety, the intelligent cockpit, automatic parking, the vehicle operating system, infotainment, and more. On the day of Thor’s release, Zeekr, not to be outdone, announced that it had signed on as a Thor customer, with mass production planned for 2025.

Of course, while computation power continues to break through, there are also new breakthroughs at the algorithmic level.

Just ten days after NVIDIA released Thor, Tesla held AI Day 2022. On the perception side, the Occupancy Network became a buzzword. Its core capability is recognizing general obstacles, since a BEV representation alone is not enough.

Besides the Occupancy Network, Tesla also introduced NeRF, an algorithm proposed in 2020. Combined with the Occupancy Network, it enables 3D rendering of the vehicle’s surroundings. Beyond perception, Tesla also devoted considerable time at AI Day 2022 to new algorithms for planning.
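NeRF renders a pixel by marching along the camera ray. At each step it samples a density σ and a color c, then composites the samples front to back. The sketch below implements that discrete volume-rendering sum from the NeRF paper for a single ray:

C = Σᵢ Tᵢ (1 − exp(−σᵢ δᵢ)) cᵢ, with Tᵢ = exp(−Σ_{j<i} σⱼ δⱼ)

The per-sample densities, colors, and spacings δ are assumed to be given; in NeRF they come from a trained network. The function name is hypothetical, and nothing here reflects how Tesla actually pairs NeRF with its Occupancy Network.

```python
import math
from typing import List, Sequence

def render_ray(sigmas: Sequence[float], colors: Sequence[Sequence[float]],
               deltas: Sequence[float]) -> List[float]:
    """Composite samples along one ray, front to back (NeRF-style discrete volume rendering)."""
    out = [0.0] * len(colors[0])
    transmittance = 1.0                          # T_1 = 1: nothing blocks the first sample
    for sigma, c, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)   # probability the ray terminates in this segment
        weight = transmittance * alpha
        for k in range(len(out)):
            out[k] += weight * c[k]
        transmittance *= 1.0 - alpha             # T_{i+1} = T_i * exp(-sigma_i * delta_i)
    return out
```

An empty sample (σ = 0) contributes nothing and lets all light through, while a very dense sample behind it absorbs the whole ray. A ray through empty space followed by an opaque red surface therefore renders pure red.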

Notably, Karpathy was no longer Tesla’s presenter at AI Day 2022.

Karpathy had gone on leave in March 2022 and announced his departure from Tesla a few months later. The reason he gave was that he preferred to focus on AGI rather than management.

So, could there be other reasons for Andrej Karpathy’s departure?

An autonomous driving engineer shared this view:

During his tenure at Tesla, Karpathy took autonomous driving from 0 to 70. That work demanded immense creativity, and it is exactly what top AI talents like him prefer to do. After BEV+Transformer, however, Tesla’s next step was to go from 70 to 80, a stage of resolving countless corner cases. Karpathy didn’t find that appealing, so he decided to leave.

An expert in autonomous driving algorithms also suggested that Karpathy may have disagreed with Musk on the technical direction of autonomous driving.

The expert pointed out that Tesla’s end-to-end model does not appear to be GPT-based, whereas Karpathy might have preferred to pursue GPT. Understanding semantics is crucial in driving scenarios, and that direction holds promise. If Karpathy pushed for GPT and Musk did not endorse it, that could have contributed to his departure.

Regardless, Karpathy’s departure has not slowed Musk’s relentless pursuit of advances in autonomous driving.

After two and a half years of rollout, FSD Beta has accumulated ever more mileage among users. At the same time, Musk is pushing ahead with HW4.0, a new and more powerful in-house FSD chip, while continuing to look for better technical paths at the algorithm level.

In particular, beyond the existing FSD algorithms, Musk has repeatedly focused on end-to-end autonomous driving. This new paradigm covers perception, prediction, planning, control, and more as a whole, which brings it closer to how humans drive.

Interestingly, the end-to-end concept Musk keeps emphasizing was popularized by a 2016 paper titled “End to End Learning for Self-Driving Cars”. That paper was written by NVIDIA, back when it was eagerly venturing into autonomous driving.

Therefore, even while diverging, Tesla and NVIDIA still manage to cross paths from time to time.

AI’s iPhone moment & Tesla’s ChatGPT Moment

In February 2023, Andrej Karpathy revealed his next career destination: OpenAI.

This was hardly surprising. After releasing ChatGPT in late November 2022, OpenAI became the most closely watched AI company in the world. More importantly, ChatGPT, followed by GPT-4, was widely seen as opening a path for the industry toward AGI (Artificial General Intelligence).

Karpathy had long been focused on AGI.

Even at Tesla, Karpathy kept a keen eye on GPT. One telling sign came in August 2020. As GPT-3 was sweeping the globe, and while he was in the middle of rewriting Tesla’s Autopilot architecture, he found the time to write minGPT, a minimal GPT training library.

So after ChatGPT’s sudden arrival, Karpathy’s return to OpenAI was only to be expected.

NVIDIA was equally thrilled by OpenAI’s breakthroughs. At GTC in March 2023, in light of ChatGPT’s overnight success, Jensen Huang remarked:

The iPhone moment of AI has begun. Startups are racing to build disruptive products and business models, while incumbents are looking for ways to respond. Generative AI has created a sense of urgency for enterprises worldwide to develop AI strategies.

However, Musk has complex feelings about the AI frenzy triggered by OpenAI and ChatGPT.

On one hand, both as CEO of Tesla and as head of Twitter, Musk has embraced the new wave of AI in his own way, for example by buying GPUs aggressively.

On the April earnings call, he said Tesla would continue to invest heavily in NVIDIA GPUs. Musk also confirmed on Twitter that Twitter had bought around 10,000 NVIDIA compute GPUs, adding that practically every company, Tesla and Twitter included, was buying GPUs for advanced computing and AI applications.

On the other hand, although Musk co-founded OpenAI, he later parted ways with it for various reasons. After Microsoft’s investment, OpenAI moved away from its earlier open-source orientation toward a commercial one and is no longer “open”. This deeply disappoints Musk.

In an interview about Tesla and ChatGPT, Musk said:

I believe Tesla will also experience this so-called ‘ChatGPT moment’. If it doesn’t happen this year, it definitely won’t be later than next. Suddenly, 3 million Tesla cars are capable of self-driving…then 5 million…then 10 million…Imagine if we switch places, with Tesla creating a language model as powerful as ChatGPT, and Microsoft and OpenAI working on autonomous driving. We swap tasks. There’s no doubt we would win.

In Musk’s view, then, Tesla’s autonomous driving technology is approaching a critical moment. Despite the challenges, he believes it is poised for a ChatGPT-style breakthrough.

In the United States, Tesla is fairly alone in this race. In China, however, the AI wave set off by ChatGPT has caught the attention of automakers and algorithm companies, who are starting to make strategic moves. For example:

- XPeng Motors stated that GPT would affect XPeng in the short, medium, and long term. In the long run, once GPT is deployed on the vehicle and augmented by cloud computing, it will help intelligent vehicles evolve from L4 to L5.

- NIO’s management has repeatedly stressed that vehicles are the best place to deploy large models like GPT.

- LI Auto announced in June that it had integrated its self-developed Mind GPT into ‘LI Pupils’, its in-car assistant, enabling features such as voiceprint recognition, content recognition, dialect recognition, travel planning, AI drawing, AI computing, and more.

Beyond these high-profile automakers, companies that provide autonomous driving algorithm solutions, such as Huawei, Momenta, and SenseTime, are also intensively exploring how the Transformer and GPT can be applied to autonomous driving. Within the broad Transformer framework, some of the latest research results in autonomous driving have come out of China.

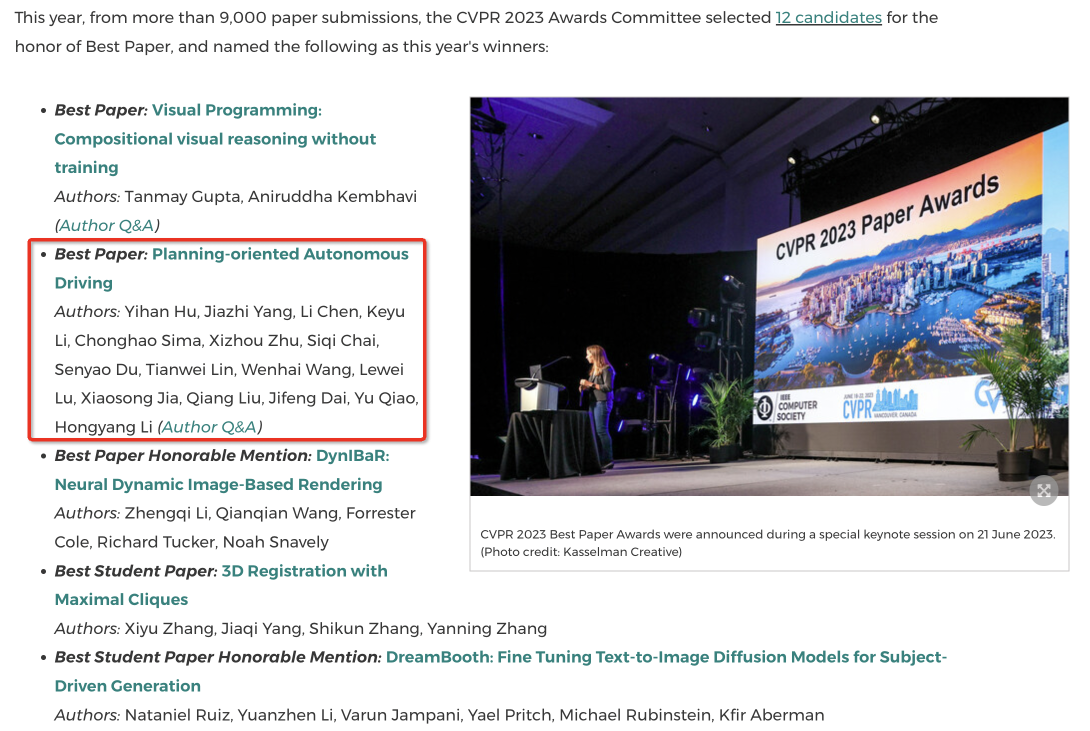

Notably, at CVPR 2023, the most recent edition of the top international conference in computer vision, a paper on autonomous driving won the best paper award for the first time. Titled "Planning-oriented Autonomous Driving", it is a joint publication from the Shanghai AI Lab, Wuhan University, and the SenseTime team in China.

The paper presents UniAD (Unified Autonomous Driving), an algorithm framework that merges the major components of an autonomous driving stack, such as perception, prediction, and planning, into a single end-to-end framework oriented toward the final planning task. Like much of the recent work above, it is built on the Transformer.

Although the approach has not yet been validated in mass production, the paper is significant enough that one industry insider, who works on deploying autonomous driving at a new energy vehicle company, has dubbed it the 'Light of Autonomous Driving'.

And this 'Light of Autonomous Driving' came from China.

The Journey Is Long, the Quest Continues

In fact, autonomous driving as a concept has been around for nearly a century.

In August 1925, a Chandler automobile named 'American Wonder' appeared on the bustling streets of New York. With no one at the wheel, it was controlled through a set of radio equipment that made it accelerate, decelerate, and turn. This was the first 'autonomous vehicle' in human history.

Ever since, the idea has been waiting for a real opportunity to be put into practice.


In 2012, with the explosion of AI and deep learning, a number of pioneers finally saw the possibility of deploying autonomous driving at scale in the automotive industry, and over the following decade both AI and autonomous driving made enormous progress.

From AlexNet to ChatGPT, AI development has undergone a ‘singularity’-like transformation in the last decade.

Over the same decade, from the moment Musk announced Tesla's entry into autonomous driving to today's 'ChatGPT' era, autonomous driving has stopped being a fictional, unattainable concept. Its potential value is gradually becoming clear as navigation-assisted driving is deployed on highways and in cities.

Looking back, this period can fairly be described as one of 'starry brilliance'.

The stars in question are the companies and individuals who have led, pushed, and participated in the intertwined advance of AI and autonomous driving. Innovation in technology and business models is a key part of their story, but we cannot overlook that mass-producing autonomous driving is fundamentally an engineering problem, so engineering execution matters enormously.

In this process, American tech giants such as NVIDIA and Tesla have certainly played the pioneering role of taking the field from 0 to 1. Meanwhile, China's new automakers, represented by XPeng, NIO, and LI Auto, and its autonomous driving solution providers, represented by Huawei, Horizon, Momenta, and SenseTime, have explored a broader path to deployment by learning, following, and innovating.

Of course, as things stand, this road is long and its end is not yet in sight. It may grow increasingly rugged and arduous, and some have already chosen to leave.

Still, many people continue to press forward along this road.

Musk, for example, is still looking for an algorithm more efficient than the Transformer; NVIDIA is still exploring opportunities in centralized computing architectures and end-to-end implementation; and China's new automakers and autonomous driving companies keep finding new technical paths through practical deployment on real Chinese roads, and have achieved numerous breakthroughs…

To these diligent practitioners, autonomous driving is not just a technical problem worth striving for but also a way to empower the automotive industry and make human travel safer through technology. For many engineers, it is closer to a belief.

In October 2022, a few months after leaving Tesla, Andrej Karpathy sat down for an interview with well-known tech podcaster Lex Fridman. While discussing the feasibility of autonomous driving, the two had an interesting exchange:

> L: It's like you're climbing a mountain: there's fog, but you keep making great strides.
>
> A: Amid the fog, you're making progress, and you can see what's ahead. The remaining challenges don't deter you, nor do they change your philosophy. You refuse to distort yourself. You'd say, in fact, these are the things we still need to do.

Perhaps one day, after passing through the heavy fog, some will stand atop the once-unattainable peak of 'autonomous driving', look back at the path they have traveled, and rejoice in their unwavering perseverance.

References:

[01] ImageNet Classification with Deep Convolutional Neural Networks

[02] Tesla moves ahead of Google in race to build self-driving cars, FT

[03] Andrej Karpathy on the visionary AI in Tesla’s autonomous driving

[04] Software 2.0, Andrej Karpathy

[05] Automatic Parking Based on a Bird’s Eye View Vision System

[06] Pseudo-LiDAR from Visual Depth Estimation: Bridging the Gap in 3D Object Detection for Autonomous Driving

[07] Attention is All You Need

[08] End-to-End Object Detection with Transformers

[09] End to End Learning for Self-Driving Cars

[10] Planning-oriented Autonomous Driving

[11] Andrej Karpathy: Tesla AI, Self-Driving, Optimus, Aliens, and AGI | Lex Fridman Podcast

[12] Elon Musk: Neuralink, AI, Autopilot, and the Pale Blue Dot | Lex Fridman Podcast

[13] Full Interview with Tesla CEO Elon Musk on CNBC Television

[14] Tesla Autonomy Day 2019

[15] Tesla AI Day 2021/2022

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.