Author: Michelin

The relationship between people and cars is inseparable from the word “interaction”. From the mechanical buttons of the traditional car era to the touch screens and voice interaction of the smart car era, finding safer, more efficient, and more comfortable ways to interact has always been the industry’s common pursuit. Which kind of interaction is most appropriate remains a perennial question in the industry.

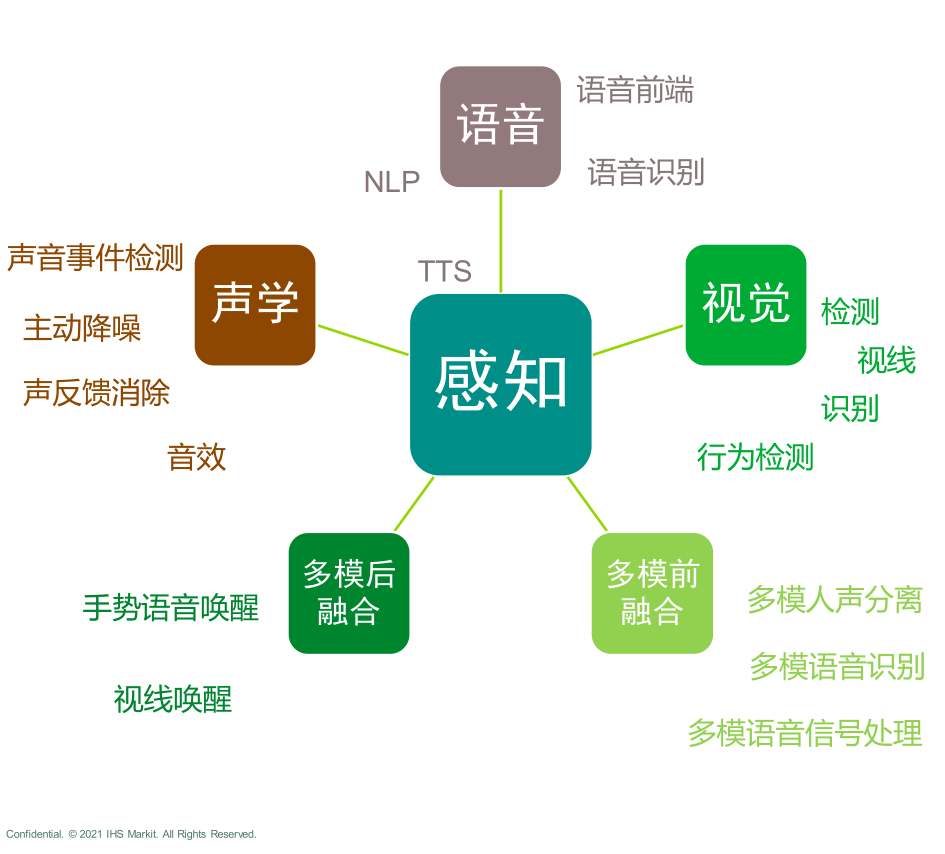

In the previous Orange Book, “Voice Interaction: From Impetuousness to Pragmatism, Stubbornly Pursuing Experience Value”, we explored the growth and trends of voice interaction over the past year. Behind the shift toward pragmatic voice interaction lies a broader process: cabin systems are gradually adapting to and accommodating people’s natural interaction habits. This goes beyond voice. Multimodal interaction built on voice, DMS/OMS (driver and occupant monitoring system) functions that monitor the cabin in real time and respond accordingly, the future integration of cabin and driving, and the AI technology that powers them are all improving, and together they are transforming the cabin system from passive interaction to active interaction.

In the process of turning to active interaction, users can communicate with the system in a more natural way, and even vague instructions and intentions can be understood by the system. The system also tries to provide more refined services based on the user and the state inside the cabin.

To better illustrate our observations, this time we talked to the Horizon Intelligent Interaction team to explore how cabin interaction can provide more fine-grained active services.

DMS/OMS: The Evolution of a “Troublemaker”

If some intelligent features in the cabin are often dismissed as “showing off”, DMS/OMS functions are definitely representative of the practical ones.

Using the in-cabin camera, the system can track the driver’s gaze on a designated screen and then automatically turn the screen on or wake the on-board assistant. With Face ID, the seat position and angle are adjusted automatically once the driver is recognized on entering the cabin. By monitoring the driver’s facial micro-expressions and eye movements, the system can issue timely warnings and reminders when the driver is distracted or fatigued. By recognizing smoking, sleeping, and other situations through the camera, the system can deliver scenario-based services such as automatically adjusting the windows, air circulation, and volume.

Efficiency, safety, and comfort: DMS/OMS functions cover all three fundamental needs of the cabin.

Recently, monitoring data from the intelligent automotive industry showed that from January to November 2022, DMS was installed as standard on a total of 999,500 passenger vehicles, an increase of 111.8% year on year. Among DMS perception solution suppliers, Horizon Robotics (chip + perception), SenseTime, and Hikvision hold the top three market shares. DMS penetration is forecast to reach 35% by 2026, and the trend toward fitting DMS/OMS in vehicles is unstoppable.

However, in the “2021 Intelligent Cockpit Orange Book,” we gave a less-than-positive evaluation of the DMS/OMS functionality at the time, calling it a “troublemaker.”

This is because, in earlier cabin evaluations, DMS/OMS caused trouble through false triggers and false alerts in fatigue monitoring, distraction warnings, smoking alarms, and other scenarios, which kept us from rating it higher at the time. The “Sleeping while driving” fiasco, in which the system misjudged a person’s actions as sleeping, also made more people aware of the problems facing DMS/OMS: differences in physiological features, in movement habits, and in user demands all create problems that DMS/OMS must solve in practical applications.

The key to improving the “troublemaker” DMS/OMS lies in providing more accurate and appropriate services while avoiding the trouble caused by false triggers and false alerts.

On the one hand, common functional scenarios need to be mapped out, so that likely false alarms can be filtered by identifying which scenario the vehicle is in.
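
To illustrate what scenario-based filtering can look like, here is a minimal Python sketch. The alert types, the `CabinContext` fields, and the rules are all hypothetical, chosen for illustration; they are not the logic used by any supplier mentioned in this article.

```python
from dataclasses import dataclass


@dataclass
class CabinContext:
    # Hypothetical vehicle signals; a real system would read these from the vehicle bus.
    speed_kmh: float
    gear: str  # e.g. "P", "D", "R"
    window_open: bool


def should_raise(alert: str, ctx: CabinContext) -> bool:
    """Decide whether an alert from the perception model should reach the occupants."""
    parked = ctx.gear == "P" and ctx.speed_kmh == 0.0
    if alert in ("fatigue", "distraction"):
        # Eyes closed or looking away while parked poses no driving risk.
        return not parked
    if alert == "smoking":
        # The ventilation service is only needed if the window is still closed.
        return not ctx.window_open
    # Other alert types pass through unfiltered.
    return True
```

Checking the scenario before alerting means that, for example, closed eyes in a parked car no longer trigger a fatigue warning.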

On the other hand, technical standards need reasonable, scientifically grounded indicators. Whether a person is fatigued, and what kind of fatigue they are experiencing, is not a simple yes-or-no question, so a single, mechanical trigger threshold will inevitably cause false triggers and false alerts. The key to improving DMS/OMS performance is to refine the trigger mechanism so that it is more precise.

In the past year, many new car models have been equipped with DMS/OMS functions. For example, the Chery Tiggo 8 PRO is equipped with an AI emotional super interactive system that detects the driver’s body language, fatigue level, and distraction level based on face recognition and gaze tracking, and intelligently pushes scene modes such as chat, music volume, and air-conditioning temperature to help relieve driver fatigue.

To explore how to control the trigger threshold precisely, we interviewed the product and R&D staff behind the Chery Tiggo 8 PRO and the R&D staff of Horizon, its DMS supplier. To make the trigger criteria more accurate and reasonable and to avoid false triggers, the Chery Tiggo 8 PRO and Horizon DMS teams divided the monitoring of the driver’s state into fine-grained categories:

For example, in the distraction scenario, the driver’s possible gaze points are divided into fourteen areas to make the trigger threshold more precise, and these areas are graded into levels such as a danger zone and a warning zone according to their impact on driving. Naturally, the risk posed by distraction differs depending on which area the gaze rests on and for how long.
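
The zone-plus-dwell-time idea can be sketched as follows. This is an illustrative assumption, not the Chery/Horizon implementation: four hypothetical zones stand in for the fourteen, and the dwell limits are invented numbers.

```python
from enum import Enum


class ZoneLevel(Enum):
    SAFE = 0     # e.g. the road ahead
    WARNING = 1  # e.g. mirrors, center screen
    DANGER = 2   # e.g. footwell, a phone on the lap


# Hypothetical subset of gaze zones and their risk levels.
ZONE_LEVELS = {
    "road_ahead": ZoneLevel.SAFE,
    "left_mirror": ZoneLevel.WARNING,
    "center_screen": ZoneLevel.WARNING,
    "footwell": ZoneLevel.DANGER,
}

# Hypothetical dwell-time limits in seconds: riskier zones trigger sooner.
DWELL_LIMIT_S = {ZoneLevel.WARNING: 3.0, ZoneLevel.DANGER: 1.5}


def is_distracted(zone: str, dwell_s: float) -> bool:
    """Flag distraction when the gaze stays in a risky zone past its limit."""
    level = ZONE_LEVELS.get(zone, ZoneLevel.DANGER)  # unknown zones treated as risky
    if level is ZoneLevel.SAFE:
        return False
    return dwell_s >= DWELL_LIMIT_S[level]
```

The same two-second glance is tolerated at the center screen but flagged in the footwell, which a single global threshold cannot express.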

At the same time, driver distraction and fatigue are further graded into levels such as mild, moderate, and severe.

Accounting for how these levels differ, for example in physiological responses to external stimuli and in how effective warnings are at different time intervals, allows DMS/OMS to provide more accurate and detailed services.
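
A sketch of graded triggering, assuming a PERCLOS-style fatigue score (the fraction of a time window during which the eyes are mostly closed). The band boundaries and reminder intervals are hypothetical; the point is that a continuous score maps to several levels, each with its own warning cadence, instead of one yes/no threshold.

```python
from typing import Optional, Tuple

# Hypothetical bands, checked from most to least severe:
# (lower bound on the score, level name, reminder interval in seconds)
LEVELS = [
    (0.40, "severe", 10),
    (0.25, "moderate", 60),
    (0.15, "mild", 300),
]


def grade_fatigue(perclos: float) -> Optional[Tuple[str, int]]:
    """Map a continuous fatigue score to a level and how often to remind."""
    for lower, name, interval_s in LEVELS:
        if perclos >= lower:
            return name, interval_s
    return None  # below every band: no reminder
```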

In the future, as in-cabin sensors grow in number and performance and the cabin becomes more integrated, DMS/OMS will need more than indicators from single sensors such as cameras or millimeter-wave radars: fusing multidimensional perceptual information will provide more reliable decision input. DMS/OMS itself has also become part of multimodal interaction in the cabin.
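
One common way to fuse several sensors’ estimates of the same state is to add their log-odds. The sketch below assumes each sensor (say, a camera and a millimeter-wave radar) already outputs a probability that the driver is fatigued; it is a textbook naive-Bayes combination, not a description of any production DMS.

```python
import math
from typing import Sequence


def logit(p: float) -> float:
    return math.log(p / (1.0 - p))


def fuse(probs: Sequence[float], prior: float = 0.5) -> float:
    """Combine per-sensor probabilities of the same event (e.g. "driver is fatigued").

    Naive-Bayes log-odds fusion: assumes the sensors are conditionally
    independent given the true state and that each estimate used the same
    prior. Probabilities must lie strictly between 0 and 1."""
    log_odds = logit(prior) + sum(logit(p) - logit(prior) for p in probs)
    return 1.0 / (1.0 + math.exp(-log_odds))
```

Two sensors that each report 0.8 combine to 16/17 (about 0.94), while conflicting reports of 0.8 and 0.2 cancel out to 0.5. Because the independence assumption rarely holds exactly, a real system would need to calibrate or down-weight correlated sensors.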

In the next stage, DMS/OMS suppliers will need to provide not only standalone software algorithms but also hardware-software integration, together with the chip and AI perception capabilities behind them.

Of course, the purpose of DMS/OMS is to provide users with safe, efficient, and convenient services. Distinguishing the services users truly need from unnecessary disturbances, and addressing the privacy concerns raised by in-cabin cameras, remain issues to be resolved as this technology evolves.

Multimodal Interaction: Fusing Multi-dimensional Information to Make Interaction More Human-like

Look at the window and say “bigger”, and the window opens automatically; stare at the air conditioner and say the same thing, and the command becomes turning up the air conditioning; look at a building outside and say “I want to know the purpose of this building”, and the intelligent assistant automatically searches and tells you the result…
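
These scenes amount to resolving a vague utterance with a visual cue. A minimal sketch, with hypothetical target names and action identifiers: the same word maps to different commands depending on what the user is looking at.

```python
from typing import Optional

# Hypothetical mapping from (gaze target, vague utterance) to a concrete action.
ACTIONS = {
    ("window", "bigger"): "window.open",
    ("air_conditioner", "bigger"): "hvac.increase",
}


def resolve(utterance: str, gaze_target: Optional[str]) -> Optional[str]:
    """Resolve an ambiguous voice command using where the user is looking."""
    key = (gaze_target, utterance.strip().lower())
    # None means the command stays ambiguous; the assistant could ask a follow-up.
    return ACTIONS.get(key)
```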

This is a scene we have seen in concept cars in the past. Multimodal interaction technology, which integrates voice recognition, visual recognition, gesture recognition, and other interaction methods in the cabin, matches our vision of future technology.

Over the past few years, intelligent cabins have featured multiple coexisting forms of interaction, including physical buttons, knobs, touch, voice interaction, gesture interaction, and facial recognition, and these have become mainstream in the industry.

However, whereas each form of interaction used to “fight alone”, the essence of multimodal interaction lies in linking them together. Because the information each form captures complements the others, the system can understand and execute the user’s intention in a more natural way, and even infer intentions that have not been spoken aloud.

In the past year, we have seen the first attempts at multimodal interaction.

For example, the Li Auto L9 fuses a 3D ToF camera with voice interaction: point at the sunshade and say “open this”, and the sunshade opens automatically. This scenario may not seem very attractive, since a button can also open the sunshade, but fusing voice and gesture interaction creates more possibilities for cabin interaction, such as improving interaction accuracy.

In the cockpit of the Great Wall Alpha S HI model, speech recognition and lip movement recognition are combined. Fusing the visual and audio modalities improves speech recognition accuracy in a noisy, crowded cockpit. Horizon’s cabin solution, Horizon Halo, built on the Horizon Journey 2 and Journey 3 chips, adopts a similar audio-visual fusion scheme to improve recognition accuracy, and achieves active interaction by fusing data from multiple sensors, including vision and speech.
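
Audio-visual speech recognition can fuse the two modalities at several stages. As one illustrative option, the sketch below shows score-level (late) fusion: each candidate transcript gets an audio score and a lip-reading score, and the lip-reading score counts for more as the cabin gets noisier. The SNR ramp and the weight bounds are assumptions, not parameters of the Alpha S HI or Horizon Halo systems.

```python
from typing import Dict


def fuse_hypotheses(audio_scores: Dict[str, float],
                    visual_scores: Dict[str, float],
                    snr_db: float) -> str:
    """Pick the transcript with the best weighted audio + lip-reading score.

    Scores are log-probabilities for each candidate transcript. The audio
    weight follows a hypothetical linear ramp on SNR, clamped to [0.2, 0.8]
    so that both modalities always contribute."""
    w_audio = min(max(snr_db / 20.0, 0.2), 0.8)
    # Only transcripts scored by both models are compared.
    candidates = audio_scores.keys() & visual_scores.keys()
    return max(
        candidates,
        key=lambda c: w_audio * audio_scores[c] + (1.0 - w_audio) * visual_scores[c],
    )
```

In a quiet cabin the audio model’s preference wins; at low SNR the lip-reading evidence can override it.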

Another example is the full-time non-wake-up function that appeared in cockpits in 2022: the voice assistant stays on standby, ready for input at all times without a wake-up word, which makes voice interaction more like natural human conversation.

Achieving this requires optimizing both the speech interaction architecture and the underlying speech capabilities. To further reduce the false wake-up rate and improve recognition accuracy, visual perception is also introduced: fusing speech with gesture recognition gives the system more redundant information.
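
The role of visual redundancy in a wake-word-free assistant can be sketched as a gate: speech is treated as a command only when visual cues agree that an occupant is addressing the system. Here the cues are lip movement and head orientation (any visual cue, including gestures, could play the same role); the feature names and the 0.7 threshold are hypothetical, and a real system would likely fuse these signals more gradually than a boolean AND.

```python
from dataclasses import dataclass


@dataclass
class Utterance:
    # Hypothetical per-utterance features from the audio and vision pipelines.
    speech_conf: float   # recognizer confidence that a command was spoken
    lips_moving: bool    # vision: an occupant's lips moved during the speech
    facing_system: bool  # vision: head or gaze oriented toward the screen/assistant


def accept_without_wake_word(u: Utterance, threshold: float = 0.7) -> bool:
    """Gate wake-word-free commands: audio evidence alone is not enough.

    Requiring the visual cues to agree filters out command-like audio that
    no occupant directed at the system, such as speech from the radio."""
    if u.speech_conf < threshold:
        return False
    return u.lips_moving and u.facing_system
```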

The Chery Tiggo 8 PRO, which adopts the Halo 3.0 solution, is the first model with a full-scenario multimodal interaction system, and it applies multimodal interaction technology to its full-time non-wake-up function.

According to developers on Horizon’s intelligent interaction team, the supplier of this solution, achieving full-time non-wake-up multimodal speech interaction meant combining the traditional speech recognition optimization chain with front-end visual processing: in-cabin video and speech data are fused, and the speech pipeline is rebuilt with vision as a key dependency. To make this work, speech data must be time-synchronized with visual data, and image data on the order of 10 billion samples must be processed, 100 times the volume required for facial recognition.
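
Time-synchronizing speech with vision means matching each audio frame to the video frame captured closest in time. A minimal sketch, assuming both streams are timestamped in seconds on a shared clock and sorted; with, for example, a 10 ms audio hop and 30 fps video, several audio frames map to each video frame.

```python
import bisect
from typing import List


def align_audio_to_video(audio_ts: List[float], video_ts: List[float]) -> List[int]:
    """For each audio frame timestamp, return the index of the nearest video frame.

    Both lists are sorted timestamps (seconds) on a shared clock; video_ts must
    be non-empty."""
    out = []
    for t in audio_ts:
        i = bisect.bisect_left(video_ts, t)
        if i == 0:
            out.append(0)  # before (or at) the first video frame
        elif i == len(video_ts):
            out.append(len(video_ts) - 1)  # after the last video frame
        else:
            # Choose whichever neighbouring video frame is closer in time.
            out.append(i if video_ts[i] - t < t - video_ts[i - 1] else i - 1)
    return out
```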

Handling such massive data requires sufficient computing power, edge computing to minimize latency, and continuously optimized AI models. This in turn requires suppliers like Horizon to offer a complete solution of chips, algorithms, and toolchains, along with the ability to optimize hardware and software jointly.

After fusion, the results become more precise: in highly noisy scenarios, the error rate of multimodal voice interaction falls by 50% in relative terms, taking the feature from unusable to usable under extreme conditions. When handling ambiguous instructions in particular, the system’s responses are closer to natural conversation.
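
A “50% relative” reduction halves the error rate rather than subtracting 50 percentage points. With a hypothetical baseline error rate of 30% in a very noisy cabin, and r denoting the relative reduction:

```latex
E_{\text{fused}} = E_{\text{base}}\,(1 - r) = 0.30 \times (1 - 0.50) = 0.15
```

so the error rate would fall from 30% to 15%.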

Of course, like DMS/OMS, multimodal interaction does not exist just to create a sci-fi experience. Some intelligent cabins contain designs that exist for their own sake, and the same is true of multimodal interaction experiments. Splitting a one-click or one-sentence operation into a multimodal interaction involving the user’s voice, gestures, body language, and expressions does not reduce the user’s task load; it increases it. Such features end up as a passing novelty and can even harm the user experience.

Only multimodal interaction that users truly need, and that meets their pressing needs, will be tried, accepted, trusted, and finally integrated into the intelligent cabin as a whole.

Conclusion: The Future of Cabin Interaction

What will the future of cabin interaction look like? Ask a thousand people and you may get a thousand answers. However, the relationship between humans, cars, and the environment remains the same: the cabin interaction system reduces the driver’s information-processing load and gradually adapts to human habits. After all, “laziness” is the primary driving force behind technological progress.

We can already see cabin interaction understanding user instructions better, using multi-dimensional perception systems to make comprehensive judgments about the cabin’s environment and state. In the future, it will also take into account the environment outside the cabin, the vehicle’s driving state, and even the system’s “memory” of past user states and behavior. By fusing information from more dimensions, it will be able to judge the user’s intent and even provide proactive, seamless services.

Of course, this requires a range of supporting capabilities: more powerful AI computing, higher-performance perception hardware, optimized deep neural networks and continued model iteration to handle edge cases, improved hardware specifications, and even linking information from inside and outside the car so that multimodal interaction can fuse perceptual information from more dimensions. Together, they will gradually move cabin interaction toward truly human-like intelligence.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.