Introduction

In the first year of mass-produced LiDAR, the new carmakers have been launching their self-developed visual perception algorithms one after another, and Tesla has announced that it is abandoning millimeter-wave radar entirely. Against this background, I have recently been thinking about what role millimeter-wave radar plays in autonomous driving. This article records some of my views to share with practitioners in the field, in the hope that more people will take part in the research and development of millimeter-wave radar technology for autonomous driving.

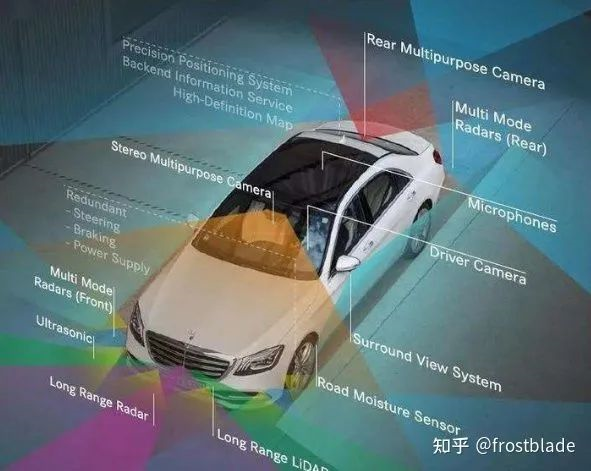

Currently, the main sensors used in autonomous driving are cameras, millimeter-wave radar, and LiDAR. Most new cars that emphasize autonomous driving features carry all three, with the expectation of achieving the best performance through fusion. Each sensor has its own strengths and weaknesses and has followed a different development path. Besides being fused, the sensors also compete with one another. For example, Tesla CEO Elon Musk promotes a pure-vision solution, dismisses LiDAR, and eventually even abandoned millimeter-wave radar. In addition, as the cost of LiDAR falls and its performance meets the requirements of mass-produced vehicles, it is gradually encroaching on the role traditionally played by millimeter-wave radar. This article mainly explores the role of millimeter-wave radar in autonomous driving and its future development direction.

The Development of Various Sensors in Autonomous Driving

Millimeter-Wave Radar

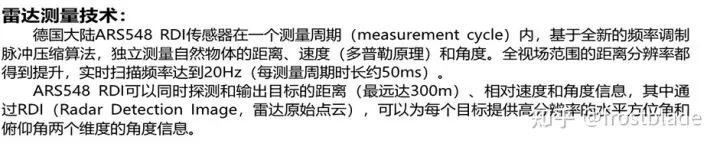

Millimeter-wave radar is arguably the earliest sensor applied to mass-produced autonomous driving. In 1999, the Distronic (DTR) radar control system installed on the Mercedes-Benz S-Class (W220) sedan could control the distance to the vehicle ahead at speeds of 40-160 km/h, realizing the basic ACC function. Today the major international millimeter-wave radar manufacturers, such as Bosch, Continental, and AMP, have developed their fifth-generation radars for factory (OEM) installation, with a new generation roughly every 2-3 years. Domestic millimeter-wave radar manufacturers have also had about 5 years of development, and the mass-produced products of the leading companies have approached or reached the international advanced level. Although accuracy and detection range have improved greatly, the technology as a whole has not yet achieved a fundamental breakthrough.

Current mass-produced millimeter-wave radar can only be called 3D (range-azimuth-Doppler) radar or quasi-4D radar: it has little or no ability to measure elevation and height. As a result, the radar alone cannot reliably identify stationary obstacles ahead, because it cannot distinguish vehicles (real obstacles) from bridges and manhole covers (false obstacles). To avoid frequent false alarms, such stationary targets have to be suppressed, which significantly reduces the recall rate of obstacle perception. To solve this problem, 4D radar has been developed, which can measure both azimuth and elevation angles with high precision; Continental's ARS548 is one example. However, its effectiveness in mass production has yet to be verified, and the current cost of 4D radar is relatively high. It is expected to meet the requirements for factory-installed mass production in about 2 years.
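To illustrate why elevation measurement matters, here is a minimal Python sketch that estimates the height of a stationary target from its slant range and elevation angle and sorts it into road-level, in-path, or overhead. The function names, the 0.5 m mounting height, and the two thresholds are hypothetical values chosen for illustration, not parameters of any production radar.

```python
import math


def target_height_m(slant_range_m, elevation_deg, mount_height_m=0.5):
    """Height of a target above the road, assuming a flat road and a level radar.

    mount_height_m is an assumed radar mounting height (hypothetical value).
    """
    return mount_height_m + slant_range_m * math.sin(math.radians(elevation_deg))


def classify_stationary_target(slant_range_m, elevation_deg,
                               mount_height_m=0.5,
                               road_max_m=0.2,
                               clearance_min_m=4.0):
    """Label a stationary target by its estimated height.

    road_max_m and clearance_min_m are illustrative thresholds only.
    """
    height = target_height_m(slant_range_m, elevation_deg, mount_height_m)
    if height <= road_max_m:
        return "road-level"   # e.g. a manhole cover
    if height >= clearance_min_m:
        return "overhead"     # e.g. a bridge or gantry
    return "in-path"          # e.g. a stopped vehicle


if __name__ == "__main__":
    for elevation in (-0.2, 0.0, 3.0):
        print(elevation, classify_stationary_target(100.0, elevation))
```

At 100 m, an elevation error of 1° shifts the estimated height by about 1.7 m, which is why separating a bridge from a stopped vehicle needs high-precision elevation measurement rather than a coarse estimate.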

Vision

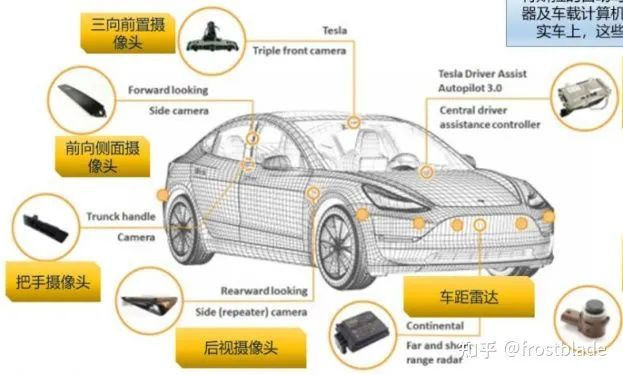

Early vision solutions were mainly smart-camera solutions: a single forward-facing camera whose internal perception algorithms directly output obstacle and lane information. Such a camera can be used for 1R1V or 5R1V fusion (one or five radars plus one camera), which significantly reduces the development difficulty for OEMs. Most L2 automated driving systems still follow this route. One example is Tesla's HW1.0, its first-generation driver-assist hardware, which was based on a Mobileye chip. It used a single camera built on the EyeQ3 series, a single millimeter-wave radar, and 12 medium-range ultrasonic sensors. The millimeter-wave radar was supplied by Bosch, and the camera was mounted near the rearview mirror. The hardware was selected from mature supplier products on the market, and Tesla's main work in the HW1.0 phase was multi-sensor fusion and application-layer software development. In 2016, Tesla released an automated driving system based on self-developed visual perception algorithms. This made many OEMs realize that multiple image sensors combined with a deep learning perception computing platform offer advantages far beyond a single smart camera: 360-degree perception coverage around the vehicle, ownership of the core algorithms, and continuous data-driven iteration delivered through OTA updates. The first self-developed visual perception solution released in China was the XPilot 3.0 system on the XPeng P7, launched in 2020. Most of China's new carmakers now choose this self-development route as well.

LiDAR

Mechanically scanned LiDAR has long been used on various unmanned vehicles, but because mass-produced cars have stricter quality and cost requirements, it was not until 2021 that mass-produced cars equipped with LiDAR were released in China. For example, the XPeng P5 is equipped with two HAP LiDARs supplied by Livox, each with a horizontal field of view of 120°, a detection range of up to 150 meters for low-reflectivity objects, an angular resolution of 0.16° × 0.2°, and a point cloud density equivalent to a 144-line LiDAR. Other leading companies, such as RoboSense (Suteng Juchuang) and Hesai Technology, have also released automotive-grade solid-state LiDARs. The solid-state LiDARs used in mass-produced cars meet automotive-grade quality standards and bring the cost down from tens of thousands of yuan to a few thousand yuan, but at the expense of 360-degree detection capability. In addition, today's high-performance computing platforms have improved LiDAR data processing and target detection, with traditional rule-based point cloud processing giving way to deep learning.
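As a rough worked example of what this angular resolution means at long range, the Python sketch below computes the gap between adjacent samples at a given distance. The function name is hypothetical, and the calculation assumes an idealized uniform angular grid, which real scan patterns only approximate.

```python
import math


def sample_spacing_m(range_m, angular_resolution_deg):
    """Distance between two adjacent beams at the given range.

    Assumes an idealized uniform angular grid (an assumption, not a
    description of any particular LiDAR's scan pattern).
    """
    half_angle = math.radians(angular_resolution_deg) / 2.0
    return 2.0 * range_m * math.tan(half_angle)


if __name__ == "__main__":
    for resolution_deg in (0.16, 0.2):
        spacing = sample_spacing_m(150.0, resolution_deg)
        print(f"{resolution_deg} deg at 150 m: {spacing:.2f} m between samples")
```

Under these assumptions the spacing at 150 m is roughly 0.42 m for 0.16° and 0.52 m for 0.2°, so a distant pedestrian is covered by only a handful of points.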

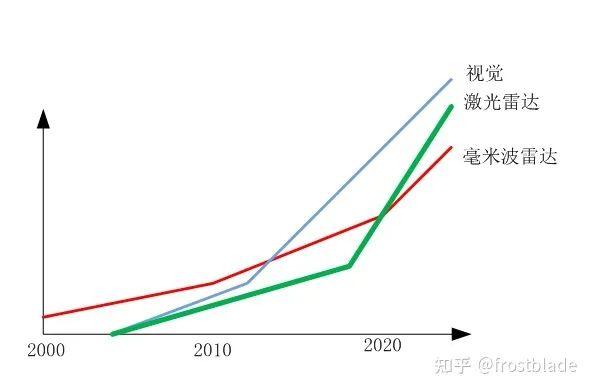

Overall, millimeter-wave radar started earliest, but its breakthrough is coming last. Taking China as an example, vision solutions achieved their breakthrough in omnidirectional perception in 2016, and LiDAR achieved its breakthrough in solid-state mass production in 2021. By contrast, the mass-production breakthrough of 4D millimeter-wave radar is expected in 2023, and perception solutions based on 4D radar are expected to launch in 2024 or 2025.

Problems faced by millimeter-wave radar

Millimeter-wave radar has a high perception recall rate, but its biggest problem is false detection, including all kinds of clutter, multipath reflections, and targets whose height cannot be measured. Besides the hardware-related causes, there are also significant software-related ones. Today the data processing is done inside the radar itself, on MCUs with little computing power and with traditional rule-based detection and recognition strategies, which naturally cannot reach the perception performance that deep learning can achieve.
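The combination of high recall and many false detections can be made concrete with the standard definitions of recall and precision. The following Python sketch uses invented counts purely for illustration; they are not measurements from any radar.

```python
def precision_recall(true_positives, false_positives, false_negatives):
    """Return (precision, recall) from detection counts.

    precision = TP / (TP + FP): share of reported targets that are real.
    recall    = TP / (TP + FN): share of real targets that were reported.
    """
    reported = true_positives + false_positives
    actual = true_positives + false_negatives
    precision = true_positives / reported if reported else 0.0
    recall = true_positives / actual if actual else 0.0
    return precision, recall


if __name__ == "__main__":
    # Hypothetical counts: almost every real target is found (high recall),
    # but clutter and multipath add many false targets (lower precision).
    precision, recall = precision_recall(true_positives=95,
                                         false_positives=40,
                                         false_negatives=5)
    print(f"precision={precision:.2f}, recall={recall:.2f}")
```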

As the earliest mass-produced sensor for autonomous driving, millimeter-wave radar has been left behind by cameras and lidar. I personally think most of the reasons can be summed up in one phrase: "barrier to entry". Both the hardware and the algorithms of millimeter-wave radar have a relatively high barrier. Hardware design depends on advanced RF fundamentals and requires years of accumulated process experience. The core hardware technology is therefore still in the hands of long-established foreign chip suppliers and automotive parts suppliers, and most domestic millimeter-wave radar start-ups come from university or research-institute backgrounds. As for algorithms, the echo intensity and Doppler information in radar data are harder to interpret than camera images or lidar's 3D point clouds, and analyzing the characteristics of echo points relies on a great deal of accumulated experience and physical knowledge. Most engineers in the domestic autonomous driving industry come from computer science, automation, and mechanical engineering, and most of them lack a theoretical foundation in subjects such as signals and systems or RF technology. Compared with radar data that is hard to understand and slow to yield results, lidar and visual perception are more direct and easier to grasp. The same holds in both industry and academia. Every year there are few papers on millimeter-wave radar perception at international conferences in computer science and robotics, but an endless stream of papers on visual and lidar perception, and many solutions need little in-house development because SOTA (state-of-the-art) open-source implementations are readily available online.

In the current era, the rapid development of deep learning (timing), centralized processing systems (location), and a low barrier to entry (people) have come together. As a result, visual perception and lidar perception are advancing quickly, while millimeter-wave radar perception is lagging behind.

The irreplaceable millimeter-wave radar

In the current fierce competition, millimeter-wave radar is gradually losing the advantage in accurate velocity and distance measurement that it held over vision, and lidar can measure distance and velocity with even higher accuracy. However, covering the full 360 degrees around the vehicle with mass-produced lidar is still relatively costly, and so far only Tesla has achieved a very strong vision-only perception solution. The high measurement accuracy of millimeter-wave radar therefore remains an indispensable source of information for multi-sensor fusion.

In terms of system architecture, lidar and millimeter-wave radar are converging on the same development path: high precision, long range, and resistance to interference. One thing that cannot change, however, is the frequency band. Because of its frequency band, millimeter-wave radar cannot fully eliminate some multipath interference at the echo level, but the same band also gives it an advantage that optical sensors (cameras and lidar) cannot match: adaptability to severe weather such as sandstorms, thick fog, and rain. These conditions are not the primary concern for deploying autonomous driving today, but they are part of the "long tail problem" of autonomous driving technology and the "incremental market" of the industry, and they will certainly receive more attention in the future.

The multiple reflections of millimeter-wave radar's electromagnetic waves sometimes cause false targets, but they bring another advantage: the signal is not easily blocked completely. In dense traffic, millimeter-wave radar is usually the only sensor that can detect vehicles and pedestrians beyond the vehicle directly ahead, even at long range, while optical signals are completely blocked.

Future Development of Millimeter-wave Radar and its Sensing Applications

One important development direction for in-vehicle millimeter-wave radar is 4D radar, which relies on more complex hardware to achieve higher performance, but at a higher cost. In my opinion, another direction is low-cost, lightweight radar, which could eventually replace ultrasonic sensors and use its speed and angle measurement capabilities to enable more human-vehicle interaction functions, such as door open warning (DOW) and trunk opening.

From the perspective of autonomous driving as a whole, the current direction is to improve capability by stacking sensors and computing power, so as to gradually reach higher levels of autonomous driving. For many low-cost vehicle models, however, the cost of such a configuration is still unacceptable, and the market for these models is huge. For them, 1V1R (one camera plus one radar) will remain an effective solution for a long time. The strategy of "surrounding the cities from the countryside", that is, winning in lower-tier markets first, has been well proven by Pinduoduo.

From the perspective of algorithms, the problems that millimeter-wave radar perception needs to solve fall into three areas: detection, state estimation, and attribute estimation. In detection, the goal is a high recall rate with fewer false positives, such as the common false targets caused by multiple reflections and by penetration. In state estimation, position and speed need to be estimated more accurately. In attribute estimation, richer and more accurate attributes such as confidence, category, and size need to be provided. Multi-dimensional processing and data-driven methods are the key to achieving these goals, and deep learning is likely to be the breakthrough point for millimeter-wave radar perception. Two articles by engineers at Wuha Tower, "Perception Algorithms for Millimeter-wave Radar" and "Deep Learning Methods for Radar Signal Processing," give some good examples of deep learning applied to millimeter-wave radar perception.

From the perspective of data processing, the echo intensity and Doppler velocity of millimeter-wave radar also carry some target semantics. The final data form of millimeter-wave radar can be described as 5D: slant range, azimuth angle, elevation angle, Doppler velocity, and intensity. Since its point cloud density cannot match that of lidar or vision, making full use of all five dimensions can give millimeter-wave radar new strengths.
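As a minimal sketch of this 5D data form, the Python example below stores one radar point, converts it to Cartesian coordinates, and uses the Doppler dimension to check whether the point is consistent with a stationary object. The class and function names, the sign convention (negative Doppler means approaching), and the 0.5 m/s tolerance are assumptions made for illustration. The stationary check further assumes a forward-facing radar on a vehicle driving straight ahead.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class RadarPoint:
    """One 5D radar detection (field names are hypothetical)."""
    slant_range_m: float
    azimuth_rad: float     # 0 = straight ahead
    elevation_rad: float   # 0 = horizontal
    doppler_mps: float     # radial velocity, negative when approaching (assumed)
    intensity_db: float

    def to_cartesian(self):
        """Return (x, y, z) in the radar frame: x forward, y lateral, z up."""
        horizontal = self.slant_range_m * math.cos(self.elevation_rad)
        x = horizontal * math.cos(self.azimuth_rad)
        y = horizontal * math.sin(self.azimuth_rad)
        z = self.slant_range_m * math.sin(self.elevation_rad)
        return (x, y, z)


def is_likely_stationary(point, ego_speed_mps, tol_mps=0.5):
    """Check whether the Doppler value matches a static object.

    For a static object, the radial velocity seen from a vehicle moving
    straight ahead is -ego_speed * cos(azimuth) * cos(elevation).
    tol_mps is an illustrative tolerance, not a tuned value.
    """
    expected = (-ego_speed_mps
                * math.cos(point.azimuth_rad)
                * math.cos(point.elevation_rad))
    return abs(point.doppler_mps - expected) <= tol_mps


if __name__ == "__main__":
    point = RadarPoint(100.0, 0.0, 0.0, -20.0, 10.0)
    print(point.to_cartesian(), is_likely_stationary(point, ego_speed_mps=20.0))
```

Keeping intensity and Doppler alongside the geometry, rather than reducing each detection to a position, is what lets later stages use all five dimensions.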

Finally, I want to say that millimeter-wave radar is very powerful and not as mysterious as we tend to think. We need more participants and more pioneers in the industry to connect upstream and downstream and push the application of radar to new heights.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.