Yunzhong, sent from the copilot temple

Intelligent Car Reference | WeChat Official Account AI4Auto

Buddy, do you know this car?

This is the latest RoboTaxi unveiled today, based on Toyota’s “Sienna” Autono-MaaS (S-AM).

Strictly speaking, it is not a new car: neither the platform nor the model is new.

But it is also a new car, because it is equipped with autonomous driving software and hardware designed for automotive-grade L4 mass production.

It also carries the latest generation of the autonomous driving system from the technology unicorn Pony.ai.

New sensor solutions, new computing unit solutions…

The most obvious change is the brand-new styling: the large roof fairing gives way to a more integrated two-part design, split between front and rear. Compared with the previous generation, the new design reduces the height by 77.5% and the total length by 22.5%.

Honestly, when it runs on the road, who can see that this is an L4-level autonomous driving RoboTaxi?

Behind the exterior styling, Pony.ai also quietly showed off its technical strength this time.

From sensors to computing hardware, Pony.ai is moving from industrial-grade to automotive-grade, mass-production components. For the first time it has adopted solid-state lidar at scale, and the vehicle carries 23 sensors in total, including a self-developed traffic-light recognition camera with a 1.5x increase in resolution.

The technical capabilities are self-evident.

The latest autonomous driving system from Pony.ai

Serial number: the sixth generation.

The internals are algorithms and software, and the externals are sensors and computing hardware.

Compared with previous generations, the most significant changes in the sixth-generation autonomous driving system are in the visible, tangible external parts.

First, let’s talk about the sensors.

For the first time, Pony.ai has adopted solid-state lidar at scale.

Since its founding in late 2016, Pony.ai has clearly defined a multi-sensor redundant autonomous driving technology route.

Within that approach, lidar plays a central role.

But the first five generations all relied on ToF mechanical spinning lidar, the kind of rotating unit mounted on the roof of a vehicle.

Spinning ToF lidar was also the mainstream solution for RoboTaxis until now.

But as the intelligent vehicle trend accelerates, solid-state lidar that can be embedded in the vehicle body has started to show its potential. Demand is driving supply: solid-state lidar offers advantages in automotive-grade qualification, mass production, and cost, and it is gaining favor among autonomous driving players.

Pony.ai is one of the first players to embrace this trend. The result of that collaboration is now presented to the public.

In the new-generation system, Pony.ai's sensor kit adopts a front-and-rear split design: one solid-state lidar integrated in the front and three in the rear. The module is significantly smaller than in the previous generation and looks lighter, thinner, and more attractive. It also takes into account the aerodynamics and streamlined design that matter in automotive production, and it is far ahead of traditional RoboTaxis in aesthetics.

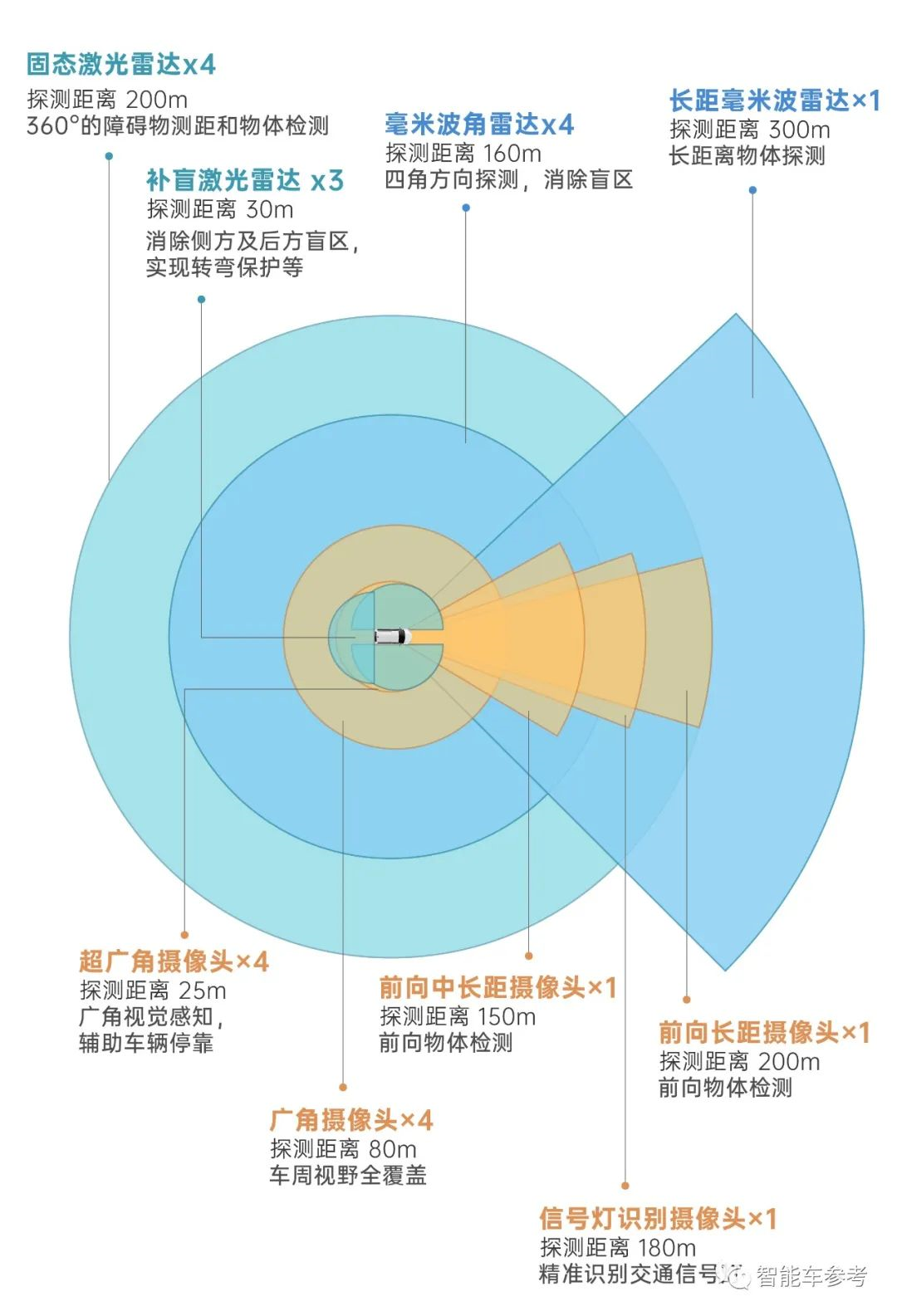

In addition to the solid-state lidars, Pony.ai's latest sensor set includes three blind-spot lidars distributed on the left, right, and back, four millimeter-wave radars located at the corners of the roof, one forward long-range millimeter-wave radar, and 11 cameras. The total number of vehicle sensors has increased to 23, up from 15 in the previous generation.

However, Pony.ai emphasized that although the number of sensors has increased, the cost is under tighter control. On the one hand, the sensors are already mass-produced at automotive grade, which inherently carries a cost advantage; on the other hand, Pony.ai developed key parts in-house, such as the traffic-light recognition camera. With in-house development, the camera's resolution has been increased by 1.5 times and its cost has been better controlled.
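The sensor breakdown above can be checked with a quick tally. This is only an arithmetic sketch of the figures quoted in the article; the dictionary keys are illustrative labels, not Pony.ai's own naming.

```python
# Sensor counts as quoted in the article; the key names are illustrative labels.
SENSORS = {
    "solid_state_lidar_front": 1,
    "solid_state_lidar_rear": 3,
    "blind_spot_lidar": 3,
    "corner_mmwave_radar": 4,
    "long_range_mmwave_radar": 1,
    "camera": 11,
}

# Total for the previous generation, as quoted in the article.
PREVIOUS_GENERATION_TOTAL = 15


def total_sensors(sensors):
    """Sum the per-type counts into a single sensor total."""
    return sum(sensors.values())


if __name__ == "__main__":
    total = total_sensors(SENSORS)
    print(f"total sensors: {total}")
    print(f"increase over previous generation: {total - PREVIOUS_GENERATION_TOTAL}")
```

The per-type counts add up to the stated total of 23, which is 8 more than the previous generation's 15.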

With these latest sensor solutions, Pony.ai’s sixth-generation autonomous driving system bids farewell to the “bobblehead” era in terms of appearance and styling.

On the computing unit, Pony.ai announced a partnership with NVIDIA. The new-generation system introduces the automotive-grade NVIDIA DRIVE Orin system-on-chip (SoC). This chip has been featured repeatedly at launch events for mass-produced intelligent vehicles, and it is NVIDIA's flagship chip for autonomous driving.

A single NVIDIA Orin chip has 254 TOPS of computing power. With CUDA parallel computing architecture and the support of the deep learning accelerator (NVDLA), it has higher performance, higher bandwidth, and lower latency.

From China's new smart-car startups to their assisted-driving features, NVIDIA Orin is behind them all.

With Orin on board, Pony.ai's latest computing unit is expected to deliver at least 30% more processing power, weigh at least 30% less, and cost at least 30% less than Pony.ai's previous-generation unit. In addition, the water-cooling system has been significantly optimized and upgraded, building on experience with the previous computing platform.

However, Orin is not the whole of Pony.ai's latest computing unit. The company has designed a flexible configuration scheme that allows different chip combinations depending on need: the unit can be equipped with one or more NVIDIA Orin SoCs, as well as automotive-grade NVIDIA Ampere architecture GPUs, to match different computing requirements.

Pony.ai explained that this design caters to the different technical requirements of autonomous passenger vehicles and autonomous trucks. Mass production and deployment may also have played a role: NVIDIA Orin chips are in high demand and their supply can be constrained, so a more versatile computing configuration helps secure production and rollout. It also fits Pony.ai's "dual-drive" approach of advancing passenger cars and trucks toward autonomous driving at the same time, which requires computing solutions tailored to different scenarios.
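As a rough illustration of what a flexible configuration implies, the sketch below models a computing unit as a number of Orin SoCs plus an optional discrete GPU. The class, field names, and example configurations are hypothetical; the article does not say which combination goes into which vehicle, and it gives no compute figure for the Ampere GPU, so only the Orin contribution (254 TOPS per chip, as quoted above) is calculated.

```python
from dataclasses import dataclass

# Per-chip figure quoted in the article for a single NVIDIA Orin SoC.
ORIN_TOPS = 254


@dataclass(frozen=True)
class ComputeConfig:
    """Hypothetical description of one computing-unit configuration."""

    name: str
    orin_count: int
    discrete_gpu: bool = False  # whether an Ampere-architecture GPU is fitted

    def __post_init__(self):
        # The article describes "one or more" Orin SoCs, so require at least one.
        if self.orin_count < 1:
            raise ValueError("a configuration needs at least one Orin SoC")

    def orin_tops(self):
        """Combined nominal TOPS of the Orin SoCs only (GPU not included)."""
        return self.orin_count * ORIN_TOPS


if __name__ == "__main__":
    # Example configurations are made up for illustration.
    configs = [
        ComputeConfig("single Orin", orin_count=1),
        ComputeConfig("dual Orin with GPU", orin_count=2, discrete_gpu=True),
    ]
    for cfg in configs:
        gpu_note = " + discrete GPU" if cfg.discrete_gpu else ""
        print(f"{cfg.name}: {cfg.orin_tops()} TOPS from Orin{gpu_note}")
```

Nominal TOPS simply add across chips here; real usable throughput depends on how the software is partitioned across them.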

Pony.ai also revealed that it has already begun road testing with NVIDIA DRIVE Orin, and that its software runs in real time with low latency on the Orin system. The new computing unit is due to enter mass production by the end of 2022, marking a milestone in the move from an industrial-grade to an automotive-grade computing system for autonomous driving.

Alongside the release of the sixth-generation system, the first vehicles equipped with it were unveiled. One of them is the Toyota S-AM, which is based on the Toyota Sienna hybrid electric platform. According to Pony.ai, the Toyota S-AM will begin road testing this year and will join the PonyPilot+ fleet in the first half of 2023 to provide RoboTaxi services. At the announcement, Pony.ai co-founder and CEO Peng Jun emphasized that adding the Toyota S-AM to the PonyPilot+ fleet is another major result of the deep strategic cooperation between Pony.ai and Toyota, and that the new automotive-grade L4 autonomous driving software and hardware system is designed to further improve the reliability of autonomous driving technology and promote its deployment on a larger scale.

So overall, the key words behind Pony.ai’s sixth-generation system are clear:

Automotive grade and mass production.

Pony.ai has revealed that the system targets automotive-grade mass production at every stage: design, component development and selection, software and hardware integration, safety redundancy, and system assembly and production.

In addition, the new system has multiple layers of redundancy, including full redundancy in software, computing, and the vehicle platform, to cover as many scenarios and safety-handling mechanisms as possible. In an emergency, it can bring the vehicle to a safe stop, keeping safety risks to a minimum.

Of course, safety, automotive grade, and mass production for commercial use have always been Pony.ai's path to advancing autonomous driving.

Especially since 2021, the "second half" of autonomous driving has been a recurring topic, and the Pony.ai team has repeatedly emphasized that the key is automotive-grade mass production and large-scale commercialization.

At the latest release, Pony.ai co-founder and CTO Lou Tiancheng said that rapidly falling sensor costs and improving stability are accelerating the application and spread of autonomous driving as a general-purpose technology.

However, as a general-purpose technology, autonomous driving can be applied and popularized along many different routes.

Previously, there were discussions in the industry about Pony.ai’s choices.

With the launch of the latest-generation system, CEO Peng Jun made it clear that Pony.ai's goal has not changed in the five years since its founding:

To create a virtual driver and revolutionize the field of travel.

Pony.ai will keep technology as its foundation and focus on the two most valuable scenarios, both already clearly defined:

RoboTaxi for passenger transport and RoboTruck for freight transport.

Peng Jun summarized:

For Pony.ai, 2021 is a year of both opportunities and challenges, and future challenges may be even tougher. However, the industry’s space and opportunities are so clear and imaginative.

Pony.ai is now in the best state of adjustment, and has an unprecedented determination to move forward. In the new year, it will definitely achieve breakthrough achievements.

Undoubtedly, the sixth-generation autonomous driving system is the first of this year's breakthrough achievements.

One more thing

However, with the release of the latest generation system, new problems have emerged.

How will we identify a RoboTaxi on the road now that it has abandoned its iconic “bun head”?

Perhaps with the new challenge of blending in among ordinary intelligent cars in mind, Pony.ai also put some effort into design this time:

On the one hand, it’s cooler and more futuristic.

For the roof sensing module, Pony.ai designed concept-car-style lights, a horizontal ring at the front and a vertical three-section unit at the rear, paired with an integrated transparent cover, to give the RoboTaxi a distinctive, futuristic look.

On the other hand, light language.

Pony.ai has designed an innovative light language for different scenarios. It not only gives a visual indication of the system's operating state but also helps RoboTaxi users quickly identify the self-driving vehicle they have booked.

Who would have thought that in the exploration of autonomous driving, there could still be such a small surprise as “light language”?

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.