Author: Dianche Sen

When it comes to autonomous driving, many car owners can talk confidently about lane departure warning, lane change assist, and forward collision warning, since these features are already part of their daily routine. Ask them about the perception and control behind autonomous driving, however, and things may get awkward.

If that sounds familiar, the Autonomous Driving topic on Dianche Resources may be worth a look. Baidu Baike already offers plenty of official explanations, so rather than compete with the professionals, we prefer to explain the subject in a way car owners can understand. Without further ado, this edition looks at how to make sense of the much-hyped autonomous driving.

What Is Autonomous Driving?

A motor vehicle automated driving system is an intelligent vehicle system that achieves unmanned driving through an on-board computer system. It generally has three parts: a perception system, a decision-making system, and an execution system. In other words, it is like hiring a driver whose eyes, brain, hands, and feet replace yours in reacting to situations.

However, just as nobody is born knowing how to drive, autonomous driving is not easy to develop either. It has to progress step by step, from assisted driving to autonomous driving and then to unmanned driving. The driving automation levels defined in SAE (Society of Automotive Engineers) J3016, which run from L0 (no automation) to L5, are currently widely recognized as the standard by the autonomous driving field and the international community. Levels L1 to L5 can be grouped as follows.

Assisted Driving (L1, L2)

Assisted driving refers to technologies that assist or even replace the driver in a particular driving task in order to improve the driving experience. Within its applicable scope, L1 can continuously perform either lateral control (e.g., steering) or longitudinal control (e.g., accelerator, brake) of the vehicle, but not both at the same time. L2 can perform lateral and longitudinal control simultaneously.

Autonomous Driving (L3)

This means that the vehicle can automatically perform some or all control functions of key safety components without direct operation by the driver. In this broad sense, the term covers both unmanned driving and assisted driving. However, L3 has always been controversial, because the driver must stay ready to take part in the driving task even though their eyes are freed.

From a user perspective, whether L3 can be user-friendly is also an open question. For example, the user may be playing with their phone in an L3 scenario when the system suddenly requires them to take over within 10 seconds. From a technical perspective, accidents can still occur even in such a short time. A system that can make judgments and react within 10 seconds or less may therefore already exceed the technical capabilities expected of L3.

Autonomous Driving (L4, L5)

The vehicle can accomplish all driving tasks without any intervention from the driver, under limited conditions (L4) or under all conditions (L5). This is just like hiring a chauffeur.

Combined with the classification above, the levels can also be understood from another perspective. L1 and L2 free the driver's hands and feet: the driver does not need to operate the steering wheel, accelerator, and other controls, and only needs to monitor the driving scene. L3 frees the driver's eyes, but the driver must respond to system requests when necessary. In L4 and L5, the driver does not need to take part in driving at all.
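The summary above can be restated as a small lookup table. This is only an illustrative sketch: the class name `LevelSummary`, its fields, and the function `driver_duty` are made up for this example and are not part of SAE J3016.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LevelSummary:
    """Hypothetical record restating the article's summary of one level."""
    must_monitor: bool      # driver has to watch the driving scene
    must_take_over: bool    # driver has to respond to system requests


# Assumed encoding of the paragraph above, not an official table.
LEVELS = {
    "L1": LevelSummary(must_monitor=True, must_take_over=True),
    "L2": LevelSummary(must_monitor=True, must_take_over=True),
    "L3": LevelSummary(must_monitor=False, must_take_over=True),
    "L4": LevelSummary(must_monitor=False, must_take_over=False),
    "L5": LevelSummary(must_monitor=False, must_take_over=False),
}


def driver_duty(level: str) -> str:
    """Return what the driver is still expected to do at a given level."""
    summary = LEVELS[level]
    if summary.must_monitor:
        return "monitor the driving scene"
    if summary.must_take_over:
        return "respond to system requests when necessary"
    return "no participation needed"


if __name__ == "__main__":
    for name in LEVELS:
        print(name, "->", driver_duty(name))
```

Reading the table row by row shows the controversial spot: L3 is the only level where the driver may look away but still has to be ready to take over.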

What Does It Take to Develop Autonomous Driving?

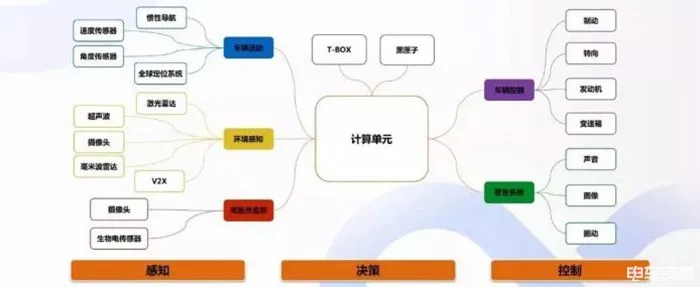

Although car companies and brands each boast about their own autonomous driving technologies, the frameworks behind the different levels are similar; what differs is the set of functions developed to meet different requirements for precision and coverage. The core of the technical framework has three parts: environmental perception, decision-making and planning, and control execution, which mirrors the way a human drives.

Environmental Perception (Information Collection)

Human drivers rely on their eyes and ears to observe the environment and to understand the position and status of themselves and of other traffic participants around them. Autonomous driving obtains similar information through sensors and perception algorithms, covering both positioning and perception of the surroundings.

Decision-making Planning (Information Analysis)

Once the environmental information has been obtained, decision-making algorithms running on the computing platform plan the driving path and related information while ensuring safety.

Control Execution (Decision Making)

The control algorithm and the drive-by-wire system steer, accelerate, and brake the vehicle so that it follows the planned path.

From the above picture, it can also be seen that the core technologies of the above three parts involve many modules.
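To make the three-part framework concrete, here is a minimal sketch of one perceive, plan, and control cycle for a car approaching an obstacle in its lane. Everything in it is an assumption for illustration: the function names, the 2-second time gap, the cruise speed, the controller gain, and the acceleration limits are invented, and real systems are far more involved.

```python
from dataclasses import dataclass


@dataclass
class Perception:
    gap_m: float          # distance to the obstacle ahead, in metres
    ego_speed_mps: float  # own speed, in metres per second


def perceive(raw_gap_m: float, raw_speed_mps: float) -> Perception:
    """Environmental perception: turn raw readings into a clean state."""
    return Perception(max(raw_gap_m, 0.0), max(raw_speed_mps, 0.0))


def plan(state: Perception, cruise_mps: float = 20.0,
         time_gap_s: float = 2.0) -> float:
    """Decision-making and planning: pick a target speed that keeps
    an assumed 2-second time gap to the obstacle."""
    safe_speed = state.gap_m / time_gap_s
    return min(cruise_mps, safe_speed)


def control(state: Perception, target_mps: float, gain: float = 0.5,
            max_accel: float = 2.0, max_brake: float = -6.0) -> float:
    """Control execution: proportional controller that outputs an
    acceleration command, clamped to assumed vehicle limits."""
    accel = gain * (target_mps - state.ego_speed_mps)
    return max(max_brake, min(max_accel, accel))


def step(raw_gap_m: float, raw_speed_mps: float) -> float:
    """Run one full cycle and return the acceleration command."""
    state = perceive(raw_gap_m, raw_speed_mps)
    return control(state, plan(state))


if __name__ == "__main__":
    print(step(100.0, 20.0))  # plenty of room at cruise speed
    print(step(20.0, 20.0))   # obstacle is close, so the car brakes
```

Keeping the three stages as separate functions mirrors the framework in the text: each stage can be improved or replaced without touching the other two.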

Algorithm

An algorithm is the method and sequence of steps used to solve a problem. For example, when you cook, a recipe comes to mind: stir-fry first, then simmer, and finally reduce until the sauce is thick. That is an algorithm. It is not simply a mathematical formula, and different problems require different algorithms.

Of the control, positioning, perception, and decision-making algorithms mentioned above, the control algorithm is mature enough to basically meet the technical requirements. In Alibaba's current practice, the positioning algorithm meets the precision requirements in most situations. The perception algorithm needs to accurately identify the category, location, speed, direction, and other parameters of objects in the surrounding environment, but noise interference and other problems still exist. The decision-making algorithm needs to cope with that noise and efficiently plan an executable path.

At present, the perception and decision-making algorithm modules are still the bottlenecks of autonomous driving technology and need to be optimized.

Sensors

The choice of sensors depends on the technical approach and the automation level. For example, L2 technology relies mostly on cameras and millimeter-wave radar, while L4 technology requires lidar. Lidar sensors still have many problems, such as stability. At present, mechanical lidar is mainly used. Although solid-state lidar is progressing rapidly, so far it has not been able to meet the stability requirements of autonomous driving.

Computing Platform

The computing platform needs to be both powerful and power-efficient. Because the upper-level algorithms have not yet settled, it is difficult to design or optimize chips for them.

Testing Methods

Testing includes real-road tests and simulation regression tests. Simulation regression testing is a hot topic in the field of autonomous driving, and many technical issues remain in how to simulate driving environments and the actual behavior of drivers.
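The idea of a simulation regression test can be sketched in a few lines: replay a fixed set of scenarios against a controller and check that none of them ends in a collision. This is a toy illustration under stated assumptions. The scenario list, the one-dimensional stationary-obstacle model, and both controllers are hypothetical and far simpler than a real simulator.

```python
# Hypothetical scenarios: (name, initial gap in metres, initial speed in m/s).
SCENARIOS = [
    ("far_obstacle", 100.0, 20.0),
    ("mid_obstacle", 60.0, 15.0),
    ("near_obstacle", 30.0, 10.0),
]


def simulate(gap_m, speed_mps, controller, dt=0.1, horizon_s=20.0):
    """Drive toward a stationary obstacle and return the smallest gap seen."""
    min_gap = gap_m
    for _ in range(round(horizon_s / dt)):
        accel = controller(gap_m, speed_mps)
        speed_mps = max(0.0, speed_mps + accel * dt)  # no reversing
        gap_m -= speed_mps * dt
        min_gap = min(min_gap, gap_m)
    return min_gap


def baseline_controller(gap_m, speed_mps):
    """Brake hard once the gap drops below an assumed stopping distance
    (computed for 4 m/s^2 deceleration) plus a 5 m margin."""
    stopping_distance = speed_mps ** 2 / (2 * 4.0) + 5.0
    return -6.0 if gap_m < stopping_distance else 0.0


def never_brake_controller(gap_m, speed_mps):
    """Deliberately broken controller, used to show a failing run."""
    return 0.0


def run_regression(controller):
    """Return {scenario name: True if the car never hit the obstacle}."""
    return {
        name: simulate(gap, speed, controller) > 0.0
        for name, gap, speed in SCENARIOS
    }


if __name__ == "__main__":
    print(run_regression(baseline_controller))
    print(run_regression(never_brake_controller))
```

Because the scenarios are fixed, the same suite can be rerun after every change to the controller, which is what makes it a regression test rather than a one-off experiment.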

Development Roadmap for Autonomous Driving

Many companies in the field of autonomous driving, automakers included, are currently doing research and telling their own stories. Judging by deployments that can actually be seen and touched, however, the development roadmap falls mainly into three models: Waymo, Tesla, and GM Cruise.

The Waymo model focuses on the unmanned driving market and pursues unmanned driving directly and independently. Safety can be guaranteed, but without the vehicle design and production capability of an automaker (OEM), large-scale commercialization is slow. Examples of this approach include Horizon Robotics, NavInfo, and HoloMatic.

The Tesla model goes from car manufacturing to autonomous driving: driving assistance functions are mass-produced first, and the intelligent hardware is then upgraded through software OTA updates toward full autonomous driving. Commercial deployment is faster, but safety is hard to guarantee and the prospects for full autonomous driving are unclear.

The GM Cruise model develops passenger cars from assisted driving toward autonomous driving while expanding into unmanned logistics vehicles and Robotaxi businesses. It combines the strengths of passenger car companies and technology companies in order to reach full autonomous driving quickly. Examples include WeRide (Wenyuan Zhixing), DeepRoute.ai (Yuanrong Qixing), and Pony.ai.

There is currently no consensus on which model will ultimately prevail.

Final Thoughts

Autonomous driving can be considered another great transition in the hundred-year history of the automobile industry, one that redefines the industry's rules. The car is no longer just “four wheels and a sofa”, nor just “a phone with four wheels”, but a “third space on wheels”. The development of autonomous driving is an inevitable trend, but some detours will still be needed before it moves forward.

Of course, there is much more to autonomous driving. The core technologies mentioned above, such as algorithms, lidar, and cameras, are only briefly covered here, and later installments will cover high-precision maps, autonomous driving chips, and other topics.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.