In the early days, Tesla used NVIDIA’s Drive PX2 chip for its Autopilot system, but it eventually decided to develop its own chip to achieve its autonomous driving goals. The release of Tesla’s self-designed Autopilot chip in the early hours of April 23rd marks a significant technological breakthrough. However, because autonomous driving is closely tied to driving safety, it is important to remain rational and cautious in our analysis. Tesla’s unique architecture and development strategy for Autopilot have been the subject of controversy, so this article is divided into three sections: Tesla, the industry, and users. Understanding the technology behind Autopilot can help car owners better understand the system’s potential and shortcomings.

For Tesla, the release of its self-designed Autopilot chip marks a major step toward CEO Elon Musk’s goals.

In terms of technological revolution, we are in the midst of the AI revolution.

The computation of AI is vastly different from classic scalar computation, vector computation, and graphics computation, and its application is incredibly widespread. Whenever there is such a revolution, especially when both the hardware and the software stack undergo changes, a large number of people will get involved.

One thing remains unchanged: the design of high-end processors is extremely difficult. It is very challenging to combine countless modules into differentiated, high-value processors. Look at the semiconductor industry now: some products are standard parts from large companies, and some are custom-designed chips. But what remains unchanged is that extremely difficult challenges require true experts to solve them.

Elon Musk believes that computer vision + AI + massive real data is the most difficult and most imaginative application of AI technology in the automotive industry. As Jim Keller said, AI requires comprehensive changes from the software all the way down to the underlying hardware.

This was Tesla’s original motivation. Today, Tesla has brought this chip into mass production.

First, let’s introduce the chip itself.

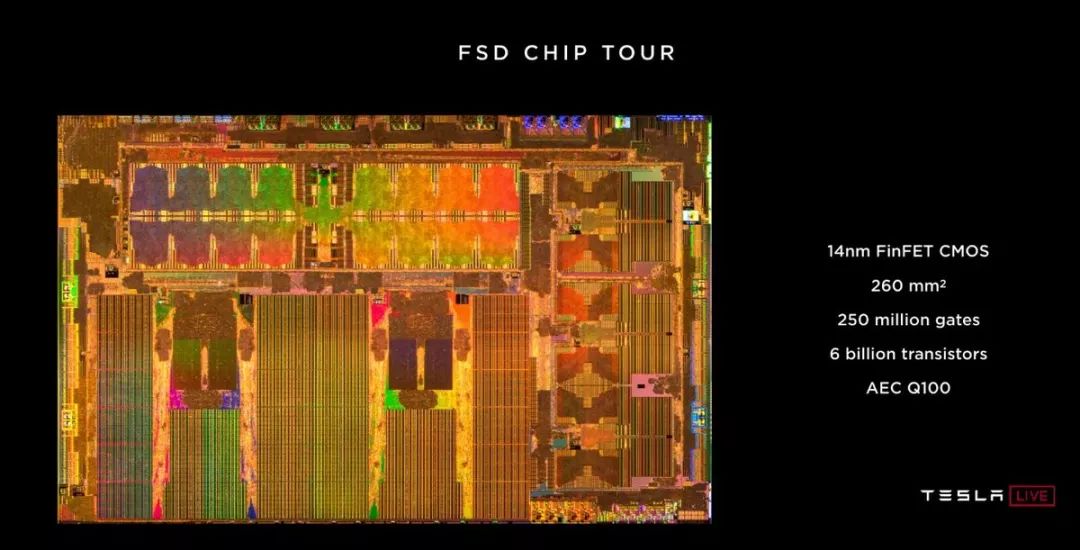

The chip measures 260 square millimeters and contains 6 billion transistors and 250 million logic gates. It delivers a peak performance of 36.8 TOPS and is built on Samsung’s 14nm FinFET CMOS process.

The chip is paired with LPDDR4 RAM running at 4,266 MT/s, with a peak bandwidth of 68 GB/s. Tesla has also integrated an image signal processor (ISP) with a 24-bit pipeline on the chip, supporting advanced tone mapping and advanced noise reduction.
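As a rough sanity check, the 68 GB/s peak figure follows from the memory speed if the 4.266 figure is read as 4,266 mega-transfers per second per pin and the memory interface is assumed to be 128 bits wide. The bus width is an assumption made for illustration; it is not stated above.

```python
def peak_bandwidth_gb_s(transfers_per_second: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s: transfers per second times bytes per transfer."""
    bytes_per_transfer = bus_width_bits / 8
    return transfers_per_second * bytes_per_transfer / 1e9


# Assumed values: LPDDR4 at 4,266 MT/s on a 128-bit interface (bus width is an assumption).
lpddr4_bandwidth = peak_bandwidth_gb_s(4266e6, 128)
print(f"{lpddr4_bandwidth:.1f} GB/s")
```

Under these assumptions the result rounds to the 68 GB/s quoted for the chip.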

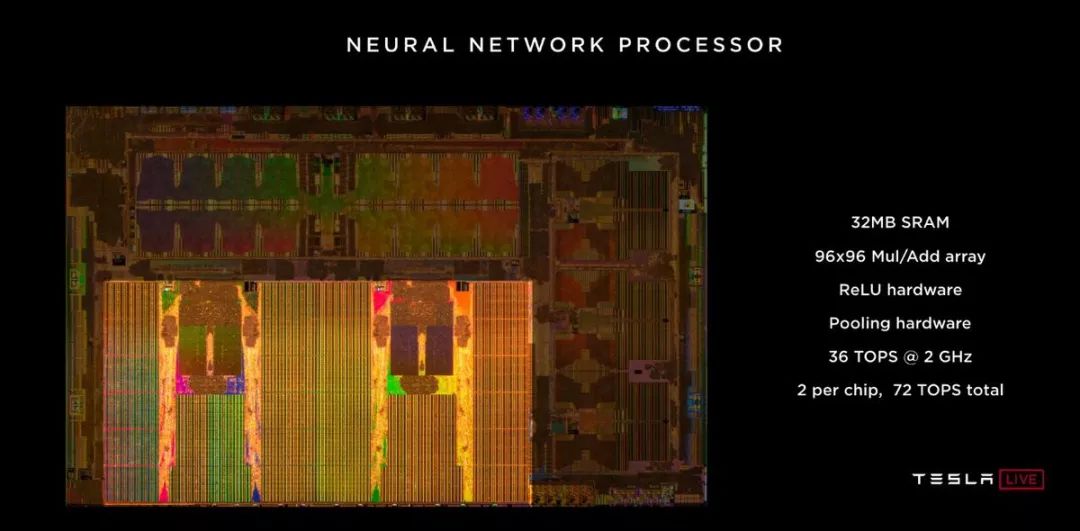

The design also includes two neural network accelerators running at 2 GHz (redundant to each other), with 32 MB of SRAM, a 96×96 multiply-accumulate array, and a data throughput of 1 TB/s.
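The 36.8 TOPS peak quoted earlier lines up with these accelerator figures under two assumptions that the text does not state: every cell of the 96×96 array completes one multiply-accumulate per clock cycle, and each multiply-accumulate counts as two operations. If both hold, 36.8 TOPS would be the peak of a single accelerator. A minimal sketch of the arithmetic:

```python
def peak_tops(rows: int, cols: int, clock_hz: float, ops_per_mac: int = 2) -> float:
    """Peak tera-operations per second for a MAC array that is fully busy every cycle."""
    macs_per_cycle = rows * cols
    return macs_per_cycle * ops_per_mac * clock_hz / 1e12


# 96x96 array at 2 GHz; ops_per_mac=2 (multiply + add) is the assumed counting convention.
single_accelerator_tops = peak_tops(96, 96, 2e9)
print(f"{single_accelerator_tops:.3f} TOPS")
```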

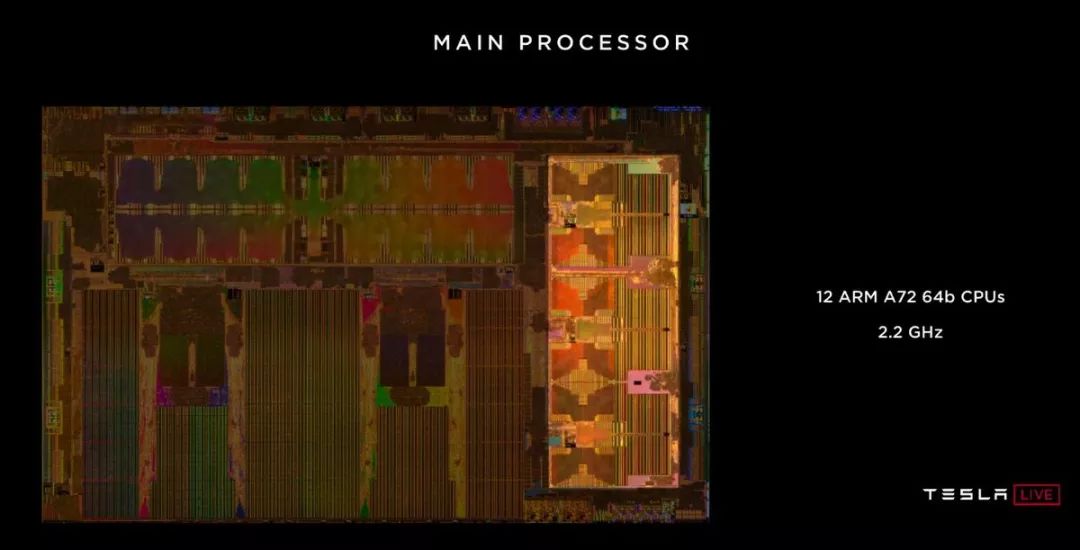

In terms of graphics processing, the chip supports 32-bit and 64-bit floating-point operations. It also carries 12 64-bit CPU cores clocked at 2.2 GHz.

The chip also has an independent security chip and an H.265 video decoder.

Finally, the main chip consumes only 75 W and the entire motherboard 250 W, which means the Tesla computer uses about 1 kWh of electricity for every 4 hours of driving (250 W × 4 h = 1,000 Wh).

You may wonder how the Mobileye EyeQ4, Mobileye’s most powerful mass-produced chip, and the NVIDIA Drive PX2, NVIDIA’s most powerful mass-produced chip, compare with the Tesla Autopilot chip.

First of all, the Mobileye EyeQ4 is mainly used as a perception chip, and its peak performance is only 2.5 TOPS, which puts it in a different class from the Tesla chip.

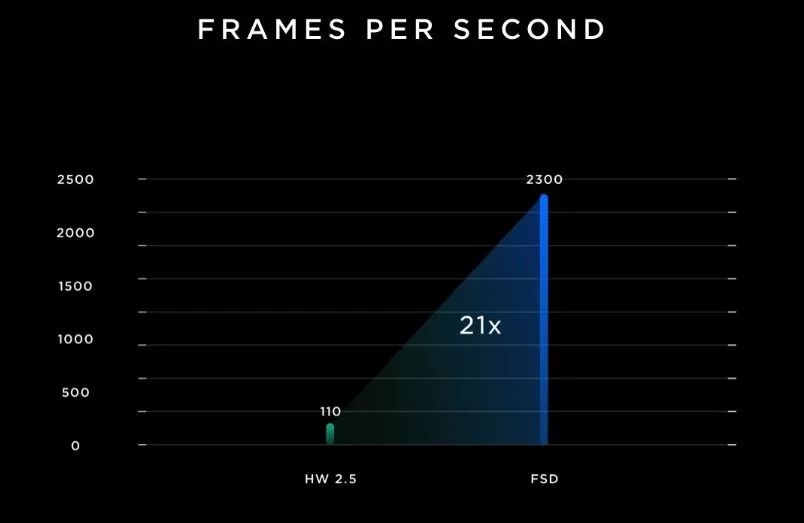

As for the Drive PX2, Tesla showed an official comparison slide. The Tesla chip processes 2,300 frames per second, 21 times the Drive PX2’s 110 frames per second. In another comparison, Elon said that Tesla’s chip has 7 times the performance of NVIDIA’s next-generation Drive Xavier autonomous driving chip.

But in my opinion, it is not appropriate for Tesla to beat up on its former chip supplier NVIDIA like this. Although both are positioned as central computing chips for autonomous driving, the Tesla chip is tilted entirely toward image processing and AI computation. The two generations of NVIDIA chips, on the other hand, are still autonomous driving chips built around a GPU. This puts NVIDIA at a disadvantage in an image-processing comparison.

Both the powerful image processing capabilities and the neural network accelerators designed for AI are tied to the camera suite defined by AP 2.0, with its eight cameras of differing range and specification, and to the Tesla Vision deep neural network vision stack. This is a chip dedicated to AP, and only AP can unleash its maximum performance.

Now let’s talk about what makes this chip good.

First of all, the increased computing power allows all eight cameras to be fully utilized. On the Q2 2018 earnings call, Tesla’s AI director, Andrej Karpathy, said explicitly that the team’s larger neural networks work very well but could not be deployed in vehicles because of compute limitations.

Tesla mentioned at the press conference that each accelerator of the new chip supports 8 cameras with 2,100 frames per second input speed, with each camera providing panoramic and full-resolution input.

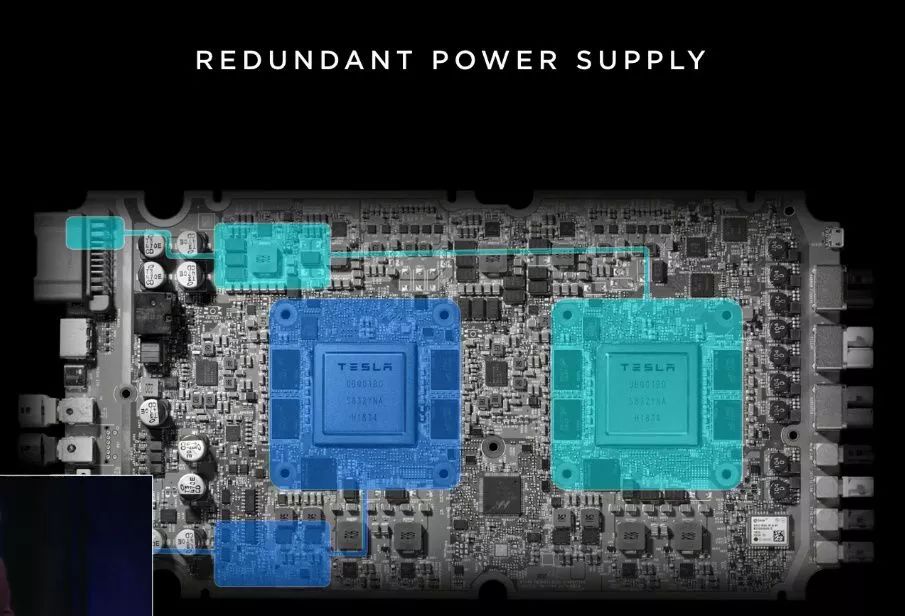

Secondly, in addition to the dual neural network accelerators, Tesla has also designed in redundant power and computing. For Model 3, Tesla has pre-installed brake redundancy and steering redundancy. Beyond perception, this gives the car full hardware redundancy for autonomous driving across power, positioning, computing, control, and actuation.

Another function related to autonomous driving is the CPU fault-tolerant (lockstep) design. When the board is running, two sets of identical hardware process the same data at the same time. The timing between the chips and their memory is forced to match, ensuring that both process exactly the same data at the same moment. This preserves the low latency that is crucial for high-speed driving scenarios.

Even if software bugs or hardware failures occur, the system can continue operating without losing data.
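The lockstep idea can be illustrated with a toy model: two units receive the same input, and a result is accepted only when both agree. This is a minimal sketch under that reading, not Tesla’s implementation; `run_lockstep`, the two unit functions, and the `tolerance` parameter are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass
class LockstepResult:
    output: Optional[float]  # agreed value, or None when the units disagree
    agreed: bool


def run_lockstep(
    unit_a: Callable[[Sequence[float]], float],
    unit_b: Callable[[Sequence[float]], float],
    frame: Sequence[float],
    tolerance: float = 0.0,
) -> LockstepResult:
    """Feed the same input to both units and accept the result only if they match."""
    out_a = unit_a(frame)
    out_b = unit_b(frame)
    if abs(out_a - out_b) <= tolerance:
        return LockstepResult(output=out_a, agreed=True)
    return LockstepResult(output=None, agreed=False)


def healthy_unit(frame: Sequence[float]) -> float:
    """Stand-in computation: average of the input values."""
    return sum(frame) / len(frame)


def faulty_unit(frame: Sequence[float]) -> float:
    """Same computation with an injected error, to simulate a hardware fault."""
    return healthy_unit(frame) + 1.0


frame = [0.2, 0.4, 0.6]
ok = run_lockstep(healthy_unit, healthy_unit, frame)
bad = run_lockstep(healthy_unit, faulty_unit, frame)
print(ok.agreed, bad.agreed)
```

A real system would also need a policy for what happens after a mismatch; that part is outside the scope of this sketch.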

Elon’s evaluation of the redundancy and fault-tolerant design is that any part may fail, but the car will continue to run. Moreover, the probability of this computer failing is far lower, by at least an order of magnitude, than the probability of the driver losing consciousness while driving.

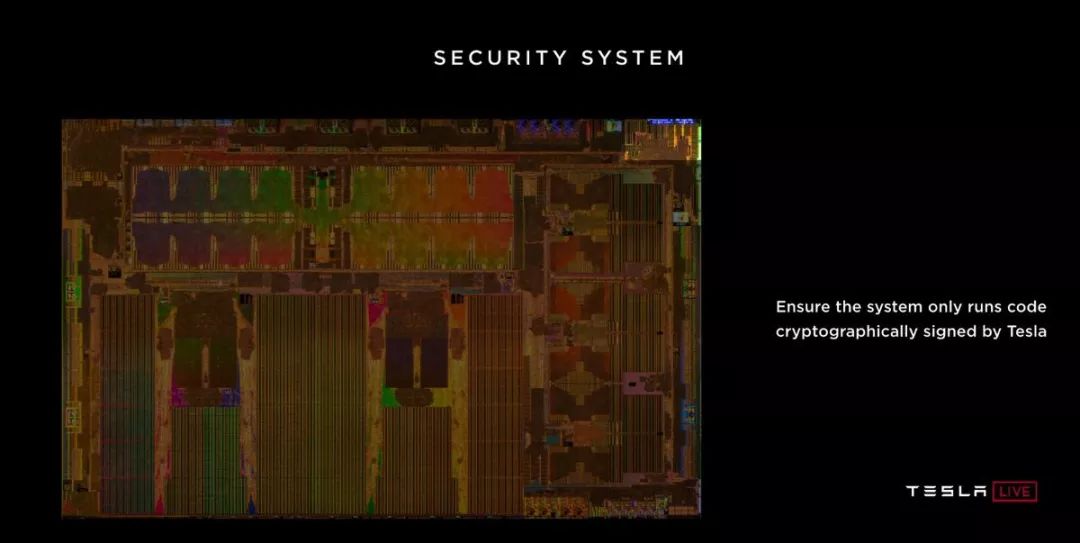

Finally, there is the design of the independent security chip. This chip actively checks all instructions and data in an encrypted manner to monitor the possibility of hackers attacking self-driving cars. The security chip reads input and output data and observes any suspicious perception information, including deceptive visual information (such as misleading the car with fake pedestrians) to adjust decision-making and control.

Overall, this chip fully meets the design requirements of Tesla, mainly those of Elon himself. Elon spoke highly of it at the press conference:

“How could it be that Tesla, who has never designed a chip before, would design the best chip in the world? But that is objectively what has occurred. Not best by a small margin, best by a big margin.”

Pete and Karpathy were also hailed by Elon as the world’s best chip architect and the world’s best computer vision scientist, respectively.

In August 2018, three executives from the AP team made a surprise appearance on Tesla’s Q2 earnings call, making the AP team the heroes of the call. The reason was revealed today: in August 2018, Tesla tested the first batch of AP 3.0 vehicles, which performed well, and it has continued testing AP 3.0 in the months since.

Therefore, the cliff-like drop in user experience seen in the move from AP 1.0 to 2.0 will not be repeated in the transition from 2.5 to 3.0.

AP 3.0 versions of Model S/X and Model 3 entered mass production on March 20th and April 12th respectively. In addition, development of Tesla’s next-generation Autopilot chip, HW 4.0, began a year ago. It is expected to reach mass production within the next two years, with three times the performance of the 3.0 chip.

For the Industry

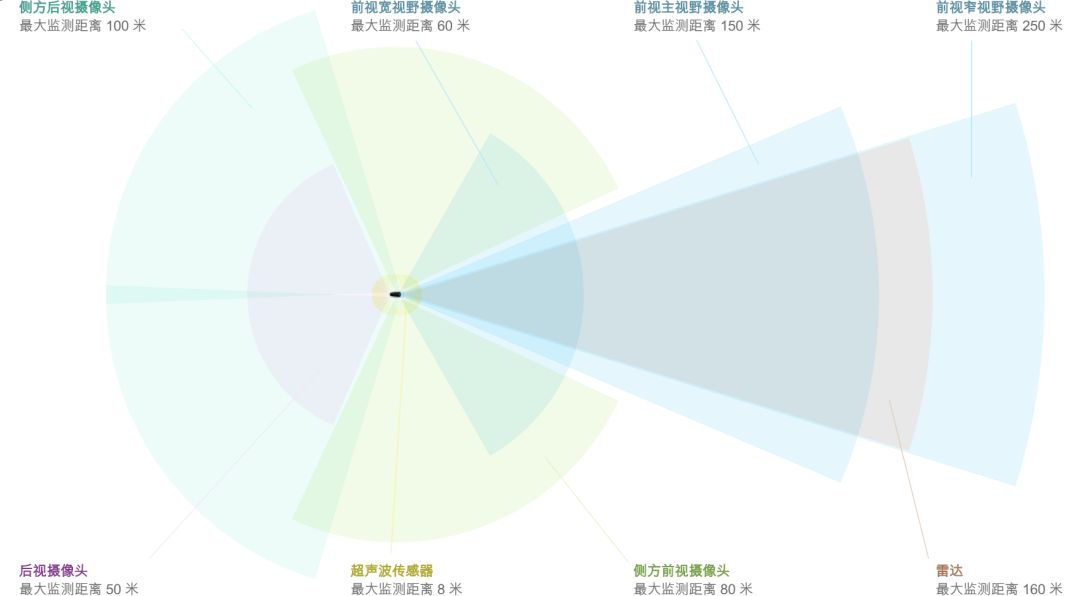

Let’s take a closer look at the AP 2.0 sensor suite.

- Three front cameras (wide angle (60m), telephoto (250m), medium range (150m))

- Two side front cameras (80m)

- Two side rear cameras (100m)

- One rear camera (50m)

- Twelve ultrasonic sensors (double detection distance/precision)

- One enhanced front radar (160m)

No LIDAR, not even one.

During today’s press conference, Elon reaffirmed his stance on LIDAR when answering questions from investors.

“Lidar is a fool’s errand. Anyone relying on lidar is doomed. Doomed! [They are] expensive sensors that are unnecessary.”

In contrast to the CEO’s flat rejection, Tesla’s senior director of AI, Andrej Karpathy, gave a more convincing explanation. Karpathy argues that the world is built for visual recognition, and that lidar has difficulty distinguishing a plastic bag from a tire. Large-scale neural network training and visual recognition are essential for autonomous driving.

“You were not shooting lasers out of your eyes to get here. In that sense, lidar is really a shortcut. It sidesteps the fundamental problems, the important problem of visual recognition, that is necessary for autonomy. It gives a false sense of progress, and is ultimately a crutch. It does give, like, really fast demos!”

Companies differ in their technical routes. Early autonomous driving companies focused on lidar for perception, most now rely on multi-sensor fusion, and a few rely primarily on computer vision. However, among all the autonomous driving start-ups and major companies in the world, only one has built its autonomous driving system without using lidar: Tesla.

A reasonable guess is that lidar is costly and Tesla chose not to use it for commercial reasons. In fact, Karpathy’s analysis above represents the attitude of the AP team, and there is other evidence that Tesla’s rejection of lidar comes purely from technical disagreement.

First of all, Tesla has been caught using lidar for testing more than once, and Elon mentioned today that he doesn’t hate lidar. The SpaceX team independently developed lidar, but for cars, lidar is an expensive and unnecessary sensor.

Secondly, Elon had already explained his reason for abandoning lidar before cost ever came into it: he considers the sensor-fusion technical route to be wrong.

“If you insist on an extremely complex neural network technology route and achieve very advanced image recognition technology, then I think you have maximized the problem. Then you need to fuse it with increasingly complex radar information. If you choose an active photon generator with a wavelength in the range of 400nm-700nm, it is actually stupid because you are already doing this passively.

You will eventually try to actively emit photons at a radar wavelength of about 4mm, because it can penetrate obstacles, and you can “see” the road ahead through snow, rain, dust, fog, and anything else. It is puzzling that some companies use the wrong wavelength to make active photon generation systems. They armed cars with a lot of expensive equipment, making them expensive, ugly, and unnecessary. I think they will eventually find themselves at a disadvantage in the competition.”

This is the difference between Tesla and the whole industry. The next question is, can cameras play the role of core sensors?

On this issue, Tesla is finally in line with the industry. Of all sensors, cameras provide the richest information density, and their data volume far exceeds that of other sensor types. The industry consensus is that vision-based perception keeps gaining importance across the entire autonomous driving system. Thanks to its advantage in image information density, it occupies the central position in perception fusion.

In fact, relying entirely on vision to solve road perception for autonomous vehicles is feasible, but there is still a long way to go. The development of autonomous cars should be a gradual process of vision replacing high-end lidar.

Therefore, Tesla’s real disagreement with the industry is this: the industry generally recognizes the huge potential of vision and accepts that lidar may one day exit the stage, but holds that computer vision and AI are not yet mature enough to handle perception on their own. Elon argues from first principles that adding lidar will lead the technology roadmap astray, since everyone’s ultimate goal is for cameras to achieve perception.

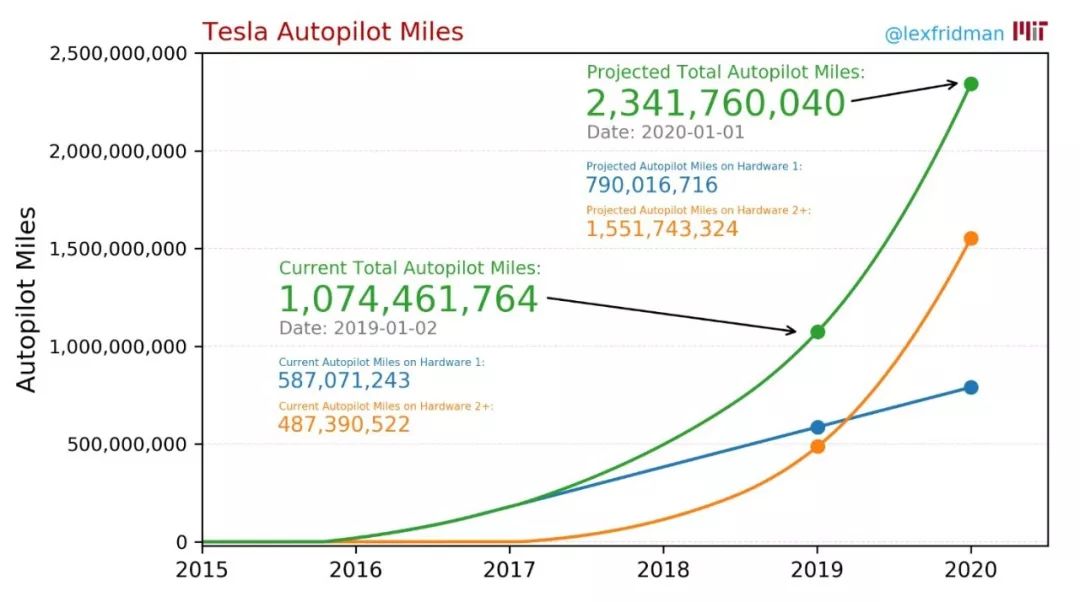

Tesla’s confidence comes from the 425,000 AP 2.+ vehicles on the road around the world. Based on Tesla’s announced delivery volume, average driving mileage, and mileage driven with AP engaged, MIT calculates that Tesla’s cumulative road test data had reached 480 million miles by 2019 and estimates that it will exceed 1.5 billion miles by 2020. According to Elon, Tesla’s road test data accounts for 99% of the total road test data in the industry.

Tesla has faced extensive skepticism on this point: even with all 8 cameras active for perception, a vehicle’s average monthly data upload is reportedly only 1-3 GB, which made it seem difficult for Tesla to collect data effectively.

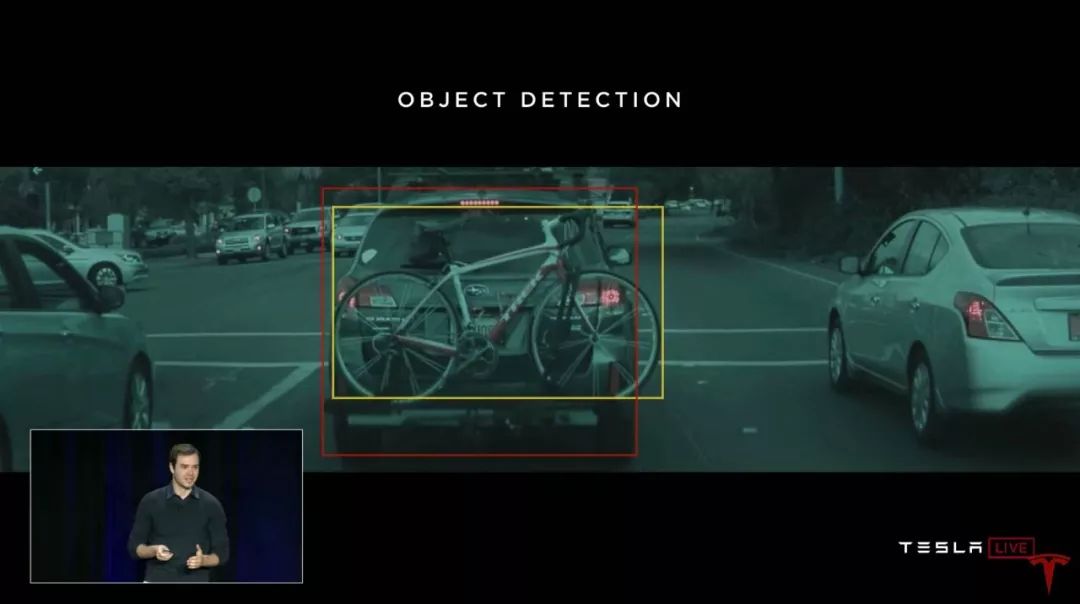

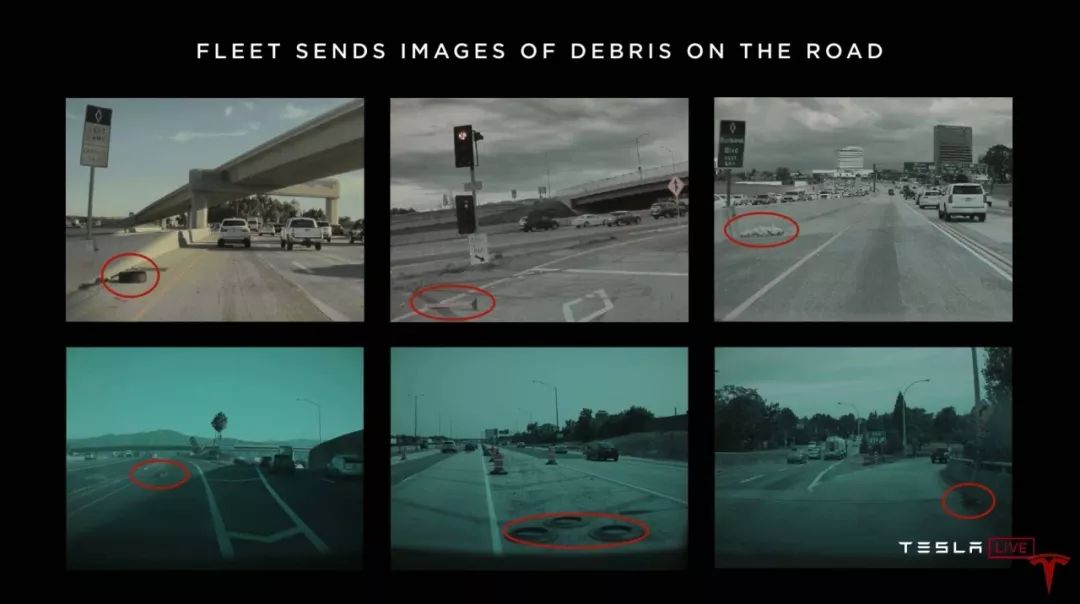

At today’s press conference, Karpathy addressed this issue. For Tesla, both the biggest advantage and the biggest challenge come from processing massive amounts of real data. After a brief early stage of manual labeling, much of the obstacle recognition work is quickly taken over by automatic labeling on the vehicle itself.

Only images that the cameras cannot understand, or that cause confusion, are uploaded to the cloud to be annotated by engineers and fed into the neural networks for training, until the networks have mastered recognition of the scene.
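One way to picture this filter is a confidence threshold: frames the on-board network is sure about stay in the car, and only low-confidence frames are queued for upload and labeling. The sketch below assumes a single confidence score per frame and a fixed cutoff; `Frame`, `select_for_upload`, and the 0.6 threshold are hypothetical and not taken from Tesla’s description.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Frame:
    frame_id: str
    confidence: float  # assumed: top-class score from the on-board network, 0..1


def select_for_upload(frames: List[Frame], threshold: float = 0.6) -> List[str]:
    """Return the IDs of frames whose confidence falls below the threshold."""
    return [f.frame_id for f in frames if f.confidence < threshold]


# Made-up example frames and scores, for illustration only.
frames = [
    Frame("clear_highway", 0.98),
    Frame("plastic_bag_or_tire", 0.41),
    Frame("flooded_road", 0.22),
    Frame("parked_car", 0.93),
]
upload_queue = select_for_upload(frames)
print(upload_queue)
```

Filtering on the vehicle like this would be one explanation for how a monthly upload of only a few gigabytes could still carry useful training data.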

Furthermore, countries around the world have completely different road conditions and traffic rules, and vehicles encounter heavy rain, hail, fog, and even rare long-tail scenes like floods, fires, and volcanoes. Every time a human takes over while AP is engaged, the system records the information and data of the scene and learns from the human’s decisions and driving behavior.

Karpathy specifically noted that simulated road data cannot replace real-world driving conditions. Regarding the approach, widely adopted by competitors, of using simulators to solve the problem of data scarcity, Tesla responded with two points: a simulator that rivals the real world would be harder to design than an autonomous driving system itself; and improvements made with simulators are limited, like grading one’s own homework.

For the industry, Tesla holds the world’s largest autonomous driving fleet and has begun to explore computer vision + AI (software and hardware) + massive real data. Even today, the assessment of the AP team by GM Cruise COO Daniel Kan remains the most accurate: they are pushing the boundary of technology.

For Users

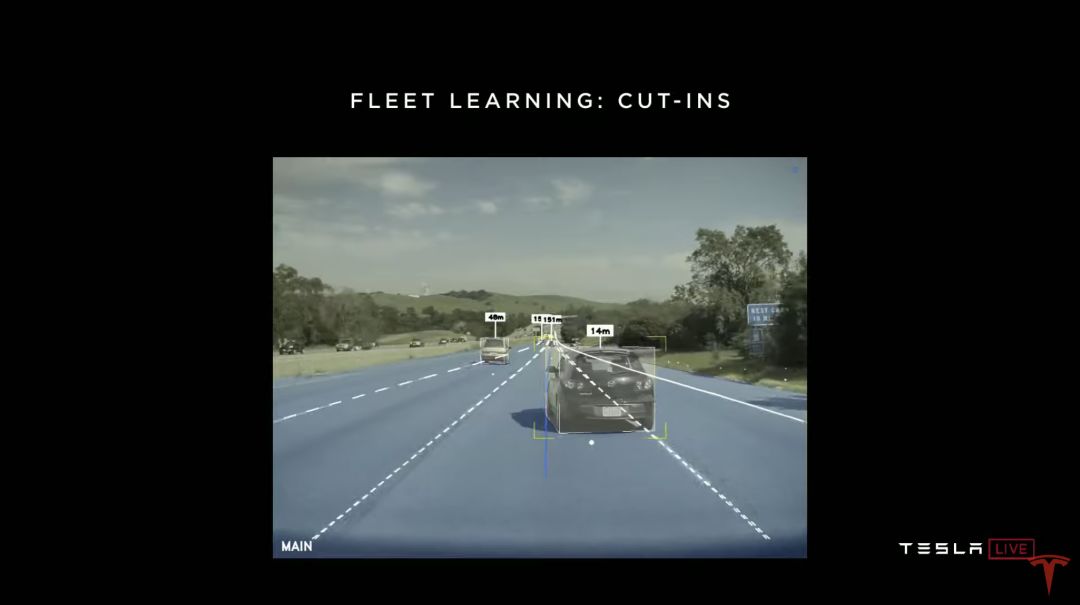

In the second half of the press conference, Tesla addressed some practical issues. For example, the minimum following distance in AP mode is about 3 meters, which in China’s congested traffic leaves enough room for vehicles in adjacent lanes to cut in.

Tesla described the shadow mode mechanism: every time a driver takes over in such a scene and closes the gap to the vehicle ahead, the system records the driver’s behavior and uploads it to the cloud. When the proportion of the same behavior is high enough, the neural network’s decision-making is updated and pushed to thousands of users.
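The rule that a behavior is adopted once its proportion is high enough can be sketched as a simple vote over recorded takeovers. This is an illustrative toy, assuming each takeover in a given scene is reduced to one behavior label; `behavior_shares`, `behaviors_to_adopt`, and the 80% cutoff are hypothetical.

```python
from collections import Counter
from typing import Dict, List


def behavior_shares(takeovers: List[str]) -> Dict[str, float]:
    """Fraction of recorded takeovers that show each behavior label."""
    counts = Counter(takeovers)
    total = len(takeovers)
    return {label: n / total for label, n in counts.items()}


def behaviors_to_adopt(takeovers: List[str], min_share: float = 0.8) -> List[str]:
    """Labels whose share of takeovers meets or exceeds the cutoff."""
    if not takeovers:
        return []
    shares = behavior_shares(takeovers)
    return [label for label, share in shares.items() if share >= min_share]


# Ten hypothetical takeovers in congested traffic: nine close the gap, one brakes.
recorded = ["close_gap"] * 9 + ["brake"]
print(behaviors_to_adopt(recorded))
```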

Therefore, in the near future, AP will become more and more user-friendly and approach full self-driving. But as a user, you must always understand that no matter how user-friendly the system is, it is not full self-driving until the official promise of full self-driving technology is achieved.

Even in this first stage of autonomous driving, Elon said at the event that AP still requires someone to sit in the driver’s seat and keep their attention on the road ahead. Doesn’t that sound contradictory?

Elon gave a more detailed explanation in an earlier interview with ARK Invest:

By the end of this year, Tesla will achieve functional autonomous driving. By functional autonomy, I mean the car can find you in the parking lot, pick you up and take you to your destination, during which you need to pay attention to the road conditions and there is a very small probability that you need to take over the vehicle at the appropriate time, but most of the time the driver does not need to intervene.

People think this means 100% full autonomous driving with no human supervision needed, which is not the case. A functionally autonomous Tesla can handle 99.9999% of scenarios, but more nines need to be added after that.
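To put the nines in perspective, each additional nine cuts the share of unhandled scenarios by a factor of ten. A small sketch of that arithmetic, using exact fractions to avoid floating-point rounding; the function names are illustrative:

```python
from fractions import Fraction


def unhandled_share(nines: int) -> Fraction:
    """Share of scenarios not handled at a reliability with the given number of nines."""
    return Fraction(1, 10 ** nines)


def scenarios_per_miss(nines: int) -> int:
    """On average, how many scenarios occur per unhandled one."""
    return 10 ** nines


# 99.9999% has six nines: one unhandled scenario per million.
print(scenarios_per_miss(6))
print(unhandled_share(6))
```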

Tesla also stated clearly that the autonomous driving system may disengage in extreme conditions such as heavy rain, hail, and heavy fog. In fact, when the system disengages and the driver takes over, it continues to learn and collect information and data in shadow mode.

So, we have no interest in discussing Elon’s comment at the end of the event about the 1 million Robo-Taxi autonomous driving fleet by 2020. The real issue to watch is that, as the fleet, chips, and algorithms are in place, Tesla Autopilot has gone from completely unusable last year to having mainstream competitiveness at L2, and is rapidly approaching L4.

Tesla may be the world’s first company to negotiate with national regulatory agencies on the large-scale deployment of autonomous driving fleets. This requires all AP 2.+ car owners to correctly understand the system boundaries and use the system according to the user manual. Don’t let technological progress come at the cost of tragedy.

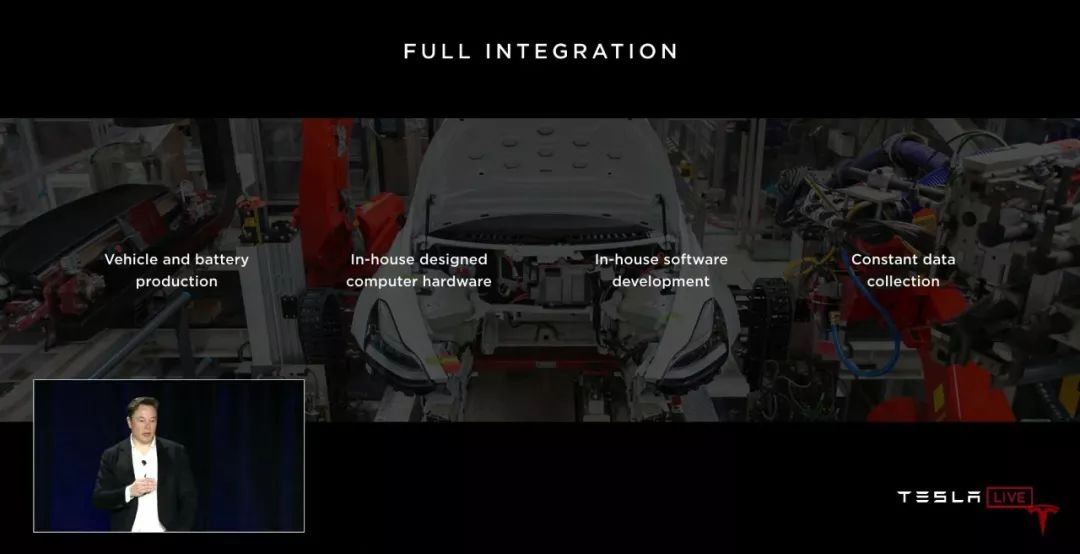

Development started at the beginning of 2016, and mass production began in mid-2019. With the release of the AP chip, Tesla has essentially put the last key piece in place. One image summarizes Tesla’s core competitiveness for the next decade.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.