Author: She Xiaoli

Recently, Huawei’s advanced driving assistance system (ADS) made its debut and attracted widespread attention, finally emerging from a shroud of mystery.

From the unimpressive demo of a few years ago to today’s reasonably mature product, it is no exaggeration to say that every area, from concept to design, has gone through earth-shattering changes and been rebuilt more than once.

Looking back today, this was the excruciating yet necessary process of forging an innovative product.

The field of safety is no exception.

This process forced us to set aside all standards and specifications and ask ourselves: in essence, where do the safety risks of autonomous driving come from?

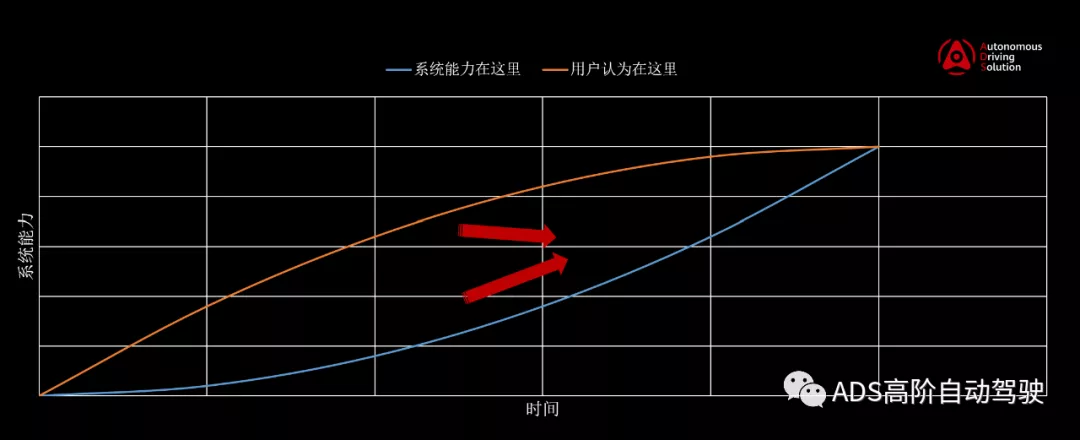

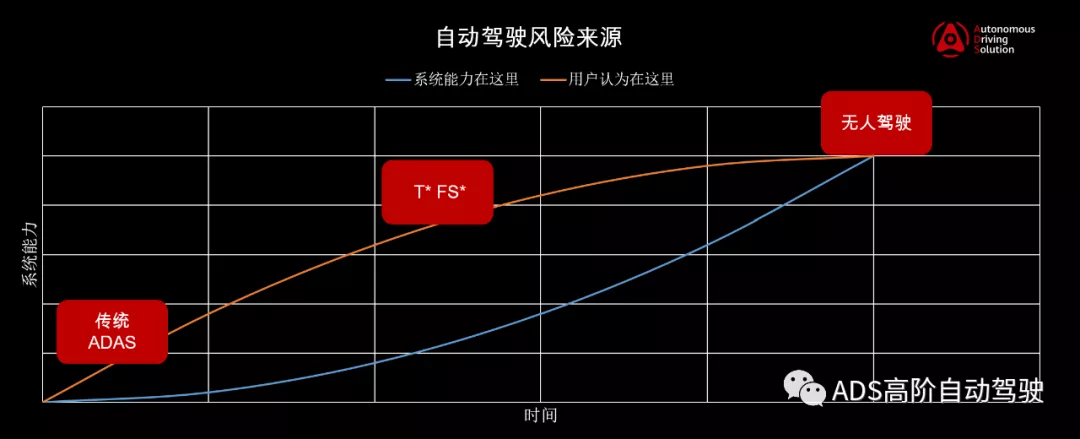

I think this picture roughly depicts our understanding:

This picture intuitively demonstrates the source of safety risks: the deviation between user expectation and system capability.

For low-level autonomous driving, or traditional advanced driver-assistance systems (ADAS), users understand that they can only use it to relax once in a while, and they have no illusion that it offers more advanced functions. System capability is low, user expectations are correspondingly low, and there is no risk to safety. The only drawback is that the product is not very practical.

At the other end, from advanced to fully autonomous driving, system capability is high, user expectations are correspondingly high, and again there is no risk to safety. The only drawback is that such a product does not exist yet.

The most challenging thing is the process of transitioning from low-level to high-level autonomous driving. All autonomous driving systems on the market are in this stage, striving for a more continuous user experience, and trying to raise user expectations. During this ascent, the risks of the system are constantly accumulating.

Users are easily won over by the system’s single-point, short-term abilities. When the system successfully avoids a small donkey, users assume it can do anything. When the system goes a week without requiring the driver to take over, the driver’s trust grows rapidly, and they may begin to look at their phone or doze off. User expectations will always grow much faster than the system’s capabilities.

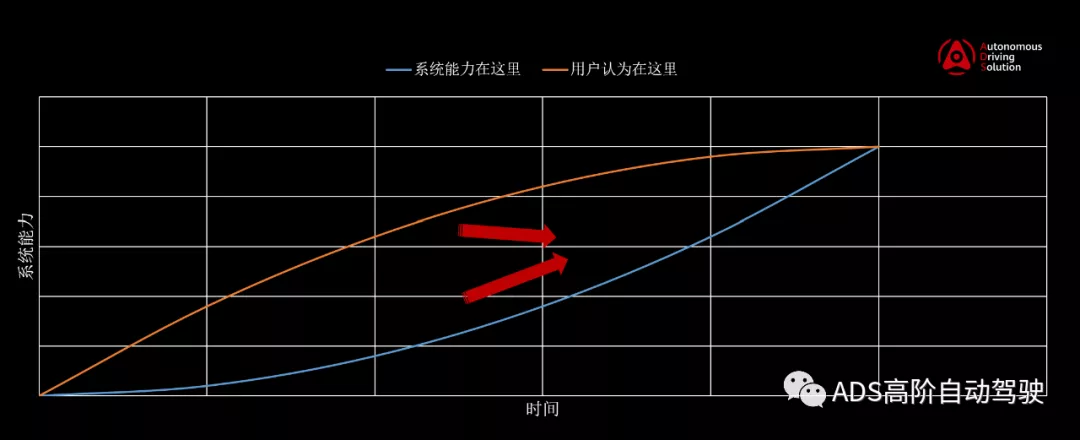

Our considerations and starting point are to bridge this gap.

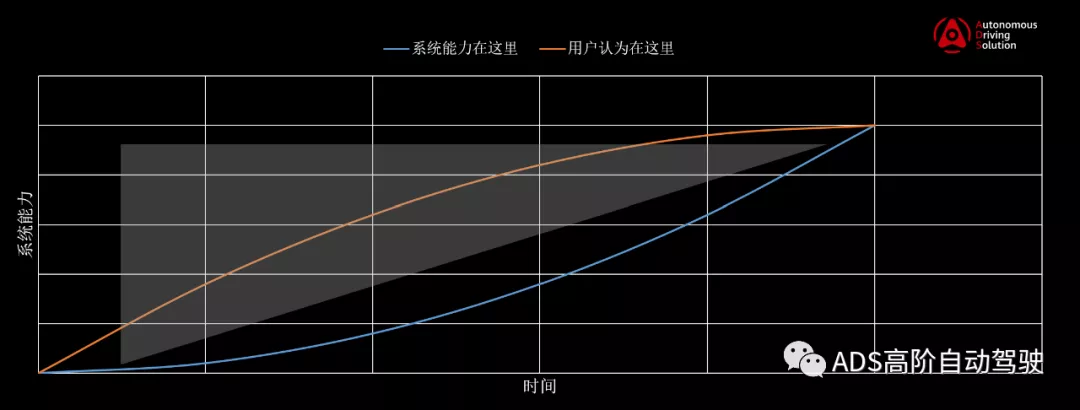

Traditional functional safety design is essential.

Essentially, traditional functional safety design ensures that risks outside the system’s capabilities (gray area) can be covered by humans.

Specifically, it guarantees two things:

First, the driver can take over at any time.

Second, the vehicle does not cause unavoidable harm to public safety. Everyone has expectations of how traffic will behave, and it is difficult for other people to react and avoid a collision in time when the ego vehicle’s behavior sharply violates those expectations within a short period.

Although the goal is the same, the design of high-level autonomous driving systems differs significantly from that of traditional ADAS. Still, it is nothing more than the application of functional safety concepts to the new species of ADS. I believe this will gradually become a mature research area in the industry, so I won’t go into more detail.

Appropriate Core Redundancy

The industry often refers to “fail-operational” design as a response to the definition of L3: when the system fails, it must keep operating long enough for the driver to take over (usually 10 seconds). The paradox in this definition has been discussed at length in the industry, and it is not the focus here. The focus is that, because of SAE’s rigorous definition of L3, fail-operational has become almost equivalent to full redundancy, which has completely skewed the direction of redundancy design.
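To make the takeover window concrete, here is a minimal sketch of the timing logic, not a description of how ADS implements it. The class, the mode names, and the fallback to a minimal-risk mode after the window expires are all assumptions for illustration; only the 10-second figure comes from the text above.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Optional


class Mode(Enum):
    ENGAGED = auto()             # system is driving normally
    TAKEOVER_REQUESTED = auto()  # fault detected; system must keep operating
    DRIVER_CONTROL = auto()      # driver has taken over
    MINIMAL_RISK = auto()        # assumed fallback once the window expires


@dataclass
class TakeoverMonitor:
    """Hypothetical monitor for the takeover window after a fault."""
    takeover_window_s: float = 10.0   # "usually 10 seconds" in the text
    mode: Mode = Mode.ENGAGED
    fault_time_s: Optional[float] = None

    def step(self, now_s: float, fault: bool, driver_in_control: bool) -> Mode:
        # A fault while engaged opens the takeover window.
        if self.mode is Mode.ENGAGED and fault:
            self.mode = Mode.TAKEOVER_REQUESTED
            self.fault_time_s = now_s
        # During the window the system is still responsible for driving,
        # which is why the remaining hardware must stay operational.
        if self.mode is Mode.TAKEOVER_REQUESTED:
            if driver_in_control:
                self.mode = Mode.DRIVER_CONTROL
            elif now_s - self.fault_time_s >= self.takeover_window_s:
                self.mode = Mode.MINIMAL_RISK
        return self.mode
```

The point of the sketch is the middle state: for the whole window the vehicle has a fault and still has to drive, which is what pushes a literal reading of the definition toward full redundancy.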

Interestingly, I attended a discussion on autonomous driving the other day and saw the mainstream consensus in the industry: no one is debating L2 / L3 / L4 anymore, and the focus is on urban driving, generalization across scenarios, and user experience. Does this mean that redundancy design can be completely disregarded?

The problem still lies in the gap between system capabilities and user expectations. When the user trusts the system enough, it is difficult for them to take over the system in an instant. This is the root of the requirement for system redundancy.

On the other hand, redundancy is a double-edged sword. Redundancy means increased cost, complex architectures, and numerous pitfalls in the switching process. You can look up the complex redundancy architectures proposed for autonomous driving systems on the market; I don’t know whether any of them has actually been implemented. In my opinion, such an architecture is bound to fail and be uncompetitive.

Redefining “System Delivery”

In the traditional product development process, the system’s capabilities, reliability, and safety must all have reached a high level of maturity at the moment of “SOP” (start of production).

For autonomous driving, however, “SOP” does not mean the end of product development. After SOP, the system’s capabilities continue to evolve. This upends the traditional “system thinking” behind safety design.

It means that it is both unrealistic and unnecessary to list all the requirements up front and then develop and deliver them according to the V-model before SOP, as in the traditional approach.

The approach of “Data-Driven Improvement” is popular in the field of autonomous driving and is also the mainstream direction of system evolution recognized by industry players.

For safety design, responding to this trend involves three things. The first is identifying key delivery targets at each stage. The second is the timeliness of the closed data loop, which is the problem our “Data-Driven Improvement” program is meant to solve. Finally, and no less importantly, as the system’s capability climbs, sound human-machine interaction design is needed to control overall system risk.

This also leads to the last and most difficult point, which is to make user expectations and system capabilities as consistent as possible through human-machine interaction design.

The challenge lies in the balance between “user experience” and “user expectation”.

From the perspective of user experience, we hope to minimize interruptions to the user and free them from the driving task as fully as possible.

From the perspective of product safety, users need to be prepared at any time to take over at the boundary of the system’s capabilities.

These two demands place very high requirements on human-machine interaction design:

- Minimize interference to users when it is not essential;
- Remind with maximum precision and intuitiveness;
- Continually adjust and evolve the human-machine interaction as system capabilities evolve, and so on.
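One way to picture how these requirements could interact is a tiered alert policy. The sketch below is purely illustrative: the alert levels, the risk score in [0, 1], the driver-attention flag, and the threshold values are all hypothetical and are not taken from ADS.

```python
from enum import IntEnum


class Alert(IntEnum):
    NONE = 0              # stay silent: do not disturb the user
    VISUAL = 1            # unobtrusive icon or message
    AUDIO_VISUAL = 2      # chime plus message
    TAKEOVER_REQUEST = 3  # strongest prompt


def choose_alert(risk: float, driver_attentive: bool,
                 thresholds=(0.3, 0.6, 0.85)) -> Alert:
    """Map an estimated risk in [0, 1] to an alert level.

    The thresholds are made-up tuning values. Raising them over time is one
    way to model adjusting the interaction as system capability grows.
    """
    low, mid, high = thresholds
    if risk < low:
        return Alert.NONE  # non-essential: minimize interference
    if risk >= high:
        level = Alert.TAKEOVER_REQUEST
    elif risk >= mid:
        level = Alert.AUDIO_VISUAL
    else:
        level = Alert.VISUAL
    if not driver_attentive:
        # Escalate one step for an inattentive driver, capped at the top level.
        level = min(int(level) + 1, int(Alert.TAKEOVER_REQUEST))
    return Alert(level)
```

For example, under these assumed thresholds `choose_alert(0.4, True)` yields a visual hint only, while the same risk with an inattentive driver escalates to an audio-visual alert. A more capable system tuned with higher thresholds, such as `(0.5, 0.7, 0.9)`, would stay silent in that situation.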

You may not be aware of it, but the design work in this field is no less extensive or difficult than algorithm development.

Lastly, in recent years we have often heard people complain that traditional functional safety methods cannot keep up with the development of new technology. We hope to provide some new ideas for solving this problem.

After all, user experience is the core competitive advantage in the autonomous driving race. In this marathon-like competition, safety is what allows the product to stay the course to the end.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.