Author: Pony.ai

Introduction:

When people think of self-driving car hardware, they usually think of the sensors installed around the car body. The Velodyne 64 “full-house package”, in particular, used to be the hallmark of L4 self-driving development cars. The on-board computing platform, by contrast, is hidden inside the body, and few L4 self-driving solutions mention it. Yet it handles almost all data processing and algorithm execution, and it is the central computing unit of the entire PonyAlpha X hardware system. We have some unique ideas about how to build it.

Optimization of Software and Hardware Together

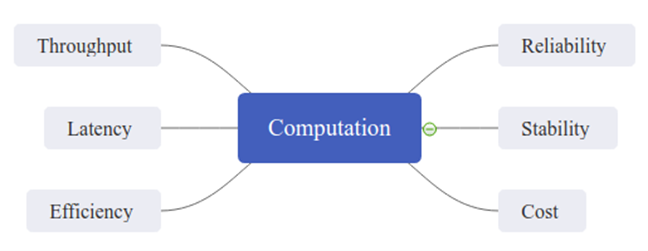

TOPS (tera operations per second) is currently the common measure of computing power in the computing hardware industry. One line of thinking holds that the higher the TOPS, the better a platform meets the needs of L4 computing, and some solutions even claim that a certain TOPS figure is enough to achieve L4 autonomous driving. We believe that TOPS is more a case of computing hardware providers steering the discussion of L4 computing needs toward their own metric. In practice, the overall capability of an L4 system depends not only on TOPS but, more importantly, on the joint optimization of software and hardware for different sensors and algorithms in terms of latency, data throughput, computing efficiency (perf/watt), computing determinism, accuracy, system stability, and so on.

In developing the PonyAlpha X system, the Pony.ai software and hardware teams worked together to build a highly customized heterogeneous computing system as the central on-board computing platform for L4 autonomous driving. It fully meets the needs of Pony.ai’s application software while delivering high performance, efficiency, and reliability.

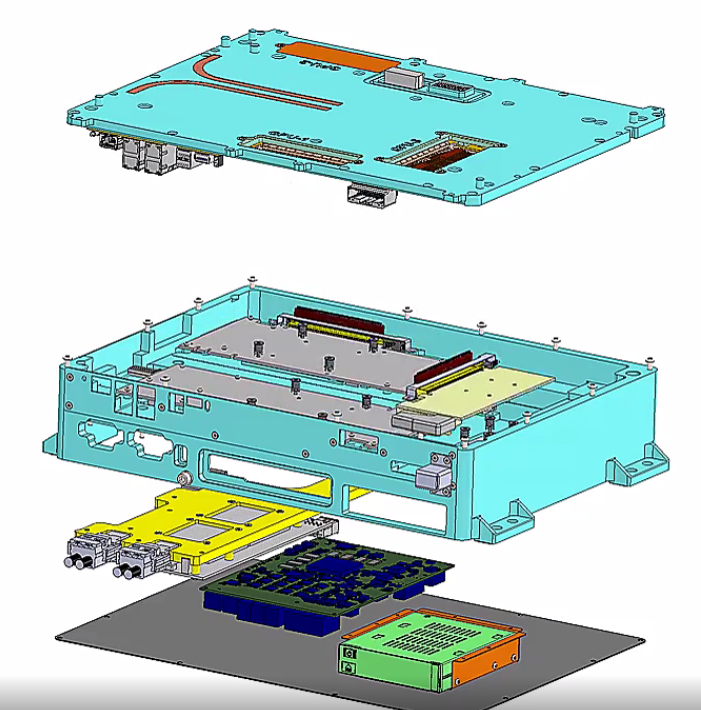

Customized Heterogeneous Computing Architecture

Although powerful CPUs and GPUs remain the main processors for L4 autonomous driving computing, a purpose-built heterogeneous system can significantly reduce latency and improve performance, safety, and computing efficiency.

Data Preprocessing and Compute Offloading

By introducing FPGAs, the computing platform preprocesses massive volumes of irregular sensor data at high throughput. The FPGAs handle functions such as time synchronization, calibration, data compression, checksums, and repackaging, which cuts the overall latency of the sensor data flow by a factor of six and reduces CPU/GPU usage by 20%. The FPGA also provides a hardware abstraction layer, so that sensor upgrades and replacements do not affect overall system functionality.
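To make the preprocessing steps more concrete, here is a minimal software sketch of three of them: time synchronization, checksum, and repackaging. It is only an illustration, not Pony.ai’s FPGA logic. The frame layout, the `RawPacket` type, and the `clock_offset_ns` parameter are all assumptions introduced for this example.

```python
from __future__ import annotations

import struct
import zlib
from dataclasses import dataclass

# Hypothetical uniform frame layout (an assumption, not the real format):
# sensor id (u16), payload length (u32), synchronized timestamp in ns (u64),
# followed by the payload and a trailing CRC32 (u32).
HEADER = struct.Struct("<HIQ")
TRAILER = struct.Struct("<I")


@dataclass(frozen=True)
class RawPacket:
    sensor_id: int
    device_time_ns: int  # timestamp taken from the sensor's own clock
    payload: bytes


def repackage(packet: RawPacket, clock_offset_ns: int) -> bytes:
    """Shift the timestamp onto the shared time base and append a CRC32."""
    synced_ns = packet.device_time_ns + clock_offset_ns
    body = HEADER.pack(packet.sensor_id, len(packet.payload), synced_ns)
    body += packet.payload
    return body + TRAILER.pack(zlib.crc32(body))


def unpack(frame: bytes) -> tuple[int, int, bytes]:
    """Validate the checksum and return (sensor_id, synced_ns, payload)."""
    body = frame[:-TRAILER.size]
    (crc,) = TRAILER.unpack(frame[-TRAILER.size:])
    if zlib.crc32(body) != crc:
        raise ValueError("checksum mismatch")
    sensor_id, length, synced_ns = HEADER.unpack(body[:HEADER.size])
    payload = body[HEADER.size:]
    if len(payload) != length:
        raise ValueError("length mismatch")
    return sensor_id, synced_ns, payload
```

Because every sensor’s data is repackaged into the same frame format, downstream software only has to understand one format. That is the sense in which a preprocessing stage can act as a hardware abstraction layer.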

Safety Island

Safety has always been key to high-performance autonomous driving hardware. With automotive-grade MCUs serving as safety islands, the health of the entire computing platform is fully monitored, and faults are detected in real time and reported as error codes that trigger the fail-safe system.
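The monitoring idea can be sketched as a small heartbeat and temperature check. This is a simplified illustration in Python, not firmware for an actual MCU. The error codes, thresholds, and component names are hypothetical.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class ErrorCode(IntEnum):
    # Hypothetical error codes, invented for this sketch.
    HEARTBEAT_TIMEOUT = 1
    OVER_TEMPERATURE = 2


@dataclass
class HealthMonitor:
    heartbeat_timeout_s: float = 0.1   # assumed threshold
    max_temperature_c: float = 95.0    # assumed threshold
    last_heartbeat_s: dict = field(default_factory=dict)

    def heartbeat(self, component: str, now_s: float) -> None:
        """Record that a monitored component reported in at time now_s."""
        self.last_heartbeat_s[component] = now_s

    def check(self, now_s: float, temperature_c: float) -> list:
        """Return (component, ErrorCode) pairs for every fault found."""
        faults = []
        for component, seen_s in sorted(self.last_heartbeat_s.items()):
            if now_s - seen_s > self.heartbeat_timeout_s:
                faults.append((component, ErrorCode.HEARTBEAT_TIMEOUT))
        if temperature_c > self.max_temperature_c:
            faults.append(("board", ErrorCode.OVER_TEMPERATURE))
        return faults
```

In this sketch a non-empty fault list is what would be handed to the fail-safe system. Time is passed in explicitly so that the check is deterministic and easy to test.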

Communication Overhead

Heterogeneous computing often incurs extra cost for inter-chip communication. By using the industry’s most advanced I/O communication protocols, the platform triples the bandwidth for inter-chip data exchange, enabling parallel computing across multiple chips.

Computational Efficiency

The appeal of heterogeneous computing is that assigning each computing task to the chip best suited to it can greatly improve the overall system’s computational efficiency. With software and hardware optimized together, the platform’s average power consumption is only one third of that of an equivalent data center server, which saves energy while significantly easing heat dissipation and improving reliability.

Balancing Performance and Reliability

In automotive electronics, higher performance and greater power consumption typically come at the cost of reliability. The engineering appeal of Pony.ai’s central on-board computing platform lies in balancing the two.

High Performance

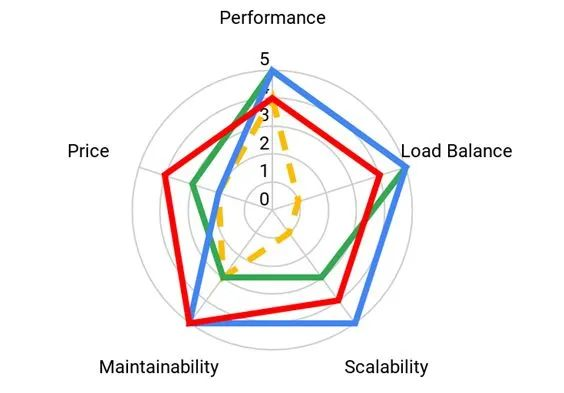

To decouple hardware development from software development, the computing platform is designed not only to meet today’s performance needs but also with enough headroom to support L4 autonomous driving software development over the next three years. In addition to the heterogeneous computing described above, we optimize software and hardware together to find the best balance between performance and power (perf/watt), rather than focusing only on the absolute performance of chips and systems. The accompanying figure shows how we choose the most efficient computing solution through multi-dimensional weighting.
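One simple way to picture multi-dimensional weighting is a weighted sum over normalized scores. The article does not describe the actual scoring method, so the sketch below is only an assumed form. The dimension names follow the criteria listed earlier, while the weights, option names, and scores are invented for illustration.

```python
def weighted_score(metrics: dict, weights: dict) -> float:
    """Weighted average of scores that are already normalized to 0..1."""
    total_weight = sum(weights.values())
    return sum(weights[name] * metrics[name] for name in weights) / total_weight


def best_candidate(candidates: dict, weights: dict) -> str:
    """Return the name of the candidate with the highest weighted score."""
    return max(candidates, key=lambda name: weighted_score(candidates[name], weights))


# Hypothetical weights and scores, not measured data.
WEIGHTS = {"latency": 0.3, "throughput": 0.2, "perf_per_watt": 0.3, "determinism": 0.2}
CANDIDATES = {
    "option_a": {"latency": 0.6, "throughput": 0.9, "perf_per_watt": 0.4, "determinism": 0.5},
    "option_b": {"latency": 0.9, "throughput": 0.8, "perf_per_watt": 0.8, "determinism": 0.9},
}
```

With these made-up numbers, `best_candidate(CANDIDATES, WEIGHTS)` selects `option_b`, even though `option_a` has the higher raw throughput. A weighted comparison is meant to capture exactly this kind of tradeoff, which a single headline metric such as TOPS cannot.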

High Reliability

One of our approaches to automotive-grade reliability is to provide sufficient design margin, whether for signal and power integrity or for environmental factors such as vibration and temperature. Only with sufficient margin can reliability be maintained in harsh automotive environments. For example, we designed the power supply system for four times the normal operating power consumption, so that no power peak will cause a system failure.

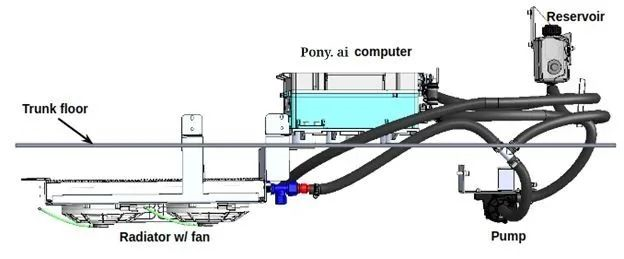

Another reliability strategy is to give the high-performance computing system as comfortable a working environment as possible. For example, although automotive electronics need to operate at ambient temperatures from -40°C to 105°C, our precise liquid heating and cooling design allows even consumer-grade chips to operate reliably in the vehicle. Likewise, a well-designed cushioning structure keeps fragile components undamaged even under a 25g impact.

System Verification

After implementing the design according to the classic V-model of hardware development, we verified the system along multiple dimensions.

Firstly, at the signal level, the power of key signals was measured under different temperature and voltage conditions in accordance with industry standards to verify that the signal margins match the design.

Secondly, environmental and stress tests were conducted based on the DVT testing standards defined by Pony.ai to ensure that the system can operate normally under various conditions, such as temperature, vibration, and humidity, and is not damaged under conditions such as power failure, short circuit, and collision.

After that, at the system level, we developed an HIL (hardware-in-the-loop) simulation platform that lets the central on-board computing platform be verified offline around the clock (7×24), in order to demonstrate a statistically significant MTBF of more than 100,000 hours.

Finally, we conducted a large number of actual road tests to ensure the integration reliability with the overall L4 autonomous driving hardware system.

Figure 6: Z-direction vibration test of the central on-board computing platform
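To give a sense of what a statistically significant MTBF claim of more than 100,000 hours involves, the sketch below uses the standard zero-failure demonstration formula. The article does not say how Pony.ai established significance, so the assumptions here are ours: a constant failure rate (exponential lifetimes), a time-terminated test, and no failures observed. The function names are hypothetical.

```python
import math


def mtbf_lower_bound_zero_failures(total_unit_hours: float, confidence: float) -> float:
    """One-sided lower confidence bound on MTBF after a failure-free test.

    Assumes exponentially distributed lifetimes. With zero failures in T
    accumulated unit-hours, the bound is T / -ln(1 - confidence).
    """
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be strictly between 0 and 1")
    return total_unit_hours / -math.log(1.0 - confidence)


def required_unit_hours(target_mtbf_hours: float, confidence: float) -> float:
    """Failure-free unit-hours needed to demonstrate the target MTBF."""
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be strictly between 0 and 1")
    return target_mtbf_hours * -math.log(1.0 - confidence)
```

Under these assumptions, `required_unit_hours(100_000, 0.90)` comes to roughly 230,000 failure-free unit-hours accumulated across all units under test. That is why continuous 7×24 offline operation on an HIL platform is a practical way to gather this kind of evidence.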

Volume Production Evolution

The Pony.ai central on-board computing platform is not just an advanced pre-research project but aims to support L4 autonomous driving hardware volume production.

Every design detail considers DFM and DFA to make batch processing and assembly possible, ensuring volume production-level yield.

The computing platform is easy to deploy and maintain, with a plug-and-play overall design and a modular structure. Finally, the computing platform has a clear automotive-grade roadmap and can reach automotive-grade qualification as an L4 computing platform in 2023.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.