Author: Mr.Yu

Have you ever used gesture interaction?

I’m not talking about the kind of gesture where you snap your fingers and dozens of burly men come rushing over.

What we are talking about is gesture interaction in cars: the vehicle recognizes specific gestures made by the driver or passengers in order to activate vehicle functions, and even to replace physical or virtual buttons inside the car.

Yet in-car gesture interaction is currently the most controversial form of interaction we have seen.

Supporters say, “Gesture interaction is so cool! It represents the future!”

Opponents have various reasons. They think that gesture interaction is a gimmick, inaccurate, and unsafe… It all boils down to one sentence: “It’s unreliable.”

In principle, gestures are one of the most intuitive ways for humans to interact, and grasping and holding are the first perceptual methods that infants learn. So why is there such a huge divide? Why are car makers both eager and cautious about gesture recognition interaction?

So, let’s take a look together and see if gesture interaction in cars is reliable or not.

What are the strengths of gesture interaction?

At present, the era in which physical buttons are king has not completely passed. Even so, control and interaction have become more diversified, spanning voice, touch, gesture, and active monitoring. The foundation underlying all of these interaction modes is perception and intelligence.

Mobile phones evolved from the brick phone to the black-and-white feature phone, then to the full-keyboard smartphone and the touch-screen smartphone. As hardware performance and network connectivity improved, more diversified interaction methods came along as well.

Yes, since connecting to the network, cars have also started their own rapid evolution period.

Some may ask, are the existing interaction forms unreliable? Why add more?

Stepping outside the cockpit for a moment, the existing interaction modes each correspond to one of the human senses:

- Touch -> tactile sense

- Voice -> auditory sense

- Gesture -> visual sense

Looking at it this way, even the most popular smart voice assistant has its limitations. For instance, during sports such as running, diving, or skydiving, the physiological channel of “speaking” or “hearing” is occupied or unavailable, and the importance of touch and vision comes to the fore.

David Rose, a lecturer at MIT Media Lab and an expert in interaction, wrote in his article “Why Gesture is the Next Big Thing in Design” that, according to the research he analyzed, people choose gestures over voice or touch for four reasons:

Speed – Gestures are faster than speaking (voice) when quick response is needed.

Distance – If it is necessary to communicate across space (distance), gestures (visual) are easier than moving the mouth.

Simplicity – If you don’t need to say a lot at once, gestures are easier to use. The simpler the gesture used to express a meaning, the more easily it is remembered. For example, curling four fingers and raising the thumb indicates approval and recognition; pointing the thumb down indicates contempt and disdain.

When emphasizing expressiveness over accuracy – Gestures are very suitable for expressing emotions. Beyond beat and rhythm, an orchestra conductor conveys further meanings, such as sweetness (“dolce” in Italian), emphasis (“marcato” in Italian), confidence, sadness, and desire.

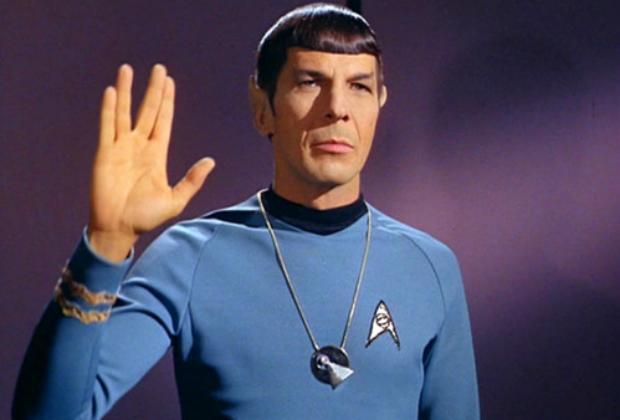

The classic gesture of Spock, a representative character of the “Star Trek” series.

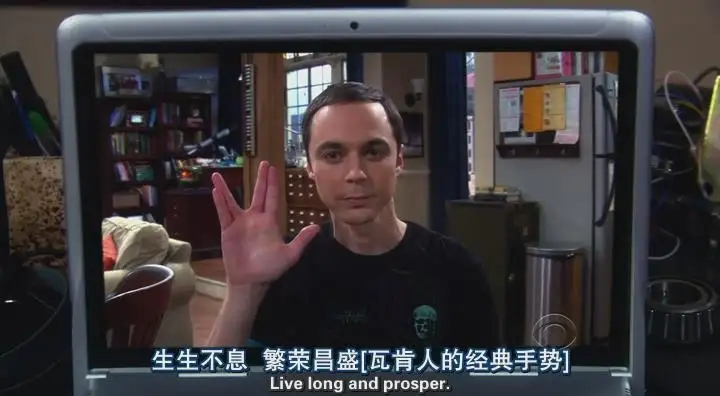

In the fourth season of “The Big Bang Theory”, Sheldon made the classic gesture of Spock, which means “live long and prosper”.

Another advantage of gesture interaction in the car is that it frees users from the constraints of physical input devices and allows a wider, more forgiving range of input that does not have to be precise. As the most natural and instinctive form of communication, in-car gestures can save a great deal of attention and visual resources.

Before fully automated driving is achieved, reasonable use of gesture interaction can effectively reduce driver distraction, and more importantly, form an important complementary system with touch and voice interactions.

Let’s take a visual example.

GeekCar’s “Intelligent Cabin Intelligence Bureau” column reviewed a brand-new Mercedes-Benz S-Class sedan in November 2021. The car is equipped with MBUX intelligent sensing assistant, which can capture the driver’s hand movements for assisted interaction. Supported gesture actions include but are not limited to:

- The driver puts their hand below the rearview mirror to turn on and off the front reading light;

- The driver waves their hand forward or backward in front of the rearview mirror to control the opening and closing of the sunshade.

In the paper “Effects of Gesture-based Interfaces on Safety in Automotive Applications” from Automotive UI 2019, researchers studied the impact of gesture interaction on driving safety for non-driving tasks such as in-car navigation, temperature, and entertainment. Based on driving data and eye-tracking data from a test with 25 participants, the researchers conducted a comprehensive analysis. The results showed that drivers who use gestures may be better able to respond to sudden situations. The researchers found no direct evidence of a significant difference between the instrument panel and gesture interaction in driving performance indicators such as speed, speed variance, and lane position change.

It should be emphasized that no matter how many advantages an interaction method has, it cannot be separated from its usage scenarios. We cannot guarantee that the interior of the car is always a private space for only one person, nor can we guarantee that the atmosphere inside the car is always suitable for using voice interaction. Let’s take a simple but practical example. When a child at home is finally lulled to sleep and is soundly asleep in the crib with a “big” posture, I would rather use my phone to open the smart home app to control appliances, rather than take the risk of waking up the human cub again by interacting back and forth with the smart speaker and asking the appliances to work.

As we can see, the evolution of interaction is a particularly interesting process, and observing people’s attitudes towards interaction methods is also interesting.

Let me tell you a story. About ten years ago, I met an American mobile phone engineer colleague at work. Coincidentally, I also wanted to buy a new phone, so we started chatting about this topic. I still remember the engineer colleague’s great admiration for his own phone’s full keyboard and his disdain for the iPhone’s touch screen design, listing various discomforts after the phone got rid of physical buttons.

Interestingly, after we said goodbye and the engineer colleague walked a distance away, he turned around and shouted to me: if you really don’t know what to choose, the iPhone might be a good choice.

As for what happened later, it goes without saying, as we are all witnesses today. Product development and public acceptance are a long process, and the exploration of interaction methods is no different.

Gesture interaction officially entered the car less than 10 years ago. Since then, car companies and suppliers have successively introduced freehand gestures into the cabin, and they have always faced criticism that the feature is “style over substance”. Even so, they have not slowed their efforts to implement the technology.

Despite the criticisms, the development of in-car gestures has not stopped.

In 2013, a report from technology media outlet Engadget said that Google had submitted a patent application on using hand gestures to control cars more effectively. The patent relies on depth cameras and laser scanners installed in the cockpit to trigger vehicle functions based on the driver’s hand position and movements. For example, swiping near the window rolls the window down, and pointing a finger at the car radio raises the volume.

At the same time, car companies are also not idle. At the 2014 US CES, Kia unveiled a concept car called “KND-7” equipped with a gesture recognition information interaction system.

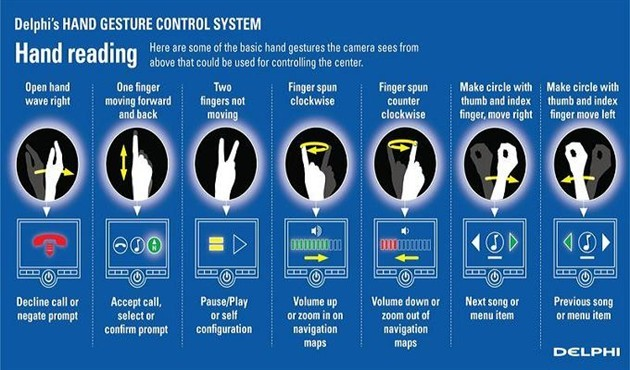

JAC Motors exhibited the SC-9 concept car at the 2014 Beijing Auto Show. It was equipped with a human-machine interaction system called PHONEBOOK, developed on the Windows system. A sizeable sensing area sits below the central control screen. It can operate the car’s system through various recognized gestures and also supports air-writing, although at launch it supported only English input.

BMW’s gesture control system first appeared on the G11/G12 7 Series in 2015, which was also the first time that gesture control was used in a mass-produced car. The supplier was Delphi of the United States. Users only need to make some preset gestures in the air, and the 3D sensing area above the center console quickly detects and identifies the movements, making it convenient to control functions such as volume or navigation.

For example, pointing the index finger forward and rotating it clockwise can increase the volume, while rotating it counterclockwise corresponds to lowering the volume. Making a horizontal V-shaped gesture toward the screen of the car’s infotainment system can turn it on or off. Waving your hand in front of the screen can reject or ignore prompts, and using your finger to “click” in the air corresponds to answering a phone call or confirming a prompt.
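The gesture-to-function pairs just described can be pictured as a simple lookup table. The sketch below is only an illustration of that idea: the gesture labels, the `CabinState` class, and the 0-10 volume scale are hypothetical names and values chosen for this example, not BMW’s or Delphi’s actual software.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class CabinState:
    """Hypothetical infotainment state, used only for this illustration."""
    volume: int = 5            # assumed 0-10 scale
    screen_on: bool = True
    last_prompt: str = "none"


def volume_up(state: CabinState) -> None:
    state.volume = min(10, state.volume + 1)


def volume_down(state: CabinState) -> None:
    state.volume = max(0, state.volume - 1)


def toggle_screen(state: CabinState) -> None:
    state.screen_on = not state.screen_on


def dismiss_prompt(state: CabinState) -> None:
    state.last_prompt = "dismissed"


def confirm_prompt(state: CabinState) -> None:
    state.last_prompt = "confirmed"


# Made-up labels standing in for the gestures named in the text.
GESTURE_ACTIONS: Dict[str, Callable[[CabinState], None]] = {
    "rotate_clockwise": volume_up,
    "rotate_counterclockwise": volume_down,
    "horizontal_v": toggle_screen,
    "wave": dismiss_prompt,
    "air_click": confirm_prompt,
}


def handle_gesture(state: CabinState, gesture: str) -> bool:
    """Run the action for a recognized gesture; ignore unknown labels."""
    action = GESTURE_ACTIONS.get(gesture)
    if action is None:
        return False
    action(state)
    return True
```

Keeping the table separate from the recognizer means an unrecognized hand movement simply does nothing, which is the safer default in a moving car.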

Looking at the domestic market, homegrown brands have given different answers to the same form of interaction.

The Junma SEEK 5, launched in 2018, provides 9 kinds of gesture interaction, recognized by a dedicated camera below the center screen.

When a call comes in, making a gesture of holding the phone to the ear represents answering, while the opposite represents hanging up.

Seeing this, I remembered a story told by an interaction designer in an article: a young kindergarten teacher asked the children to perform making phone calls together. The children all learned to put their hands to their ears to answer the phone, except for one child who raised a hand in a gesture like “six”. Here, the generational and cultural differences determine the differences in cognition.

Four fingers drawing together and the thumb pointing left or right represents skipping to the next song.

Stretching out the palm and moving it upward signifies “increase volume”, while moving it downward signifies “decrease volume”.

The V-shaped gesture control can pause and play music, and also displays a blooming rose on the screen when the driver opens their hand. The ritual is both satisfying and sweet.

The WEY Mocha from Great Wall Motor has a gesture summoning function, which allows the driver to control the vehicle without touching it.

Anyone who sees this scene may recall being guided into a space by a parking attendant. The difference is that instead of two humans, it is now one person and one car.

Ford EVOS, launched in 2021, is equipped with an impressive screen that measures 1.1 meters and can be split into two or combined into one. To make the user experience better, the EVOS team designed a series of interactive gestures.

Placing your index finger on your lips to make a “shh” gesture will automatically pause the music;

An “OK” gesture will restart the music;

A V-shaped gesture can switch between full screen and split screen;

A five-fingered grabbing motion takes you directly back to the main page.

There are different technical approaches behind these gesture controls.

The prerequisite for implementing interaction is perception and intelligence. Mainstream gesture interaction currently falls into two main technological schools:

Radar school:

This school mainly monitors hand movements with miniature millimeter-wave radar to achieve gesture recognition.

Here we have to mention Google’s Project Soli, released in 2015, a sensing technology that uses miniature radar to monitor gestures made in the air. A specially designed radar sensor tracks high-speed movements with millimeter precision, and the radar signal is then processed and recognized as a series of common interactive gestures.

After continuous research and development, the Soli radar chip shrank to a size of just millimeters, making it easy to integrate into mobile phones and wearable devices.

One of the most famous implementations of Project Soli is Motion Sense, introduced with the Google Pixel 4 phone in 2019. Through gestures in the air, users can perform actions such as switching music, muting the phone, and adjusting alarm volume without touching the screen. The Pixel 4’s Face Unlock also uses the radar to sense when the user reaches for the phone and wake its face-recognition sensors early, and it can unlock even in the dark.

Visual-based recognition:

This approach applies computer vision to recognize hand feature points and is more widely used than the previous one.

Although Soli radar has strong directional advantages and strong anti-interference capabilities, that has not stopped car manufacturers and suppliers from favoring gesture control through computer vision.

Perhaps many people still remember the Kinect sensor of the Microsoft Xbox game console. The depth sensing technology utilized by Microsoft Kinect can automatically capture the depth image of the human body and track the human skeleton in real-time, detecting subtle motion changes.

Gesture recognition technology can be roughly divided into three levels: 2D hand shape recognition, 2D gesture recognition, and 3D gesture recognition. If we only need to meet the most basic controls, such as “play/pause,” a combination of 2D hand shape/gesture and a single camera can suffice. For example, in a living room scene where streaming video is playing on a smart TV, we can pause the TV with a simple gesture when we briefly leave and don’t want to miss content.

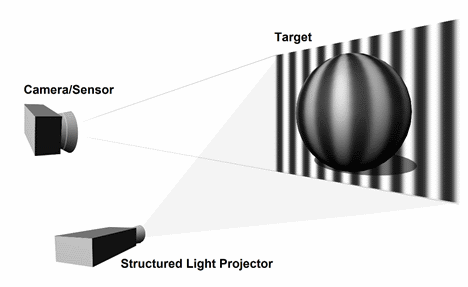

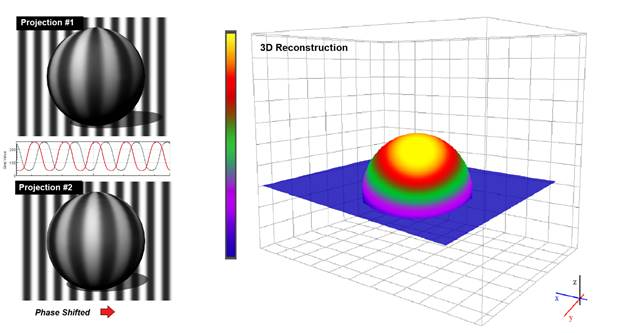

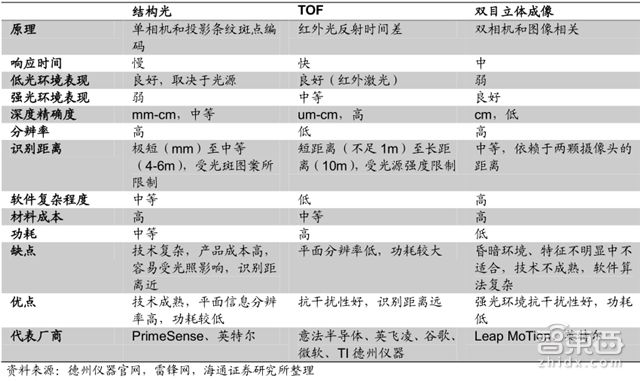

However, the space in a car is not as simple as a couch in a living room, so 3D gesture recognition with more depth information is required, and the complexity of the corresponding camera hardware increases as well.

Behind depth sensing of the kind used in Microsoft Kinect, which enables interaction through hand gestures, there are three main vision technologies: Structured Light, Time of Flight, and Multi-Camera.

Structured Light

Representative application: First-generation Kinect on Xbox 360 by supplier PrimeSense

Principle: The laser projector emits laser light that is deflected by a specific grating during projection and imaging, causing the landing point of the laser on the object surface to shift. The camera is used to detect and capture the pattern projected onto the object surface. Through the change in pattern displacement, the algorithm calculates the position and depth information of the object, and restores the entire 3D space. Gesture recognition and judgment are carried out according to known patterns.

As for the first-generation Kinect on Xbox 360, the best recognition is achieved only within a range of 1-4 meters. This is because the technology relies on the shift of the laser’s landing point caused by the grating, so it does not work if the object is too close or too far away. It does not handle interference from reflective objects very well, but the technology is relatively mature and its power consumption is relatively low.

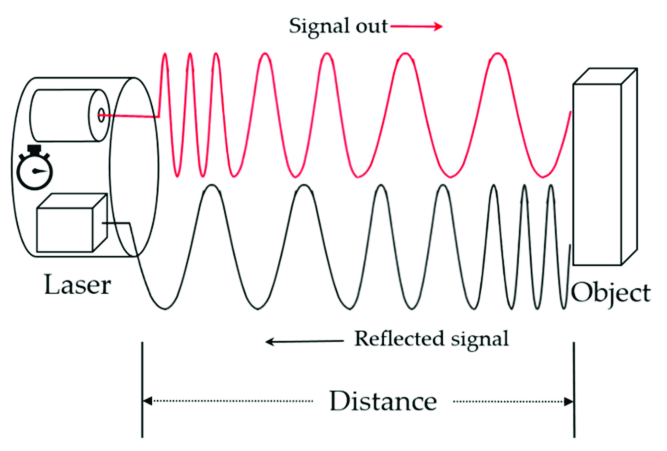

Time of Flight

Representative application: SoftKinetic, supplier of Intel’s perceptual computing technology (since acquired by Sony); second-generation Kinect on Xbox One.

Principle: TOF (Time of Flight) works as the name suggests, and it is also the simplest of the three technical paths. A light-emitting element continuously sends light signals toward the target, and a special CMOS sensor receives the light returned from the target. The distance to the target is obtained from the round-trip flight time between emission and reception. Unlike structured light, the device emits a surface light source rather than a speckle pattern, so its theoretical working range is longer.
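The round-trip calculation can be written out in a few lines. This is a minimal sketch of the basic relation only (distance equals the speed of light times the round-trip time, divided by two); the function names are made up for this example, and real TOF cameras typically rely on more elaborate measurement schemes than timing a single pulse.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_time_s: float) -> float:
    """Distance to the target from the measured round-trip time of light."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The light travels to the target and back, so halve the total path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def round_trip_time_s(distance_m: float) -> float:
    """Inverse relation: how long light needs to reach a target and return."""
    if distance_m < 0:
        raise ValueError("distance cannot be negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S
```

For a hand about 0.6 m from the sensor, the round trip takes roughly 4 nanoseconds, which hints at why TOF needs a specialized sensor rather than an ordinary camera.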

Put simply, TOF is similar to the familiar echolocation of bats, except that what is emitted is light rather than ultrasound. TOF offers stronger interference resistance and a longer recognition distance, and it is considered one of the most promising gesture recognition technologies.

It is worth mentioning that 3D TOF technology has gained some popularity with the recent teaser campaign for the Li Auto L9.

Multi-Camera

Representative applications: Usens’ Fingo gesture interaction module, Leap Motion’s eponymous motion controller

The principle is to use two or more cameras to capture the current environment and obtain two or more images of the same scene from different perspectives. The depth information is calculated from geometric principles. Since the parameters of the cameras and their relative positions are known, once the positions of the same object in the different images are found, the algorithm can calculate the depth of the measured object.
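For the two-camera case, the geometry reduces to a well-known relation: depth equals focal length times baseline divided by disparity. The sketch below assumes an idealized setup (two identical, rectified pinhole cameras mounted in parallel); the function name and the example numbers are illustrative, not taken from any product mentioned here.

```python
def stereo_depth_m(focal_length_px: float,
                   baseline_m: float,
                   disparity_px: float) -> float:
    """Depth of a point seen by two parallel, rectified cameras.

    focal_length_px: focal length expressed in pixels
    baseline_m:      distance between the two camera centers, in meters
    disparity_px:    horizontal shift of the same point between the images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a visible point")
    return focal_length_px * baseline_m / disparity_px
```

With an assumed 800-pixel focal length and a 6 cm baseline, a fingertip that shifts by 80 pixels between the two images lies 0.6 m away; halve the shift and the computed depth doubles. The hard part in practice is finding the same point in both images, which is where the algorithmic burden comes from.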

To simplify the understanding, a binocular camera is like human eyes, and a multi-camera is like the compound eye of an insect, forming a multi-angle three-dimensional imaging through algorithms.

Among the three technologies, multi-camera imaging sits at an extreme. On the one hand, its hardware requirements are the lowest. On the other hand, because it relies entirely on computer vision algorithms, computing depth from distorted image data places very high demands on those algorithms. Compared with structured light and TOF, the actual power consumption of multi-camera imaging is much lower, and its anti-interference performance in strong light is excellent. It is a cost-effective path for gesture recognition.

Image source: Zhidongxi “The Accomplice of Huawei, Xiaomi, and OPPO in the Phone AI Battle!”

So, Is Gesture Interaction Reliable?

Let’s go back to the question in the title, is gesture interaction reliable?

My answer is yes. Whether now or in a future with fully autonomous driving, gesture interaction in the vehicle has huge potential. It is just premature for now.

Car manufacturers and suppliers already find it difficult to innovate with physical buttons, and the shape, size, and material of touch screens have yet to see a revolutionary practical innovation. Only intelligent voice control is riding the wave of the industry’s technological development.

There is still plenty of room for the development of gesture interaction, and the technological limitations are just one aspect. The fact is that there are many problems that HMI designers, product managers, and suppliers need to consider and solve in reality.

Recognition Rate and Stability

One of the biggest challenges in the field of artificial intelligence has always been how to make intelligence understand the real world without human common sense and general knowledge. How can algorithms differentiate between a human’s true interaction intent and accidental, natural gestures?

Sometimes the user thinks they have made the right gesture, but the system cannot recognize it correctly; sometimes the system “accurately” captures and executes unintended gestures by the user.

When we conducted smart cockpit evaluations in 2021, on one occasion the in-car camera repeatedly triggered smoking detection based on the captured image and forced the window open. However, I was just habitually resting my chin on my hand while thinking. These small accidents persisted throughout the entire evaluation, and although they were not unbearable, they still left a negative impression.

There can be various reasons why certain gestures are not recognized, such as environmental interference, a high recognition threshold in the algorithm, a hand outside the recognition range, or non-standard movements. However, blindly raising the recognition rate is not the right solution. Take the “wake-free” feature of in-cabin voice control: it is a good feature, but enabling it indiscriminately can leave the system unable to tell whether the user is issuing a command, talking to themselves, or talking to others, causing a lot of confusion.
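One common way to trade sensitivity against false triggers is to act only when a detection is both confident and sustained over several frames. The sketch below is a generic illustration of that idea, not the logic of any system mentioned in this article; the labels, the confidence threshold of 0.8, and the three-frame requirement are all assumptions.

```python
from typing import Iterable, List, Optional, Tuple

# One recognizer output per camera frame: (label or None, confidence 0-1).
Frame = Tuple[Optional[str], float]


def confirmed_gestures(frames: Iterable[Frame],
                       min_confidence: float = 0.8,
                       min_consecutive: int = 3) -> List[str]:
    """Return gestures that stayed confident for enough consecutive frames."""
    fired: List[str] = []
    current: Optional[str] = None
    streak = 0
    for label, confidence in frames:
        if label is not None and confidence >= min_confidence:
            if label == current:
                streak += 1
            else:
                current = label
                streak = 1
            # Fire exactly once, at the moment the streak becomes long enough.
            if streak == min_consecutive:
                fired.append(label)
        else:
            # A weak or missing detection breaks the streak.
            current = None
            streak = 0
    return fired
```

Raising either parameter suppresses more accidental triggers, but it also makes genuine gestures slower or harder to register, which is exactly the tension described above.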

If the system cannot effectively distinguish the intentions behind these actions, unstable performance becomes even more troublesome. When users are forced to spend time and attention smoothing over these unexpected troubles, or cannot get the expected response when they need it, interaction has truly put the cart before the horse.

Differences in Cultural Meanings

As the example of kindergarten children making phone calls shows, those born in the 1990s and those born in the 2010s picture the “phone call” action completely differently, which shows that the meaning of a gesture is shaped by generational culture.

The “OK” gesture, recognized almost worldwide, has in recent years been misused by some young Korean men on social networks, and the issue has become serious enough that brands must withdraw and apologize for similar symbols once the public questions them.

Let’s take another simple example. The scissor-hand pose that girls like to make when taking photos is known as the peace gesture and also carries the meaning of victory. In British culture, however, showing the V gesture with the back of the hand facing the other person is highly offensive and provocative. It is said that English longbowmen used it at the Battle of Agincourt, during the Hundred Years’ War between England and France, to mock the French army that had boasted before the battle. Later, the “victory and peace” gesture was unintentionally popularized by a famous World War II photo of then British Prime Minister Winston Churchill.

Seemingly simple gestures contain countless possibilities, and different gestures are also endowed with completely different meanings in different countries.

Therefore, HMI designers and product managers also need to consider more cultural backgrounds and customs when designing gestures.

Learning Costs

How many sets of interactive gestures can you remember? For me, three or four commonly used sets are already the limit.

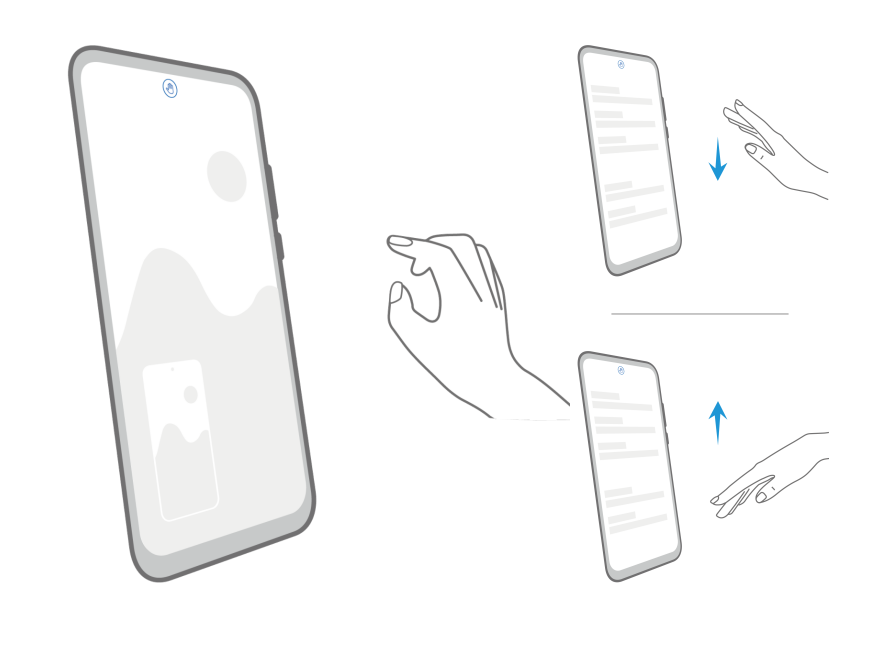

Huawei has boldly applied several sets of air gestures on its flagship phones in recent years. For example, a forward grabbing gesture takes a screenshot, and waving the hand up or down scrolls vertically.

Is it useful? In most scenarios, it is indeed very useful. In practice, however, during daily use of the Mate 30 Pro, only the air screenshot is used with any frequency, and it works only in a relatively well-lit environment.

I think Huawei has been very restrained in this regard. As we all know, human hand gestures are endlessly varied. The 53 Mei-style orchid-finger gestures created by the Peking Opera master Mei Lanfang are dazzling even in illustrations.

Of course, Mr. Mei’s orchid-finger technique (lanhua zhifa) is centered on artistic expression, which is significantly different from gesture interaction that emphasizes practical function.

As gesture interactions are intuitive, design needs to be more in line with human intuition, easy to remember, easy to use, and easy to become ingrained as a habit.

Thus, what we need is:

Every time a new technological product appears, people always like to say “The future is here.”

Let us strip away the romanticism and remember that the development of technology, product planning, communication of expectations, feedback, and iteration are an extremely lengthy process. One-stop solutions are only a beautiful aspiration; otherwise, the development process itself would lose its meaning.

Gesture interaction is a great complement to touch and voice, and in some situations, or for some personal preferences, it may even be superior to both. Of course, combining and coordinating different perception and interaction methods fits the logic of product iteration better than relying on a single, highly developed sense, just as in the evolution of species.

Like the accidental wake-up phenomenon mentioned earlier, if the in-car camera can be combined with AQS air quality sensor readings to determine that I’m just habitually resting my chin while lost in thought, and not smoking or trying to be cool, then I believe this future won’t be too far off.
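The cross-check imagined here can be sketched as a simple two-signal rule: the window opens only when the camera and the air quality sensor agree. Everything in this example is hypothetical, including the function name, the normalized 0-1 scores, and the thresholds; it only illustrates the principle of requiring corroboration from a second sensor.

```python
def should_open_window(camera_smoking_score: float,
                       aqs_pollution_score: float,
                       camera_threshold: float = 0.7,
                       aqs_threshold: float = 0.5) -> bool:
    """Open the window only if both sensors independently suggest smoking.

    camera_smoking_score: hypothetical 0-1 confidence from the in-car camera
    aqs_pollution_score:  hypothetical 0-1 normalized air quality reading
    """
    camera_says_smoking = camera_smoking_score >= camera_threshold
    air_says_smoking = aqs_pollution_score >= aqs_threshold
    return camera_says_smoking and air_says_smoking
```

In the chin-resting scenario, the camera score might be high while the air quality reading stays clean, so the rule keeps the window closed.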

Expert Opinion

Any solution is only valuable if it can effectively solve a valuable and difficult problem. The problem with gesture interaction is that it has not made a breakthrough on the core bottleneck of cabin interaction. That bottleneck lies in the contradiction between the surge in task complexity and the limited visual and cognitive resources a driver can spare. We need to keep driving easy and safe while also completing complex tasks such as setting destinations and browsing and selecting music. Compared to touch, voice, and physical interactions, gesture interaction, especially non-contact gesture interaction, cannot solve this bottleneck and may only bring greater troubles: gestures require users to learn them anew, inaccurate gesture sensors may cause misoperation, and holding a hand in the air causes fatigue.

A small amount of gesture interaction on a touch screen can be considered, such as returning to the previous level or returning to the homepage. These few but refined gestures must be simple, intuitive, and only provided as advanced backup operations for skilled users. Ordinary users still need “visible” controls to avoid learning barriers.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.