In 2024, “end-to-end” has become a buzzword in the field of autonomous driving.

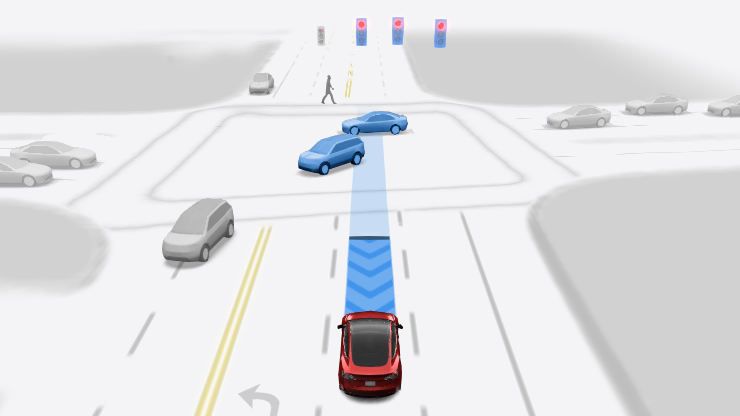

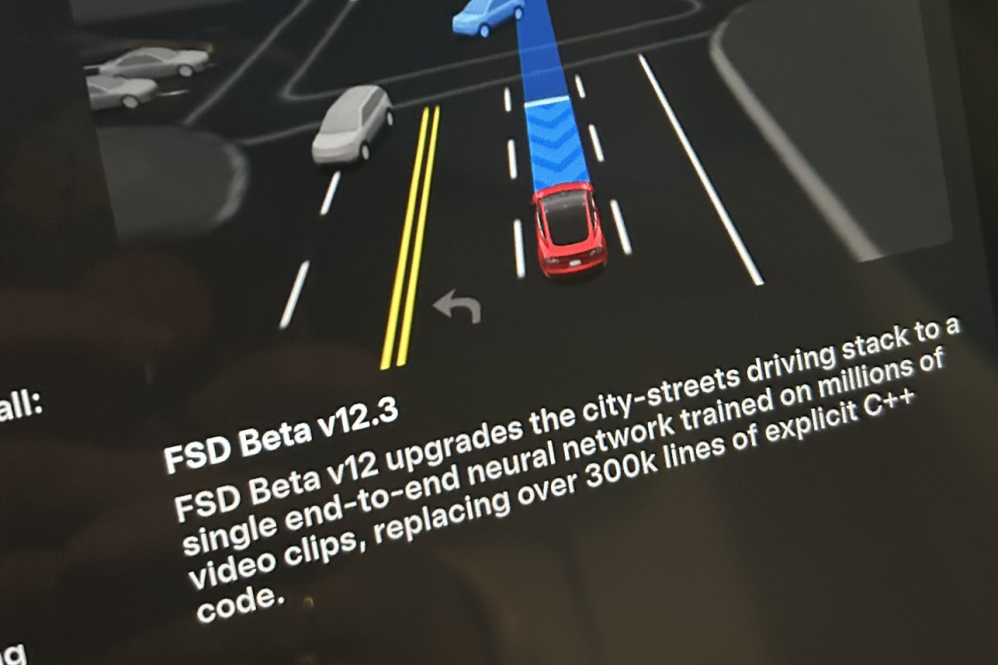

The most direct reason is that in late January 2024, Tesla officially rolled out a test version of FSD V12 to general users. According to the release notes for this version, FSD V12 upgrades the software stack for driving on city streets to a single end-to-end neural network trained on millions of video clips, replacing more than 300,000 lines of C++ code.

After the release, test videos of this version flooded overseas media platforms. Many viewers expressed admiration and amazement at its performance on urban roads, with some even exclaiming, “This is the future.”

Not only that, but many professionals in the field of autonomous driving also praised the performance of Tesla’s FSD V12.

Of course, Tesla’s moves in the end-to-end field have also drawn keen attention in China, across the Pacific. Especially in intelligent driving, both within the industry and in public opinion, end-to-end is becoming a buzzword. Some automakers striving to be first to deploy autonomous driving have even worked end-to-end into their marketing.

It should be made clear that although end-to-end is being widely pursued in autonomous driving, the industry’s technical development and commercial deployment are still essentially in the exploration stage. Against this background, Tesla, as a forerunner in end-to-end autonomous driving, deserves closer attention.

So, what has Tesla actually done in end-to-end?

Tesla’s ChatGPT Moment

On May 16, 2023, after hosting Tesla’s annual shareholders’ meeting, Musk was interviewed by CNBC, the well-known US financial news outlet.

On the subject of Tesla’s AI, Musk stated that Tesla has tremendous capability in real-world AI and is far ahead: “I can’t even say who is in second place.” Then, in response to the host’s question about ChatGPT and generative AI, Musk said:

“I think Tesla will also have a so-called ‘ChatGPT moment’. If not this year, then no later than next year. It means that one day, suddenly, 3 million Tesla cars can drive themselves… Then it’s 5 million, then it’s 10 million…”

“Suppose we swapped tasks: Tesla builds a large language model that is a match for ChatGPT, while Microsoft and OpenAI work on autonomous driving.”

“Without a doubt, we will win.”

Given Musk’s long history of hype and repeated delays regarding the capabilities and deployment timeline of Tesla’s autonomous driving technology, his assessment of Tesla AI and autonomous driving in this interview caused little stir at the time.

However, few people noticed that a week before the interview in which he stressed that “Tesla will usher in a ChatGPT moment”, Musk had already mentioned, for the first time, a major change in Tesla’s autonomous driving technology: FSD V12 is an end-to-end AI that takes images as input and outputs steering, acceleration, and braking.

In a post published three days later, he described FSD V12 as a complete AI from video input to control output (“FSD is fully AI from video in to control out”).

So, when did Tesla start doing end-to-end?

In fact, according to the biography “Elon Musk” by Walter Isaacson, published in 2023, the start of Tesla’s end-to-end autonomous driving effort can be traced back to December 2022, and it was evidently inspired by ChatGPT.

Specifically, late at night on December 2, 2022, Musk had a conversation with an engineer named Dhaval Shroff from the Tesla Autopilot AI team.

Let me introduce the background of Dhaval Shroff.

Dhaval Shroff is a brilliant student from India. He graduated from the University of Mumbai and later studied in the United States, earning a master’s degree in Robotics from Carnegie Mellon University. He joined the Tesla Autopilot team as an intern in June 2014 and converted to full time in 2015, working on R&D and AI-related tasks in the Autopilot team ever since.

In November 2022, Musk had just acquired Twitter, and he needed manpower to solve Twitter’s problems, so he sought out Dhaval Shroff and met with him.

Initially, Musk had hoped to persuade Dhaval Shroff to leave the Tesla autonomous driving team and work for Twitter, but Dhaval Shroff wanted to stay at Tesla and introduced Musk to the details of the neural network path planning project he was researching.

At the time, Dhaval Shroff was working on a cutting-edge project in autonomous driving, whose core was to design a self-driving system capable of learning from human behavior. In this meeting, he told Musk:

> It’s akin to ChatGPT, yet applied to automobiles. We’ve processed extensive data on how real humans acted in complex driving scenarios, and then trained a neural network so the computer can mimic this behavior… We no longer rely solely on rules to determine the correct path for the vehicle; instead, this is achieved through the neural network.

In essence, it’s an emulation of human behavior.
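The idea Shroff describes, learning a driving policy from recorded human behavior rather than from hand-written rules, is commonly called imitation learning or behavior cloning. The following is a minimal sketch under toy assumptions: a hypothetical "human" steers in proportion to the car's lateral offset from the lane center, and a linear policy is fitted to those demonstrations by gradient descent. The names `human_steering`, `make_demonstrations`, and `train_policy`, and the gain of -0.5, are invented for illustration; Tesla's actual model is not public and is certainly far more complex.

```python
import random

def human_steering(offset):
    """Hypothetical human driver: steer back toward the lane center."""
    return -0.5 * offset

def make_demonstrations(n, seed=0):
    """Record (observation, action) pairs from the hypothetical human."""
    rng = random.Random(seed)
    demos = []
    for _ in range(n):
        offset = rng.uniform(-2.0, 2.0)  # lateral offset in meters (assumed)
        demos.append((offset, human_steering(offset)))
    return demos

def train_policy(demos, lr=0.1, epochs=200):
    """Fit steering = w * offset + b by minimizing mean squared error."""
    w, b = 0.0, 0.0
    n = len(demos)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in demos) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in demos) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

demos = make_demonstrations(200)
w, b = train_policy(demos)
print(f"learned policy: steering = {w:.3f} * offset + {b:.3f}")
```

The policy never sees the rule inside `human_steering`; it recovers the behavior purely from examples, which is the sense in which the approach emulates human driving.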

In the end, Dhaval Shroff kept his role in Tesla’s autonomous driving team because of Musk’s strong interest in this project. After all, in Musk’s view, Tesla had already become a company focused on artificial intelligence, and Musk was already planning to hire a large number of AI specialists to compete with OpenAI.

Thus, Dhaval Shroff and his team received direct support from Musk and officially began to innovate within the technical framework of Tesla’s autonomous driving – in the biography “Elon Musk” penned by Walter Isaacson, the project Dhaval Shroff was working on was referred to as the ‘neural network planner’.

Later events proved that this project was a key turning point in Tesla’s complete shift to end-to-end autonomous driving.

End-to-end: Not Achieved Overnight

In fact, when talking about the term ‘end-to-end’ within the framework of autonomous driving, Tesla was not the pioneer.

In August 2016, NVIDIA, which was pushing into autonomous driving, published a paper called “End to End Learning for Self-Driving Cars”, which presented a deep learning algorithm for autonomous driving. The algorithm used a Convolutional Neural Network (CNN) to map images captured by a car’s front-facing camera directly to steering commands for the vehicle.

As the paper itself explains, the tasks this algorithm could accomplish were limited. For instance, it could only learn to control the steering wheel, without considering route or speed. In terms of approach, however, it was clearly different from the traditional autonomous driving framework, which separates perception, detection, decision making, and control into different modules; instead, it used a single integrated model.

In essence, it inputs images and outputs actions, wholly aligning with the ‘end-to-end’ concept.
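The contrast between the two designs can be sketched in a few lines. This is a toy illustration, not NVIDIA's network: the "image" is a single row of pixel intensities, the modular pipeline uses hand-written stages, and the end-to-end policy is one function from pixels to a steering value whose weights would, in a real system, be learned from data. All function names and numbers here are hypothetical.

```python
def perceive(pixels):
    """Hand-written perception: index of the brightest pixel is the lane marker."""
    return max(range(len(pixels)), key=lambda i: pixels[i])

def plan(marker_index, width):
    """Hand-written planning: signed offset of the marker from the image center."""
    return marker_index - (width - 1) / 2

def control(offset, gain=0.1):
    """Hand-written control: steering proportional to the planned offset."""
    return gain * offset

def modular_pipeline(pixels):
    """Traditional stack: separate modules connected by explicit interfaces."""
    return control(plan(perceive(pixels), len(pixels)))

def end_to_end_policy(pixels, weights):
    """End-to-end: a single mapping from raw pixels to a steering value."""
    return sum(w * p for w, p in zip(weights, pixels))

row = [0.0, 0.0, 0.0, 1.0, 0.0]
# Stand-in for trained parameters; a real system would learn these from data.
weights = [0.1 * (i - 2) for i in range(5)]
print(modular_pipeline(row), end_to_end_policy(row, weights))
```

In the modular version, each stage can be inspected and tuned by hand; in the end-to-end version, the intermediate concepts (marker position, offset) are never made explicit and exist only implicitly in the weights.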

Nonetheless, NVIDIA only put forward ‘end-to-end’ at the conceptual level. In terms of mass production and deployment in the automotive industry, the ‘end-to-end’ solution remained unviable for quite some time. This even applied to Tesla, the most aggressive player in deploying autonomous driving at scale.

So, how did Tesla make gradual strides towards ‘end-to-end’?

An expert in autonomous driving algorithms told us that although the concept of ‘end-to-end’ sounds cutting-edge and ‘high-end’, from an industrial implementation perspective Tesla did not discard its previous FSD algorithm work and start from scratch. Instead, it most likely restructured what it had already built. In other words, Tesla’s end-to-end system was not achieved overnight.

For example, as early as Tesla AI Day in August 2021, the then head of Tesla AI, Andrej Karpathy, introduced the BEV + Transformer-based perception architecture. At that point, Tesla’s perception module had already fully transitioned to a neural-network-based Software 2.0.

Furthermore, based on the information shown at the AI Day event, Tesla had already started optimizing its Planning & Control module towards Software 2.0 in 2021. In other words, Tesla had begun introducing elements of neural networks into the planning segment (though not entirely).

By Tesla AI Day in October 2022, both the perception module and the planning and control module of Tesla’s AI algorithm structure had been updated, although they remained relatively independent modules. Specifically:

For the perception module, the new head of Tesla AI, Ashok Elluswamy, presented the Occupancy Network within Tesla’s autonomous driving algorithm framework. Coupled with the NeRF algorithm, it offers more general 3D spatial perception on top of the BEV + Transformer perception framework.

In the planning and control module, Tesla rewrote its previous algorithms to take advantage of the Occupancy Network. Although it incorporates some neural network and generative AI techniques (to generate predicted driving trajectories), it still consists largely of hand-written rule code. Overall, it is a Software 1.0 stack that uses Software 2.0 code to solve some of its problems.

At this point, though Tesla’s perception and planning control modules remain relatively independent, their correlation has indeed grown ever closer.

This pattern is evident in how Tesla built its Autopilot software algorithm framework. While the perception, planning, and control modules are relatively independent, they remain interrelated: the planning and control module evolves, is upgraded, and is even rewritten as the perception module develops. By December 2022, the perception module had completed its evolution towards a neural-network-centered Software 2.0. The planning and control module, due to its extreme complexity, still required a significant amount of rule-based, hand-written C++ code.

In this context, the neural network path planning project mentioned by Dhaval Shroff can be regarded as a key step towards Tesla’s “end-to-end” autonomous driving.

It should be noted that so far, Tesla has given no public explanation of how it integrates perception, planning, decision-making, control, and other autonomous driving modules into one large neural network to achieve “end-to-end”; it has kept quiet on the subject. However, even if “end-to-end” moves the whole FSD algorithm framework to Software 2.0, it would not completely discard hand-written rules.

Of course, there are also skeptics who believe that “end-to-end” might just be a marketing term by Elon Musk.

Data: Tesla’s Unique Edge

For Elon Musk, supporting Dhaval Shroff’s neural network path planning project was not risk-free. In fact, any move of the algorithm towards neural networks means that Tesla needs to invest time, data, and computing resources in trial and error.

Even within Tesla’s internal team, some don’t believe that this neural network path planning project will be successful.

Fortunately, in just about half a year, Dhaval Shroff made a breakthrough and proved to Musk that this is the right direction.

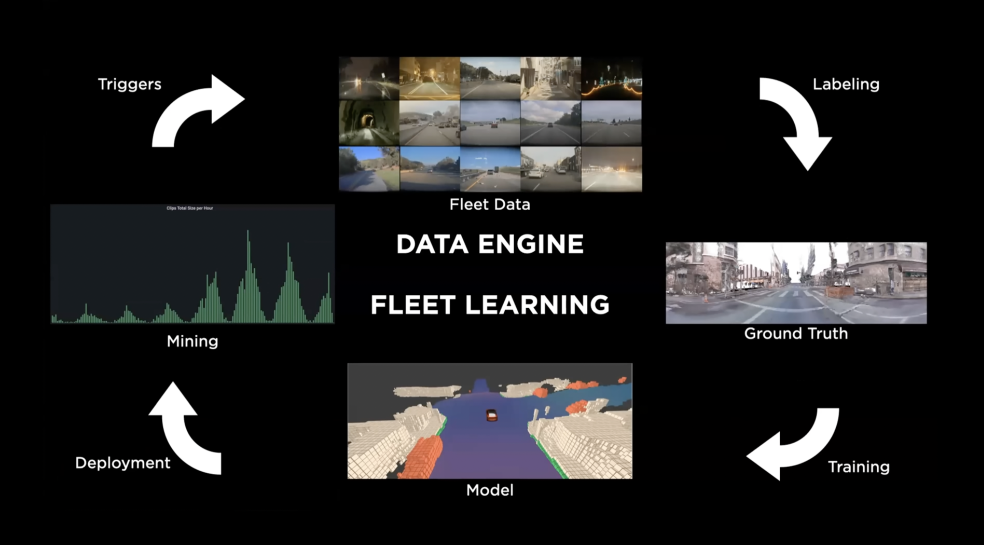

One of the contributing factors was Tesla’s significant data advantage.

In fact, based on Tesla’s autonomous driving team’s extensive experience in data processing, Dhaval Shroff’s neural network path planning project analyzed 10 million video segments from Tesla customer vehicles over several months from the end of 2022 to early 2023.

According to Dhaval Shroff, the videos used for training were carefully selected. The primary criterion was that the human drivers in the clips handled the scenarios well. Only such video data was included in Tesla’s training.

For this, Tesla recruited a large number of human annotators in Buffalo, New York, who evaluate and score video segments. By Musk’s standard, these annotators need to find what Musk describes as the behavior of a “five-star Uber driver”. The corresponding videos are then used for training.
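The curation step described above amounts to a filter over scored clips. The following is a minimal sketch, assuming each clip carries a hypothetical annotator score from 1 to 5 and that only top-rated clips in which the driver handled the scenario well are kept. The `Clip` fields, the threshold, and the sample IDs are illustrative assumptions, not Tesla's actual schema or criteria.

```python
from dataclasses import dataclass

@dataclass
class Clip:
    clip_id: str
    annotator_score: int    # hypothetical 1-5 rating given by a human annotator
    human_handled_ok: bool  # whether the driver handled the scenario well

def select_training_clips(clips, min_score=5):
    """Keep only clips that meet the assumed 'five-star driver' bar."""
    return [
        c for c in clips
        if c.human_handled_ok and c.annotator_score >= min_score
    ]

clips = [
    Clip("a", 5, True),
    Clip("b", 3, True),   # mediocre driving: rejected
    Clip("c", 5, False),  # scenario not handled well: rejected
    Clip("d", 5, True),
]
selected = select_training_clips(clips)
print([c.clip_id for c in selected])  # prints ['a', 'd']
```

Because a behavior-cloned policy imitates whatever it is shown, filtering the demonstrations is effectively a way of specifying the desired driving style.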

In one demonstration of neural network path planning that Dhaval Shroff showed Musk, a car guided by the neural network navigated a road strewn with garbage cans, traffic cones, and debris, steering around obstacles, crossing lane lines, and even breaking rules where necessary.

What came next left Musk intrigued.

In April 2023, in Palo Alto, where Tesla’s autonomous driving research team is based, Musk tried out the new autonomous driving software built on neural network path planning for the first time. He was accompanied by members of the Tesla AI team, including Ashok Elluswamy and Dhaval Shroff.

During the test, the team members explained to Musk how FSD was trained on millions of video clips captured by the on-board cameras of Tesla customers’ cars. Notably, the result was a software stack far simpler than the traditional rule-based one.

In Dhaval Shroff’s own words:

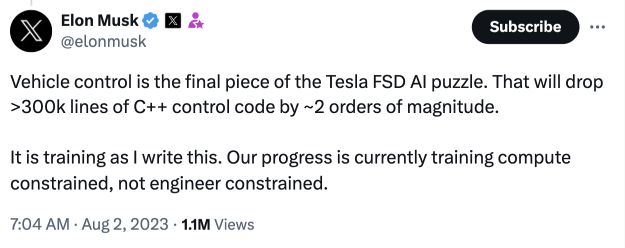

“It runs ten times faster and can directly eliminate as many as 300,000 lines of code.” This later became Musk’s staple talking point when promoting Tesla’s end-to-end autonomous driving solution.

During this test drive, Musk watched the vehicle perform a maneuver and concluded that it had done better than he would have done himself. He was so pleased that he whistled. After the test drive, he endorsed the project’s value and decided it deserved substantial resources to push it further.

Notably, by this stage the Tesla autonomous driving team had made a key discovery: a neural network needed to be trained on at least a million video clips before it worked well. The more training clips (say, 1.5 million), the closer its behavior came to the ideal. Fortunately, given Tesla’s large global fleet (numbering in the millions), obtaining a large volume of video data for training every day is feasible.

Elluswamy has confirmed that Tesla is uniquely well positioned when it comes to data: it has a distinct advantage.

Naturally, beyond data, Tesla’s computing power is another considerable trump card. After all, Tesla has not only bought large numbers of NVIDIA GPUs to build data centers; it has also kept pushing its proprietary Dojo supercomputing project.

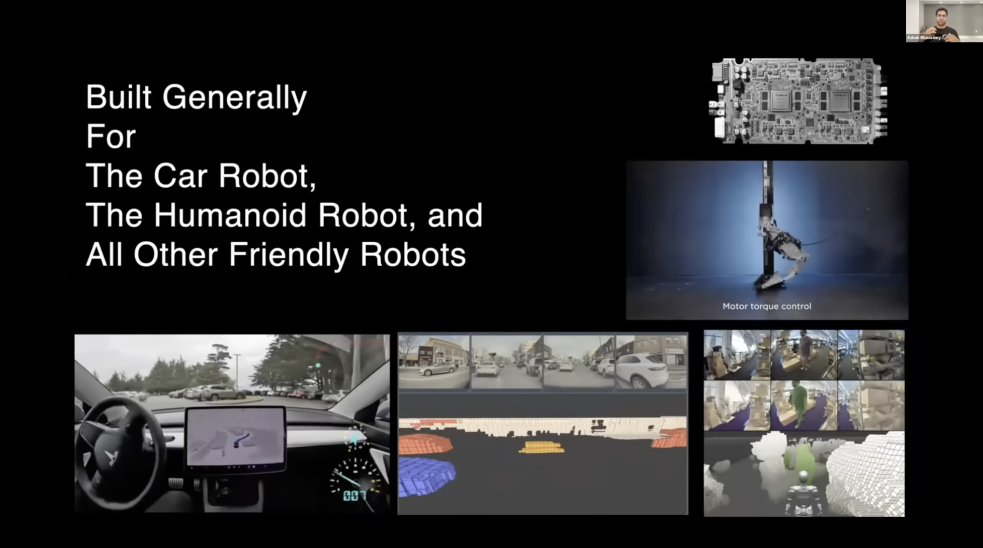

With its FSD Chip deployed in vehicles, Tesla has built an integrated hardware and software framework that runs from the cloud to the car. This cements Tesla’s unique “end-to-end” position in the auto industry.

From End-to-End to Large-Model Thinking

Based on the current state of things, Tesla has achieved several breakthroughs in the “end-to-end” field.

Indeed, in August 2023, Musk hosted a livestream on social media of the beta version of Tesla’s end-to-end autonomous driving (FSD V12 Beta). In the livestream Musk was brimming with confidence, and the car made only one error, related to a traffic light, over the course of the drive. The overall effect caught the attention and admiration of the entire industry.

Several months later, as Tesla further trained its end-to-end autonomous driving system, FSD V12 made further breakthroughs and began expanded beta testing among Tesla employees in December 2023. In January 2024, FSD V12 was rolled out to journalists, and in February Tesla deployed it to selected regular users.

Based on the feedback so far, in urban scenarios the neural-network-based FSD V12 has been better received than FSD V11, which still relies on hand-written code for path planning and control.

For example, on March 6, deep learning expert James Douma said after trying FSD V12 that, compared with V11, human interventions with V12 would fall by a factor of more than 100. In his view, this is not an incremental upgrade but an impressively powerful leap.

He also stated that Tesla’s team has managed to achieve “better than human” performance solely through gathering more high-quality data and more effective training. In response to this remark, Musk stated it was a very accurate assessment.

Of course, from what can be seen currently, Tesla’s exploration in the field of AI has clearly transcended the realm of “end-to-end”.

In fact, as early as last year’s CVPR, the top international conference in computer vision, Tesla’s AI head, Ashok Elluswamy, gave a keynote speech entitled “Foundation Models for Autonomy”. In this speech, Elluswamy made it clear that Tesla is constructing some foundation models, and the Occupancy Network is already incorporated, but not a physical entity itself.

More importantly, Ashok Elluswamy emphasized that a real Foundation Model is not a mechanical collection of many small tasks, but rather something capable of producing spillover effects.

He stated that Tesla is trying to build a more general World Model. It can predict the future, help the neural network learn on its own, act as a neural network simulator, and even generate 3D space with AI (given human instructions such as turning left or right, it can keep the views of all eight cameras highly consistent in 3D).

It is on this basis that, when Sora was launched in February 2024, Musk repeatedly emphasized that Tesla has created a generative AI that better conforms to the laws of the physical world.

At the conclusion of his presentation, Ashok Elluswamy stressed that Tesla was able to build the aforementioned large foundation models thanks to its vast data volume and robust computing capacity. He said this to attract potential recruits, but at its core, the combination of massive data and significant computing power is Tesla’s cornerstone for building large models grounded in the real world.

Furthermore, Elluswamy emphasized that the construction of Tesla’s FSD is not solely for Car Robots, but also for Humanoid Robots.

At the same CVPR conference, another member of Tesla’s AI team, Phil Duan, stated in his lecture that what Tesla is constructing is a highly diversified, high-quality dataset to train a Foundation Model. In Tesla’s view, this will be its future path to empower autonomous driving and embodied AI through the construction of large models.

Interestingly, according to a video released by Musk in the latter half of last year, the Tesla Optimus humanoid robot already runs an end-to-end neural network, the same approach used in Tesla’s autonomous driving system, and shows a marked improvement in capabilities.

Today, starting from end-to-end, Tesla’s exploration of AI has progressed to another stage: driving cars directly through a single foundation video network. From a technical paradigm standpoint, through its end-to-end algorithmic transformation, Tesla has comprehensively shifted towards large-model thinking, similar to OpenAI’s GPT.

In Conclusion

Looking back over the past ten years, we find that the underlying driving force of autonomous driving technology has often been change in AI technology itself.

For instance, starting with AlexNet in 2012, deep Convolutional Neural Networks (CNNs) became widely used in the perception domain of autonomous driving over the following years. By 2020, Transformers, after powering natural language processing for several years, were introduced into Tesla’s autonomous driving perception architecture to address the efficiency and power issues of 3D spatial perception.

By the end of 2022 and the beginning of 2023, with the advent of ChatGPT, Tesla, inspired by large-model thinking, turned towards end-to-end autonomous driving and set out to build a more general next-generation autonomy system by training a single large foundation model.

Whether in today’s various large models or in Tesla’s end-to-end system, a key component of the underlying architecture is still the Transformer, introduced in 2017. It will be difficult to replace in the short term.

Looking back, it is hard to deny that Tesla has been significantly influenced by OpenAI and ChatGPT on this path. This also reflects Tesla’s own adherence to the Scaling Law at the business level. From this perspective, as Tesla and Musk push forward, advances in AI are feeding into autonomous driving ever faster, and the relationship between autonomous driving and AI is becoming ever closer.

There is even a view that when AI develops to the level of general artificial intelligence, fully unmanned autonomous driving can also be realized.

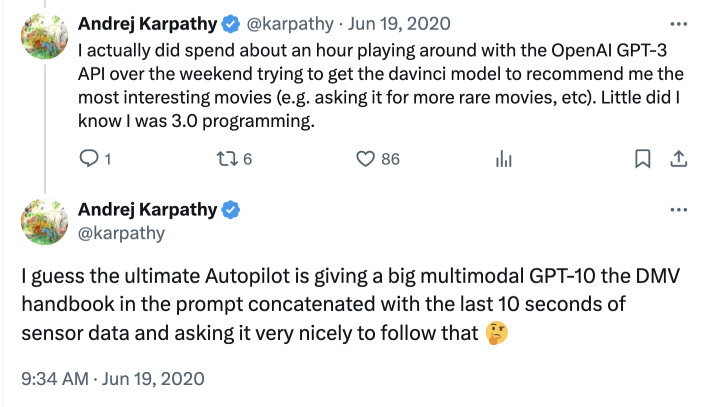

Interestingly, few people have noticed that as early as June 2020, Andrej Karpathy, a founding member of OpenAI and then head of Tesla AI, had already expressed his thoughts on GPT and the development of autonomous driving on Twitter. His words were:

The ultimate form of Autopilot should be inputting the contents of the DMV Handbook into a “larger multimodal GPT-10”, and then feeding it sensor data from the past 10 seconds, making it follow along.

Evidently, Andrej Karpathy was already closely following the Transformer and GPT at that time and connecting them with Tesla Autopilot. Seen from today, much of what we now see in the field of autonomous driving, and what is about to happen, was already foreseen and foreshadowed back then.

This article is a translation by AI of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.