Smart driving has reached a crossroads this year.

On the one hand, L2-focused OEMs and suppliers, led by companies such as XPeng and Huawei, expanded their scenarios from highways to urban roads this year. On the other hand, Robotaxi companies with high L4 ambitions have suffered setbacks: many have faced outside criticism, stalled road tests, and even bankruptcy…

Although this “chill” has not yet driven domestic L4 companies to desperation, transforming their businesses and pursuing large-scale production have become top priorities.

In this wave of transformation, QCraft has been one of the fastest movers.

QCraft held two major launch events this year. In May, it released its “Dual Engines” strategy: while continuing to invest in R&D for its L4-level Robobus, it also offers automakers stable, mature, and flexibly configurable intelligent driving solutions for factory installation.

Simply put, “Dual Engines” means walking on two legs: continuing to invest in L4 while bringing factory-installed L2-level solutions into production.

In early November, QCraft announced a Chinese name for its self-driving solution, Driven-by-QCraft: “Qingzhou Riding the Wind”.

When an L4 company enters the market as a supplier, the public generally has three main concerns:

First, how can hardware costs be controlled? Most L4 companies pile hardware onto their test vehicles with little regard for cost, and covering a 360° field of view with lidars alone is unaffordable for mass-produced vehicles.

On lidars specifically: most L4 companies use mechanical lidars, which pay little attention to the vehicle’s styling. Mass-produced cars are the exact opposite: to get onto a production car, a system must use one or more solid-state or semi-solid-state lidars.

Second, how capable are the algorithms? Once the system is on mass-produced cars, it has to drive everywhere. Test demonstration zones are part of the public road network too, but as the operating area expands, road conditions become more complex and corner cases multiply.

Third, what are the prospects for factory-installed mass production? The harsh reality is that although every automaker is paying more and more attention to intelligent driving, many leading automakers insist on developing it in-house, and large OEMs such as BYD, GAC, and Great Wall have already established partnerships and even mass-produced related functions. In other words, the intelligent driving “pie” is getting bigger, but few high-quality customers are left for L4 companies.

As of the end of 2022, apart from Momenta, which has delivered mass-produced basic driver-assistance functions jointly with IM, few L4 companies have delivered outstanding products in cooperation with OEMs, and some are still sticking to a pure L4 autonomous driving route.

QCraft (whose Chinese name, Qingzhou Zhihang, translates literally as “Light Boat Intelligent Navigation”) is one of the first autonomous driving companies to transform proactively, so we took this opportunity to look at how it is tackling these problems.

Want to Mass-Produce? Cost Reduction Is Crucial

For an L4 company moving into mass-produced L2 functions, the first hurdle is cost. A mainstream mid-range model sells for just over 200,000 yuan, which leaves little room in the budget for intelligent driving software and hardware.

At its press conference in May, QCraft announced the DBQ (Driven-by-QCraft) V4 solution, which covers scenarios from L2 to L4 and comes in three versions: flagship, enhanced, and standard.

The flagship version is equipped with 12 cameras, 5 semi-solid-state lidars (1 main lidar and 4 blind-spot lidars), and 6 millimeter-wave radars, and is aimed at L4-level autonomous driving.

As for cost, a leading lidar supplier has said that its newly launched semi-solid-state blind-spot lidar is priced at several thousand yuan. On that basis, the flagship’s hardware alone likely costs tens of thousands of yuan, which is hard to justify on a mid-range model.

In contrast, the DBQ V4 standard version, with its reduced hardware configuration, offers better value for money.

The standard version has a single lidar, mounted at the front of the vehicle. QCraft states that the total hardware cost of the standard system, including the lidar, millimeter-wave radar, cameras, and driving domain controller, is under 10,000 RMB.

QCraft also claims to achieve 99% of its L4 capability with this hardware, at a cost of 10,000 RMB or less.

In other words, QCraft must first cut the hardware cost to 10,000 RMB or less and then rely on that hardware to deliver city-level NOA. This is reminiscent of Tesla, which brought FSD Beta to urban roads using relatively inexpensive cameras.

Tesla’s low-cost FSD Beta rests on two things. The first is full-stack in-house development, covering not only software but also core hardware such as the FSD chip. The second is strong software algorithms.

So how strong are QCraft’s algorithms?

At this year’s QCraft Technology Workshop, QCraft’s software team demonstrated its core technology.

Super Fusion Perception and Spatial-Temporal Joint Planning

What is “Super Fusion”?

QCraft puts it this way: “Perception is the foundation on which tall buildings are built.” Few would doubt how important perception is to autonomous driving.

Different sensors perceive the world and acquire data in different ways. How that data is processed and used is crucial, and algorithmic modeling matters most.

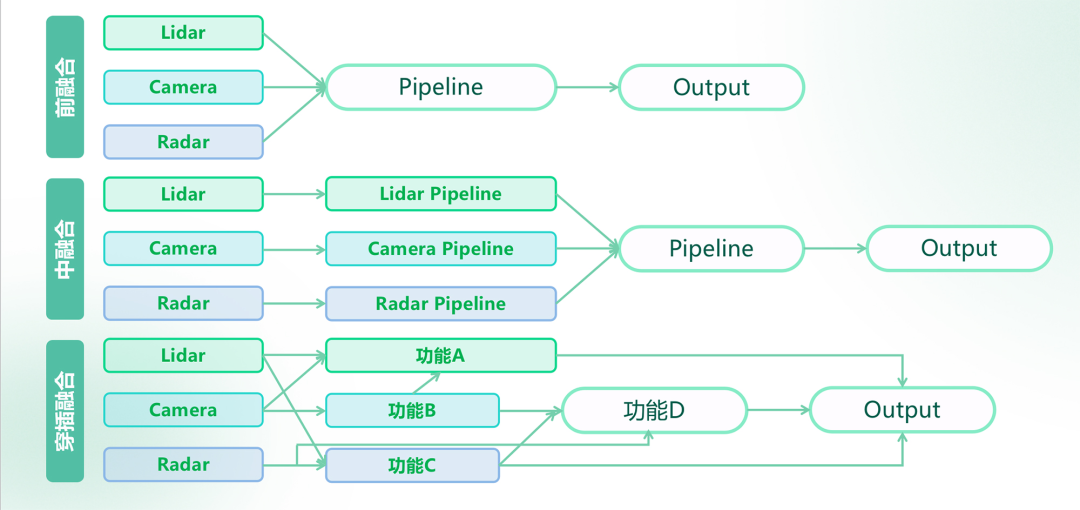

A multi-sensor solution usually has to fuse data from different sensors. The mainstream industry approaches are front-end fusion and back-end fusion, and some automakers also use middle fusion.

Front-end fusion, also called data-level fusion, fuses the raw data from every sensor and then produces result-level targets that carry comprehensive, multidimensional information.

For example, fusing camera images with lidar data yields results that contain not only color and object category but also shape and distance. Adding millimeter-wave radar also provides relative distance and velocity, measured relative to the ego vehicle’s own motion.
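As an illustration of raw-data-level fusion, the sketch below projects lidar points into a camera image with a standard pinhole model and attaches the pixel color to each point, so every fused point carries both appearance and distance. It is a minimal sketch under stated assumptions, not QCraft’s implementation: the points are assumed to be already transformed into the camera frame (x right, y down, z forward), the intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical, and the image is a plain nested list of RGB tuples. A real system would also need lidar-to-camera extrinsic calibration and time synchronization.

```python
from dataclasses import dataclass
from math import sqrt
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]


@dataclass
class FusedPoint:
    x: float         # position in the camera frame (metres)
    y: float
    z: float
    distance: float  # range from the sensor, from lidar geometry
    rgb: RGB         # color sampled from the camera image


def early_fuse(points: Sequence[Tuple[float, float, float]],
               image: List[List[RGB]],
               fx: float, fy: float, cx: float, cy: float) -> List[FusedPoint]:
    """Raw-data-level fusion: give every lidar point the color of the pixel it lands on.

    Assumes points are already in the camera frame (x right, y down, z forward)
    and the image is a row-major grid of RGB tuples (hypothetical setup).
    """
    height, width = len(image), len(image[0])
    fused = []
    for x, y, z in points:
        if z <= 0:                        # behind the camera: no pixel to sample
            continue
        u = int(round(fx * x / z + cx))   # pinhole projection: pixel column
        v = int(round(fy * y / z + cy))   # pinhole projection: pixel row
        if 0 <= u < width and 0 <= v < height:
            fused.append(FusedPoint(x, y, z, sqrt(x * x + y * y + z * z), image[v][u]))
    return fused
```

For example, with `fx = fy = cx = cy = 2.0`, a point at (1, 0, 2) lands on pixel column 3, row 2, and picks up that pixel’s color together with its range of √5 ≈ 2.24 m; points behind the camera or outside the image are skipped.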

Back-end fusion, also called post-fusion, target-level fusion, or result fusion, has each sensor first process its own data (images, point clouds, and so on) into perception results.

The system then transforms these results into a common real-world coordinate frame and fuses them once target-level processing is complete. Post-fusion is the method commonly used in traditional intelligent driving systems. Its drawback is that low-confidence information is filtered out along the way, so raw data is lost.
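The sketch below follows the post-fusion flow just described, under simplifying assumptions: each sensor hands over a list of detections (position in its own frame plus a confidence score), the detections are rotated and translated into the vehicle frame using a hypothetical mounting pose `(tx, ty, yaw)`, low-confidence detections are dropped, and the rest are grouped by greedy nearest-neighbor association within a distance gate. The threshold `min_conf`, the `gate` radius, and the position averaging are illustrative choices, not anything the source specifies; production systems typically use probabilistic association and tracking filters instead.

```python
from math import cos, hypot, sin
from typing import Dict, List, Tuple

Detection = Dict[str, float]         # {"x": ..., "y": ..., "conf": ...} in the sensor frame
Mount = Tuple[float, float, float]   # hypothetical (tx, ty, yaw) of the sensor on the vehicle


def to_vehicle_frame(det: Detection, mount: Mount) -> Detection:
    """Rotate and translate one detection from its sensor frame into the vehicle frame."""
    tx, ty, yaw = mount
    x, y = det["x"], det["y"]
    return {**det,
            "x": tx + x * cos(yaw) - y * sin(yaw),
            "y": ty + x * sin(yaw) + y * cos(yaw)}


def late_fuse(sensor_outputs: List[Tuple[Mount, List[Detection]]],
              min_conf: float = 0.5, gate: float = 1.0) -> List[Dict[str, float]]:
    """Object-level (post) fusion of per-sensor detection lists."""
    candidates = []
    for mount, dets in sensor_outputs:
        # Low-confidence detections are dropped here, before fusion: this is
        # the information loss that post-fusion is criticized for.
        candidates += [to_vehicle_frame(d, mount) for d in dets if d["conf"] >= min_conf]
    groups: List[List[Detection]] = []
    for det in sorted(candidates, key=lambda d: -d["conf"]):
        for group in groups:
            seed = group[0]  # highest-confidence detection in this group
            if hypot(det["x"] - seed["x"], det["y"] - seed["y"]) <= gate:
                group.append(det)
                break
        else:  # no existing object nearby: start a new one
            groups.append([det])
    # One fused object per group: averaged position plus how many detections support it.
    return [{"x": sum(d["x"] for d in g) / len(g),
             "y": sum(d["y"] for d in g) / len(g),
             "sources": len(g)} for g in groups]
```

With a camera detection at x = 10.0 m and a radar detection at x = 8.2 m from a radar mounted 2.0 m further along x, both land near 10 m in the vehicle frame and merge into one object, while a radar detection with confidence 0.3 is discarded before fusion ever sees it.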

Middle fusion, also called feature-level fusion, first extracts key features from each sensor’s raw data and then fuses those features. The goal is to extract and process multi-level features (such as color and shape) so that image information can be handled efficiently and intelligently.
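A minimal sketch of feature-level fusion, assuming one bird’s-eye-view (BEV) cell at a time: each sensor’s data is first turned into a small feature vector by its own encoder, the vectors are concatenated, and a linear layer mixes them. The toy encoders (normalized color for the camera; point count, maximum height, and mean ground range for lidar) and the fixed weights are hypothetical; in a real network both the encoders and the mixing weights are learned.

```python
from math import hypot
from typing import List, Sequence, Tuple


def camera_features(rgb: Tuple[int, int, int]) -> List[float]:
    """Toy camera encoder for one BEV cell: normalized color channels."""
    return [c / 255.0 for c in rgb]


def lidar_features(points: Sequence[Tuple[float, float, float]]) -> List[float]:
    """Toy lidar encoder for one BEV cell: point count, max height, mean ground range."""
    if not points:
        return [0.0, 0.0, 0.0]
    count = float(len(points))
    max_z = max(z for _, _, z in points)
    mean_range = sum(hypot(x, y) for x, y, _ in points) / count
    return [count, max_z, mean_range]


def fuse_features(cam: List[float], lidar: List[float],
                  weights: List[List[float]], bias: List[float]) -> List[float]:
    """Feature-level fusion: concatenate per-sensor features, then mix them with a
    linear layer. In a real network the weights are learned; here they are given."""
    x = cam + lidar  # concatenation along the feature axis
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]
```

Concatenation keeps each sensor’s features intact until the mixing layer, which is what distinguishes middle fusion from fusing raw data or finished detections.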

QCraft’s system uses temporally interleaved fusion, which it officially calls “Super Fusion.” Super Fusion combines pre-fusion, middle fusion, and post-fusion, interleaving them in time. By fusing both raw data and results from different sensors, it lets the sensors’ strengths complement one another more effectively.

Zhang Yu, QCraft’s head of perception, said that Super Fusion perception lets perception models draw on different sensors’ information at different stages so that they complement one another, producing better fusion results, avoiding false and missed detections, and delivering high accuracy and strong robustness.

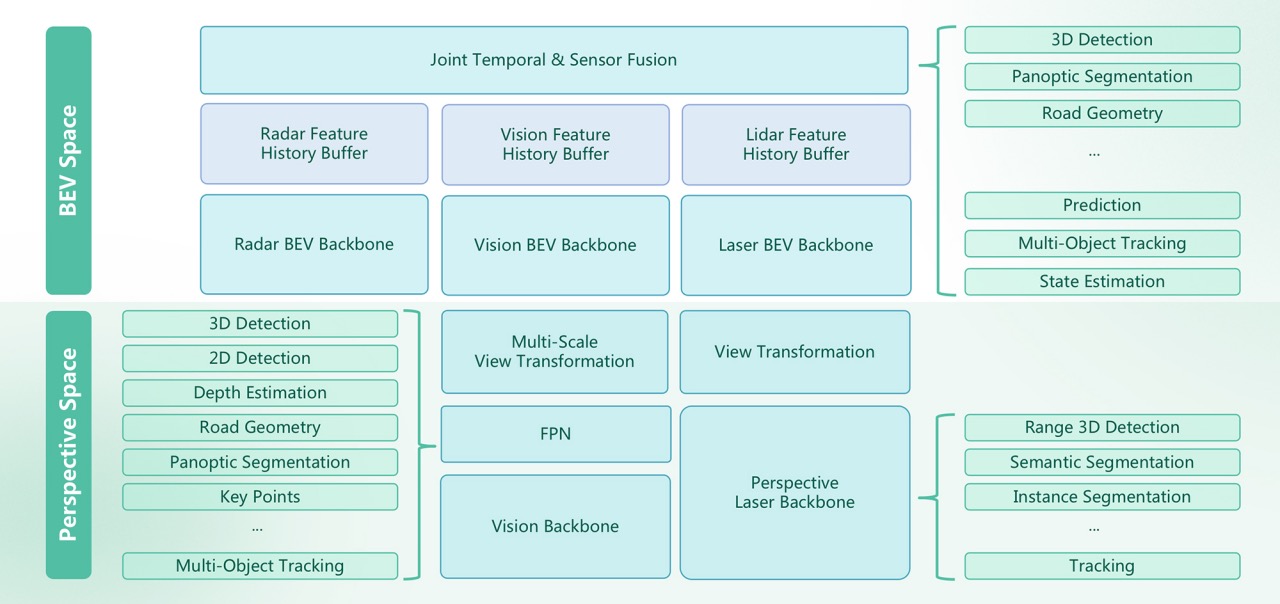

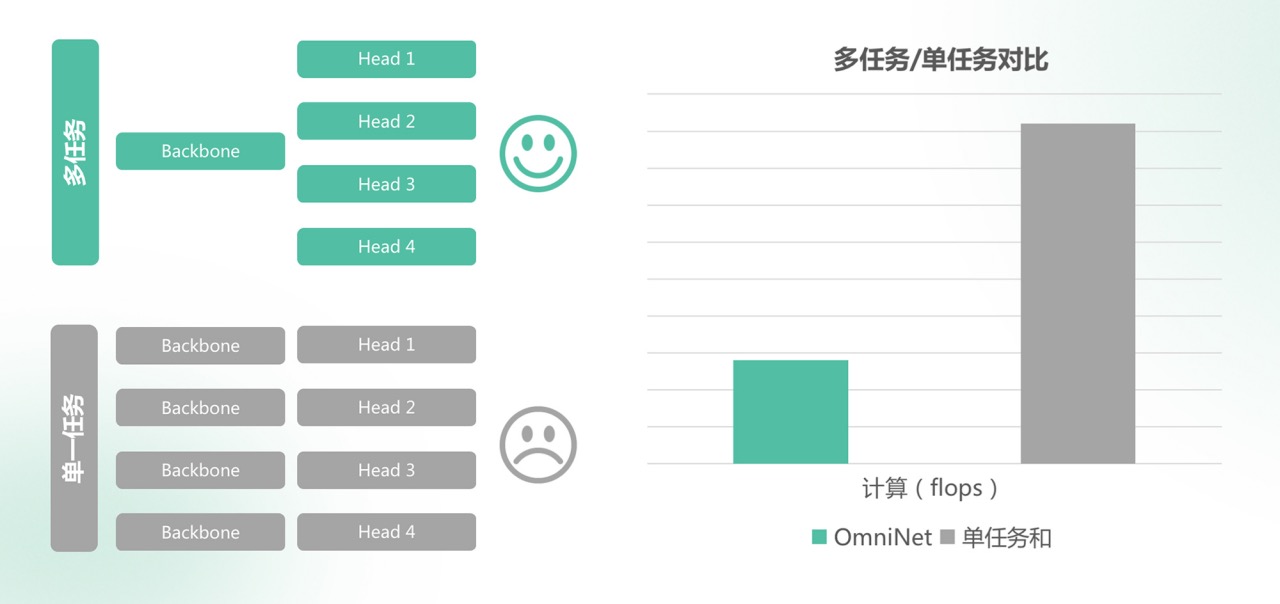

Building on Super Fusion, QCraft has also created a large model, OminiNet.

OminiNet fuses camera images, lidar point clouds, and other inputs through pre-fusion and BEV-space feature fusion, and outputs results for different perception tasks in both image space and BEV space.
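The source does not describe OminiNet’s internals, so the following is only a sketch of the general “one model, multi-task” pattern: one shared encoder fuses camera and lidar features once per frame, and several lightweight task heads read from that shared result. The encoder, the head names, and their thresholds are hypothetical toys standing in for learned network layers.

```python
from typing import Callable, Dict, List

Features = List[float]


class MultiTaskModel:
    """'One model, multi-task': one shared (fused) encoder feeding several light heads."""

    def __init__(self, encoder: Callable[[Features, Features], Features],
                 heads: Dict[str, Callable[[Features], object]]):
        self.encoder = encoder
        self.heads = heads
        self.encoder_calls = 0  # the expensive shared part should run once per frame

    def __call__(self, camera: Features, lidar: Features) -> Dict[str, object]:
        self.encoder_calls += 1
        shared = self.encoder(camera, lidar)  # fused features, computed once
        return {name: head(shared) for name, head in self.heads.items()}


def toy_encoder(camera: Features, lidar: Features) -> Features:
    """Stand-in for fusing image and point-cloud features into shared BEV features."""
    return [c + l for c, l in zip(camera, lidar)]


model = MultiTaskModel(toy_encoder, heads={
    "objects": lambda f: [i for i, v in enumerate(f) if v > 1.0],  # toy dynamic-object cells
    "lanes": lambda f: [round(v, 2) for v in f[:2]],               # toy static-element output
    "occupancy": lambda f: [v > 0.5 for v in f],                   # toy occupied/free flags
})
```

Calling `model(camera, lidar)` returns every task’s output from a single pass of the encoder (`encoder_calls` stays at 1), which is the usual source of a shared model’s compute savings.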

Zhang Yu also explained how OminiNet differs from XPeng’s XNet. Although both are “one model, multi-task” networks, he sees several differences:

The first concerns perception inputs. OminiNet adds multi-sensor fusion: XNet’s input is images only, and according to XPeng’s official slides it is a multi-camera, multi-frame model. In addition to multi-camera, multi-frame camera input, OminiNet also takes lidar and millimeter-wave radar data.

The second is output. OminiNet’s outputs are more diverse and cover more perception tasks. XPeng’s XNet outputs dynamic targets, including their position, pose, size, speed, and predicted motion, as well as static targets such as lane lines and stop lines.

In addition to these, OminiNet also outputs a generic obstacle occupancy grid, similar to Tesla’s Occupancy Network, along with other outputs for downstream modules.
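An occupancy output labels each cell of space as occupied or free without needing to know what the obstacle is. The sketch below builds such a grid geometrically from lidar points, purely to illustrate what the output represents; Tesla’s Occupancy Network and OminiNet predict occupancy with learned networks, and the cell size, range, ground-height threshold, and minimum point count here are hypothetical.

```python
from typing import List, Sequence, Tuple


def occupancy_grid(points: Sequence[Tuple[float, float, float]],
                   cell: float = 0.5, extent: float = 10.0,
                   ground_z: float = 0.2, min_points: int = 2) -> List[List[bool]]:
    """Mark BEV cells that contain enough above-ground points.

    The grid covers [-extent, extent) metres in x and y around the ego vehicle;
    grid[i][j] is the cell indexed by x (row i) and y (column j). No object
    class is involved: any sufficiently supported return counts as an obstacle.
    """
    n = int(2 * extent / cell)
    counts = [[0] * n for _ in range(n)]
    for x, y, z in points:
        if z < ground_z:                  # skip ground returns
            continue
        i = int((x + extent) // cell)     # row index along x
        j = int((y + extent) // cell)     # column index along y
        if 0 <= i < n and 0 <= j < n:
            counts[i][j] += 1
    return [[c >= min_points for c in row] for row in counts]
```

The `min_points` threshold is a simple guard against isolated noisy returns; a learned model replaces such hand-tuned rules with predictions from many frames and sensors.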

QCraft also claims that OminiNet improves perception accuracy while cutting compute by two-thirds, which makes it deployable on mass-production computing platforms.
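QCraft does not explain how the two-thirds saving is measured. Purely as an illustration of how a figure of that size can arise, assume that k separate single-task models, each costing B for its backbone and H for its head, are replaced by one shared backbone with k heads (the symbols are ours, not QCraft’s):

$$
\text{saving} = 1 - \frac{B + kH}{k\,(B + H)} = \frac{(k-1)\,B}{k\,(B + H)} \;\longrightarrow\; \frac{k-1}{k} \quad \text{as } H/B \to 0
$$

With three tasks and heads that are cheap relative to the backbone, the saving approaches 2/3. This is a back-of-the-envelope model, not QCraft’s published breakdown.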

Space-time Joint Planning Algorithm

Perception is the foundation, while PNC (planning and control) shapes the driving experience.

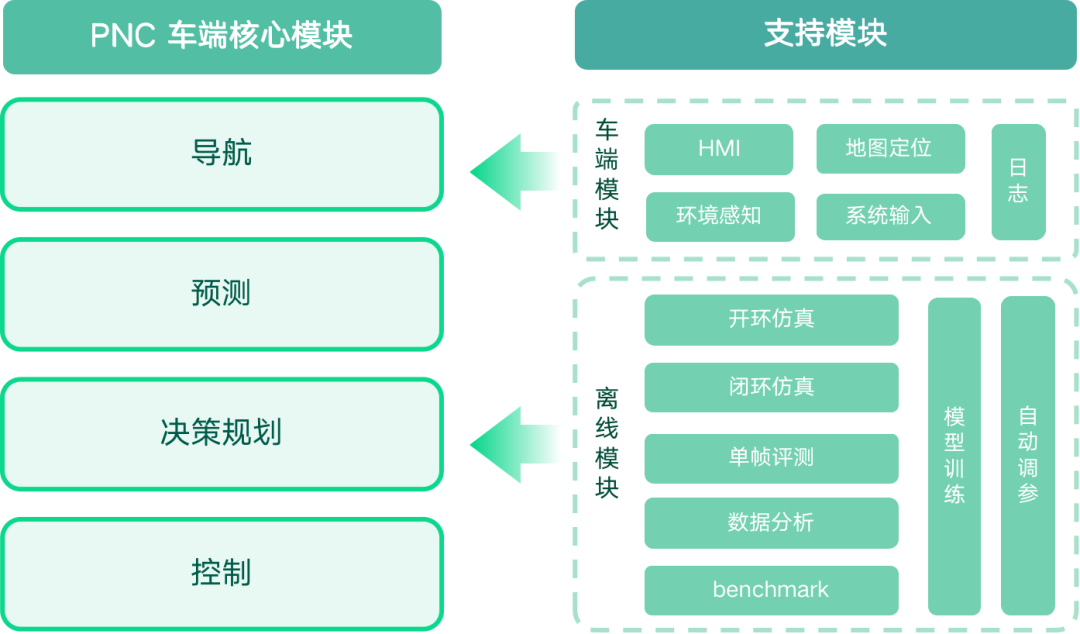

QCraft sees PNC as the “super brain” of urban NOA. Its planning and control stack consists of vehicle-side core modules and supporting modules.

The core modules are navigation, prediction, decision-making and planning, and control. The supporting modules include HMI, localization services, logging, environmental perception, and system input.

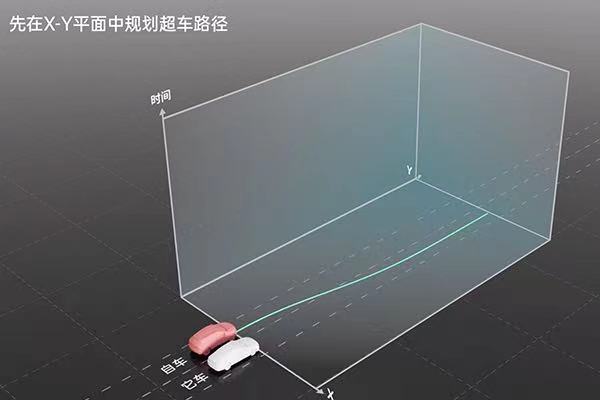

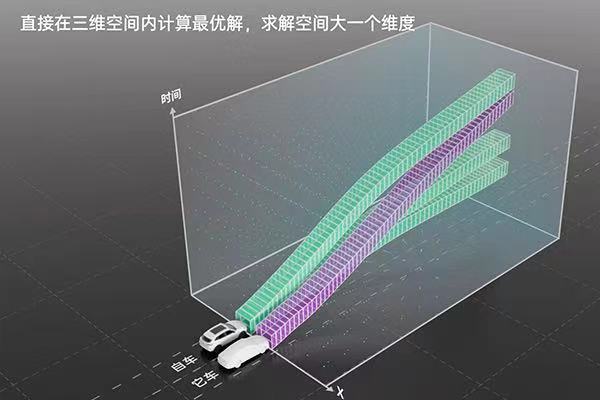

For PNC, QCraft did not adopt the space-time separated planning commonly used in the industry; instead, it uses a space-time joint planning algorithm.
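To show what planning in x-y-t space means, here is a toy sketch (our own illustration, not QCraft’s algorithm) on a two-lane road discretized into cells. Each search state is a longitudinal cell `s` and a lane at time step `t`, and dynamic programming chooses speed and lane together, rewarding distance traveled and penalizing lane changes. The speeds, the `lane_change_cost`, and the coarse swept-cell collision check are assumptions. A space-time separated planner would fix the lane sequence first and only then pick speeds; because time is part of every state here, the planner can wait in its lane for a faster car in the target lane to pass before changing lanes.

```python
from typing import Dict, List, Optional, Set, Tuple

State = Tuple[int, int]      # (s, lane): longitudinal cell and lateral lane
Cell = Tuple[int, int, int]  # (t, s, lane): one cell of the discretized x-y-t space


def joint_plan(start: State, horizon: int, occupied: Set[Cell],
               speeds=(0, 1, 2), lanes=(0, 1),
               lane_change_cost: float = 0.5) -> Optional[List[Cell]]:
    """Dynamic programming over (s, lane) states, one layer per time step.

    Position and lane are chosen together at every step, so the result is a
    trajectory in x-y-t rather than a fixed path plus a separate speed profile.
    Reward = cells travelled - lane_change_cost per lane change.
    Returns the best list of (t, s, lane) cells, or None if every option collides.
    """
    best: Dict[State, Tuple[float, List[Cell]]] = {start: (0.0, [(0, *start)])}
    for t in range(horizon):
        nxt: Dict[State, Tuple[float, List[Cell]]] = {}
        for (s, lane), (score, traj) in best.items():
            for v in speeds:
                for new_lane in lanes:
                    # Coarse collision check: every cell from s to s + v in the
                    # destination lane must be free at time t + 1.
                    swept = [(t + 1, s + k, new_lane) for k in range(v + 1)]
                    if any(cell in occupied for cell in swept):
                        continue
                    cand = score + v - (lane_change_cost if new_lane != lane else 0.0)
                    key = (s + v, new_lane)
                    if key not in nxt or cand > nxt[key][0]:
                        nxt[key] = (cand, traj + [(t + 1, s + v, new_lane)])
        best = nxt
        if not best:
            return None
    return max(best.values(), key=lambda entry: entry[0])[1]
```

For example, with a stopped car in lane 0 at s = 4 and a car in lane 1 approaching from behind at 3 cells per step (`occupied = {(t, 4, 0) for t in range(4)} | {(t, -3 + 3 * t, 1) for t in range(4)}`), `joint_plan((0, 0), 3, occupied)` stays in lane 0 until the fast car has passed and ends at `(3, 5, 1)`, just behind it in lane 1.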

In short, traditional space-time separated planning splits the problem into path planning and speed planning, which correspond to lateral and longitudinal control respectively: it first plans a path and then solves for a speed profile along that path to form a trajectory. Space-time joint planning considers space and time together, so it can solve directly for the optimal trajectory in the three-dimensional x-y-t space (the road plane plus time).

Landing in the Market

As mentioned earlier, the success of an intelligent driving system depends heavily on its market influence.

Over the past two years, for example, Mobileye has been squeezed hard in the Chinese market by Nvidia and even by domestic suppliers such as Horizon Robotics. The main reason is that its “black box” solution cannot meet the needs of China’s new EV makers, and Mobileye has never been known for high chip computing power.

Still, no one can deny Mobileye’s influence in the industry. This is not only because its chip shipments have reached 100 million units, but also because when an automaker needs basic L2 driver-assistance functions, Mobileye can still provide a mature, stable solution.

For a company like QCraft, it is vital to work with customers to bring features into production and earn a good reputation.

For QCraft, now is a good time to enter the market.

According to the “2022 China’s Autonomous Driving Start-up Investment and Financing Series Report”, as technology matures and costs fall, ADAS functions are gradually spreading from high-end models to mid- and low-end models.

As driver-assistance functions reach larger production volumes, more features and systems will become standard equipment and eventually reach mid- and low-end models.

The iResearch Consulting report “The Wave of Automotive Industry Reform: China Smart Driving Industry Research Report” predicts that by 2025, the penetration rate of assisted driving in passenger cars will reach 65%, and L2 and higher-level assisted driving will enter a period of mass adoption.

Cutting costs and bringing NOA functions into more market segments is an important way for QCraft to win market share.

With the launch of its “Dual Engines” strategy and the “Riding the Wind” solution, QCraft is no longer just an L4 autonomous driving company deploying Robobuses, but also a supplier of general intelligent driving solutions.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.