Author | Qiu Kaijun

Editor | Zhu Shiyun

In early August, owners of vehicles from several leading smart-car companies were involved in accidents. In cases where the owners said the intelligent driving assistance system had been activated, the vehicles either crashed directly into stationary vehicles ahead or rear-ended vehicles that were moving slowly.

People could not understand: if a system cannot even avoid colliding with stationary or slow-moving vehicles, how can advanced intelligent driving be achieved?

Before and after the Chengdu Motor Show, Salon Automotive released a good deal of information about Salon Intelligent Driving. An intelligent electric vehicle brand initiated by Great Wall Motors, Salon Automotive laid out an intelligent driving plan built on four LiDARs and two Huawei MDC computing platforms, with Level 4 as the ultimate goal. It stands out in the field of intelligent driving.

So how does Salon Automotive resolve the safety issues that other car companies have already encountered?

On August 22, Salon Automotive held a communication meeting at its headquarters in Beijing’s Yizhuang district. Yang Jifeng, the senior director of the Intelligent Center, interpreted Salon Automotive’s R&D approach to intelligent driving and the functions that it can achieve.

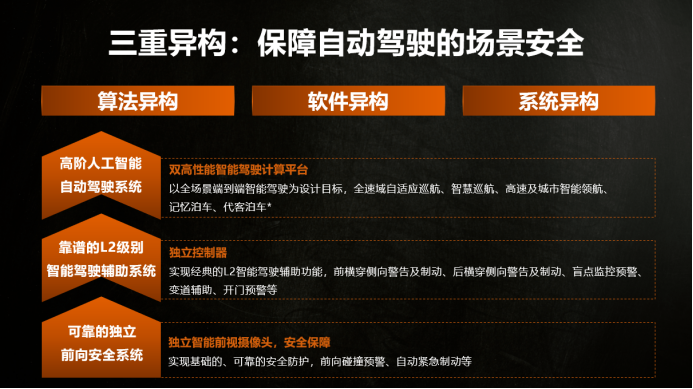

“Essentially, what we have is an architecture of independent autonomous driving systems with different levels of algorithm heterogeneity, software heterogeneity, and system heterogeneity,” said Yang Jifeng, adding that this system “is the most complex system in the industry at present. We are committed to offering leading AI capabilities and a human-like intelligent experience in complex scenarios, while using more rule-based, validated standard methodologies in risk scenarios.”

With such a “pile” of sensing devices and computing platforms, and building “the most complex system,” can Salon’s intelligent driving smoothly achieve both safety and intelligence in driving?

Three-pronged approach

“When everyone was developing AEB and traditional ADAS, based on traditional methodologies and standard systems, although there were failures at different levels, there were not so many discussions about accidents. Why?”

When introducing the Salon Intelligent Driving System, Yang Jifeng first raised this question.

He gave a traditional example: even in ALKS (Automated Lane Keeping System), the only standard regulation so far for self-driving at L3 and above, the minimum following distance when cruising is determined by speed and a time gap, and that time gap changes linearly with speed (see figure below).
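The rule described above, a following distance derived from speed and a time gap that grows linearly with speed, can be sketched in a few lines. This is only an illustration: the function names, the 1.0 s base gap, the 0.01 s per km/h slope, and the 2 m floor are assumptions chosen for the example, not figures quoted by Yang Jifeng.

```python
def time_gap_s(speed_kmh: float) -> float:
    """Time gap that grows linearly with speed (assumed coefficients)."""
    # Assumption: 1.0 s base gap plus 0.01 s for every km/h of speed.
    return 1.0 + 0.01 * speed_kmh


def min_following_distance_m(speed_kmh: float, floor_m: float = 2.0) -> float:
    """Minimum following distance = speed x time gap, never below a fixed floor."""
    speed_ms = speed_kmh / 3.6  # convert km/h to m/s
    return max(floor_m, speed_ms * time_gap_s(speed_kmh))


for v in (0, 20, 40, 60):
    print(v, round(min_following_distance_m(v), 1))
# prints: 0 2.0, 20 6.7, 40 15.6, 60 26.7 (one pair per line)
```

The point of the sketch is that the distance is a fixed function of speed alone. Nothing in it reacts to the traffic scenario, which is the limitation the article goes on to discuss.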

The biggest advantage of the traditional ADAS development methodology is that operating conditions are clearly designed and driving strategies are explicit, so the scenarios and forms of functional failure are largely explainable. Its disadvantage is that it is not intelligent. “For example, in a traffic jam on the highway, if you are driving in the rightmost lane with the minimum following distance fixed at 2 meters, the experience will be very bad and other cars will constantly cut in,” he said, adding that merging at exit ramps at low speed is a typical game scenario.

“So, can we solve this problem by adding a forward-facing LiDAR? The answer is no. It can only shorten the minimum following distance; it cannot turn it into a minimum following distance that is adjusted dynamically by scenario, because the distance is calculated from the system’s collision risk. We call this kind of logic-based development methodology ‘knowledge-based.’”

“However, with the development of high-level AI functions such as advanced cruise control and NGP, we no longer rely on simple time gaps to determine all driving strategies. We hope the entire system has stronger robustness, better scene generalization, and a more human-like intelligent experience. In contrast to knowledge-based, we call this kind of product design methodology, to some extent, ‘data-based.’”

The data-based development mode is aimed at today’s so-called L2.99, or end-to-end, intelligent driving assistance systems, with the goal of handling the vast majority of driving scenarios over the whole lifecycle.

However, unlike ALKS, these high-level functions are difficult to define through a very clear operational design condition (ODC), a clearly defined minimal risk condition (MRC), and minimal risk manoeuvres (MRM).

The data-based development mode also has its flaws. Many knowledge-based safety concepts are calculated and designed, and have been standardized. The safety of a data-based system, by contrast, can only be said to reach an excellent level relative to today’s datasets and scenario libraries.

Even so, expectations for data-based development are high, because the model is data-driven and maps the characteristics of human driving behavior: human-like machine vision, intelligent fusion, and even human-like path planning. Its starting point may be low, but its growth potential is worth looking forward to.

“In advanced intelligent driving assistance systems, we have used many data-driven AI capabilities, such as using a machine learning model to output 3D perception end-to-end, using a machine learning model to directly output the prism of LiDAR targets, and using AI to make predictions, do fusion, and even do path planning,” said Yang Jifeng.

So neither knowledge-based nor data-based is inherently superior to the other, and Salon’s intelligent driving strategy hopes to have the best of both worlds. “In the technology stack of advanced intelligent driving, we hope to have a humanized and highly intelligent experience that combines multi-sensor fusion under intelligent cruising and the Captain-Pilot (Salon’s captain intelligent driving system). However, we also hope to have more perceptible and rule-based driving strategies based on interpretable perception and explicit rules when entering MRC or other safety scenarios.”

“How to balance the advancement and safety of technology? We did something very complicated.”

What Yang Jifeng called “very complicated” is a three-pronged design: “essentially multi-sensor-fusion-based, independent and heterogeneous intelligent driving systems working on perception data from the same sources.”

At the highest level, Salon Automotive uses Huawei’s high-performance computing platform and Momenta’s algorithm software to achieve the most advanced intelligent driving assistance functions in the industry today, including full-range adaptive cruising, intelligent cruising, highway and city navigation, and memory parking. This layer can be understood as a strong AI stack that applies a large number of data-driven algorithms to achieve a more intelligent experience in more complex scenarios.

For the other two tiers, “some safety functions, such as forward, rear and lateral crossing warning and braking in safety scenarios, are handled by a more rule-based, more mature knowledge-based system solution that sits alongside the advanced technology stack and runs on an independent controller.”

For example, at the bottom level, Salon also created a forward safety system based on an independent front-view camera to ensure forward warning and braking functions.

These three sets of systems are heterogeneous, that is, they can work independently of each other, but share sensor data.

How do these three sets of systems cooperate?

Yang Jifeng explained with an example: “While intelligent cruising is enabled, the vehicle’s perception information is sent to Huawei’s high-performance computing platform for data-driven AI perception calculation and path planning. However, the same perception information is also sent to the independent lateral safety system and the independent front-view safety system, which use more traditional perception feature extraction and rule-based path planning methods. Then the computing platform synthesizes the results of the two and performs ‘scene-based arbitration.’”

Yang Jifeng stated that the Salon system is “the most complex system in the world.” Logically, this system avoids accidents that may be caused by the failure or misjudgment of a single system.
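The article does not say how the “scene-based arbitration” is implemented. As a rough sketch only, one plausible reading is that the AI stack's output is followed in ordinary scenes, while in a risk scene (or when the AI stack reports a fault) the most conservative valid request wins. Every name below (`Command`, `arbitrate`, `risk_scene`, the deceleration field) is hypothetical and chosen for illustration.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Command:
    source: str          # which stack produced the command (hypothetical labels)
    decel_mps2: float    # requested deceleration in m/s^2; larger means harder braking
    valid: bool = True   # False if the producing stack reported a fault


def arbitrate(ai: Command, safety: Sequence[Command], risk_scene: bool) -> Command:
    """Pick one command from the AI stack and the independent safety systems."""
    valid = [c for c in (ai, *safety) if c.valid]
    if not valid:
        # Assumption: a real system would fall back to a minimal risk manoeuvre here.
        raise RuntimeError("no valid command available")
    if ai.valid and not risk_scene:
        return ai  # ordinary scene: follow the data-driven stack
    # Risk scene or AI fault: take the strongest braking request that is still valid.
    return max(valid, key=lambda c: c.decel_mps2)


ai_cmd = Command("ai_stack", 0.5)
forward = Command("forward_safety", 6.0)
lateral = Command("lateral_safety", 0.0)
print(arbitrate(ai_cmd, [forward, lateral], risk_scene=False).source)
print(arbitrate(ai_cmd, [forward, lateral], risk_scene=True).source)
```

Taking the maximum deceleration is a deliberately simple policy. A production arbiter would also have to weigh steering requests and the risk of false braking.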

5-fold 360-degree perception

Regardless of how many independent decision-making systems are used, they must be based on correct environmental perception.

Currently, the AEB in many vehicles fails to act because it cannot reliably identify stationary objects and chooses to “ignore” them, resulting in collisions with stationary vehicles.

Currently, mainstream automotive sensors include cameras, millimeter-wave radars, ultrasonic radars, and lidars. Each type of sensor has its own advantages and disadvantages, and generally speaking, a single type of sensor cannot meet the perception requirements of complex driving environments. The mainstream approach is therefore to fuse multiple sensors. Tesla promotes a smart driving solution that uses only cameras as sensors, but it is the only company to do so.

Moreover, as the level of intelligent driving assistance rises, the requirements for sensors rise with it, which is why lidars are now being installed on cars. Before lidars were adopted, the combination of cameras and millimeter-wave radar was the strongest perception solution. It could recognize some standard static obstacles, but it still had difficulty recognizing non-standard objects or small obstacles. Li Xiang, the founder of Li Auto, has said that “the combination of cameras and millimeter-wave radar is like a frog’s eyes. It is good for judging dynamic objects, but almost powerless for non-standard static objects.”

Lidars emit lasers and analyze reflected energy, amplitude, frequency, phase and other information to construct precise 3D structural information of the target and help identify and judge the target.

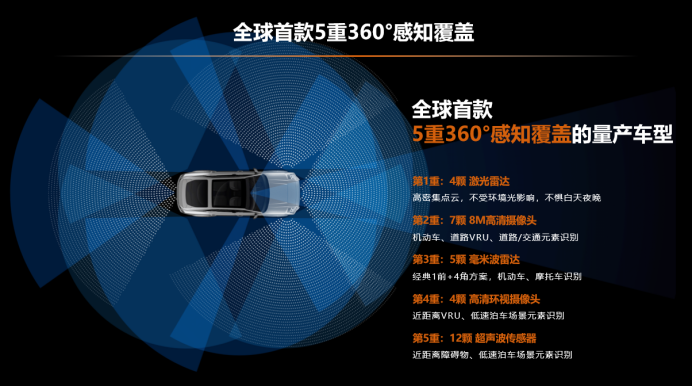

However, lidars are very expensive, and how to use them well is still being explored. Salon is the most radical in applying lidars, using four of them, the most in the industry. The lidars are placed not only at the front and on both sides, but also at the rear.

In addition to four lidars, Salon also uses seven high-definition cameras, five millimeter-wave radars, four panoramic cameras, and 12 ultrasonic radars to form a 5-fold, 360-degree full-coverage perception array. It can be said that the industry has no comparable configuration.

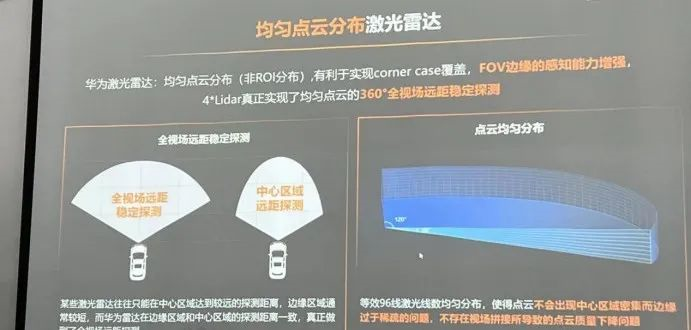

Salon uses Huawei’s 96-line hybrid solid-state lidar, with a detection range covering 120° horizontally and 25° vertically, and can output a million-level point cloud per second. When four of them are used simultaneously, it can achieve 360-degree panoramic and long-distance detection.
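The coverage claim can be checked with simple arithmetic: four sensors with a 120° horizontal field of view give 4 × 120° = 480°, which exceeds 360° and leaves 120° of overlap in total. The sketch below verifies this bearing by bearing. The mounting yaw angles (0°, 90°, 180°, 270° for front, side, rear, side) are an assumption made for illustration; the article only says the lidars sit at the front, on the two sides, and at the rear.

```python
def covered(bearing_deg: float, mount_yaw_deg: float, fov_deg: float = 120.0) -> bool:
    """True if a bearing falls inside one sensor's horizontal field of view."""
    # Smallest signed angular difference, wrapped into [-180, 180).
    diff = (bearing_deg - mount_yaw_deg + 180.0) % 360.0 - 180.0
    return abs(diff) <= fov_deg / 2.0


def full_coverage(mount_yaws_deg, fov_deg: float = 120.0, step_deg: int = 1) -> bool:
    """True if every sampled bearing around the car is seen by at least one sensor."""
    return all(
        any(covered(b, yaw, fov_deg) for yaw in mount_yaws_deg)
        for b in range(0, 360, step_deg)
    )


ASSUMED_YAWS = (0.0, 90.0, 180.0, 270.0)  # hypothetical mounting directions

print(full_coverage(ASSUMED_YAWS))      # True: four sensors close the circle
print(full_coverage(ASSUMED_YAWS[:3]))  # False: without the fourth, a 60-degree sector is blind
```

With only three of these sensors, 3 × 120° would nominally equal 360°, but only if they were spaced exactly 120° apart with no overlap, which is why the fourth unit matters for a layout with overlapping front and side views.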

Yang Jifeng also explained why Huawei’s lidar was used.

“Huawei’s LiDAR is relatively uncommon in the industry because it does not allocate a region of interest (ROI) for point cloud processing, meaning it does not concentrate more of the point cloud on the road ahead,” said Yang Jifeng.

“If there is only one LiDAR, I would prefer a higher point cloud density in the most commonly used areas, because the functional scenarios we need to solve in the short term are clear: handling interaction with traffic participants in the forward and front-side lanes well. But if I have panoramic LiDAR coverage, I would like the point clouds to be distributed as evenly as possible. This is to build a complete 360-degree perception system architecture more effectively, ensuring a longer research and development (R&D) lifecycle for this technology stack. In terms of goals, I also hope this perception system can respond well to edge scenes, including with consistent data density across scenarios.”

The advantage of a uniformly distributed point cloud is that it makes it more convenient for the system to stitch together the perceptual data of four LiDARs, and provide better problem detection capabilities for a longer lifecycle of autonomous driving systems. Ultimately, this helps to ensure a complete iteration of the technology stack in the future.
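Stitching the output of several LiDARs usually means transforming each sensor's points into a common vehicle frame and merging them. The sketch below shows that step in 2D with the standard library only. The mounting positions and yaw angles are invented for illustration, and a real pipeline would use full 3D extrinsic calibration and time synchronization, neither of which the article describes.

```python
import math


def to_vehicle_frame(points, mount_xy, mount_yaw_deg):
    """Rotate and translate 2D points from a sensor frame into the vehicle frame."""
    yaw = math.radians(mount_yaw_deg)
    c, s = math.cos(yaw), math.sin(yaw)
    mx, my = mount_xy
    return [(mx + c * x - s * y, my + s * x + c * y) for x, y in points]


def stitch(clouds):
    """Merge several (points, mount_xy, mount_yaw_deg) tuples into one point list."""
    merged = []
    for points, mount_xy, mount_yaw_deg in clouds:
        merged.extend(to_vehicle_frame(points, mount_xy, mount_yaw_deg))
    return merged


# Hypothetical mounting: front unit 2 m ahead of the origin, rear unit 2 m behind.
front = ([(10.0, 0.0)], (2.0, 0.0), 0.0)
rear = ([(10.0, 0.0)], (-2.0, 0.0), 180.0)
for x, y in stitch([front, rear]):
    print(round(x, 3), round(y, 3))
```

When every unit produces points at a similar density, the merged cloud has no abrupt density changes at the seams between sensors, which is one way to read the convenience Yang Jifeng describes.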

Yang Jifeng also explained why Salon chose rear LiDARs.

Firstly, the most important thing for LiDARs is to provide complete and evenly distributed perception capabilities, rather than assigning abilities to a specific scenario.

Secondly, many scenarios have shown that a rear LiDAR enables better functions and experiences. For example, when reversing, a LiDAR can output the physical extent of the drivable area more directly than a vision system, making difficult scenarios easier. Also, in the smart evasion function, a rear LiDAR lets the vehicle evade early within its own lane when a large number of vehicles are approaching quickly from behind in the adjacent lane.

Based on this set of sensors, Salon believes it has built powerful perception capabilities. In terms of difficult static objects, its autonomous driving system can identify difficult-to-recognize objects such as cones, no-parking signs, road studs, and pillars.

“Overall, we can achieve a leading position at present. In the future, we will handle some smaller target objects and improve some perception capabilities based on more data,” Yang Jifeng said.

Always Have a Plan B

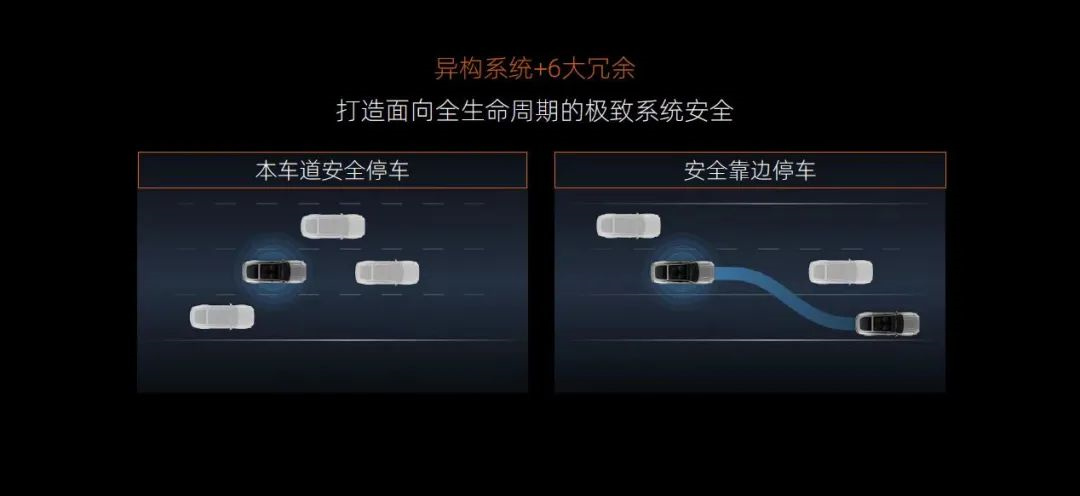

Despite having a strong perception and system capability, Salon Automotive still takes a cautious approach in building an autonomous driving system, with Plan Bs everywhere. They have summarized six redundancies.

These six safety redundancy systems are perception, control, steering, braking, communication, and power supply redundancy.

At the perception level, RoboTaxi is equipped with 4 LiDARs, 7 high-definition cameras, 5 millimeter-wave radars, 4 panoramic cameras, and 12 ultrasonic radars, a set that is redundant by design.

At the control level, RoboTaxi adopts a dual Huawei MDC 610 computing platform, so that even if one platform fails, the system still has sufficient perception and computing capability and can calmly take over or enter a safety strategy. Even if the controller fails, the system can still perform an emergency lane change or enter MRC mode under certain circumstances.

At the communication level, RoboTaxi’s computing platform has both Ethernet communication links and multiple CANFD communication link designs. If any communication link for braking, steering, or power fails, another link can be switched to immediately.
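The failover behavior described for the communication links can be pictured as a priority list in which the first healthy link is used. This is a minimal sketch under that assumption. The link names, the priority order, and the `healthy` flag are hypothetical, and the article does not say how link health is detected or how fast the switch happens.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Link:
    name: str            # hypothetical label for a communication link
    healthy: bool = True  # would be set by some link-monitoring mechanism


def select_link(links: Sequence[Link]) -> Optional[Link]:
    """Return the first healthy link in priority order, or None if all have failed."""
    for link in links:
        if link.healthy:
            return link
    return None


links = [Link("ethernet"), Link("canfd_1"), Link("canfd_2")]
print(select_link(links).name)   # primary link while everything is healthy

links[0].healthy = False         # simulate a failure of the primary link
print(select_link(links).name)   # traffic moves to the next healthy link
```

If `select_link` returns `None`, no path to the actuators remains, and that is the situation the other redundancy layers in the list are meant to make unlikely.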

In terms of power redundancy, when the vehicle experiences a power supply failure, the intelligent driving system can still rely on another power source to maintain independent and safe control.

In terms of steering redundancy, the EPS hardware used in RoboTaxi adopts dual CPUs, dual bridge drives, and dual winding motors. In case of any single circuit failure, at least 50% of the steering assist power can be provided.

Yang Jifeng expounded on the brake redundancy that is extremely relevant to accident avoidance during driving. He said that the IBC+RBC dual-redundancy brake system used in RoboTaxi is the first of its kind in the industry, and can achieve dual safety failure modes of mechanical redundancy + electronic redundancy.

Yang Jifeng said that many earlier vehicles also had dual brakes and dual ESP, but still had difficulty avoiding some accidents. There had been a case in which mechanical and electronic redundancy failed simultaneously, which caused heated discussion. However, the IBC+RBC system, which has both mechanical and electronic redundancy, “can be regarded as the only redundant brake solution the industry can find today that meets the requirements of Level 3 and Level 4 autonomous driving.”

Overall, the RoboTaxi intelligent driving system is designed for the entire lifecycle, including Level 3 and even Level 4 functions, so RoboTaxi must actively adopt the latest hardware and software. However, until Level 3 is achieved, human-machine co-driving is an inevitable transition stage. How can advancement and safety both be ensured during this transition?

Therefore, RoboTaxi’s strategy is to create a driving experience that not only has advanced AI, but also has reliable and validated functions that provide safety guarantees.

In the entire technology stack of intelligent driving, RoboTaxi ensures safety with a large amount of redundant architecture design. In the future, when the safety boundary is clearer, RoboTaxi will converge the technology stack and achieve better balance in safety, function, and cost.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.