The day after the Ideal L9 launch event, Ideal Auto held a media communication meeting. Before the Q&A session, as usual, the heads of several departments were invited to give a basic introduction to the L9. As the introduction progressed, however, I found that this was less a “communication meeting” than a hardcore Ideal “technology day”.

The list of speakers offers some clues. Liu Liguo, Gou Xiaofei, and Lang Xianpeng all hold the title of Vice President, are responsible for “Vehicle Electrification”, “Intelligent Space”, and “Intelligent Driving” respectively, and report directly to chief engineer and co-founder Ma Donghui. “This is the first time that the leaders of the three core technology teams of Ideal Auto have appeared together at an event.”

Until now, Ideal’s public image has rested on precise product positioning. Its technical innovations were not prominent, and it was generally a step behind XPeng in cabin intelligence and driver assistance.

After this launch, however, I believe many people will revise the view that “Ideal wins by precise positioning, not technology”. At this communication meeting we also saw Ideal’s thinking on the future of in-car space interaction and on the development path of ADAS.

How does Ideal understand the space inside the car?

First, one detail is worth noting: “the development of intelligent space within Ideal has already become an independent first-level department”, and it is named “Intelligent Space” rather than the more common “Intelligent Cabin”. This change in priority also indicates that the “in-car” experience has become Ideal’s new focus.

Before the official introduction, we need to align our understanding. Although the car is a means of transportation and its purpose is to take us from point A to point B, the cabin is also a “space”. You can think of it as a “mobile space”, and I can also say it is a “transportation tool with a small space”.

Before the emergence of electric cars, the car was more defined as a “transportation tool with a space”, but after the emergence of electric cars, the car is more like a “mobile space”.

The former has more tool attributes, while the latter has a broader concept of space.

Everyone hopes that the space inside the car is larger and more comfortable. This is also why Chinese people like SUVs, and sedans with an L in their names. In higher-end models such as Mercedes S and BMW 7 Series, the rear seats are made more comfortable, and even can achieve a half-reclining sitting posture in the car.

However, the electricity in fuel-powered cars is not plentiful enough. The 12V small battery can only support basic needs for a short time. If you want to use air conditioning and audio for a long time while staying in the car, the engine needs to be started for a long time to supply power by burning fuel. There is no problem with this while driving, but it is not environmentally friendly and the fuel consumption during idle also leads to high costs for every additional minute spent in the car.

In this context, no one wants to stay in the car. The definition of a car is just a means of transportation, and the boundary between a car and a house is very clear. Therefore, the development direction of all car manufacturers is to ensure the comfort of vehicles while driving, without considering the functionality and comfort of vehicles in a stationary state.

However, when the energy form of the vehicle becomes electric and comes with a super large energy storage battery, in theory, your car cabin is the same as your home, with a plentiful supply of electricity. Therefore, the car cabin has become a rare private and independent space.

In theory, what you want to do at home can also be done in the car, the only difference is in decoration and space limitations.

We can also see that XPeng has begun trying to expand the functionality and comfort of the vehicle at rest on the P5, adding a refrigerator, an air mattress, a projector, and more.

XPeng’s attempt is very bold, and in my opinion there is nothing wrong with the idea. In practice, however, it has not won over many people.

The reason is not that people have no desire to watch movies in the car. The question is whether the convenience and experience of watching in the car beat watching at home, and whether that is worth giving up some of the vehicle’s own functionality.

For example, the time it takes to unpack and repack the air mattress, or to set up and put away the projector, is enough for a round of Honor of Kings, and the packed air mattress takes up almost half of the trunk.

Therefore, the first step to having a good in-car experience is to have a comfortable space.

How to make the space inside the car more comfortable?

The two points summarized by Liu Liguo are “larger space” and “comfortable seating”. These two points are actually everywhere in our daily lives. The biggest difference between second class and business class on high-speed trains is a larger space and more comfortable seats, and the same is true for business class and first class on airplanes.

To make the space larger, Liu Liguo’s team did not simply increase the size of the car body, but improved the utilization rate of the entire vehicle space.

In the development of the entire vehicle, each segment of the car body has its own code. L103 is the entire vehicle length, L10 is the mechanical space length, and passenger space is L1. On the L9, the passenger space ratio (L1/L103) is 66.33%.

In terms of actual experience, the L9 offers two fists of legroom in the second row and three fingers in the third row, exceeding both the legroom of the BMW X7 and the seating space of the GLS.

To make sitting more comfortable, Liu Liguo’s team built its own China-specific human body model based on the latest body-size standards from the China National Standards Committee. Seat design and material selection are therefore more targeted, and the L9’s seats have been highly praised for comfort. Some reviewers even rated the L9’s third row as more comfortable than the second row of the Tesla Model 3.

The next step in making the space more useful is to make it “intelligent” based on everyone’s willingness to stay inside the car.

How to make the interior of a car more useful?

I have long looked forward to smart electric cars, hoping to experience something new in the cockpit, because in my view the new forces, free of organizational baggage, can be bold and innovative and so create disruptive cockpit experiences.

However, after looking at many new cars in 2021, this expectation always ended in disappointment. Only recently, in the Ideal L9, did I feel that sense of disruption again.

But on second thought, does Ideal have any great innovations? Apart from adding 3D ToF and gesture control, what else is there? Seemingly nothing. So what did Ideal do? It raised a number of basic experiences from a score of 60 to 90: bigger space, more comfortable seats, better screens, better speakers…

Based on these gradual changes in details, the cabin experience has undergone a qualitative transformation.

And this process is remarkably similar to the development process of the iPhone. What have we experienced from iPhone 4 to the current iPhone 13?

We have seen iteration in chips, cameras, screens, batteries, network speed, and software capabilities. It is the gradual iteration of these underlying technologies that turns an accumulation of small improvements in experience and function into a qualitative change.

Why do we focus on improving chip computing power? Why do we focus on continuously improving network speed? Why do we need to add new hardware? Looking back, it may seem obvious now, but it is the R&D personnel’s thinking about the future at the beginning of product development that ensures that the final product development does not go off track.

Although it is difficult to predict the final form of in-car space today, just as we cannot claim in 2022 that the phone has reached its final form, the direction of development is clear.

If we want to make the cabin more powerful, the car must know more information, so the way the car obtains information is crucial.

In the face of this problem, XPeng pioneered full-scene voice. Instead of treating voice as a tool, it is regarded as an interaction mode. With the drive of this concept, the voice assistant’s ability range and interaction efficiency have qualitatively changed compared to before.

The core here is to reduce the cost of human instructions by improving the vehicle’s ability to obtain information.

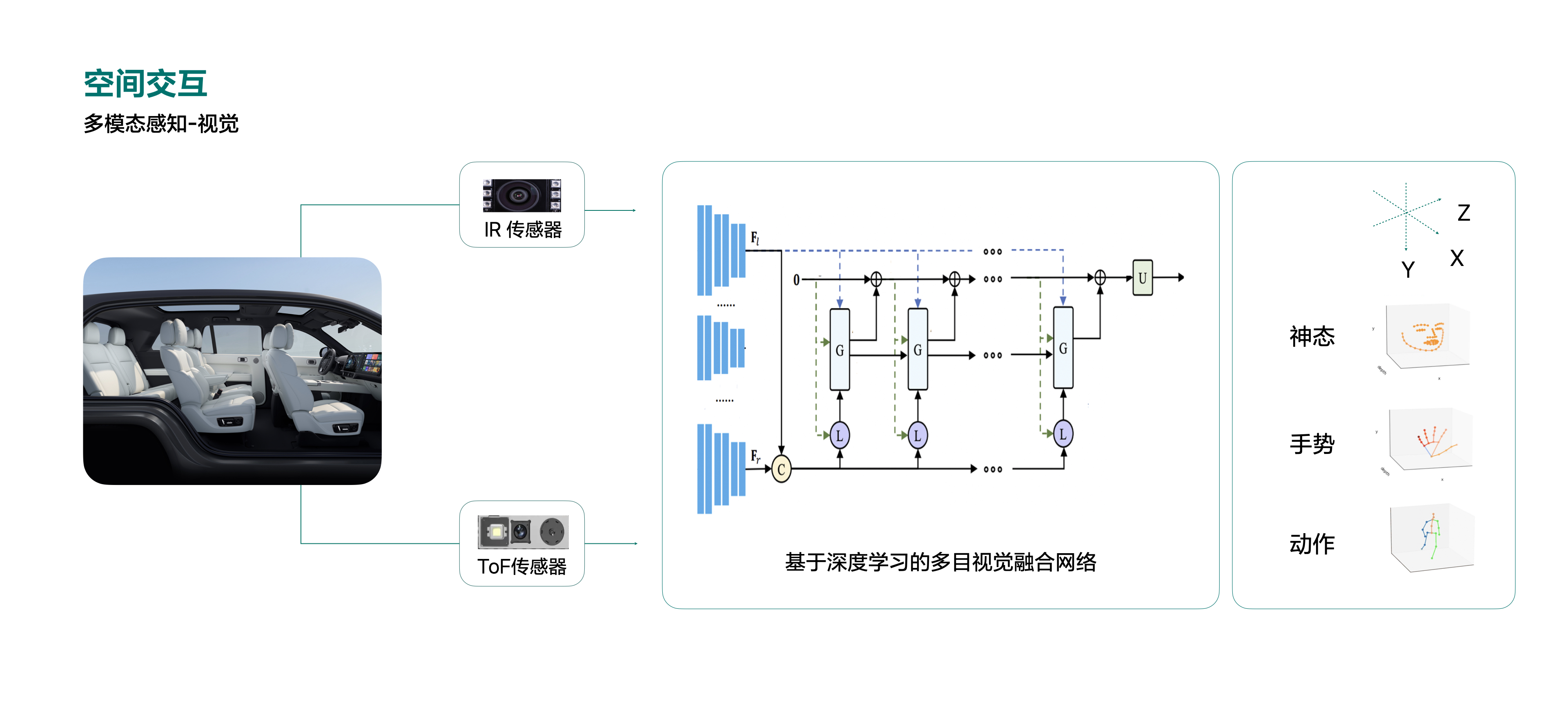

With the L9, Ideal proposed the concept of “three-dimensional spatial interaction” for the first time.

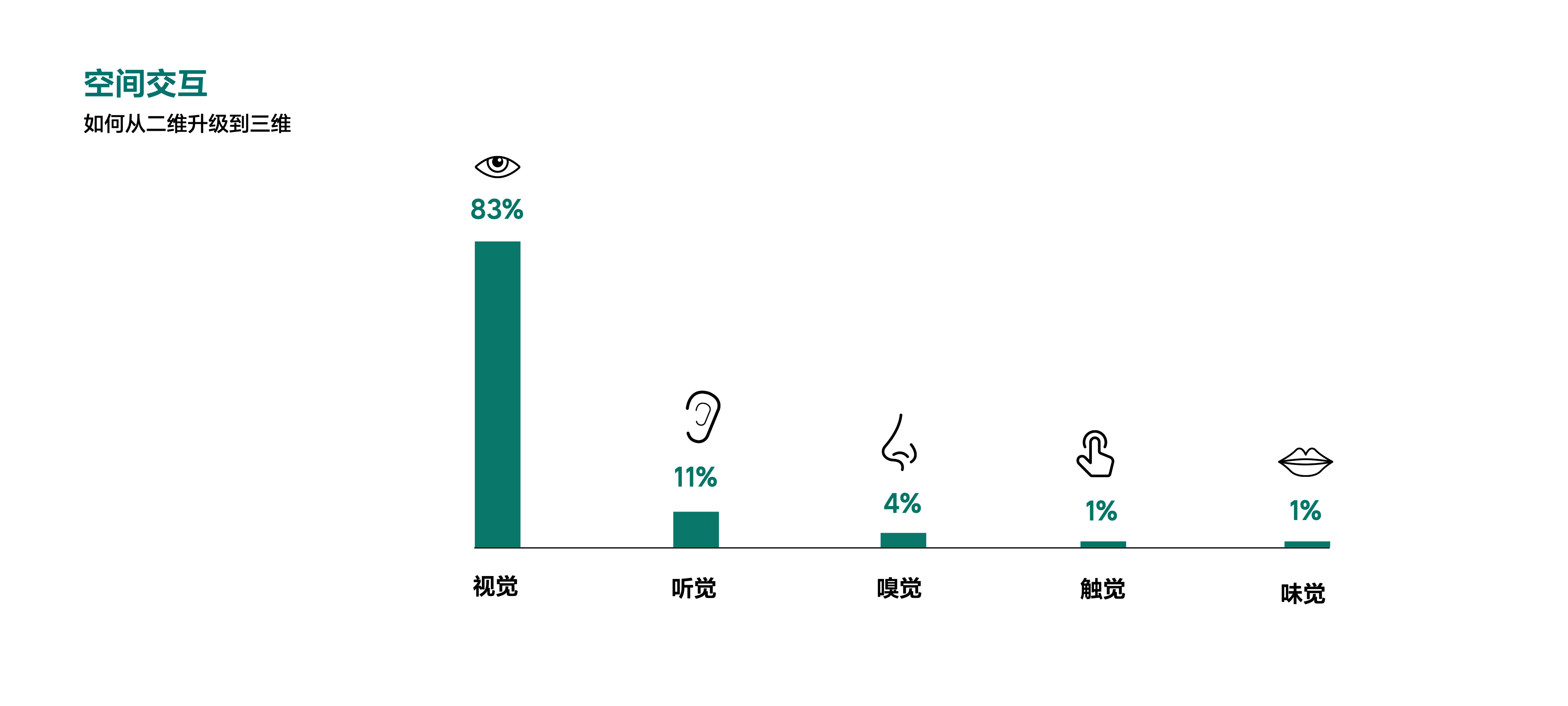

At the communication meeting, Gou Xiaofei shared a set of data. Among the five senses of humans, vision has the largest amount of information, reaching 83%, while touch and taste have the least, only 1%.

However, the electronic products we commonly use, such as mobile phones and computers, obtain information mainly through “touch” via touch screens and touchpads. Even though most devices now have voice assistants and can hear, the information they can obtain is only 12%. This means that what the system can do depends entirely on how much you convey through touch and voice.

In Gou Xiaofei’s opinion, interaction in the car cabin is still borrowing from traditional consumer electronics: the mouse and touchpad in the early stage, the touch screen in the middle stage, and voice at the current stage.

However, the biggest difference between the car cabin and consumer electronics is that the cabin is a three-dimensional terminal, while we interact with it in a two-dimensional way in consumer electronics. This mismatch directly limits the imagination space of the three-dimensional terminal in the car cabin.

When we go from two dimensions to three dimensions, adding another dimension, the most intuitive feeling is that when someone walks towards you, in the two-dimensional world, the image simply becomes larger. In the three-dimensional world, not only can we feel a clear sense of distance, but the sound we hear will also be louder, containing a lot of information.

Going back to the L9, the so-called “3D spatial interaction” seems to only add an IR camera to the front and a 3DToF sensor to the back seat, implementing the gesture control of the rear screen and “open this” function combined with voice control.

(Note: when you point a finger at a window or the sunshade and say “open this”, the corresponding one opens.)

Although gesture control for the rear seats is not particularly useful, and I, accustomed to physical buttons and voice commands, do not find much value in “open this”, I still believe this is a very imaginative way of interacting.

Once the vehicle gains visual capabilities (IR cameras, 3D ToF sensors) in addition to voice and touch, the amount of information it can obtain reaches up to 95%, meaning a gap of roughly 100 times in perceiving and understanding the physical world compared with a conventional computer.
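The arithmetic behind these percentages can be checked with a short sketch. The shares for vision and touch are the figures quoted at the meeting; the 11% for hearing is not stated directly and is inferred here as the 12% for touch plus voice minus the 1% for touch. Reading the “100 times” figure as the ratio between 95% and the 1% of a touch-only device is likewise an interpretation, not something the speaker spelled out.

```python
# Shares of information by sense, in percent.
# "vision" and "touch" are quoted figures; "hearing" is inferred (12% - 1%).
SENSE_SHARE = {"vision": 83, "hearing": 11, "touch": 1}

def coverage(channels):
    """Total share (in percent) of information reachable through the given channels."""
    return sum(SENSE_SHARE[c] for c in channels)

touch_only = coverage(["touch"])
touch_and_voice = coverage(["touch", "hearing"])
with_vision = coverage(["touch", "hearing", "vision"])

print(touch_only, touch_and_voice, with_vision)  # 1 12 95
print(with_vision / touch_only)                  # 95.0
```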

Looking back at the “open this” function, although it is difficult for us who are already familiar with touch and voice commands to perceive the value of this function from the perspective of information communication, the cabin has already begun to actively cater to your intentions.

To illustrate this, imagine a baby who wants to eat an apple on the table. Before this feature, the baby could only take it by themselves (touch to open) or clearly express their intentions through speech and let their mother help them (voice control). Now, they only need to point at it with their finger (gesture control) and say “this”, and they can eat it.

From this perspective, Ideal’s idea holds up, and what needs to be done next is to further improve the vehicle’s perception. In other words, Ideal needs to optimize the perception capabilities of the IR and ToF sensors and the pick-up ability of the microphones in order to capture more visual (depth) and auditory information.

This requires strong AI training capabilities. Li Xiang revealed that Ideal has a total of 4 AI teams, with the largest serving Lang Xianpeng’s autonomous driving department and the second largest serving Gou Xiaofei’s intelligent space team.

However, to truly obtain that 95% of the information, the system also needs stronger understanding abilities.

The biggest advantage of touch interaction is that all commands are clear. However, with the introduction of full-scenario voice, fuzzy instructions, and gesture control, the demand for the system’s understanding ability has also increased.

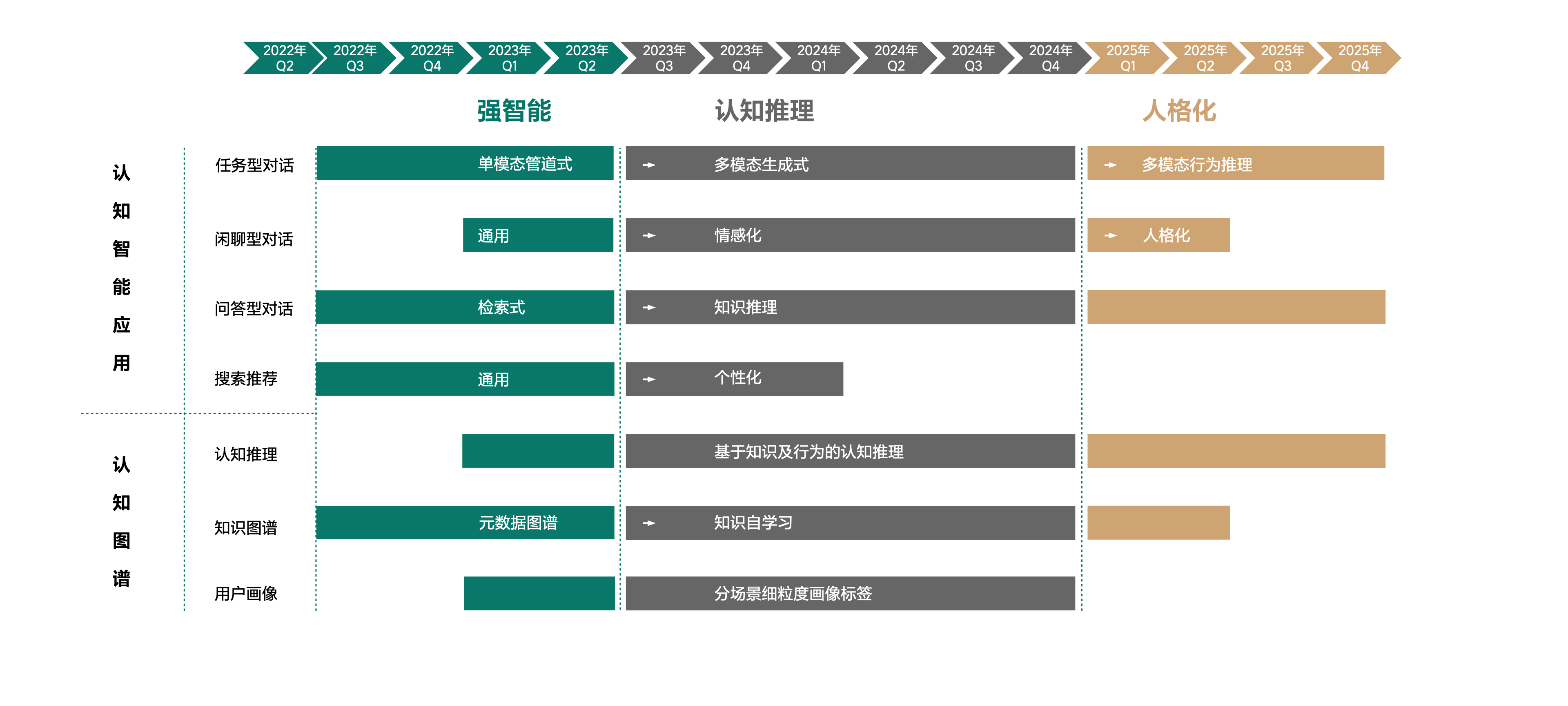

Ideal’s solution is to build a cognitive map. Gou Xiaofei revealed at the communication meeting that Ideal will proceed in three stages:

- Stage One Strong AI (2023 Q2)

- Stage Two Cognitive Reasoning (2024 Q4)

- Stage Three Personality (2025 Q4)

In the first stage, the Li Xiang AI Drive team will feed the Ideal Student voice assistant more knowledge, and by the time vehicles are delivered it will have built up all vehicle-related knowledge. This means that any question about the vehicle can be answered by the Ideal Student.

In the second stage, the Ideal Student will gain its own thinking ability through accumulated data, be able to perform logical reasoning, and enter a self-learning stage. That is to say, it will no longer need to be taught by others. As long as it can connect to the internet, it will search for relevant literature online and learn from the text of that literature.

In the third stage, the Ideal Student will become more personalized in its speaking style, tone, and speech rate, which will grow more and more similar to the user’s.

That is the capability roadmap for the Ideal Student at the “understanding” level. Finally, there is the “expression” level.

In terms of expression, the Ideal Student also caters to the two channels through which humans obtain the most information: visually through 4 screens plus a HUD, and aurally through a 7.3.4 speaker system.

With the addition of the rear screens, the Ideal Student now appears on a different screen depending on where it was woken, providing a stronger sense of space. With the 7.3.4 speaker system, it can also place sound where sound is needed, which likewise strengthens the sense of space.

From this perspective, as technology advances, it cannot be ruled out that holographic projection will appear in Ideal’s cabins in the future.

Ideal Strikes Back in Intelligent Driving

If you were asked to name the two strongest known companies in assisted driving, the first names that come to mind would be XPeng and Huawei.

At the 2021 NIO ET7 launch event, NIO announced that it had officially shifted to self-developed full-stack technology. In addition, the new car carries the most powerful set of assisted driving sensors currently on the market, showcasing NIO’s commitment to autonomous driving.

By contrast, although the 2020 Ideal ONE, based on a supplier solution, performs well, it lacks rear-facing radar and has limited potential for advanced features. To address this, Ideal had to install new cameras and add five millimeter-wave radars on the upgraded 2021 model, which barely matches NIO’s production model from 2018 and is inferior to XPeng’s 2020 product.

Although most consumers’ purchase decisions are not yet affected by assisted driving capabilities, Ideal’s enthusiasm for this technology had been lukewarm at best. This was due both to subjective planning mistakes and to objective funding constraints.

Media reports showed that Ideal had only 1 billion yuan in cash at the end of 2018. Under such conditions, the Ideal ONE could only choose a more cost-effective supplier solution. However, the situation started to change after Ideal raised funds through its listing on Nasdaq in 2020, and in 2021, Ideal decided to start the journey of self-development.

Compared with the cabin, the goals of assisted driving development are much clearer: broader functional coverage and less driver involvement. The technical paths taken to reach these goals, however, vary.

Ideal’s autonomous driving research and development can be summarized into three key factors: algorithmic capability with high upper limits and safety thresholds, a significant and effective data sample, and a closed loop development process, which complement one another.

Algorithmic Capability with High Upper Limits and Safety Thresholds

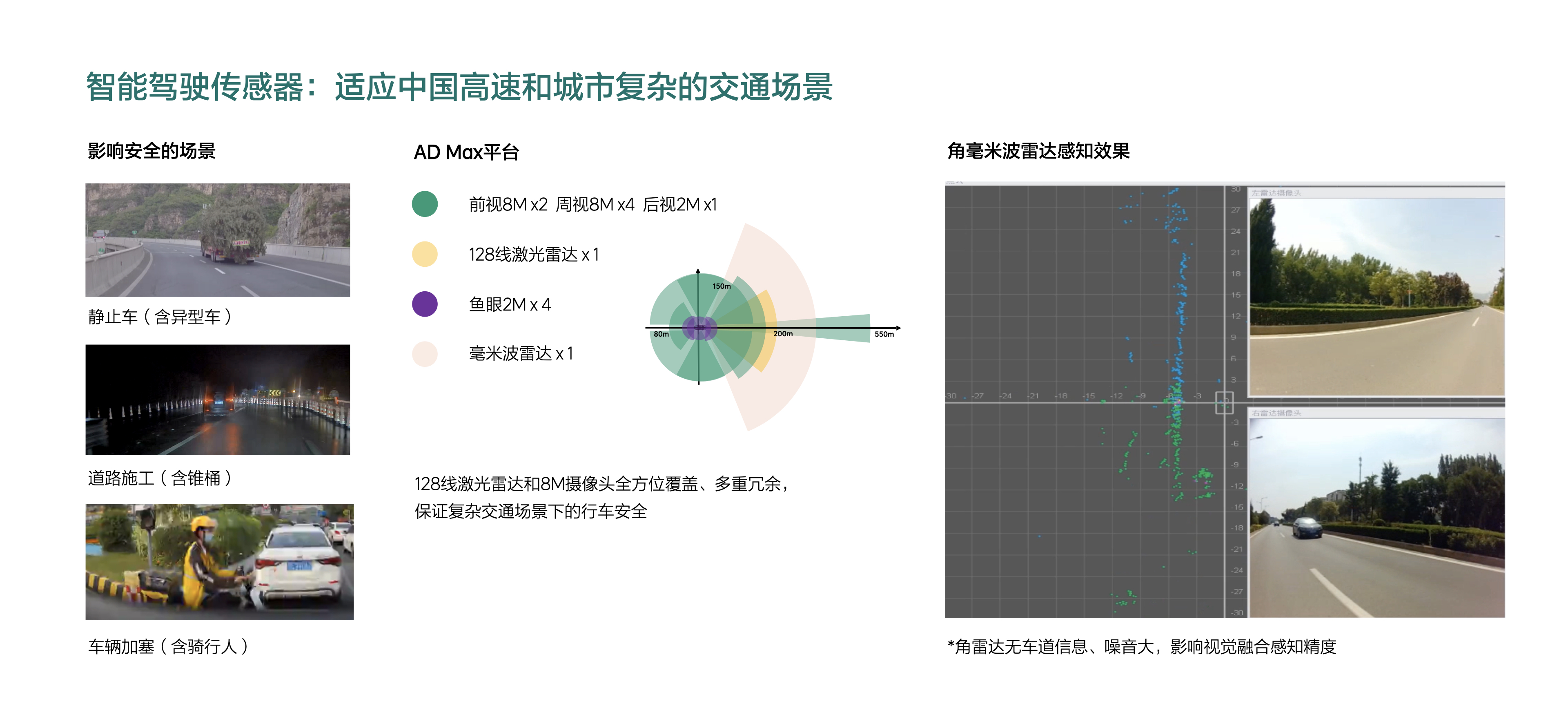

Let’s start with the Ideal L9’s assisted driving hardware:

- 7 assisted driving perception cameras (6 × 8 megapixels, 1 × 2 megapixels for the rear view)

- 4 360-degree surround-view cameras

- 1 LiDAR

- 1 forward millimeter-wave radar

- 2 Nvidia Orin-X chips (508 TOPS of computing power)

At a time when the industry is piling on sensors, the Ideal L9’s choice of assisted driving sensors is exceptionally restrained: it adds only one LiDAR and one millimeter-wave radar on top of its seven ADAS perception cameras.

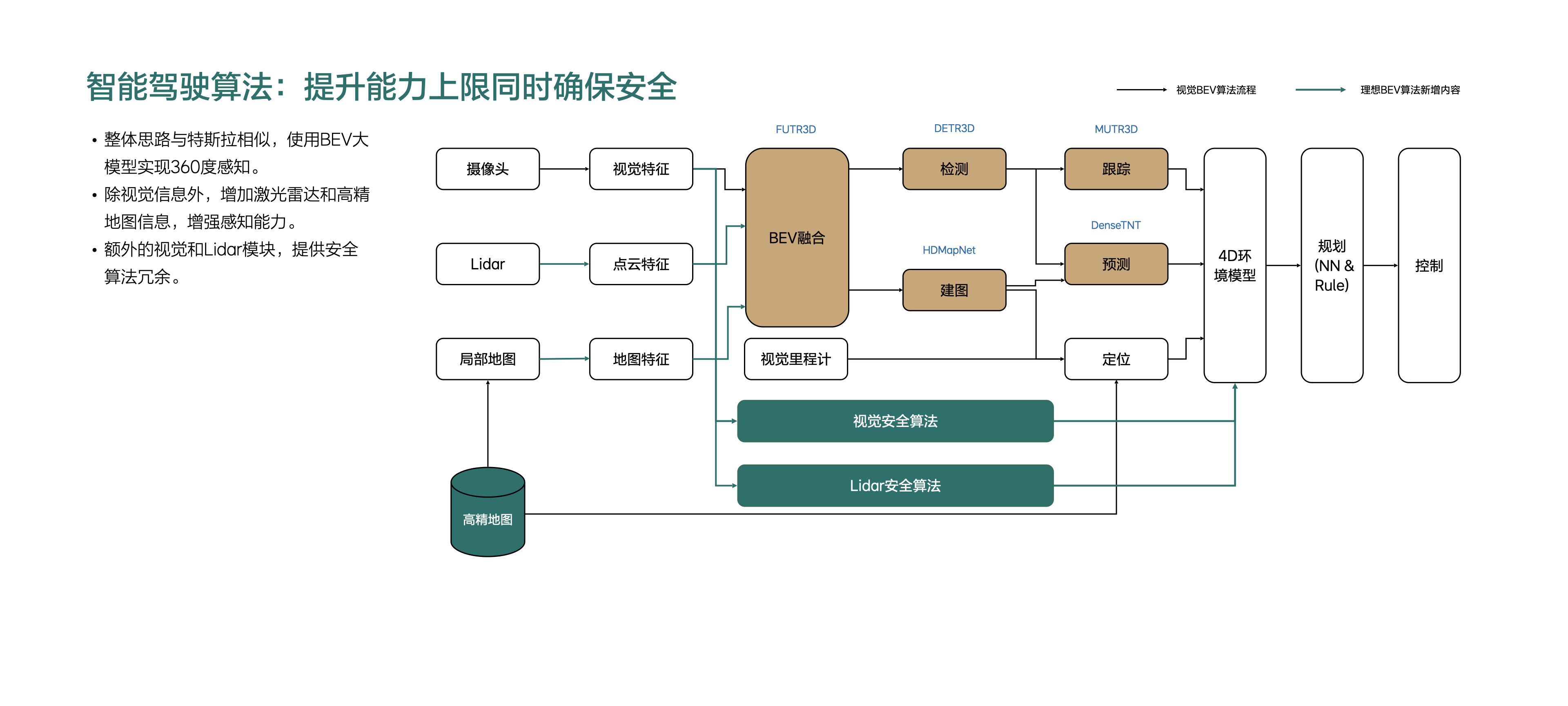

It is apparent that Ideal added only the millimeter-wave radar and the LiDAR for supplementary perception and redundancy at the front, and uses vision alone for side and rear perception, converging with Tesla’s pure-vision approach.

During the communication meeting, Lang Xianpeng revealed that Ideal has also adopted the same 4D BEV framework model that Tesla currently uses. The biggest advantage of this model is that it can stitch the views of the 7 cameras around the car into one complete picture and add time-dimension information to achieve the 4D effect.

Compared with the traditional single-camera output perception results, BEV fusion greatly improves the amount of perception information.

The traditional approach of summarizing the perception results output by each single camera is like having 7 people in a car, each of whom can only see limited information from their own angle and direction, with no way of connecting what they see. A sees the front of a car and C sees another part of it; although they are looking at the same car, the information fed back to the center does not reveal that it is one car.

Moreover, when only a small part of the car hood is exposed in the picture, it is difficult for the visual perception algorithm to judge in a timely and effective manner that it is a car.

However, the algorithm under the BEV framework is like a person with 7 eyes, obtaining the picture of 7 angles at the same time, and directly generating a top-down view.

So you can imagine which is more convenient for driving a car: information from 7 directions that covers 360 degrees but is not correlated, or information presented as a single top-down view.
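The value of a shared top-down frame can be illustrated with a deliberately simplified sketch. It is not how a BEV network works (such networks fuse image features, not finished detections), and it is not Ideal’s algorithm. It only shows object-level projection into a common vehicle frame, where two partial sightings from different cameras turn out to be the same object. The camera positions, the `to_vehicle_frame` and `fuse` helpers, and the 1 m merge radius are all hypothetical.

```python
import math

# Hypothetical camera mounts: name -> (x, y, yaw_deg) in the vehicle frame,
# x forward and y left in metres, yaw counter-clockwise from straight ahead.
CAMERAS = {
    "front": (2.0, 0.0, 0.0),
    "left": (1.0, 0.9, 90.0),
}

def to_vehicle_frame(camera, distance_m, bearing_deg):
    """Convert a (distance, bearing) sighting from one camera into vehicle-frame x, y."""
    cx, cy, yaw = CAMERAS[camera]
    angle = math.radians(yaw + bearing_deg)
    return (cx + distance_m * math.cos(angle), cy + distance_m * math.sin(angle))

def fuse(detections, merge_radius_m=1.0):
    """Merge sightings that land within merge_radius_m of an existing object."""
    objects = []  # each entry: {"x": ..., "y": ..., "seen_by": [...]}
    for camera, distance_m, bearing_deg in detections:
        x, y = to_vehicle_frame(camera, distance_m, bearing_deg)
        for obj in objects:
            if math.hypot(obj["x"] - x, obj["y"] - y) <= merge_radius_m:
                # Same object seen again; keep the first position estimate.
                obj["seen_by"].append(camera)
                break
        else:
            objects.append({"x": x, "y": y, "seen_by": [camera]})
    return objects

# Two cameras each see part of the same car; the front camera also sees a second one.
detections = [
    ("front", 5.0, 53.1),
    ("left", 5.06, -52.2),
    ("front", 20.0, 0.0),
]
objects = fuse(detections)
print([obj["seen_by"] for obj in objects])  # [['front', 'left'], ['front']]
```

Taken separately, the first two sightings look unrelated. Once both are expressed in the same top-down coordinates, they fall within a few centimetres of each other and are counted as one car.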

Of course, in order to achieve this, there are also great challenges at the algorithm level.

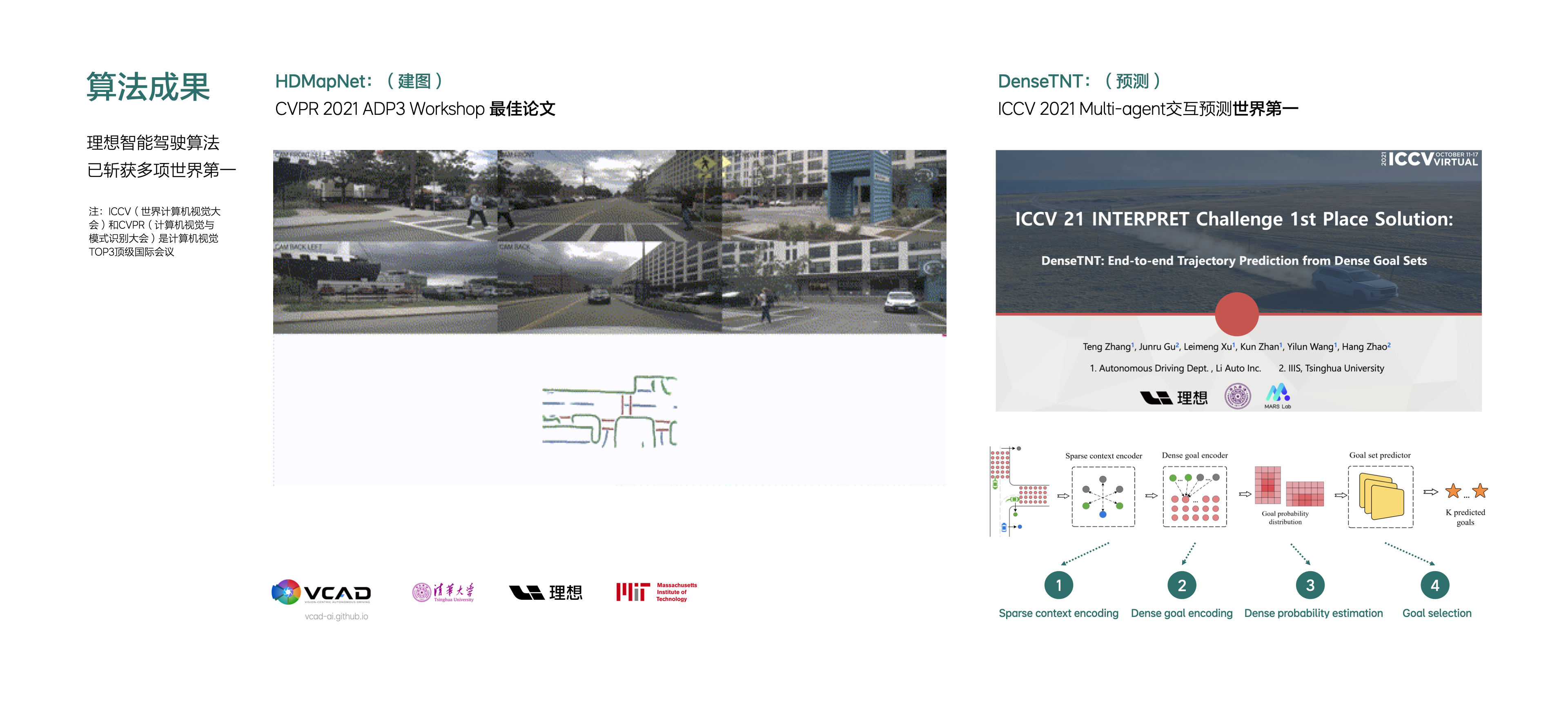

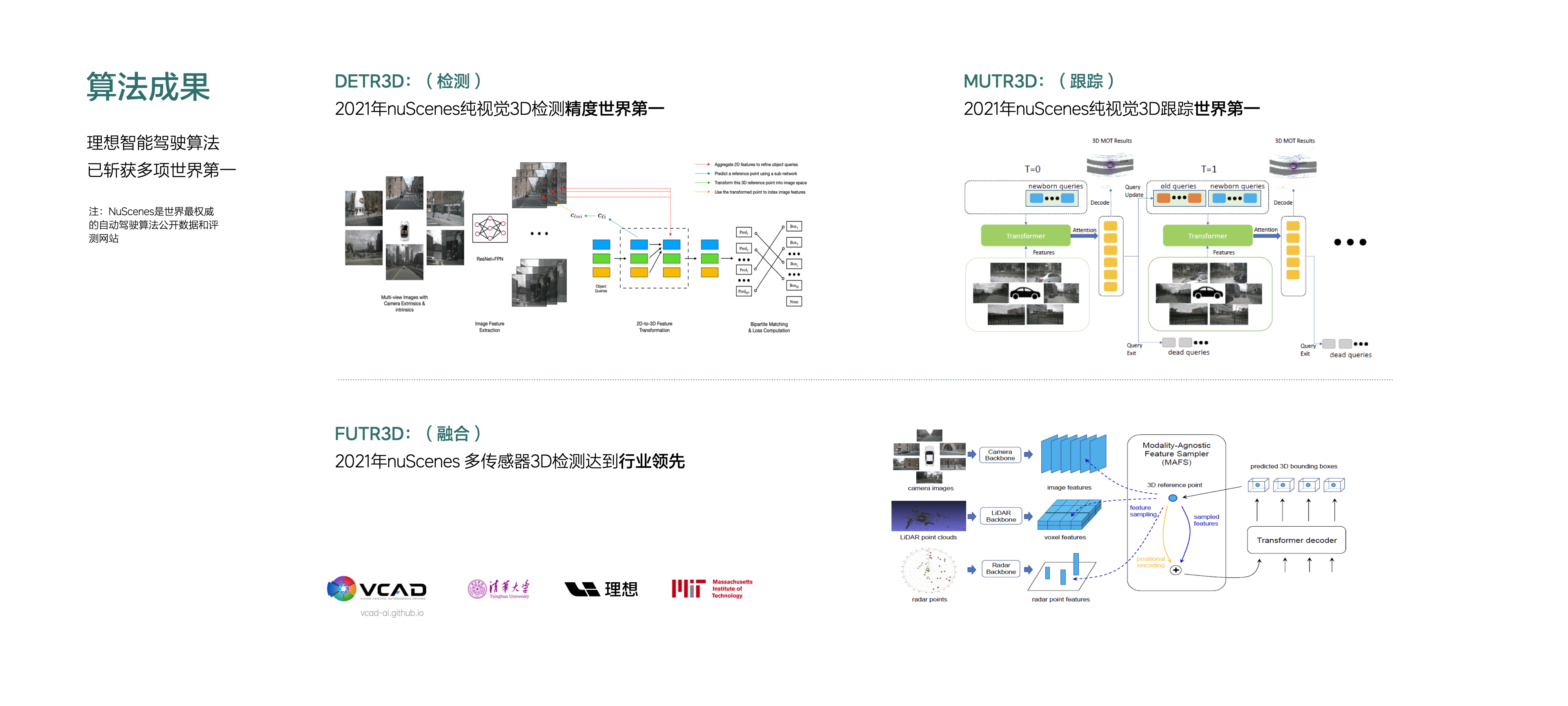

Here Lang Xianpeng shared 5 self-developed algorithms in different parts:

- BEV fusion – FUTR3D

- Object detection – DETR3D

- Object tracking – MUTR3D

- Behavior prediction – DenseTNT

- Real-time mapping – HDMapNet

These 5 sub-algorithms have achieved good results in their respective fields, but I won’t go into detail here. The point is that all 5 have been applied to the Ideal L9, and the L9 will offer highway NOA (navigation-assisted driving) at delivery.

However, there are two notable points about these five algorithms. First, we can see from the hardware that Ideal has dropped the four corner millimeter-wave radars. At a time when everyone is keen on perception hardware, Ideal seems a bit conservative on this point. But unlike the conservative attitude of the 2020 model, in my opinion, Ideal now knows what it wants.

At the communication meeting, Lang Xianpeng displayed the millimeter-wave perception results for a side with empty space and for a side with a car, and said:

“From the figure, we can see that a car is rapidly approaching us from behind on one side. However, it is difficult to locate this car directly from the waves reflected to the radar on the right side (the blue dots and green dots are signals from two different radars), because there is a lot of interference. It is not that this radar has no benefit, but it may end up hurting the accuracy of our fused perception. Therefore, after a comprehensive comparison, and given our high confidence in our vision algorithms (the vision algorithm demonstrated just now), we decided to drop the corner millimeter-wave radars.”

Objectively speaking, Ideal is not yet strong enough to rely on pure vision for forward perception. The requirements for the range and granularity of forward perception in assisted driving are extremely high; after all, this is the direction in which the vehicle actively travels. The two corner millimeter-wave radars at the front could be dropped because there is already a LiDAR with a 120-degree forward coverage angle, which meets the requirements. As for the two corner millimeter-wave radars at the rear, the range and granularity the system requires there are lower, and pure vision is already sufficient.

Another point worth making is that more perception sensors are not necessarily better for autonomous driving. On the one hand, too much data greatly increases the processing load on the computing chips. On the other hand, suppose three sensors report different results for the same direction, like three guards of whom two say there is no car and one says there is: which report should the decision-making system trust?
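The three-guards dilemma can be made concrete with a small sketch. It compares two generic arbitration rules, a simple majority vote and a confidence-weighted vote, and shows that they can disagree on the same reports. The function names, the confidence values, and the 0.5 threshold are hypothetical and chosen only for illustration; nothing here describes how Ideal’s fusion actually arbitrates.

```python
def majority_vote(reports):
    """reports: list of booleans, True meaning 'a car is present'."""
    return sum(reports) > len(reports) / 2

def weighted_vote(reports, threshold=0.5):
    """reports: list of (present, confidence) pairs, confidence in [0, 1]."""
    total = sum(conf for _, conf in reports)
    if total == 0:
        return False  # no usable evidence at all
    support = sum(conf for present, conf in reports if present)
    return support / total > threshold

# Two guards say "no car", one says "car".
plain = [False, False, True]
# Same reports, but the dissenting sensor is far more confident (made-up values).
weighted = [(False, 0.2), (False, 0.2), (True, 0.9)]

print(majority_vote(plain))     # False
print(weighted_vote(weighted))  # True
```

The two rules give opposite answers, so every extra sensor also adds a decision about whom to trust. That is the cost the argument above points to.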

The second point is that Ideal’s BEV fusion algorithm incorporates radar point cloud features and high-precision map features, indicating that Ideal’s driving assistance system still relies on high-precision maps, in line with the current industry trend.

To break free from the constraints of high-precision map suppliers, XPeng and NIO plan to acquire or partner with companies that hold Class A surveying and mapping qualifications. Among the three new forces, Ideal was the earliest to obtain a Class B surveying and mapping qualification. As far as I know, Ideal is also expected to obtain a Class A qualification in the near future.

Therefore, we will have to wait until delivery to see how well Ideal’s self-developed algorithms perform.

A huge amount of effective data samples

Data is the foundation for training visual-based autonomous driving algorithms. The more effective data that the system is fed, the more extreme scenarios it can solve.

Lang Xianpeng was very confident at the communication meeting, saying: “We have already accumulated more than 3 billion kilometers of driving mileage, more than 290 million kilometers of driving assistance mileage, and more than 24.62 million kilometers of NOA navigation-assisted driving mileage. We have extracted 190 million kilometers of effective learning scenes from this driving data. Tesla has the most, with more than 1 billion kilometers, and we are second. We have more than those behind us, such as Baidu, whose data is only in the tens of millions.”

On the 2020 Ideal ONE, a second camera was added alongside the Mobileye camera to collect data in shadow mode. The 2021 Ideal ONE, which comes with driving assistance and NOA as standard, has in turn accumulated a large amount of data recorded while driving assistance was active.

Faced with such a vast amount of data, the first hurdle is to filter, select, and classify the data. Ideal is developing its own automatic data labeling system. Sample boxes will be pre-annotated in the image sequences, eliminating the need for manual annotation. All that’s required is to check the automatically annotated content and make some minor adjustments.

Another interesting point is that LiDAR is installed on the L9 and on Ideal’s automated driving test vehicles, and this labeling system can also annotate the LiDAR point cloud data. For the same scene, the image and point cloud annotations can be adjusted in sync.

After labeling, the second step is to classify the labeled images. There are more than 150 tags in Ideal’s internal system. On the vehicle side, the system records whether an event was a human takeover or a system exit, and on the cloud side it annotates environmental information.

Finally, Ideal’s automated driving R&D engineers can enter scene requirements directly in the back end. For example, a query for all rainy-night road-construction warning samples from May returns 33,739 samples. Once these are retrieved, training and testing can begin.
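A tag-based scene query of this kind can be sketched in a few lines. This is an assumption about the general shape of such a system, not Ideal’s actual back end: the `Sample` class, the `query` function, and the tag names are all hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Sample:
    """One labeled driving sample with its collection month and scene tags."""
    sample_id: int
    month: int
    tags: set = field(default_factory=set)

def query(samples, month, required_tags):
    """Return the samples from the given month that carry every required tag."""
    required = set(required_tags)
    return [s for s in samples if s.month == month and required <= s.tags]

# Hypothetical tag names, standing in for some of the 150+ internal tags.
samples = [
    Sample(1, 5, {"rain", "night", "road_construction"}),
    Sample(2, 5, {"rain", "day"}),
    Sample(3, 4, {"rain", "night", "road_construction"}),
]

hits = query(samples, month=5, required_tags=["rain", "night", "road_construction"])
print([s.sample_id for s in hits])  # [1]
```

The query is only as good as the labels: a scene that was never tagged cannot be retrieved, which is why automatic labeling comes first in the pipeline.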

Closed-loop development process

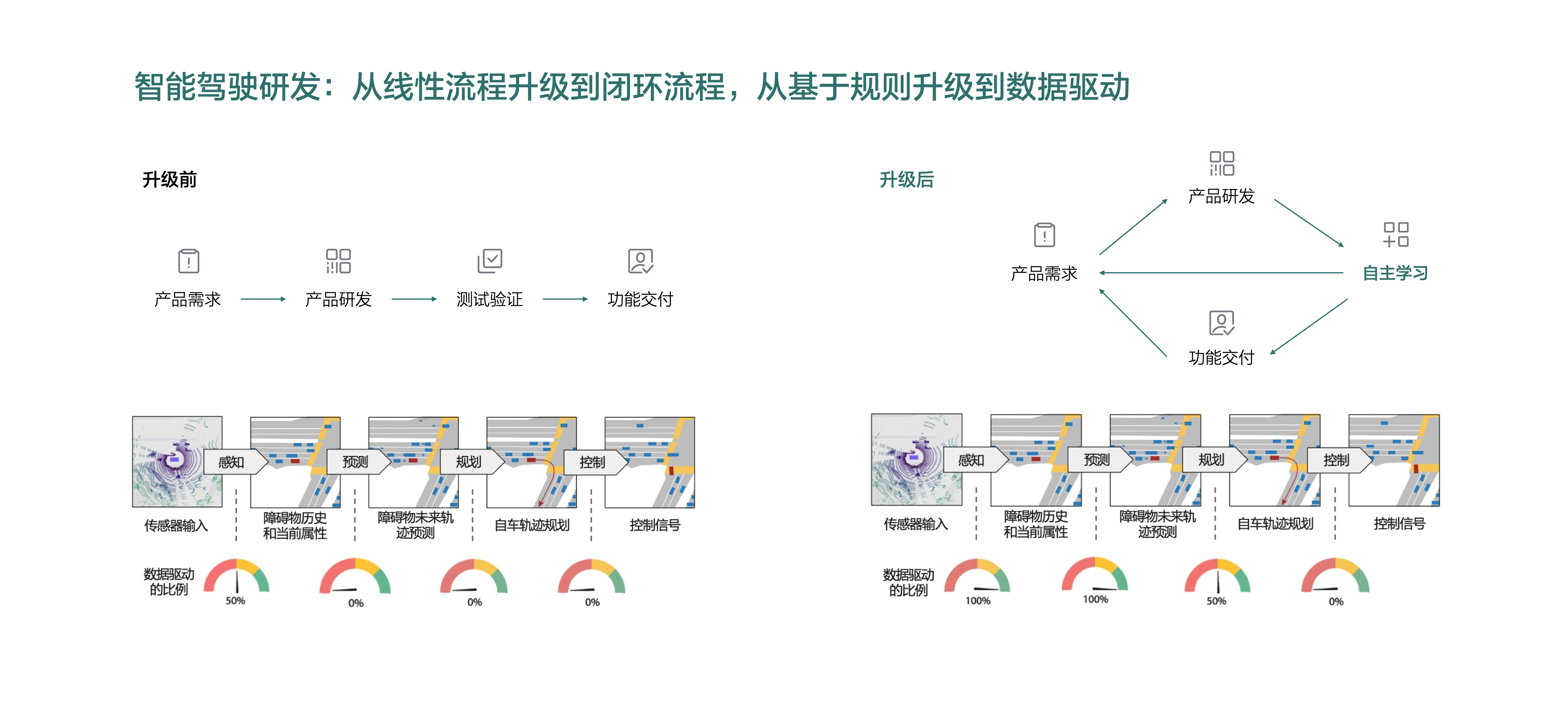

Finally, the closed-loop development process is used. In traditional function development, a linear process is adopted, that is, product requirements-product development-test verification-function delivery.

A rigid development process can ensure the normal progress of system development, but under the continuous and high-speed iteration of assisted driving requirements, this development method has two shortcomings.

- The testing process is relatively long and resource-intensive, which leads to a longer product delivery cycle;

- After product delivery, user feedback cannot flow back to product design.

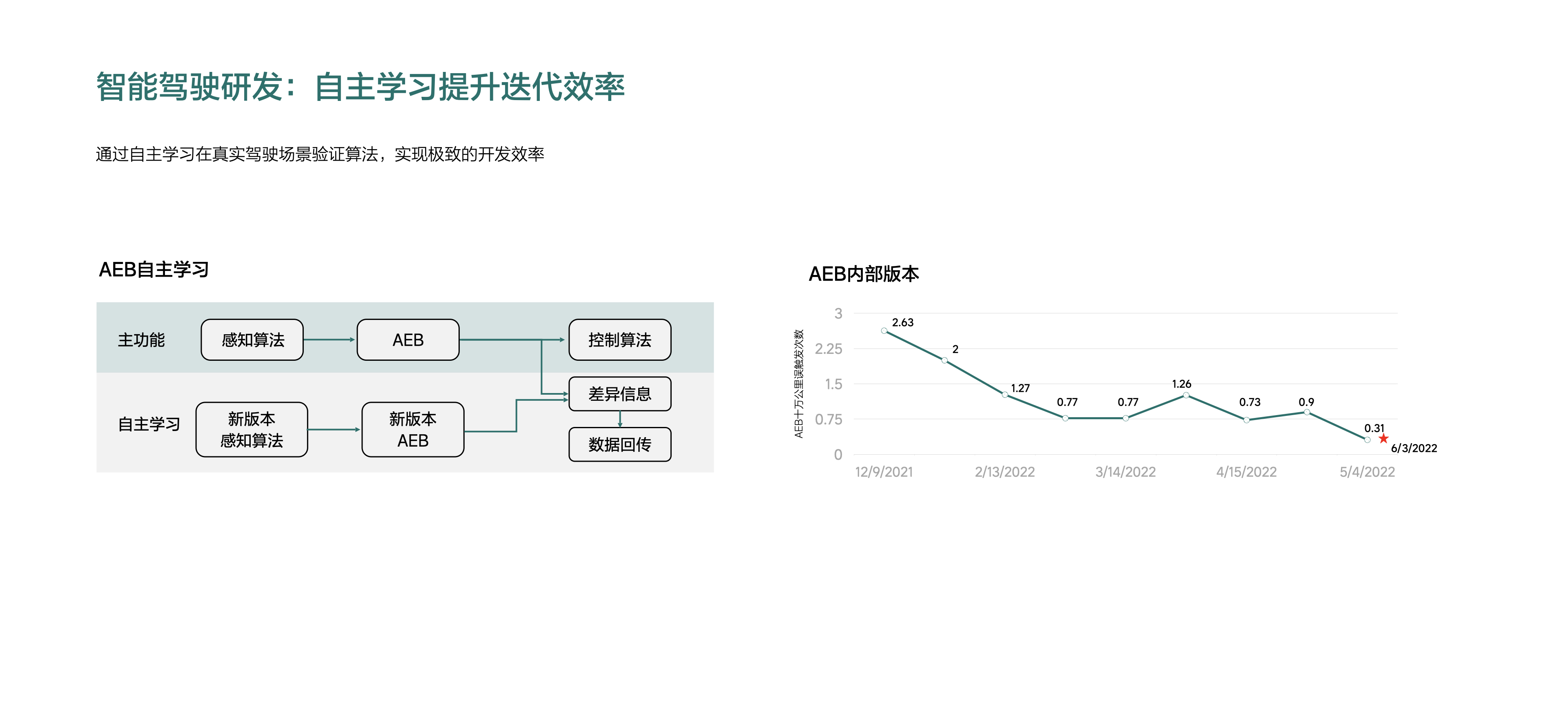

Therefore, Ideal’s development process has changed from the original linear process to a closed-loop process with an added self-learning link.

After the self-learning link was added, the AEB false-trigger rate of the 2021 Ideal ONE fell over six months from 2.6 times per 100,000 kilometers to the current 0.31 times per 100,000 kilometers.
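To put the two AEB figures in proportion, the relative improvement can be computed directly. The snippet uses only the two numbers quoted above; the variable names are illustrative.

```python
before = 2.6   # AEB false triggers per 100,000 km, earlier figure
after = 0.31   # AEB false triggers per 100,000 km, current figure

reduction = (before - after) / before  # fraction of false triggers eliminated
factor = before / after                # how many times lower the rate is now

print(f"{reduction:.1%}")  # 88.1%
print(round(factor, 1))    # 8.4
```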

Obviously, this is a development process that belongs to the intelligent era.

For the vast field of autonomous driving R&D, the ideas shared by Dr. Lang today are just the tip of the iceberg. For Ideal, however, it is clear that the autonomous driving department has already begun to push hard. At the communication meeting, Ideal stated its goals: to launch city NOA in 2023-2024 and city FSD in 2024-2025.

Dr. Lang also stated explicitly that achieving city-level FSD requires, at the most basic level, 10 billion kilometers of data. Meanwhile, Ideal’s autonomous driving team is the first team domestically able to achieve this FSD goal.

Although the autonomous driving function is not yet sufficient to affect people’s car purchase decisions, this technology undoubtedly requires a long-term technical accumulation. When the value of this function begins to undergo qualitative changes from the user’s end, it will be too late to start development.

In Conclusion

This communication meeting, which lasted less than 2 hours, set out Ideal’s thinking in three dimensions: vehicles, space, and intelligent driving.

For the cockpit, Ideal put forward “three-dimensional space interaction”, giving the entire industry a new idea. As for intelligent driving, a fully self-developed NOA function based on the BEV architecture will soon be available on the L9.

For Ideal, the software weaknesses criticized during the ONE era will gradually be improved in 2021.

From this moment on, the new forces have officially entered the second phase of competition.

Author: Liu San

Editor: Dai Ji

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.