Author: The Office

On June 8th, Jidu, a new car company that had been established for 462 days, finally held its first product launch event. Unfortunately, because of the event's format (it was held on Baidu’s Metaverse app Hi-land), too much attention went to elements such as the Metaverse and Baidu's AI digital human Hi-gaga, rather than to the product itself.

To get straight to the point, we would rather focus on the ROBO-01 concept car unveiled that night. We have always believed that, in an era of emerging carmakers, every new car company is bound to face one philosophical question:

Does the world need a new car brand?

When it comes to answering this question, no amount of management rhetoric is as compelling as an actual product. So, less talk, more product.

At last year’s Baidu AI Developer Conference, Baidu Chairman Li Yanhong mentioned that ROBO-01 would embody the three product philosophies of “Free Movement,” “Natural Communication,” and “Self-Growth.”

Correspondingly, we can look at the ROBO-01 along three dimensions: smart driving, the intelligent cockpit, and human-vehicle interaction.

What does “true redundancy” mean?

Before the ROBO Day event, I had high expectations for Jidu’s smart-driving technology. This is largely because one of the main reasons Jidu was established was to carry and implement Baidu Apollo’s intelligent car technology.

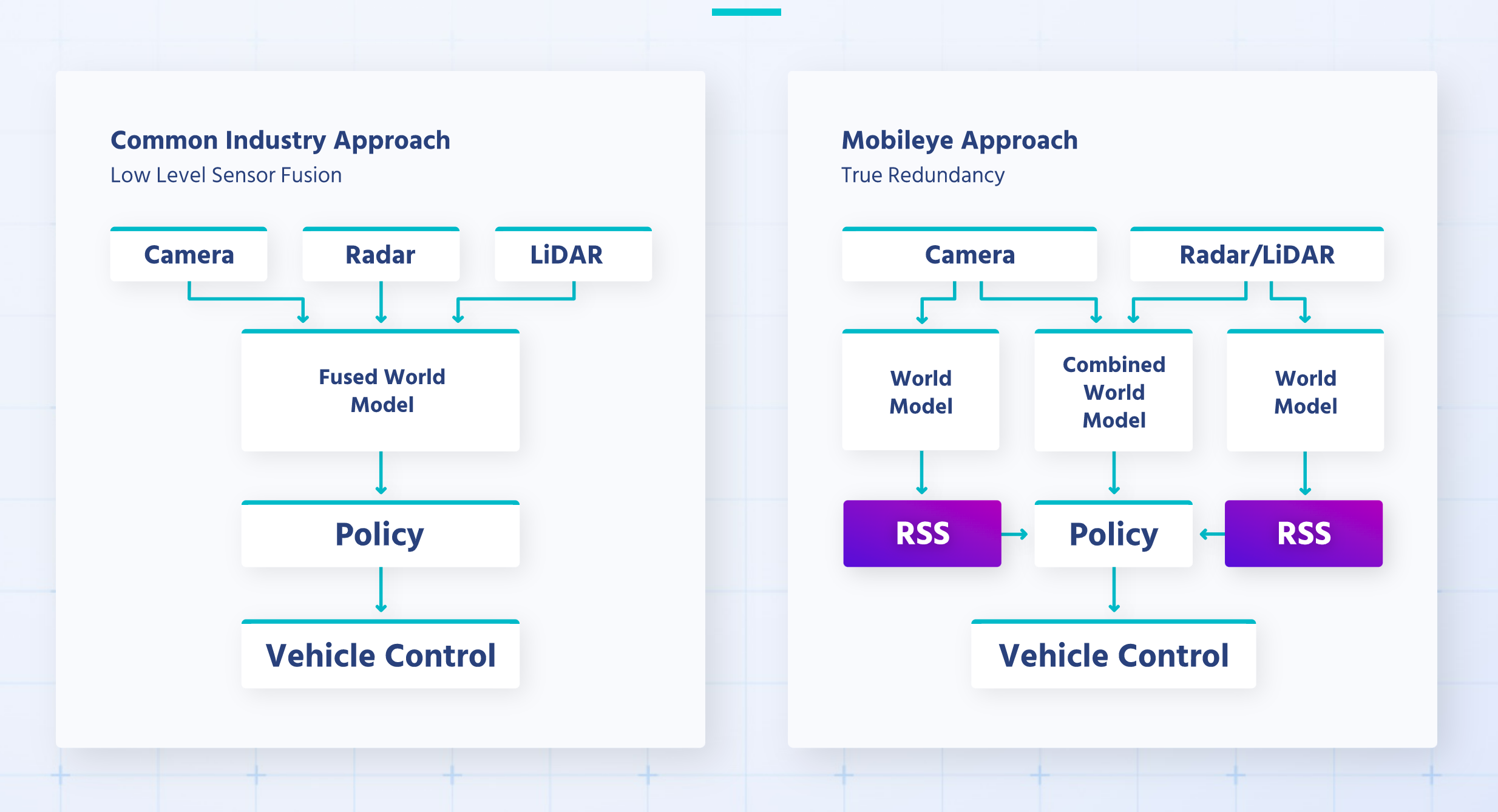

Jidu and Baidu did not disappoint us. ROBO-01 unveiled what is billed as the first “true redundancy” intelligent driving system in China. The concept of “true redundancy” was first proposed by Mobileye, the globally renowned smart-driving giant, and it differs from both the “pure vision” route led by Tesla and the mainstream “multi-sensor fusion” route. “True redundancy” can fairly be called the most difficult technical route in smart driving at present.

Jidu did not elaborate further at ROBO Day yesterday. However, based on Mobileye’s true redundancy, my personal guess is that Jidu may have built two completely independent autonomous driving technology stacks. One is purely vision-driven, with the perception, positioning, and planning-and-control modules all driven by cameras; the other is driven by “LiDAR and millimeter-wave radar fusion.” “True redundancy” means that both systems are integrated into the same car, so that two completely independent autonomous driving systems back each other up, rather than different sensors merely complementing one another on different perception tasks.
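To make that guess concrete, here is a minimal sketch of what "two independent stacks that back each other up" could look like in code. Everything in it is an assumption for illustration: the `Obstacle` type, the `camera` and `lidar_radar` input keys, the thresholds, and the "brake if either stack asks" rule are hypothetical and do not describe Jidu's or Mobileye's actual software.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Obstacle:
    x_m: float  # distance ahead of the car, in metres
    y_m: float  # lateral offset from the lane centre, in metres


Frame = Dict[str, List[Tuple[float, float]]]
Stack = Callable[[Frame], List[Obstacle]]


def camera_stack(frame: Frame) -> List[Obstacle]:
    # Placeholder for a vision-only pipeline (hypothetical input key).
    return [Obstacle(x, y) for x, y in frame.get("camera", [])]


def lidar_radar_stack(frame: Frame) -> List[Obstacle]:
    # Placeholder for a LiDAR + radar pipeline (hypothetical input key).
    return [Obstacle(x, y) for x, y in frame.get("lidar_radar", [])]


def must_brake(obstacles: List[Obstacle],
               lane_half_width_m: float = 1.5,
               stop_distance_m: float = 30.0) -> bool:
    # Brake if any obstacle is in our lane and within stopping distance.
    return any(
        abs(o.y_m) <= lane_half_width_m and 0.0 <= o.x_m <= stop_distance_m
        for o in obstacles
    )


def redundant_decision(frame: Frame, stacks: List[Stack]) -> bool:
    # Each stack decides on its own data; the car brakes if ANY stack asks.
    return any(must_brake(stack(frame)) for stack in stacks)


# The camera stack misses the obstacle, the LiDAR/radar stack sees it.
frame = {"camera": [], "lidar_radar": [(20.0, 0.3)]}
decision = redundant_decision(frame, [camera_stack, lidar_radar_stack])
print(decision)  # True
```

The point of the sketch is that neither stack consumes the other's sensor data, so a miss in one does not propagate to the other. In a fusion design, by contrast, the sensors would feed one shared perception model.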

Mobileye once made a diagram to illustrate the fundamental difference between the mainstream “multi-sensor fusion” route and the “true redundancy” route. Applied to ROBO-01, this means the car can achieve advanced intelligent driving without raising the two LiDARs on its hood.

Clearly, at a time when mainstream “multi-sensor fusion” is still far from mature and Tesla has already taken a huge lead with its “pure vision” route, taking on “true redundancy” is a choice that requires tremendous courage, both for Mobileye and for Jidu today.

However, Mobileye, and now Jidu, believe the great appeal of “true redundancy” is this: intelligent driving built on the “multi-sensor fusion” route is essentially complementary rather than redundant, so raising the rate of safe operation to 99.999999% means verifying billions of hours of safe system operation.

Because “true redundancy” is a genuine backup, New Operation Verification = Pure Vision × Pure “LiDAR and Millimeter-Wave Radar Fusion”. Each system only has to be verified to a much lower level on its own, which can greatly reduce the verification time for safe system operation to tens of thousands of hours.
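The scaling behind this claim can be sketched with some simple arithmetic. This is an illustration under stated assumptions, not Mobileye's or Jidu's published method: it assumes the two subsystems fail independently, that the car only fails when both fail at once, and that validating a failure rate of p per hour takes on the order of 1/p hours. The function names are hypothetical.

```python
import math


def hours_to_validate(failure_rate_per_hour: float) -> float:
    # Rough order of magnitude: observing a rate p needs about 1/p hours.
    return 1.0 / failure_rate_per_hour


def per_subsystem_rate(target_rate: float, n_subsystems: int = 2) -> float:
    # If n independent subsystems must all fail together, the joint rate is
    # the product of their rates, so each may fail at target ** (1 / n).
    return target_rate ** (1.0 / n_subsystems)


# 99.999999% safe operation corresponds to a 1e-8 failure rate.
target = 1e-8

single_system_hours = hours_to_validate(target)
subsystem_rate = per_subsystem_rate(target)
per_subsystem_hours = hours_to_validate(subsystem_rate)

print(f"one fused system:      ~{single_system_hours:.0e} hours")
print(f"each redundant system: ~{per_subsystem_hours:.0e} hours")
```

Under this toy model the absolute figures will not match the article's numbers exactly, since those depend on how the target is defined. What carries over is the square-root scaling: the required validation drops by about four orders of magnitude, to the order of ten thousand hours per subsystem.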

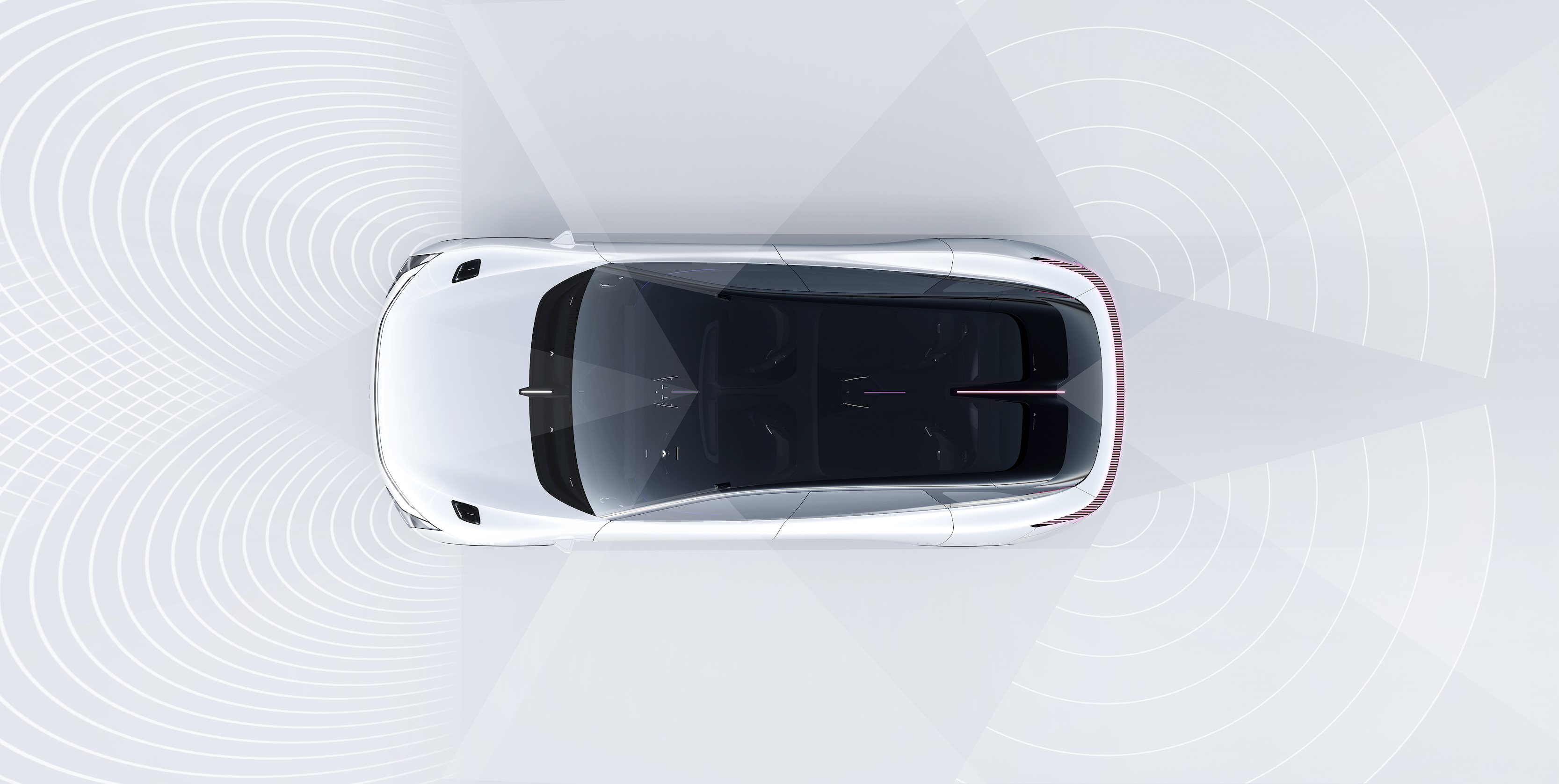

To be frank, when I saw ROBO-01 choose the “true redundancy” route, my expectations rose again, because this means that Baidu will bring to bear 100% of the algorithms, data, and engineering experience it has built up in intelligent driving over the past decade, from the establishment of the Baidu Institute of Deep Learning and an autonomous driving laboratory in 2013 to the engineering accumulated through Baidu Apollo’s iterative development today.

In terms of hardware, ROBO-01 is based on a dual NVIDIA Orin-X platform, equipped with 2 Hesai AT128 LiDARs, 5 millimeter-wave radars, and 12 cameras to provide full perception coverage around the vehicle. Overall, the hardware is not surprising, but it should still be at the forefront in 2023.

Another demonstration of Baidu Apollo’s commitment is that, according to last night’s press conference, when the mass-produced version of ROBO-01 is delivered in the second half of 2023, it will come with point-to-point intelligent driving software that’s ready to use straight out of the box.

Although there is still a year to go before delivery, this is undoubtedly a landmark event that opens the door to high-level intelligent driving for the industry. I’m really looking forward to seeing what Jidu can actually do.

Advanced Intelligent Architecture

Now let’s talk about ROBO-01’s intelligent cockpit. Before Jidu or the industry as a whole achieves completely autonomous driving, the intelligent cockpit has a fundamental impact on the driving and riding experience for the driver and passengers.

ROBO-01 features a screen that runs lengthwise across the entire dashboard – rather than the currently popular three-in-one spliced screens. To put it simply, the border between screens is removed to create an integrated ultra-wide screen. If you need something similar as a comparison, the M-byte released by Byton a few years ago might be a good reference.

However, that’s where the similarity between ROBO-01 and M-byte ends. To drive this large screen, ROBO-01 is the first vehicle in China to adopt the Qualcomm Snapdragon 8295 chip, built on a 5nm process with an NPU computing power of 30 TOPS for AI workloads. By comparison, the A15 chip in the iPhone 13 has an NPU computing power of 15.8 TOPS.

According to Jidu’s plan, this hardware foundation will let ROBO-01 implement a 3D human-machine co-driving map, so that the car is shown running on a 3D map. In the video released by Jidu, the obstacle models perceived by the intelligent driving system are visualized directly on the 3D map. Achieving this level of fused UI relies on cross-domain resource scheduling between the intelligent cockpit and the intelligent driving system. Jidu has developed the JET intelligent architecture, which covers the electronic and electrical architecture and an SOA operating system, to ensure efficient information and resource scheduling between different domains.

According to Jidu, the JET architecture lets ROBO-01 share computing power, perception, and services across domains. This means that in the extreme scenario where ROBO-01's intelligent driving system malfunctions, the computing power of the intelligent cockpit can continue to provide support, ensuring system-level safety redundancy. This is undoubtedly a more advanced intelligent architecture design.
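As a rough illustration of the compute-sharing idea, the sketch below picks a host for a safety-critical task and falls back to the cockpit domain when the driving domain is unhealthy. It is only a sketch under assumptions: the `ComputeDomain` type, the `pick_host` function, the 500 TOPS figure for the driving domain, and the 10 TOPS task requirement are all hypothetical. Only the 30 TOPS cockpit figure comes from the article, and nothing here reflects how JET is actually implemented.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ComputeDomain:
    name: str
    tops: float          # available AI compute, in TOPS
    healthy: bool = True


def pick_host(required_tops: float,
              preferred: ComputeDomain,
              fallbacks: List[ComputeDomain]) -> Optional[ComputeDomain]:
    # Try the preferred domain first, then each fallback in order.
    for domain in [preferred, *fallbacks]:
        if domain.healthy and domain.tops >= required_tops:
            return domain
    return None  # no domain can host the task


# Assumed figure for the driving domain; the cockpit figure is the
# 30 TOPS quoted for the Snapdragon 8295 NPU.
driving = ComputeDomain("intelligent_driving", tops=500.0)
cockpit = ComputeDomain("intelligent_cockpit", tops=30.0)

SAFE_STOP_TOPS = 10.0  # hypothetical requirement for a minimal safe-stop task

normal_host = pick_host(SAFE_STOP_TOPS, driving, [cockpit])

driving.healthy = False  # simulate a driving-domain malfunction
fallback_host = pick_host(SAFE_STOP_TOPS, driving, [cockpit])

print(normal_host.name, fallback_host.name)
```

A real cross-domain scheduler would also have to handle state handover, latency, and functional-safety certification, none of which this toy example touches.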

In terms of intelligent voice, ROBO-01 supports millisecond-level response and can achieve full-scene coverage inside and outside the car. Through the multimodal fusion of visual perception, voiceprint recognition, and lip-reading powered by Baidu AI, Jidu is attempting to create a self-growing intelligent cockpit system.

We are also looking forward to experiencing the cockpit performance of the production version of ROBO-01 this fall.

How difficult is it to define a new interaction?

The concept version of ROBO-01 has no door handles, shift lever, or left and right stalks. Jidu has officially announced that the production version to be released in the fall will remain 90% similar to the concept car, and ROBO-01 has indeed come up with corresponding solutions that define new interactions. We will therefore discuss the issue on the premise that the production car keeps the settings above.

The door handle is not hidden; it has been removed completely. According to Jidu, microphones are arranged outside the car so the doors can be unlocked by voice, with Bluetooth or UWB short-range communication on standby.

Likewise, there is no shift lever. Jidu did not provide a detailed introduction, but one possibility is that, building on the voiceprint and speech recognition mentioned earlier, the driver shifts by voice. Another is the interaction used in Tesla’s new Model S/X: sliding a shift bar on the center control screen to change gear, with physical buttons near the armrest as an emergency backup.

On the subject of removing these physical controls, Jidu’s official press release used the phrase “simplifying complexity.” The history of human-vehicle interaction is indeed a history of simplifying complexity: from manual to automatic transmissions, from the mechanical handbrake to the electronic handbrake, from a physical start button to powering on by pressing the brake pedal. The interaction of driving a car is becoming simpler and simpler.

However, interaction is also the part of vehicle product development that meets the most resistance and is the hardest to push forward. A new interaction must keep learning costs low while balancing safety, simplicity, and efficiency. In simple terms, it should be easy to use.

Because a car is a means of transportation, interaction innovation in the automotive industry always evolves slowly. In addition, regulatory authorities have enacted numerous strict laws and regulations that constrain companies. For example, both ROBO-01’s steer-by-wire system and its retractable LiDAR require close communication with regulatory agencies to win approval. At least for today, neither can go into mass production under current laws and regulations.

However, Jidu is making practical progress in these areas. For instance, on December 1st, 2021, Jidu, along with NIO and Geely, became a joint lead unit for research on the development and standardization of steer-by-wire technology.

Frankly speaking, it is hard to judge Jidu’s interaction design today, or even to say whether these efforts will succeed. But these attempts to redefine interaction show that Jidu hopes, and is trying, to bring fresh blood into the automotive industry beyond its technology and products, which is undoubtedly worth paying attention to.

From a timing perspective, Jidu was founded only 463 days ago. As mentioned earlier, the ROBO-01 mass production version, scheduled for this fall, will retain 90% similarity to the concept car. Therefore, rather than structural innovations such as butterfly doors and gull-wing doors, what I look forward to most is Jidu’s innovation in intelligent driving, the cockpit, and interaction.

In other words, we hope to see a Jidu ROBO-01 that truly delivers “free movement,” “natural communication,” and “self-growth.”

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.