Author | Zhu Shiyun

Editor | Qiu Kaijun

“We are confident that the city NGP we release this year will perform significantly better than FSD,” said He XPeng in an interview with “Electric Vehicle Observer” at the Hundred People’s Forum on March 26. XPeng Motors aims to transition to unmanned driving by 2026.

This came 16 months after He XPeng vowed to leave Tesla CEO Elon Musk “unable to find the East” in China’s self-driving field.

Of course, Tesla has not been idle either.

In April, Musk announced that the FSD (Full Self-Driving) beta could be installed on more than 100,000 Tesla vehicles. According to Tesla’s recently released 2021 Impact Report, Tesla cars using Autopilot driver assistance are eight times less likely to be involved in an accident than the average US car. Musk said the figure would trend toward more than ten times.

However, in China, due to the limited open functions and expensive FSD, the brand label of Tesla is more significant to Tesla owners than the practical value.

At the 2020 XPeng Technology Day, in a comparison test of the XPeng NGP and Tesla NoA navigation driving assistance systems, the XPeng P7 performed stably, while the Tesla Model 3 made a series of inexplicable, unprompted lane changes and took incorrect exits.

This is consistent with repeated comparative tests by Chinese media: while Tesla has started testing fully automated driving in North America, it still struggles on structured roads in China.

Seven years late to the game, can XPeng’s current automatic driving ability surpass Tesla? Is He XPeng just blowing hot air when he says he wants to surpass Tesla?

More importantly, what are the differences and prospects between the two major technical routes of pure visual and perception fusion represented by Tesla and XPeng, respectively, in achieving mass-produced automatic driving goals?

In China, where is Tesla “inferior” to XPeng?

Essentially, Tesla with pure visual perception and XPeng with multi-sensor fusion are two “species” with very different operating modes and habitats.

Pure Visual Perception vs. Multi-Sensor Fusion

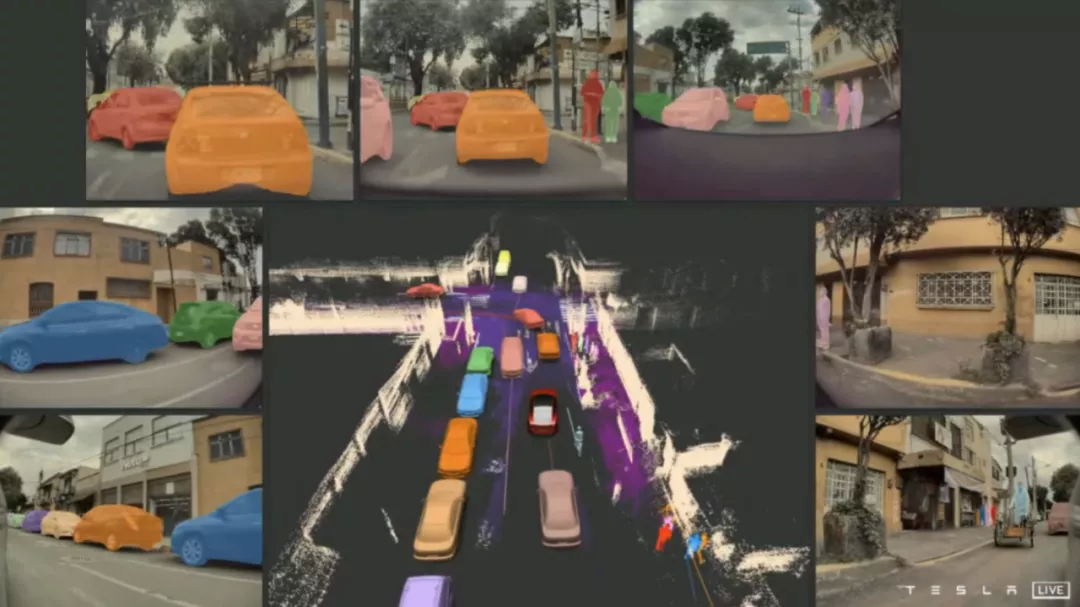

Tesla’s FSD relies entirely on “seeing”. Eight cameras around the vehicle collect RAW format images with a resolution of 1280×960 and a 12-bit depth at a speed of 36 frames per second. The original image data is directly fed into a single pure visual neural network algorithm called “HydraNets” for a series of tasks including image stitching, object classification, target tracking, time-series online calibration, and visual SLAM (simultaneous localization and mapping), all of which enable machines to understand “what I am taking a picture of”, ultimately forming a “vector space” of the road condition over time and space – a virtual mapping of the real physical world.

“The hardest part is building an accurate vector space,” Musk said. “Once you have an accurate vector space, the control problem becomes like a video game.”

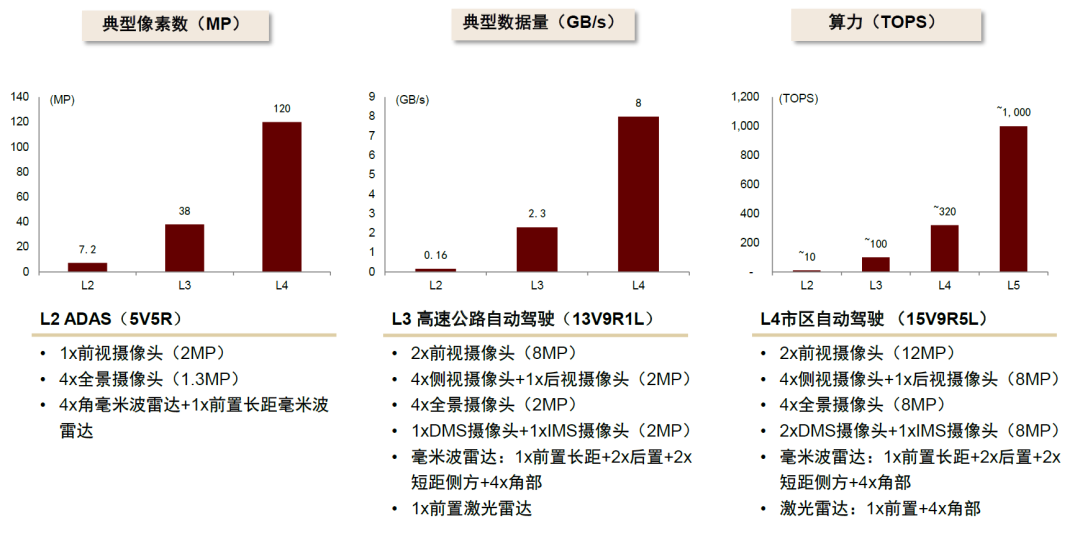

A “vector space” is a prerequisite for all advanced driving assistance systems at L3 and above; the difference lies in how the real-world data is obtained (perceived).

Starting from P7, XPILOT intelligent driving assistance system (hereinafter referred to as XPILOT) has formed a “XPENG-style” fusion perception system: forward three-eye camera + side view cameras on the fenders + front view camera on the mirrors + rear view camera + five millimeter-wave radars + four surround-view cameras + 12 ultrasonic radars + high-precision maps + high-precision positioning.

Starting from P5, XPILOT introduced LIDAR.

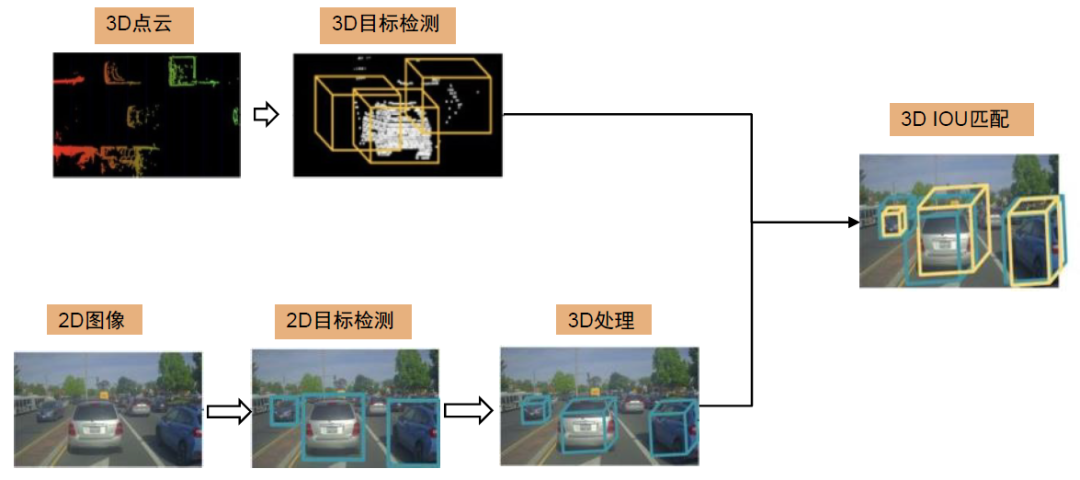

Radars provide direct information about speed, depth, distance, and some material properties, with LIDAR being able to directly virtualize real scenes into 3D point clouds, while cameras perceive many details such as pedestrians, traffic signs, and road markings. Afterwards, fusion algorithm models merge the raw data or perception results of the different sensors into a consistent 4D (space plus time) representation to establish the vector space.

Both solutions have their own advantages and disadvantages.

The visual solution has a great cost advantage. The cost of a single camera is only between 150 and 600 yuan, and the cost of a more complex three-eye camera is usually under 1,000 yuan.

Tesla’s eight cameras cost less than $200 (1400 yuan), combined with their independently developed automatic driving chip, the total cost is less than RMB 10,000.

The multi-sensor fusion solution, in addition to cameras, has a cost of about $50 for millimeter-wave radars, several hundred dollars for solid-state LIDAR, and the cost of high-precision maps. In 2019, Amap announced a standardized high-precision map cooperation price of 100 yuan/vehicle/year. However, the Headwolf Research Institute believed in the report that apart from basic services, high-precision map merchants also charge auxiliary automatic driving service fees, and the industry price may be around 700-800 yuan/vehicle/year.

Cost is a decisive factor in the mass production of technology, but the reliability and achievability of technology are more important.

Distance/depth/speed detection is one of the disadvantages of visual solutions. To build a 3D+time vector space through 2D images, not only is there a delay problem caused by “translating” 2D to 3D, but the image processing algorithm, the number/quality of scenarios used for AI learning, and the hardware computing power also require extremely high standards.

For example, after Tesla canceled millimeter-wave radar last year, the FSD beta autonomous steering function set a maximum speed of 75 mph (120 km/h) and a following distance of at least three cars. Two months later, Tesla raised the speed limit to 80 mph (128 km/h) and lowered the following distance to two car lengths.

A multi-sensor solution has distance/depth/speed data directly provided by radar, ultra-long-range prior information provided by high-precision maps, and centimeter-level positioning ability provided by high-precision positioning modules.

“This helps AI understand, decide, and plan the next action, and provides auxiliary and redundant information sources for perception capabilities based on other sensors,” said Wu Xinzhou, Vice President of Autonomous Driving at XPeng Motors, to “Electric Vehicle Observer.”

Obtaining sufficient redundancy is the main reason why L4 autonomous driving companies such as XPeng choose multi-sensor fusion instead of pure visual routes.

Currently, due to the lack of direct measurement ability for speed and acceleration in pure vision, phantom braking will be a long-term problem that is difficult to eradicate.

In the future, to achieve functional safety and safety of the intended functionality in advanced autonomous driving systems, it is necessary to prevent single-system failures and to provide redundancy against them. “It is currently difficult for a pure vision system to meet the safety requirements of advanced autonomous driving,” an autonomous driving expert told “Electric Vehicle Observer.”

Tesla in the U.S. and XPeng in China

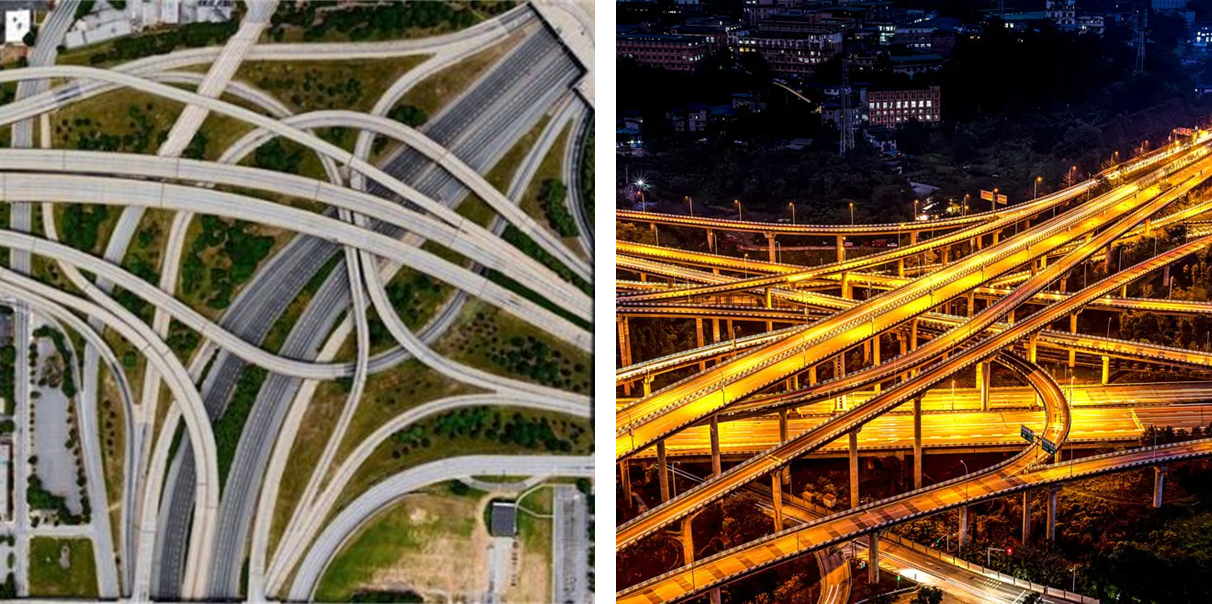

The difference in “habitat” further amplifies the gap in real-world performance between the two technological routes. The complexity of China’s transportation environment far exceeds that of the United States, requiring a large amount of information beyond the line of sight to be provided to decision-making systems in order to successfully navigate through it. It also means that relying solely on real-time, purely visual perception systems is difficult to implement in China.

For example, even on a closed expressway, which is considered a simple scenario, China has more curved roads and sharper turns than the United States, with some sections having two overlapping loops. The route that can be “seen at a glance” is very short-lived. China’s expressways also have longer entry and exit ramps, more frequent changes between virtual and real lanes, and even pedestrians, which should not appear on a closed road in the first place.

Some companies have found in practice that, due to differences in the degree of compliance among traffic participants, autonomous driving systems are nearly 10 times more likely to pass through intersections successfully in the United States than in China.

Without the use of high-precision maps or prior information, Tesla’s FSD, which has a neural network proportion of over 98%, requires massive amounts of high-quality and diverse data to evolve based solely on visual perception.

Therefore, under the feeding of data from North America, the FSD test version has achieved partial autonomous driving capabilities on unstructured road sections. However, Tesla is still unable to run smoothly on Chinese expressways because it currently lacks the ability to use Chinese scenario data.

Due to national data security requirements, Tesla’s data in China cannot be “exported”. This means that the data itself must be stored in Chinese servers, foreign IPs cannot access it through the network, and even people who read the data in China have strict nationality background restrictions.

This means that Tesla needs to “rebuild” its organization in China to adapt to the Chinese scenario.

Firstly, Tesla needs a data and R&D center in China, with “a team of over a hundred staff responsible for data acquisition and model training, as well as a range of supporting organizations such as product managers,” according to a big data engineer at a new automotive company who spoke to “Electric Vehicle Observer.”

It also needs to rebuild its workflow. Since US data cannot enter China, model parameters can only be transmitted from the US, not the data itself. “This has a significant impact on the training of the model, and a new data pipeline (data collection, processing, desensitization, cleaning, labeling, classification, and training processes) must be built in China,” said the big data engineer. This also means a process team of hundreds or even thousands of people.

According to “Electric Vehicle Observer,” Tesla began recruiting personnel for autonomous driving R&D in China in the second half of 2021, although the scale and purpose of the recruitment is still unknown.

Furthermore, like all multinational organizations, overseas branches are not just about money and personnel. “Even with all the R&D being imported, the integration between Tesla China and the US R&D teams might not be smooth,” said Zhu Chen, General Manager of ThoughtWorks IoT Business Unit, in an interview with Electric Vehicle Observer. “The most painful part of international R&D organizations is that the branch and headquarters have different ideas. For example, the Chinese R&D team makes some unique judgments based on China’s national conditions. After submission to the headquarters, whether to approve or not is up to them. This raises questions about which code to use and a series of related issues. In contrast, XPeng Motors doesn’t have to worry about these problems.”

At the inception of XPILOT, the platform was designed to serve the Chinese market.

XPeng Motors adopts a decision-making logic based on high-precision maps, which, when fused with multiple sensors, realizes high-level intelligent driving assistance capabilities for highway navigation within the L3 category, where perception and decision-making algorithm difficulty are relatively low.

Moreover, the Chinese team can specialize in optimizing local scenarios, thus surpassing Tesla’s NoA performance in China in terms of driving experience.

According to reports, XPeng has made perceptual optimizations for “Chinese-featured” scenarios such as lane cutting-in and large cargo vehicles: adjusting sensor layouts and sensing ranges and training more targeted scenarios for XP’s perception model.

To address the disadvantage of “freshness” of high-precision maps, XPeng strengthened its mapping system by modeling and supplementing new road conditions that are not reflected in high-precision maps and by enhancing the accuracy of high-precision maps to better adapt to scenes with extreme road bumps. They also completed the details that are missing from high-precision maps.

It is worth noting that enhancing high-precision maps is not just a technical issue.

In 2021, XPeng invested RMB 250 million to acquire Jiangsu Zhitu Technology to obtain scarce A-level mapping qualifications. This not only legitimizes the map augmentation but also gives XPeng a ticket to self-built high-precision maps.

XPeng is also the first new Chinese car manufacturer to obtain this qualification.

Algorithmic Differences

“Every major hardware change will bring about a major change in software algorithms,” said Yu Kai, founder of Horizon Robotics, during a speech.

The differences in perception hardware schemes are the manifestation of the “differences” between XPeng and Tesla at the current stage, while the more fundamental differences stem from the different “thinking modes” of perception systems – which are critical to deciding whether the mass production of autonomous driving can eventually be achieved in the future.

The “thinking mode” refers to the software algorithm of the autonomous driving system, which mainly consists of perception, decision-making, and control.

- The perception algorithm aims to solve the problem of “what the sensors perceive” through the classification, labeling, and understanding of perceived objects, ultimately building a vector space similar to real-life road conditions on the vehicle side.

- Decision algorithms need to take into account navigation routes, road conditions, the actions of other traffic participants, as well as driving standards such as safety, efficiency, and comfort. In vector space, feasible space (convex space) is first solved, then optimization methods are used to optimize the solution within feasible space to output the final trajectory.

- The control part is responsible for efficient coordination of various components of the chassis system to faithfully execute the decision-making algorithm’s “decisions”.
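The two-stage idea described for decision algorithms (first find the feasible space, then optimize within it) can be illustrated with a minimal sketch. It is a deliberate simplification: instead of solving a convex space, it filters a handful of discrete candidates and then picks the cheapest one. The `Candidate` fields, the weights, and the safety threshold are all hypothetical values chosen for illustration, not anything disclosed by Tesla or XPeng.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    min_gap_m: float      # closest distance to any obstacle along the path
    travel_time_s: float  # time to reach the goal (efficiency)
    max_jerk: float       # peak jerk, used here as a proxy for discomfort


def choose_trajectory(candidates, safe_gap_m=1.0,
                      w_time=1.0, w_jerk=0.5, w_gap=2.0):
    """Return the lowest-cost feasible candidate, or None if none is feasible."""
    # Stage 1: keep only candidates that respect the safety constraint.
    feasible = [c for c in candidates if c.min_gap_m >= safe_gap_m]
    if not feasible:
        return None

    # Stage 2: trade off efficiency, comfort, and safety margin.
    def cost(c):
        return (w_time * c.travel_time_s
                + w_jerk * c.max_jerk
                + w_gap / c.min_gap_m)

    return min(feasible, key=cost)


candidates = [
    Candidate("keep_lane", min_gap_m=0.4, travel_time_s=10.0, max_jerk=0.5),
    Candidate("change_left", min_gap_m=2.0, travel_time_s=11.0, max_jerk=2.0),
    Candidate("brake", min_gap_m=3.0, travel_time_s=15.0, max_jerk=1.0),
]
best = choose_trajectory(candidates)
print(best.name if best else "no feasible trajectory")
```

Here `keep_lane` is the fastest option but is discarded in the first stage because it passes too close to an obstacle; the second stage then prefers the lane change over braking because it costs less time.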

According to “Electric Vehicle Observer”, it was learned in an interview that in the current advanced driver assistance and autonomous driving systems, most perception algorithms already use AI neural networks for perception, and neural networks are also used for search and option convergence in the front-end of the decision-making algorithm. The back-end uses logical judgment algorithms.

So, how big is the difference in software algorithm behind the hardware solution of pure vision and multi-sensors?

Perception algorithm comparison

The perception algorithm that uses neural networks as the main AI mode is the current mainstream mode.

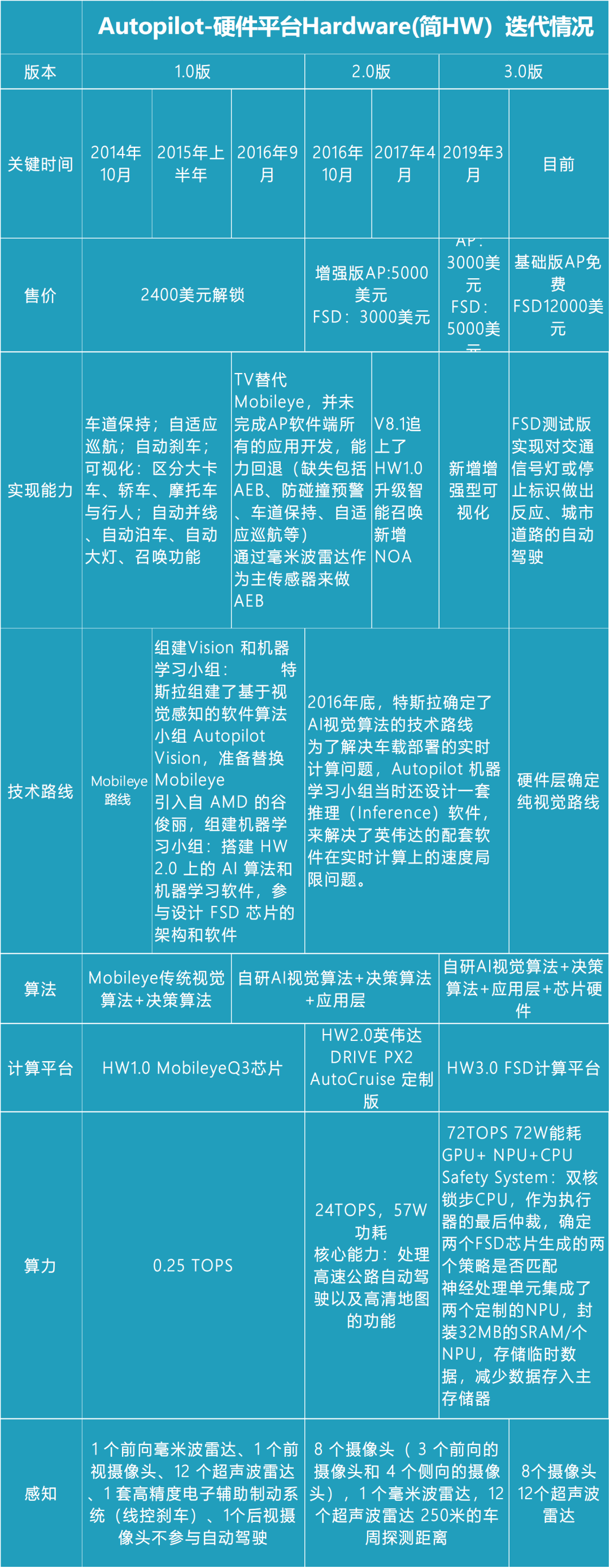

Going back to August 2020, Musk first stated that Tesla was rewriting the fundamental architecture of FSD. One year later, at AI Day, Tesla announced that convolutional neural networks (CNNs) account for 98% of the computation in its perception algorithm model, that time-series information has been added through an RNN (recurrent neural network), and that data from the different cameras is fused using a Transformer, an architecture that parallelizes well.

Intuitively, the original data from the eight cameras on a Tesla car enter the perception algorithm model, and when the model outputs, the results are temporally and spatially consistent. Recently, in an interview, Musk said that Tesla has completed the complete mapping from vision to vector space.

Currently publicly available information shows that Tesla’s perception algorithm model contains at least 48 specific neural network structures that can simultaneously execute more than 1,000 different recognition and prediction tasks, and the training cycle requires 70,000 GPU hours.

In contrast, Xpeng, which uses a multi-sensor fusion solution, needs to take an extra step after completing the visual perception algorithm.

Currently, Xpeng P5 is equipped with a sensor scheme consisting of cameras, millimeter-wave radar, ultrasonic radar, lidar, and high-precision maps. Among them, the perception algorithm of radar is relatively simple, and high-precision maps can provide spatiotemporal prior information.

The real difficulty lies in using the algorithm model to fuse the information from vision, radar, and high-precision maps to establish vector space. Due to the different detection frequencies, information types, and accuracies of different sensors, the fusion algorithm model receives sensor information that is inconsistent in time, information and even appearance. It is difficult to integrate it into a spatiotemporal consistent vector space.
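One small part of that difficulty, the mismatch in detection frequencies, can be sketched as a timestamp-alignment step. The example below linearly interpolates a slower sensor's readings to the moment a camera frame was captured. The sample rates, the helper name `interpolate_at`, and the readings are assumptions made for illustration; production systems also have to handle clock offsets, latency, and motion compensation.

```python
from bisect import bisect_left


def interpolate_at(timestamps, values, t):
    """Linearly interpolate a sensor reading at time t.

    timestamps must be sorted in ascending order; readings outside the
    recorded range are clamped to the nearest sample.
    """
    if not timestamps:
        raise ValueError("no samples to interpolate")
    if t <= timestamps[0]:
        return values[0]
    if t >= timestamps[-1]:
        return values[-1]
    i = bisect_left(timestamps, t)  # first sample at or after t
    t0, t1 = timestamps[i - 1], timestamps[i]
    v0, v1 = values[i - 1], values[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


# Hypothetical radar range readings (metres) sampled every 50 ms.
radar_t = [0.00, 0.05, 0.10]
radar_range = [50.0, 49.0, 48.0]

# A camera frame captured between two radar samples.
camera_t = 0.025
aligned_range = interpolate_at(radar_t, radar_range, camera_t)
print(f"radar range at camera time: {aligned_range:.2f} m")
```

Only after such alignment do the two sensors describe the same instant, which is a precondition for fusing them into one consistent view.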

Furthermore, compared to the pure visual algorithm that relies solely on “seeing” and information consistency, the multi-sensor and high-precision map solution still faces the choice of “who to trust” – the “confidence” issue.
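A common textbook way to express “who to trust” is inverse-variance weighting, in which each sensor's estimate is weighted by how certain it is. The sketch below is a generic illustration of that idea under assumed noise figures; it is not a description of how XPeng or any other company actually resolves the confidence issue.

```python
def fuse(estimates):
    """Fuse independent (value, variance) estimates by inverse-variance weighting.

    Returns the fused value and its variance. Lower variance means the
    sensor is trusted more.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


# Hypothetical distance to the lead vehicle, in metres.
camera = (52.0, 4.0)  # vision estimate, assumed noisier for depth
radar = (50.0, 1.0)   # radar estimate, assumed more precise for depth

distance, variance = fuse([camera, radar])
print(f"fused distance: {distance:.2f} m, variance: {variance:.2f}")
```

With these assumed figures the fused distance lands much closer to the radar reading, and the fused variance is smaller than either input, which is the redundancy benefit that multi-sensor fusion aims for.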

According to an expert who spoke to Electric Vehicle Observer, the “confidence” issue of the perception fusion system currently relies mainly on third-party data verification in simulation and real road conditions.

The “confidence” issue handled by XPeng is not generalized. In the high-speed NGP stage, XPeng adopts a strategy based on a high-precision map, while in the city NGP stage, it will use a scheme based primarily on visual perception.

“In the city NGP, a high-precision map is still an important input. However, due to the existence of lidar and the rapid improvement of visual perception capabilities, we can handle various scenarios more safely and naturally. When the map’s boundaries or data appear to be incorrect or missing, we can have stronger tolerance,” said Wu Xinzhou to the Electric Vehicle Observer. “With the construction of the system’s capabilities, we are confident that we can catch up with or even surpass Tesla’s visual capabilities.”

The “easy” of pure vision versus the “difficult” of multi-sensor fusion

In theory, it is not an empty promise to catch up with Tesla in terms of visual perception capabilities.

Based on the image recognition neural network, visual perception has a “long” history and has therefore accumulated many concise and efficient open-source algorithms.

This is also the reason why Tesla dares to publicize its perception algorithm model logic and it has become the foundation for XPeng to catch up with and even exceed Tesla in terms of visual skills.

From the current results, XPeng and Tesla are the only car manufacturers whose mass-produced autonomous driving systems (XPILOT and FSD) use side (A-pillar) camera layouts. The reason lies in the high threshold of the algorithm that combines side images with wide-angle front-facing camera images, especially in mass-produced models.

Good visual perception algorithms are especially important. Experts interviewed by Electric Vehicle Observer generally believe that visual perception will still be the core perception scheme of future autonomous driving systems.

But why are multi-sensor fusion routes necessary? The core behind it is the ultimate pursuit of response speed and safety redundancy.

As the capabilities of cameras continue to improve, visual perception is constantly progressing in its ability to cope with adverse weather and road conditions. However, the “translation” process from 2D to 3D introduces a delay of approximately 1 second, which can be fatal for a car traveling on the road. Tesla has currently rewritten and integrated the underlying software system, removing the image signal processing (ISP) step that adapts images for human viewing and directly transmitting the original information to the model, thereby cutting a total of 13 milliseconds of delay across the eight cameras.

The radar can directly provide distance/depth/velocity information, and the data from multiple sensors can complement each other.

After developing its own perception architecture in P7, XPeng Motors applied laser radar to P5, and replaced the previous three-camera front view on G9 with a two-camera setup consisting of a narrow-view and a fisheye view. “With the stronger capabilities of XPILOT 4.0, the demand for camera resolution is also increasing. Therefore, the camera is a next-generation product that achieves higher resolution under the current situation where the resolution of the three-camera setup cannot meet the requirements,” explained Wu Xinzhou.

The problem is that there are currently few open source algorithms for multiple sensor fusion on the market.

Therefore, taking the multi-sensor fusion route, the fusion algorithm will rely more on each company’s self-development, validation and iteration, which will inevitably form different styles. However, it also lacks the advantage of “accelerating together in multiple fields around the world” like visual perception.

Moreover, the current multi-sensor fusion route will lead to a strong coupling between automakers and suppliers.

Unlike cameras that have standard data formats and universal data interfaces, radar and high-precision maps are still “non-standard products”. Laser radar also has a dispute between mechanical, solid state, and semi-solid state routes, and the data format and interface have not yet created a unified industry standard. High-precision maps also have differences in data calibration methods and accuracy among different map vendors.

Therefore, although automakers generally pursue software and hardware decoupling, in some specialized sensor areas, changing suppliers means changing the algorithm model. This has also led to automakers taking a more cautious approach when choosing suppliers for the multi-sensor fusion route. They not only establish procurement relationships, but many even establish deep cooperation relationships such as investment and joint research and development.

The more difficult part is the decision-making algorithms.

Answering “what is perceived” in order to establish a vector space is only the beginning.

AI technology, with the help of deep learning, continues to increase perception capabilities, but still lacks the ability to “think”: the ability to handle complex relationships such as conditional probabilities and causality, and to complete tasks such as reasoning and inference.

This ability is critical to the process of landing autonomous driving. In 2018, an Uber test vehicle was involved in the world’s first fatal accident of this kind. The official US report stated that the vehicle “observed the obstacle” six seconds before the accident occurred and, 1.3 seconds before impact, identified it as a bicycle that required emergency braking. However, “to reduce the possibility of unstable vehicle behavior (discomfort),” the automatic emergency braking did not activate and instead slow braking was applied, which combined with the distraction of the safety driver ultimately led to the accident.

This case fully demonstrates the importance of the decision-making system, especially in complex road conditions full of game scenarios in urban traffic.

Cruise, a Level 4 autonomous driving technology company under General Motors, gave the definition of a good decision-making system during last year’s technology day: timeliness; interactive decision-making (considering the impact on other traffic participants and vehicles’ future actions); reliability, and repeatability (being able to make the same decision in the same scenario), thus outputting a safe, efficient, and “old driver”-like ride experience.

Tesla has explicitly stated that the standard for its decision-making system is safety, comfort, and efficiency at the previous AI Day.

According to Wu Xinzhou, who spoke to Electric Vehicle Observer, XPILOT’s decision-making elements in more challenging urban scenarios are safety, usability, and functionality.

Although the standard is similar, achieving a driving performance similar to an “old driver” is not easy.

In low-speed or simple scenarios, the decision-making algorithm will plan a collision-free, safe route based on perception data, and the vehicle will move along the specified route.

However, in complex traffic flow and road conditions, there are often problems such as trajectory changes and collisions. The root cause is the insufficient foresight of the decision-making algorithm for obstacle behavior, and the algorithm only relies on the current perception data to solve the local, rather than global, road conditions.

Therefore, when the vehicle is in unfamiliar and complex scenes, it will repeatedly emergency brake or exhibit dangerous behavior, making it difficult to meet the decision-making standard of “safety, efficiency, and comfort.”

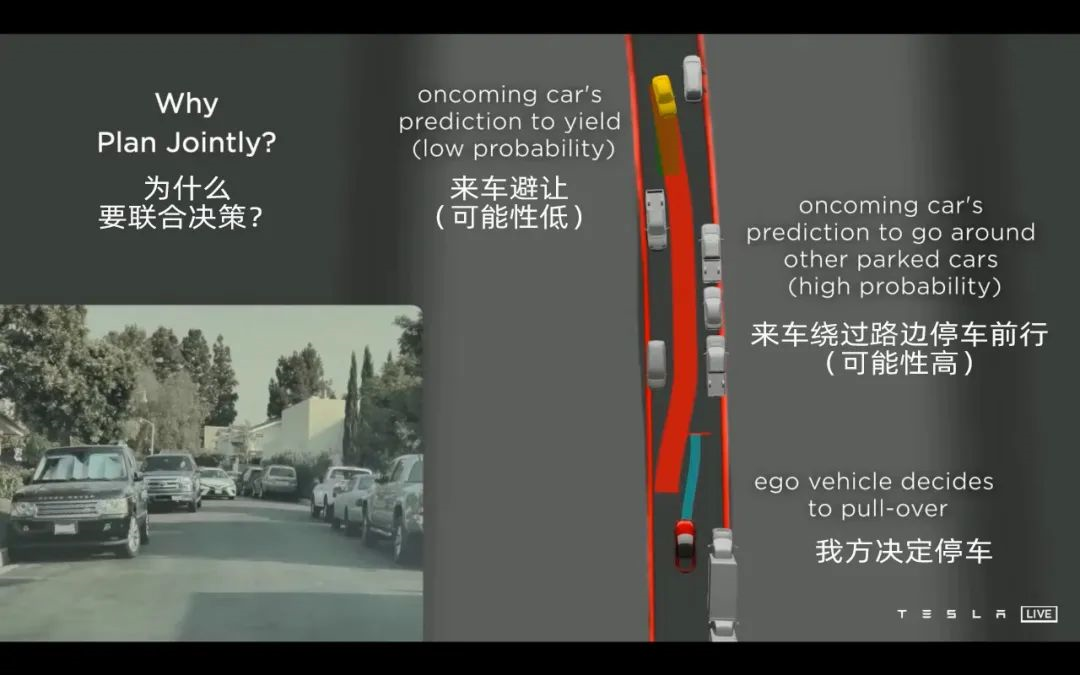

When the vehicle is driving autonomously, there may be hundreds of interactions between traffic participants and the autonomous vehicle in a single traffic scenario, and the decision-making system needs to consider the future actions of other traffic participants in the scene, project and predict various social behaviors of surrounding vehicles, form a drivable space, and then search for trajectories.

Among them, prediction is considered the most difficult part of autonomous driving system engineering. The vehicle not only needs to understand its own and the environment’s various possible movements in the future, but also needs to determine the most likely behavior of other traffic participants from countless possibilities.

To establish the predictive ability of the system, in addition to continuous algorithm optimization, the industry also needs to perform self-supervised learning of AI in the world model. The massive amount of behavioral data collected from the real world by Tesla’s shadow mode has become the best material for FSD to establish predictive ability.

Tesla demonstrated a narrow road passing scene at the AI DAY last year. The autonomous driving vehicle initially believed that the oncoming vehicle would continue to drive, so it waited on the right. After realizing that the oncoming vehicle had stopped, it immediately moved forward.

An autonomous driving planning and control engineer told the Electric Vehicle Observer that most autonomous driving companies cannot handle such a scene and often choose to stop and yield, or start and stop alongside other vehicles, resulting in collision risks. “But Tesla can handle this scene very well, proving that its prediction and decision-making are very good.”

Even with prediction, searching is not easy. Autonomous driving vehicles usually need to calculate more than 5000 candidate trajectories to make the correct decision.

But “time does not wait for cars,” and decision-making planning algorithms usually run at a frequency of around 10Hz to 30Hz, that is, they need to be calculated every 30ms to 100ms, and making the correct decision in such a short time is a huge challenge.

Tesla’s FSD can search 2500 times in 1.5ms and choose the optimal trajectory by comprehensively evaluating the candidate trajectories.
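The figures quoted above can be put side by side with a little arithmetic. The short sketch below converts the planning frequencies into cycle periods and the quoted search rate into a per-candidate time; the function names are made up for this illustration, and the numbers are simply those cited in the text.

```python
def cycle_period_ms(frequency_hz):
    """Time available per planning cycle, in milliseconds."""
    return 1000.0 / frequency_hz


def time_per_candidate_us(num_candidates, total_ms):
    """Average time spent per candidate, in microseconds."""
    return total_ms * 1000.0 / num_candidates


for hz in (10, 30):
    print(f"{hz} Hz -> {cycle_period_ms(hz):.1f} ms per planning cycle")

per_candidate = time_per_candidate_us(2500, 1.5)
share = 1.5 / cycle_period_ms(30)
print(f"2500 searches in 1.5 ms -> {per_candidate:.2f} us per candidate")
print(f"that search uses {share:.1%} of a 30 Hz cycle")
```

At 2,500 searches in 1.5 ms, each candidate gets about 0.6 microseconds, which leaves most of even the shortest planning cycle for perception, prediction, and control.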

However, such a method will often exceed the computing power of the platform in urban road conditions, where there is mixed traffic and complex road structures.

Therefore, Tesla introduced the MCTS framework (Monte Carlo tree search), which is over 100 times more efficient than traditional search methods. MCTS can effectively solve some problems with immense exploration space, such as the general Go algorithm, which is based on MCTS. Apple’s autonomous driving patent and Google’s AlphaGo have also adopted this method.
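To make the idea concrete, here is a minimal sketch of Monte Carlo tree search (the UCT variant) on a toy problem: a car on a three-lane road must choose lane changes over four time steps while avoiding blocked cells. The grid, the reward, and every name in the code are assumptions invented for this illustration; Tesla has not published its planner's implementation, and a real system searches a far richer state space.

```python
import math
import random

ACTIONS = (-1, 0, 1)   # move one lane left, stay, move one lane right
LANES = 3
HORIZON = 4
# Hypothetical blocked cells as (time step, lane).
OBSTACLES = {(1, 1), (2, 1), (2, 0)}


class Node:
    def __init__(self, step, lane, changes, parent=None):
        self.step, self.lane, self.changes = step, lane, changes
        self.parent = parent
        self.children = {}   # action -> Node
        self.visits = 0
        self.total = 0.0


def legal_actions(lane):
    return [a for a in ACTIONS if 0 <= lane + a < LANES]


def is_terminal(step, lane):
    return step >= HORIZON or (step, lane) in OBSTACLES


def reward(step, lane, changes):
    if (step, lane) in OBSTACLES:
        return 0.0                 # collision
    return 1.0 - 0.1 * changes     # safe, with a small lane-change penalty


def rollout(step, lane, changes, rng):
    # Simulation: play random actions until the episode ends.
    while not is_terminal(step, lane):
        a = rng.choice(legal_actions(lane))
        step, lane, changes = step + 1, lane + a, changes + (a != 0)
    return reward(step, lane, changes)


def search(iterations=500, c=1.4, seed=0):
    rng = random.Random(seed)
    root = Node(step=0, lane=1, changes=0)
    for _ in range(iterations):
        node = root
        while not is_terminal(node.step, node.lane):
            untried = [a for a in legal_actions(node.lane)
                       if a not in node.children]
            if untried:
                # Expansion: add one new child and stop descending.
                a = rng.choice(untried)
                child = Node(node.step + 1, node.lane + a,
                             node.changes + (a != 0), parent=node)
                node.children[a] = child
                node = child
                break
            # Selection: pick the child with the best UCB1 score.
            node = max(
                node.children.values(),
                key=lambda ch: ch.total / ch.visits
                + c * math.sqrt(math.log(node.visits) / ch.visits),
            )
        value = rollout(node.step, node.lane, node.changes, rng)
        # Backpropagation: update statistics up to the root.
        while node is not None:
            node.visits += 1
            node.total += value
            node = node.parent
    # Recommend the most-visited first action.
    return max(root.children, key=lambda a: root.children[a].visits)


print("first action:", search())
```

In this layout, going straight collides at the next step and moving left leads into a dead end, so only a move to the right keeps a collision-free path open. The search concentrates its simulations on that branch instead of evaluating every possible action sequence evenly, which is the source of the efficiency gain over exhaustive search.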

XPeng has not yet revealed the model type used by its decision-making algorithm. However, Wu Xinzhou told the Electric Vehicle Observer that “in urban scenes, due to the different participants and complexity of the scene, there are completely different requirements for prediction, planning, and control. Therefore, XPeng has greatly enhanced its localization, perception, and fusion capabilities based on the high-speed scene. For the decision-making part, we have introduced a brand new architecture to meet the higher requirements of city NGP. This architecture also has very strong backward compatibility, so we also look forward to the future in XPILOT 3.5, where our high-speed and parking lot scenes can also benefit from this new architecture, and give users a better experience.”

How XPeng is catching up with Tesla worldwide

Tesla FSD will eventually be opened up in China, and Xpeng’s intelligent driving will also need to go beyond China. The two will be head-to-head sooner or later. Can Xpeng win the battle against Tesla in the Eastern Hemisphere and even worldwide?

What really gives Xpeng the confidence to challenge Tesla is the end-to-end full-stack self-developed system capability completed by Xpeng in 2020.

Building its own algorithmic data loop

What does full-stack self-development mean?

According to He XPeng’s interview with Electric Vehicle Observer, Xpeng’s “full-stack self-development” is not only about self-developed algorithms for vehicle-end visual perception, sensor fusion, positioning, planning, decision-making, control, etc.

It also includes a series of tools and workflows required for cloud data operation.

That is, self-development is realized in aspects such as data upload channels, front-end data upload implementation, cloud data management system, distributed network training, data collection tool development, data annotation tool development, software deployment, etc.

“This creates a closed loop of data and algorithms, laying a solid technical foundation for rapid functional iteration.”

Different from the logic-based algorithm model, which depends on the engineers’ intelligence, the autonomous driving system mainly adopts the neural network algorithm model, which has the characteristic of “growing based on data”: the algorithm is gradually formed in the data flow through the collection, storage, transfer, training, and management of core data.

The algorithm is driven by data to iterate, and the iterative algorithm brings new data. The improvement of the system’s capabilities is a circular process in the data.

In this growth loop, any intervention by others in any link will affect the speed and quality of the enterprise’s “own” iteration and upgrade of the autonomous driving system.

In the traditional automobile industry, prior to Tesla breaking industry conventions, there was no rhythm of their “own”. Although the OEMs occupied a strong position in the industry chain, the iteration cycle of their car models was more limited by the technology and commercial rhythm of the component suppliers.

After the fatal Model S accident in June 2016, a disagreement over the timeline for implementing AEB (automatic emergency braking) through the vision solution led to a complete “breakup” between Tesla and Mobileye. Regarding the failure of AEB to activate in the accident, Dan Galves, Chief Communications Officer of Mobileye, stated that “currently (in 2016) AEB is defined as rear-end collision avoidance (and is therefore unable to address vehicles crossing laterally ahead). However, Mobileye will bring Lateral Turn Across Path (LTAP) detection from 2018 onwards.”

However, Tesla did not want to wait until 2018, nor did they want to follow Mobileye’s traditional visual perception route.

Thus, the Tesla Vision (TV) and machine learning team, which had only been established for a year, “forcibly” took over Mobileye’s position in October 2016, and determined the technical route for AI visual perception at the end of the year.

For several months after its debut, TV could not deliver the full set of AP software functions, including AEB, collision warning, lane-keeping, adaptive cruise control, and other critical functions. During that time Tesla relied on millimeter-wave radar to cover for AEB, resulting in many cases of “phantom braking”.

It was not until April 2017 when Tesla pushed out V8.1, that its self-developed AI visual algorithm capabilities caught up with HW1.0 supported by Mobileye, thus opening up an unprecedented pace of iteration for the automotive industry and forcing the entire industry to keep up with Tesla’s pace.

“XPeng is the first company in the industry to keep pace with Tesla not only on the functional level, but also on the software stack level through comprehensive self-development.”

In 2018, XPILOT 2.0 went into production on the XPeng G3, bringing to mass production an automatic parking system that XPeng had developed end to end, with its own data loop.

In 2019, the XPILOT 2.5 system on the XPeng G3i added ALC (automatic lane change) on top of parking. XPeng independently developed the low-level drive-by-wire control, path planning, and control algorithms, while the perception algorithms still relied on a supplier.

In 2020, the XPeng P7 and XPILOT 3.0 debuted together, delivering NGP and memory parking. For the first time, XPeng completed full-stack in-house software development, establishing its own visual perception capability, a data loop for evolving driving perception, high-level assisted driving algorithms, and its own software architecture. It became the second automaker in the world to achieve full-stack self-development of its automatic driving systems, algorithms, and data loop.

“Compared with non-self-developed modes, the demands of ‘full-stack self-development’ in terms of organization, talent, and R&D investment are definitely heavier. However, the advantages are also obvious,” Wu Xinzhou said.

The advantages are indeed obvious. Take highway navigation-assisted driving: XPeng's NGP launched in 2020. NIO's equivalent arrived slightly earlier but still relies on a semi-self-developed solution built on Mobileye, and Li Auto did not get the feature until an upgrade in September 2021.

Other brands and systems, such as ZAD, Huawei ADS, IM AD, NIO, and Leap Pilot, all have plans for Level 3 advanced driving capabilities but still trail XPeng by a considerable margin in time.

Measured against itself, XPeng has also made rapid progress. In 2020, alongside its self-developed solution, the P7 carried a redundant system: an intelligent controller built mainly around one forward-facing camera and an Infineon Aurix MCU 2.0, with perception and decision-making algorithms supplied by Bosch. On the 2021 P5, this third-party redundancy was removed; the P5 runs on the NVIDIA Xavier platform alone, with LiDAR added as an extra sensor.

As planned, XPeng will deliver the core NGP functions of XPILOT 3.5 on the NVIDIA Xavier platform, which offers 30 TOPS of computing power. The Tesla FSD chip, which supports comparable high-level driving assistance functions, offers 144 TOPS.

“(Full-stack self-development) has exercised the team's ultimate engineering capability and achieved the landing of relatively complex functions under limited computing power,” Wu Xinzhou told “Electric Vehicle Observer.” “From XPILOT 3.0 to 3.5, and on to the future 4.0 and 5.0, XPeng's technical route is very continuous and evolves naturally.”

Efficiency competition

Although XPeng has taken a technical route somewhat different from that of Tesla, the two have the same goal: to achieve mass production of autonomous driving technology through full-stack self-development.

In Wu Xinzhou's view, the race toward this goal is a competition on two fronts: data volume and the right network architecture.

“Tesla’s current network architecture has high requirements for the system’s capabilities. In terms of data acquisition, labeling, and training, other manufacturers have a huge gap in the construction and investment of the system’s capabilities compared to Tesla.”

In terms of data volume, Tesla is currently unbeatable worldwide. At CVPR 2021, Andrej Karpathy, Tesla's director of AI, announced that as of the end of June 2021, Tesla had a million-strong fleet and had collected one million highly diverse video clips, each 10 seconds long at 36 frames per second, occupying 1.5 PB of storage. The dataset carries 6 billion object annotations with accurate depth and acceleration, and has gone through seven rounds of shadow-mode iteration.
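To make the scale of these figures easier to grasp, here is a minimal back-of-the-envelope sketch. It uses only the numbers quoted above and makes two assumptions that are not in the source: that "36 frames" means 36 frames per second per video stream, and that 1 PB equals 10^15 bytes. The function names are illustrative only.

```python
# Figures as quoted in the article; see the stated assumptions in each comment.
CLIPS = 1_000_000                 # one million video clips
CLIP_SECONDS = 10                 # each clip lasts 10 seconds
FRAMES_PER_SECOND = 36            # assumption: "36 frames" means 36 fps per stream
STORAGE_BYTES = 1.5e15            # assumption: 1.5 PB with 1 PB = 10**15 bytes
ANNOTATIONS = 6_000_000_000       # 6 billion object annotations


def frames_per_clip() -> int:
    """Frames in one clip of a single video stream."""
    return CLIP_SECONDS * FRAMES_PER_SECOND


def total_frames() -> int:
    """Frames across all clips, counted per video stream."""
    return CLIPS * frames_per_clip()


def bytes_per_clip() -> float:
    """Average storage consumed by one clip."""
    return STORAGE_BYTES / CLIPS


def annotations_per_clip() -> float:
    """Average number of object annotations attached to one clip."""
    return ANNOTATIONS / CLIPS


if __name__ == "__main__":
    print(f"frames per clip:      {frames_per_clip()}")
    print(f"total frames:         {total_frames():,}")
    print(f"storage per clip:     {bytes_per_clip() / 1e9:.1f} GB")
    print(f"annotations per clip: {annotations_per_clip():,.0f}")
```

Under these assumptions, each clip averages about 1.5 GB and roughly 6,000 object annotations, which suggests how heavily the pipeline depends on automatic labeling.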

This scale of data collection is not only far beyond NIO and other self-driving car companies, but also surpasses Waymo’s latest data, which reached a total of 10 million miles of road tests as of October last year. By comparison, Tesla’s data as of June last year amounted to nearly 15 million miles, including 1.7 million miles collected while Autopilot was activated.

Data is the fuel on which autonomous driving algorithm models evolve. Tesla has built an efficient closed-loop system to refine this mass of data into “anthracite”.

Based on the million-vehicle fleet, Tesla uses “shadow mode” to selectively collect massive corner-case scenario data and driver operation data in those scenarios, and provide higher-quality semi-supervised or supervised learning guidance to neural networks.

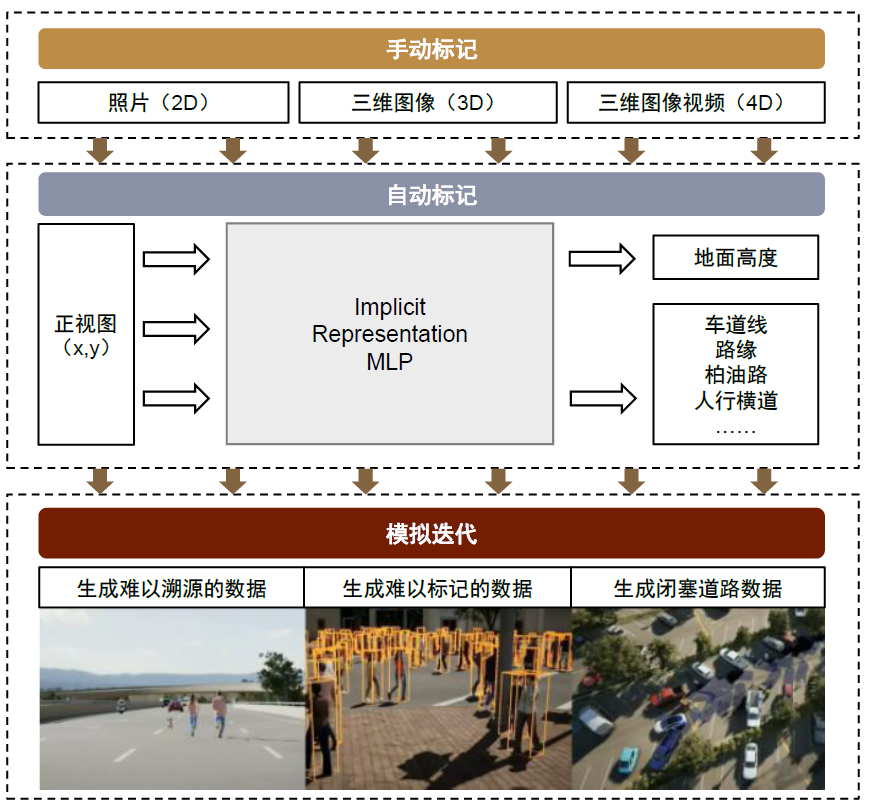

This raw data must be labeled with various features before it can serve as learning material for neural networks.

Traditionally, labeling this kind of unstructured data relied heavily on manual annotation, a labor-intensive job that many companies outsourced to third parties. However, third-party annotation is inefficient and slow, which delays the labeling, analysis, and processing of training data.

Tesla has built a data annotation team of over 1,000 people, divided into four groups: manual data annotation, automatic data annotation, simulation, and data scaling. Technically, it has advanced from 2D annotation to 4D annotation and automatic annotation. The automatic annotation tool can propagate a single annotation across the multi-angle, multi-frame images from all cameras, and can also annotate along the time dimension.

After building its own data annotation system, Tesla also built a training ground for its data: a supercomputing cluster with 1.1 EFLOPS of compute, composed of 3,000 Tesla-developed Dojo D1 chips. It ranks among the world's highest-performance computing systems, alongside those of Google (1 EFLOPS) and SenseTime (1.1 EFLOPS).

Compared with the general-purpose supercomputer clusters of Google and SenseTime, Dojo is more focused on video processing and more closely tailored to training Tesla's autonomous driving models, which effectively reduces algorithm costs.

“We believe that the gap in system architecture is more important than the gap in data. XPeng has been committed to building its own system capacity for the past few years. The results of complex system engineering presented at the end are not determined by a single variable, but by the overall design and hardware matching,” said Wu Xinzhou to Electric Vehicle Observer.

“In the future, we will continue to achieve a balance in algorithm optimization and sensor selection or changes, use appropriate hardware to develop higher-level driving assistance capability, and continue to evolve towards autonomous driving,” he added.

Efficiency and cost decide whether any product can be mass-produced successfully. Tesla's closed-loop data system, built to raise efficiency and cut cost, relies not only on its own technical capabilities but also on its strong financial position.

In 2021, Tesla's R&D expenses were approximately 16.8 billion yuan (2.591 billion U.S. dollars at 6.5 yuan to the dollar). By comparison, XPeng spent 4.114 billion yuan and Great Wall spent 9.07 billion yuan.
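The conversion and the resulting spending gap can be checked with a few lines of arithmetic. This is a minimal sketch using only the figures and the 6.5 yuan/dollar rate quoted above; the variable and function names are illustrative.

```python
# R&D figures for 2021 as quoted in the article.
USD_TO_CNY = 6.5                 # exchange rate used in the article
TESLA_RD_USD_BN = 2.591          # Tesla, billions of U.S. dollars
XPENG_RD_CNY_BN = 4.114          # XPeng, billions of yuan
GREAT_WALL_RD_CNY_BN = 9.07      # Great Wall, billions of yuan


def usd_bn_to_cny_bn(amount_usd_bn: float, rate: float = USD_TO_CNY) -> float:
    """Convert billions of U.S. dollars to billions of yuan."""
    return amount_usd_bn * rate


def spending_ratio(numerator_bn: float, denominator_bn: float) -> float:
    """How many times larger the first budget is than the second."""
    return numerator_bn / denominator_bn


tesla_rd_cny_bn = usd_bn_to_cny_bn(TESLA_RD_USD_BN)
tesla_vs_xpeng = spending_ratio(tesla_rd_cny_bn, XPENG_RD_CNY_BN)
tesla_vs_great_wall = spending_ratio(tesla_rd_cny_bn, GREAT_WALL_RD_CNY_BN)

if __name__ == "__main__":
    print(f"Tesla R&D:           {tesla_rd_cny_bn:.1f} billion yuan")
    print(f"Tesla vs XPeng:      {tesla_vs_xpeng:.1f}x")
    print(f"Tesla vs Great Wall: {tesla_vs_great_wall:.1f}x")
```

On these figures, Tesla's R&D budget is roughly four times XPeng's and a little under twice Great Wall's.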

But this does not mean that XPeng has no chance of winning in the second half of the competition with Tesla in mass production of autonomous driving.

“Compared with Tesla’s product idea that fully starts from the perspective of a technology company, XPeng thinks more about whether it can combine China’s applicable scenarios to truly help car owners with product functions,” said Zhu Chen to Electric Vehicle Observer.

“Features that are more suitable for the needs of Chinese users will help XPeng scale up sales in China, truly realize the mass production of XPILOT, and help it establish a data and system advantage in the Chinese context,” he added.

In 2020, the purchase rate of FSD (Tesla’s fully automatic driving system) in China was only 1-2%, lower than the ratio of 10%-15% in North America (measured by foreign media). In Q4 2021, the FSD adoption rate on Model 3 in the Asia-Pacific region was 0.9%, while in Europe and North America it was 21.4% and 24.2%, respectively (according to the statistics of Troy Teslike, a blogger who has long been concerned about Tesla).

As of the end of the third quarter of last year, the activation rate of XPILOT 3.0, which is similar to Tesla’s Autopilot enhanced version, was nearly 60%. Wu Xinzhou did not disclose XPeng’s data acquisition mode but said, “We will learn from the advanced experience of the world.”

In terms of computing power and spatial perception, XPeng is currently no weaker than Tesla, thanks to “external forces”.

The XPeng G9 will carry XPILOT 4.0, which uses NVIDIA Orin-X chips delivering 508 TOPS of compute and a highly integrated domain controller with gigabit Ethernet. NVIDIA's AI training supercomputer, Eos, released this year, offers up to 18.4 EFLOPS of computing power.

Moreover, XPeng's edge over Tesla in understanding local usage scenarios has already begun to show.

In March of this year, XPeng Motors released version 3.1.0 of Xmart OS, which delivers VPA-L cross-floor memory parking over routes of up to 2 kilometers. At nearly the same time, Tesla was rumored to be developing “Smart Park”: with the driver present, the vehicle automatically parks in a designated spot such as “closest to the door”, “near the shopping cart exit”, or “the end of the parking lot”. Judging from the description, Smart Park is very similar to memory parking, which means the leader and the follower have swapped places.

As XPeng previously stated, the two “will meet” again, this time further afield in overseas markets.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.