When it comes to progress in autonomous driving technology, much of the discussion inevitably centers on its core: the chip. From the chip-specification arms race among domestic new car makers that began in the middle of last year to the emergence of domestic suppliers of autonomous driving chips, autonomous driving chips have suddenly become a much hotter topic.

In last year's chip arms race led by several new car makers, some people questioned whether high computing power really translates into advanced autonomous driving capability. Beyond verifying the actual results on the road, should we also compare the AI chips at the heart of this debate, to judge which design can best keep pace with the rapid development of autonomous driving technology?

The background of the dispute between the chip computing power and algorithm optimization routes

The dispute between the computing-power route and the algorithm-optimization route is not particularly visible on the surface. The main reason is that Tesla, the current "big boss" of the algorithm route, simply shows off its strength by pushing new beta builds to its test users every few days.

Tesla's current Autopilot HW 3.0, with 144 TOPS of computing power (72 TOPS per chip), may have been technologically ahead when it was released three years ago. In last year's arms race, however, where figures of 400+, 500+, or even 1000+ TOPS were common, it appears to have fallen behind.

Over the past few years, this clash of routes has turned into a drawn-out soap opera between Tesla, which follows the pure-vision route, and the L4 Robotaxi companies, which carry the banner of autonomous driving with LiDAR-based systems. To some extent, it is debated as fiercely as the question of whether LiDAR is worthwhile at all.

In a recent public lecture, Dr. Luo Hengbo of Horizon Robotics discussed the background of this debate between raising chip computing power and optimizing algorithms.

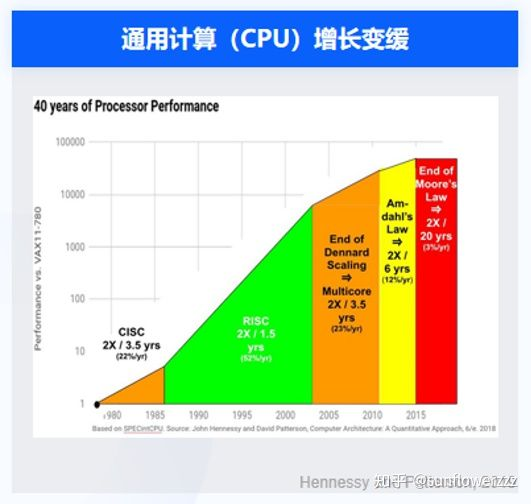

The most common lens for viewing chips is Moore's Law, which people commonly read as chip computing power doubling over a fixed period. However, this "rule" has no longer held for general-purpose computing (CPUs) since around 2015.

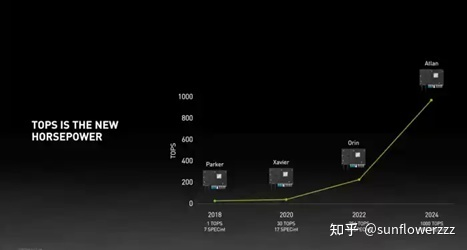

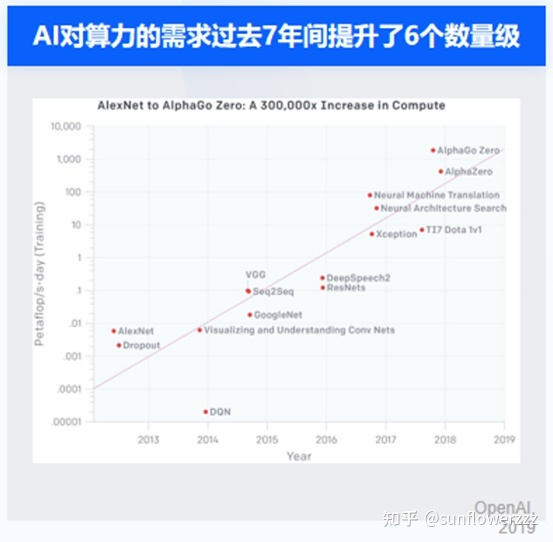

Autonomous driving tasks are essentially a form of AI computing. Demand for AI computing as a whole has grown rapidly in recent years, accompanied by a wave of chips with dedicated architectures that differ significantly from CPUs. Yet even specialized AI chips face enormous challenges in keeping up with the rapid growth of AI computing demand.

The growth in demand for AI computing power is severely mismatched with the improvement in CPU computing power. As a result, people have begun to look for other solutions, particularly AI-specific accelerators. Google, for example, moved from CPUs to GPUs and then to its own highly customized AI inference chip, the TPU. Its experience shows that optimizing for the algorithms being run is key to meeting data center demand.
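The mismatch compounds quickly when demand and supply both grow exponentially but at different rates. The sketch below, a minimal illustration and not data from the lecture, compares two doubling periods; the function names and the 6-month and 24-month periods are hypothetical values chosen only to show the shape of the gap.

```python
def growth_factor(months: float, doubling_period_months: float) -> float:
    """Growth after `months` if capacity doubles every `doubling_period_months`."""
    if doubling_period_months <= 0:
        raise ValueError("doubling period must be positive")
    return 2.0 ** (months / doubling_period_months)


def demand_supply_gap(months: float, demand_doubling: float, supply_doubling: float) -> float:
    """How many times faster demand has grown than supply after `months`."""
    return growth_factor(months, demand_doubling) / growth_factor(months, supply_doubling)


if __name__ == "__main__":
    # Hypothetical doubling periods, for illustration only.
    for months in (12, 24, 48):
        gap = demand_supply_gap(months, demand_doubling=6, supply_doubling=24)
        print(f"after {months:2d} months: demand/supply gap = {gap:.1f}x")
```

With these assumed periods, after 24 months supply has doubled while demand has grown sixteenfold, leaving an eightfold gap that no single hardware generation can close on its own.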

Although autonomous driving workloads differ from data center workloads in many ways, they rely even more heavily on AI acceleration for performance. It goes without saying that algorithm optimization is of utmost importance to improving autonomous driving performance.

Different tasks and scenarios set different performance targets for AI computing. Beyond the physical constraints of chip implementation cost and power consumption, the computation speed and accuracy of AI algorithms must also be considered. Growth in computing power and algorithm optimization are therefore complementary and tightly bound together in meeting the demands of AI computing tasks.


In short, both raw hardware capability and algorithm-optimized software are needed to get the most out of AI computing tasks. On the road to intelligent assisted driving, rather than arguing over which route is right, it is better to find an AI chip that truly delivers both and is genuinely designed for autonomous driving tasks.

How to make an AI chip truly oriented towards autonomous driving tasks

The question of how to make an AI chip truly oriented towards autonomous driving tasks can first be narrowed to a sub-problem: finding an objective metric for evaluating an AI chip's performance.

A chip's peak computing power is of course an important metric that cannot be ignored. But as the two AI accelerators in the figure above show, chips whose peak computing power differs several-fold can deliver similar performance when actually running a given model. An AI chip's performance should therefore be evaluated on the models it will ultimately run.
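One simple way to see why peak figures mislead is to model the frame rate a chip actually sustains as peak throughput times the fraction of that peak a given model can use. The sketch below is a simplified assumption, not Horizon's model: `utilization` stands in for everything (memory bandwidth, operator support, compiler quality) that keeps a network from using the full peak, and both chips and all numbers are hypothetical.

```python
def frames_per_second(peak_tops: float, utilization: float, gops_per_frame: float) -> float:
    """Frames per second a chip sustains on one model (simplified model).

    peak_tops: advertised peak throughput, in tera-operations per second
    utilization: fraction of the peak this model actually achieves, in (0, 1]
    gops_per_frame: operations the model needs per frame, in giga-operations
    """
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    effective_gops = peak_tops * 1000.0 * utilization  # 1 TOPS = 1000 GOPS
    return effective_gops / gops_per_frame


# Hypothetical chips running the same 100-GOP detection model.
chip_a = frames_per_second(peak_tops=200, utilization=0.10, gops_per_frame=100)
chip_b = frames_per_second(peak_tops=50, utilization=0.40, gops_per_frame=100)
print(f"chip A: {chip_a:.0f} fps, chip B: {chip_b:.0f} fps")
```

Under these assumed figures, a chip with four times the peak TOPS delivers the same frame rate, which is why a model-level metric is needed.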

The core workload in autonomous driving is high-resolution object detection. For this workload, Dr. Luo Hengbo of Horizon Robotics presented, in a public lecture, the metric Horizon Robotics uses to evaluate autonomous driving chips: the mean accuracy-guaranteed processing speed (MAPS) of object detection. He also compared actual results of the same target models running on Horizon J5 and Xavier, along with projected results for Orin-X.
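The exact MAPS formula is not spelled out here. A minimal sketch, assuming MAPS averages the best achievable frame rate over a range of accuracy requirements: for each requirement a in [acc_lo, acc_hi], take the highest fps of any model on that chip reaching at least a, then divide the area under that accuracy-speed frontier by the width of the range. The function name, the step-shaped frontier, and the sample measurements are all hypothetical.

```python
from typing import Iterable, Tuple


def maps(models: Iterable[Tuple[float, float]], acc_lo: float, acc_hi: float) -> float:
    """Mean accuracy-guaranteed processing speed over [acc_lo, acc_hi] (assumed definition).

    models: (accuracy, fps) pairs, one per detection model measured on a single chip.
    At an accuracy requirement a, the chip's speed is taken to be the best fps among
    models whose accuracy is >= a (0 if no model qualifies).
    """
    if acc_hi <= acc_lo:
        raise ValueError("acc_hi must be greater than acc_lo")
    points = list(models)
    # The frontier can only change at model accuracies, so split the range there.
    cuts = sorted({acc_lo, acc_hi, *(a for a, _ in points if acc_lo < a < acc_hi)})
    area = 0.0
    for left, right in zip(cuts, cuts[1:]):
        # For every requirement in (left, right], exactly the models with accuracy >= right qualify.
        qualifying = [fps for acc, fps in points if acc >= right]
        area += (right - left) * max(qualifying, default=0.0)
    return area / (acc_hi - acc_lo)


# Hypothetical (accuracy %, fps) measurements for two chips running the same model family.
chip_x = [(70.0, 100.0), (75.0, 60.0), (80.0, 30.0)]
chip_y = [(70.0, 50.0), (75.0, 30.0), (80.0, 15.0)]
print(maps(chip_x, 70.0, 80.0), maps(chip_y, 70.0, 80.0))
```

Because the assumed frontier is a step function, a model sitting exactly at `acc_lo` contributes nothing to the average; a smoother reading would interpolate between measured points instead.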

At the same accuracy, J5's frame rate is significantly higher than Xavier's, and the projected Orin-X results also show a clear improvement. Once the difference in power consumption is also taken into account (20 W for J5 versus 65 W for Orin-X), Horizon J5's energy efficiency comes out more than 6 times higher.
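The efficiency figure combines a frame-rate advantage with a power advantage. The sketch below, assuming the ">6x" is measured in frames per second per watt against Orin-X (the two chips whose power is quoted), shows how the two factors multiply. Only the 20 W and 65 W figures come from the article; the function names are hypothetical.

```python
def perf_per_watt(fps: float, watts: float) -> float:
    """Frames per second delivered per watt of chip power."""
    return fps / watts


def efficiency_ratio(fps_a: float, watts_a: float, fps_b: float, watts_b: float) -> float:
    """How many times more frames per watt chip A delivers than chip B."""
    return perf_per_watt(fps_a, watts_a) / perf_per_watt(fps_b, watts_b)


def required_fps_ratio(target_ratio: float, watts_a: float, watts_b: float) -> float:
    """Frame-rate ratio A/B needed to reach `target_ratio` in frames per watt."""
    return target_ratio * watts_a / watts_b


J5_WATTS, ORIN_X_WATTS = 20.0, 65.0  # power figures quoted in the article

# At equal frame rates, the power gap alone gives 65 / 20 = 3.25x.
print(efficiency_ratio(1.0, J5_WATTS, 1.0, ORIN_X_WATTS))
# Frame-rate lead J5 would need for a 6x efficiency advantage.
print(round(required_fps_ratio(6.0, J5_WATTS, ORIN_X_WATTS), 2))
```

Under this reading, the power gap alone accounts for about 3.25x, so a 6x efficiency advantage implies J5 running the same model at roughly 1.85 times Orin-X's frame rate.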

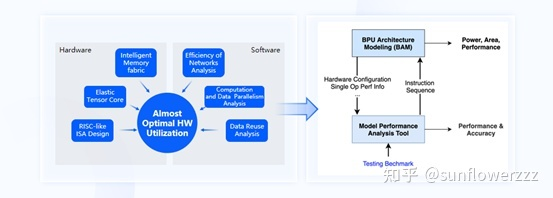

Why does this work? Dr. Luo Hengbo of Horizon Robotics pointed to the advantages of designing the chip around the algorithms it will run. By building specialized chips for algorithmic needs, and integrating software and hardware into a complete loop of algorithm verification and co-optimization, the gains can be carried back into real autonomous driving scenarios.


This approach is not limited to evaluating algorithms on benchmark datasets. In real autonomous driving scenarios, it can also improve the overall solution, from object recognition frame rate to the success rate of the final driving functions.

Conclusion

In general, high chip computing power is indeed an advantage for the rapid development of autonomous driving. However, optimizing algorithms for autonomous driving tasks is equally important. Providing a complete toolchain that integrates algorithm optimization with chip software and hardware design is a better way to develop efficient autonomous driving algorithms that perform well in practice.

Such an end-to-end solution, linking the upstream and downstream stages, may well be the key accelerator that helps struggling car companies find a way forward in the increasingly competitive intelligent assisted driving market.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.