Translation

Author: Chang Yan

Recently, my favorite podcast “Radiotopia’s Ear Hustle” did an episode about travel. A guest talked about Israel, which is one of the most memorable travel destinations for him.

This brought back many memories of my own trip to Israel. Surprisingly, what impressed me most was not any cultural heritage site but the Mobileye L4 autonomous vehicle that, back in 2018, could already navigate the streets of Jerusalem freely using cameras alone.

Every year in early January, I always listen to Mobileye’s keynote at CES. While other autonomous driving companies are eager to throw away their past products and move on in the new year, Mobileye is always thinking about what it takes to make autonomous driving a reality.

What is autonomous driving all about?

In 2021, even experienced media reporters were confused about autonomous driving. The field is home to many players, with divergent product forms and technical routes. The differences and distinctions between them have become increasingly blurred due to the uneven development of hardware and software.

Sometimes they take different paths to reach the same goal, and sometimes they create their own ways.

Through its “three main business pillars,” Mobileye offers a useful lens on the entire industry.

Amnon Shashua, Senior Vice President of Intel and CEO of Mobileye, said, “The first is a new category of emerging advanced driver assistance systems (ADAS), which we call L2.5.” This can be understood as the natural upgrade and extension of the current ADAS, evolving gradually from a single function to a “full-surround perceptual system.” We will see this in production cars this year.

Mobileye’s second business pillar is the widely known L4 Robotaxi, which has two defining traits: first, it must operate in restricted areas; second, its cost is around $150,000.

The third is a consumer-level L4 autonomous vehicle (AV), set to be released between 2024 and 2025. These products are still sold to end users and differ from the Robotaxi in that they can travel anywhere, and the self-driving system carries a suggested retail price of about $10,000.

If other brands are good at presenting concepts, Mobileye’s interest lies in making these concepts a reality. The core point lies in balancing cost and scalability, and architectural design is based on these two factors.

Mobileye believes that building good solutions in autonomous driving requires three pillars:

“The first is capability: the ability to support a wide range of operational design domains.” This requires the system to handle a wide range of Operational Design Domains (ODDs) on various types of roads, with a driving strategy similar to a human’s, “just like when we are driving ourselves.”

“The second is a very long mean time between failures,” one that is ten, a hundred, or even a thousand times longer than that of human drivers.

“The third is efficiency, and efficiency is cost. I mentioned earlier that we hope the cost of compute and sensors can be far below $5,000 while the system can still scale to travel anywhere.” These are the three pillars.

How can we push efficiency to the extreme?

Over the past year, we have watched this industry do a great deal of addition.

No sooner had we left behind the criticism of expensive Robotaxi sensors from a few years ago than we happily began adding all kinds of hardware to consumer cars. We are witnessing the moment when computing power has surpassed 1,000 TOPS, and LiDAR setups have grown to as many as four modules per vehicle.

This may be a solution, but not necessarily the best one, nor the most inclusive.

Mobileye’s core logic is to achieve a balance between efficiency and lean calculation through good software and hardware design.

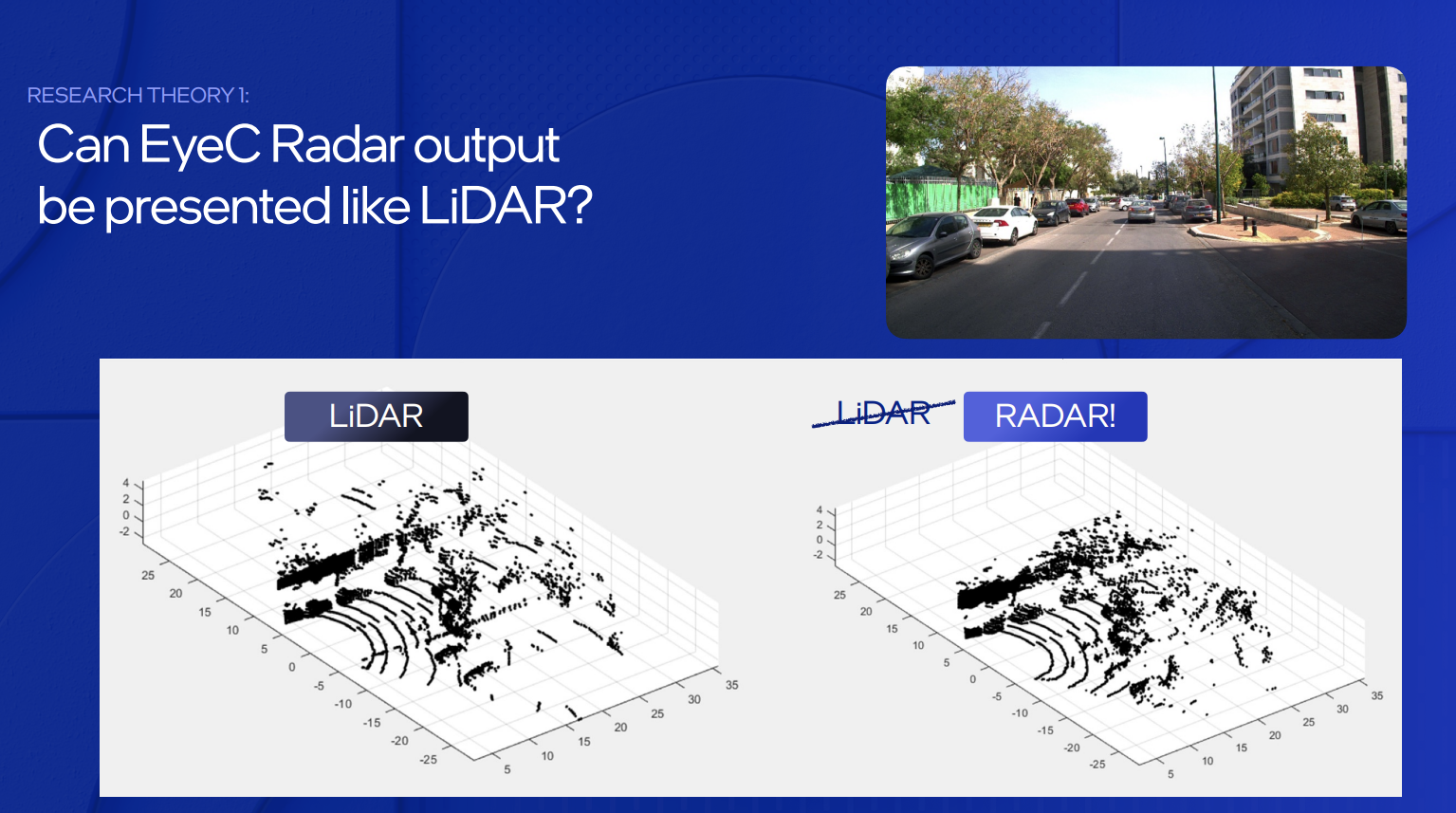

As for hardware, since the cost of traditional radar is only one-fifth to one-tenth of that of LiDAR, Mobileye wants to use “software-defined radar” to achieve the effect of LiDAR by using millimeter-wave radar with deep learning.

“We trained another neural network to map the radar output to the camera image, which is equivalent to combining traditional radar and camera, and then we trained it with the neural network.”

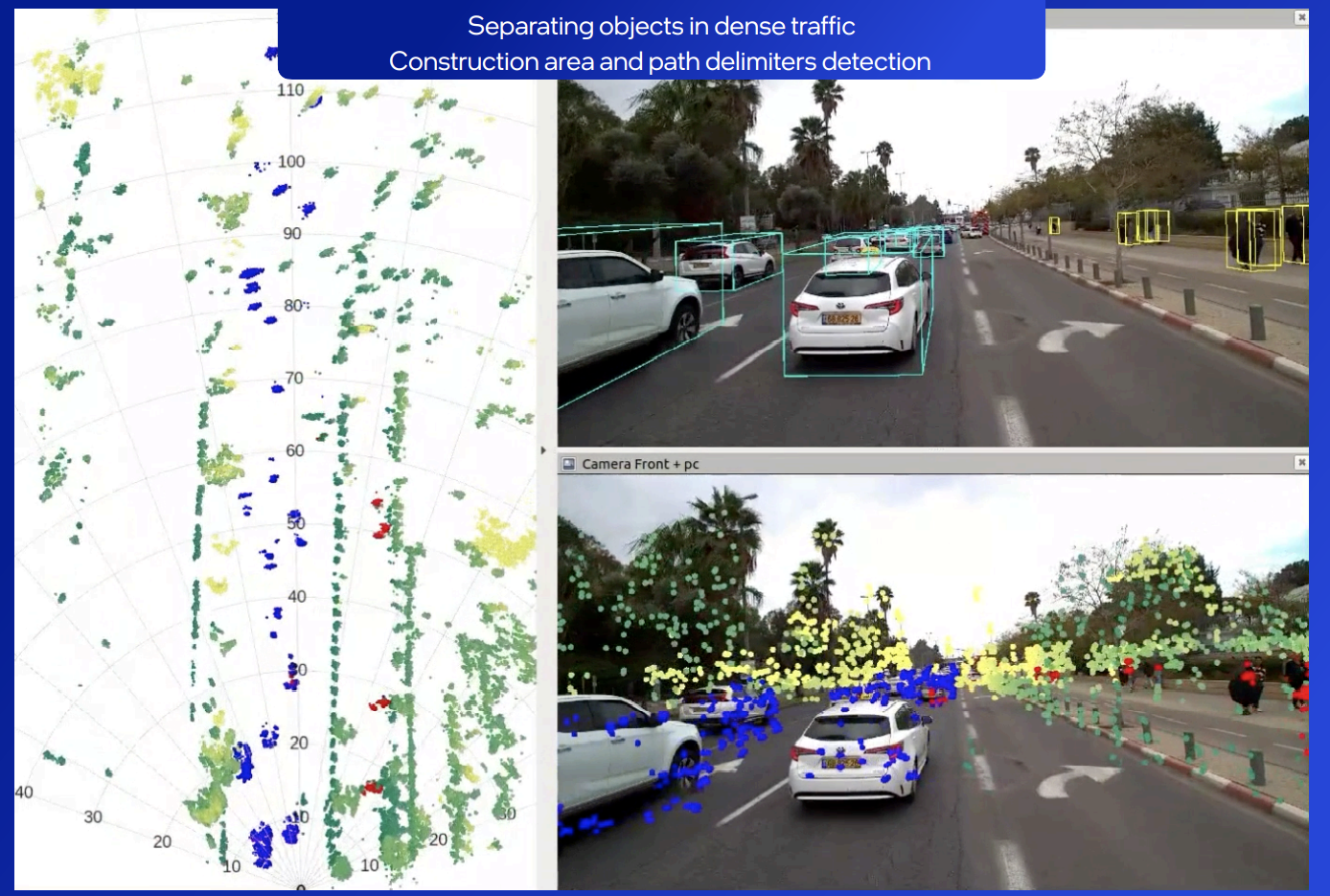

“If this is achieved, by 2025, the sensor configuration will have only one front LiDAR, but at the same time will achieve three redundancies, LiDAR, camera, and imaging radar.

The camera provides full coverage, the imaging radar provides full coverage, and LiDAR will not provide full coverage because the radar will be an independent system, just like today’s radar and LiDAR are independent systems.

In terms of cost, this will change all the rules of the game, and it will even add robustness, because there will now be three redundancies in the front, not just two.”
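A rough way to see why a third redundant sensing channel could add robustness is to multiply failure probabilities. The sketch below is only an illustration under strong assumptions: the per-hour failure probabilities are hypothetical numbers, the subsystems are assumed to fail independently, and the function names are invented here. None of this comes from Mobileye.

```python
import math

def joint_failure_probability(per_hour_failure_probs):
    """Probability that every subsystem fails in the same hour,
    ASSUMING the failures are statistically independent."""
    return math.prod(per_hour_failure_probs)

def mean_hours_between_failures(per_hour_failure_prob):
    """Mean time between failures for a constant per-hour failure probability."""
    return 1.0 / per_hour_failure_prob

# Hypothetical figure: each channel fails once per 1,000 hours on its own.
single = 1e-3
two_channels = joint_failure_probability([single, single])
three_channels = joint_failure_probability([single, single, single])

for label, p in [("two", two_channels), ("three", three_channels)]:
    print(f"{label} redundant channels: about {mean_hours_between_failures(p):.0e} hours between joint failures")
```

In practice sensor failures are rarely fully independent (bad weather can degrade several sensors at once), so the real gain would be smaller than this idealized product suggests.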

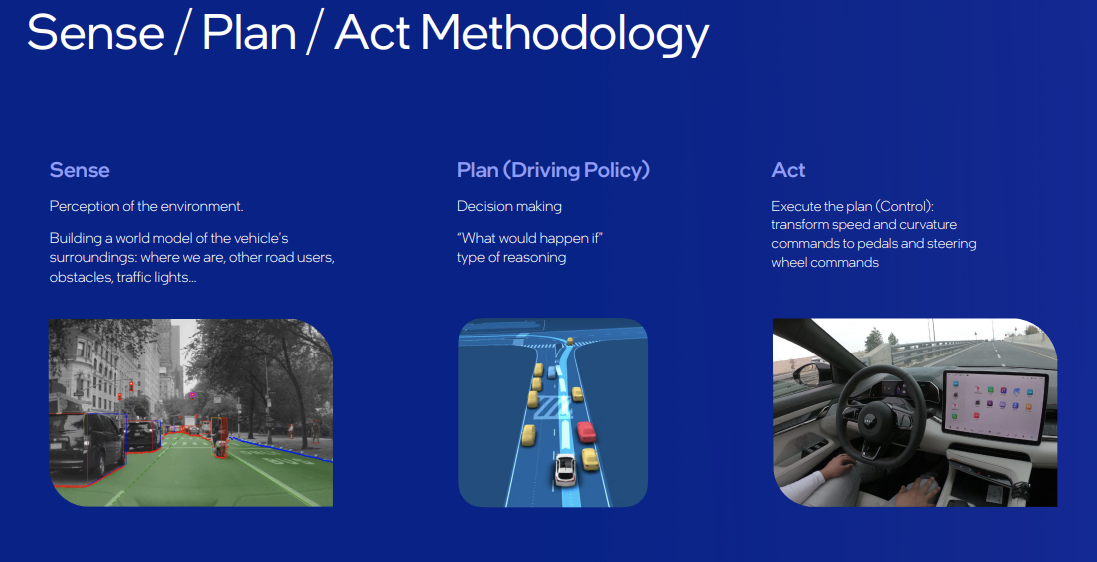

As for software, achieving “lean calculation” involves all cycles of perception, planning and execution.

“When considering future situations, the first step is to consider all participants, and the second step is to consider all possible actions of all participants, like an exponentially growing tree. This is the brute-force method, which requires very intensive computation but can eventually obtain a very good solution, and the key point is computation.”
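To get a feel for how quickly such a tree grows, here is a minimal counting sketch. It assumes every participant independently picks one of a fixed number of actions at each planning step. The function name and the sample numbers are hypothetical, chosen only for illustration.

```python
def joint_tree_size(num_agents: int, actions_per_agent: int, depth: int) -> int:
    """Count leaf scenarios when every agent picks one action at each step."""
    # Joint actions available at a single step: one choice per agent.
    branching = actions_per_agent ** num_agents
    # The tree multiplies by that branching factor at every planning step.
    return branching ** depth

# Hypothetical scene: 5 road users, 3 possible actions each.
for depth in (1, 2, 3, 4):
    print(depth, joint_tree_size(num_agents=5, actions_per_agent=3, depth=depth))
```

Even this small scene exceeds a billion scenarios within four steps, which is why exhaustive search is so compute-hungry.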

The neural network is no longer involved in the safety aspect but in the comfort aspect. Our goal is not to predict what other road users will do, but to detect their intentions, which may include letting others pass, merging, parking, or performing a U-turn. We have a long list of intentions.

Then, let’s go back to the chips that everyone cares about.

Professor Amnon Shashua makes an excellent argument that the key is not only computing power but also efficiency, which requires a deep understanding of how software and hardware interact: what the cores are, and which algorithms each core should support.

Following this line of thought, we can find the two core demands of chip value for OEMs.

Firstly, “What OEMs need is a scalable solution, which means it should be able to fit a low-end market scenario and can match mid-range and high-end markets by adding additional features and functions,” as said by Erez Dagan, Vice President of Mobileye Products and Strategy Execution at Intel.

Secondly, “We are also very frank that TOPS is a very inadequate measure of computing power. The computational model integrated into the EyeQ chip is very complicated and cannot be quantified by a single index.” He added, “The energy consumption required by computation is crucial for product economics. We know that the power consumption of Mobileye Drive, an AI-powered robotaxi running on EyeQ Ultra, will be 100 watts, which is unheard of in the world of electric vehicles,” and “We don’t want critical energy consumption to deplete battery life.”

This may be the deepest consideration behind Mobileye’s decision to set the computing power of its flagship autonomous driving chip, EyeQ Ultra, at 176 TOPS.

What about the Chinese market?

Somehow, in 2021, Chinese automakers seemed to run out of patience with Mobileye.

On January 11, the CEO of one of China’s new-force carmakers said on Weibo that “we stopped working with Mobileye at the end of 2020 because it could not meet the needs of our full-stack self-developed intelligent driving (the most important perception algorithm is a black box).”

During the interview session of the CES 2022 Mobileye China Media Sharing Conference that I attended, things were obviously quite heated.

However, it is obvious that Mobileye is actively promoting change through both technological and operational means.

“In the past two years, we have collaborated with numerous OEMs worldwide in a co-development model, which means that some of the computing power of EyeQ5 can be used to run technology from our partners’ R&D departments. This is rooted in Mobileye’s strategy, because EyeQ5 was designed for this purpose and is the first SoC designed for central computing across multiple sensors. At that time, we had already released an SDK for this SoC and worked with our partners to develop software technology for the computing module.”

And the recently released EyeQ6 family goes even further in terms of configurability: “On EyeQ6, we go further. EyeQ6H can support video playback and achieve visualization through GPU and ISP. We hope that these can meet the needs of OEM customization and we can cooperate with them. This means that we support an external software layer defined by our partners.”

Erez Dagan also talked about the importance of the Chinese team, believing that “a strong and important Chinese team is a very important step for Mobileye,” and “we have drawn personnel from Intel internally and deployed technical personnel from Israel to China, and REM will be used in China in a fully compliant and fully localized way. This is very important for Mobileye.”

In 2022, Mobileye will expand its presence in China to collaborate with more OEMs: “We have very strong business operations in China, and localized operations in China are key to further expanding our business there.”

Throughout the content of CES, Mobileye seems to be saying, “Don’t be impatient, everyone, everything has just begun.”

After all, “we have been working in the field of autonomous driving products for over 20 years,” and “we have more than 20 years of experience in integrating into consumer-level vehicles.”

The long-distance race has not yet reached its final stretch.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.