Author: Chris Zheng

On May 25, 2021, Tesla’s official blog announced that Autopilot is transitioning to Tesla Vision, a camera-based system.

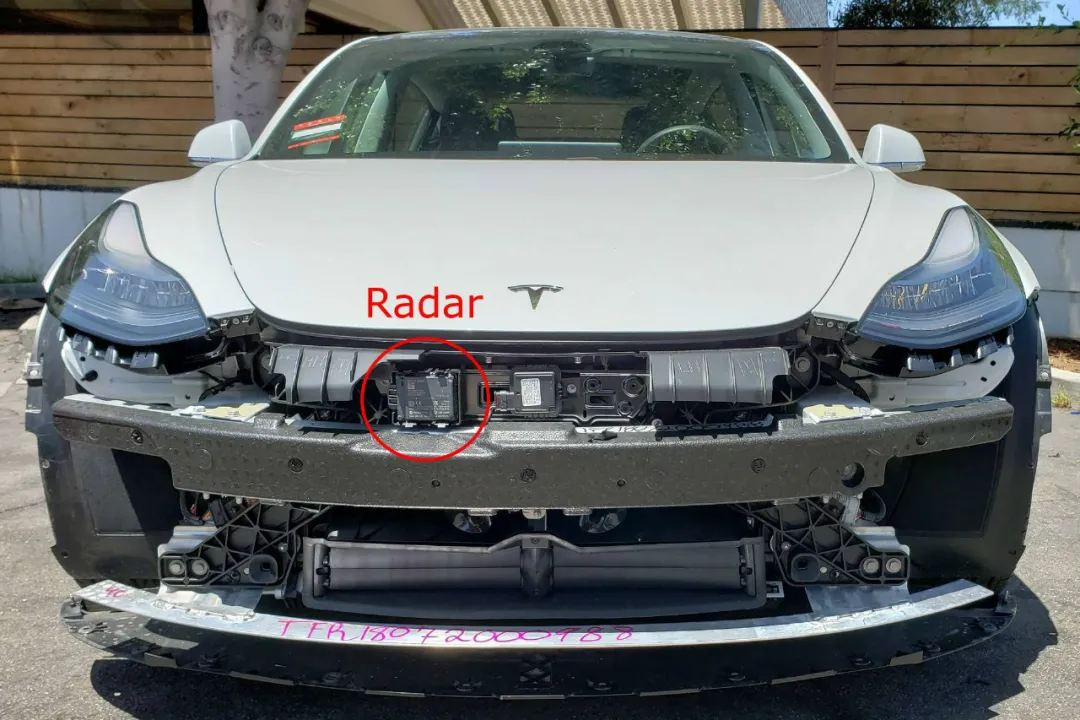

Starting in May 2021, Model 3 and Model Y vehicles built for the North American market will no longer be equipped with millimeter-wave radar. Instead, Autopilot, Full Self-Driving (FSD), and certain active safety features on these models will rely on Tesla’s camera vision and deep neural network processing.

For Continental, Tesla’s millimeter-wave radar supplier and a leading Tier 1 supplier, this is unwelcome news: at a unit price of about RMB 300 and sales of over 450,000 vehicles per year (2020 data), it loses, partway through, an order worth over RMB 100 million a year.
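Assuming one radar unit per vehicle, the two figures above give the annual order value:

```latex
\text{RMB } 300 \times 450{,}000 \ \text{vehicles/year} = \text{RMB } 135{,}000{,}000 \approx \text{RMB } 1.35 \times 10^{8} \ \text{per year}
```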

Removing millimeter-wave radar

Although Tesla clearly stated that computer vision and deep neural network processing would meet the perception needs of active safety, Autopilot, and FSD, other parties reacted the moment the post went up.

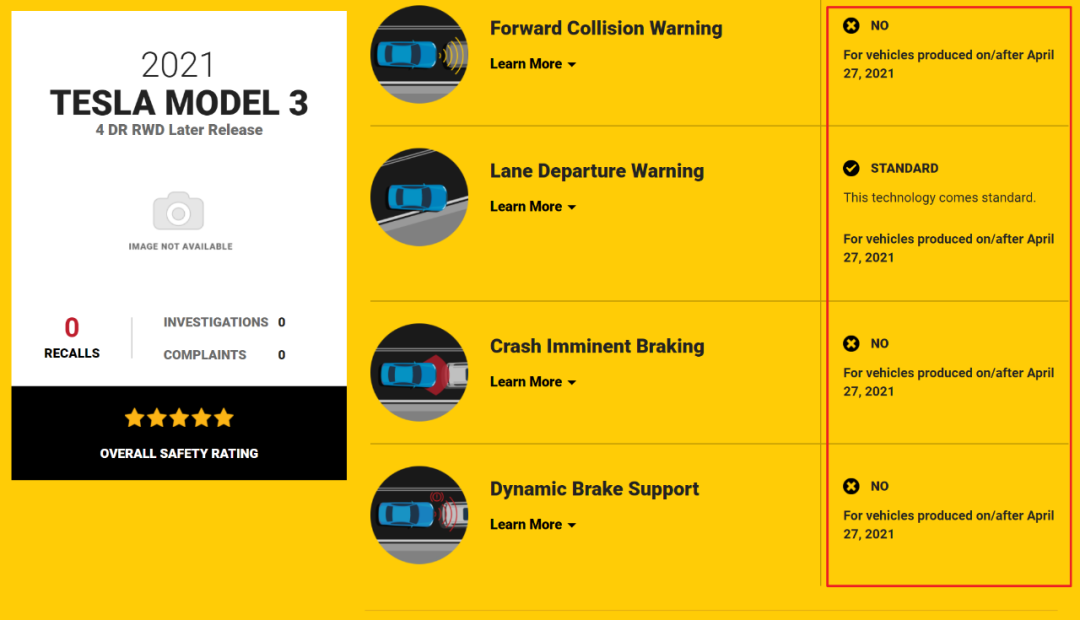

The National Highway Traffic Safety Administration (NHTSA) updated its active safety feature pages for the 2021 Model 3 and Model Y: Forward Collision Warning (FCW), Crash Imminent Braking (CIB), and Dynamic Brake Support (DBS) were all explicitly marked as unavailable on vehicles produced after April 27, 2021.

Meanwhile, Consumer Reports suspended the 2021 Model 3’s “Recommended” rating, and the Insurance Institute for Highway Safety (IIHS) revoked the Model 3’s highest safety rating, Top Safety Pick+.

In short, Tesla said: we removed the millimeter-wave radar, and the cameras now deliver what it used to. Everyone heard only the first half.

In my view, major consumer and regulatory safety organizations are now somewhat hypersensitive about Tesla. In fact, the history of Mobileye, the world’s largest supplier of visual perception systems, is largely a history of gradually removing radar from automotive active safety.

- In 2007, Mobileye’s active safety technology first entered the automotive industry.
- In 2010, Mobileye AEB, which fuses radar and camera, went into Volvo production models.
- In 2011, Mobileye’s vision-only Forward Collision Warning (FCW) went into mass production at BMW, GM, and Opel.
- In 2013, Mobileye’s vision-only vehicle and pedestrian Automatic Emergency Braking (AEB) went into mass production at BMW and Japanese brands.
- In 2013, Mobileye’s vision-only Adaptive Cruise Control (ACC) went into mass production at BMW.
- In 2015, Mobileye’s vision-only full-function AEB was adopted by multiple OEMs.

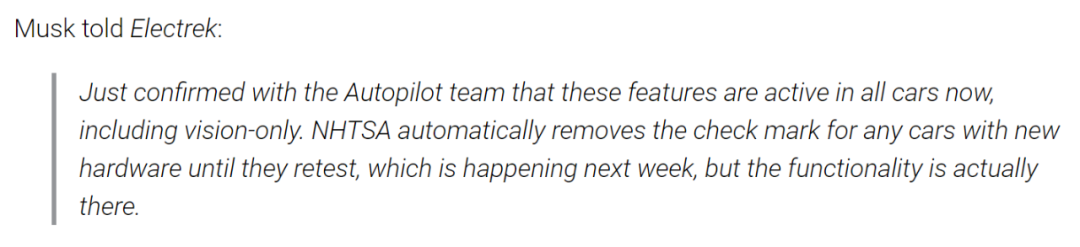

The situation escalated anyway, and Tesla CEO Elon Musk had to refute the reports via Electrek: all active safety features work on the new vehicles, NHTSA would retest them the following week, and the radar-less vehicles currently in production come with these features as standard.

Public doubts remain, however. Radar excels at measuring the distance and speed of obstacles, traditionally a weakness of cameras. How will Tesla make up for that?

Or: isn’t it better to have two sensors than one? Even if the camera can do what the radar does, wouldn’t the two together detect better?

Next, let’s discuss these issues.

Computer Vision + RNN > Radar?

We first need to understand the technical principles of radar and its role in autonomous driving.

Millimeter-wave radar emits electromagnetic signals and receives their reflections from targets, from which it derives the relative speed, relative distance, angle, and direction of motion of obstacles around the vehicle.
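As a rough illustration of the two basic radar measurements, here is a minimal sketch using the textbook relations: range from the echo’s round-trip time, and relative radial speed from the Doppler shift. It assumes an idealized single echo and a 77 GHz carrier (a common automotive radar band); real automotive radars typically use FMCW waveforms and FFT processing rather than timing a single pulse, and the function names are illustrative.

```python
# Speed of light in m/s
C = 299_792_458.0


def range_from_round_trip(delay_s: float) -> float:
    """Distance to a target from the round-trip time of its echo.
    The signal travels out and back, so the one-way distance is c * t / 2."""
    return C * delay_s / 2.0


def radial_speed_from_doppler(doppler_hz: float, carrier_hz: float = 77e9) -> float:
    """Relative radial speed from the Doppler shift of the echo: v = f_d * lambda / 2.
    A positive shift means the target is closing in."""
    wavelength_m = C / carrier_hz
    return doppler_hz * wavelength_m / 2.0


if __name__ == "__main__":
    # A 0.4 microsecond round trip corresponds to roughly 60 m.
    print(f"range: {range_from_round_trip(0.4e-6):.1f} m")
    # A shift of about 5.1 kHz at 77 GHz corresponds to roughly 10 m/s closing speed.
    print(f"closing speed: {radial_speed_from_doppler(5137.0):.2f} m/s")
```

Both quantities come straight out of the physics of the reflected wave, which is why radar has traditionally been the go-to sensor for distance and speed.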

By processing this information, a car can offer a range of active safety functions, such as Adaptive Cruise Control (ACC), Forward Collision Warning (FCW), Lane Change Assist (LCA), Stop & Go (S&G) following, and even Blind Spot Detection (BSD).

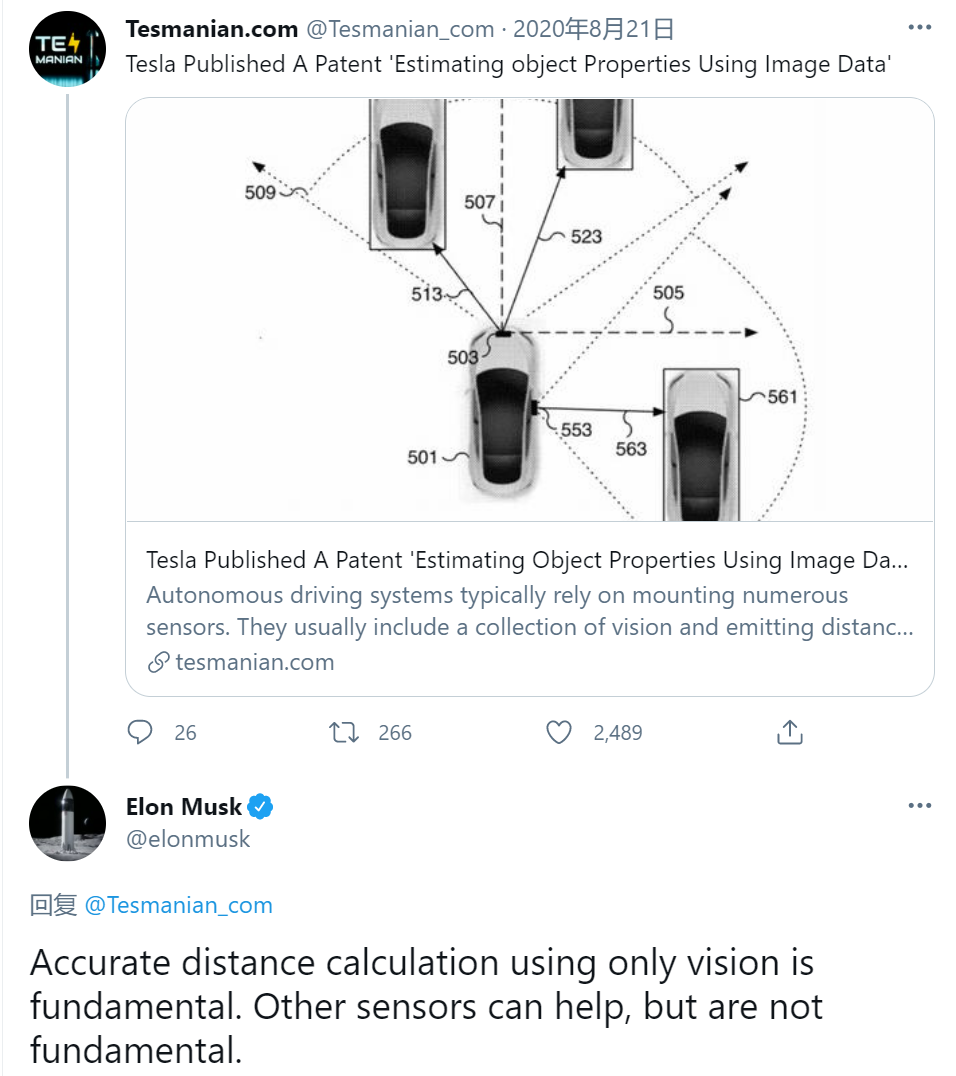

So how does Tesla use cameras to obtain the same information, such as the distance to the vehicle ahead?

On August 21, 2020, Elon Musk stated on Twitter that “accurate distance calculation through pure vision is fundamental, and other sensors can provide assistance, but that is not fundamental.” He was replying to a tweet introducing Tesla’s patent titled “Estimating Object Properties Using Image Data”.

On April 13, Tristan Rice, a Model 3 owner and a software engineer working on distributed AI and machine learning at Facebook, “hacked” into Autopilot’s firmware and revealed technical details of how Tesla is replacing radar with machine learning.

According to Tristan, Autopilot’s deep neural network has gained many new outputs. Beyond the existing xyz position outputs, it now produces many values traditionally supplied by radar, such as distance, velocity, and acceleration.

Can a deep neural network read speed and acceleration from a single static image? Definitely not.

Tesla has instead trained a highly accurate RNN that predicts obstacle speed and acceleration from time-series video at 15 frames per second.
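Why a single frame is not enough is easiest to see with the simplest non-learned approach: if some per-frame distance estimate exists, speed and acceleration only appear as differences across frames. The sketch below uses plain finite differences at 15 fps; it is a baseline for intuition only, not Tesla’s method, which per the source is a trained RNN. The function name and sample distances are illustrative.

```python
def finite_difference_kinematics(distances_m, fps=15.0):
    """Estimate speed and acceleration from a sequence of per-frame distances.

    speed[i]        ~ (d[i+1] - d[i]) / dt
    acceleration[i] ~ (speed[i+1] - speed[i]) / dt
    """
    if len(distances_m) < 3:
        raise ValueError("need at least 3 frames to estimate acceleration")
    dt = 1.0 / fps
    speeds = [(b - a) / dt for a, b in zip(distances_m, distances_m[1:])]
    accels = [(b - a) / dt for a, b in zip(speeds, speeds[1:])]
    return speeds, accels


# A gap to the car ahead that shrinks by 0.5 m every frame (15 fps):
speeds, accels = finite_difference_kinematics([30.0, 29.5, 29.0, 28.5])
print(speeds)  # each value is about -7.5 m/s: the gap is closing at a steady rate
print(accels)  # each value is about 0 m/s^2
```

Each differencing step divides per-frame noise by a small dt, so raw finite differences get noisy quickly; that is one motivation for a learned temporal model that can smooth over many frames.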

So what is an RNN? The key feature of an RNN is prediction. A recurrent neural network (RNN) processes a sequence one step at a time and feeds its own hidden state back in at each step. This “internal memory” lets it use what came earlier in a sequence to predict what comes next.

NVIDIA’s AI blog once gave a classic example: suppose a restaurant’s menu follows a fixed pattern, with hamburgers on Monday, tacos on Tuesday, pizza on Wednesday, sushi on Thursday, and pasta on Friday.

Given “sushi” as input and asked “what’s for Friday?”, the RNN outputs its prediction: pasta. It has learned the order, and since sushi was served on Thursday, pasta comes next.

Likewise, given the movement paths of pedestrians, vehicles, and other obstacles around the car, Autopilot’s RNN can predict their next trajectory, including position, speed, and acceleration.
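A recurrent network’s “memory” is simply a hidden state fed back at every step. The minimal sketch below is a scalar Elman-style RNN step with hand-picked, untrained weights (`w_x`, `w_h`, and `b` are illustrative assumptions); it shows only the recurrence itself, not Tesla’s network, which is far larger and trained on fleet data.

```python
import math


def rnn_step(x, h_prev, w_x=1.0, w_h=0.8, b=0.0):
    """One Elman-style step: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)."""
    return math.tanh(w_x * x + w_h * h_prev + b)


def run_rnn(inputs, h0=0.0):
    """Feed a sequence through the cell and return the hidden state after each step."""
    h = h0
    states = []
    for x in inputs:
        h = rnn_step(x, h)
        states.append(h)
    return states


# Same final input (0.0), different histories, different final states.
print(run_rnn([1.0, 0.0, 0.0])[-1])  # positive: the early 1.0 is still "remembered"
print(run_rnn([0.0, 0.0, 0.0])[-1])  # 0.0: nothing to remember
```

Because the output at each step depends on everything seen before, training such a network on sequences lets it learn patterns like the weekly menu, or how an obstacle’s motion tends to continue.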

In fact, for several months before the May 25 announcement, Tesla ran the RNN in parallel with the radar across its global fleet, improving the RNN’s accuracy by cross-checking its outputs against the radar’s ground-truth data.
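The cross-checking described above can be pictured as a comparison loop: the vision estimate runs silently next to the radar, and frames where the two disagree are collected for further training. The function name, the 2 m tolerance, and treating radar range as the reference are illustrative assumptions; the source does not describe Tesla’s actual metrics or triggers.

```python
from dataclasses import dataclass


@dataclass
class Comparison:
    mean_abs_error_m: float
    disagreements: list  # frame indices where the two sources differ by more than the tolerance


def cross_check(vision_ranges_m, radar_ranges_m, tolerance_m=2.0):
    """Compare per-frame vision range predictions against radar ranges used as reference."""
    if not vision_ranges_m or len(vision_ranges_m) != len(radar_ranges_m):
        raise ValueError("sequences must be non-empty and aligned frame by frame")
    errors = [abs(v - r) for v, r in zip(vision_ranges_m, radar_ranges_m)]
    flagged = [i for i, e in enumerate(errors) if e > tolerance_m]
    return Comparison(sum(errors) / len(errors), flagged)


result = cross_check([40.1, 38.0, 35.2], [40.0, 37.9, 31.0])
print(result.disagreements)  # [2]: only the last frame differs by more than 2 m
```

Frames that get flagged are exactly the hard cases worth feeding back into training, which is how a large fleet can keep improving the vision model.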

Tesla took a similar approach to improve its handling of cut-ins, a classic problem in Chinese traffic.

Andrej Karpathy, Tesla’s Senior Director of AI, revealed in an online talk at CVPR 2021 that Tesla has replaced traditional rule-based algorithms with deep neural networks for cut-in detection.

Specifically, Autopilot used to detect cut-ins with hardcoded rules: first recognize the lanes and track the vehicle ahead (as a bounding box), wait until its speed crossed the cut-in threshold, and only then act.
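To make the contrast concrete, the old style of logic can be sketched as a hand-written rule over a tracked vehicle. The field names, the lateral-speed criterion, and the 1.8 m and 0.5 m/s thresholds are hypothetical choices for illustration; the source only says a speed threshold was used.

```python
from dataclasses import dataclass


@dataclass
class TrackedVehicle:
    lateral_offset_m: float   # signed distance from the centre of the ego lane (left positive)
    lateral_speed_mps: float  # signed lateral speed (left positive)


def is_cut_in(v: TrackedVehicle, lane_half_width_m=1.8, speed_threshold_mps=0.5) -> bool:
    """Hand-coded heuristic: flag a cut-in when a vehicle in an adjacent lane moves
    toward the ego lane faster than a fixed threshold."""
    in_adjacent_lane = abs(v.lateral_offset_m) > lane_half_width_m
    # Moving toward the ego-lane centre means the lateral speed has the opposite sign to the offset.
    moving_inward = v.lateral_offset_m * v.lateral_speed_mps < 0
    return in_adjacent_lane and moving_inward and abs(v.lateral_speed_mps) > speed_threshold_mps


print(is_cut_in(TrackedVehicle(3.0, -0.8)))  # True: drifting into our lane quickly
print(is_cut_in(TrackedVehicle(3.0, -0.2)))  # False: drifting, but below the threshold
```

Every constant here is a tuning knob, and a slow, hesitant merge can sit just under the threshold until it is too late; replacing such rules with a learned predictor is meant to avoid that brittleness.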

Now Autopilot’s cut-in detection has dropped these rules and relies entirely on an RNN, trained on massive amounts of annotated data, to predict the behavior of the vehicle ahead. If the RNN predicts that the vehicle will cut in, Autopilot acts on it.

This is the technical principle behind the significant improvement in Tesla’s cut-in recognition over the past few months.

As mentioned earlier, Tesla’s patents explain in detail how it trains RNNs.

Tesla associates the ground-truth data from radar and from Luminar lidar (fitted to non-production test vehicles within Tesla) with the objects recognized by the RNN, so that it can accurately estimate object properties such as distance.

Tesla has developed tools that automate the collection and association of auxiliary-sensor data and visual data, eliminating the need for manual annotation. Once associated, the data can be turned into training data automatically and used to train RNNs that accurately predict object properties.
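The core idea, pairing auxiliary-sensor readings with camera detections so that distance labels are produced without human annotators, can be illustrated with a greedy nearest-neighbour association by bearing angle. The data layout, the bearing-only matching, and the 2° gate are assumptions for illustration; the source does not specify how Tesla’s tools perform the association.

```python
def auto_label(camera_objects, radar_returns, max_bearing_gap_deg=2.0):
    """Attach a distance label to each camera-detected object by matching it to the
    unused radar/lidar return with the closest bearing, within a gating threshold.

    camera_objects: list of (object_id, bearing_deg) from the vision system
    radar_returns:  list of (bearing_deg, range_m) from the auxiliary sensor
    Returns {object_id: range_m} for objects that found a match.
    """
    labels = {}
    used = set()
    for obj_id, cam_bearing in camera_objects:
        best = None  # (bearing gap, return index, range)
        for j, (ret_bearing, rng) in enumerate(radar_returns):
            if j in used:
                continue
            gap = abs(cam_bearing - ret_bearing)
            if gap <= max_bearing_gap_deg and (best is None or gap < best[0]):
                best = (gap, j, rng)
        if best is not None:
            used.add(best[1])
            labels[obj_id] = best[2]
    return labels


labels = auto_label(
    [("car_1", -5.0), ("car_2", 10.0), ("ped_1", 30.0)],
    [(9.5, 42.0), (-4.8, 18.5)],
)
print(labels)  # ped_1 has no nearby return, so it gets no label
```

Each matched pair becomes one training example (image crop in, measured distance out), so the labeling effort scales with the fleet rather than with a team of annotators.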

With a fleet of over one million vehicles worldwide, Tesla has been able to improve its RNN rapidly by training it on massive amounts of scene data.

Once the RNN matches the accuracy of the radar output, it gains a large advantage over millimeter-wave radar.

That is because Autopilot was equipped only with a forward-facing radar, which makes it hard to accurately predict pedestrians, cyclists, and motorcyclists converging on the vehicle from all directions in city driving. Even directly ahead, within the radar’s 45° detection range, the previous version of the Autopilot radar could not distinguish two obstacles at the same distance and speed.
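Why two obstacles at the same distance and speed can merge is easiest to see with a toy model: a radar that cannot separate targets by angle effectively reports one blob per occupied range/speed cell. The sketch assumes cell sizes of 0.5 m and 0.5 m/s (hypothetical values; range resolution follows the standard relation c / (2B), about 0.5 m for a 300 MHz sweep bandwidth) and ignores angle entirely, which exaggerates the problem for radars that do have some angular resolution.

```python
from collections import defaultdict


def radar_cells(targets, range_res_m=0.5, speed_res_mps=0.5):
    """Group targets by the (range bin, speed bin) cell they fall into.
    With no angular separation, each occupied cell shows up as a single blob.

    targets: list of (name, range_m, speed_mps)
    """
    cells = defaultdict(list)
    for name, rng, spd in targets:
        cell = (int(rng // range_res_m), int(spd // speed_res_mps))
        cells[cell].append(name)
    return dict(cells)


cells = radar_cells([("car", 40.0, 10.0), ("motorbike", 40.25, 10.25), ("truck", 40.0, 12.0)])
print(cells)  # the car and the motorbike share one cell: the radar sees one object there
```

A camera, by contrast, sees the car and the motorbike side by side in the image even when their distance and speed are identical.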

Autopilot’s eight cameras, by contrast, cover 360 degrees around the vehicle, and its BEV (bird’s-eye view) neural network can seamlessly predict the movement of multiple obstacles in any direction.
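Part of what makes a bird’s-eye-view representation useful is that detections from every camera end up in one shared top-down coordinate frame around the car. The sketch shows only that geometric step, with hypothetical camera names and mounting angles (fewer cameras than Tesla’s eight, and positional offsets ignored); a learned BEV network does far more than this hand-written transform.

```python
import math

# Hypothetical mounting yaw angles (degrees, counter-clockwise from the vehicle's forward axis).
CAMERA_YAW_DEG = {"front": 0.0, "left_side": 90.0, "right_side": -90.0, "rear": 180.0}


def to_vehicle_frame(camera, range_m, bearing_deg):
    """Convert a detection (range, bearing in the camera's own frame) into
    vehicle-frame x (forward) and y (left) coordinates on a top-down grid.
    Camera positional offsets from the vehicle origin are ignored for simplicity."""
    heading = math.radians(CAMERA_YAW_DEG[camera] + bearing_deg)
    return range_m * math.cos(heading), range_m * math.sin(heading)


# A pedestrian 4 m away, straight out from the left-side camera, lands at roughly (0, 4).
print(to_vehicle_frame("left_side", 4.0, 0.0))
```

Once every detection lives in the same frame, an object moving from one camera’s view into another’s can be tracked as one continuous trajectory instead of two unrelated detections.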

So why doesn’t Tesla keep the radar and use both sensors for double verification?

Elon Musk has explained his views on radar and cameras in detail:

At radar wavelengths, the real world looks like a strange ghost world: almost everything except metal is transparent.

When radar and vision disagree, which one do you trust? Vision has higher precision, so it is wiser to double down on improving vision than to bet on fusing the two sensors.
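The question of whether two sensors beat one has a textbook answer when both are unbiased and independent: inverse-variance weighting gives a fused estimate with lower variance than either sensor alone. The same sketch also shows the problem Musk points to: if one sensor is confidently wrong (a ghost return), the fusion cannot tell, and the result is pulled away from the truth unless separate logic decides which sensor to trust. The scenario (true distance 40 m) and all numbers are illustrative, not measured.

```python
def fuse(est_a, var_a, est_b, var_b):
    """Inverse-variance weighted fusion of two independent, unbiased estimates.
    Returns (fused estimate, fused variance)."""
    w_a = 1.0 / var_a
    w_b = 1.0 / var_b
    fused = (w_a * est_a + w_b * est_b) / (w_a + w_b)
    return fused, 1.0 / (w_a + w_b)


# Both sensors healthy: vision says 40.0 m (variance 1.0), radar says 40.4 m (variance 0.25).
print(fuse(40.0, 1.0, 40.4, 0.25))  # estimate between the two, variance below either sensor's

# Radar locks onto a ghost (e.g. an overhead sign) at 25 m yet still reports variance 0.25.
print(fuse(40.0, 1.0, 25.0, 0.25))  # estimate dragged to 28 m, far from the true 40 m
```

Fusion only helps while its assumptions hold; when the sensors disagree, the hard part is deciding which one has broken those assumptions.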

At bottom, a sensor is a bitstream. Cameras deliver several orders of magnitude more information (in bits per second) than radar or lidar. Radar would have to meaningfully improve the signal-to-noise ratio of the bitstream to be worth integrating.
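The bitstream argument can be checked with back-of-the-envelope arithmetic. The sketch compares raw camera streams with a radar that outputs a tracked-object list; every resolution, frame rate, object count, and message size in it is an illustrative assumption rather than a Tesla specification. Comparing raw pixels with a processed object list also flatters the camera, since raw radar data is much larger than its object list, so the real gap depends on what is counted.

```python
def camera_bits_per_second(width_px, height_px, bits_per_px, fps, count=1):
    """Raw data rate of `count` identical cameras."""
    return width_px * height_px * bits_per_px * fps * count


def object_list_bits_per_second(objects, bytes_per_object, rate_hz):
    """Data rate of a radar that outputs a list of tracked objects."""
    return objects * bytes_per_object * 8 * rate_hz


# All figures below are illustrative assumptions, not Tesla specifications.
camera_bps = camera_bits_per_second(1280, 960, 12, 36, count=8)
radar_bps = object_list_bits_per_second(objects=64, bytes_per_object=16, rate_hz=20)
print(f"ratio ~ {camera_bps / radar_bps:,.0f}x")  # ratio ~ 25,920x: about four orders of magnitude
```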

As visual processing capabilities improve, the performance of cameras will be far better than that of current radar.

The wording is subtle. Our previous article, “Tesla: I Endorse Lidar”, covered Elon Musk’s attitude toward millimeter-wave radar, and in this statement he did not “sentence” radar to death at Tesla.

“Radar must meaningfully increase the signal-to-noise ratio of the bit stream to make it worth integrating.” Will the upcoming Tesla Autopilot include imaging radar?

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.