Author: YanZhizhi Auto Annual Meeting

At the “First YanZhizhi Auto Annual Meeting,” Cheng Zengmu, former project manager of the Hyundai Motor R&D Center (China), gave a talk titled “Hyundai Motor Group’s Path to Sensor Fusion,” in which he introduced LiDAR-camera information fusion, target tracking solutions, and Kalman filtering methods. He stated that the two main directions currently in focus are domain controllers and Ethernet, and that Ethernet is a necessary technology for Level 4 (L4) and above.

Interpretation of Multi-Sensor Fusion Technology

Cheng Zengmu began by introducing multi-sensor fusion technology through the example of a specific vehicle model. The model is equipped with an ADAS driver assistance system that mainly includes adaptive cruise control and forward collision avoidance covering pedestrians, cyclists, vehicles, and intersections, meeting the latest regulatory standards for 2021. It also includes lane departure warning, panoramic blind-spot imaging, parking assistance, a rear panoramic camera, radar, and ultrasonic sensors.

The model uses a 5R1V12U ADAS configuration (five radars, one camera, and twelve ultrasonic sensors) to provide safety features that let the vehicle judge the driving environment on its own, anticipate accidents, and actively intervene. Safety features include forward collision-avoidance assist, safe exit assist, and rear cross-traffic collision-avoidance assist. Convenience features include adaptive cruise control, vehicle following, and highway driving assist.

He described multi-sensor fusion as an information-processing step that feeds subsequent decision-making and estimation: computer technology automatically analyzes and synthesizes multi-source information and data from multiple sensors according to defined rules. The approach resembles the way the human brain processes information. By combining sensors through multi-level, multi-space information complementarity and optimization, fusion produces a consistent interpretation of the perceived environment. During fusion, information must be recognized and combined in several ways to generate more useful information, which requires not only that the sensors work together but also that the quality of each data source be improved.

The basic principle consists of five steps: (1) collecting data from active and passive sensors; (2) processing the sensor outputs, which may be discrete or continuous function variables, by labeling them as vectors, extracting features, and applying a series of transformations; (3) performing pattern recognition on the feature vectors, including algorithms that assign a sensor-level label to each target; (4) grouping and associating the labeled data; and (5) applying fusion algorithms to synthesize the data so that each target is described consistently.

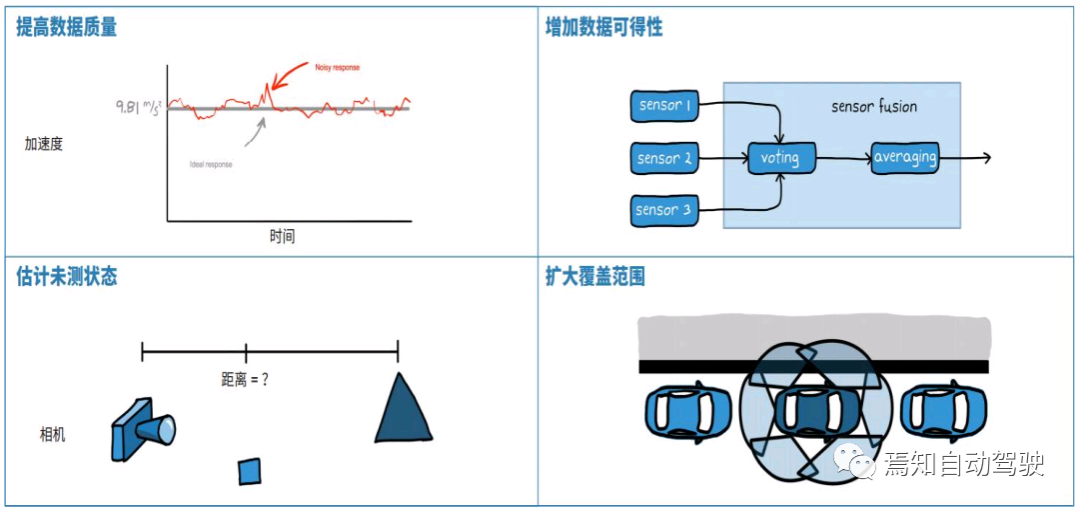

Compared with a single sensor, sensor fusion offers several advantages as a comprehensive information-processing process: better data quality, higher data availability, the ability to infer states that no single sensor measures, and wider coverage.

Sensor fusion mainly uses one of three processing architectures (centralized, distributed, and hybrid), each with its own advantages. In distributed processing, the raw information from each independent sensor is first processed locally, and the results are then sent to a fusion center, which optimizes them to obtain the final result. In centralized processing, each sensor's raw data is sent unprocessed to a central processor for fusion; this enables real-time fusion but involves a large volume of data and is difficult to implement. Hybrid processing is highly adaptable and combines the advantages of both.
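To make the distributed structure concrete, here is a minimal Python sketch of a fusion center that combines locally processed estimates. It assumes each sensor reports one scalar estimate with a known variance and that sensor errors are independent, so inverse-variance weighting applies; the class, the function, and the radar and camera values are hypothetical illustrations, not part of the system described in the talk.

```python
from dataclasses import dataclass


@dataclass
class LocalEstimate:
    """A sensor's locally processed estimate of one quantity (e.g. range in m)."""
    value: float
    variance: float


def fuse_estimates(estimates):
    """Fusion-center step of a distributed architecture: combine local
    estimates by inverse-variance weighting (assumes independent errors)."""
    weights = [1.0 / e.variance for e in estimates]
    total = sum(weights)
    value = sum(w * e.value for w, e in zip(weights, estimates)) / total
    return LocalEstimate(value=value, variance=1.0 / total)


# Hypothetical local results from two sensors observing the same object.
radar = LocalEstimate(value=25.4, variance=0.25)
camera = LocalEstimate(value=24.8, variance=1.0)
fused = fuse_estimates([radar, camera])
print(f"{fused.value:.2f} m, variance {fused.variance:.2f}")
```

The fused variance is smaller than either input variance, which is one concrete sense in which fusion improves data quality. A centralized design would instead ship every raw sample to the center and fuse there.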

Fusion of LiDAR and Camera Information

Cheng Zengmu then turned to joint target detection using fused LiDAR and camera information. Before targets can be identified from the fused data, two problems must be solved. The first is time synchronization: different sensors acquire and transmit data at different frequencies, so data collected at different moments must be aligned on a common time axis. The second is spatial alignment of the data from the multiple sensors. Time synchronization is currently done in ROS: the node subscribes to the topics of the sensors to be fused, a synchronization module receives the data from all of them together and invokes a callback with the synchronized result, and the variables extracted in that callback are the time-aligned data.
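ROS provides this pattern in its `message_filters` package (for example `ApproximateTimeSynchronizer`). As a dependency-free illustration of the idea only, the hypothetical sketch below pairs each LiDAR sweep with the nearest camera frame within a tolerance (`slop`); it is a simplified stand-in, not the ROS algorithm, and the timestamps are made up.

```python
import bisect


def synchronize(lidar_stamps, camera_stamps, slop):
    """Pair each LiDAR sweep with the nearest unused camera frame in time.

    Both timestamp lists are in seconds and sorted ascending. Pairs further
    apart than `slop` seconds are dropped; each camera frame is used once."""
    pairs = []
    used = set()
    for t_lidar in lidar_stamps:
        i = bisect.bisect_left(camera_stamps, t_lidar)
        # Consider the camera frames just before and just after t_lidar.
        candidates = [j for j in (i - 1, i)
                      if 0 <= j < len(camera_stamps) and j not in used]
        if not candidates:
            continue
        j = min(candidates, key=lambda k: abs(camera_stamps[k] - t_lidar))
        if abs(camera_stamps[j] - t_lidar) <= slop:
            used.add(j)
            pairs.append((t_lidar, camera_stamps[j]))
    return pairs


# Hypothetical timestamps: a 10 Hz LiDAR and a roughly 30 Hz camera.
lidar = [0.00, 0.10, 0.20, 0.30]
camera = [0.005, 0.038, 0.072, 0.105, 0.138, 0.172, 0.205, 0.238, 0.272]
print(synchronize(lidar, camera, slop=0.01))
```

The last LiDAR sweep has no camera frame within 10 ms, so it is dropped rather than fused with stale image data.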

Spatial calibration mainly involves projecting the three-dimensional point cloud into two dimensions: the point cloud is first converted into a bird's-eye view and then into a two-dimensional perspective view, a region of interest (ROI) is selected, and the filtered points are projected onto their pixel positions to build an image array, after which the LiDAR and camera data are aligned. Baidu Apollo's open-source calibration method and Autoware currently perform well.
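The core of spatial alignment is mapping each LiDAR point onto a pixel once the extrinsic (rotation `R`, translation `t`) and intrinsic (`fx`, `fy`, `cx`, `cy`) parameters are known. The sketch below assumes an ideal pinhole camera without lens distortion and a hypothetical calibration; it does not reproduce the Apollo or Autoware tools, whose job is to estimate these parameters.

```python
def project_point(p_lidar, R, t, fx, fy, cx, cy):
    """Project a 3D LiDAR point to pixel coordinates (pinhole model).

    R (3x3 nested lists) and t (3-vector) are the LiDAR-to-camera
    extrinsics; fx, fy, cx, cy are the camera intrinsics. Returns None
    for points at or behind the camera plane."""
    # Rigid transform into the camera frame: p_cam = R * p_lidar + t
    x, y, z = (sum(R[r][c] * p_lidar[c] for c in range(3)) + t[r]
               for r in range(3))
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


def points_in_image(points, R, t, fx, fy, cx, cy, width, height):
    """Keep (point, pixel) pairs whose projection lands inside the image."""
    out = []
    for p in points:
        uv = project_point(p, R, t, fx, fy, cx, cy)
        if uv is not None and 0 <= uv[0] < width and 0 <= uv[1] < height:
            out.append((p, uv))
    return out


# Hypothetical calibration: LiDAR x-forward/y-left/z-up axes mapped to
# camera x-right/y-down/z-forward axes, with no translation.
R = [[0.0, -1.0, 0.0], [0.0, 0.0, -1.0], [1.0, 0.0, 0.0]]
t = [0.0, 0.0, 0.0]
cloud = [(10.0, 1.0, -0.5), (-5.0, 0.0, 0.0), (2.0, 8.0, 0.0)]
print(points_in_image(cloud, R, t, 1000.0, 1000.0, 640.0, 360.0, 1280, 720))
```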

Fusing LiDAR and camera data yields an RGB-D image, in which the two-dimensional detection results and the depth information can be used together to detect objects in the environment. The detection pipeline has three parts:

The first part is the frustum proposal, which uses computer-vision techniques to detect two-dimensional bounding boxes of objects in the image and to classify each obstacle, for example as a person or a vehicle.

The second part combines each 2D bounding box with the RGB-D information to extract the LiDAR points that fall inside the corresponding frustum.

The third part is 3D instance segmentation, applied to the points extracted by the frustum proposal module. The frustum usually contains more than the target itself, for example background points around a person. To deal with this, a point cloud backbone network (such as PointNet) or a similar point-based architecture is used to further segment the point cloud and obtain the object's information. Finally, a T-Net is used to estimate the object's three-dimensional size so that its features can be extracted accurately.

A certain brand's joint LiDAR-camera detection scheme is used in the automotive industry. Images are processed with deep learning, and the commonly used detectors are the open-source YOLOv3 or Faster R-CNN. Point cloud clustering is mainly used for pedestrian detection; for vehicle detection, the central processor classifies the recognized objects, and pedestrians are given the label “person”. Compared with LiDAR alone, the fusion approach has two advantages. First, object classification is more accurate and covers more classes. Second, LiDAR point clouds are sparse in some environments: a pedestrian on a walkway 20 to 30 meters away typically returns only two or three points, which makes classification from LiDAR alone difficult, and extracting candidate objects from the image compensates for this. Tesla is an example of this approach, relying confidently on cameras alone for object detection and classification. Because the fused detections are more accurate, they provide higher-quality inputs to the downstream multi-object tracking module.
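The frustum step described earlier can be sketched as selecting the LiDAR points whose projection falls inside a 2D detection box. This minimal example assumes the points are already in the camera frame and uses a simple median-depth filter as a stand-in for the learned 3D instance segmentation network (it is not PointNet or T-Net); all values are hypothetical.

```python
from statistics import median


def frustum_points(points_cam, box, fx, fy, cx, cy):
    """Return camera-frame points whose projection lies inside a 2D box.

    box = (u_min, v_min, u_max, v_max) in pixels, from the image detector."""
    u_min, v_min, u_max, v_max = box
    selected = []
    for x, y, z in points_cam:
        if z <= 0:
            continue  # behind the camera
        u, v = fx * x / z + cx, fy * y / z + cy
        if u_min <= u <= u_max and v_min <= v <= v_max:
            selected.append((x, y, z))
    return selected


def crude_segment(points, depth_tol=1.0):
    """Heuristic stand-in for 3D instance segmentation: keep points whose
    depth is within depth_tol metres of the median depth in the frustum."""
    if not points:
        return []
    d = median(p[2] for p in points)
    return [p for p in points if abs(p[2] - d) <= depth_tol]


points = [(0.0, 0.5, 20.0), (0.2, 0.0, 20.3), (-0.3, 1.0, 19.8),  # pedestrian
          (0.5, 0.2, 35.0),                                      # background wall
          (5.0, 0.0, 20.0), (0.0, 0.0, -3.0)]                    # outside / behind
in_frustum = frustum_points(points, (600, 300, 700, 500), 1000.0, 1000.0, 640.0, 360.0)
obj = crude_segment(in_frustum)
centroid = tuple(sum(c) / len(obj) for c in zip(*obj))
print(len(in_frustum), len(obj), centroid)
```

The background point survives the frustum test but not the segmentation step, which is the problem the learned segmentation network solves far more robustly.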

Multi-Object Tracking

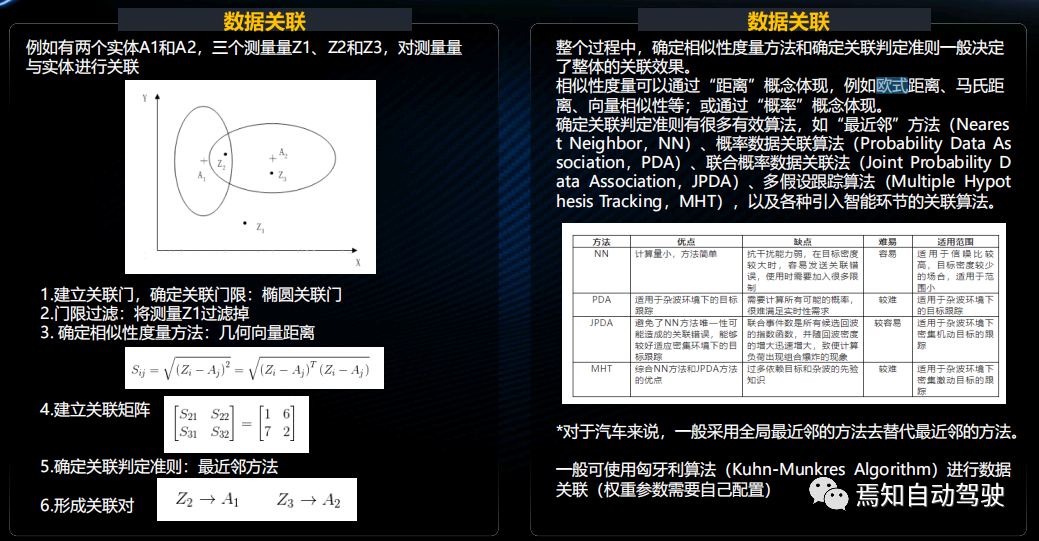

Cheng Zengmu explained that multi-object tracking mainly covers track initiation, track termination, and track maintenance. The measurement data it processes are the output of multi-sensor information fusion, and data association and track maintenance are the two most important problems in multi-object tracking. Data association generally proceeds in several steps: setting up association gates, determining the association threshold, filtering measurements by the threshold, choosing a similarity measure between targets, building an association matrix, and applying a decision criterion to form the final associations.

Because data association can be hard to grasp, consider a simple example. Suppose there are two tracked targets, A1 and A2, and three measurements, Z1, Z2, and Z3, that need to be associated. The first step is to set up association gates and determine the association threshold; here the gates are two ellipses, one around each target. The second step is filtering: Z1 lies outside both gates, so it is discarded. The third step is choosing a similarity measure, for example a geometric one such as Euclidean distance. The fourth step is building the association matrix, after which an association decision criterion is applied to form association pairs. In the result, Z2 is associated with A1 and Z3 with A2.
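The steps of the A1/A2, Z1 to Z3 example can be sketched in Python as follows. The coordinates, the gate semi-axes `a` and `b`, and the axis-aligned elliptical gate are assumptions chosen to reproduce the outcome described above: Z1 is gated out, Z2 pairs with A1, and Z3 pairs with A2.

```python
import math


def in_gate(track, meas, a, b):
    """Axis-aligned elliptical gate with semi-axes a (x) and b (y)."""
    dx, dy = meas[0] - track[0], meas[1] - track[1]
    return (dx / a) ** 2 + (dy / b) ** 2 <= 1.0


def association_matrix(tracks, measurements, a, b):
    """Euclidean distance for gated pairs; math.inf marks pairs outside the gate."""
    return {
        t_name: {
            z_name: math.dist(t, z) if in_gate(t, z, a, b) else math.inf
            for z_name, z in measurements.items()
        }
        for t_name, t in tracks.items()
    }


tracks = {"A1": (0.0, 0.0), "A2": (10.0, 0.0)}
measurements = {"Z1": (5.0, 5.0), "Z2": (1.0, 0.5), "Z3": (9.0, -0.4)}
matrix = association_matrix(tracks, measurements, a=3.0, b=1.5)

# Filtering: drop measurements that fall outside every gate (Z1 here).
gated = [z for z in measurements if any(row[z] < math.inf for row in matrix.values())]
# Decision criterion: each track takes its closest gated measurement.
pairs = {t: min(row, key=row.get) for t, row in matrix.items()
         if min(row.values()) < math.inf}
print(gated, pairs)
```

Letting each track simply take its nearest gated measurement is the plain nearest neighbor rule; it can assign one measurement to two tracks when their gates overlap.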

In data association, the choice of similarity measure and decision criterion largely determines the overall association performance. Similarity can be expressed as a distance, such as the Euclidean or Mahalanobis distance, or as a probability when it is treated as vector similarity. Several decision criteria exist, each with its own advantages, disadvantages, and range of application. The automotive industry generally uses the global nearest neighbor method instead of the nearest neighbor method, and in both industry and academia the best method currently available is data association with the Hungarian algorithm.
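The difference between nearest neighbor and global nearest neighbor shows up when two tracks compete for the same measurement. The sketch below assumes a diagonal covariance for the Mahalanobis distance and solves the one-to-one assignment by brute force over permutations, which is only practical for a handful of objects; the Hungarian algorithm finds the same optimal assignment in polynomial time, and SciPy's `scipy.optimize.linear_sum_assignment` solves this assignment problem. The positions are hypothetical.

```python
from itertools import permutations


def mahalanobis_sq(x, mean, variances):
    """Squared Mahalanobis distance, assuming a diagonal covariance."""
    return sum((xi - mi) ** 2 / v for xi, mi, v in zip(x, mean, variances))


def global_nearest_neighbor(tracks, measurements, variances):
    """Brute-force GNN: try every one-to-one assignment of tracks to
    measurements and keep the one with the smallest total cost.
    Assumes len(tracks) <= len(measurements); exponential, so small inputs only."""
    cost = [[mahalanobis_sq(z, t, variances) for z in measurements] for t in tracks]
    best_total, best_assignment = float("inf"), None
    for perm in permutations(range(len(measurements)), len(tracks)):
        total = sum(cost[i][j] for i, j in enumerate(perm))
        if total < best_total:
            best_total, best_assignment = total, perm
    return best_assignment, best_total


tracks = [(0.0, 0.0), (2.0, 0.0)]
measurements = [(1.0, 0.0), (3.5, 0.0)]
print(global_nearest_neighbor(tracks, measurements, variances=(1.0, 1.0)))
```

Here both tracks are equally close to the first measurement, so a per-track nearest neighbor rule would claim it twice, while the global assignment gives it to track 0 and the second measurement to track 1.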

For track maintenance, once the data association module has matched the current perception results with the target tracks from the previous frame, the matching results are passed to the track maintenance module, which sits in the vehicle's perception layer. This module's main purpose is to track targets continuously and to predict where each target is likely to appear next, so that targets are followed without false tracks or lost tracks.
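Track maintenance is often implemented as a small lifecycle per track: new tracks start as tentative, are confirmed after repeated matches, and are deleted after repeated misses. The thresholds `CONFIRM_HITS` and `DELETE_MISSES` and the state names below are hypothetical; the talk does not specify the scheme used.

```python
from dataclasses import dataclass

CONFIRM_HITS = 3   # hypothetical: matches needed to confirm a new track
DELETE_MISSES = 2  # hypothetical: consecutive misses before deleting a track


@dataclass
class Track:
    track_id: int
    state: str = "tentative"  # lifecycle: tentative -> confirmed -> deleted
    hits: int = 0
    misses: int = 0

    def update(self, matched: bool) -> None:
        """Apply one frame's data-association result to this track."""
        if self.state == "deleted":
            return
        if matched:
            self.hits += 1
            self.misses = 0
            if self.state == "tentative" and self.hits >= CONFIRM_HITS:
                self.state = "confirmed"
        else:
            self.misses += 1
            # A tentative track that misses is treated as a likely false alarm.
            if self.state == "tentative" or self.misses >= DELETE_MISSES:
                self.state = "deleted"


track = Track(track_id=7)
states = []
for matched in [True, True, True, False, True, False, False]:
    track.update(matched)
    states.append(track.state)
print(states)
```

A single missed frame does not delete a confirmed track, which avoids losing a target during a brief occlusion, while two consecutive misses do.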

Tracking algorithms fall into two main categories: single-model and multi-model algorithms. A single-model algorithm uses one motion model at a time, whereas a multi-model algorithm uses several models to represent the motion modes a target may exhibit in the future. Each model has its own filter, and the overall estimate is a weighted combination of the individual filters' estimates. The most commonly used filter is the Kalman filter.
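As a minimal single-model example, the sketch below is a one-dimensional constant-velocity Kalman filter written without external libraries. The motion model, the noise parameters `q` and `r`, and the noise-free test trajectory are assumptions for illustration; a production tracker would use a multi-dimensional state and a matrix library.

```python
class ConstantVelocityKF:
    """Minimal 1D Kalman filter with state [position, velocity].

    Assumes a constant-velocity model driven by white acceleration noise
    (intensity q) and position-only measurements with variance r."""

    def __init__(self, p0, v0, var0, q, r):
        self.x = [p0, v0]
        self.P = [[var0, 0.0], [0.0, var0]]
        self.q, self.r = q, r

    def predict(self, dt):
        p, v = self.x
        P, q = self.P, self.q
        self.x = [p + dt * v, v]
        # P <- F P F^T + Q, with F = [[1, dt], [0, 1]]
        p00 = P[0][0] + dt * (P[0][1] + P[1][0]) + dt**2 * P[1][1] + q * dt**4 / 4
        p01 = P[0][1] + dt * P[1][1] + q * dt**3 / 2
        p10 = P[1][0] + dt * P[1][1] + q * dt**3 / 2
        p11 = P[1][1] + q * dt**2
        self.P = [[p00, p01], [p10, p11]]

    def update(self, z):
        P = self.P
        y = z - self.x[0]          # innovation, with H = [1, 0]
        s = P[0][0] + self.r       # innovation variance
        k0, k1 = P[0][0] / s, P[1][0] / s
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # P <- (I - K H) P
        self.P = [[(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
                  [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]


kf = ConstantVelocityKF(p0=0.0, v0=0.0, var0=10.0, q=0.1, r=0.01)
dt = 0.1
for k in range(1, 51):
    kf.predict(dt)
    kf.update(2.0 * dt * k)  # noise-free target moving at 2 m/s
print([round(s, 3) for s in kf.x])
```

With noise-free measurements of a target moving at 2 m/s, the velocity estimate, which is never measured directly, should converge close to 2 m/s.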

Currently, the best method for autonomous driving is the Unscented Kalman Filter (UKF). It overcomes some limitations of the traditional Kalman filter algorithms and extends the Kalman filter further. The Extended Kalman Filter (EKF) mainly addresses nonlinear scenarios, but it has a serious drawback: time delay. Applications with very strict timeliness requirements, such as intelligent connected vehicles and autonomous driving, must solve the time-delay problem as well as handle nonlinearity, and the unscented Kalman filter addresses both problems well.
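The step that distinguishes the UKF from the EKF is the unscented transform: instead of linearizing the nonlinear model with a Jacobian, it pushes a few deterministically chosen sigma points through the model and recomputes the mean and variance. The one-dimensional sketch below assumes a Gaussian input and the common choice `kappa = 2` (so that n + kappa = 3); it illustrates the transform only, not a complete UKF.

```python
import math


def unscented_transform_1d(mean, var, f, kappa=2.0):
    """Propagate a scalar Gaussian N(mean, var) through a nonlinear f using
    2n + 1 = 3 sigma points (n = 1, lambda = kappa)."""
    n = 1
    spread = math.sqrt((n + kappa) * var)
    sigma_points = [mean, mean + spread, mean - spread]
    weights = [kappa / (n + kappa)] + [1.0 / (2 * (n + kappa))] * 2
    ys = [f(x) for x in sigma_points]
    y_mean = sum(w * y for w, y in zip(weights, ys))
    y_var = sum(w * (y - y_mean) ** 2 for w, y in zip(weights, ys))
    return y_mean, y_var


def square(x):
    return x * x


ukf_mean, ukf_var = unscented_transform_1d(1.0, 0.5, square)
# EKF-style first-order linearization: mean f(m), variance f'(m)^2 * var
ekf_mean, ekf_var = square(1.0), (2 * 1.0) ** 2 * 0.5
print(ukf_mean, ukf_var, ekf_mean, ekf_var)
```

For f(x) = x² with x ~ N(1, 0.5), the true output mean and variance are 1.5 and 2.5; the unscented transform recovers both (up to floating-point rounding), while first-order linearization gives 1.0 and 2.0.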

For modeling, a second-order motion model is generally used; of the two common types, CTRA (constant turn rate and acceleration) is used here as the tracking model. The difference lies in how the system handles noise: the extended Kalman filter models linear acceleration, whereas here angular acceleration is predicted as well. In the UKF, the noise can be represented through the state transition, and this process achieves better tracking performance.
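For reference, the CTRA model assumes a constant turn rate ω and constant longitudinal acceleration a over each prediction step. The sketch below gives the standard closed-form, noise-free state prediction obtained by integrating that motion, falling back to straight-line motion when ω is near zero; in a UKF it would serve as the process model applied to each sigma point, with process noise handled separately. The state layout and the `eps` threshold are assumptions.

```python
import math
from typing import NamedTuple


class CTRAState(NamedTuple):
    x: float         # position (m)
    y: float         # position (m)
    v: float         # speed along the heading (m/s)
    yaw: float       # heading (rad)
    yaw_rate: float  # turn rate omega (rad/s), constant over the step
    a: float         # longitudinal acceleration (m/s^2), constant over the step


def ctra_predict(s: CTRAState, dt: float, eps: float = 1e-6) -> CTRAState:
    """Noise-free CTRA state prediction over dt seconds (closed form)."""
    w, th = s.yaw_rate, s.yaw
    th_new = th + w * dt
    if abs(w) < eps:
        # Straight-line limit: constant acceleration along the current heading.
        d = s.v * dt + 0.5 * s.a * dt**2
        x, y = s.x + d * math.cos(th), s.y + d * math.sin(th)
    else:
        vw = s.v * w
        x = s.x + ((vw + s.a * w * dt) * math.sin(th_new) + s.a * math.cos(th_new)
                   - vw * math.sin(th) - s.a * math.cos(th)) / w**2
        y = s.y + ((-vw - s.a * w * dt) * math.cos(th_new) + s.a * math.sin(th_new)
                   + vw * math.cos(th) - s.a * math.sin(th)) / w**2
    return CTRAState(x, y, s.v + s.a * dt, th_new, w, s.a)


# Quarter turn: v = 1 m/s, omega = pi/2 rad/s, no acceleration, 1 s step.
print(ctra_predict(CTRAState(0.0, 0.0, 1.0, 0.0, math.pi / 2, 0.0), 1.0))
```

With v = 1 m/s, ω = π/2 rad/s, and no acceleration, one second of prediction traces a quarter circle of radius 2/π m, ending at (2/π, 2/π) with heading π/2.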

L4-Level Nexo

Cheng Zengmu also showcased Nexo, Hyundai Motor Group's hydrogen fuel cell L4-level car. He said the car was equipped with various sensors for driving from Seoul to Busan, and that its design includes a button that directly starts autonomous driving. It recognizes objects with cameras. In tunnels, where GPS is of little use, forward speed can still be measured with heading compensation. Overpasses and interchanges are currently relatively difficult to handle because the roads are stacked vertically. Even in extreme situations with very high road curvature, pedestrian recognition, traffic recognition at three-way intersections, and some traffic sign recognition can currently be achieved. These are also basic L4 functions, which include navigating roundabouts.

Technical Outlook: Domain Controller

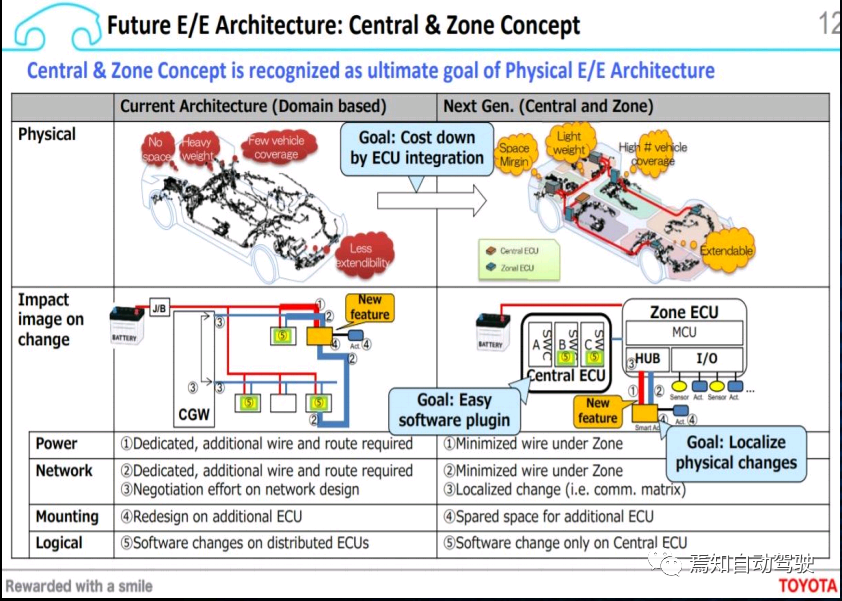

Cheng Zengmu concluded with the outlook for future technology, which has two main directions: domain controllers and Ethernet. At L4, in-vehicle communication must run over Ethernet. Using Toyota's domain controller architecture as an example, he explained that the original purpose of a domain controller was not to reduce the number of ECUs in the vehicle but to integrate data and increase computing power.

Currently, OEMs still divide the vehicle along traditional domains, such as an engine domain controller; for new energy OEMs, the “Three Electrics” (battery, motor, and electronic control) can be merged into a single domain controller. In addition, the chassis and L4-level steer-by-wire need a domain controller for processing, as do the electrical/electronic and intelligent connectivity domains. If the vehicle is instead divided into central domains (CZ), the new domains appear to be organized by layer: application layer, perception layer, network driver layer, hardware layer, vehicle layer, and so on. The traditional OEM division is not conducive to development.

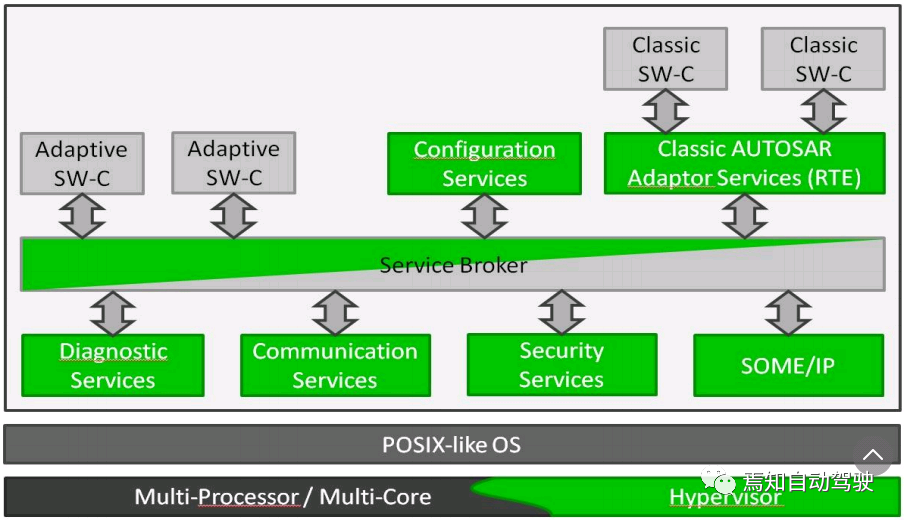

Today, the internal architecture of most domain controllers is based on AUTOSAR or OSEK, and fewer and fewer developers use OSEK. In a statically configured system, the software at runtime mostly calls functions one by one in an order pre-arranged in configuration files, which meets the strict runtime requirements of driving safety. The airbag system is an example: in an emergency, the function must be checked every millisecond to decide whether to deploy, so that the airbag is guaranteed to open. On multi-core processors, for functions without particularly strict runtime requirements, a dynamically configured system has advantages such as being service-oriented and supporting software upgrades. For such systems, AUTOSAR has also proposed the Adaptive-AUTOSAR solution, which brings these advantages of dynamic systems and provides interfaces to traditional AUTOSAR.
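The static, table-driven style described above can be illustrated with a cyclic executive: tasks and their periods are fixed ahead of time, and each tick simply calls whatever is due in table order. The task names and periods below are hypothetical, and this is a conceptual sketch, not the OSEK or Classic AUTOSAR scheduling API.

```python
# Hypothetical static schedule, fixed at build time: (task name, period in ms).
SCHEDULE_TABLE = [
    ("airbag_crash_check", 1),
    ("brake_monitor", 5),
    ("body_comfort", 10),
]


def run_static_schedule(duration_ms):
    """Cyclic executive: on every 1 ms tick, run the due tasks in table order.

    Returns a log of (tick, task name); a real ECU would call the task
    functions instead of logging them."""
    log = []
    for tick in range(duration_ms):
        for name, period in SCHEDULE_TABLE:
            if tick % period == 0:
                log.append((tick, name))
    return log


log = run_static_schedule(10)
print(log[:4])
```

Because the order and timing are fixed in the table, the worst-case behavior can be analyzed before deployment, which is what safety functions such as the 1 ms airbag check need; the cost is that adding or updating a function means regenerating the schedule.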

In this architecture, software packages from the vehicle manufacturer and various suppliers make up functional blocks such as diagnostic services, security measures, and communication services, which are integrated into the Adaptive-AUTOSAR framework. All software communicates through a Service-Broker, which also provides interfaces to traditional AUTOSAR software.
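The service-oriented pattern can be illustrated with a toy broker in which providers offer named services and consumers look them up by name rather than by which supplier or ECU implements them. Everything below (class, method, and service names) is hypothetical and is not the Adaptive AUTOSAR communication API.

```python
from typing import Callable, Dict


class ServiceBroker:
    """Toy broker: providers register named services, consumers look them up.
    Purely illustrative; not an Adaptive AUTOSAR interface."""

    def __init__(self) -> None:
        self._services: Dict[str, Callable[..., object]] = {}

    def offer(self, name: str, handler: Callable[..., object]) -> None:
        """Register (or replace) the provider of a named service."""
        self._services[name] = handler

    def find(self, name: str) -> Callable[..., object]:
        """Return the current provider of a service, or raise LookupError."""
        if name not in self._services:
            raise LookupError(f"service not offered: {name}")
        return self._services[name]


broker = ServiceBroker()
# Hypothetical services offered by different suppliers.
broker.offer("diagnostics.read_dtc", lambda ecu: [f"{ecu}:DTC_0001"])
broker.offer("comm.send", lambda topic, payload: f"sent {payload} on {topic}")

read_dtc = broker.find("diagnostics.read_dtc")
print(read_dtc("brake_ecu"))
```

Because consumers depend only on the service name, a provider can in principle be replaced, for example by a software update, without changing the consumers.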

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.