Author: Liu Hong

Introduction

Early autonomous vehicles looked bulky and “out of the norm,” bristling with protruding cameras, radar and lidar units. All three sensor types are needed to solve the autonomous driving problem, because they complement one another.

Today, the sensor market is filled with technologies that are competing against each other, and selecting the appropriate technology to integrate into vehicles is a difficult challenge. Product designers need to carefully consider the pros and cons of each sensor category.

In recent years, as driverless vehicles have drawn closer to reality, a “360°” 4D imaging radar technology has emerged. Although industry opinion on it is mixed, the technology has unique strengths, and manufacturers both in China and abroad are developing it and pre-installing it on a small scale.

Opportunities for Overtaking on Curves

Radar is critical to the automotive industry, and as a long-range sensor it is fitted to almost all new cars. The accelerating pace of ADAS innovation is a boon for the radar industry, but to reach higher levels of safety and automation, and to enter the era of hands-free, feet-free autonomous driving, radar must innovate.

4D imaging radar is also a form of millimeter-wave radar. For ADAS applications, traditional millimeter-wave radar has been fitted to mass-produced vehicles for more than twenty years. Product performance is mature and stable, and the market is dominated by a few giants, so it is not easy for new companies to break in.

Around 2015, some start-ups began to challenge traditional millimeter-wave radar: by increasing the resolution to achieve a level close to that of low-beam lidar, they replaced traditional simple target detection with environmental mapping (point cloud mode) to cope with increasingly complex driving tasks.

Some observers in China predict that 4D imaging radar will be pre-installed on a small scale from 2022, and that installations will exceed one million units by 2023.

Resolution and Detection Capabilities that are Difficult to Adapt to All Environments

As vehicles become more electrified and electronic, sensors, screens and ADAS functions are also increasing. While safety technology has improved, it has also increased the complexity, cost and weight of cars.

Each type of sensor has its advantages and disadvantages. Cameras are good at high-resolution detection of objects, but are susceptible to adverse weather and lighting conditions; radar works well in adverse weather, but has lower resolution; lidar can accurately detect object details, but performs poorly in adverse weather.

-

Cameras and other optical solutions: can effectively detect targets, measure distances, provide precise imaging, and track multiple targets. For example, RGB cameras are relatively inexpensive, and their high-resolution data can be used to train computer vision-based classification and recognition algorithms, but their field of view is limited and they are powerless in adverse weather, lighting and shadow areas. Cameras also face privacy concerns that are of great concern to both businesses and consumers.- Passive Infrared (PIR) Sensor: Detects motion by memorizing the infrared image of the surrounding area and detecting slight changes. Its common deployment is in vehicles, as it is inexpensive, compact, and highly efficient, providing accurate detection, but with limited range. Its performance varies with distance and it is difficult to detect very slow movement of the human body.

-

Thermal Infrared Sensor: Detects heat, regardless of wavelength. Low-end solutions are obstructed due to slow detection and response time, while high-performance sensors are often expensive. Thermal sources and airflows can also cause significant interference, and sensitivity is severely affected when the ambient temperature approaches body temperature.

-

Active Infrared (IR) Sensor: Typically installed outdoors, it uses emitted infrared waves to determine whether the received signal is a person or an object. Like PIR sensors, it is inexpensive and requires low power. They work effectively in all lighting conditions, but have limited range and are susceptible to adverse environmental effects. In addition, existing solutions are also hindered by low data transmission rates.

-

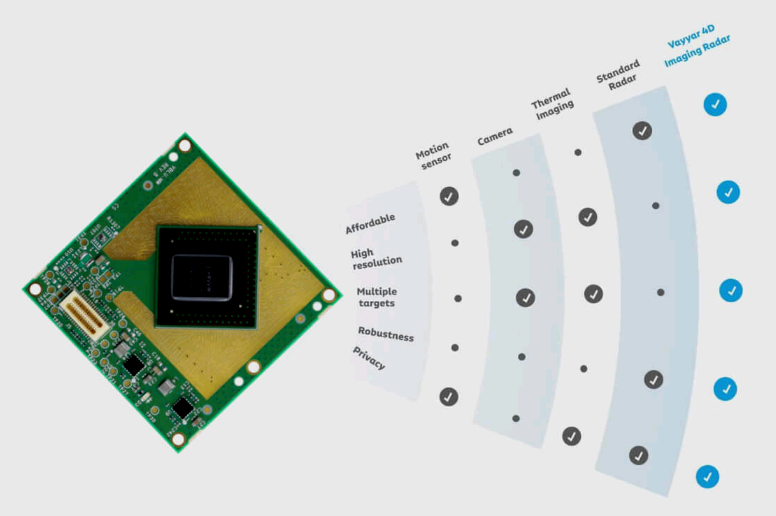

Standard Radar: Also known as radar velocity measurement, it can detect presence, direction, distance, and speed, representing a powerful and scalable solution that can protect privacy. However, the data output resolution of the small antenna array is low, and it cannot generate rich images. Its narrow field of view is mainly concentrated on one axis, and due to its limited angular resolution, it cannot distinguish close-range targets.

In terms of data processing, designers also need to consider economic efficiency. Edge processing can greatly reduce the processing and storage costs associated with the cloud. As more and more devices are deployed, edge processing can save significant costs in the long term. Using embedded processors to perform edge processing helps eliminate the need for high-power, high-cost processors in product design.

Vayyar: Smarter Sensors, Richer Data

Vayyar Co-founder and CEO Raviv Melamed believes that “data collection relies on sensors – the ‘eyes and ears’ of any smart device, but processing information and directing actions requires a ‘brain’ – artificial intelligence. Creating products that meet ever-changing human needs means creating machine learning algorithms that intelligently utilize data based on business logic. This means selecting not only the most effective sensor technology, but also the most powerful data processing platform.”

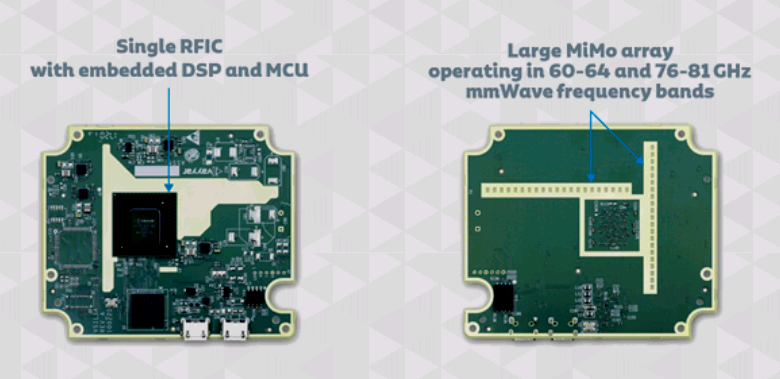

Founded in 2011, Vayyar aims to transform vehicle safety and cost with its “omnidirectional” 4D sensors. Using its Radar-on-Chip (RoC) platform, Vayyar has created a breakthrough vehicle safety solution that can replace more than a dozen other sensors and does not require expensive lidars or cameras.

The 4D imaging radar can provide all the benefits of alternative technologies while overcoming their limitations. Unlike 2D radar, it uses a multiple-input multiple-output (MIMO) array of 46 antennas to achieve high-resolution real-time tracking. Scanning the environment with so many antennas allows multiple targets to be detected and tracked precisely at the same time, accurately identifying the presence of objects and providing rich data. Advanced classification algorithms enable the device to react in a timely manner.

According to reports, the 4D imaging radar chip is not only reasonably priced in volume but also adds significant value: richer data, higher accuracy, and more powerful functions. In terms of cost-effectiveness, it helps reduce development costs and shorten time to market. Because it involves no optical devices, the technology is robust and consistent under all lighting and weather conditions, and it preserves user privacy.

The high-performance RF IC in the radar supports up to 72 transceivers across ultra-wideband (UWB) and millimeter-wave frequencies ranging from 3-81GHz, and is equipped with an internal digital signal processor (DSP) and real-time signal processing microcontroller unit (MCU). RoC supports a variety of systems, including intrusion alarms, child presence detection, enhanced seat belt reminders, and eCall that alerts emergency services in the event of a collision. It can “see through” objects and work effectively under all weather conditions.

Ian Podkamien, head of Vayyar’s automotive division, has been leading the RoC development team in adjusting and developing its automotive technology over the past five years. He believes that sensors have wiring and connectors and consume power, all of which add to the weight of the vehicle and present a major challenge to the range of electric vehicles. Sensor integration can provide multiple functions, saving wiring, weight, and cost.

Vayyar’s credit card-sized sensors use 4D high-resolution point clouds to map the entire cabin into 5cm pixels, capturing the shapes, postures, and movements of each passenger. A real-time 4D point cloud solution can work under any environmental conditions, tracking the cabin inside and out without the need for a camera.
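
As a rough illustration of what mapping a cabin into 5 cm cells could look like, the sketch below bins 3D points into a 5 cm grid. The function names, the coordinate convention and the sample points are assumptions made for illustration; the article does not describe Vayyar's actual processing.

```python
import math

CELL_M = 0.05  # 5 cm cell size, the figure quoted in the article


def cell_index(point, cell=CELL_M):
    """Return the integer (i, j, k) grid cell containing an (x, y, z) point in meters."""
    return tuple(math.floor(c / cell) for c in point)


def occupied_cells(points, cell=CELL_M):
    """Collapse a point cloud into the set of occupied grid cells."""
    return {cell_index(p, cell) for p in points}


# Hypothetical points: the first two share a cell, the third lands in a neighboring cell.
cloud = [(0.12, 0.31, 0.52), (0.13, 0.32, 0.53), (0.18, 0.31, 0.52)]
cells = occupied_cells(cloud)
```

Tracking how the set of occupied cells changes from frame to frame is one simple way such a grid could be used to follow posture and movement.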

To spare customers from developing the chip’s underlying radio-frequency management, algorithms, filtering and so on, Vayyar provides complete reference designs, including antenna and array designs, so that customers do not need to approach multiple suppliers. Vayyar’s data set platform can also respond quickly to any new legislation, deliver OTA software updates, and save integration, testing, and verification time and cost.

Vayyar’s validated sensor chips and all components meet automotive-grade standards, so incorporating them into new models means there will be no delay in going to market due to unforeseen problems. In addition, it helps minimize overall production costs, which is also an important factor for OEMs to consider.

Arbe: Ultra-High Resolution Evaluation of Distance, Height, Depth and Velocity

Arbe Robotics, another Israeli company, was established in 2015. It uses breakthrough technology to build a new type of 4D imaging radar from scratch, specifically for automotive applications. The radar combines the advantages of current sensor suites while eliminating some of their disadvantages, giving it outstanding resolution and target detection capabilities in all environmental conditions. CEO Kobi Marenko said: “Providing OEMs and Tier 1 suppliers with a sensing solution 100x more accurate than any other radar on the market, one that meets L1-L5 vehicle requirements: this is where Arbe’s 4D imaging radar has a profound impact on autonomous driving cars.”

The 4D imaging radar based on Arbe’s proprietary chip solution is the first radar to offer high sensitivity, high resolution, and full-space sensing (including elevation). It provides highly accurate image quality, free of Doppler ambiguity problems, in all weather and lighting conditions, supporting decisions at long range and across a wide field of view.

Regarding target detection, it can accurately determine the boundaries of obstacles in the lane, such as trucks under bridges, fences or tires in the lane, motorcycles next to trucks, and then prompt the autonomous driving, emergency braking, and steering systems. It also provides reliable detection of vulnerable road users, such as pedestrians and bicycles, solving road accident problems related to ADAS and autonomous driving.

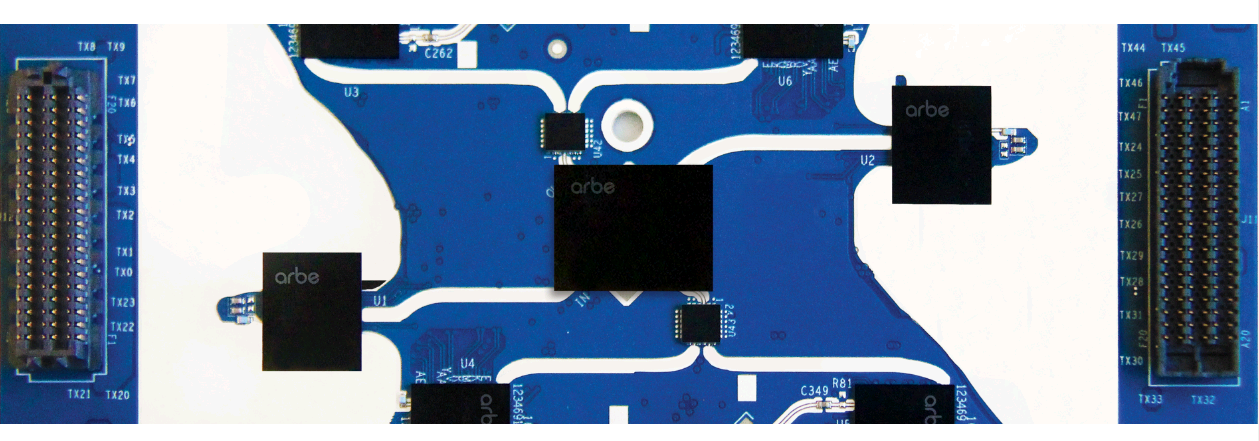

Arbe’s technology has a resolution two orders of magnitude higher than current products and supports more than 100,000 detections per frame, giving it the highest point cloud density on the market. Its 4D imaging radar chip has more than 2,000 virtual channels and tracks hundreds of objects at a full scan rate of 30 frames per second while optimizing cost and power consumption. By evaluating distance, height, depth and speed at high resolution, it repositions radar from a supporting role to the backbone of the sensing suite.

Unlike Vayyar, Arbe uses enhanced FMCW (Frequency-Modulated Continuous Wave) technology with a chipset that can transmit and receive signals from multiple antennas. By converting information from the time domain to the frequency domain (via FFT, the Fast Fourier Transform), Arbe can provide 4D images with ultra-high element density and high azimuth and elevation resolution, along with real-time long-range environmental sensing across a large field of view. In addition, Arbe’s technology reduces sidelobes to a level close to zero, solves the range-Doppler (RD) ambiguity problem, and avoids interference from other radars.
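
To make the time-to-frequency step concrete, here is a minimal, idealized sketch of how an FMCW radar can recover range from the beat frequency of a single static target. It is a textbook illustration, not Arbe's processing chain: the bandwidth, chirp duration, sample count and target range are invented values, the signal is noise-free, and a plain DFT stands in for the FFT (the FFT computes the same result faster).

```python
import cmath
import math

C = 299_792_458.0  # speed of light in m/s


def beat_signal(target_range_m, bandwidth_hz, chirp_s, n_samples):
    """Idealized complex beat signal of one static target after mixing."""
    slope = bandwidth_hz / chirp_s                # chirp slope in Hz/s
    f_beat = 2.0 * target_range_m * slope / C     # beat frequency in Hz
    fs = n_samples / chirp_s                      # sample rate covering one chirp
    return [cmath.exp(2j * math.pi * f_beat * n / fs) for n in range(n_samples)]


def dft_magnitudes(x):
    """Magnitude spectrum via a direct DFT (same output as an FFT, just slower)."""
    n = len(x)
    return [
        abs(sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(n)
    ]


def estimate_range(signal, bandwidth_hz):
    """Pick the strongest frequency bin and convert it to range."""
    mags = dft_magnitudes(signal)
    peak_bin = max(range(len(mags)), key=mags.__getitem__)
    return peak_bin * C / (2.0 * bandwidth_hz)    # each bin spans c / (2B) meters


# Assumed example parameters (not taken from any real product).
BANDWIDTH_HZ = 1e9
CHIRP_S = 50e-6
N_SAMPLES = 64

signal = beat_signal(3.0, BANDWIDTH_HZ, CHIRP_S, N_SAMPLES)
estimated_m = estimate_range(signal, BANDWIDTH_HZ)
```

With these assumed values each frequency bin corresponds to about 0.15 m of range, so the estimate lands within one bin of the true 3 m. A second transform across successive chirps would yield velocity in the same way.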

Arbe’s proprietary automotive-grade millimeter-wave radar RF chipset has another advantage: superior process technology. It includes a transmitter chip with 24 output channels and a receiver chip with 12 input channels. Built on the new 22nm FD-SOI (Fully-Depleted Silicon-on-Insulator) CMOS process, the chipset supports TD-MIMO and has the best channel isolation, noise factor, and transmit power among its peers. Using the latest RF process technology, Arbe achieves the most advanced RF performance at the lowest cost per channel in the market.

Arbe has also made breakthroughs in radar processing technology. Its proprietary baseband processing chip combines radar processing unit (RPU) architecture with embedded radar signal processing algorithms to convert large amounts of raw data in real-time, while maintaining low silicon power consumption. The processing chip can manage up to 48 Rx channels and 48 Tx channels in real-time, generate 30 frames of complete 4D images per second, and achieve an equivalent processing throughput of 3 Tb/s. Arbe’s physical resolution using proprietary technology is 2 to 10 times higher than competitors’ synthetic or statistical resolution enhancement methods (such as super-resolution technology). Therefore, Arbe’s chipset is still effective in low signal-to-noise ratio (SNR) and multi-target scenarios, which often cause other methods to fail. While using super-resolution to precisely analyze object boundaries, Arbe’s technology does not rely on this technique to generate high-quality images.
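
The channel counts can be related by simple arithmetic. In a MIMO radar, each transmit/receive pair behaves like one virtual channel, so 48 Tx and 48 Rx channels give 48 × 48 = 2,304 virtual channels, which is consistent with the “more than 2,000 virtual channels” figure quoted earlier. The sketch below shows this count and a toy linear layout; the layout and function names are assumptions, since the article does not describe Arbe's antenna geometry.

```python
def virtual_channel_count(n_tx, n_rx):
    """Each Tx/Rx pair contributes one virtual channel."""
    return n_tx * n_rx


def virtual_positions(tx_positions, rx_positions):
    """Virtual element positions are the pairwise sums of Tx and Rx positions.

    Positions are in units of half a wavelength along one axis.
    """
    return sorted(t + r for t in tx_positions for r in rx_positions)


# Channel counts quoted for the processing chip.
full_chipset = virtual_channel_count(48, 48)

# Toy layout (assumed): 2 Tx spaced 4 units apart and 4 Rx spaced 1 unit apart
# behave like a filled 8-element receive array.
toy_array = virtual_positions([0, 4], [0, 1, 2, 3])
```

The toy case shows why MIMO is attractive: six physical antennas produce the aperture of eight, and the gain grows multiplicatively as channels are added.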

Failure to distinguish threats from false alarms is the main cause of accidents involving autonomous vehicles. Arbe’s enhanced FMCW, superior channel separation, and advanced post-processing reduce false alarms, almost eliminate false object instances, and eliminate false positive and false negative scenarios.

In this way, the ADAS system can trust the radar readings, respond quickly, and avoid unnecessary stops. 4D imaging radar therefore provides the basis for navigation, path planning, and obstacle avoidance, and can support the safety and accuracy requirements of sensing for L4 and L5 autonomous vehicles.

Suzhou Haomibo: 4D Millimeter Wave Radar with Camera Fusion

Suzhou Haomibo was founded in 2016 by Professor Bai Jie, a “national high-level talent,” with a core team of RF technology experts who had returned from overseas. Its goal is to break foreign monopolies, fill domestic gaps, and actively develop intelligent sensing technology and artificial intelligence systems with a high degree of independent intellectual property.

As the name suggests (“haomibo” means millimeter wave in Chinese), the company specializes in millimeter-wave technology. It has mastered a complete set of ADAS integrated control algorithms and core automotive millimeter-wave radar technologies, and it customizes designs and co-develops products according to OEM requirements. Its products, including vehicle-mounted BSD (blind spot detection) and FCW (forward collision warning) systems, are in mass production with OEMs such as Jiangling and JAC. The BSD system has a maximum detection distance of 100 meters and an angular range of 150 degrees; the FCW system has a maximum detection distance of 200 meters.

Professor Bai believes that multi-sensor fusion is a necessary condition for building a stable perception system, especially the fusion of radar and cameras. He said, “People often encounter some complex weather conditions, such as heavy rain, fog, sandstorms, strong light, and night scenes. These are very harsh scenarios for images and LiDAR that are difficult to handle with a single sensor. Some intelligent driving systems that are considered to be relatively mature have also had numerous crashes caused by the failure of their sensor systems, which has led to tragic consequences.”

He explained that, in terms of where the data is processed, sensor fusion architectures fall mainly into centralized, distributed and hybrid structures. Hybrid structures are currently the most common: each distributed sensor processes its own data to produce a target list, and the lists are then fused. This works because a millimeter-wave radar outputs a target point cloud that is already a processed result, and the same is true of LiDAR.
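
The idea of fusing per-sensor target lists can be sketched as follows. This is a deliberately simple greedy nearest-neighbor association, not Haomibo's algorithm: the `Target` fields, the 2 m gate and the sample targets are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Target:
    x: float                       # longitudinal position in meters
    y: float                       # lateral position in meters
    speed: Optional[float] = None  # radial speed, typically supplied by radar
    label: Optional[str] = None    # object class, typically supplied by the camera


def fuse(radar, camera, gate_m=2.0):
    """Pair each radar target with the nearest unused camera target within gate_m."""
    fused, used = [], set()
    for r in radar:
        best, best_d = None, gate_m
        for i, c in enumerate(camera):
            d = math.hypot(r.x - c.x, r.y - c.y)
            if i not in used and d <= best_d:
                best, best_d = i, d
        if best is None:
            fused.append(r)  # radar-only target, kept as is
        else:
            used.add(best)
            # Keep radar position and speed, take the class label from the camera.
            fused.append(Target(r.x, r.y, r.speed, camera[best].label))
    # Camera-only targets are kept too.
    fused.extend(c for i, c in enumerate(camera) if i not in used)
    return fused


# Hypothetical target lists from the two sensors.
radar_list = [Target(10.0, 0.5, speed=-3.0), Target(40.0, -2.0, speed=0.0)]
camera_list = [Target(10.4, 0.3, label="pedestrian"), Target(25.0, 3.0, label="car")]
fused_list = fuse(radar_list, camera_list)
```

A production system would normally add tracking over time and a globally optimal assignment, but the division of labor is the same: each sensor contributes the attributes it measures best.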

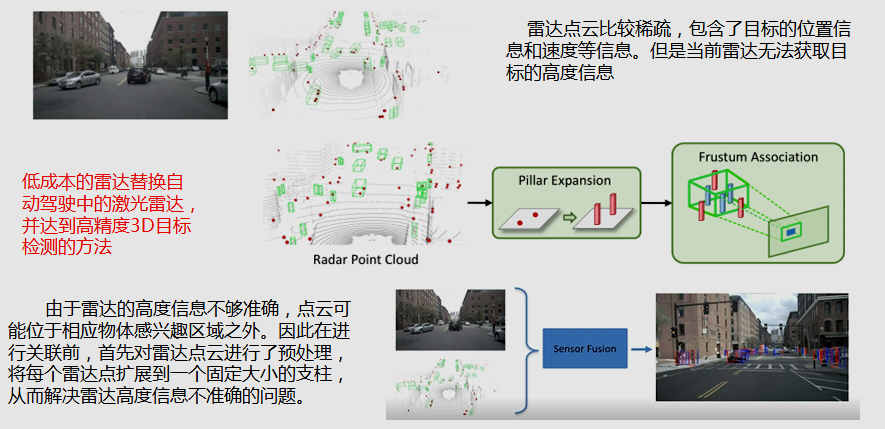

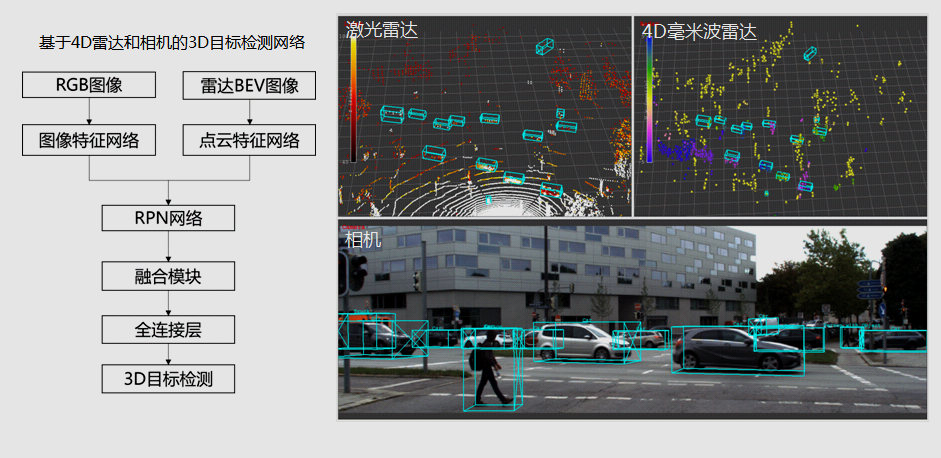

With the development of deep learning research, some cutting-edge fusion tracking solutions have emerged in recent years: ordinary radar point cloud + camera, radar RF image + camera, and 4D radar point cloud + camera. Currently, most sensor fusion methods use LiDAR and cameras to achieve high-precision 3D object detection. However, this method has its limitations. Cameras and LiDAR are sensitive to adverse weather conditions, and detection of distant targets is not accurate enough. In addition, LiDAR is costly and difficult to popularize. Because radar has good robustness against adverse weather conditions, very long detection distance, accurate measurement of target speed, and low cost, it is increasingly being valued in autonomous driving.

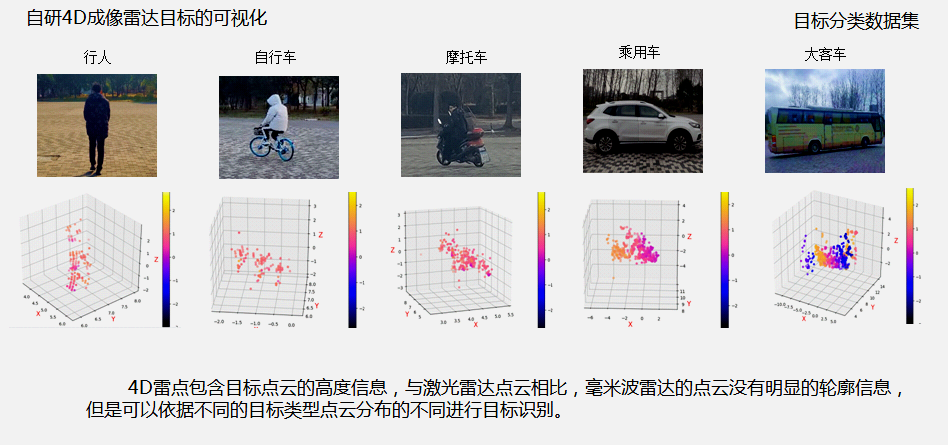

Based on TI’s AWR2243 chip, research has been conducted on using 4D radar to detect and classify urban road traffic participants. Visualizations of the target detection and classification dataset collected at Tongji University’s test field indicate that 4D radar can output target point clouds with height information, reflecting the outline and shape of the target. Because its imaging principle differs from that of LiDAR point clouds, it is still difficult to determine a target’s detailed shape characteristics accurately from the millimeter-wave radar point cloud alone. However, the scattering characteristics of the point cloud show a certain regularity.

Machine learning classification algorithms that use several point cloud features, such as Doppler speed, point cloud intensity distribution, and distance-related features, have achieved high performance on the classification test data set, exceeding 95% for pedestrians and reaching 99% for large vehicles. An advantage of this machine learning approach is its small number of parameters, which makes it suitable for embedded classification.
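
As a hedged illustration of the kind of per-target features mentioned here, the sketch below summarizes one cluster of radar points into a small feature dictionary. The point layout `(x, y, z, doppler, intensity)`, the feature names and the sample values are assumptions; the study's actual feature set and classifier are not described in the article.

```python
import math
from statistics import mean, pstdev


def cluster_features(points):
    """Summarize one target cluster.

    points: list of (x, y, z, doppler_mps, intensity) tuples.
    """
    xs, ys, zs, doppler, intensity = zip(*points)
    return {
        "range_m": math.hypot(mean(xs), mean(ys)),  # distance to cluster centroid
        "mean_doppler": mean(doppler),              # bulk radial speed
        "doppler_spread": pstdev(doppler),          # e.g. limb motion of a pedestrian
        "mean_intensity": mean(intensity),
        "intensity_spread": pstdev(intensity),
        "height_extent": max(zs) - min(zs),         # available because 4D radar has elevation
        "n_points": len(points),
    }


# Two made-up points belonging to one target.
sample = [(3.0, 4.0, 0.0, 1.0, 10.0), (3.0, 4.0, 2.0, 3.0, 20.0)]
features = cluster_features(sample)
```

A feature vector this small can be fed to a compact classifier, which is what makes the approach attractive for embedded processors.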

Although 4D radar point clouds are generally sparser than LiDAR point clouds, they reach farther and carry the velocity information of targets, so 4D millimeter-wave radar can assist in 3D target detection. In the same scene, the point cloud distribution of 4D millimeter-wave radar differs markedly from that of a 16-line LiDAR: the radar returns a richer point cloud for each target and detects at longer range.

Imagining the Future

All of the above-mentioned companies believe that rich point clouds will enable 4D radar to play a more important role in L4 and above systems, which means that it will greatly improve the status of millimeter-wave radar perception systems. Since the processors integrated in the radar have embedded software, the automakers can focus more on image processing and machine learning, rather than spending energy on low-level radar algorithm development.

The future belongs to 4D millimeter-wave radar!

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.