Tesla Autopilot: Not So NB?

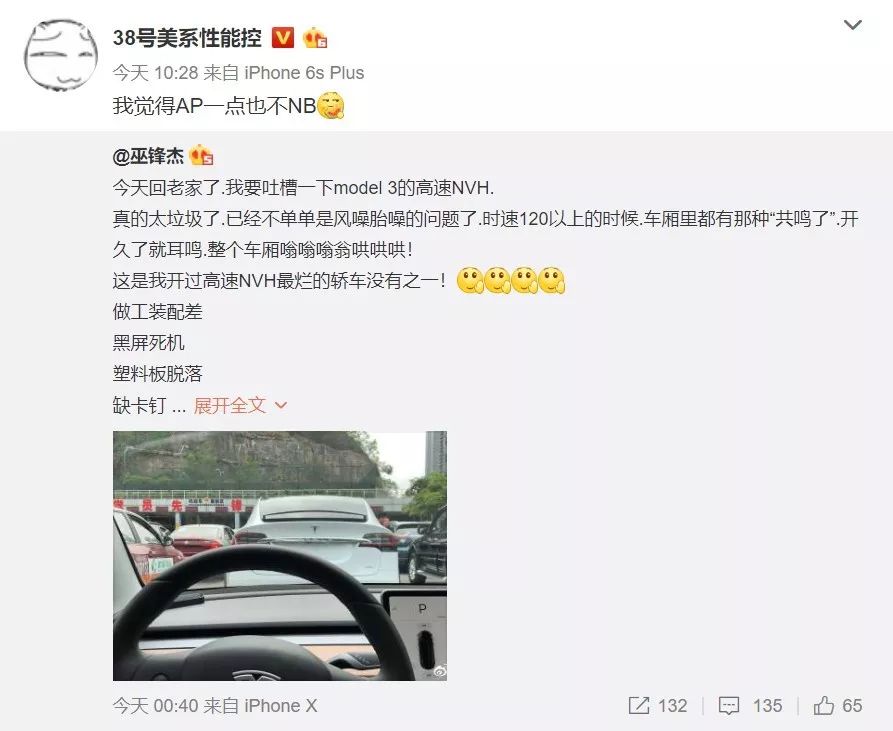

Today’s topic is Tesla Autopilot. At 10:28 this morning, the well-known car reviewer 38号美系性能控 (hereafter 38号) forwarded a Weibo post with the comment: “I don’t think Autopilot is NB at all.” (NB is Chinese internet slang for “awesome”.) In a follow-up post, 38号 explained the reasoning: from an owner’s point of view, Autopilot is hard to use, dangerous, and delivers a poor experience, so it does not count as NB.

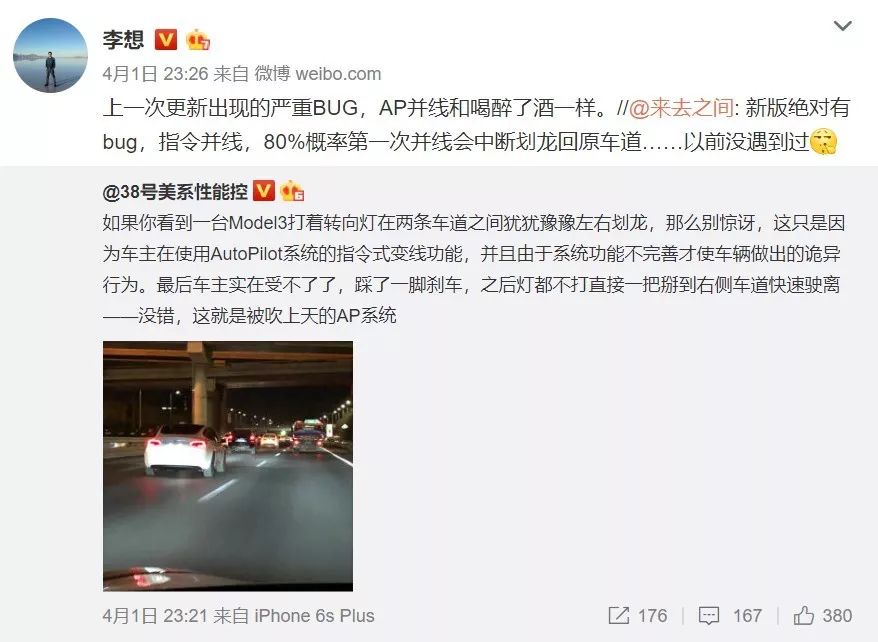

In fact, as early as April 1st, 38号 complained on Weibo about a baffling bug in Autopilot’s lane changing, and two other Sina Weibo users agreed: Tesla Model S/3 owner @来去之间 and Tesla Model X/3 owner @李想. This shows that it is not an isolated incident but a systemic bug. Both, however, agreed that it was a bug in the new version. In our own driving, 42HOW’s Model 3 shows the same problem.

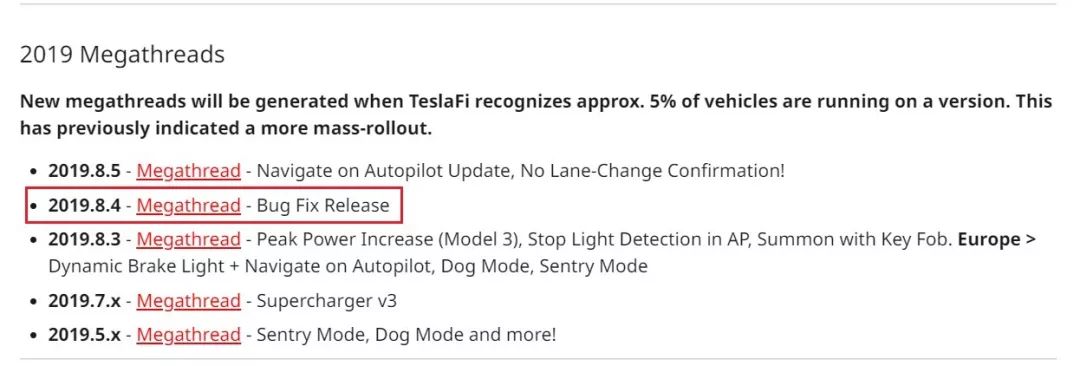

It can be confirmed that this is a bug in version 2019.5.15. The latest version available in China, 2019.8.4 (a release devoted to bug fixes), has already fixed the lane-changing bug.

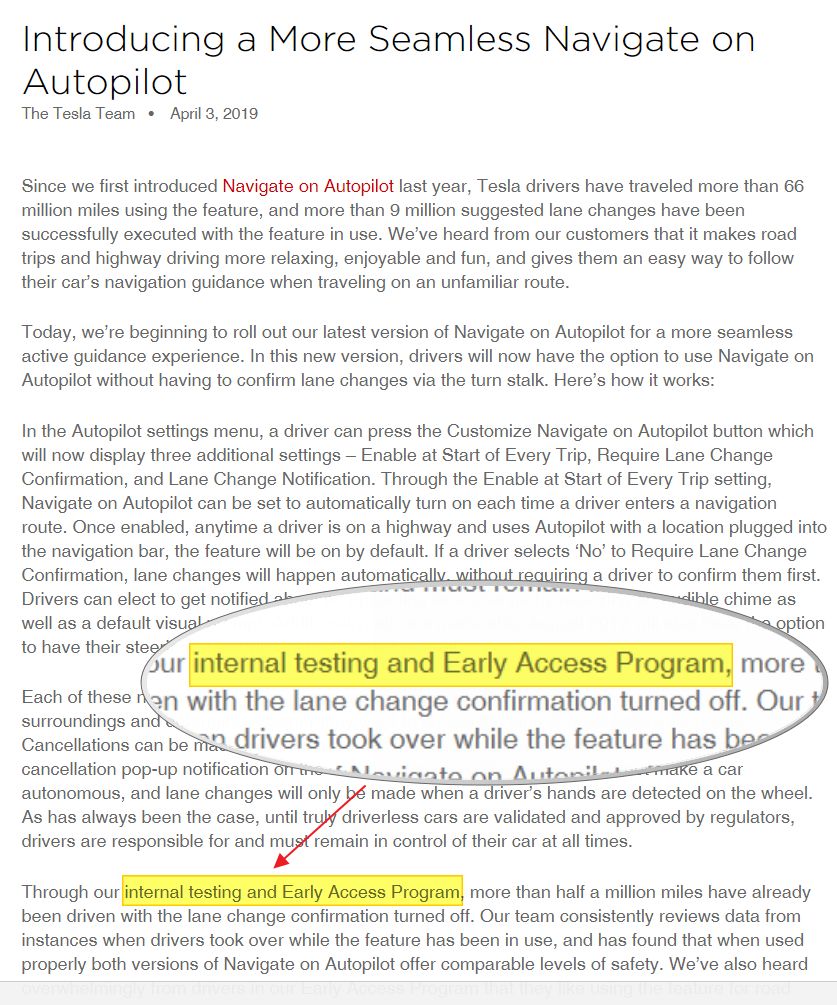

Of course, just because the bug is fixed does not mean that “nothing happened”. In fact, this bug has exposed a serious problem with Tesla’s Autopilot team. As we all know, Tesla launched an Early Access Program in North America, mainly composed of Tesla employee owners and core fan owners.

After the AP team finishes coding a feature, the new software is pushed to Early Access Program members for testing to ensure the feature’s reliability. During this early acceptance period, all bugs exposed in real-world use are collected and fixed. Only then comes the official global push.

However, the baffling bug that appeared after the mass push of version 2019.5.15 means that the Early Access Program was futile: it failed to do what early testing is for, namely finding and eliminating bugs before release to improve software quality and user experience.

In any case, being a guinea pig for verifying new functions on behalf of car companies is unacceptable for users. What can we do? For those users who cannot accept serious bugs left by a certain update, you can choose “not to use AP, not to install AP, not to buy Tesla” to express resistance and protest against Tesla’s fatal mistakes.

As I have said repeatedly on Weibo, from a user’s perspective I fully agree with 38号’s conclusion that “AP is not easy to use and can cause dangerous situations”. But I also believe that the conclusion “AP is not at all NB” cannot be drawn from the poor user experience of a single version.

I have been thinking about how to view Tesla Autopilot for a long time. A few days ago a netizen asked in a comment: what is the difference between the L2 system in a 100,000-yuan car and the one in a 1,000,000-yuan car? Let’s talk about this below.

In my opinion, three systems represent advanced driver assistance across the industry: Bosch’s L2 solution, NIO’s NIO Pilot, and Tesla Autopilot.

Firstly, Bosch’s L2 autonomous driving solution has been validated over a long period and appears here as the representative of supplier solutions. It has many OEM customers: the main models of Chinese brands such as Geely, Great Wall, Roewe, Changan, and Baojun all feature Bosch L2.

Bosch’s strength lies in the fact that it independently defines the software and hardware configuration of the entire system, as well as the solution itself. Even when Tier 2 suppliers provide software or parts, Bosch, as a top Tier 1, keeps absolute control over the system. Bosch has invested heavily in thorough requirements analysis, function development, and validation testing to ensure that the system is competitive and meets the automotive industry’s safety and reliability standards.

So what’s the problem with Bosch L2? Since all R&D work is led by Bosch from the bottom up, automakers have no insight into how the system works. For automakers, the system is close to a black box. If Bosch’s testing misses a bug (100% test coverage does not exist; if it did, autonomous driving would have been achieved long ago), the automaker is helpless once that bug shows up in a user’s car.

The only thing automakers can do is to submit bugs to Bosch and wait for Bosch to provide technical support and maintenance. As for the development iteration based on user demands and feature additions, automakers also have to rely on Bosch’s development progress, making it difficult to ensure timeliness and achieve differentiation in competitiveness.

So can’t automakers do independent research and development?

In fact, Bosch L2’s high quality makes it popular among automakers, and the economies of scale from their large purchase volumes drive costs down, giving it an excellent quality-to-price ratio. For Chinese automakers, whose profit margins are generally thin, choosing between investing Bosch-level manpower and money in in-house development and simply purchasing the system is not a hard decision.

It should be noted that Bosch L2 is not a “one-size-fits-all” system. For cars with different positioning, Bosch provides systems that differ in configuration, function, and price. Moreover, even though Bosch leads the development, automakers still need to invest effort in adapting the system to the vehicle and completing the engineering work for road test validation.

Secondly, there is NIO’s NIO Pilot, which, like Bosch, appears here as the representative of a camp. This camp also includes companies like Chehejia and XPeng, whose founders all come from an internet background and have a clear understanding of Tesla’s Autopilot system. Beyond the controversy generated by Autopilot, they have all witnessed the system’s strong vitality compared to supplier solutions.

The problem is that behind Autopilot’s growth lies a large number of engineering challenges. First, the vehicle itself must support over-the-air updates. Second, the automaker must independently develop at least half of the entire system and master its technical details.

The development of autonomous driving systems spans multiple technical domains, such as perception, path planning, decision-making, control, simulation, cloud computing, advanced mapping, and hardware. The barrier to entry is high, and the work is an absolute black hole for funding and talent. This makes it understandable that NIO initially considered Bosch’s L2 solution.

However, NIO Pilot’s approach is still more ambitious than simply purchasing a supplier solution. Of the three major components of assisted driving (perception, planning, and execution), NIO relies on Mobileye’s EyeQ4 chip and visual perception algorithms for perception. Most of the remaining work is led by the NIO Pilot team.

As mentioned earlier, the development threshold for autonomous driving systems is very high. NIO spent a considerable amount of money to attract people from Silicon Valley to work on autonomous driving research and development. However, even today, NIO Pilot still has significant functional gaps relative to L2. According to official statements, NIO will push a large update in June or July this year to deliver basic L2-level functions. We will wait and see.

Finally, there is Tesla’s Autopilot, which stands apart. AP differs from the previous two camps in that it is a camp of one: globally, there is only AP.

In short, AP 2.0 embodies Tesla’s willingness to go up against the technical strength of top Tier 1 suppliers, and its ambition and courage to take back technological control and stand on their shoulders to achieve breakthroughs.

In October 2016, Tesla started mass-producing vehicles equipped with AP 2.0 hardware. Tesla built the Tesla Vision visual processing tool, a new underlying software architecture, and a cloud-based big data infrastructure, and redefined the roadmap for Autopilot capabilities.

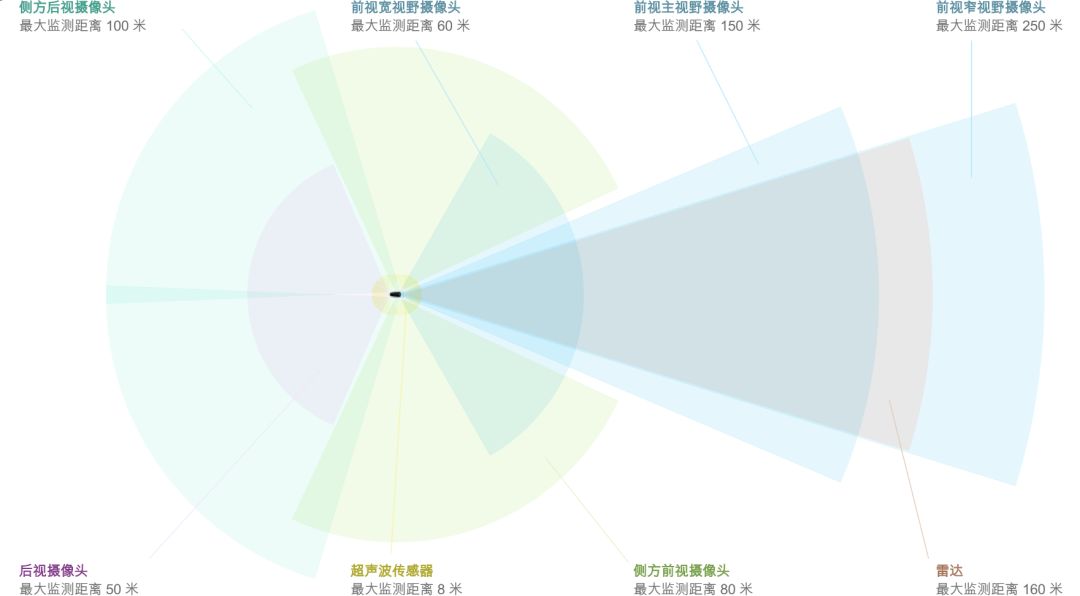

While software can be developed in-house, hardware sensors must be purchased. Even in hardware, however, Tesla differs significantly from other automakers. For cameras, millimeter-wave radars, and ultrasonic sensors, Tesla sets aside the algorithms the supplier provides and takes the raw data output from the sensors. For the computing platform, Tesla purchased Nvidia’s Drive PX2, but what runs on it is Tesla’s self-developed deep neural network.

As a result, all suppliers that sell a hardware + algorithm module have become pure hardware vendors at Tesla, while Tesla gains the technical feasibility of “completely self-developing functions.”

AP 2.0 implemented a Hardware Abstraction Layer (HAL) for the first time. In the era of supplier solutions, many sensors are dedicated to a single function. For example, the front camera can only be used by the AEB/ACC system, and the 4 surround cameras can only be used by the 360-degree surround view for reversing. Because Tesla developed AP 2.0 entirely in-house, any function can call any sensor, and multiple sensors can work together to achieve perception.
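The idea of a hardware abstraction layer, where sensors are shared resources rather than being tied to one function, can be sketched as follows. This is a minimal illustration only: `SensorHub`, the sensor names, and the two feature functions are hypothetical and do not reflect Tesla's actual software.

```python
from typing import Callable, Dict, List


class SensorHub:
    """Hypothetical HAL: sensors register once, any feature may read any of them."""

    def __init__(self) -> None:
        self._readers: Dict[str, Callable[[], dict]] = {}

    def register(self, name: str, reader: Callable[[], dict]) -> None:
        # A reader returns the sensor's raw output, not a supplier-processed result.
        self._readers[name] = reader

    def read(self, names: List[str]) -> Dict[str, dict]:
        # Features ask for sensors by name; no sensor belongs to a single feature.
        return {name: self._readers[name]() for name in names}


hub = SensorHub()
# Placeholder readers standing in for real drivers (assumed names and payloads).
hub.register("front_main_camera", lambda: {"kind": "camera", "frame": "raw-front"})
hub.register("left_side_camera", lambda: {"kind": "camera", "frame": "raw-left"})
hub.register("front_radar", lambda: {"kind": "radar", "targets": []})


def surround_view(h: SensorHub) -> Dict[str, dict]:
    # Stitching a 360-degree image would use the surround cameras.
    return h.read(["front_main_camera", "left_side_camera"])


def lane_change_assist(h: SensorHub) -> Dict[str, dict]:
    # The same side camera also feeds a driving function, together with radar.
    return h.read(["left_side_camera", "front_radar"])
```

Here `left_side_camera` serves both the surround view and lane change assistance, which is the sharing that a dedicated, per-function sensor design rules out.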

Take the 360-degree surround view as an example. AP 2.0’s 8 cameras can not only synthesize a 360-degree image through software algorithms, but also take a deep part in driving perception, enabling functions such as lane change assistance and side collision prevention. By contrast, the 4 standard surround cameras on NIO models are limited by detection distance and angle, can hardly contribute to driving perception, and end up as dedicated cameras for the 360-degree reversing view.

Sensors have become an independent public resource to meet the needs of system-level function development. This is not only different from Bosch L2, but also the most significant feature differentiating from NIO models.

I have been talking about the high threshold of developing a fully autonomous driving system. Now let me explain in detail where that threshold lies.

From a hardware perspective, Tesla has independently overcome the challenge of designing automotive-grade products. The AP team has to meet dozens of harsh requirements set by regulations, covering temperature, humidity, radiation, conduction, latency, and power-signal surges and reverse connection. The temperature requirement alone demands that all components operate stably from minus 40°C to 85°C.

At the software level, Tesla’s AP 2.0 has turned to a visual perception path with front triple cameras and 8 cameras covering around the vehicle, which has a significant difference in technical principles from the monocular vision in the AP 1.0 era.

AP 2.0 is the first in the industry to adopt a triple-camera visual perception solution: a telephoto camera with a detection distance of 250 meters handles long-distance perception in high-speed driving; the main camera, with a detection distance of 150 meters, handles most perception in mainstream scenarios; and a fisheye camera, with a detection distance of only 60 meters but a 120-degree field of view, detects traffic lights, road obstacles, and most short-distance targets.
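To make the division of labor among the three front cameras concrete, here is a small sketch that lists which cameras could reach a target at a given distance. It uses only the detection distances quoted above. The names `Camera`, `FRONT_CAMERAS`, and `cameras_in_range` are made up for illustration, and the check ignores field of view, so it is an upper bound rather than a real coverage model.

```python
from typing import List, NamedTuple


class Camera(NamedTuple):
    name: str
    max_range_m: float  # detection distance quoted in the article


# Detection distances from the article; the labels are illustrative.
FRONT_CAMERAS = [
    Camera("long_range", 250.0),
    Camera("main", 150.0),
    Camera("fisheye", 60.0),
]


def cameras_in_range(distance_m: float) -> List[str]:
    """Return the front cameras whose detection distance covers the target.

    Field of view is ignored, so a camera listed here may still miss a
    target that sits outside its viewing angle.
    """
    return [c.name for c in FRONT_CAMERAS if distance_m <= c.max_range_m]
```

Under these assumptions a target 40 meters ahead is within reach of all three cameras, one at 100 meters is left to the main and long-range cameras, and one at 200 meters is left to the long-range camera alone.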

Triple-camera vision goes as far as possible in simulating the rapid zooming of the human eye, covering both long and short ranges. But it also brings huge challenges to the software and engineering departments.

The three-camera system departs from the old route of matching monocular images against a database and turns to computing distance from disparity in real time, which demands more computing power from the vehicle. Given Tesla’s “light on radar, heavy on cameras” route, the cameras must compute and recognize over 90% of environmental information in real time, including motor vehicles, non-motor vehicles, motorcycles/bicycles, pedestrians, animals, road signs, the road itself, traffic lights, lane lines, etc. The algorithmic complexity and computing power required to recognize and fuse three image streams have doubled.
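The article does not say how Tesla computes distance from disparity. As a rough illustration of the principle, the sketch below uses the textbook relation for two parallel views, Z = f · B / d, where f is the focal length in pixels, B the baseline between the two views, and d the disparity in pixels. The function name and all numbers are assumptions chosen for the example, not Tesla parameters.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Textbook two-view depth estimate: Z = f * B / d.

    focal_px     focal length in pixels (assumed value in the example below)
    baseline_m   distance between the two viewpoints in meters (assumed)
    disparity_px horizontal shift of the same point between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


# Illustrative numbers only: f = 1000 px, B = 0.25 m.
near = depth_from_disparity(1000.0, 0.25, 25.0)  # large disparity, close target
far = depth_from_disparity(1000.0, 0.25, 2.5)    # small disparity, distant target
far_off_by_half_px = depth_from_disparity(1000.0, 0.25, 2.0)
```

With these made-up numbers the near target comes out at 10 meters and the far target at 100 meters, and a half-pixel disparity error on the far target moves the estimate to 125 meters. Distant targets are therefore very sensitive to small measurement or alignment errors.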

The engineering challenges of three-camera vision are even greater than the software challenges. No mass-produced vehicle in the industry had used a three-camera system before. It requires fitting three cameras into the cramped space at the windshield while accounting for ranging errors caused by thermal expansion and contraction, which poses unprecedented challenges in manufacturing process, cost, reliability, and accuracy.

In addition, AP 2.0 was designed from the start with a pluggable central computing chip, so that it can be swapped for Tesla’s self-developed AI chip when the existing computing power is no longer enough.

It can be said that all L2 level ADAS systems in the industry define their products based on L2, and only AP 2.0 considers L2 as a foundation. From software to hardware, from the bottom to the top, AP 2.0 fully considers the upward iteration of technologies such as L3 and higher levels and the feasibility of subsequent commercial deployment.

You may say that AP is no different from Bosch L2. But don’t forget that when AP 2.0 entered mass production in October 2016, none of its eight cameras was enabled, and the system was unusable. As the saying goes, never look down on a young man just because he is poor.

Finally, let’s conclude with Li Xiang’s evaluation of the core function of AP 2.0, Navigate on Autopilot.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.