
Recently, I have been researching and thinking in depth about one interesting topic each day. The automotive industry draws on a great deal of fundamental science, particularly physics, and optics is one of its most important branches.

From the perspective of the optical system, the core technologies are concentrated in four areas: the transmitter, optical components and micro-modules, the detector, and integrated circuits and algorithms.

Note: My background is in instrumentation and Yanyan’s is in optical information. Both fields cover some optics, although neither of us has studied it in depth.

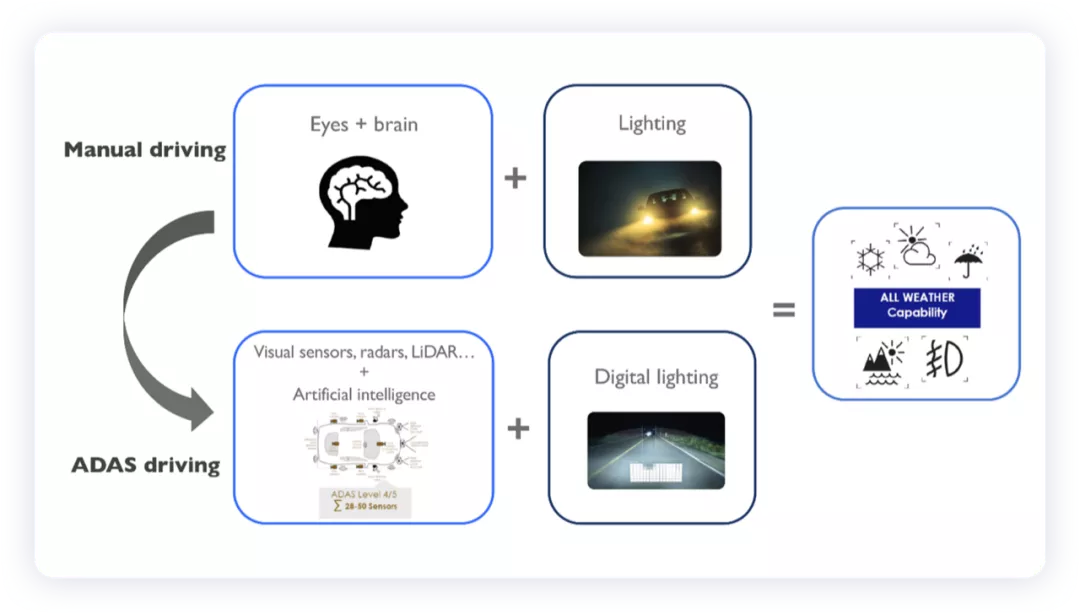

At a high level, automotive optics can be divided into two technical directions: technology centered on the human driver, and technology centered on driver-assistance and automated driving systems (with the eventual aim of handing the driving over to machines).

Technology Centered on the Human Driver

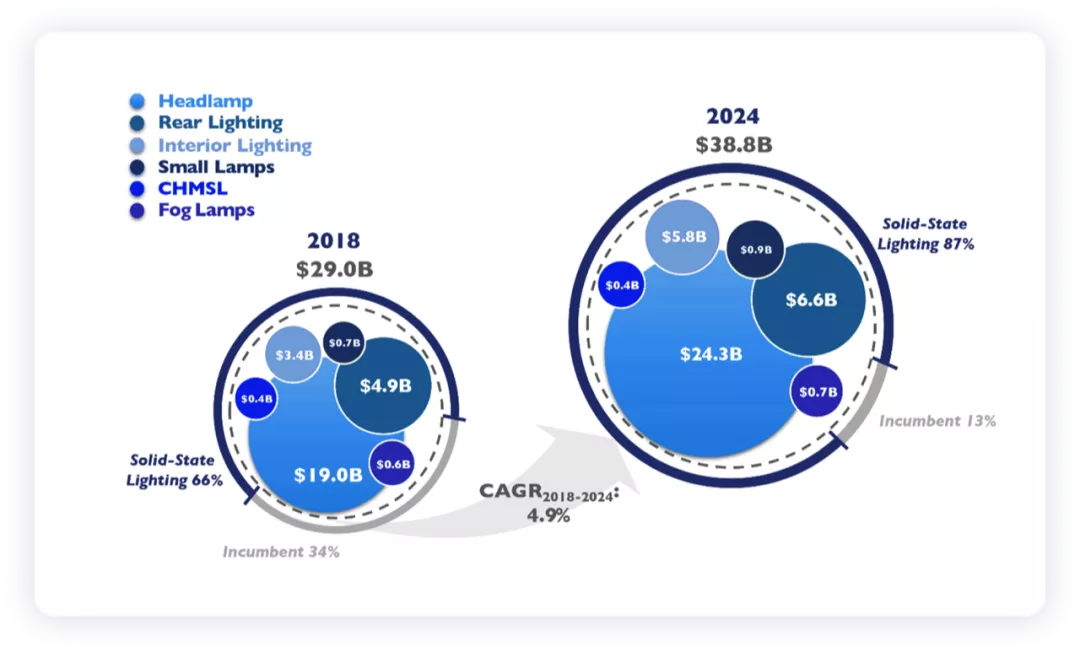

Automotive lighting systems that provide driving visibility and vehicle interaction services:

This lineage can be traced back to around 1910, when early electric headlights appeared. Various lighting applications based on the principle of reflection, such as brake lights, taillights, and auxiliary instrument lights, have been carried over to the present day. Logically, as long as people are driving there will be a need for lighting, and that lighting is becoming increasingly intelligent.

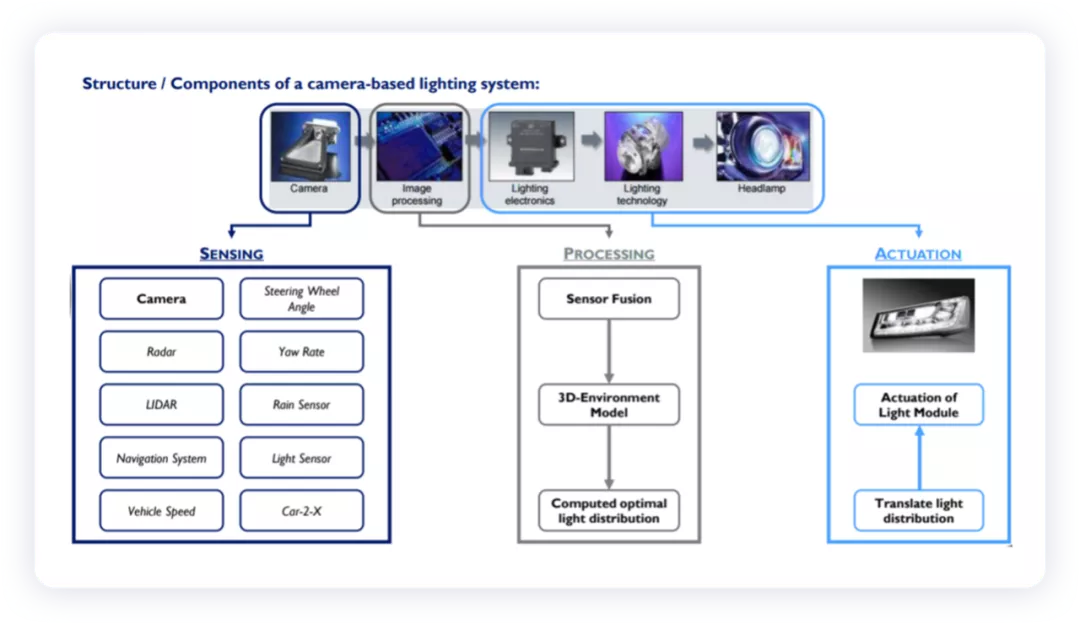

From the perspective of lighting systems, the focus is on making the lighting more intelligent: camera technology and individual lighting controls, previously separate, are combined into a single intelligent lighting system, as shown in the following figure.

The watershed came when ADAS began to evolve toward higher levels of driving automation. Before that, the question was how people could see clearly in adverse weather; afterwards, the question became whether cameras could see clearly.

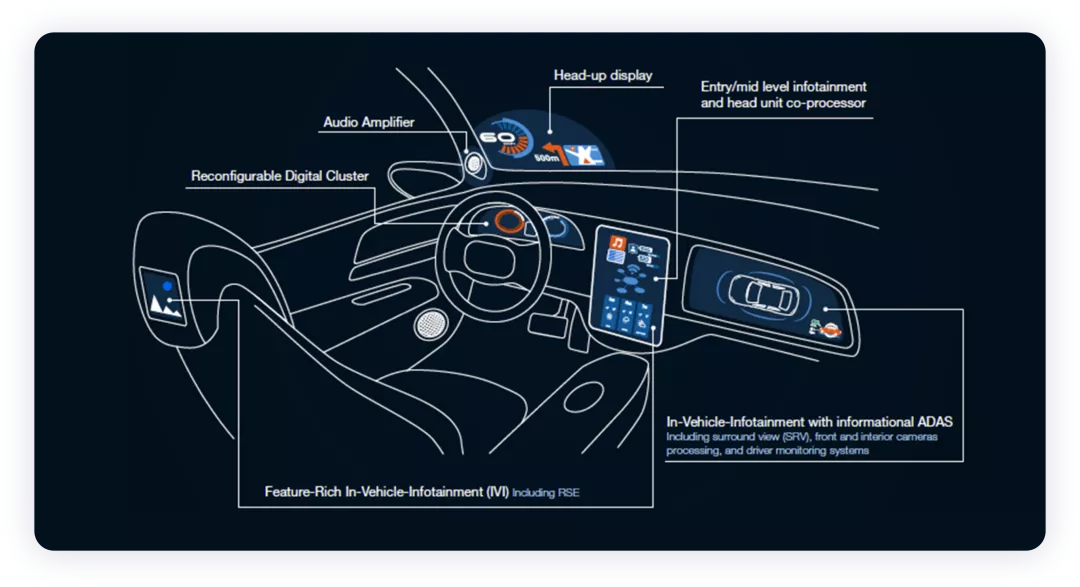

Optical systems in the cabin:

In the cabin, optics shows up in the display devices used for driver interaction, such as LCD instrument panels, central control screens, and HUDs, and in interior ambient lighting, which is becoming increasingly specialized (footwell lights, welcome lights, and so on). These are the new optical devices that give cars a high-tech feel.

We’ll discuss this separately.

Technologies Centered on the Driver-Assistance System

The mainstream driver-assistance system is built around the vehicle camera, the most important optical sensor.

● From the camera’s perspective, the optical imaging mechanism can be summarized as follows: when light passes through the lens, it converges on the image sensor. The sensor records its image information and converts it into a digital signal through an analog-to-digital converter. This signal is then processed by the image processor and finally sent to the image processing chip. The main components of the camera module are the CMOS image sensor and the lens assembly.
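The analog-to-digital step in the imaging chain above can be illustrated with a minimal sketch. It assumes a 10-bit converter and pixel intensities already normalized to the range 0.0 to 1.0; both are illustrative assumptions rather than figures from any specific automotive sensor, and the function names are hypothetical.

```python
def quantize(intensity, bits=10):
    """Map a normalized analog intensity in [0.0, 1.0] to an integer ADC code."""
    max_code = (1 << bits) - 1                # highest code, 1023 for 10 bits
    clamped = min(max(intensity, 0.0), 1.0)   # saturate out-of-range input
    return round(clamped * max_code)


def digitize_row(analog_row, bits=10):
    """Convert one row of analog pixel intensities into digital codes."""
    return [quantize(value, bits) for value in analog_row]


# One hypothetical sensor row: dark, dim, full scale, and an overexposed pixel.
analog_row = [0.0, 0.25, 1.0, 1.3]
digital_row = digitize_row(analog_row)
```

The overexposed value is clipped to full scale, which is one reason the dynamic range of the sensor matters when a camera has to cope with glare or headlights at night.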

Tesla takes a pure-vision approach to advanced autonomous driving: high-performance cameras mounted on the vehicle feed the Tesla Vision deep neural network, which analyzes the driving environment.

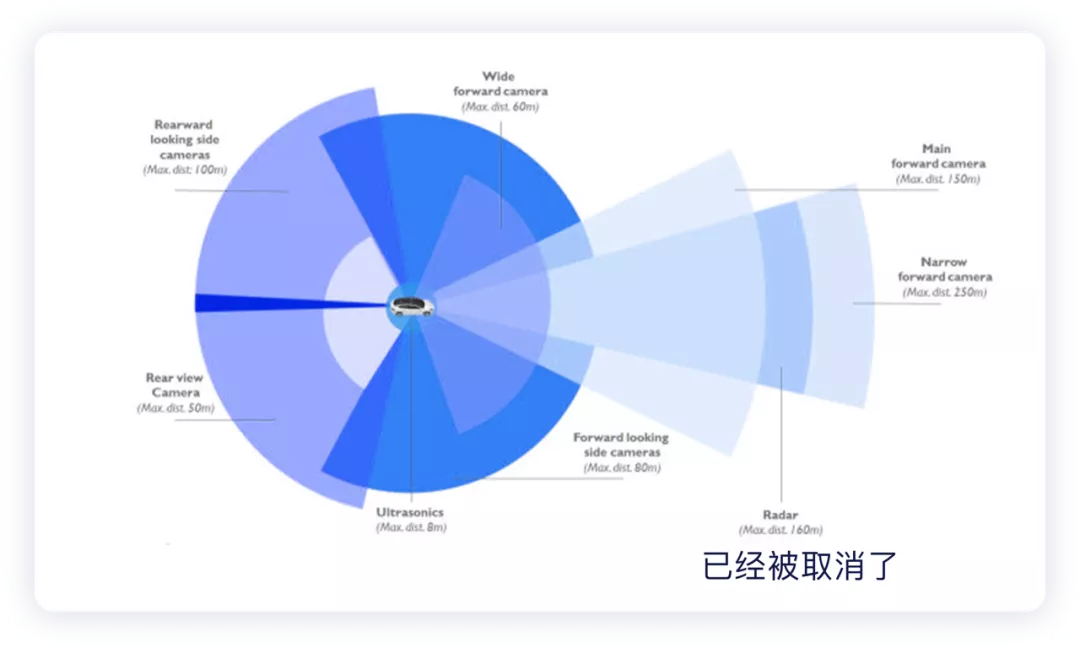

The system places eight cameras around the car body, giving a 360-degree field of view and a maximum monitoring distance of 250 meters. The main front-facing camera, the wide-angle camera, and the narrow-angle camera have maximum monitoring distances of 150 meters, 60 meters, and 250 meters, respectively. These cameras identify objects, support navigation, detect objects that may affect the vehicle, and trigger braking when appropriate. The two side-rear cameras reach 100 meters each, the two side-front cameras reach 80 meters each, and the rear camera reaches 50 meters. The latest AI Day provided more detailed information.
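The camera layout described above can be written down as a small lookup table. This is only a sketch: the distances are the ones quoted in the text, while the key names and the helper function are hypothetical and do not come from any Tesla software.

```python
# Maximum monitoring distance per camera, in meters, as quoted in the text.
# The key names are illustrative labels, not official identifiers.
CAMERA_MAX_RANGE_M = {
    "main_front": 150,
    "wide_front": 60,
    "narrow_front": 250,
    "side_rear_left": 100,
    "side_rear_right": 100,
    "side_front_left": 80,
    "side_front_right": 80,
    "rear": 50,
}


def cameras_covering(distance_m):
    """Return the cameras whose maximum range reaches the given distance."""
    return sorted(
        name for name, max_range in CAMERA_MAX_RANGE_M.items()
        if max_range >= distance_m
    )


camera_count = len(CAMERA_MAX_RANGE_M)            # eight cameras in total
longest_range_m = max(CAMERA_MAX_RANGE_M.values())  # set by the narrow-angle camera
far_cameras = cameras_covering(120)               # only the two longer-range front cameras
```

Laid out this way, it is easy to see that the 250-meter figure comes from a single narrow-angle camera, while coverage to the sides and rear stops at 100 meters or less.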

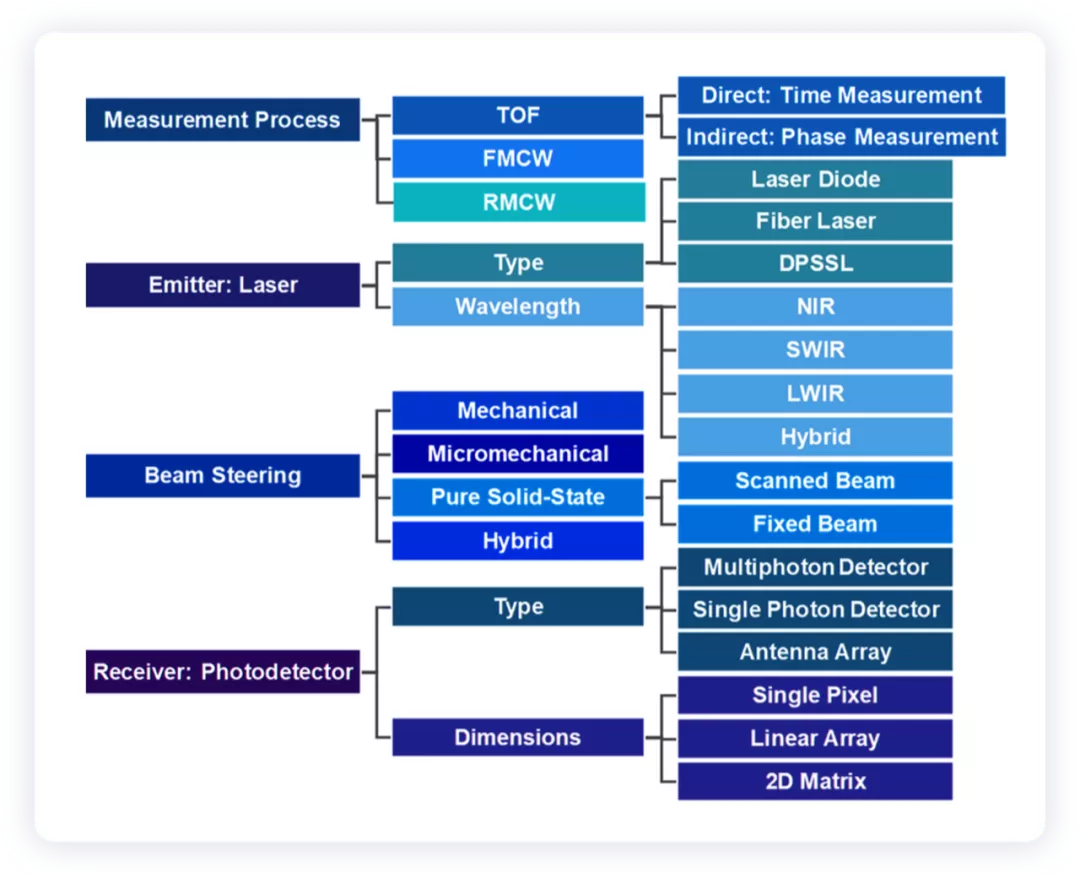

In China, companies have begun a “hardware arms race” in perception cameras on the way to high-level autonomous driving: JiXiang Automobile (15 cameras), IM Automobile (12 cameras), and NIO (11 cameras), up from the 5 cameras used previously. Cameras are also moving to higher resolution, which yields longer detection distances and more information. Some car companies estimate that the perception distance of an 8-megapixel camera is three times that of a 1.2-megapixel camera.

● From the LiDAR perspective, both domestic and foreign suppliers have developed LiDARs for short, medium, and long ranges, and are iterating along different technical routes, including flash (diffuse-light), pure solid-state, MEMS, and mechanical scanning.

This technology has moved ahead without an industry-wide consensus and has already shown its strengths in a variety of vehicle applications.
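The earlier comparison between 8-megapixel and 1.2-megapixel cameras can be sanity-checked with a rough calculation. The sketch below assumes both cameras share the same field of view and aspect ratio, and that an object is recognizable once it spans a fixed number of pixels, so that perception distance scales with the pixel count along one image axis. These are simplifying assumptions, not the method the car companies used.

```python
import math


def linear_resolution_ratio(high_megapixels, low_megapixels):
    """Ratio of pixel counts along one image axis, assuming equal aspect ratios."""
    return math.sqrt(high_megapixels / low_megapixels)


# 8 MP versus 1.2 MP: total pixel count grows about 6.7x,
# so the count along each axis grows by the square root of that.
ratio = linear_resolution_ratio(8.0, 1.2)
print(f"{ratio:.2f}")  # prints 2.58
```

Under these assumptions the gain is about 2.6 times, which is in the same range as the threefold figure quoted above; differences in lens design and sensor sensitivity could plausibly account for the gap.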

Summary: Optics has a deep evolutionary path in both the human-driven and machine-driven directions, and that path is mainly carried out by suppliers. Optical applications in the automotive field remain very practical. As optical devices become intelligent, especially with the integration of cameras and intelligent lighting systems and the consolidation of instrument and central control output in the cockpit system, the separation of software and hardware becomes inevitable. How to assess the value of this shift requires careful consideration.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.