Introduction

As cars become more intelligent, intelligent features and in-car entertainment have become the key ways for car manufacturers to differentiate their products. As the “eyes” of the car, the onboard camera is an essential visual perception sensor for intelligent driving and intelligent cabins.

Application Trends of Onboard Cameras

From assisted driving to autonomous driving, the role of onboard cameras has shifted from imaging lenses (producing pictures for people to view) to perception lenses (feeding the driving system), and their coverage has expanded from a single scene to multiple directions. Vehicles have moved from a single camera to multiple cameras. In short, the number of onboard cameras will keep increasing, and camera resolution will keep rising.

Gradual Increase in the Number of Cameras on Each Vehicle

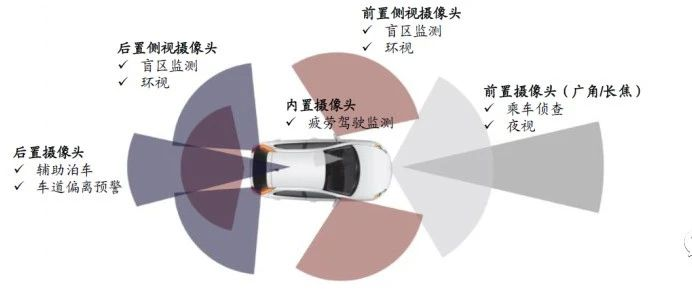

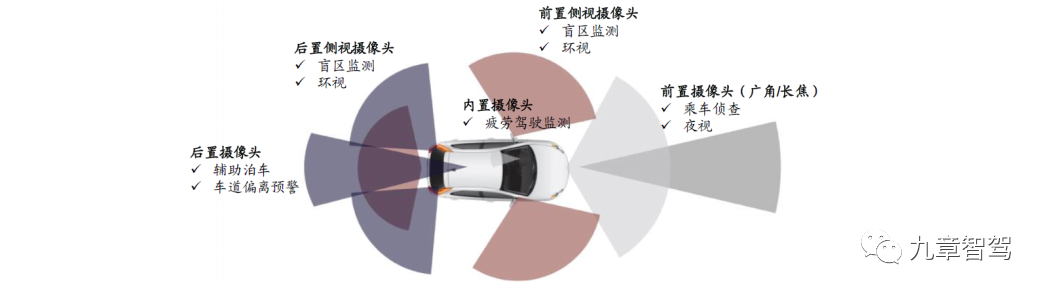

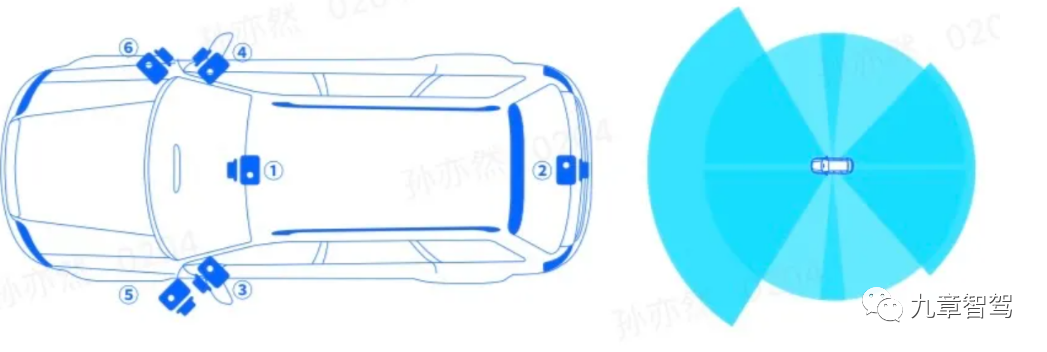

In low-speed parking applications, vehicles have progressed from a single reversing camera to 4-5 panoramic cameras. In driving assistance applications, they have progressed from a single forward-looking monocular camera to a total of 7-8 driving assistance cameras, including 3-4 forward-looking cameras plus side and rear cameras. Some cars are even equipped with driver monitoring and passenger monitoring cameras inside the cabin.

Driven by functional needs, regulatory policies, and falling camera hardware costs, it will become common for L2+ intelligent cars to carry more than 11 cameras.

1) Driven by Functional Needs

Automotive intelligence places higher demands on vehicle and pedestrian safety and on driver monitoring. As the most important onboard sensor, cameras can play the following roles:

Environmental Monitoring Outside the Vehicle: a. assisting or replacing the driver in perceiving the external environment, for example lane departure warning, traffic sign recognition, and pedestrian/cyclist AEB; b. replacing interior/exterior rearview mirrors by providing the driver with camera images that “monitor” the areas behind and beside the vehicle.

Environmental Monitoring Inside the Vehicle: the driver monitoring system (DMS) has been extended to the passenger monitoring system (OMS), and its scope has expanded from monitoring the driver to detecting objects throughout the cabin, such as children left in the car or abnormal situations in the cabin.

2) Driven by Regulatory Policies

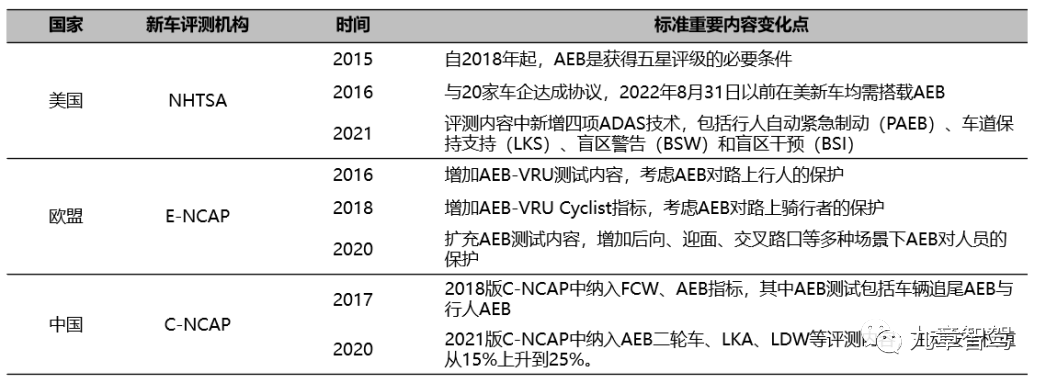

The upgrade of automotive intelligence has also driven corresponding improvements in automotive safety standards. Europe’s E-NCAP, the United States’ IIHS, and China’s C-NCAP keep adding active safety tests to their assessments, covering driving assistance functions such as AEB, FCW, and LDW implemented by the forward-looking camera, as well as driver monitoring and passenger monitoring functions based on the cabin camera.

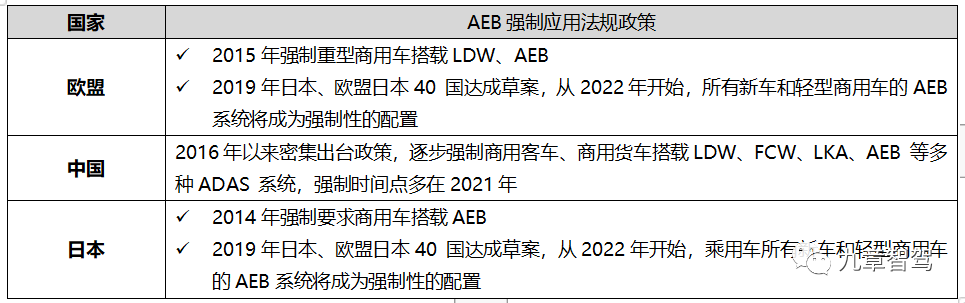

Mandatory AEB regulations

Governments in major countries and regions have set timetables for making AEB standard equipment, and policy coverage has gradually expanded from commercial vehicles to passenger vehicles.

Regulations on electronic side mirrors

Regulatory restrictions on electronic side mirrors were lifted in the European Union and Japan in 2016 and 2017 respectively, so electronic side mirrors developed first in these two regions.

Although electronic side mirrors cannot yet replace traditional rearview mirrors in China, in June 2020 the Ministry of Industry and Information Technology released the draft “Performance and Installation Requirements for Indirect Vision Devices for Motor Vehicles” for public comment, which reassured the industry about the application of electronic side mirrors in the domestic market.

DMS driver monitoring related regulations

The latest 2025 roadmap released by Europe’s E-NCAP requires all new cars to be equipped with DMS starting from July 2022. In China, a mandatory DMS installation regulation for commercial vehicles has been under development and is expected to be issued by the end of this year at the earliest.

3) Simplification of camera hardware and cost reduction

Centralized control architectures are driving the simplification of hardware, and cameras are gradually being redefined as lightweight sensors that only capture images and do no computing. As the vehicle’s E/E architecture evolves, ECUs move from a distributed to a centralized layout and computing power is concentrated. Sensor hardware, including cameras, can therefore be simplified: visual computing and processing modules move to the “central brain”, and the camera is used only for “image capture”.

The camera’s hardware thus becomes simpler, with the CMOS image sensor (CIS) and the lens as its core components, and its hardware cost falls sharply. According to an industry insider: “A camera with 1.2 million pixels averages around 150 yuan, a 5 million pixel camera is priced at around 300 yuan, and an 8 million pixel camera at around 500 yuan.”
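Dividing the prices quoted above by resolution gives a rough cost per megapixel. This is a minimal sketch using only those three figures; they are averages for whole cameras, so the result is an indicator of the trend rather than a cost model.

```python
# Average prices quoted by the industry insider (yuan), keyed by resolution in megapixels.
QUOTED_PRICES_YUAN = {1.2: 150, 5.0: 300, 8.0: 500}


def yuan_per_megapixel(prices: dict) -> dict:
    """Return the approximate price per megapixel for each quoted camera."""
    return {mp: round(price / mp, 1) for mp, price in prices.items()}


if __name__ == "__main__":
    for mp, cost in yuan_per_megapixel(QUOTED_PRICES_YUAN).items():
        print(f"{mp} MP camera: ~{cost} yuan per megapixel")
```

On these figures the cost per megapixel falls from about 125 yuan at 1.2 MP to about 60 yuan at 5 MP and stays near that level (62.5 yuan) at 8 MP.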

Camera resolution is increasing

As autonomous driving levels rise, so do the requirements for cameras. In-car cameras were first used for reversing assistance and driving recording, mainly providing images and video for people to view, and resolutions of a few hundred thousand pixels were sufficient. As functions and application scenarios expanded, the focus shifted to helping the system perceive its surroundings while driving. Resolution requirements in the automotive industry have risen accordingly, from 300,000 pixels to over one million and now up to two million pixels. With further technological progress and market demand, 8 million-pixel high-definition cameras are planned for future advanced autonomous vehicles to identify and monitor distant targets.

Whether to use 8 million-pixel cameras depends on a car manufacturer’s costs and technical capability. As the head of autonomous driving at one car manufacturer once put it, piling on hardware may create the false impression that more sensors mean better autonomous driving, but if testing and algorithms fail to keep up, all the hardware is useless.

If every camera reaches 8 million pixels, the computing platform will have far more data to process, and the overall system cost will inevitably rise. Camera cost should therefore be judged from the perspective of the whole system rather than in isolation. Moreover, unlike smartphone cameras, onboard cameras cannot rely on pixel count alone; they require algorithms capable of exploiting high resolution.

Without algorithm and testing capabilities that match a high-resolution camera, adopting one puts the cart before the horse: the configuration looks better on paper, but the performance gains are limited and the camera remains underused. In that case, an 8 million-pixel camera is merely a marketing gimmick that adds hype but not performance.

Although algorithm and testing capabilities may not yet keep pace with camera technology, the move toward high-resolution cameras is an undeniable trend driven by both technology and the market.
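One way to see why the platform’s load rises with resolution is the raw data rate each camera produces: width × height × bit depth × frame rate. This is a minimal sketch under assumed parameters that are not in the article: 3840×2160 for an 8 MP sensor, 1920×1080 for a 2 MP sensor, 12-bit RAW output at 30 fps, and no compression. Real camera links and ISPs differ, so treat the results as orders of magnitude.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CameraConfig:
    width: int           # horizontal pixels
    height: int          # vertical pixels
    bits_per_pixel: int  # RAW bit depth
    fps: int             # frames per second

    def raw_gbps(self) -> float:
        """Uncompressed sensor output in gigabits per second."""
        return self.width * self.height * self.bits_per_pixel * self.fps / 1e9


def total_raw_gbps(cameras) -> float:
    """Aggregate uncompressed data rate of several cameras."""
    return sum(cam.raw_gbps() for cam in cameras)


# Assumed configurations: 12-bit RAW at 30 fps.
CAM_2MP = CameraConfig(1920, 1080, 12, 30)
CAM_8MP = CameraConfig(3840, 2160, 12, 30)

if __name__ == "__main__":
    print(f"2MP: {CAM_2MP.raw_gbps():.2f} Gbit/s per camera")
    print(f"8MP: {CAM_8MP.raw_gbps():.2f} Gbit/s per camera")
    print(f"11 x 8MP: {total_raw_gbps([CAM_8MP] * 11):.1f} Gbit/s")
```

Under these assumptions an 8 MP camera produces four times the data of a 2 MP camera (about 3.0 versus 0.75 Gbit/s), and eleven 8 MP cameras together produce roughly 33 Gbit/s for the central platform to ingest and process.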

Application of 8 million-pixel cameras

At this year’s Shanghai Auto Show, some OEMs announced plans to adopt 8 million-pixel cameras, and suppliers of autonomous driving perception solutions also adopted 8 million-pixel cameras as the primary front-view sensor.

Application by car manufacturers

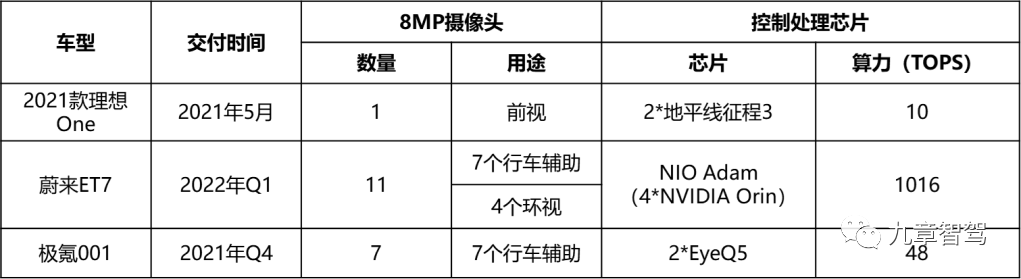

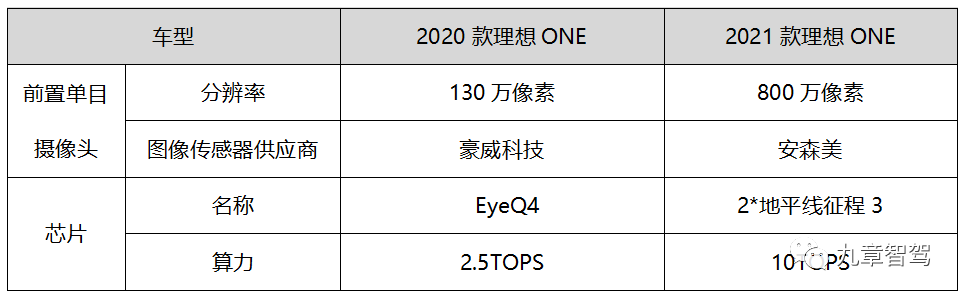

1) 2021 Ideal ONE

It is the first mass-produced car equipped with an 8 million-pixel camera. The camera detects cars and pedestrians at more than 200 m, raising the effective detection distance from the previous 150 m, and widens the horizontal field of view from the previous 52° to 120°. For decision-making, the 2021 Ideal ONE switches its assisted driving chip from EyeQ4 to Horizon Journey 3, raising computing power from 2.5 TOPS to 10 TOPS.

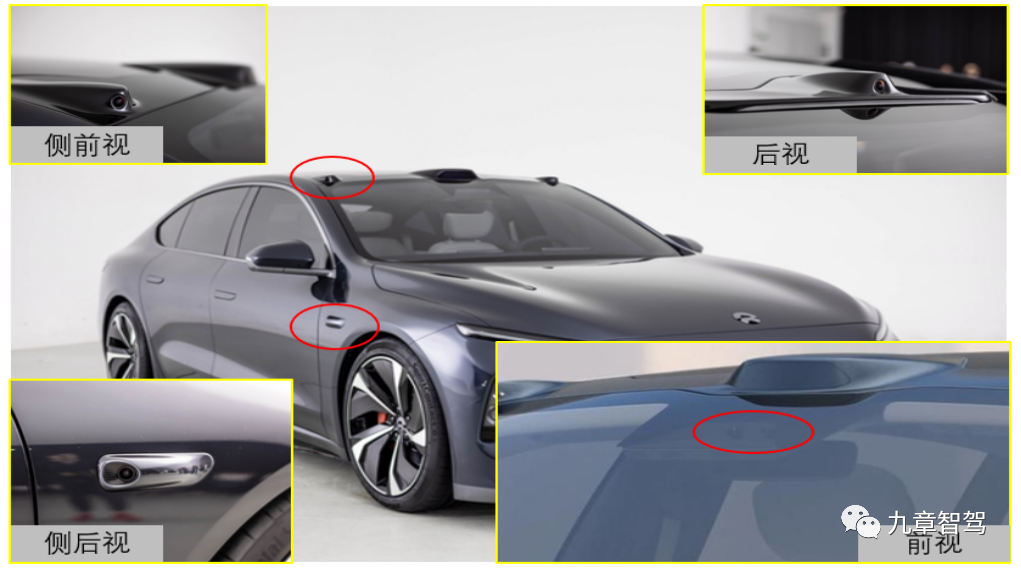

2) NIO ET7

NIO ET7 is scheduled for launch in the first quarter of 2022. It is equipped with eleven 8MP cameras: 7 driving assistance cameras (4 forward-facing and 3 rear-facing) and 4 panoramic cameras. The driving assistance cameras are supplied by Lianchuang Electronics and the panoramic cameras by Desay SV.

For control, NIO ET7 uses its self-developed supercomputing platform, NIO Adam, which contains four NVIDIA Orin chips in total: 2 main control chips, 1 redundant backup chip, and 1 chip dedicated to group intelligence and personalized training, with a combined computing power of 1016 TOPS.

3) JiKe 001

JiKe 001 is equipped with the Falcon Eye Vidar vision fusion perception system, which has 15 high-definition cameras: seven 8MP long-range high-definition cameras, 4 short-range panoramic high-definition cameras, 2 in-vehicle monitoring cameras, 1 external monitoring camera, and 1 rear streaming media camera. For control, it uses two Mobileye EyeQ5 chips with a combined computing power of close to 50 TOPS.
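The camera and computing totals quoted for these two vehicles can be cross-checked from their parts. The per-chip figures below (254 TOPS for an NVIDIA Orin, 24 TOPS for a Mobileye EyeQ5) are commonly cited vendor peak numbers assumed for this sketch, not values given in the article.

```python
# Commonly cited per-chip peak figures (assumptions, not from the article).
ORIN_TOPS = 254   # NVIDIA Orin
EYEQ5_TOPS = 24   # Mobileye EyeQ5

# NIO ET7: camera roles and the four Orin chips in NIO Adam.
nio_cameras = {"driving assistance": 7, "panoramic": 4}
nio_adam_chips = {"main": 2, "redundant backup": 1, "group intelligence/training": 1}
nio_adam_tops = sum(nio_adam_chips.values()) * ORIN_TOPS

# JiKe 001: camera roles and two EyeQ5 chips.
jike_cameras = {
    "8MP long-range": 7,
    "short-range panoramic": 4,
    "in-vehicle monitoring": 2,
    "external monitoring": 1,
    "rear streaming media": 1,
}
jike_tops = 2 * EYEQ5_TOPS

if __name__ == "__main__":
    print(f"NIO ET7: {sum(nio_cameras.values())} cameras, {nio_adam_tops} TOPS")
    print(f"JiKe 001: {sum(jike_cameras.values())} cameras, {jike_tops} TOPS")
```

With these per-chip values, four Orin chips give the 1016 TOPS quoted for NIO Adam, and two EyeQ5 chips give 48 TOPS, consistent with “close to 50 TOPS”.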

Application of Autonomous Driving System Solution Providers

1) DJI

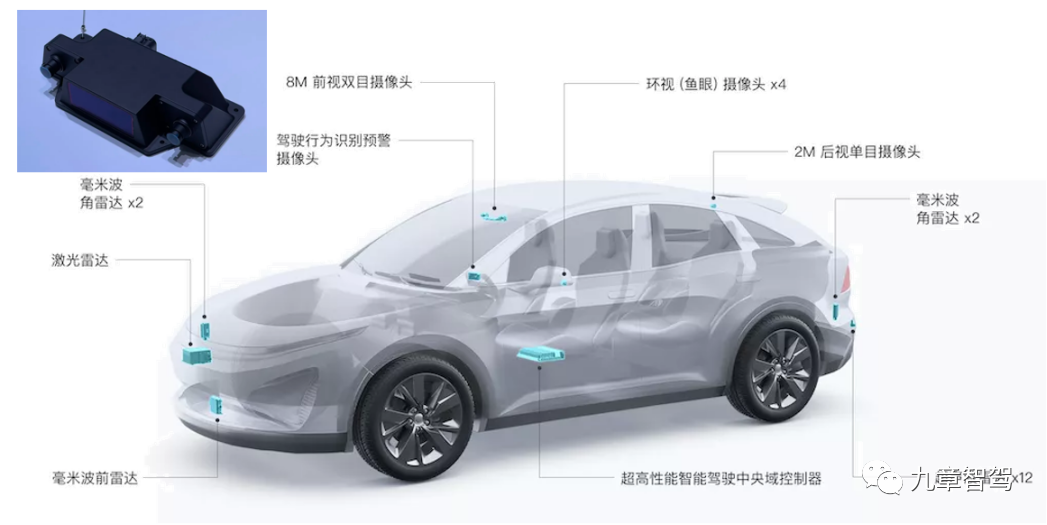

At the 2021 Shanghai Auto Show, DJI’s D130 and D130+ autonomous driving solutions adopted a dual-camera front-view module built from two 8 million-pixel cameras.

a. Perception Solution: 1 front LiDAR + 1 front millimeter-wave radar + 4 corner radars + front view (dual 8MP cameras with identical specifications) + 4 panoramic cameras + 1 rear-view camera (2MP) + 1 DMS monitoring camera

b. Intelligent Driving Central Domain Controller (computing power up to 100 TOPS)

D130/D130+ Autonomous Driving System Solution (Image Source: DJI Promotional Materials)

2) Horizon

At the 2021 Shanghai Auto Show, Horizon unveiled its autonomous driving solutions, Matrix Mono and Matrix Pilot.

- Matrix Mono uses an 8-megapixel monocular camera and a Horizon Journey 3 chip to achieve L2-level autonomous driving functions.
- Matrix Pilot uses six cameras: one front-facing 8-megapixel monocular camera with a 120-degree horizontal field of view, and five 2-megapixel cameras with a 100-degree horizontal field of view on the left, right, and rear of the vehicle to detect traffic in the diagonal and rear directions. It also uses a Horizon Journey 3 chip for control to achieve L2+ level autonomous driving functions.

Relationship between 8MP Camera, Computation Power, Algorithms, and Data

As the debate over the 8-megapixel camera reignites with its first mass production application on the 2021 Ideal ONE, many are curious about the relationship between the 8-megapixel camera, computation power, algorithms, and data. For instance, what is the required computation power for a chip to support one or more 8-megapixel cameras, and does the existing algorithm need to be rewritten? Can the data gathered from low-resolution cameras still be reused now that more high-resolution cameras are available?

8MP Camera and Computation Power

What determines the computing power a camera needs? Is there a formula for estimating the computing power required by one or more 8-megapixel cameras in a car? The required computing power depends not only on the camera’s own parameters, such as bit depth, resolution, and frame rate, but also on its application scenario and the algorithm model used. With a fixed algorithm recognizing the same objects, a higher resolution requires more computing power; in general, however, the application scenario, the algorithm model, and the strategy have a greater impact.

According to Li Lele, vice president of Desay SV, “The higher the camera resolution, the greater the required storage and computing capacity. However, there is no direct correspondence between resolution and required computing capacity, and the relationship is not easy to capture with a formula.”

“For example, among the planned new energy vehicle models, camera configurations are largely the same, but platform computing power varies greatly. First, the algorithm models may differ. Second, computing demand keeps growing, so hardware computing resources have to be reserved in advance, and manufacturers differ in how much headroom they reserve.”

“It also depends on the specific application scenario. For example, if the task is limited to identifying vehicles, pedestrians, and lane lines with a camera of a given resolution, the computing demand is relatively light.”

“If the application requires the system to recognize more targets, at longer distances and with higher accuracy, it must identify not only those three categories but also traffic lights, speed limit signs, road signs, poles, and lane lines across multiple lanes. Obstacle classification must cover not only vehicles and pedestrians but also cyclists, tricycles, and even non-standard obstacles. Such scenarios clearly demand more computing power.”
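Li Lele’s point that both resolution and model choice drive computing demand can be illustrated with a toy count of convolution multiply-accumulates (MACs). This is a minimal sketch of a hypothetical four-stage backbone, not any production network: the channel widths, 3×3 kernels, and stride-2 stages are assumptions, and it ignores detection heads, memory traffic, and accelerator efficiency.

```python
def conv_macs(height: int, width: int, c_in: int, c_out: int,
              kernel: int = 3, stride: int = 1) -> int:
    """MACs of one 2D convolution with 'same' padding."""
    out_h = -(-height // stride)  # ceiling division
    out_w = -(-width // stride)
    return out_h * out_w * c_in * c_out * kernel * kernel


def backbone_macs(height: int, width: int, channels: list) -> int:
    """Toy backbone: one stride-2 3x3 conv per stage on an RGB input (hypothetical)."""
    total, c_in = 0, 3
    for c_out in channels:
        total += conv_macs(height, width, c_in, c_out, stride=2)
        height, width = -(-height // 2), -(-width // 2)
        c_in = c_out
    return total


# Hypothetical channel widths for a light model and a heavier model.
LIGHT = [16, 32, 64, 128]
HEAVY = [64, 128, 256, 512]
RES_2MP = (1080, 1920)
RES_8MP = (2160, 3840)

if __name__ == "__main__":
    for name, chans in (("light", LIGHT), ("heavy", HEAVY)):
        for label, (h, w) in (("2MP", RES_2MP), ("8MP", RES_8MP)):
            print(f"{name} model @ {label}: {backbone_macs(h, w, chans) / 1e9:.2f} GMACs")
```

In this toy case, quadrupling the pixel count roughly quadruples the MACs (about 2.0 to 8.1 GMACs for the light model), while switching to the wider model at the same 2 MP resolution raises them by about 15×. This echoes, without proving, the view that the scenario and model can matter more than resolution alone.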

As to whether the original algorithm must be rewritten when an 8-megapixel camera replaces a low-resolution one, most professionals believe it does not need a complete rewrite, though some parts do. There is no clear answer as to what share must be rewritten, because OEMs differ in their development status and plans.

One professional explained: “With a high-resolution camera, the algorithm does not need a complete rewrite, but the deep learning model must be retrained. Previously accumulated data can be used for pre-training, but new high-resolution data must be collected and used alongside it.”

Li Lele of Desay SV said: “Whether the algorithm model needs to be adjusted depends on both the camera and the controller’s SoC. Controllers that support 1-megapixel cameras tend to be low-end, with weak computing power and relatively simple application scenarios, such as lane line detection, or ACC and AEB based on simple object recognition fused with radar. Most algorithms for these scenarios may still rely on traditional computer vision pattern recognition and may not yet use neural networks.”

“Simple application scenarios and traditional algorithms can run on ARM processors without accelerators. Even for AEB, as NCAP standards are upgraded, camera resolution and field of view must increase, which requires stronger processing power and upgraded algorithms. The algorithm strategy and engineering experience can be inherited, but they cannot simply be copied; they need to be upgraded in step.”

“Today, domain controllers paired with 8 million-pixel cameras use much more powerful SoCs, mainly running AI-based algorithms with more complex models. In this situation, most of the original algorithms cannot be reused; only the algorithm strategy can.”

“Put simply, if consecutive generations of the system use the same type of control SoC and both run today’s mainstream neural network and deep learning algorithms, most of the original algorithm models can be reused when the camera is upgraded to 8 million pixels. However, algorithms advance very quickly, and each product generation’s algorithms have limitations of their own. Algorithm upgrades usually have to follow chip upgrades.”

“For example, XPeng’s Xpilot3.5 system uses three 2-megapixel front-view cameras with Nvidia’s Xavier as the main control chip. In the next generation, the control SoC is to be upgraded to Nvidia’s higher-performance Orin X chip and the cameras to 8 million pixels. Because both generations use the same type of SoC, the previous generation’s algorithm model can largely be reused, though it will also be upgraded and made more complex to improve overall performance.”

8 Million Pixel Camera and Data

The algorithm modules of autonomous driving, especially the perception and prediction modules, are mostly data-driven, indicating the importance of data in algorithm iteration. Can the data collected from low-resolution sensors be used for algorithm training after upgrading to an 8 million pixel camera?

The answer is yes. When training models for an 8 million pixel camera, earlier data can carry over some capabilities, such as detection accuracy and false alarm rate. However, because the 8 million pixel camera detects objects at greater distances, new data must be collected to cover new scenarios and extend its performance boundaries.

Take Tesla as an example: even though it has accumulated a large amount of scene data through shadow mode over the years, switching to an 8 million pixel camera would still require collecting a great deal of new scene data to iterate on the algorithm model and gradually approach the best performance the camera can deliver.

Other Thoughts on 8 Million Pixel Camera

What are the main application scenarios for 8 million pixel cameras in the future?

Future applications of 8 million-pixel car cameras include exterior views (front, side, and rear), panoramic view, electronic rearview mirror cameras, and interior cameras. Of these, front view and interior cameras are the most likely to be widely adopted in the near future.

Front view is the most urgent application for high-resolution cameras because it must handle the most scenarios and the most complex object recognition tasks. It needs higher image resolution to recognize small objects at long distances, and also a sufficiently wide field of view to react promptly to targets entering the current lane.

Side and rear views require shorter detection distances than front view, and their recognition tasks are relatively simple: they mainly detect moving targets in adjacent lanes and the current lane, with no need to recognize traffic lights, road signs, and the like. Current 2-5 million pixel cameras fully meet side/rear-view requirements, so, considering cost and performance, demand for 8 million pixel cameras in these scenarios may not be urgent in the short term.

For interior vision, some traditional OEMs fit high-resolution color cameras (8 million pixels) in the cabin to enhance the sense of luxury and technology and to support entertainment and office uses such as selfies and video conferencing.

For electronic rearview mirrors, 2 million pixels is still mainstream, but 8 million pixel cameras will be needed in the future. Electronic rearview mirrors place requirements on camera frame rate, which should be at least 90 frames per second. In the long run, electronic rearview mirrors and side/rear-view perception are trending toward sharing the same cameras.

For panoramic view, cameras mainly provide images for people to view, but they can also assist perception, for example by detecting the lane lines of the current lane. Fisheye cameras currently in use are mostly 1-2 million pixels: those of around 1 million pixels are widely deployed, and 2 million pixel panoramic cameras have only just entered volume production.

Compared to 1-2 million pixel cameras, what performance advantages does an 8 million pixel camera have? Will the improvement of camera resolution affect other performance parameters?

An 8 million pixel camera can achieve a longer detection distance while also having a wider field of view. Taking front-view cameras as an example, a 1-2 million pixel camera with an effective detection distance of 100-150 meters has a field of view of only about 50 degrees, whereas an 8 million pixel camera can reach 200-250 meters with a field of view of about 120 degrees. High-resolution cameras also offer higher dynamic range (HDR) and better LED flicker mitigation (LFM).

Under otherwise identical conditions, in particular the same sensor size, the higher a camera’s resolution, the smaller its individual pixels. Smaller pixels have lower photoelectric conversion efficiency in low light, which degrades low-light performance. High-resolution cameras can see farther and more clearly, but their night vision is relatively weaker. When selecting a camera system, therefore, pixel count should not be pursued blindly; a balance must be struck.
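To see how pixel count translates into range, the sketch below uses an ideal pinhole model: a target of width W at distance D covers f·W/D horizontal pixels, where the focal length in pixels is f = (N/2)/tan(HFOV/2) for N horizontal pixels. All inputs are illustrative assumptions rather than figures from the article: 1920 and 3840 horizontal pixels for 2 MP and 8 MP sensors, a 120° field of view for both, a 1.8 m wide vehicle, and a hypothetical minimum of 10 pixels for detection. Lens distortion, optics quality, the ISP, and the detection algorithm are ignored, and in practice these shape real detection range.

```python
import math


def focal_length_px(h_pixels: int, hfov_deg: float) -> float:
    """Focal length in pixels of an ideal, distortion-free pinhole camera."""
    return (h_pixels / 2) / math.tan(math.radians(hfov_deg) / 2)


def pixels_on_target(target_width_m: float, distance_m: float,
                     h_pixels: int, hfov_deg: float) -> float:
    """Horizontal pixels covered by a target of the given width at the given distance."""
    return focal_length_px(h_pixels, hfov_deg) * target_width_m / distance_m


def max_range_m(target_width_m: float, min_pixels: float,
                h_pixels: int, hfov_deg: float) -> float:
    """Farthest distance at which the target still covers min_pixels."""
    return focal_length_px(h_pixels, hfov_deg) * target_width_m / min_pixels


# Illustrative assumptions (not from the article).
VEHICLE_WIDTH_M = 1.8
MIN_PIXELS = 10  # hypothetical detection threshold
HFOV_DEG = 120

if __name__ == "__main__":
    for label, h_pixels in (("2MP", 1920), ("8MP", 3840)):
        rng = max_range_m(VEHICLE_WIDTH_M, MIN_PIXELS, h_pixels, HFOV_DEG)
        px_200 = pixels_on_target(VEHICLE_WIDTH_M, 200, h_pixels, HFOV_DEG)
        print(f"{label}: {px_200:.1f} px on target at 200 m, "
              f"range for {MIN_PIXELS} px = {rng:.0f} m")
```

At the same 120° field of view, doubling the horizontal pixel count doubles the distance at which the vehicle still covers 10 pixels (about 100 m versus about 200 m under these assumptions). Equivalently, the extra resolution can be divided between longer range and a wider field of view.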
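The pixel-size effect follows from simple geometry: on a sensor of fixed width, pixel pitch equals width divided by the number of horizontal pixels, and the light each pixel gathers scales roughly with its area. This minimal sketch assumes a hypothetical 7.68 mm-wide active area with 1920 or 3840 horizontal pixels, and ignores fill factor, microlens design, and sensor technology, which real automotive sensors use to offset part of the loss.

```python
SENSOR_WIDTH_UM = 7680  # hypothetical active-area width (7.68 mm)


def pixel_pitch_um(sensor_width_um: float, h_pixels: int) -> float:
    """Pixel pitch in micrometres for a sensor of the given active width."""
    return sensor_width_um / h_pixels


def relative_light_per_pixel(pitch_um: float, reference_pitch_um: float) -> float:
    """Light per pixel relative to a reference, assuming it scales with pixel area."""
    return (pitch_um / reference_pitch_um) ** 2


if __name__ == "__main__":
    pitch_2mp = pixel_pitch_um(SENSOR_WIDTH_UM, 1920)  # 4.0 um
    pitch_8mp = pixel_pitch_um(SENSOR_WIDTH_UM, 3840)  # 2.0 um
    ratio = relative_light_per_pixel(pitch_8mp, pitch_2mp)
    print(f"2MP pitch {pitch_2mp:.1f} um, 8MP pitch {pitch_8mp:.1f} um, "
          f"8MP pixel collects ~{ratio:.0%} of the light")
```

On the same sensor width, quadrupling the pixel count halves the pitch and leaves each pixel roughly a quarter of the light, which is why low-light performance has to be weighed against resolution.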

Why are traditional OEMs in foreign countries relatively conservative compared to emerging automakers in using 8-megapixel cameras?

Firstly, the development process and methodological concepts are different. Emerging automakers in China are relatively more aggressive in the application of new products and technologies, using the rapid iteration method to apply them even before the technologies and products are completely mature. However, traditional OEMs in foreign countries have higher requirements for their development processes, resulting in longer development cycles.

Secondly, foreign traditional OEMs still mainly cooperate with Mobileye, and most rely on Mobileye’s algorithms. Switching to a chip supplier whose hardware is decoupled from algorithms, such as Nvidia’s high-performance computing platform or other higher-performance platforms, would require corresponding algorithm support, but Europe currently lacks algorithm companies to provide it. If an OEM wants a high-resolution multi-camera solution but has neither a high-performance computing platform nor the corresponding algorithm capability, simply fitting high-resolution cameras is meaningless.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.