Author: James

In the era of smart cars, a car can hardly call itself smart without an intelligent cockpit and intelligent driving features.

We recently received a Marvel R as a long-term test car. Let’s take a look at how its intelligent cockpit performs.

Let’s start with the basics:

The Marvel R is the first pure electric vehicle from SAIC’s R Automotive brand and was officially launched in February this year.

Hardware: The Marvel R has a 12.3-inch LCD instrument display and a 19.4-inch central touch screen. The latter uses a Corning Gorilla Glass touch panel with a resolution of up to 1200 × 1600. The system runs on a MediaTek (MTK) chip.

Infotainment system: the Zebra Intellitronics Venus intelligent system, a new-generation connected-car system developed by Zebra Intellitronics on its self-developed AliOS operating system.

Interaction: Four interaction modes are available: touch screen, voice, physical buttons, and natural body-language interaction (gestures and head movements).

Next, let’s get to the point.

Instrument panel: MR-Driving is very practical

Together, the 12.3-inch LCD instrument panel and the 19.4-inch central touch screen make up the Marvel R’s entire cockpit interface.

On the instrument panel, driving information is shown on the left and entertainment information on the right, and both can be customized. The middle shows the vehicle’s status, including the road ahead and driver-assistance information.

Of course, these functions are all basic.

However, I think R Automotive is one of the companies that make the best use of the instrument screen.

SAIC was earlier the first to put AR-Driving into mass production, making real use of the instrument screen. The Marvel R’s MR-Driving now takes this a step further: it “integrates AR (augmented reality) and VR (virtual reality) scenes to switch seamlessly between virtual and real driving assistance, combining ultra-long-range and short-range perception.”

In plain terms, it shows navigation information at just the right moments. For example, when you pass through an intersection or enter or exit a ramp, it displays the real-time road view with animated arrows showing the direction of travel, and the arrows line up with the actual road very accurately.

It is worth noting that getting this timing right is actually very difficult: the display has to appear exactly when it is needed without distracting the driver, and many challenges remain. Based on our use over the past few days, however, the Marvel R team has tuned it well.

In addition, when a pedestrian crosses from left to right, the Marvel R marks them on the instrument panel and gives a voice prompt to keep driving safe. This is very useful for novice drivers and for drivers who are unfamiliar with the road.

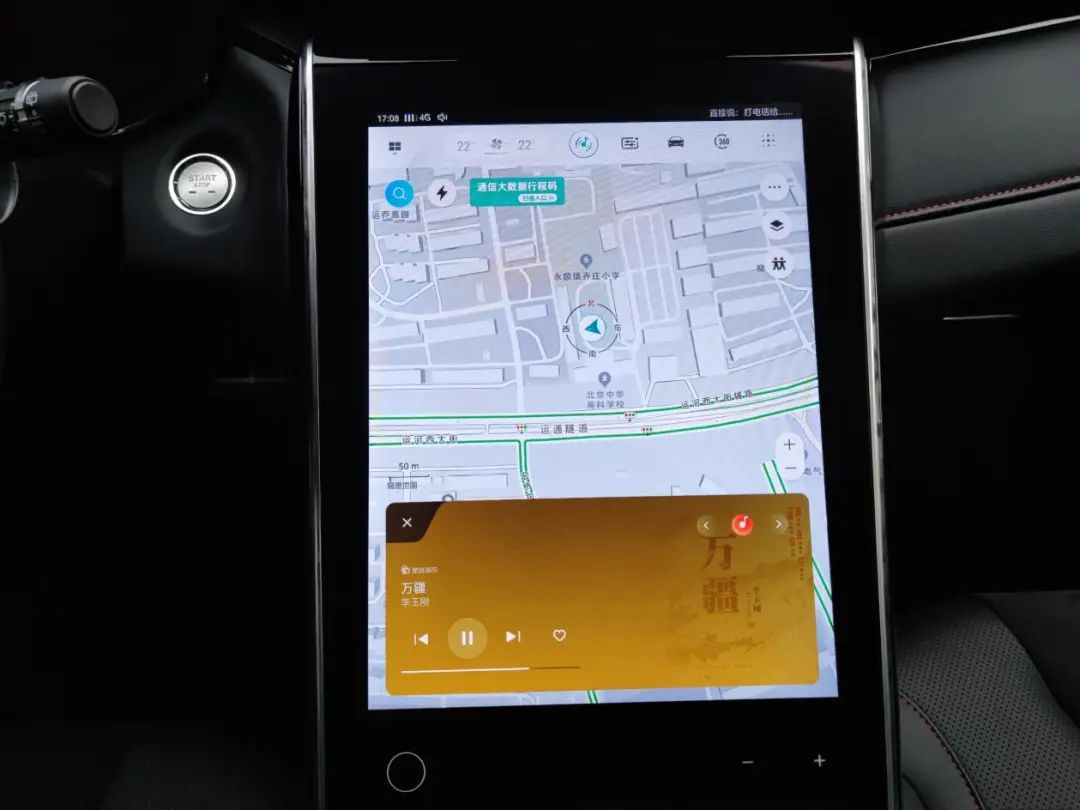

Since we are on the subject of maps, let’s look at them in more detail. The Marvel R’s in-car map comes from Amap and is highly accurate; it can also display traffic incidents. When a faster route is available, it proactively asks whether you want to switch, which is very useful. At complex intersections, it automatically switches to 3D street-view navigation.

For navigation, the infotainment system can basically replace your phone. The Marvel R deserves a thumbs-up for this.

Zebra Intellitronics system: AB World

The Marvel R’s infotainment system runs the Zebra Intellitronics Venus intelligent system.

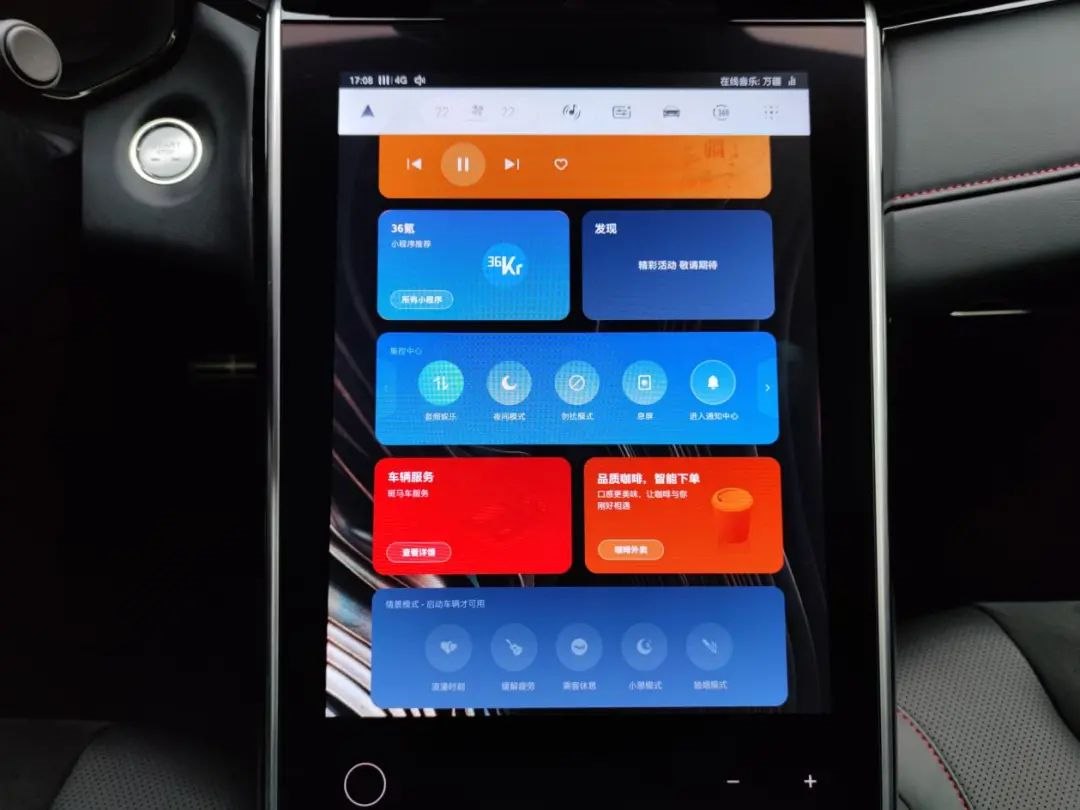

The system uses an “AB World” layout.

In World A, the map is the desktop, and all other applications serve the map.

World B uses a card layout that offers services such as music, navigation, and trip information. You can choose which services appear, and you can switch the card style to suit your preferences.

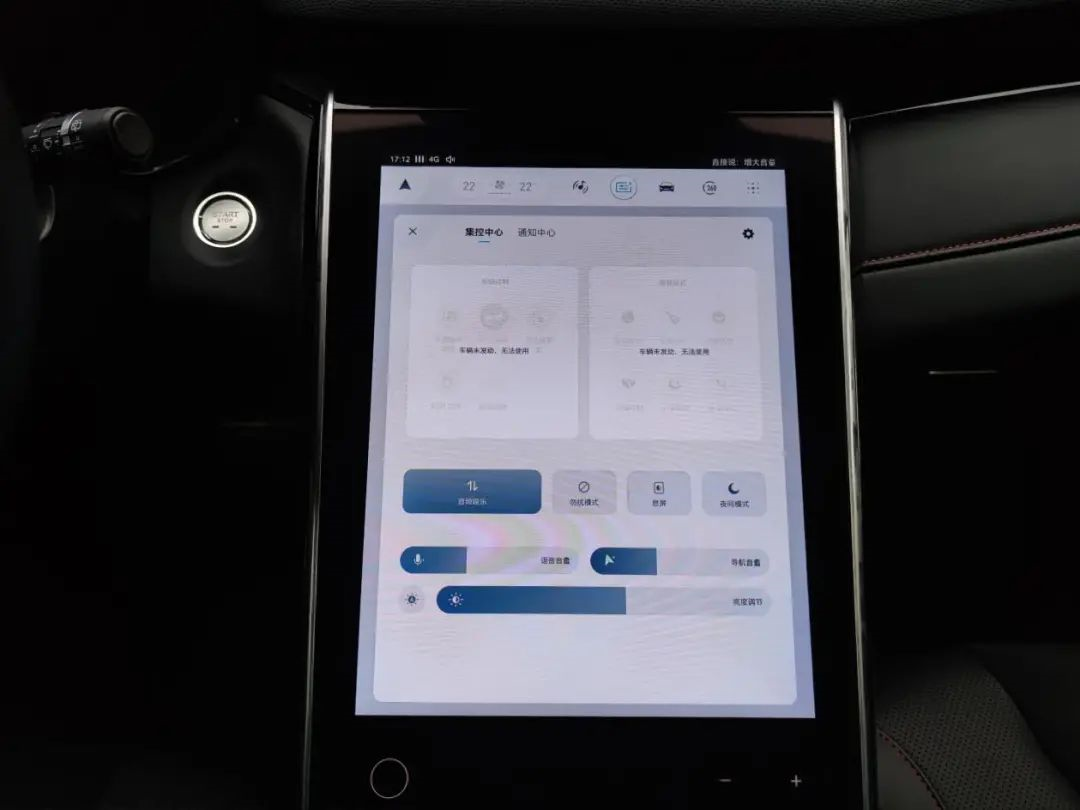

You can switch between the two worlds with the button in the upper-left corner of the central screen. Frequently used applications such as music and air conditioning sit in the top bar.

The display mode also differs between the two worlds. In World A, music, the control center, and quick launch open as small windows over the map in the lower part of the central screen, so they are easy to operate without interfering with navigation. In World B, these applications open in full screen.

Overall, the system’s logic is fairly clear and its hierarchy is not too deep; you can generally reach the application you want in one or two steps.

Intelligent voice: Praiseworthy

The Marvel R’s intelligent voice recognition is impressive. Whether you use official commands, mixed English and Chinese, or natural language, it recognizes and executes them well.

Once you set your home and work addresses in the map, simply say “I want to go home” or “navigate to work” and the car will automatically start navigation. You can also change songs by simply saying “next song.”

This is thanks to the Marvel R’s wake-word-free voice recognition: you don’t need to wake the assistant first, you simply say a supported command.

Being able to skip the wake-up step and just get going is a really great experience.

The Marvel R also supports continuous conversation. Whether you wake the assistant with “Hey Zebra” or use a wake-word-free command, the voice indicator stays on screen and you can keep issuing commands. You can also set how long the continuous-conversation window lasts in the settings.

The voice assistant also greets you when you get in the car. Although its tone is a bit mechanical, it can still give singletons a sense of being cared for 🙂

However, voice control of the car itself is still limited; for example, you cannot adjust hardware such as the seats by voice. We suggest that the Marvel R expand its voice-control capabilities to provide a better user experience.

Beyond voice, touch, and button interactions, the Marvel R is also experimenting with natural body-language interaction.

This feature uses cameras to recognize eye contact, gestures, and head movements. For example, you can adjust the air conditioning or the sunroof directly with gestures. If the system detects that you are smoking, it asks whether you want to open a window; you only need to nod, and it opens the window for you.

However, these features are still in beta, and in our experience the recognition accuracy still needs improvement.

Applications: The Marvel R’s small ecosystem still needs work

Although the Marvel R’s system currently has no app store, quite a few third-party applications are available. For example, music and online radio are provided by Kuwo Music and Ximalaya, respectively.

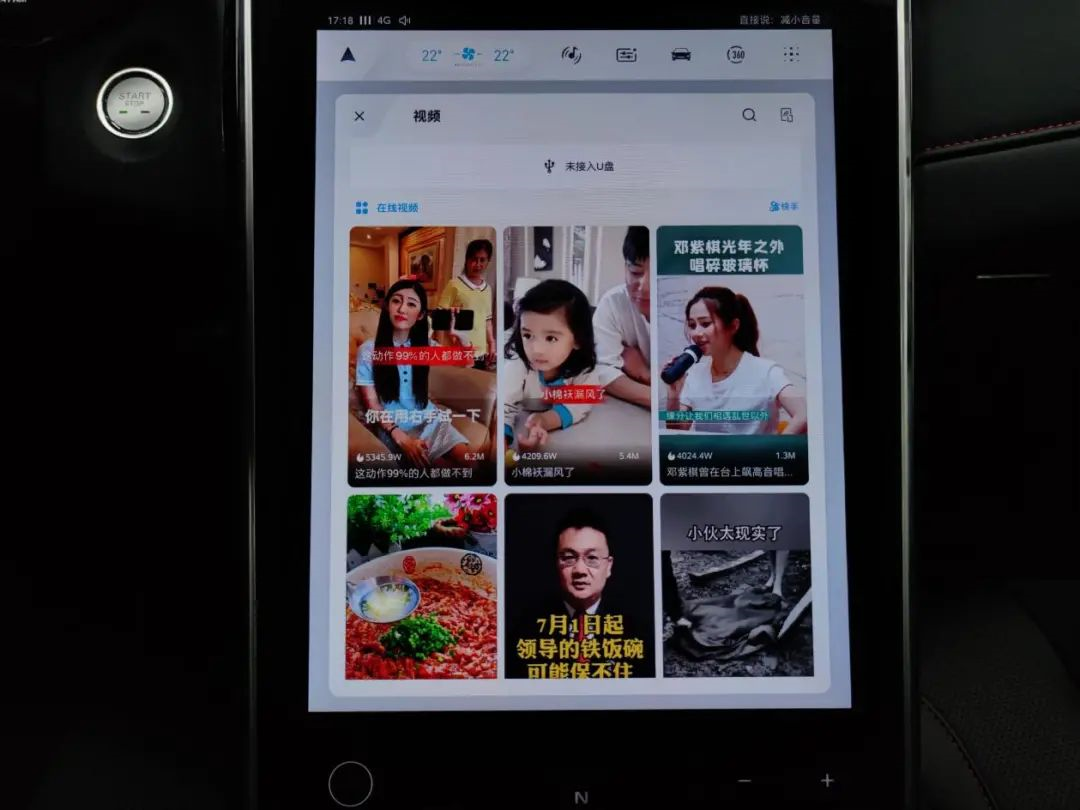

For video, Kuaishou is available, but it falls somewhat short in content updates and in how well it is adapted to the car screen.

For example, when switching videos in the car, swiping up and down does not work; you have to tap the screen instead, and there is no way to search for content.

This area may need further optimization.

In addition to these applications, the Marvel R offers nearly 30 mini-programs covering travel, weather, video (such as Mango TV), and more.

Although this greatly enriches the ecosystem, these mini-programs are actually used quite rarely and mainly serve long-tail scenarios.

This raises an issue, however: the system becomes quite heavy and demands significant computing power. If this is not handled well, it can cause lag and slow responses, which hurt the user experience.

Since we did not have suitable smart-home hardware, we will not cover car-home connectivity for now.

Summary

Overall, the Marvel R’s system is built on two core ideas: first, the map is the desktop; second, a move away from app-centric interfaces.

In terms of user experience, there is still considerable room for improvement, for example in the smoothness of the system, the limits of voice control, and the polish of small details.

Still, the shift from passive, voice-triggered services to proactive, camera-based services shows that the Marvel R is exploring new approaches to the intelligent cockpit to improve the in-car experience.

As R Automotive’s user base keeps growing and more data is fed back at scale, we believe the Marvel R’s system will keep improving. Of course, this will also require many partners to work together, continually pushing boundaries and improving the system’s capabilities.

In a few months, we may see an even better Marvel R system.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.