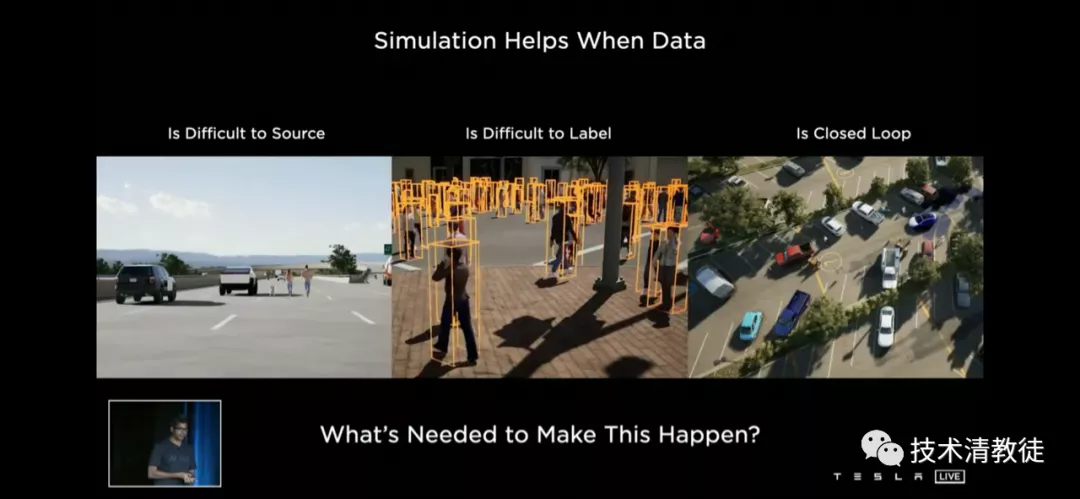

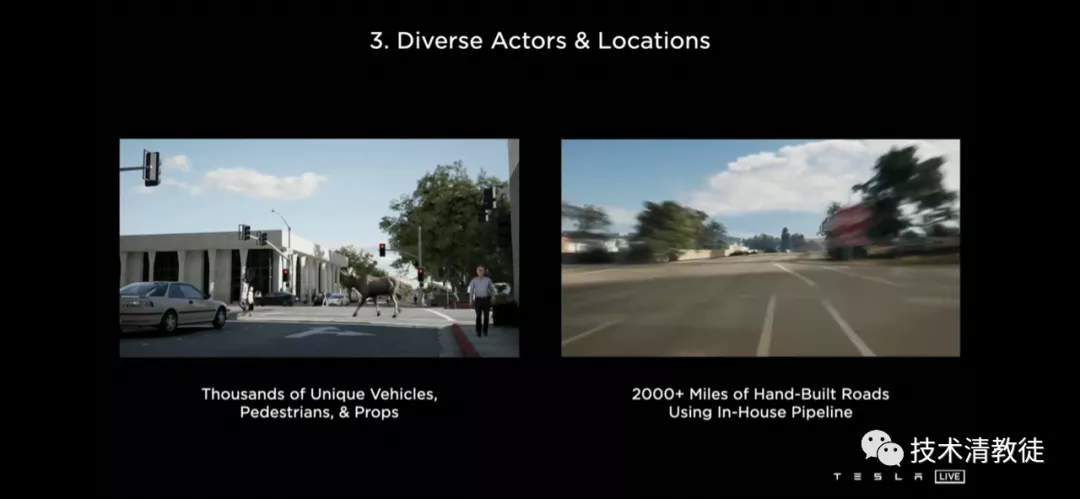

To test rare scenarios, Tesla built a simulated 3D world in which vehicles drive around much as they would in a video game. Tesla showed several examples: a family with a dog running down the middle of a highway, a street packed with pedestrians, and a new kind of truck (the Cybertruck) that has never been seen on the road.

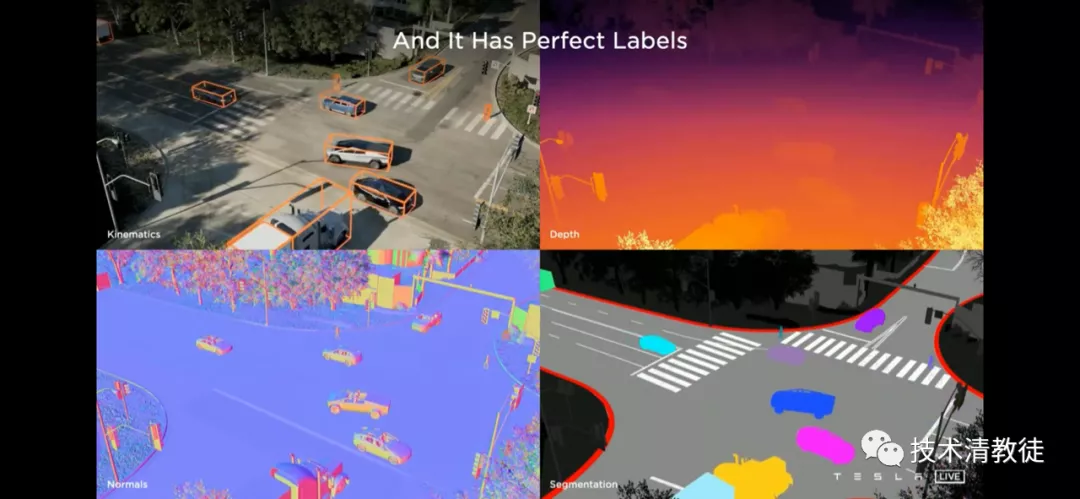

Even with an automated annotation system, labeling a street crowded with pedestrians is difficult. In a simulated world, the system already knows the exact position and speed of every pedestrian, so accurate labels are available directly, which makes training the neural network easier.
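As a rough illustration of why simulation makes labeling easy, here is a minimal Python sketch of a toy crowd simulator. It is not Tesla's system: the `Pedestrian` class, the function names, and the numeric ranges are all hypothetical. The point is only that the simulator owns the state of every pedestrian, so a complete label for each frame can be written out without any annotation step.

```python
import random
from dataclasses import dataclass


@dataclass
class Pedestrian:
    # Hypothetical state for one simulated pedestrian.
    ped_id: int
    x: float   # position in metres
    y: float
    vx: float  # velocity in metres per second
    vy: float


def spawn_crowd(n, seed=0):
    """Create n pedestrians with random positions and walking velocities."""
    rng = random.Random(seed)
    return [
        Pedestrian(i, rng.uniform(0, 50), rng.uniform(0, 10),
                   rng.uniform(-1.5, 1.5), rng.uniform(-1.5, 1.5))
        for i in range(n)
    ]


def step(crowd, dt):
    """Advance every pedestrian by dt seconds at constant velocity."""
    for p in crowd:
        p.x += p.vx * dt
        p.y += p.vy * dt


def frame_labels(crowd, frame_index):
    """Ground-truth labels are read straight from the simulator state."""
    return [
        {"frame": frame_index, "id": p.ped_id,
         "position": (p.x, p.y), "velocity": (p.vx, p.vy)}
        for p in crowd
    ]


def simulate(n_pedestrians, n_frames, dt=0.1, seed=0):
    """Run the toy simulation and collect one label per pedestrian per frame."""
    crowd = spawn_crowd(n_pedestrians, seed)
    labels = []
    for frame_index in range(n_frames):
        labels.extend(frame_labels(crowd, frame_index))
        step(crowd, dt)
    return labels
```

Calling `simulate(100, 5)` yields 500 labels, one for every pedestrian in every frame, however crowded the scene is.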

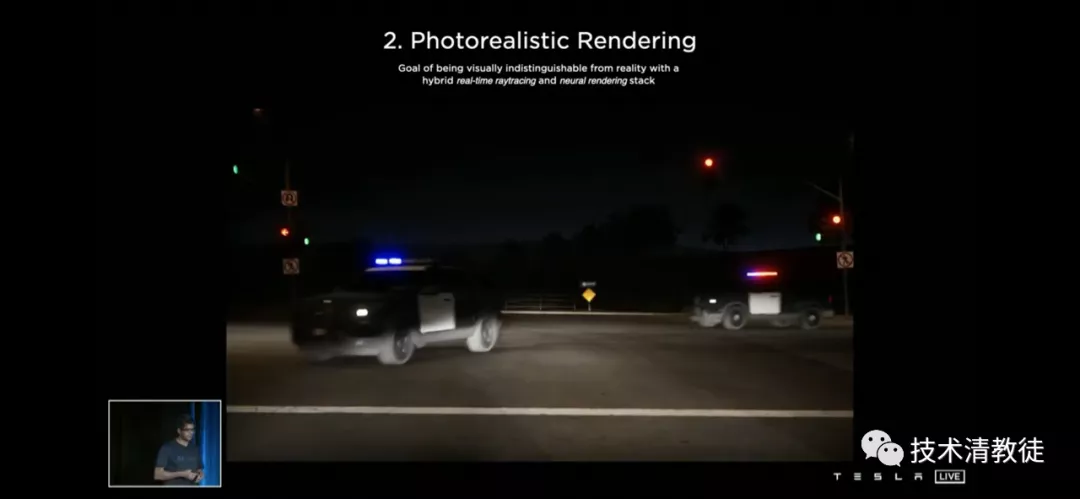

To make the simulation accurate, Tesla has put a lot of work into reproducing what real-world cameras see as closely as possible. For example, lighting conditions and changes in light must be reproduced faithfully, and road surface noise has to be added as well. Tesla even has a neural network dedicated to adding noise and texture to images.
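To give a feel for what "adding noise" to a rendered image means, the sketch below applies simple Gaussian noise to a grayscale image stored as a list of rows. This is a deliberately simplified stand-in: Tesla is described as using a neural network for this step, and nothing here reflects how that network works. The function name and the `sigma` parameter are assumptions made for illustration.

```python
import random


def add_sensor_noise(image, sigma=5.0, seed=0):
    """Return a copy of a grayscale image with Gaussian noise added.

    image: list of rows, each a list of pixel values in 0..255.
    sigma: standard deviation of the noise (hypothetical default).
    """
    rng = random.Random(seed)
    noisy = []
    for row in image:
        noisy.append([
            # Clamp to the valid pixel range after adding noise.
            min(255, max(0, round(px + rng.gauss(0.0, sigma))))
            for px in row
        ])
    return noisy


# A perfectly flat synthetic image, as a renderer might produce.
clean = [[128] * 4 for _ in range(3)]
noisy = add_sensor_noise(clean, sigma=5.0)
```

A clean render is unrealistically uniform; perturbing it pushes the synthetic image a little closer to what a real camera sensor would record.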

Tesla now has thousands of different vehicles, pedestrians, animals, and props in the simulation engine. In this virtual world, there are also over 2,000 miles of various roads and streets.

Whenever Tesla needs a scene, the simulation engine can create it, much as an artist would. Tesla can also change the scene conditions at any time: day or night, rain or snow, and so on.
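One way to picture adjustable scene conditions is as a small configuration object whose fields can be varied independently. The sketch below is hypothetical: `SceneConfig`, the condition lists, and the road identifier are invented for illustration and do not describe Tesla's simulation engine.

```python
from dataclasses import dataclass
from itertools import product

# Hypothetical condition values, taken from the examples in the text.
TIMES_OF_DAY = ("day", "night")
WEATHER = ("clear", "rainy", "snowy")


@dataclass(frozen=True)
class SceneConfig:
    road_id: str
    time_of_day: str
    weather: str


def scene_variants(road_id):
    """Enumerate every combination of conditions for one stretch of road."""
    return [
        SceneConfig(road_id, time_of_day, weather)
        for time_of_day, weather in product(TIMES_OF_DAY, WEATHER)
    ]


variants = scene_variants("highway-exit-12")
```

Here a single road yields six variants (two times of day, three weather conditions), which is how one authored scene can be multiplied into many training examples.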

Because Tesla can automatically generate any scene it wants, it can use adversarial machine learning techniques to create virtual scenes that fool the car’s vision recognition system. Those scenes can then be fed back into training, and the cycle repeats, steadily improving the vision recognition system.
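The generate, fail, retrain cycle can be sketched with a toy model. Everything below is an assumption made for illustration: a scene is reduced to a single brightness value, the "detector" is a bare threshold, and failures are found by random search rather than by any real adversarial method. It is a caricature of the loop, not Tesla's technique.

```python
import random


def train(training_scenes):
    """Toy training: the detector copes with any scene at least as bright
    as the darkest scene it has been trained on."""
    return min(training_scenes)


def detects(threshold, brightness):
    """The toy detector succeeds only on sufficiently bright scenes."""
    return brightness >= threshold


def find_failures(threshold, rng, n_candidates=200):
    """Generate random scenes and keep the ones that fool the detector."""
    candidates = [rng.random() for _ in range(n_candidates)]
    return [b for b in candidates if not detects(threshold, b)]


def adversarial_loop(rounds=5, seed=0):
    """Alternate between hunting for failure scenes and retraining on them.

    Returns a list of (threshold, number_of_failures) pairs, one per round.
    """
    rng = random.Random(seed)
    training_scenes = [0.9]  # start with bright scenes only
    history = []
    for _ in range(rounds):
        threshold = train(training_scenes)
        failures = find_failures(threshold, rng)
        history.append((threshold, len(failures)))
        if not failures:
            break
        # Feed the failure cases back into the training set.
        training_scenes.extend(failures)
    return history


history = adversarial_loop()
```

In each round the detector is retrained on the scenes that defeated it, so the threshold in `history` never rises and the generator finds fewer failures in later rounds.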

Seen this way, it is too conservative to say that Tesla’s toolchain for iterating on autonomous driving is only one or two steps ahead of other companies. The toolchain is extremely sophisticated and may well be the most powerful in the world.

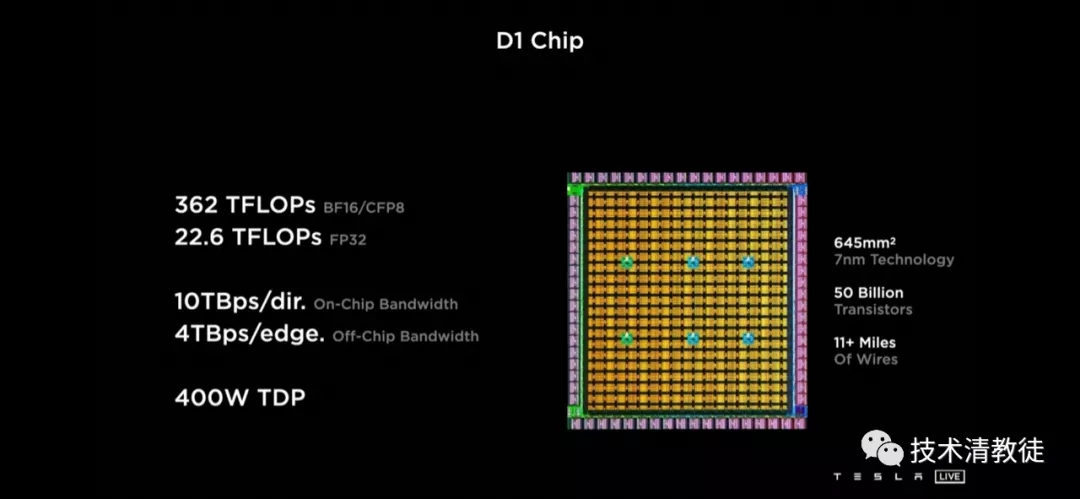

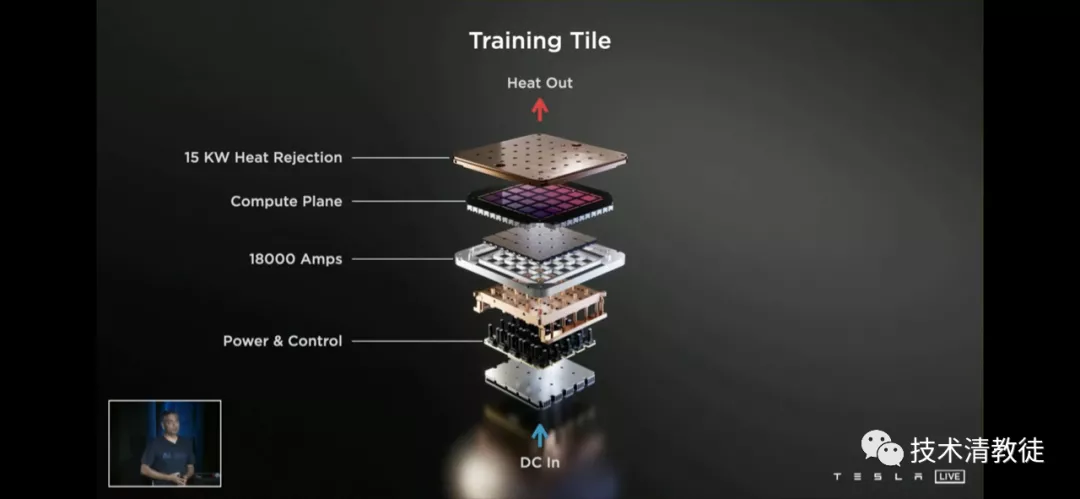

Dojo is Tesla’s next-generation training supercomputer. Tesla currently trains on a cluster of thousands of NVIDIA A100 GPUs, but it believes that a dedicated AI training chip tailored to its own needs can improve training efficiency further. This dedicated training chip is much more powerful than current GPUs and is designed specifically for building supercomputing clusters.

It is no exaggeration to say that this dedicated training chip, called Dojo, is world-class in packaging, cooling design, power delivery, integration, and bandwidth, easily outperforming existing products by a factor of 10.

Dojo is currently being manufactured and is expected to start running in 4-6 months. Once it is officially in operation, Tesla’s training iteration speed will take another step forward.

(The end of the article)

The original author of this article, @cosmacelf, is a technical writer on Reddit. The original title and link are:

“Layman’s Explanation of Tesla AI Day”

https://www.reddit.com/r/teslamotors/comments/pcgz6d/laymansexplanationofteslaai_day

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.