Author: Winslow

Following the previous article:

The Tesla AI Day for Non-experts (Part III): Planning and Control


In the previous chapters, we talked about how Tesla’s neural networks perceive the world and plan decisions to achieve autonomous driving. Now, let’s talk about how Tesla trained this set of neural networks.

As mentioned in the first chapter (The Tesla AI Day for Non-experts (Part I): Neural Networks), Tesla, like all companies in the industry, needs to “feed” the neural networks with millions of annotated images to teach them to recognize objects.

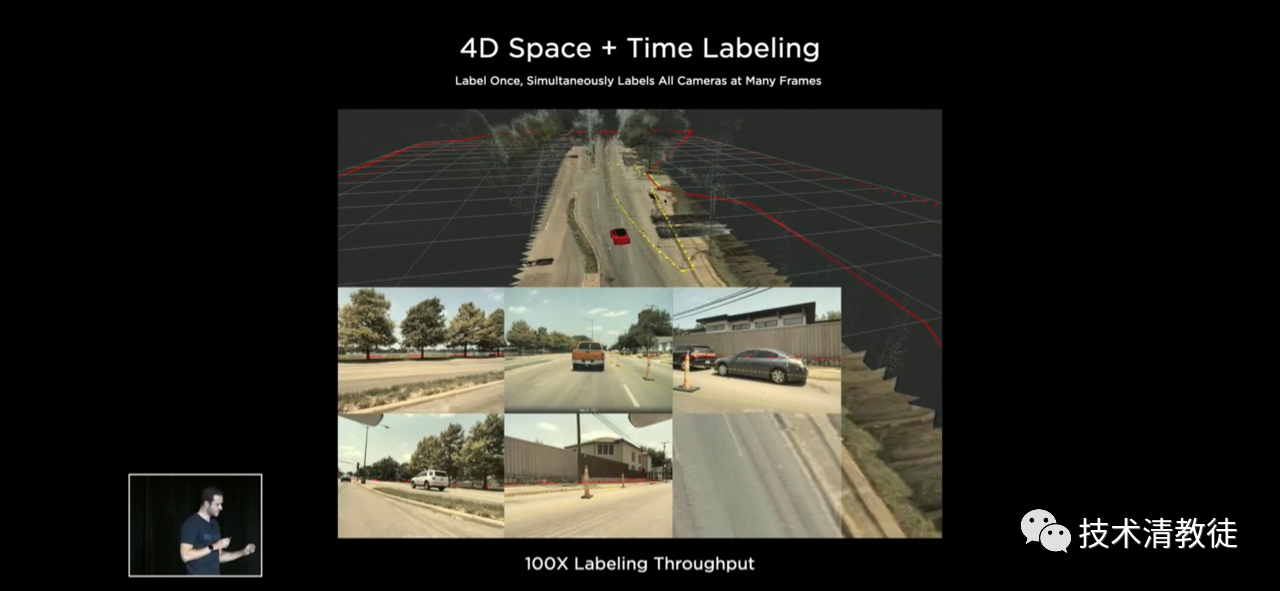

Initially, Tesla, like other companies, labeled the objects in each image by hand (which is why some in the industry joke that the “artificial” in artificial intelligence really means manual labor). Tesla soon realized that this process did not scale, so it built a tool for semi-automatic annotation directly in the 3D vector space fused from the car’s eight cameras. For example, when a human labels the edge of a road in this tool, the tool automatically propagates that annotation into the images captured by each camera on the car.

Because annotating in this visual 3D view matches how humans understand space, the tool greatly sped up manual annotation.
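For readers who like to see the idea in code, here is a minimal sketch of how one 3D annotation could be copied into several camera images. It assumes a simple pinhole camera model and a shared 3D frame with camera-style axes (x right, y down, z forward); the `Camera` class, the function names, and every number are hypothetical illustrations, not Tesla's actual tool.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Sequence, Tuple

Point3D = Tuple[float, float, float]
Pixel = Tuple[float, float]
Matrix3 = Tuple[Point3D, Point3D, Point3D]


@dataclass(frozen=True)
class Camera:
    """Hypothetical pinhole camera mounted on the car."""
    name: str
    rotation: Matrix3      # rotates shared-frame axes into camera-frame axes
    translation: Point3D   # shared-frame origin expressed in the camera frame
    focal_px: float        # focal length in pixels
    cx: float              # principal point (image centre), x
    cy: float              # principal point (image centre), y
    width: int
    height: int


def project(cam: Camera, point: Point3D) -> Optional[Pixel]:
    """Project one 3D point into the camera image, or return None if unseen."""
    # Shared frame -> camera frame: p_cam = R * p + t
    xc, yc, zc = (
        sum(r * v for r, v in zip(row, point)) + t
        for row, t in zip(cam.rotation, cam.translation)
    )
    if zc <= 0:
        return None  # the point is behind the camera
    u = cam.focal_px * xc / zc + cam.cx
    v = cam.focal_px * yc / zc + cam.cy
    if 0 <= u < cam.width and 0 <= v < cam.height:
        return (u, v)
    return None  # the point falls outside the image


def propagate_label(
    cameras: Sequence[Camera], polyline: Sequence[Point3D]
) -> Dict[str, List[Pixel]]:
    """Copy one 3D annotation into every camera that can see part of it."""
    result: Dict[str, List[Pixel]] = {}
    for cam in cameras:
        pixels = [px for px in (project(cam, p) for p in polyline) if px is not None]
        if pixels:
            result[cam.name] = pixels
    return result


# Made-up example: one forward camera and one rear camera at the same spot.
IDENTITY = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
FLIPPED = ((-1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, -1.0))  # 180 degrees about y
front = Camera("front", IDENTITY, (0.0, 0.0, 0.0), 1000.0, 640.0, 480.0, 1280, 960)
rear = Camera("rear", FLIPPED, (0.0, 0.0, 0.0), 1000.0, 640.0, 480.0, 1280, 960)

road_edge = [(1.0, 0.5, 10.0), (1.0, 0.5, 20.0)]  # labeled once, in 3D
labels = propagate_label([front, rear], road_edge)
```

In this toy setup the road edge lies ahead of the car, so only the front camera receives pixel labels; the rear camera sees nothing and is left out. The human labels once in 3D, and each camera's 2D labels fall out of geometry.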

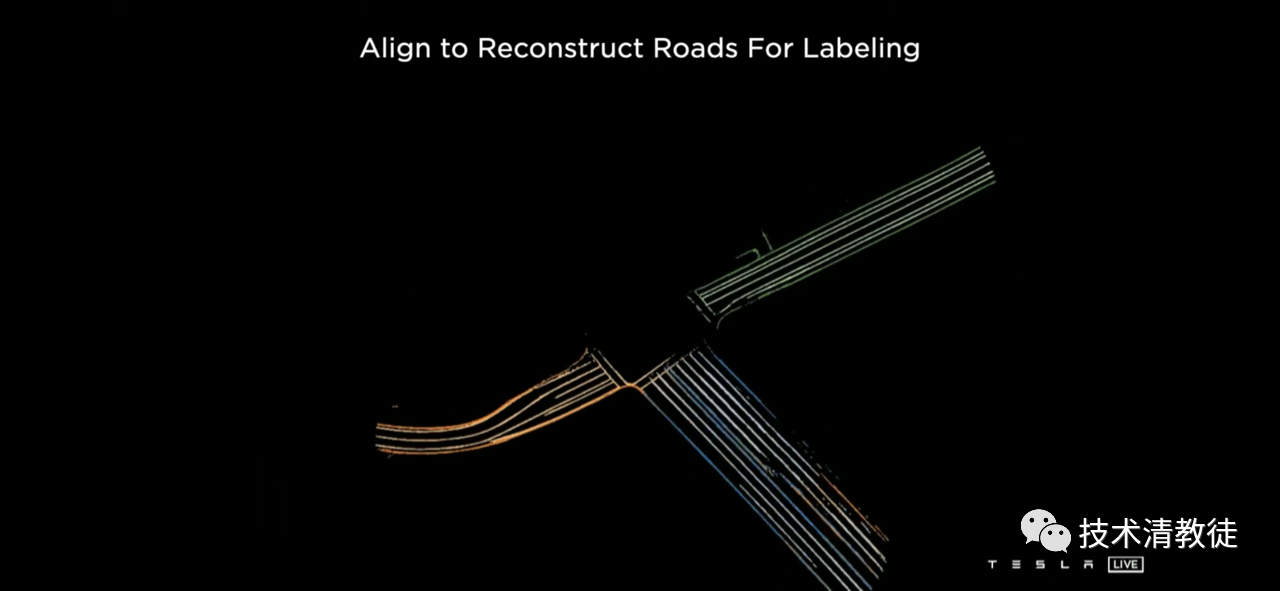

Tesla also realized that as its fleet grew, its cars would pass through the same intersection many times, at different times of day and under different weather and lighting conditions. Tesla therefore used GPS to associate these images with one another, so that a single manual annotation in the 3D vector space covers all of the thousands of associated images of the same intersection.

For example, when a Tesla passes through an intersection, a human uses this system to mark a traffic light as an object in that specific 3D vector space. From then on, the images from any other Tesla driving through the same intersection can be annotated with the traffic light automatically, regardless of the car’s direction of travel, the weather, or the lighting.
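The GPS association described above can be pictured with a small sketch. It assumes the crudest possible matching rule, snapping each clip's GPS fix to a grid cell and sharing labels within a cell; the `Clip` class, the cell size, and the coordinates are all hypothetical, and a real system would align clips far more precisely than this.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple

Cell = Tuple[int, int]


@dataclass(frozen=True)
class Clip:
    """Hypothetical record for one video clip uploaded by a car."""
    clip_id: str
    lat: float
    lon: float
    conditions: str


def cell_key(lat: float, lon: float, cell_deg: float = 0.0005) -> Cell:
    """Snap a GPS fix to a grid cell (0.0005 degrees of latitude is roughly 55 m)."""
    return (round(lat / cell_deg), round(lon / cell_deg))


def group_by_location(clips: Iterable[Clip]) -> Dict[Cell, List[Clip]]:
    """Collect clips that were recorded in the same grid cell."""
    groups: Dict[Cell, List[Clip]] = defaultdict(list)
    for clip in clips:
        groups[cell_key(clip.lat, clip.lon)].append(clip)
    return dict(groups)


def share_labels(
    clips: Iterable[Clip], labels_by_cell: Dict[Cell, List[str]]
) -> Dict[str, List[str]]:
    """Give every clip the labels that were recorded once for its grid cell."""
    return {
        c.clip_id: labels_by_cell.get(cell_key(c.lat, c.lon), [])
        for c in clips
    }


# Made-up clips: two passes through one intersection, one clip elsewhere.
clips = [
    Clip("a", 37.39460, -122.15000, "sunny noon"),
    Clip("b", 37.39462, -122.15001, "rainy night"),
    Clip("c", 37.40000, -122.10000, "fog"),
]
# A human labels the traffic light once, for the intersection's cell.
labels_by_cell = {cell_key(37.39460, -122.15000): ["traffic_light"]}

groups = group_by_location(clips)
shared = share_labels(clips, labels_by_cell)
```

Clips "a" and "b" inherit the traffic light label even though they were recorded in different weather, while clip "c" gets nothing because nobody has labeled its location yet. A fixed grid is only an illustration: two clips on opposite sides of a cell border would be split apart, which is one reason a real system needs something smarter.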

Tesla has also developed a fully automated labeling system. The labeling speed of a single AI chip is limited by its computing power, but when Tesla combines 1,000 GPUs into a cluster, automatic labeling of new images becomes far faster.
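Why more chips help can be shown with a toy sketch. Each clip can be labeled independently of the others, so the work can simply be handed out to many workers at once. Here threads stand in for GPUs, `auto_label` is a hypothetical placeholder for the real labeling model, and the one-hour-per-clip figure is invented purely for the arithmetic.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def auto_label(clip_id: str) -> str:
    """Stand-in for an expensive labeling model run on one clip."""
    return f"{clip_id}:labeled"


def label_in_parallel(clip_ids: List[str], workers: int) -> List[str]:
    """Fan the clips out over `workers` workers; results keep input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(auto_label, clip_ids))


def ideal_hours(n_clips: int, hours_per_clip: float, workers: int) -> float:
    """Best-case wall-clock time if the work splits perfectly evenly."""
    return n_clips * hours_per_clip / workers


results = label_in_parallel(["clip1", "clip2", "clip3"], workers=2)

# Invented numbers: 10,000 clips at one hour each.
one_chip = ideal_hours(10_000, 1.0, workers=1)
cluster = ideal_hours(10_000, 1.0, workers=1000)
```

Under these invented numbers a single chip would need 10,000 hours, while 1,000 workers would need about 10 hours in the best case. Real clusters fall short of this ideal because of data transfer and scheduling overhead, but the basic reason a cluster is faster is the same.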

In the first half of 2021, Tesla spent only three months developing a vision-only version of its driver-assistance system, without millimeter-wave radar, for the United States. This fully automated labeling system is the reason the vision-only version could match the vision-plus-radar version in such a short time.

While running the vision-only version, Tesla ran into some technical issues. Here is an example that is uncommon but does happen: the car is following a snowplow, and a pile of snow falls from the snowplow ahead and covers the car’s cameras, leaving them with very poor visibility.

Tesla realized that it needed to improve the car’s performance in low-visibility situations, which meant the system had to automatically label footage captured in those situations. Tesla therefore asked its global fleet to send back videos of similar low-visibility scenarios. In less than a week, the system had automatically labeled nearly 10,000 low-visibility videos.

Tesla engineers said that the same work would have taken several months if done manually.

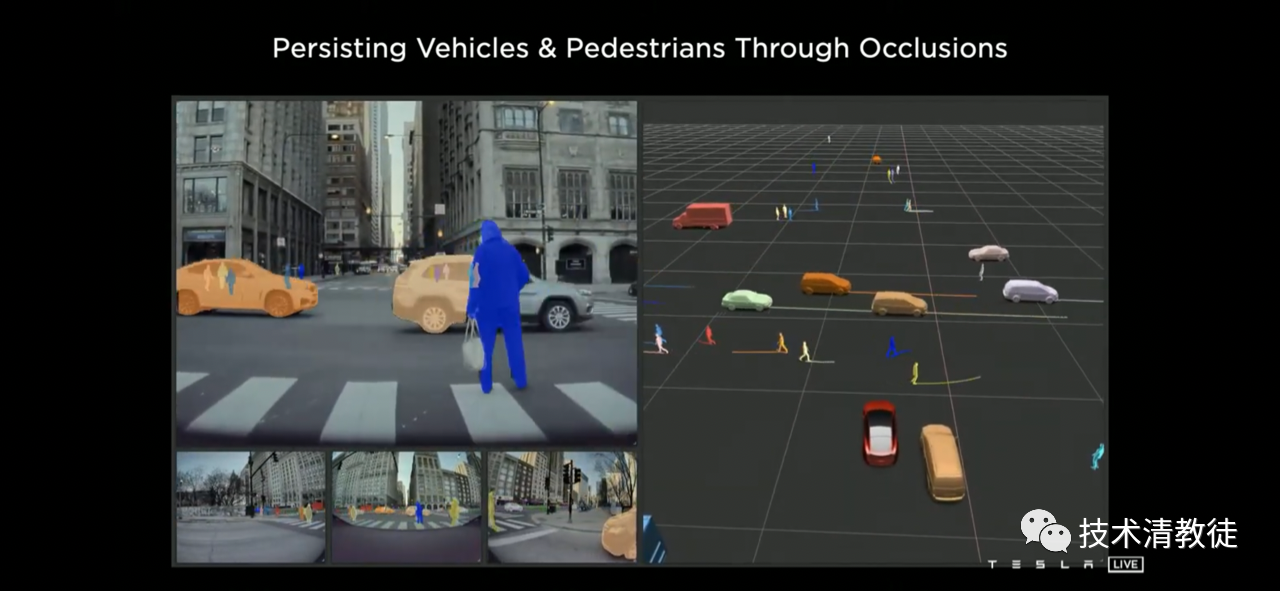

After large-scale labeling and neural network training on low-visibility scenarios, the system can still track and predict the positions and trajectories of surrounding objects when it encounters such situations, much as a human driver would.

In the following sections, we will discuss Tesla’s simulation in detail.

(to be continued)

The original author of this article, @cosmacelf, is a technology writer on Reddit. The original title and link of the article are:

“Layman’s Explanation of Tesla AI Day”


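On August 20, 2021, Tesla held an event called “AI Day” to show the public its latest progress and future plans in artificial intelligence. Below are some highlights of the event, with explanations that a layperson can follow:

The Dojo supercomputer

Dojo is a supercomputer that Tesla is building to process the large volume of data it collects, such as images, video, and sensor data from vehicles on the road. Dojo can deliver 1 EFLOPS (that is, 10^18 floating-point operations per second), which is about 10 times faster than the fastest supercomputer today!

The AI chip

Tesla showed its latest AI chip, called D1. The chip was designed and manufactured by Tesla itself and is paired with the Dojo supercomputer to handle machine learning workloads efficiently. D1 can handle 9 petaOPS of computation and is faster and more compact than any other chip available today.

Autonomous driving

Tesla showed its latest autonomous driving technology, including DNN improvements, lane line detection, path planning, and traffic light recognition. These technologies are expected to further improve Tesla’s autonomous driving features and bring them closer to full self-driving.

The humanoid robot

Finally, Tesla announced that it is developing a humanoid robot called Tesla Bot. The robot can follow human voice and gesture commands and perform simple tasks such as picking up objects and cleaning. Tesla said the robot is designed to take over strenuous work from humans, leaving people more time for creative and interesting things.

You are welcome to share your thoughts and opinions on these technologies in the comments!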

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.