Tesla: What Kind of Company Is It?

- In 2003, Tesla appeared as a new car company.

- In 2016, Tesla acquired SolarCity and became an energy company.

- In 2019, Tesla introduced its self-developed FSD chip and became a self-driving car company.

- In 2020, Tesla moved into battery design and development, becoming a battery company.

- Elon Musk, however, is already thinking on a fifth level. Today, Tesla’s self-developed large-scale deep neural network training cluster, Dojo, and its AI robot, Tesla Bot, bear out Elon’s words: “In the long run, people will see Tesla as an AI robotics company, just like it is viewed today as an automotive or energy company.”

- At 5pm on August 19th, U.S. Eastern Time, Tesla held AI Day, which was divided into three sessions:

- Andrej Karpathy, Tesla’s Director of AI and Vision, Ashok Elluswamy, Tesla’s Autopilot Full-Stack Algorithm Director, and Milan Kovac, Tesla’s Autopilot Engineering Director, introduced the latest developments in FSD (Full Self-Driving).

- Ganesh Venkataramanan, Senior Director of Tesla Project Dojo, presented Tesla’s large-scale deep neural network training cluster.

- Elon Musk unveiled Tesla’s robot, Tesla Bot.

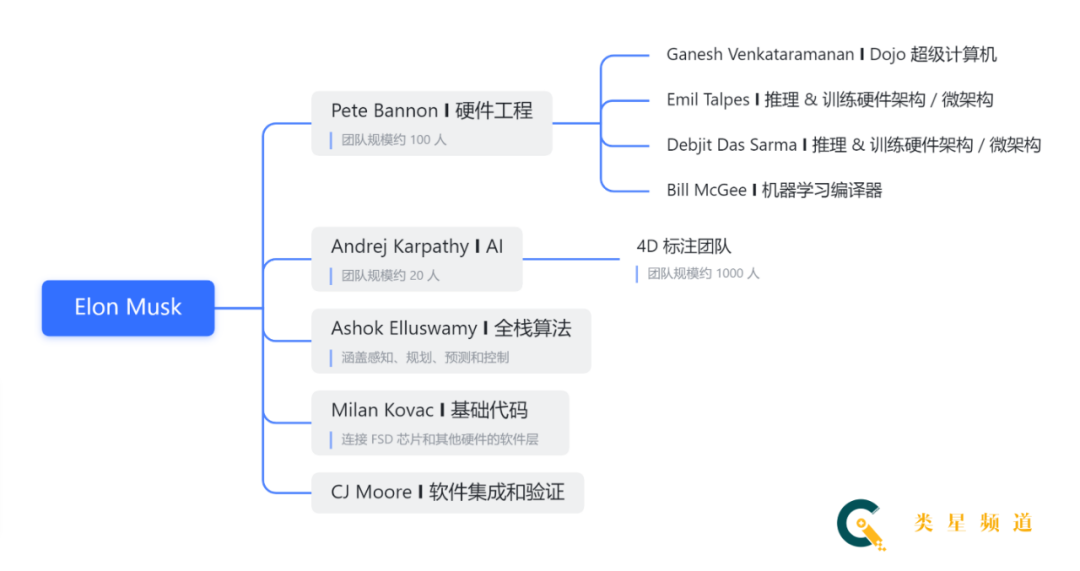

For the Tesla executives other than Elon, this organizational chart can serve as a reference:

The Latest Developments in FSD

Compared with the brand-new Dojo D1 chip and the Tesla Bot, the FSD segment was more of an incremental update, since parts of it had already been covered at Tesla’s Autonomy Day in 2019. A few parts are worth noting.

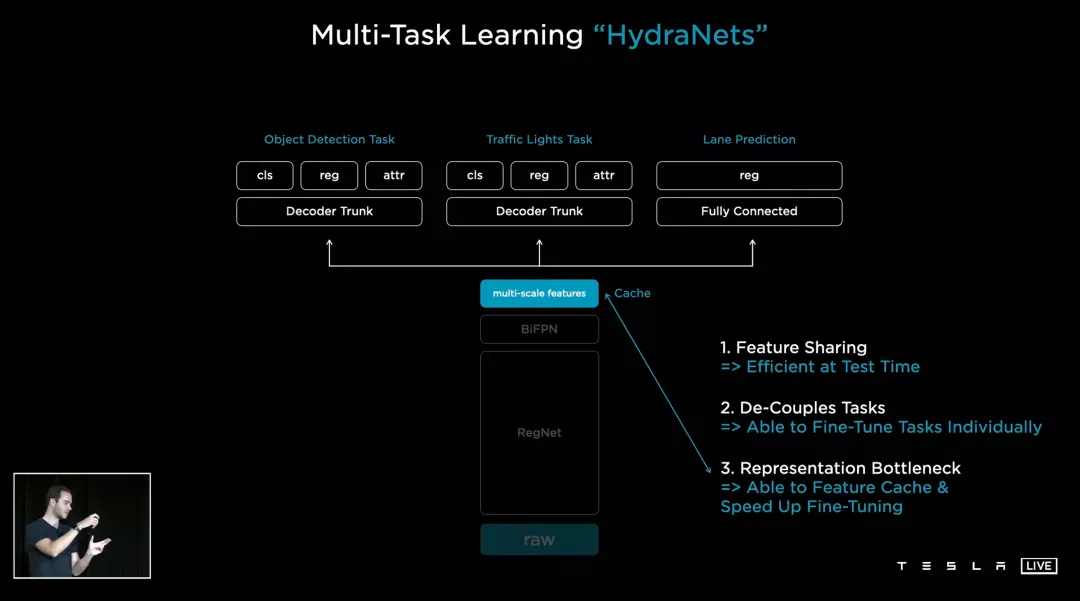

First, HydraNets: the multi-task learning network, named after the many-headed Hydra, has been simplified. When Tesla’s FSD development reached the “Smart Summon” stage, the image-level architecture proved ineffective for predictions in vector space. Tesla therefore rewrote the entire deep neural network stack from scratch.

Here Andrej described the core idea behind starting over with FSD: extreme simplification. This means streamlining every task, from camera calibration and caching to fleet management, and optimizing and simplifying the entire architectural design.

This “extreme simplification” is one of Elon’s most important engineering design concepts. He has repeatedly stressed the need to question the validity of a problem before attempting to solve it. The biggest pitfall for genius engineers is attempting to solve a problem that does not exist, while rarely questioning the problem itself.

Undoubtedly, “extreme simplification” will have a wide impact on the development of FSD. To close, Andrej showed FSD’s latest architecture, which is more integrated than ever before.
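As a rough illustration of the multi-task idea behind HydraNets, the sketch below runs one shared backbone once and feeds its features to several task heads. It is a toy in plain Python under stated assumptions, not Tesla’s implementation: the class name `MultiHeadNet`, the head names (`objects`, `lanes`, `traffic_lights`), the layer sizes, and the random weights are all hypothetical.

```python
import random


def linear(weights, x):
    """Multiply a weight matrix (a list of rows) by an input vector."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def relu(x):
    """Element-wise rectified linear unit."""
    return [max(0.0, v) for v in x]


def random_matrix(rows, cols, rng):
    """Build a rows x cols matrix of small random weights."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


class MultiHeadNet:
    """Toy multi-task network: one shared backbone, one small head per task."""

    def __init__(self, in_dim, feat_dim, head_dims, seed=0):
        rng = random.Random(seed)
        self.backbone = random_matrix(feat_dim, in_dim, rng)
        # Hypothetical task heads; each maps shared features to its own output.
        self.heads = {
            name: random_matrix(out_dim, feat_dim, rng)
            for name, out_dim in head_dims.items()
        }
        self.backbone_calls = 0

    def features(self, x):
        """Compute the shared features (the expensive part in a real network)."""
        self.backbone_calls += 1
        return relu(linear(self.backbone, x))

    def forward(self, x):
        """Run the backbone once, then every head on the same features."""
        shared = self.features(x)
        return {name: linear(w, shared) for name, w in self.heads.items()}


net = MultiHeadNet(
    in_dim=8,
    feat_dim=4,
    head_dims={"objects": 3, "lanes": 2, "traffic_lights": 1},
)
outputs = net.forward([0.5] * 8)
for task, values in outputs.items():
    print(task, len(values))
print("backbone evaluations:", net.backbone_calls)
```

The point of the layout is that adding another task only adds another head; the shared features are computed once per input regardless of how many heads consume them.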

So far, the time-series, multi-camera video architecture has clearly paid off. Besides the significant performance improvement from Beta 8 to Beta 9, Tesla has dropped forward radar entirely over the past three months and now runs Autopilot and FSD on pure vision, with the new architecture measuring depth and speed more accurately.

Under the new architecture, when a vehicle passes through a complex intersection, FSD will use a special recurrent neural network to make predictions. If multiple Teslas pass through the same intersection, or if one car passes through multiple times, the deep neural network’s predictions for that intersection will become “smarter.” Eventually, FSD will learn to drive through similar intersections that it has never visited.

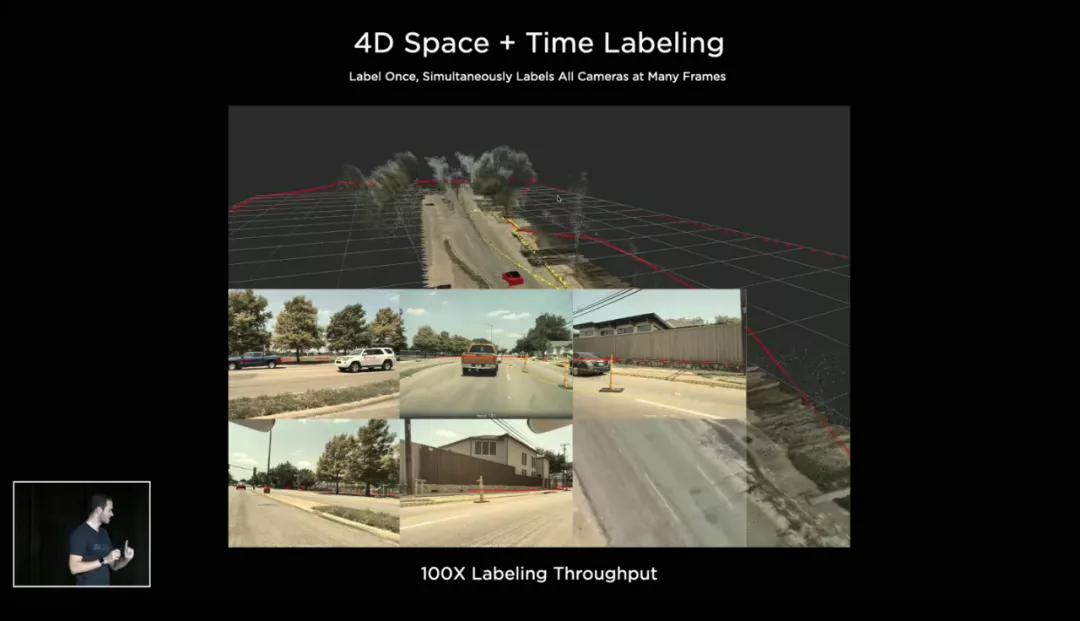

Second, data annotation. Previously, Tesla worked with third-party annotation companies to obtain labeled datasets for deep neural network training, which is standard industry practice. However, following its principle of vertical integration, Tesla has moved data annotation fully in-house and now has a data annotation team of 1,000 people.

Initially, Tesla’s datasets were based on 2D annotations of images, but once the entire perception architecture moved to 4D, 2D annotations were no longer efficient in vector space, and annotation moved to 4D as well. Ashok pointed out that there are too many annotations to make and that manual annotation is difficult to sustain. Tesla has therefore developed an automatic annotation tool to help with this process.

According to Ashok, Tesla’s fleet can collect and automatically label 10,000 videos in a week. Tesla has completed over 10 billion annotations.

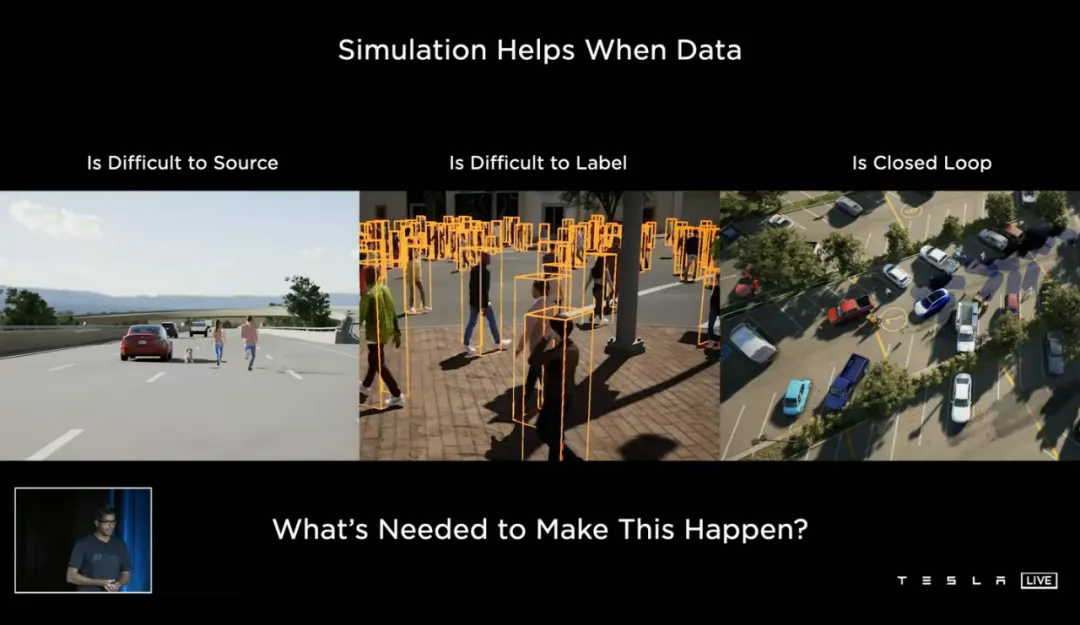

Finally, there is simulation. At Autonomy Day in 2019, Elon was not very enthusiastic about developing autonomous driving algorithms based on simulation. However, today’s simulation segment stressed its tremendous value. Put simply, simulation helps in three situations:

- Rare situations, such as a person running on a highway, which even a global vehicle fleet seldom captures;

- Scenes that are difficult to annotate, such as videos with dozens of people;

- Closed-loop planning of vehicle behavior, where scenarios designed by humans can be tested more efficiently.

It is worth mentioning that Elon’s evaluation of simulation technology in 2019 was that no simulation technology can match the authenticity and complexity of real-world scenarios captured by cameras, unless a 1:1 real-world scenario simulator is specially designed. However, the cost of such an approach would be much higher than collecting data from actual scenes.

So, this is Autopilot’s simulator today.

To be honest, it is already hard for us to tell Tesla’s simulation from real scenes with the naked eye, except for the driver in the car. According to Ashok, the Autopilot simulator has 2,000 miles of built roads, 371 million simulated images, and 480 million cuboids.

Comparing Tesla’s attitudes towards simulation in 2019 and 2021, we can see that Tesla’s FSD development has entered deep, uncharted waters. By 2021, highly accurate simulation has become the most efficient strategy for edge cases that cameras rarely capture.

Of course, this is fundamentally different from the simulation-led strategy of Waymo (which has logged 20 million real-world miles and over 10 billion simulated miles).

Overall, even based on the current HW 3.0 hardware, the bottleneck in the evolution of Tesla’s FSD algorithm seems quite far away. Combined with Elon’s previous disclosure that Beta 10 will involve major changes in architecture, we have reason to believe that the potential of FSD is far more than what we see now.

Dojo Supercomputer

The motivation for the birth of Dojo is straightforward. Ganesh said that a few years ago, Elon wanted a super-fast training computer to train Autopilot. Now, Dojo is here.

What follows is Ganesh flexing some muscle. The goal of Dojo is to achieve the best AI training performance and to enable larger, more complex deep neural network models with high efficiency and low cost.

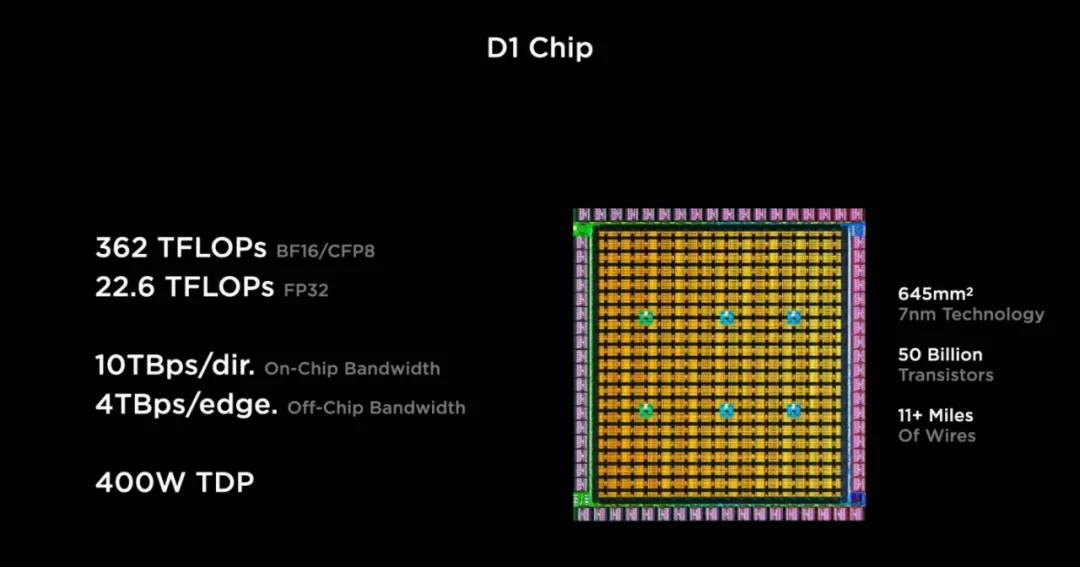

The Dojo D1 chip is built on a 7nm process, with the following core specifications:

- Single-chip FP32 computing power: 22.6 TFLOPs

- BF16 computing power: 362 TFLOPs

- On-chip bandwidth: 10 TB/second

- Off-chip bandwidth: 4 TB/second

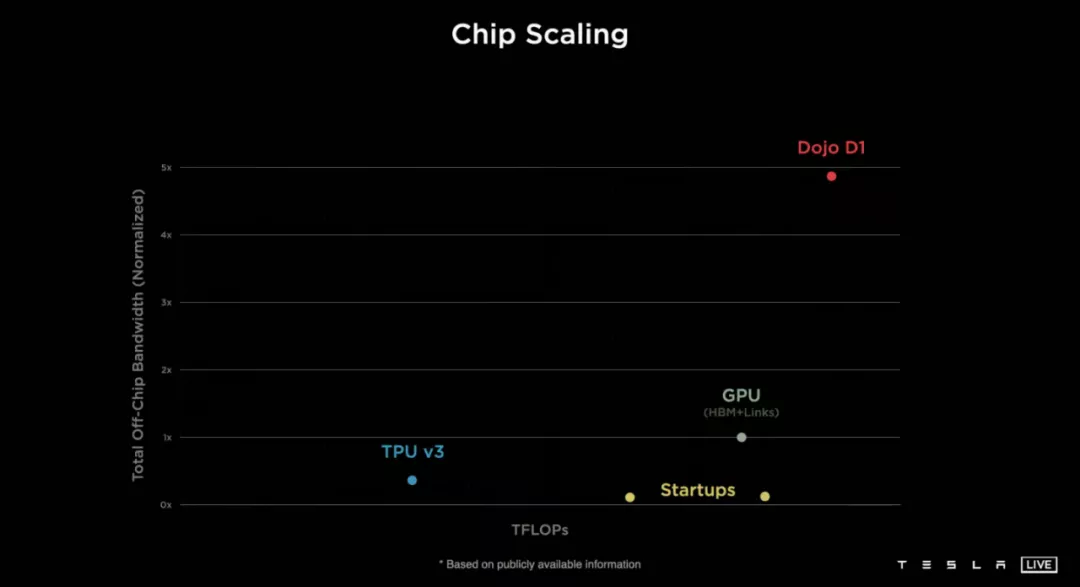

What level of bandwidth is this? It far exceeds Google’s TPUv3 and is far ahead of Nvidia’s latest GPU.

The invitation letter displayed before the press conference showed a training module composed of 25 D1 chips, with a single-module computing power of 9 PFLOPs and a bandwidth of 36 TB/second.

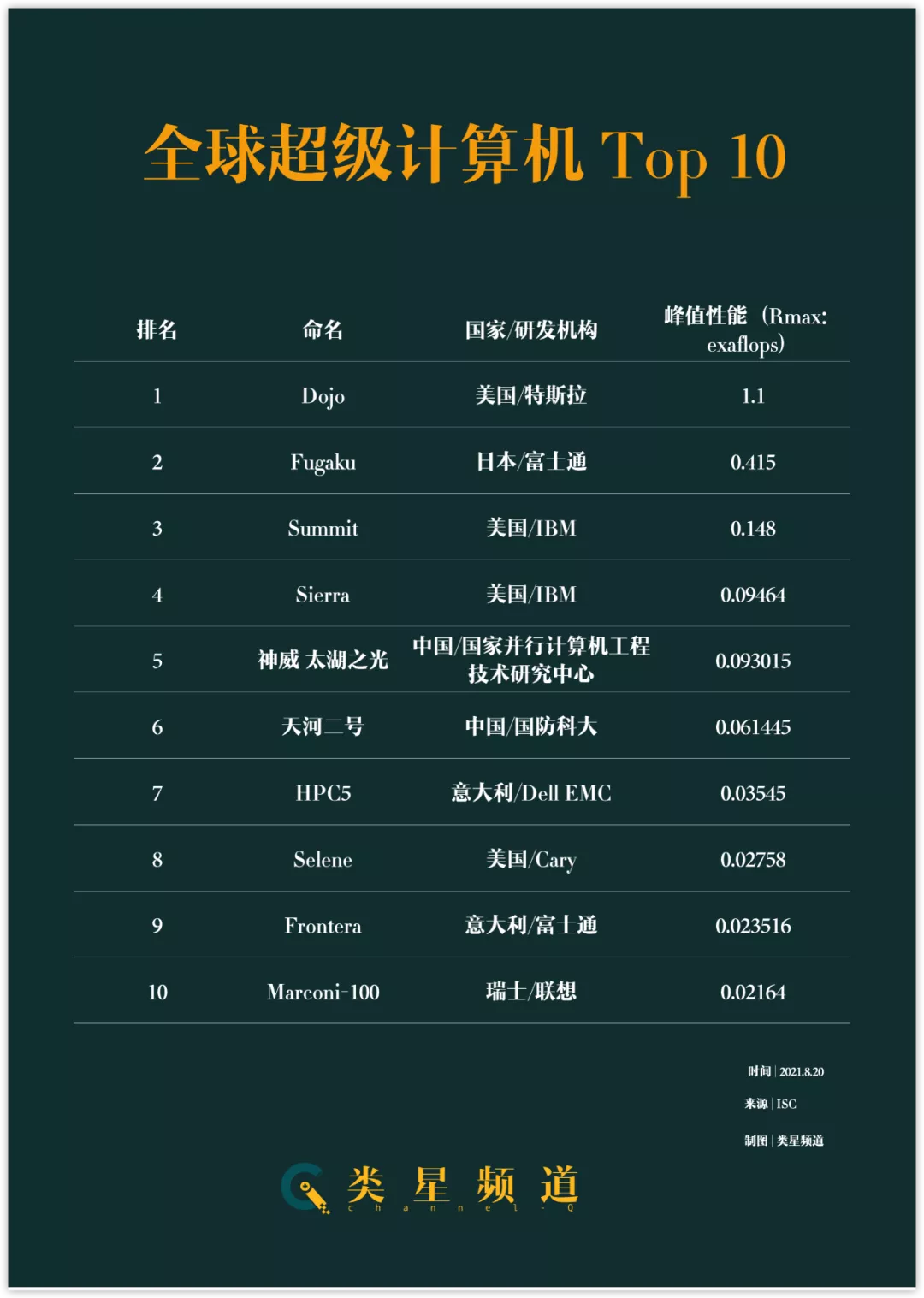

The Dojo ExaPOD consists of 120 training modules and 3,000 D1 chips, with a total computing power of about 1.1 EFLOPs, surpassing Fujitsu’s 0.415 EFLOPs and becoming the world’s number one.
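The module and ExaPOD figures follow from the per-chip numbers quoted above, and the short sketch below reproduces that arithmetic. The constants are taken from this article; the function and variable names are illustrative only. With 362 TFLOPs of BF16 per chip, 25 chips come to 9.05 PFLOPs per module, and 120 modules come to roughly 1.09 EFLOPs, which rounds to the quoted 1.1.

```python
# Figures quoted in the article; names are illustrative only.
D1_BF16_TFLOPS = 362        # BF16 compute per D1 chip
CHIPS_PER_MODULE = 25       # D1 chips per training module
MODULES_PER_EXAPOD = 120    # training modules per ExaPOD
FUJITSU_EFLOPS = 0.415      # comparison figure cited in the article


def module_pflops(chip_tflops=D1_BF16_TFLOPS, chips=CHIPS_PER_MODULE):
    """Compute per training module, in PFLOPs (1 PFLOP = 1,000 TFLOPs)."""
    return chip_tflops * chips / 1_000


def exapod_eflops(modules=MODULES_PER_EXAPOD):
    """Compute per ExaPOD, in EFLOPs (1 EFLOP = 1,000 PFLOPs)."""
    return module_pflops() * modules / 1_000


total_chips = CHIPS_PER_MODULE * MODULES_PER_EXAPOD
ratio = exapod_eflops() / FUJITSU_EFLOPS

print(f"chips per ExaPOD: {total_chips}")
print(f"module: {module_pflops():.2f} PFLOPs")
print(f"ExaPOD: {exapod_eflops():.3f} EFLOPs")
print(f"ratio to the cited Fujitsu figure: {ratio:.1f}x")
```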

Ultimately, Dojo will become the fastest AI training computer, with a performance increase of 4 times and an energy efficiency increase of 1.3 times compared to existing computers, while reducing floor space to one-fifth of its previous size.

So how did Tesla do it?

In Tesla’s official language, Dojo is a “Pure Learning Machine.” In a sense, Dojo was refined the same way the FSD chip was refined two years ago.

According to Tesla’s Vice President of Hardware Engineering, Pete Bannon, the biggest advantage of the FSD chip is that it has “only one customer, Tesla.” The same goes for Dojo. During today’s Q&A session, Elon stated that “Dojo is a computer specifically designed for training deep neural networks, CPU and GPU are not designed for training,” and “Let’s fully ASIC (Application Specific Integrated Circuit) everything.”

There is still much more to think about regarding Dojo.

So what have supercomputers been used for until now? Built by specialist computer manufacturers and operated by the national laboratories of major countries, they have mainly served large-scale computing workloads such as medium- to long-term weather forecasting, oil and gas exploration, physical simulations, quantum mechanics, and epidemic prevention and control research.

And Tesla? A small car manufacturer with annual sales of 500,000 vehicles (as of 2020) has now built the world’s most powerful supercomputer.

Overall, Tesla’s strategy for advancing autonomous driving research is consistent with OpenAI’s approach of driving AI algorithm iteration through a brute-force aesthetic. Although this strategy is controversial in the industry, Tesla is undoubtedly testing the potential of large-scale data through tangible actions.

This is fundamentally different from the research and development strategies of all other autonomous driving companies at present.

## Tesla Bot

The Tesla Bot segment was the only part of the event that Elon presented himself. The specifications are as follows:

- Height: 5 feet 8 inches, about 173 cm;

- Weight: 125 pounds, about 56.7 kg;

- Carrying capacity: 45 pounds, about 20 kg;

- Maximum walking speed: 5 miles/hour, about 8 km/h.
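The metric figures in the list above are rounded conversions of the imperial specifications. A minimal sketch of the arithmetic, using the standard conversion factors, is shown below; the helper names are illustrative only.

```python
# Exact definitions of the imperial units in metric terms.
CM_PER_INCH = 2.54
KG_PER_POUND = 0.45359237
KM_PER_MILE = 1.609344


def feet_inches_to_cm(feet, inches):
    """Convert a height given in feet and inches to centimeters."""
    return (feet * 12 + inches) * CM_PER_INCH


def pounds_to_kg(pounds):
    """Convert pounds to kilograms."""
    return pounds * KG_PER_POUND


def mph_to_kmh(mph):
    """Convert miles per hour to kilometers per hour."""
    return mph * KM_PER_MILE


height_cm = feet_inches_to_cm(5, 8)   # height
weight_kg = pounds_to_kg(125)         # weight
payload_kg = pounds_to_kg(45)         # carrying capacity
speed_kmh = mph_to_kmh(5)             # maximum walking speed

print(f"height: {height_cm:.1f} cm")
print(f"weight: {weight_kg:.1f} kg")
print(f"carrying capacity: {payload_kg:.1f} kg")
print(f"walking speed: {speed_kmh:.1f} km/h")
```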

Tesla Bot’s neck, arms, hands, and legs are equipped with a total of 40 electromechanical actuators.

The Autopilot cameras will serve as the Bot’s eyes, and the Tesla FSD chip will sit inside its chest. In addition, the technologies described earlier, including the multi-camera vision neural networks, planning, automatic labeling, simulation, and Dojo training, will also be used in the development of the Bot.

# Tesla Bot Sets a New Benchmark for Tesla’s Product Sales “Expectations”

The prototype for this new product will not be unveiled until 2022, but the Tesla Bot has already made history. The product launch featured a live performance of a real person dressed up as the bot.

Regarding the Tesla Bot, Elon explained after the launch event that “Tesla has almost all the components needed for humanoid robots, and we’ve already made robots with wheels.”

This alone is unlikely to justify a new product launch, as the cost (based on hardware estimates) is difficult to lower and the functional capacity of the Tesla Bot (20 kg weight capacity) is relatively weak. Today, the Tesla Bot cannot change the world nor play a role in managing Tesla’s market value.

So what is Tesla’s underlying motivation?

During the Q&A session that followed, Elon unsurprisingly mentioned Universal Basic Income.

“What happens when there is no shortage of labor due to the rise of robots? That’s why I think, long term, there will have to be universal basic income. But not right now because the bot doesn’t work yet. In the future, physical work will be a choice.”

Elon and his friends, including Google founder Larry Page, Twitter CEO Jack Dorsey, and OpenAI CEO Sam Altman, have been discussing the socioeconomic paradigm of the post-artificial intelligence era, where robots replace humans in repetitive labor, and government-led implementation of Universal Basic Income will be a critical component.

The first step in replacing humans with robots for repetitive labor is to manufacture a robot.

The Tesla Bot is unlikely to have a significant impact on our work and daily life in the immediate future; on the contrary, it is far from perfect and does not even have a prototype yet. Placing an order for an artificial intelligence robot today is more bewildering than spending $100,000 on a pure electric sports car in 2004. Nevertheless, it is a starting point, and Tesla is embarking on a new expansion.

Tesla Bot is like the Tesla VIN 001 Roadster handcrafted by JB Straubel in 2004. “In the end, people will see Tesla as an artificial intelligence robotics company.”

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.