After watching Tesla’s AI Day, my biggest takeaway is that Tesla’s technology is really impressive, not only in vehicles and chips but also at the server level, and even more so in its future direction toward robotics. Tesla is an all-around player. However, I have a nagging worry that this technology route may not be feasible in China because of national security concerns.

Let me share my understanding of Tesla’s AI Day:

We’ve been misunderstanding Tesla all along

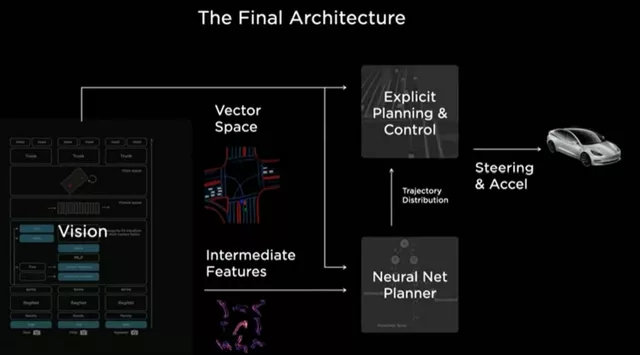

After watching Tesla’s AI Day, my first impression is that because Tesla sells cars, we have always thought of it as just a car manufacturer. In reality, it is an artificial intelligence company, or something even more diverse. Just making a car isn’t a big deal, but making a car whose 8 cameras (1280×960, 12-bit HDR, 36 Hz) feed road images into a single neural network that fuses them into a 3D perception of the environment (Vector Space) is really impressive.
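To get a feel for how much data those camera figures imply, here is a rough back-of-the-envelope calculation. It is only a sketch: it assumes one raw 12-bit sample per pixel, tightly packed, with no compression, which is my assumption and not a figure Tesla stated. The function name is made up for illustration.

```python
def raw_camera_bytes_per_second(cameras, width, height, bits_per_pixel, fps):
    """Uncompressed sensor data rate in bytes per second.

    Assumption (not from Tesla): one sample of `bits_per_pixel` bits per
    pixel, packed with no padding and no compression.
    """
    bits_per_frame = width * height * bits_per_pixel
    total_bits_per_second = bits_per_frame * fps * cameras
    return total_bits_per_second // 8


# Figures quoted in the article: 8 cameras, 1280x960, 12-bit, 36 Hz.
rate = raw_camera_bytes_per_second(8, 1280, 960, 12, 36)
print(f"{rate / 1e6:.1f} MB/s")  # about 530.8 MB/s under the assumptions above
```

Even under these simplified assumptions, the network has to absorb roughly half a gigabyte of raw pixels every second, which helps explain why the on-board hardware matters so much.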

In other words, Tesla is modeling autonomous driving on biology. The car really has eyes, nerves, and a brain, and it produces different outputs from different data inputs for different functions and purposes. It is not just a car that can move by itself, but an intelligent system that drives following human logic.

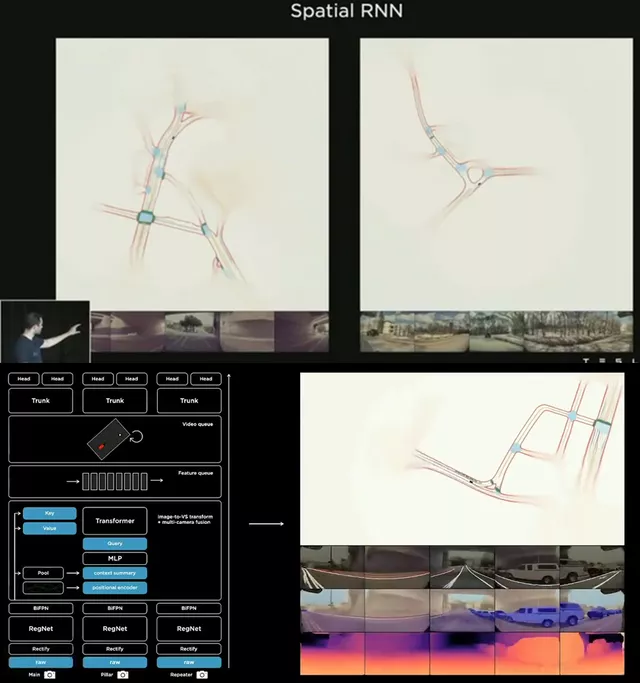

Here, a recurrent neural network (RNN) is introduced. Based on the observation that “human cognition is based on past experience and memory”, an RNN processes an input sequence step by step over time to predict what will happen next. As the figures below show, Tesla’s cameras continuously update the perception of the surroundings while the vehicle is moving. Tesla’s autonomous driving function is in effect a real-time map-surveying process: it builds up road information in the car’s brain and of course also sends it to Tesla’s cloud backend, especially once the Dojo supercomputer comes into play. Nominally, the supercomputer is there to empower autonomous driving, and with this much data it is hard to imagine where the ceiling of autonomous driving lies.
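To make the “memory” idea concrete, here is a minimal sketch of a textbook (Elman-style) recurrent update, h_t = tanh(W_x·x_t + W_h·h_{t-1} + b), in plain Python. It is not Tesla’s network: the weights are random, and the names `rnn_step` and `run_rnn` are made up for illustration. It only shows that the same input can produce a different internal state depending on what came before.

```python
import math
import random


def rnn_step(x, h_prev, W_x, W_h, b):
    """One recurrent update: h_t = tanh(W_x x_t + W_h h_{t-1} + b)."""
    h_new = []
    for i in range(len(b)):
        total = b[i]
        total += sum(W_x[i][j] * x[j] for j in range(len(x)))
        total += sum(W_h[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_new.append(math.tanh(total))
    return h_new


def run_rnn(sequence, hidden_size, seed=0):
    """Run a randomly initialised RNN over a sequence; return every hidden state."""
    rng = random.Random(seed)
    input_size = len(sequence[0])
    W_x = [[rng.uniform(-0.5, 0.5) for _ in range(input_size)]
           for _ in range(hidden_size)]
    W_h = [[rng.uniform(-0.5, 0.5) for _ in range(hidden_size)]
           for _ in range(hidden_size)]
    b = [0.0] * hidden_size
    h = [0.0] * hidden_size  # empty memory before the first frame
    states = []
    for x in sequence:
        h = rnn_step(x, h, W_x, W_h, b)  # new state depends on input AND old state
        states.append(h)
    return states


# The first and third inputs are identical, but the hidden states differ
# because the third step also carries memory of the earlier steps.
states = run_rnn([[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]], hidden_size=4)
print(states[0] != states[2])
```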

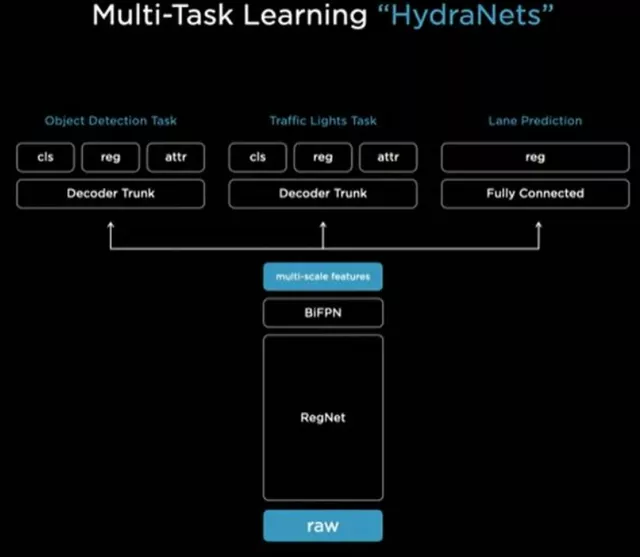

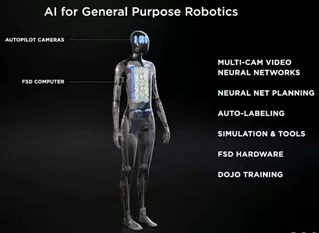

This system is based on HydraNets multi-task learning: it takes visual content from the different cameras through a shared network and then tunes each sub-task (object recognition, traffic light recognition, and lane line recognition) independently, so that features are predicted and annotated efficiently. As Tesla’s chips grow more capable, the system can do more than just autonomous driving-related tasks.
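The structure described above, one shared feature extractor feeding several task-specific “heads”, can be sketched in a few lines. This is a toy illustration only: the class name `HydraNetSketch`, the backbone, and the three heads are hypothetical stand-ins with made-up thresholds, not Tesla’s actual model. The point is that the expensive shared step runs once per image, while each head can be changed on its own.

```python
class HydraNetSketch:
    """Toy multi-task model: one shared backbone, many independent heads."""

    def __init__(self, backbone, heads):
        self.backbone = backbone      # shared feature extractor
        self.heads = heads            # task name -> function of the features
        self.backbone_calls = 0       # counts how often the shared step runs

    def __call__(self, image):
        features = self.backbone(image)   # computed once, reused by every head
        self.backbone_calls += 1
        return {name: head(features) for name, head in self.heads.items()}


def toy_backbone(image):
    """Stand-in for a real vision backbone: two crude brightness features."""
    pixels = [p for row in image for p in row]
    return {"mean": sum(pixels) / len(pixels), "peak": max(pixels)}


# Hypothetical heads; real ones would be trained sub-networks.
heads = {
    "object": lambda f: f["peak"] > 0.8,
    "traffic_light": lambda f: "bright" if f["mean"] > 0.5 else "dim",
    "lane_line": lambda f: round(f["peak"] - f["mean"], 3),
}

net = HydraNetSketch(toy_backbone, heads)
outputs = net([[0.1, 0.9], [0.2, 0.4]])
print(outputs, net.backbone_calls)
```

Swapping or retraining one entry in `heads` leaves the backbone and the other heads untouched, which is the appeal of this kind of design.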

My understanding of this event is that Tesla really can generate maps in real time and, after merging them across its servers and vehicles, assemble maps of entire cities and regions.

The Playability of Tesla’s Simulation Technology

Tesla’s autonomous driving scene simulation system consists of five parts:

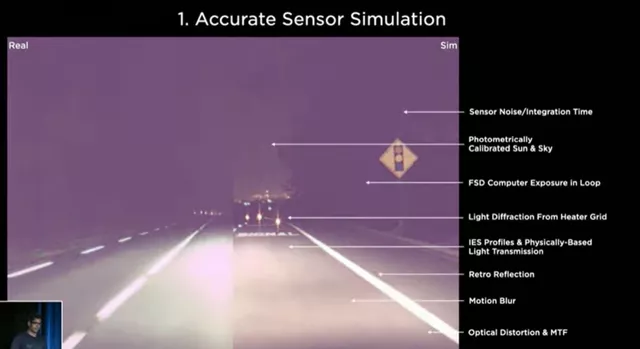

(1) Accurate sensor simulation: simulate perception data of front vehicles;

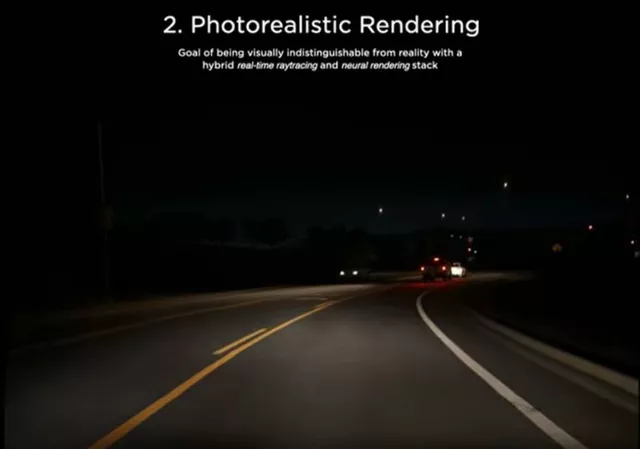

(2) Photorealistic Rendering: real-time simulation based on images, predicting potential situations through visual neural networks;

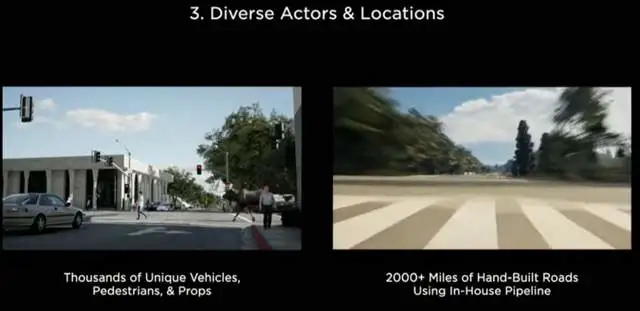

(3) Diverse Actors & Locations: incorporate extreme road conditions;

(4) Scalable Scenario Generation: superimpose data on previously collected data to improve data authenticity and image maturity;

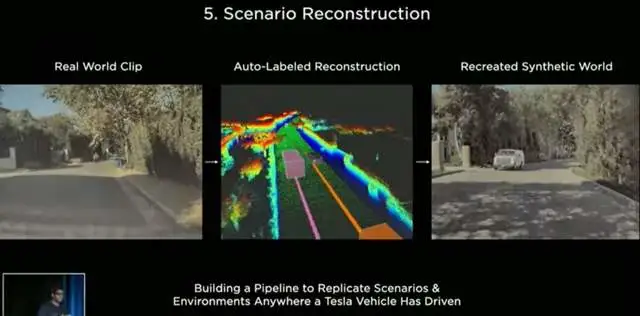

(5) Scenario Reconstruction: combine predicted and real situations;

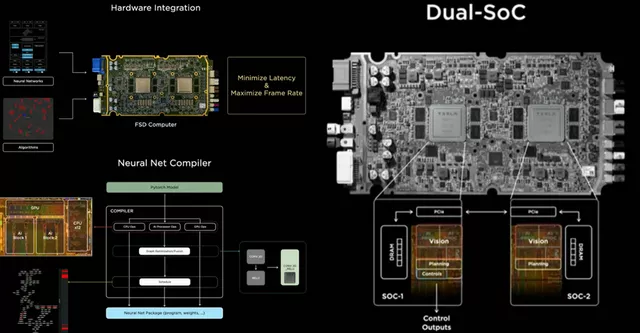

The Autopilot hardware installed in the car efficiently supports the completion of the entire calculation.

With Autopilot in the car and the AI backend behind it, Tesla has the ability to build a very comprehensive database by collecting information about the roads around a city.

These technologies are what set Tesla apart from traditional car-selling companies. It has the ability to fully capture information that China regards as relevant to national security, and we do not know how that information will be used.

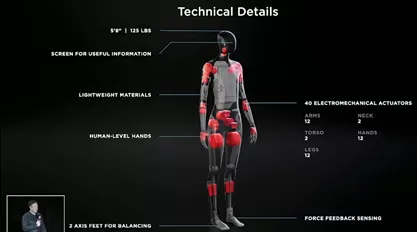

At the end of the day, you can’t just treat Tesla as a traditional car company. Its capabilities span IT, AI technology, big data, and a cloud backend, and it even completes the construction of maps along the way. Most surprisingly, Tesla can use its autonomous driving technology to build humanoid robots.

Conversely, once the car has enough computing power, OTA updates could let it analyze language and vision separately and handle tasks the way a person would. Under HydraNets multi-task learning, you cannot be sure what the car might be doing while it drives, including things you would never imagine.

From an AI perspective, the boundary between robots and vehicles is blurred.

In summary, what I want to say is that Tesla’s current position is actually a very sensitive matter for the Chinese regulatory authorities. This is not something the MIIT can manage alone, and it will definitely involve national interests in the future.

The reason is that we cannot find out, let alone restrict, what Tesla can do with so many cars in China. The biggest risk we may face is that we happily buy a car while information important to the country leaks out. Our lack of understanding, together with the sheer breadth of what is possible, is also the source of the fear.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.