On August 15th, Tesla pushed Full Self-Driving (FSD) Beta 9.2 to its 2,000 internal testers. The release arrived only one day behind Elon Musk’s promised bi-weekly cadence, without the usual “two weeks” slip.

The release notes for the latest version 2021.12.25.15 provided to internal testing users showed no difference from the previous version. However, Elon Musk provided detailed technical updates about FSD Beta 9.2 on Twitter and hinted at more improvements in future versions.

Jason Cartwright, the founder of techAU, provided a detailed breakdown of the seven changes made in FSD Beta 9.2. The summary is as follows:

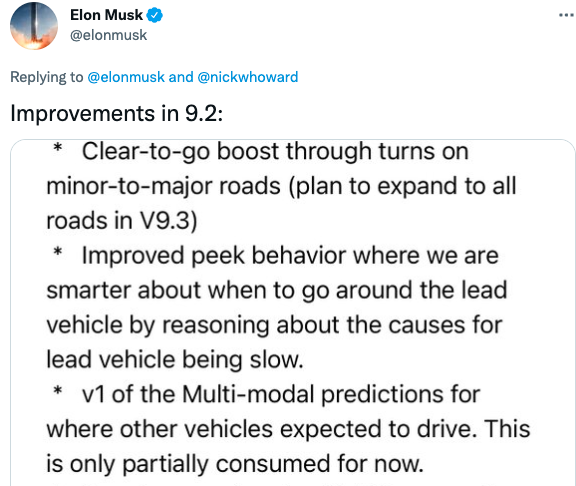

This is Elon’s tweet, which explains these changes in detail. Although I would like to see this level of detail in public release notes, I can understand that most ordinary people may be confused by this information. Perhaps release notes could have an advanced option to display technical information about changes to help beta testers understand what changes have been made to their cars. This may become more important as we move towards public testing.

There are only seven key points here, but there are many things to explain in this tweet, so let’s analyze them one by one.

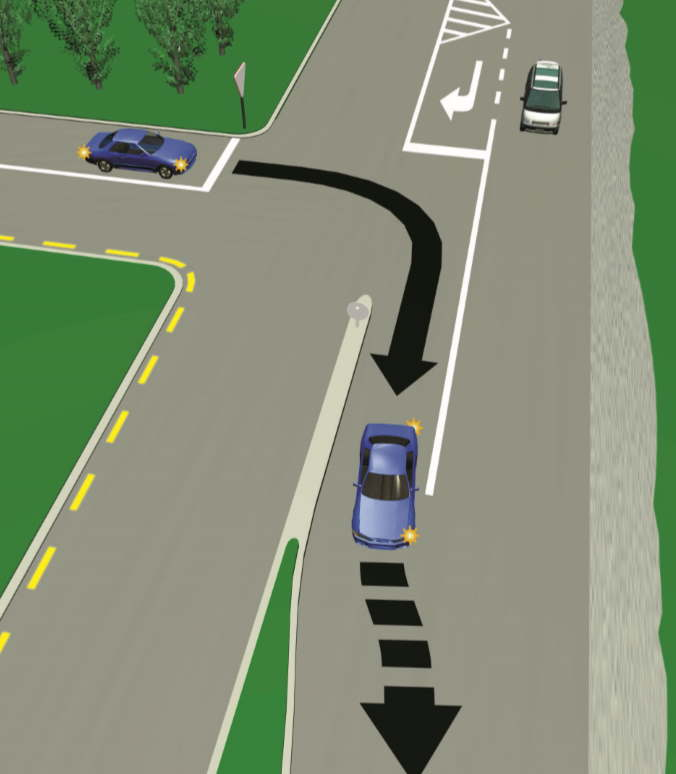

- “Clear-to-go boost” through turns on minor-to-major roads (plan to expand to all roads in V9.3)

This is the first time we have heard Tesla use the term “Clear-to-go boost.” It appears to refer to using computer vision to judge whether there is a safe gap in traffic when the vehicle turns from a minor road onto a major one, so that the car can accelerate naturally onto the road with the higher speed limit.

When you leave a side road and merge onto a main road, you usually accelerate to reach the speed limit or match the flow of traffic. One benefit of this improvement is that the car does not hold up the vehicles behind it when merging into traffic.
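To make the idea of a "safe gap" concrete, here is a minimal sketch of a gap-acceptance check. It is not Tesla's implementation: the names `OncomingVehicle` and `clear_to_go`, and the values for merge time and safety margin, are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class OncomingVehicle:
    distance_m: float  # distance from the merge point along the major road
    speed_mps: float   # speed toward the merge point


def time_to_arrival(vehicle: OncomingVehicle) -> float:
    """Seconds until the vehicle reaches the merge point (inf if it is not moving)."""
    if vehicle.speed_mps <= 0:
        return float("inf")
    return vehicle.distance_m / vehicle.speed_mps


def clear_to_go(traffic, merge_time_s=4.0, margin_s=2.0) -> bool:
    """True if every approaching vehicle arrives later than merge time plus margin.

    merge_time_s and margin_s are illustrative assumptions, not known Tesla values.
    """
    needed = merge_time_s + margin_s
    return all(time_to_arrival(v) > needed for v in traffic)
```

With these assumed values, a car 200 m away at 20 m/s leaves a 10-second gap and the merge is accepted, while a car 60 m away at the same speed leaves only 3 seconds and the merge is rejected.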

Elon stated that the next version, FSD Beta 9.3, will be released in two weeks and will extend this feature to all roads.

- Improved peek behavior where we are smarter about when to go around the lead vehicle by reasoning about the causes for lead vehicle being slow.

What sets FSD Beta apart from the public version of FSD is that the vehicle plans its path from the drivable space while still prioritizing lane keeping and road rules. This means that, when it is safe, the vehicle will leave its current lane to pass vehicles stopped in that lane.

If you watch enough FSD Beta 9.1 videos on YouTube, you will see the vehicle attempt to go around the car ahead in situations where it should have stopped and waited.

The improved “peek behavior” suggests that FSD Beta 9.2 is now smarter about deciding when to go around. As human drivers, we often use cues from the lead vehicle to guide our own driving.

If a vehicle stops in front of us, it may be due to many factors. If a vehicle is parked parallel to the roadside with hazard lights on, it is clear that the vehicle is unlikely to move in the short term, and we will bypass it. If the lead vehicle crosses the lane to avoid a stopped vehicle, we are likely to do the same. Similarly, if the lead vehicle is steering around an accident or road construction, we can learn a lot from its behavior, and most of the time following it is the right call.

Here, the goal of FSD is to accurately estimate the unknown environment ahead and choose the correct driving strategy. Stopping completely and waiting for a stalled lead vehicle to move is clearly wrong: if the wait drags on, the drivers behind will overtake you, which is frustrating. Going around only when the lead vehicle is unlikely to move strikes a good balance.

- Multi-modal prediction for where other vehicles expected to drive V1.0. This is only partially predicted for now.

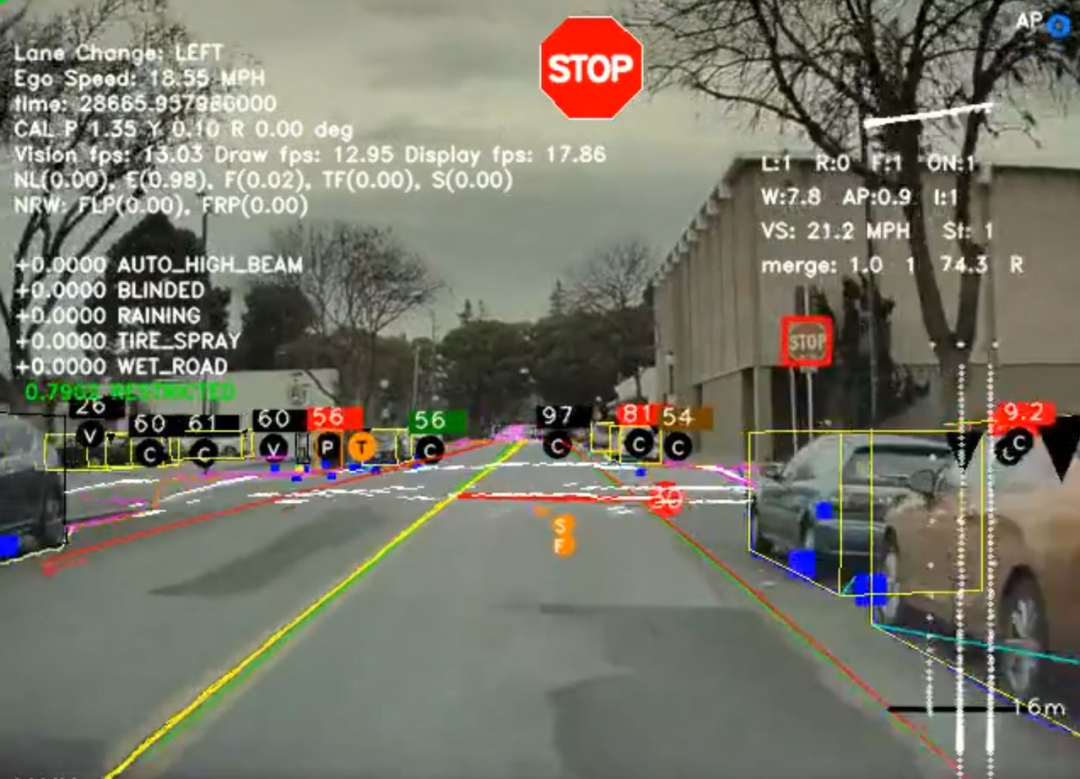

Multi-modal prediction means predicting the future state and possible behaviors of surrounding objects. This involves calculating the potential trajectories of other vehicles, pedestrians, non-motor vehicles, animals, etc., which form the basis for deciding the driving route and whether to apply the brakes.

The second sentence explains that currently it is only partially predicted, indicating that Tesla will predict more potential scenarios like this in the future. If the vehicle can accurately predict when pedestrians will walk in front of the vehicle or whether the driver of the nearby parked car will open the door and invade the vehicle’s lane, FSD Beta can safely avoid them, ensuring everyone’s safety.

Predicting these behaviors efficiently in complex environments is demanding on computational resources. It is much harder than simply knowing where you are and where everything around you is: it requires a model that predicts what will happen next and, importantly, detects when its predictions go wrong.

Take an example: you approach an intersection with oncoming traffic and want to cross the road. The oncoming vehicle might go straight, might also turn, or might stop and do nothing. Having experienced millions of similar situations, people weigh these three cases well: they brake, slow down, and turn naturally to avoid colliding with oncoming vehicles. After years of practice, these everyday driving decisions become automatic for us, but they remain complex for computers.
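The three-case reasoning above can be sketched as a choice over weighted hypotheses. This is only an illustration of the "multi-modal" idea: the mode names, probabilities, and cost values below are invented for the example and do not come from Tesla.

```python
# Hypothetical probabilities for what the oncoming vehicle does next.
modes = {"goes_straight": 0.6, "turns": 0.3, "stops": 0.1}

# Hypothetical cost of each ego action under each mode (higher is worse).
cost = {
    "proceed": {"goes_straight": 100.0, "turns": 5.0, "stops": 0.0},
    "yield": {"goes_straight": 1.0, "turns": 1.0, "stops": 3.0},
}


def expected_cost(action, modes, cost):
    """Probability-weighted cost of one ego action across all predicted modes."""
    return sum(p * cost[action][mode] for mode, p in modes.items())


def best_action(modes, cost):
    """Pick the ego action with the lowest expected cost."""
    return min(cost, key=lambda action: expected_cost(action, modes, cost))
```

With these assumed numbers, proceeding is far more costly in expectation than yielding, so `best_action(modes, cost)` picks `"yield"`. If the prediction shifts to the oncoming car almost certainly stopping, the same function picks `"proceed"`, which is the point of keeping several hypotheses alive rather than committing to one.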

- New Lanes network with 50k more clips (almost double) from the new auto-labeling pipeline

Compared with the manually labeled images Tesla used to train neural networks in the past, automatic labeling is a huge step forward. Of course, Tesla runs not one neural network but many, and the number keeps growing; each network focuses on a different part of the autonomous driving challenge.

Tesla’s director of artificial intelligence, Andrej Karpathy, has previously described automatic labeling, which aims to significantly speed up the training of neural networks. You can think of it as a question and its answer. In a recorded driving video, what happened a few seconds later is already known, so the answer to a difficult moment can be found by looking ahead in the clip. The preceding seconds (where the problem arises) are then used to train the deep neural network across many variations of similar scenarios. The later frames of the video answer the network’s question, “What should I do here?”, so humans no longer have to annotate every extreme scenario in detail by hand.

Although drivers sometimes make mistakes, the vast majority take the correct action in any given situation, so feeding the deep neural network a large number of example scenes lets it identify the correct strategy automatically.
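The "most drivers get it right" argument can be illustrated with a toy majority-vote labeler. This is a deliberately simplified sketch, not Tesla's auto-labeling pipeline: the scenario and action strings, the `auto_label` function, and the 80% agreement threshold are all assumptions.

```python
from collections import Counter, defaultdict


def auto_label(clips, min_agreement=0.8):
    """Label each scenario with the action most drivers took.

    clips is a list of (scenario, driver_action) pairs. Scenarios where
    drivers disagree too much are left unlabeled for human review.
    """
    by_scenario = defaultdict(Counter)
    for scenario, action in clips:
        by_scenario[scenario][action] += 1

    labels = {}
    for scenario, counts in by_scenario.items():
        action, votes = counts.most_common(1)[0]
        if votes / sum(counts.values()) >= min_agreement:
            labels[scenario] = action
    return labels


clips = (
    [("stopped_car_hazards", "go_around")] * 9
    + [("stopped_car_hazards", "wait")]
    + [("red_light", "stop")] * 3
    + [("ambiguous", "wait"), ("ambiguous", "go_around")]
)
labels = auto_label(clips)
```

Here the occasional driver who waits behind the stopped car is outvoted, while the evenly split scenario gets no label at all, which is roughly where human annotation would still be needed.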

In theory, more data yields a better model after training, and that model is then pushed to FSD Beta. As Tesla moves from processing individual frames to processing video clips, each example also carries more context, much like panoramic imaging (using all 8 cameras instead of just one).

The “New Lanes” network was trained with 50,000 more automatically labeled video clips, which suggests that Tesla is scaling up its data engine while also running more networks.

Adding 50,000 automatically labeled clips, almost doubling the total, points to a significant increase in computing power. This may be a result of the launch of the Tesla Dojo supercomputer, details of which will be announced at AI Day, scheduled for August 19 (US time).

- The new VRU velocity model improves velocity by 12% and has better performance. This is the first model trained with “Quantization-Aware-Training,” an improved technique to mitigate int8 quantization.

Vulnerable road users (VRUs) are road users who are not in cars, buses, or trucks, usually including pedestrians, users of non-motorized vehicles, and motorcyclists. When Tesla talks about a 12% improvement to velocity for these road users, it may refer to how well the vehicle tracks these objects over time, possibly measured in milliseconds. As discussed above, tracking objects in the scene faster is always better, especially for objects like VRUs that may cross the vehicle’s trajectory.

As for “clear-to-go”: if a pedestrian is crossing in front of our vehicle, the road is not clear, and we need to brake. Once the pedestrian has crossed, the road is clear again and we can accelerate, so it is important to know exactly when it is “clear-to-go.”

If FSD can predict the behavior of pedestrians (or lead vehicles) more accurately, it can not only better determine when it is safe to proceed (i.e., once they are on the sidewalk), but also judge the distance to the intersection and pass through it more smoothly. In short, “clear-to-go” should leave enough space for VRUs to pass safely without being too aggressive, striking a good balance.

Many state-of-the-art deep learning models are too large and slow to run comfortably on in-car hardware, and Tesla’s situation is a good example. Powerful as the HW3 platform is, it still has limits on power, storage, memory, and processor speed.

Quantization reduces model size by storing model parameters and performing calculations with 8-bit integers rather than 32-bit floats (hence int8). This improves inference performance, but it also has drawbacks: rounding introduces calculation errors that accumulate with each operation needed to compute the final answer, reducing model accuracy. Quantization-aware training (QAT) mitigates this by simulating the quantization during training, so the model learns to tolerate those errors.
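A small example shows where the rounding error comes from. The sketch below uses symmetric per-tensor int8 quantization, one common scheme; whether Tesla uses this exact scheme is not stated in the tweet, and the weight values are made up. The `fake_quantize` step (quantize, then immediately dequantize) is the kind of operation QAT inserts into the forward pass during training.

```python
def quantize(values, scale):
    """Map floats to int8: round(x / scale), clamped to [-128, 127]."""
    return [max(-128, min(127, round(x / scale))) for x in values]


def dequantize(q_values, scale):
    """Map int8 values back to approximate floats."""
    return [q * scale for q in q_values]


def fake_quantize(values, scale):
    """Quantize then dequantize, exposing the rounding error to training."""
    return dequantize(quantize(values, scale), scale)


weights = [0.42, -1.27, 0.003, 0.9]            # made-up example weights
scale = max(abs(w) for w in weights) / 127      # symmetric per-tensor scale
q = quantize(weights, scale)
restored = fake_quantize(weights, scale)
errors = [abs(w - r) for w, r in zip(weights, restored)]
```

Each weight is recovered to within half a quantization step (`scale / 2`), but the small weight `0.003` collapses to zero entirely. Errors like this are what accumulate layer by layer, and what training with the fake-quantized values in the loop is meant to compensate for.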

If you’re interested in further information, you can watch a fascinating video on quantization-aware training by Pulkit Bhuwalka, a software engineer at TensorFlow.

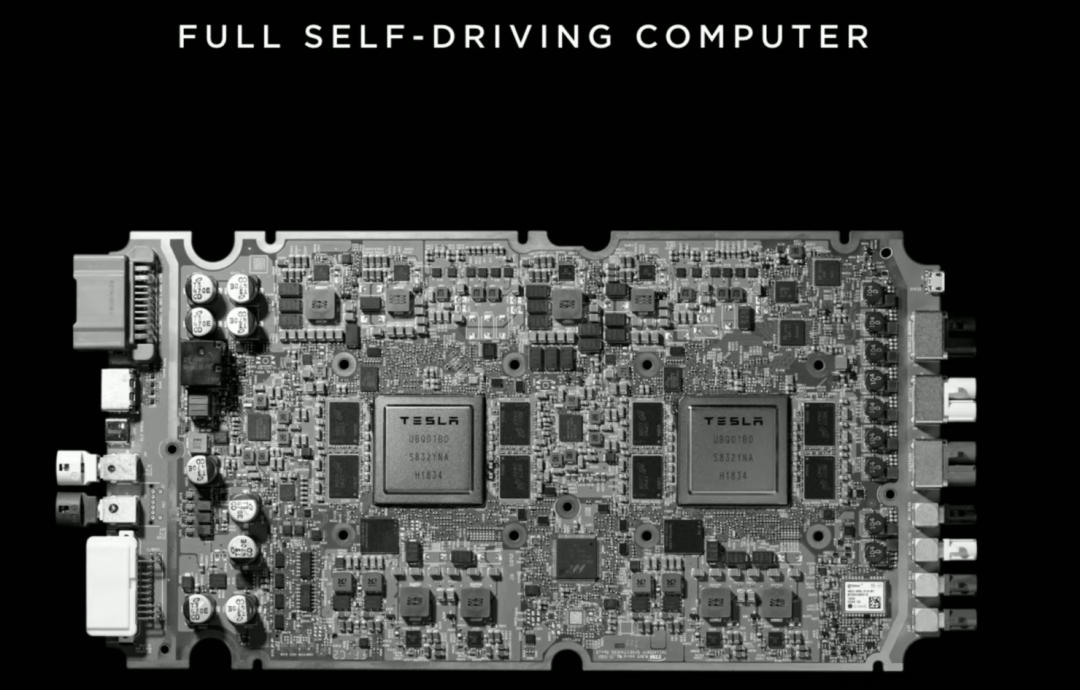

- Enabled Inter-SoC synchronous compute scheduling between vision and vector space processes. Planner in the loop is happening in v10.

Tesla’s Full Self-Driving computer, also known as HW3.0, has two Systems on a Chip (SoCs) for redundancy. If one chip fails, the other has enough processing power to control the vehicle. Since both chips are running normally most of the time, Tesla can use the performance of both for “synchronous compute”.

Here, Tesla says that FSD Beta 9.2 now schedules workloads across the two SoCs. The workload in question is best described as converting everything the vision stack sees around the vehicle (i.e., the camera input) into vector space. While we see a polished visualization on the screen, in the background the car sees outlined 3D objects.

The reference to the planner in v10 may relate to path planning (generating the trajectory the car follows) rather than navigation routing. Once the vector space is established and the trajectories in it are understood, the next logical step is to plan your path through that environment. Distributing these candidate path plans between the SoCs may yield some performance advantages.

- Shadow mode for new crossing/merging targets network which will help improve VRU control

Finally, we come to shadow mode. This concept was first presented at Tesla’s Autonomy Day, where Tesla explained that it runs simulations on your vehicle to explore “what-if scenarios”: in the same scene the production version is handling, what would the next (unreleased) code branch do? Would it make a better or worse decision? If the decision is better, the fleet reports the change as positive and it moves toward the next stage of production. If not, more training is needed.

Shadow mode was first demonstrated for potential lane changes, but it can be applied to most decision-making in the autonomous driving stack.
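The shadow-mode comparison described above can be sketched in a few lines. This is a conceptual illustration only: the `Frame` type, the lookup-table policies, and the choice to score both policies against what the human driver actually did are assumptions, not details Tesla has published.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    scene: str          # hypothetical scene descriptor
    driver_action: str  # what the human driver actually did


def shadow_compare(frames, production, candidate):
    """Score both policies against the driver. The candidate never controls the car."""
    report = {"production_agree": 0, "candidate_agree": 0, "disagreements": []}
    for frame in frames:
        prod_action = production(frame.scene)
        cand_action = candidate(frame.scene)
        report["production_agree"] += int(prod_action == frame.driver_action)
        report["candidate_agree"] += int(cand_action == frame.driver_action)
        if prod_action != cand_action:
            # Scenes where the two branches differ are the interesting ones to upload.
            report["disagreements"].append(frame.scene)
    return report


# Toy policies, written as lookup tables purely for illustration.
production_policy = {"clear_road": "go", "pedestrian_occluded": "go"}.get
candidate_policy = {"clear_road": "go", "pedestrian_occluded": "wait"}.get

frames = [Frame("clear_road", "go"), Frame("pedestrian_occluded", "wait")]
report = shadow_compare(frames, production_policy, candidate_policy)
```

In this toy run the candidate matches the driver in both scenes while production matches in only one, so the occluded-pedestrian scene is flagged as a case where the unreleased branch would have behaved better.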

The last point indicates that Tesla is now using shadow mode to process the new crossing/merging target network, aiming to improve VRU control. Regarding the crossing or merging part, imagine you are at a very busy intersection, maybe even a four-way intersection where pedestrians can cross diagonally like in Shanghai’s Nanjing Road.

Once the green light is on, the vehicle needs to make sure the road is clear, but if a pedestrian crosses behind an obstacle, the line of sight will be blocked, which poses a problem for predicting the pedestrian’s path trajectory. Therefore, a question arises – where will the pedestrian appear? In FSD 9.2, Tesla seems to use the shadow mode to test whether they can better track and predict the trajectory of VRUs.

With all the details of this version covered, the question of a wider public beta, and when it will arrive, comes up again. Elon previously stated that V10 may get a wide release, and that V11 definitely will.

This time, Elon said that 9.3 will be the next FSD Beta version (as we know, this should be 2 weeks later). Next is 9.4, also 2 weeks later, or about mid-September.

After that, Elon said that FSD Beta 10 may see a US-wide rollout and that it will bring significant architectural changes. However, those changes may hold back the wider rollout that Elon previously suggested for FSD 10, since significant changes will certainly need to be tested by internal beta users first.

This would push the rollout of FSD Beta V10 to around the end of September. As for what the major architectural changes may be, Elon has previously revealed that Navigate on Autopilot (NoA) and Smart Summon will move to the pure-vision stack that FSD Beta currently uses for city street driving.
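The timeline above is simple date arithmetic, which can be checked quickly. The sketch assumes the year is 2021, that every release lands exactly two weeks after the previous one, and that V10 directly follows 9.4; real releases may of course slip.

```python
from datetime import date, timedelta

release_9_2 = date(2021, 8, 15)   # FSD Beta 9.2 push date from this article
cadence = timedelta(weeks=2)      # the promised bi-weekly rhythm

versions = ["9.2", "9.3", "9.4", "10"]
schedule = {v: release_9_2 + i * cadence for i, v in enumerate(versions)}

for version, day in schedule.items():
    print(f"FSD Beta {version}: {day.isoformat()}")
```

Under those assumptions 9.3 falls on August 29, 9.4 on September 12 (mid-September), and V10 on September 26, which matches the "end of September" estimate.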

I think we have learned a lot today about Tesla’s autonomous driving efforts, but it also shows that there is still some way to go to reach the fully functional FSD that Elon previously targeted for the end of 2021.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.