Introduction

With the rise of the “intelligentization” trend, major carmakers in China and abroad have entered an arms race in autonomous driving. China's new EV makers, such as NIO, XPeng, and Ideal, are all ramping up and showing their strengths, aiming to secure a place in the autonomous driving race before 2025.

The Vertical Evolution Path of Autonomous Driving Systems

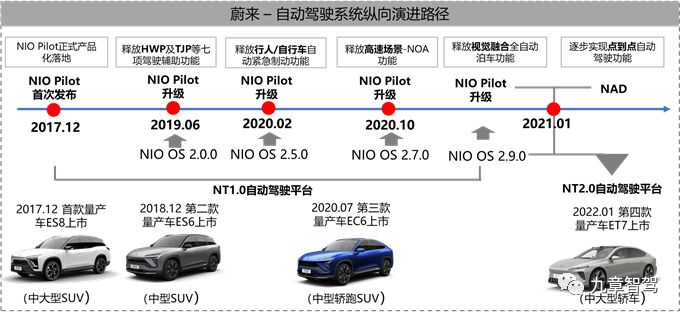

NIO – The Vertical Evolution Path of Autonomous Driving System

- In December 2017, NIO released NIO Pilot, its first driver assistance system, at NIO Day.

- In June 2019, the NIO OS vehicle system was upgraded to version 2.0.0 via OTA, and NIO Pilot received its first major upgrade, adding seven features: highway driving assistance, traffic jam driving assistance, turn-signal-activated lane change, traffic sign recognition, lane keeping assistance, front cross-traffic warning, and automatic parking assistance.

- In February 2020, NIO OS was upgraded to version 2.5.0, and NIO Pilot added automatic emergency braking for pedestrians and cyclists, overtaking assistance, and lane avoidance.

- In October 2020, NIO OS was upgraded to version 2.7.0. NIO Pilot began using high-precision maps, added navigation-based driving assistance (NOA), and added active braking to the existing rear cross-traffic warning. Driver fatigue monitoring was also upgraded: it now uses camera recognition of the driver's face, eyes, and head posture and combines multiple types of information to make an overall judgment.

- In January 2021, NIO OS was upgraded to version 2.9.0. NIO Pilot added vision-fusion fully automatic parking and short-range vehicle summon, and NOA was optimized for better stability during active lane changes and when merging onto or exiting the main road.

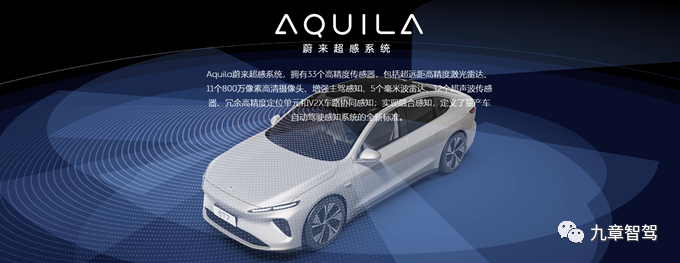

At NIO Day in January 2021, NIO released NAD (NIO Autonomous Driving), its autonomous driving system, which comprises NIO Aquila, a super sensing system, and NIO Adam, a supercomputing platform. NAD is intended to gradually deliver point-to-point autonomous driving in all scenarios, including highways, urban areas, and parking lots.

XPeng – The Vertical Evolution Path of Autonomous Driving System

1) Xpilot 2.0: The first localized version of the Xpilot driver assistance system.

- In January 2019, Xpilot 2.0 received its first OTA upgrade for XPeng G3 users. It added key-fob summon and improved automatic parking, which now adapts to more scenarios and can accurately recognize parking spaces partly covered by shadows and spaces fitted with ground locks.

- In June 2019, Xmart OS 1.4 was pushed to G3 users via OTA, adding ICA (Intelligent Cruise Assist), i.e. LCC lane centering above 60 km/h.

2) Xpilot 2.5: L2 autonomous driving assistance system in mass production.

- In July 2019, Xmart OS 1.5 was pushed to G3 users via OTA, adding more driver assistance functions such as automatic lane change assistance and traffic jam assistance.

3) Xpilot 3.0: Release of highway NGP (Navigation Guided Pilot).

- In the second quarter of 2020, Xpilot 3.0 hardware debuted on the XPeng P7.

- In the fourth quarter of 2020, the basic functions of Xpilot 3.0 were delivered, with ACC, LCC, and ALC enabled via OTA.

- In the first quarter of 2021, Xmart OS 2.5.0 was pushed to P7 users via OTA, giving P7s equipped with Xpilot 3.0 highway NGP.

- In June 2021, the public beta of Xmart OS 2.6.0 began. Xpilot 3.0 added memory parking, intelligent high beam, and driver state monitoring, and NGP and lane centering were optimized.

4) Xpilot 3.5: Release of city NGP.

- Xpilot 3.5 adds city NGP and improves memory parking.

- The XPeng P5 is scheduled for delivery to users in the fourth quarter of 2021. City NGP on Xpilot 3.5 will be tested internally at the end of the year and pushed to users via OTA in early 2022.

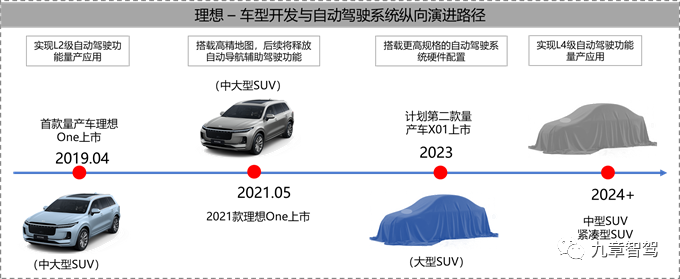

Ideal – Evolutionary Path of Automatic Driving System

Ideal's autonomous driving development is slightly behind NIO's and XPeng's, but since the company's US IPO in July 2020 and the appointment of Wang Kai, the former chief architect of Mobileye, in September, Ideal has clearly accelerated its autonomous driving R&D.

Ideal founder Li Xiang disclosed the company's autonomous driving roadmap in a 2020 interview:

- 2021-2022: Deliver navigation-based driver assistance functions;

- 2023: Launch a new model, the Ideal X01, with hardware that supports L4 autonomous driving;

- 2024: Enable L4 autonomous driving on mass-produced models via OTA.

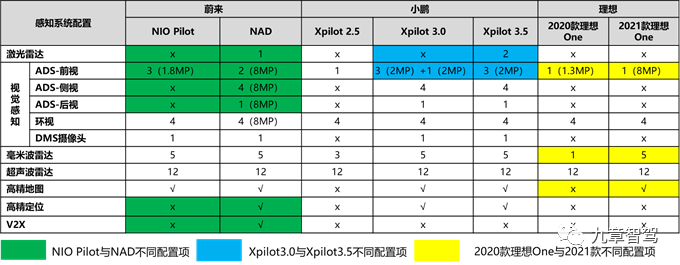

Autonomous Driving System – Perception Solution

Table 1: Comparison of the Perception Solutions of the Three New EV Makers

Note: √ has this configuration, × does not have this configuration

NIO – Perception Solution for Automatic Driving System

Note: 1) √ has this configuration, N/A not applicable, – unknown

2) Some of the NIO ET7's eleven 8-megapixel HD cameras are supplied by Lianchuang Electronics. According to Lianchuang's official website, its in-vehicle cameras are mainly used for surround view and rear view, so the ET7's surround-view cameras are most likely supplied by Lianchuang.

Compared with the NIO Pilot system, the perception solution of the NAD system is more complete:

- Cameras: Side and rear ADS cameras were added, while the forward ADS cameras were reduced from 3 to 2.

  Note: Reason for the change – the NAD system's 2 forward ADS cameras + 2 front-side cameras (periscope layout) + 1 front lidar fully cover the field of view of NIO Pilot's three-camera system.

- Lidar: A front lidar was added.

- A C-V2X communication module was added.

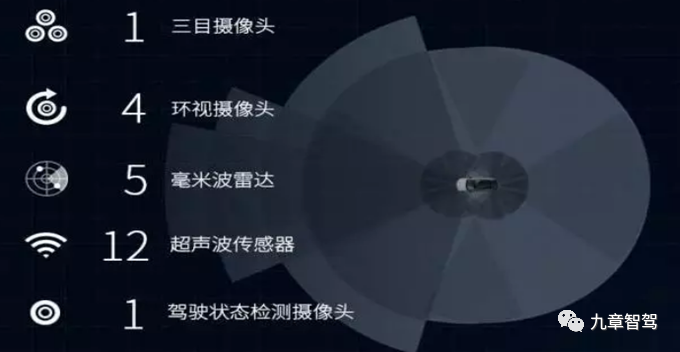

1) NIO Pilot – Perception System

Perception Sensors: 3 ADS cameras + 4 panoramic cameras + 5 millimeter-wave radars + 12 ultrasonic radars + 1 DMS camera.

Key Sensor Introduction:

a. Forward-facing Camera: Three-camera system (1.8 million pixels, independently developed by NIO)

- 52° medium-range camera: general road condition monitoring

- 28° long-range telephoto camera: detecting distant targets and traffic lights

- 150° wide-angle short-range camera: detecting objects to the sides of the vehicle and vehicles that are very close

The primary functions of the three-camera system are shown in the following figure:

Functions: Road sign recognition, automatic control of high and low beam, lane departure warning, lane keeping, emergency braking assistance, forward collision warning.

b. Millimeter-Wave Radar: 1 front long-range radar + 4 corner radars

Functions: Lane change warning, vehicle blind spot monitoring, side door opening warning, forward approaching vehicle warning, rear approaching vehicle warning.

2) NAD – Perception System

NIO Aquila Ultra Sensing System: Perception sensors + high-precision positioning unit (GPS+IMU) + Vehicle-Road Cooperative Perception V2X

Perception Sensors: 1 laser radar + 7 ADS cameras + 4 panoramic cameras + 5 millimeter-wave radars + 12 ultrasonic radars.

Key Sensor Introduction:

a. Lidar (Innovusion – Falcon) Performance Parameters:

- Type: Dual-axis rotating mirror scanning hybrid solid-state lidar

- Detection distance: 250 m@10% reflectivity

- FOV (H&V): 120°*30°

- Angular resolution (H&V): 0.06°*0.06°

- Laser wavelength: 1550 nm

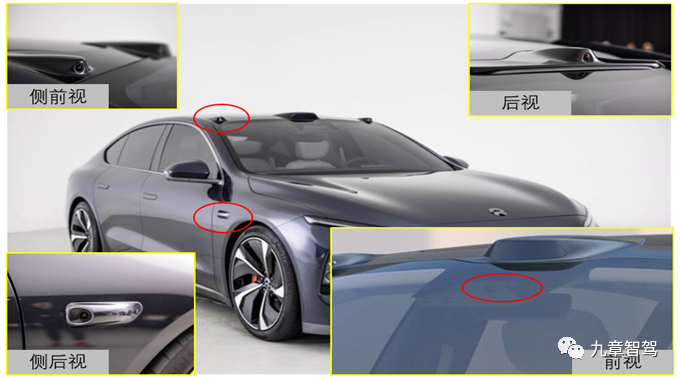

b. ADS HD cameras:

7 ADS cameras (8MP): Front view * 2 (front windshield) + Front side view * 2 (both sides of the top of the car) + Rear side view * 2 (fenders) + Rear view * 1 (center of the top of the car)

Front-side view cameras – periscope layout: mounted high on the roof, these cameras can see over obstructions, reducing blind spots.

- Fewer blind spots: In urban scenarios, sensors' lines of sight are easily blocked by green belts and other vehicles. Compared with cameras mounted on the B-pillar or the rearview mirror, high-mounted front-side cameras on the roof have fewer blind spots;

- Forward vision redundancy: Mounted high on the roof, the front-side cameras have a broad view and add redundancy to forward vision. Even if the primary front camera fails, the two high-mounted front-side cameras can still provide complete forward perception.

XPeng – Automatic Driving System Perception Solution

Note: √ has this configuration, N/A not applicable, — unknown

Xpilot 3.0 compared with Xpilot 2.5:

- ADS cameras: The single front camera was upgraded to a triple-camera unit, and an additional single front camera was added. Five more cameras were also added, 4 side-view and 1 rear-view, bringing the total to 9 ADS cameras.

- Added 1 driver monitoring camera

- Millimeter-wave radar: Two rear corner radars were added.

- High-precision maps are now used, which Xpilot 2.5 did not have.

Xpilot 3.5 compared to Xpilot 3.0:

- ADS camera: One front-facing single-lens camera has been removed from the forward view.

Note: Presumed reason – the two front lidars on Xpilot 3.5 fully take over the redundant-perception role of the removed single front camera.

- Lidar: Two front-facing lidar sensors have been added.

1) Xpilot 2.5 – Perception system

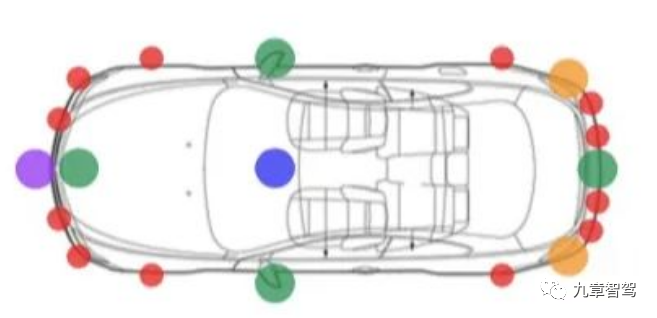

Perception system configuration: ADS camera * 1 + Surround-view camera * 4 + Millimeter-wave radar * 3 + Ultrasonic radar * 12

Note: Purple circle – forward mid-range millimeter-wave radar * 1; orange circle – rear short-range millimeter-wave radar * 1; green circle – surround-view camera * 4; blue circle – forward primary camera * 1; red circle – ultrasonic radar * 12.

Important sensor introduction:

a. Front millimeter-wave radar (Bosch – MRR Evo14)

- Mid-range radar: with two detection angles of wide-angle/narrow-angle, the detection distances are 100m and 160m respectively. It is installed in the middle of the front bumper, mainly used for detecting and tracking front targets.

b. Rear millimeter-wave radar (Bosch)

- Close-range radar: the detection distance is 0.36-80m. It is installed on both sides of the rear bumper, mainly used for detecting obstacles on both sides of the vehicle at the rear.
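The quoted radar ranges are easier to interpret as time budgets. This Python sketch divides each range by a constant closing speed. The 120 km/h figure is an assumption (the usual Chinese expressway limit); for the rear radar the relevant quantity is the closing speed of an overtaking vehicle, which is normally much lower, so the rear value printed here is a worst case.

```python
KMH_PER_MS = 3.6  # 1 m/s = 3.6 km/h

def time_budget_s(range_m: float, closing_speed_kmh: float) -> float:
    """Seconds between first detection at range_m and reaching the target,
    assuming a constant closing speed."""
    return range_m / (closing_speed_kmh / KMH_PER_MS)

# Detection ranges quoted above for the Xpilot 2.5 radars.
RADAR_RANGES_M = {
    "front MRR, narrow beam": 160,
    "front MRR, wide beam": 100,
    "rear short-range radar (max)": 80,
}

for label, rng in RADAR_RANGES_M.items():
    # Assumed 120 km/h closing speed: highway scenario, worst case for the rear radar.
    print(f"{label}: {rng} m -> {time_budget_s(rng, 120):.1f} s")
```

At 120 km/h this gives roughly 4.8 s, 3.0 s, and 2.4 s of warning, which is one way to see why the narrow, long-range beam is used for tracking targets ahead.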

2) Xpilot 3.0 – Perception system

Perception system configuration: Perception sensor + High-precision map (Gaode Map) + High-precision positioning.

Perception sensors: ADS camera * 9 + Surround-view camera * 4 + Millimeter-wave radar * 5 + Ultrasonic radar * 12 + DMS camera * 1

Important sensor introduction:

9 ADS cameras: forward triple-lens camera (windshield), forward single-lens camera (windshield), front-side view * 2 (mirror base), rear-side view * 2 (fender), rear view * 1 (above the license plate).

a. Forward triple lens camera:

- Long-range narrow-angle camera (28°): mainly detects moving objects ahead, supporting functions such as automatic emergency braking, adaptive cruise control, and forward collision warning.

Performance Parameters: detection distance over 150 m, resolution 1828 * 948 (2 MP), frame rate 15 fps.

- Medium-range main camera (52°): mainly used for traffic signal recognition, lane recognition, and detecting moving objects ahead, supporting traffic signal detection, automatic emergency braking, adaptive cruise control, forward collision warning, and lane departure warning.

Performance Parameters: 2 MP, 60 fps.

- Short-range wide-angle camera (100°): mainly used for cut-in prevention, rain detection, and traffic signal recognition.

Performance Parameters: 2 MP, 60 fps.

b. Front monocular redundant perception camera (front windshield): pixel is 2 MP and frame rate is 69 fps.

Purpose: serves as a redundant perception sensor in case the front three-camera setup malfunctions.
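Why a narrow 28° lens is used for long-range detection can be shown with a pixel-density estimate. This Python sketch spreads the 28° camera's 1828 horizontal pixels evenly across its field of view and estimates how many pixels a car covers at 150 m. The 1.8 m car width is an assumption, lens distortion is ignored, and the 100° case is hypothetical (the same sensor behind a wide lens), since the wide-angle camera's resolution is not given.

```python
import math

def pixels_per_degree(h_pixels: int, h_fov_deg: float) -> float:
    """Average horizontal pixel density, ignoring lens distortion."""
    return h_pixels / h_fov_deg

def pixels_on_target(width_m: float, distance_m: float, ppd: float) -> float:
    """Approximate number of horizontal pixels an object of width_m covers at distance_m."""
    subtended_deg = math.degrees(2 * math.atan(width_m / (2 * distance_m)))
    return subtended_deg * ppd

CAR_WIDTH_M = 1.8  # assumed typical passenger-car width
H_PIXELS = 1828    # horizontal resolution of the 28° camera (1828 * 948)

for fov_deg in (28, 100):  # 100°: hypothetical, same sensor behind a wide-angle lens
    ppd = pixels_per_degree(H_PIXELS, fov_deg)
    px = pixels_on_target(CAR_WIDTH_M, 150, ppd)
    print(f"{fov_deg}° FOV: {ppd:.1f} px/deg, car at 150 m spans about {px:.0f} px")
```

This gives roughly 65 px/deg and about 45 pixels across the car for the 28° lens, versus about 18 px/deg and 13 pixels for the hypothetical 100° lens, which is why the widest camera is assigned close-range tasks such as cut-in prevention.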

3) Xpilot 3.5 – Perception System

Perception System Configuration: Perception sensor + High-precision map (Gaode) + High-precision positioning.

Perception Sensor: 2 Livox-HAP Lidars + 8 ADS cameras + 4 panoramic cameras + 5 millimeter-wave radars + 12 ultrasonic radars + 1 DMS camera.

Key Sensor Introduction:

a. Livox-HAP Lidar Performance Parameters:

- Type: Prism-scanning hybrid solid-state lidar

- Detection distance: 150 m @ 10% reflectivity

- FOV (H&V): 120° * 30°

- Angle resolution (H&V): 0.16° * 0.2°

- Laser wavelength: 905 nm
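The Falcon and Livox HAP specifications can be compared more directly by deriving two quantities from them. This Python sketch computes (a) the nominal number of grid points implied by dividing each field of view by its angular resolution, and (b) the gap between neighboring points at a given distance, using the small-angle approximation. Both are idealized assumptions: they treat the scan as a uniform grid, while real scan patterns, frame rates, and point rates are not given in the specifications above, so the results are illustrations, not measured figures.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class LidarSpec:
    name: str
    h_fov_deg: float
    v_fov_deg: float
    h_res_deg: float
    v_res_deg: float
    range_m: float  # quoted detection range at 10% reflectivity

def nominal_grid_points(spec: LidarSpec) -> int:
    """Grid cells implied by FOV / angular resolution (idealized uniform grid)."""
    columns = round(spec.h_fov_deg / spec.h_res_deg)
    rows = round(spec.v_fov_deg / spec.v_res_deg)
    return columns * rows

def point_spacing_m(res_deg: float, distance_m: float) -> float:
    """Distance between adjacent points at distance_m (small-angle approximation)."""
    return distance_m * math.radians(res_deg)

FALCON = LidarSpec("Falcon (NIO NAD)", 120, 30, 0.06, 0.06, 250)
HAP = LidarSpec("Livox HAP (Xpilot 3.5)", 120, 30, 0.16, 0.2, 150)

for spec in (FALCON, HAP):
    print(f"{spec.name}: {nominal_grid_points(spec):,} grid points; "
          f"horizontal spacing {point_spacing_m(spec.h_res_deg, spec.range_m):.2f} m "
          f"at {spec.range_m:.0f} m, {point_spacing_m(spec.h_res_deg, 150):.2f} m at 150 m")
```

Under these assumptions the Falcon's grid is about 1,000,000 cells versus 112,500 for the HAP, and at a common 150 m the Falcon's horizontal point spacing is about 0.16 m versus about 0.42 m for the HAP.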

b. 8 ADS cameras: 3 front-view (front windshield, 2 MP), 2 front-side view (rearview mirror base), 2 rear-side view (fender), and 1 rear-view (above the license plate).

Ideal – Autonomous driving system perception solution

Note: √ indicates that this configuration is included, N/A means it is not applicable, — means unknown, (E) means inferred.

Perception system comparison of the 2021 and 2020 Ideal ONE models:

a. Improved front camera: the 2020 Ideal ONE's single front camera had 1.3 megapixels and a 52° horizontal field of view, while the 2021 model's single front camera has 8 megapixels and a 120° horizontal field of view.

b. Increased Number of Millimeter-wave Radars: Four corner radars have been added.

1) 2020 Ideal One – Perception System

Sensor Configuration: ADS camera * 1 + Surrounding camera * 4 + Millimeter-wave radar * 1 + Ultrasonic radar * 12

Note: The above figure shows 3 cameras at the front windshield. a. Of the 2 upper cameras, only ② is the ADS camera, used for driving assistance; b. the other upper camera, ①, is a data acquisition camera dedicated to collecting road information and driving scene data for later algorithm training and optimization; c. the lower camera, ③, is a dashcam.

Key Sensor Introduction:

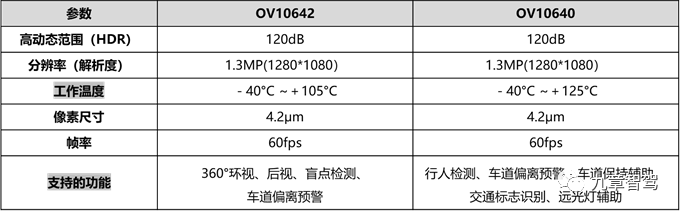

Front monocular ADS camera (OmniVision – OV10642) and surround-view cameras (OmniVision – OV10640)

2) 2021 Ideal One – Perception System

Sensor Configuration: ADS camera * 1 + Surrounding camera * 4 + Millimeter-wave radar * 5 + Ultrasonic radar * 12

Key Sensor Introduction:

a. Front single camera: 8 MP, 120° horizontal field of view, 200 m detection distance

b. Millimeter-wave Corner Radar: Bosch fifth-generation radar, horizontal viewing angle of 120°, detection distance of 110 m

3) Ideal X01 – Perception System

Perception System Configuration: LiDAR * 1 + ADS camera * 8 (E) + Surrounding camera * 4 + Millimeter-wave radar * 5 (E) + Ultrasonic radar * 12 + DMS camera * 1

Autonomous Driving System – Computing Platform

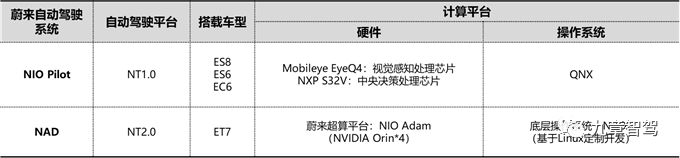

Table 2: Comparison of Autonomous Driving System Computing Platforms among the Three New Energy Vehicle Startups

NIO – Autonomous Driving System Computing Platform

1) NIO Pilot Autonomous Driving Computing Platform

Hardware: Mobileye-EyeQ4 chip (perception processing operation) + NXP – S32V chip (decision processing operation)

a. EyeQ4 performance parameters: computing power – 2.5 TOPS, power consumption – 3 W, response time – 20 ms, process technology – 28 nm, chip type – ASIC

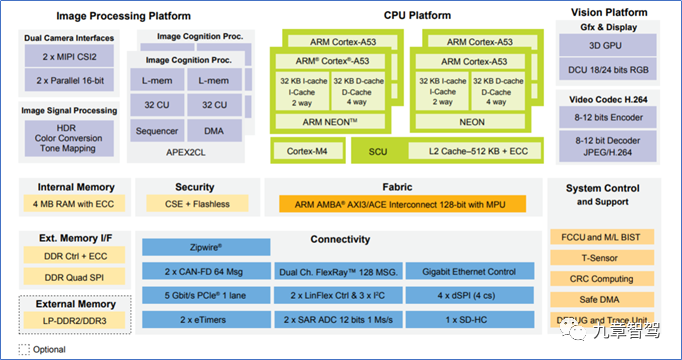

b. S32V performance parameters: computing power – 9200 DMIPS; processor – 4-core 64-bit Arm® Cortex®-A53 at 1 GHz + 4-core 32-bit Arm® Cortex®-M4 at 133 MHz

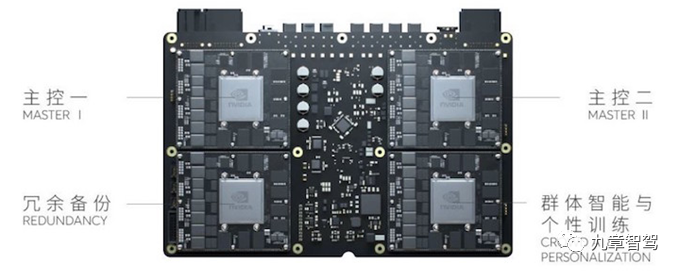

2) NAD Autonomous Driving Computing Platform

NIO Adam supercomputing platform (composed of 4 NVIDIA Orin chips) performance:

- 4 * high-performance dedicated chips: 2 main control chips + 1 redundant backup chip + 1 chip dedicated to collective intelligence and personalized training

  - 2 main control chips: run the full NAD algorithm stack, including cross-checked perception from multiple approaches, multi-source high-precision positioning, and multi-modal prediction and decision-making; ample computing power lets NAD handle complex traffic scenarios;

  - 1 redundant backup chip: keeps NAD safe if either main chip fails;

  - 1 chip dedicated to collective intelligence and personalized training: accelerates NAD's evolution and provides personalized local training for each user's driving environment, improving each user's autonomous driving experience.

- Super image processing pipeline: ultra-high-bandwidth image interface, with an ISP capable of processing 6.4 billion pixels per second

- Super Backbone Data Network: Real-time and lossless distribution of signals from all sensors and vehicle systems to each computing core
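NIO quotes the ISP throughput but not the camera frame rates. A rough Python check of what 6.4 billion pixels per second allows, assuming the load is split evenly across cameras and each camera delivers a nominal 8,000,000 pixels per frame (real 8 MP sensors are slightly larger). The camera counts come from the ET7 note (11 HD cameras) and the NAD sensor list (7 ADS cameras); the even split is an assumption, not a description of how Adam schedules its ISP.

```python
ISP_PIXELS_PER_S = 6.4e9       # quoted Adam ISP throughput
PIXELS_PER_FRAME = 8_000_000   # nominal "8 MP"; an assumption, real sensors differ slightly

def max_fps_per_camera(num_cameras: int,
                       pixels_per_frame: int = PIXELS_PER_FRAME,
                       isp_rate: float = ISP_PIXELS_PER_S) -> float:
    """Highest frame rate each camera could sustain if ISP throughput were shared evenly."""
    return isp_rate / (num_cameras * pixels_per_frame)

for n, label in ((11, "11 HD cameras (ET7 note)"), (7, "7 ADS cameras only")):
    print(f"{label}: about {max_fps_per_camera(n):.0f} fps each")
```

Under these assumptions the quoted rate corresponds to about 73 fps per camera across 11 cameras, or about 114 fps across the 7 ADS cameras alone; the order of magnitude is the useful takeaway.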

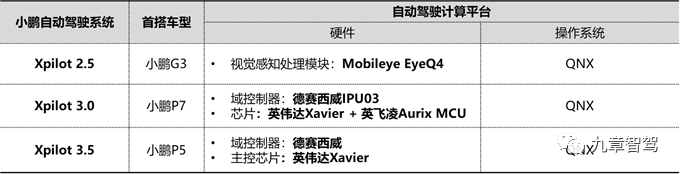

Xpeng – Autonomous Driving System Computing Platform

1) XPilot 2.5 Autonomous Driving Computing Platform

Hardware: Mobileye EyeQ4 module

EyeQ4 chip: Computing power – 2.5 TOPS, Power consumption – 3 W, Response time – 20 ms, Process technology – 28 nm, Chip type – ASIC

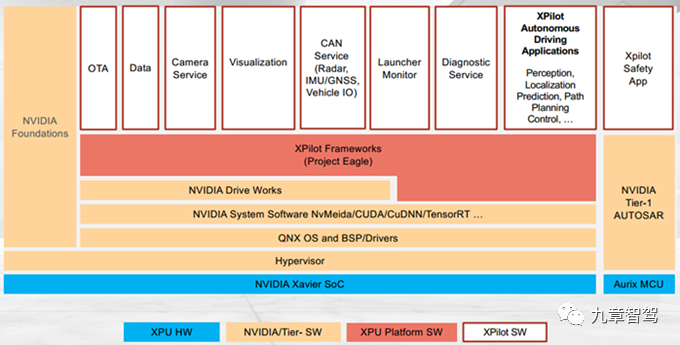

2) Xpilot 3.0 Autonomous Driving Computing Platform

Xpilot3.0 Computing Platform: NVIDIA Xavier SoC + Infineon Aurix MCU

a. NVIDIA Xavier SoC: Computing power – 30 TOPS, Power consumption – 30 W, Safety Level – ASIL D, Video Processor – 8K HDR, CPU – Custom 8-core ARM architecture, GPU – 512-core Volta GPU

b. Infineon Aurix MCU: (RISC processor core, microcontroller and DSP integrated into one MCU)

Performance – 1.7 DMIPS/MHz, Clock frequency – 300 MHz per core, Process – 40 nm, Safety level – ASIL D
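The EyeQ4 and Xavier figures can be put side by side with a little arithmetic. This Python sketch derives TOPS per watt from the quoted computing power and power consumption, and converts the Aurix per-MHz rating into DMIPS for one core. These are vendor peak numbers, and TOPS from different architectures are not strictly comparable (precision and utilization differ), so the ratios are indicative only.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Chip:
    name: str
    peak_tops: float  # vendor-quoted computing power, as listed above
    watts: float      # vendor-quoted power consumption

def tops_per_watt(chip: Chip) -> float:
    return chip.peak_tops / chip.watts

def core_dmips(dmips_per_mhz: float, clock_mhz: float) -> float:
    """Dhrystone rating of one core: per-MHz score multiplied by clock frequency."""
    return dmips_per_mhz * clock_mhz

EYEQ4 = Chip("Mobileye EyeQ4", 2.5, 3.0)
XAVIER = Chip("NVIDIA Xavier", 30.0, 30.0)

for chip in (EYEQ4, XAVIER):
    print(f"{chip.name}: {tops_per_watt(chip):.2f} TOPS/W, "
          f"{chip.peak_tops / EYEQ4.peak_tops:.0f}x EyeQ4 peak compute")
print(f"Infineon Aurix, one core at 300 MHz: {core_dmips(1.7, 300):.0f} DMIPS")
```

With the figures above, the EyeQ4 works out to about 0.83 TOPS/W and Xavier to 1.00 TOPS/W, so Xavier offers 12 times the peak computing power at similar efficiency, and one Aurix core at 300 MHz comes to about 510 DMIPS.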

The Xpilot 3.0 software stack below shows that:

XPeng develops in-house both the XPU platform software (the Xpilot system architecture) and the Xpilot application-layer software, including OTA, diagnostic services, virtualization, camera services, CAN services, and autonomous driving applications (perception, localization, prediction, path planning, etc.).

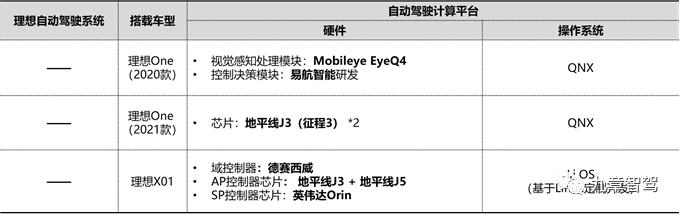

Ideal – Autonomous Driving System Computing Platform

1) Autonomous driving computing platform on the 2020 Ideal ONE:

- Image perception processing module: Mobileye EyeQ4 chip.

- Control decision module: Developed by EHang Intelligence.

2) Autonomous driving computing platform on the 2021 Ideal ONE:

Processing chips: Horizon Robotics Journey 3 * 2.

3) Autonomous driving computing platform on the Ideal X01:

Hardware:

- AD domain controller: Desay SV.

- Main control chip: SP controller chip – NVIDIA Orin-X.

Operating System: Ideal X01 will be equipped with its self-developed real-time operating system Li OS.

Li OS is customized from the Linux kernel and includes: a. core components such as the kernel, file system, I/O system, and bootloader; b. middleware for communicating with the application layer. The application layer is developed together with strategic suppliers.

Automatic driving system – Function implementation

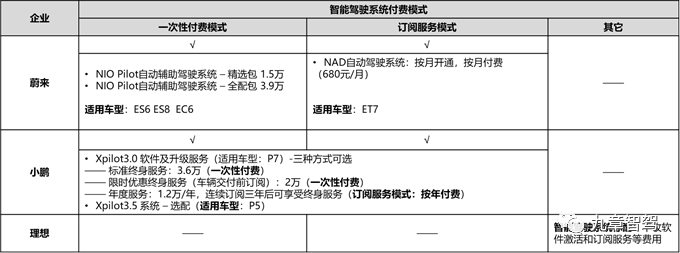

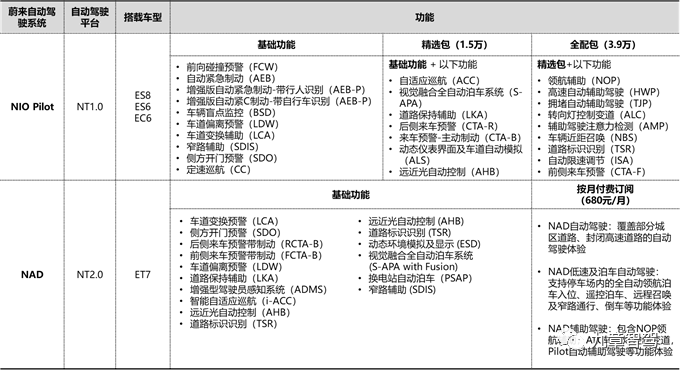

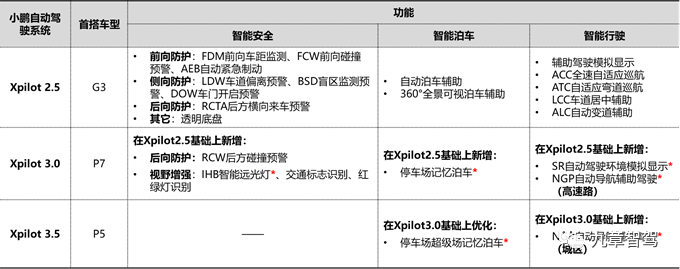

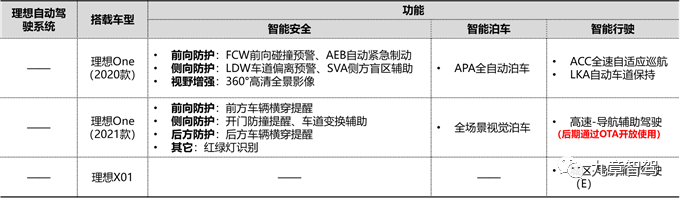

Table 3: Comparison of the intelligent driving system payment models among three new forces in car-making

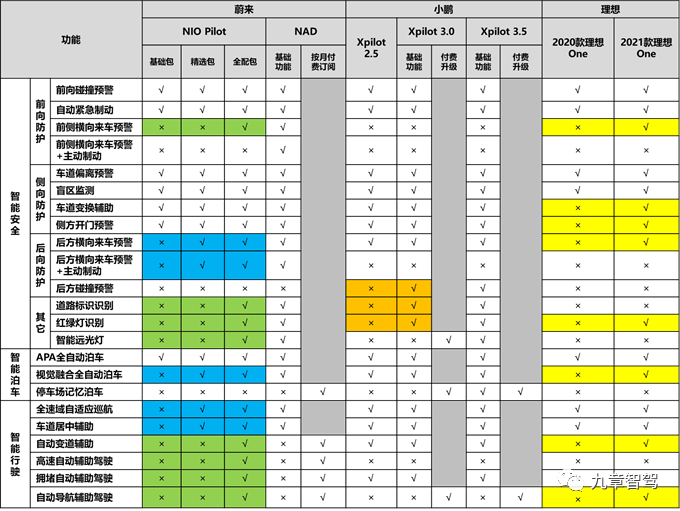

Table 4: Comparison of the functional implementation of the automatic driving systems among three new forces in car-making

Note: √ indicates that this configuration is available; × indicates that this configuration is not available.

NIO – Function implementation of automatic driving system

1) The basic functions of NIO Pilot are free; the selected package and the full package are one-time purchases.

2) NAD uses a monthly subscription model: beyond some basic functions, the complete NAD feature set requires a “monthly subscription, monthly payment” service subscription, known as ADaaS (AD as a Service).

XPeng – Implementation of Autonomous Driving System Features

Note: Features with “*” are optional equipment and can be obtained through OTA upgrades after subscription and payment.

1) Xpilot 3.0 vs. Xpilot 2.5:

Xpilot 3.0 has strong software upgrade capability and can add the following through OTA upgrades: Intelligent High Beam, Memory Parking, NGP Automatic Navigation-Assisted Driving (Highway), and SR Automatic Driving Environment Simulation Display.

2) Xpilot 3.5 vs. Xpilot 3.0:

- Improved stability: Better able to handle challenging scenarios such as night-time driving, backlighting, weak lighting, and alternating light and dark in tunnels;

- New City NGP – Automatic Navigation-Assisted Driving feature

- Improved memory parking experience: better handling of complex parking-lot scenarios such as vehicles entering and leaving, oncoming traffic, pedestrians, and consecutive right-angle turns

Ideal – Implementation of Autonomous Driving System Features

1) 2020 Ideal One: Mainly realizes level 2 driving assistance functions with shortcomings in L2+ advanced driving assistance functions;

a. Because the vehicle lacks long-range sensors that monitor the rear, such as rear millimeter-wave radars or side/rear ADS cameras, its rear protection during high-speed driving is weak, which makes rear collision warning, lane change assist, and automatic lane change difficult to achieve;

b. The sensing ability of the forward sensors has certain limitations;

In 2020, there were multiple accidents in which an Ideal ONE rear-ended a large truck. They mainly occurred in two situations: first, with assisted driving active, the ADAS failed to identify a vehicle ahead that had intruded 1/3 to 1/2 of the way into the lane; second, the Ideal ONE's driver assistance sensors recognized vehicles to the front side poorly, especially in low light.

Besides the software algorithms, therefore, the limited forward perception of the sensors was another significant cause. The 2020 Ideal ONE's front camera is monocular with a 52° field of view, and there are no corner radars on either side of the vehicle, so its perception is poor in weak light and vehicles cutting in quickly from the side are recognized late.

Upgrading the autonomous driving hardware of the 2021 Ideal ONE was therefore a natural next step.

2) 2021 Ideal ONE: The front camera is an 8-megapixel HD camera with a 120° horizontal field of view, and 4 corner radars were added to improve perception of medium- and long-range targets to the rear and front sides. Compared with the previous Ideal ONE, it adds functions such as traffic light recognition, front and rear cross-traffic warnings, and door-opening collision warnings.
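The cut-in problem described for the 2020 model is partly geometric. This Python sketch estimates how far ahead of the camera the center of the adjacent lane enters the horizontal field of view, for the 52° and 120° front cameras. It assumes a 3.75 m lane width (the standard Chinese expressway lane width), treats the camera as a point on the centerline of a vehicle driving in the middle of its lane, and ignores lens distortion and mounting offsets.

```python
import math

LANE_WIDTH_M = 3.75  # standard Chinese expressway lane width

def min_visible_distance_m(lateral_offset_m: float, h_fov_deg: float) -> float:
    """Distance ahead of the camera at which a point offset sideways by
    lateral_offset_m enters the horizontal field of view (pinhole model)."""
    return lateral_offset_m / math.tan(math.radians(h_fov_deg / 2))

for label, fov_deg in (("2020 Ideal ONE, 52°", 52), ("2021 Ideal ONE, 120°", 120)):
    d = min_visible_distance_m(LANE_WIDTH_M, fov_deg)
    print(f"{label}: adjacent-lane center visible from about {d:.1f} m ahead")
```

Under these assumptions, a vehicle centered in the next lane only enters the 52° camera's view about 7.7 m ahead, versus about 2.2 m for the 120° camera, which is consistent with the late recognition of side cut-ins described above.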

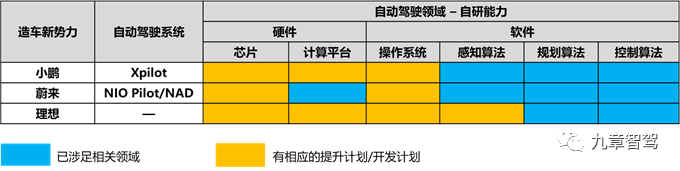

Conclusion

- Judging from their autonomous driving plans and in-house R&D, the three new carmakers' strategies are broadly aligned: all aim to build full-stack, in-house autonomous driving capability. Because the hardware of future models is likely to converge, software capability, or the ability to integrate software and hardware, will become each company's core barrier.

- For future advanced autonomous driving perception, all three will follow a “strong perception” route with redundancy across multiple sensors such as lidar, millimeter-wave radar, and cameras. Beyond software-hardware integration, however, data is also crucial to the evolution of autonomous driving. Only by continuously accumulating local user data from complex Chinese road conditions and continuously optimizing algorithms can they create a differentiated autonomous driving experience. Chinese companies understand Chinese road scenes and driving habits, so they have an opportunity to iterate toward a better experience in Chinese scenarios, for example with features tailored to Chinese roads such as merge warnings, night-time overtaking alerts, and heavy-truck avoidance.

- For computing platforms, NIO, XPeng, and Ideal all initially chose Mobileye's relatively closed solution, which made it difficult to access and use the underlying visual data. They therefore later turned to the more open NVIDIA. In the future, leading companies will adopt an R&D model of in-house AI chips plus in-house (or co-developed) autonomous driving domain controllers; combined with in-house software algorithms, this model can get the best performance out of the computing platform.

- For mass-produced models, function rollout focuses on specific user scenarios; starting from those scenarios, the companies are gradually working toward point-to-point autonomous driving in three major scenarios (parking, highways, and urban areas).

- Autonomous driving technology is still iterating rapidly. OTA enables fast software iteration, continuously adding new features to, or optimizing existing features on, vehicles already delivered to users. However, new OTA features depend heavily on hardware: if the hardware does not support a feature, software alone cannot deliver it. Yet it is rarely possible to fit every piece of hardware that future features will need on a mass-produced model from the start, so OEMs can only do their best to “strive for forward and backward compatibility as much as possible”.
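The hardware dependence of OTA features can be illustrated as a simple capability check: a feature is only pushed over the air if every sensor it needs is already fitted. This Python sketch uses the sensor counts given earlier for the 2020 and 2021 Ideal ONE (treating the 2020 model's single radar as the front radar and the 2021 model's other four as corner radars). The requirement table FEATURE_REQUIREMENTS and the function names are hypothetical, chosen only to reflect the point that the 2020 model's lack of corner radars limits lane-change functions; no OEM's actual OTA gating logic is described here.

```python
from collections import Counter

# Hypothetical requirement table: feature -> minimum number of each sensor type.
# Real OEM gating logic is not public; these entries only illustrate the idea.
FEATURE_REQUIREMENTS = {
    "lane_keeping": Counter(front_camera=1),
    "auto_lane_change": Counter(front_camera=1, corner_radar=2),
    "door_open_warning": Counter(corner_radar=2),
}

# Sensor counts from the Ideal ONE configurations described above.
IDEAL_ONE_2020 = Counter(front_camera=1, surround_camera=4, front_radar=1, ultrasonic=12)
IDEAL_ONE_2021 = Counter(front_camera=1, surround_camera=4, front_radar=1,
                         corner_radar=4, ultrasonic=12)

def missing_hardware(installed: Counter, feature: str) -> Counter:
    """Sensors (and counts) still needed before the feature could be enabled."""
    # Counter subtraction drops zero and negative counts, leaving only shortfalls.
    return FEATURE_REQUIREMENTS[feature] - installed

def ota_eligible(installed: Counter, feature: str) -> bool:
    """True only if every required sensor is already fitted; software cannot add hardware."""
    return not missing_hardware(installed, feature)

for year, car in (("2020", IDEAL_ONE_2020), ("2021", IDEAL_ONE_2021)):
    print(year, ota_eligible(car, "auto_lane_change"),
          dict(missing_hardware(car, "auto_lane_change")))
```

Returning the shortfall rather than just a boolean makes the "software alone cannot implement it" case explicit: for the 2020 configuration the check reports two missing corner radars, which no OTA update can supply.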

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.