Review of the Whole Process of Tesla FSD Beta Release and the Controversy Surrounding the Use of “High-Precision Maps”

Throughout the rollout of Tesla’s FSD Beta, the most controversial question has been whether Tesla actually uses “high-precision maps”. Some people marvel at its ability to produce output that closely approximates a map without having an actual map. Others suspect that Tesla secretly preloads map data on the vehicle.

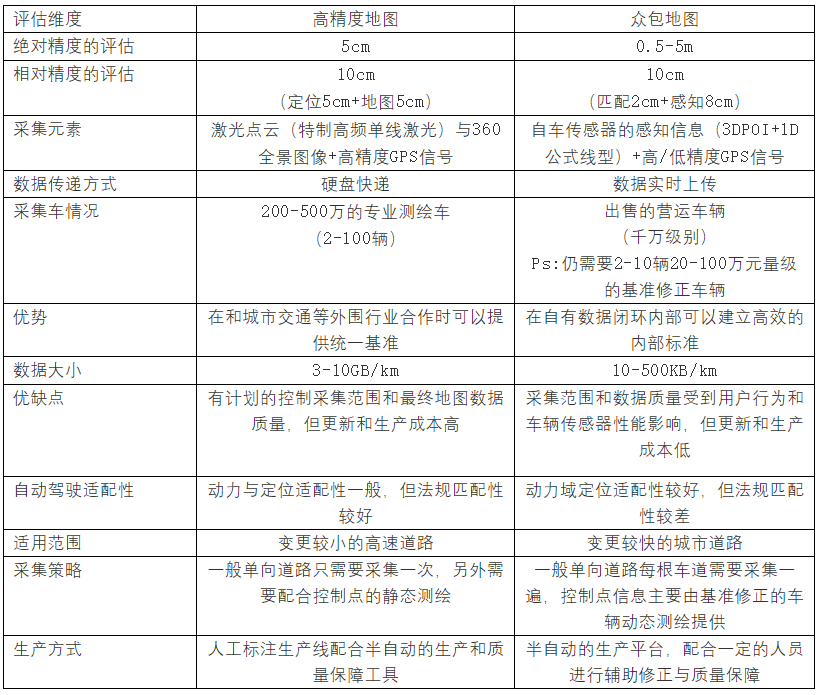

To answer this question, we first need to understand a concept: there is a difference between high-precision maps and crowdsourced maps.

The production process of high-precision maps is simple in theory. Professional survey vehicles first drive the roads to collect raw images and laser data, along with some static control point information. The collected data is physically transferred to the cloud and then enters the production stage. The raw GPS data and point cloud data are first post-processed and aligned to obtain a more accurate point cloud. Then the formal map production pipeline begins.

Using a semi-automatic tool chain and production platform, annotators draw vector features on the point clouds and original images. After the system completes the subsequent compilation tasks, the map enters quality inspection. If it passes, it is stored in the map database, and the production of a high-precision map is complete.

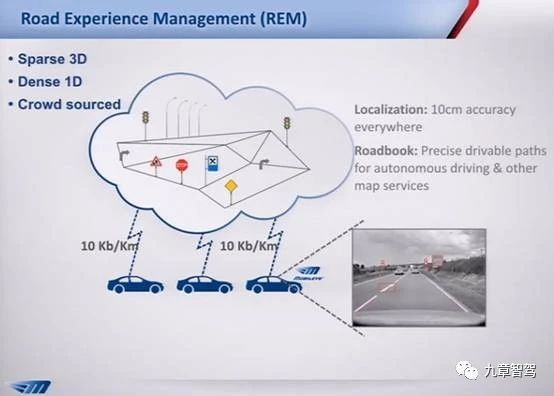

The production process of crowdsourced maps is also simple in theory. First, production vehicles compress their local perception reconstruction results on the vehicle side. The data is then uploaded to the cloud over 4G/5G and enters the production stage.

In addition, a small number of baseline correction vehicles collect dynamic control points and synchronize them to the cloud. Global SLAM is used to cluster and align the vector features on a large scale. Local errors and quality issues are corrected manually, the result is stored in the map database, and the production of a crowdsourced map is complete.
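
The alignment step can be illustrated with a toy example. The sketch below is not Tesla’s pipeline and is far simpler than global SLAM: it only assumes that one vehicle’s uploaded 2D feature points have known correspondences with a few control points, and it solves for the least-squares rotation and translation that registers them. The function names and the sample coordinates are hypothetical.

```python
import math

def rigid_align_2d(source, target):
    """Least-squares rotation + translation that maps source points onto target points.

    Assumes source[i] corresponds to target[i] (a simplification for illustration).
    """
    n = len(source)
    sx = sum(p[0] for p in source) / n
    sy = sum(p[1] for p in source) / n
    tx = sum(p[0] for p in target) / n
    ty = sum(p[1] for p in target) / n
    dot = cross = 0.0
    for (ax, ay), (bx, by) in zip(source, target):
        ax, ay, bx, by = ax - sx, ay - sy, bx - tx, by - ty
        dot += ax * bx + ay * by      # accumulates |a||b| cos(angle)
        cross += ax * by - ay * bx    # accumulates |a||b| sin(angle)
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Shift so that the rotated source centroid lands on the target centroid.
    shift = (tx - (c * sx - s * sy), ty - (s * sx + c * sy))
    return theta, shift

def apply_transform(points, theta, shift):
    """Rotate points by theta (radians) and then translate them by shift."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + shift[0], s * x + c * y + shift[1]) for x, y in points]

# Hypothetical control points and a simulated, drifted vehicle upload of the same features.
control_points = [(0.0, 0.0), (10.0, 0.0), (10.0, 5.0)]
vehicle_trace = apply_transform(control_points, -0.05, (1.5, -0.8))

theta, shift = rigid_align_2d(vehicle_trace, control_points)
aligned = apply_transform(vehicle_trace, theta, shift)
```

In a real crowdsourced pipeline the correspondences are unknown and many traces must be optimized jointly, which is where clustering and global SLAM come in.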

As shown in the table below, we evaluate the differences between the two mapping methods from different dimensions. It can be seen that there are pros and cons to both methods.

Taken literally, it is true that Tesla does not use “high-precision maps”; instead, it uses its huge vehicle fleet to build wide-coverage crowdsourced maps. This is why the view that Tesla has spent considerable resources building its own map data circulates on the internet. The real question is: does the vehicle actually use this data “directly”?

Speculation about crowdsourced maps does not settle the Tesla map mystery. A crowdsourced map is still a pre-installed map, which is significantly different from Tesla’s claim that it does not use maps.

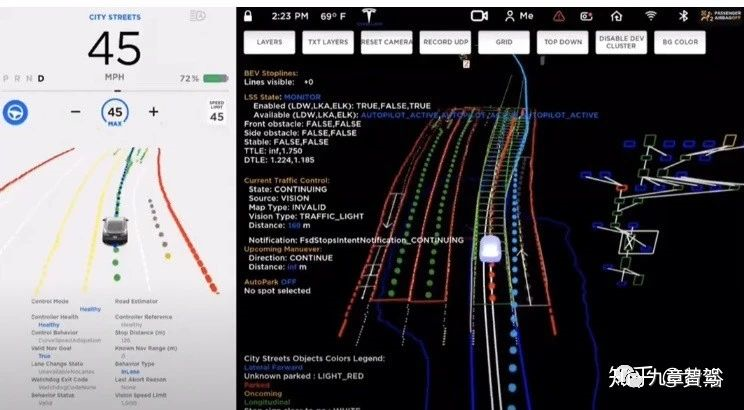

Analysis of various Tesla FSD videos confirms two things. First, Tesla’s planning still leans clearly toward rule-based algorithms, so its “map” input must also be structured data. Second, the points rendered in FSD videos visibly jitter from frame to frame, which is inconsistent with map data, since pre-built map geometry is static.

Therefore, Tesla’s map is indeed not based entirely on a pre-installed map. Online suspicion of pre-installed maps mostly stems from objects that appear on the FSD interface even though the cameras cannot see them, which is deemed impossible without a map. However, deep learning now offers techniques to “suppose”, that is, to infer, content that cannot be seen.

Mobileye’s (ME) HPP appears to be an early practice of this approach: it can guess a drivable path without any explicit lane markings. Tesla also worked closely with ME in the early days of Autopilot, so the connection is logically consistent.

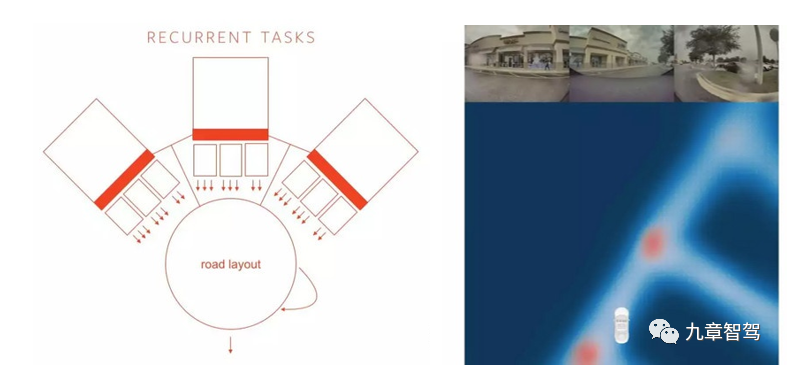

Tesla’s technology exchange conference also revealed some technical clues. For example, a compressed representation of multiple road images is fed into a recurrent neural network (RNN), and the resulting road layout prediction already “supposes” the invisible parts at a coarse-grained level, which shows the potential of this technology.

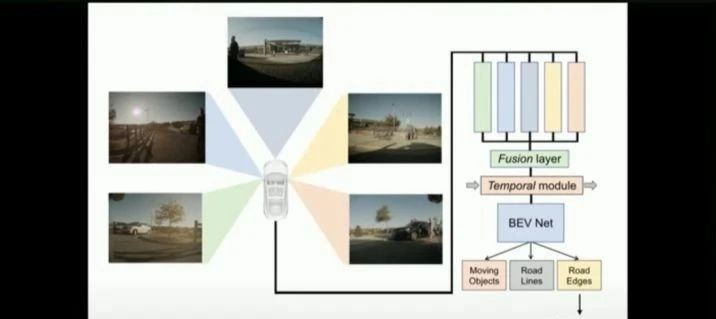

This FSD release has significantly improved accuracy, and its output is almost as good as a map. Few analyses of this aspect are available online, so a detailed technical analysis follows. First, from the materials Tesla has released, it can be preliminarily judged that a bird’s-eye-view (BEV) network is employed.

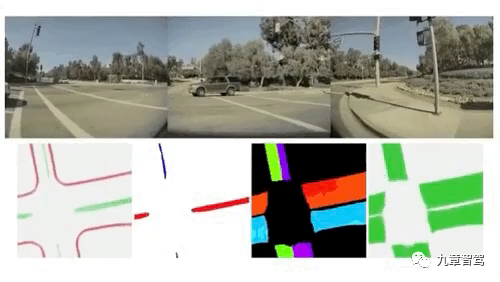

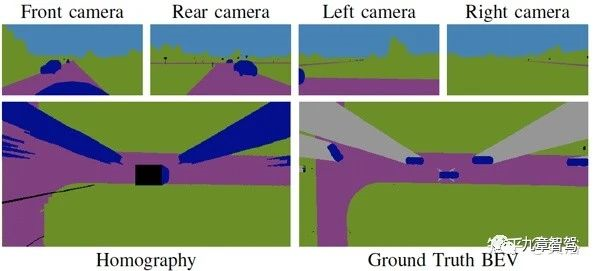

The principle behind this can be found in the paper “A Sim2Real DL Approach for the Transformation of Images from Multiple Vehicle-Mounted Cameras to a Semantically Segmented Image in BEV” (arXiv 2005.04078). The paper applies a homography transformation to four semantically segmented images from vehicle-mounted cameras to convert them to BEV.

Using a homography directly for the perspective transformation can cause large errors, because it assumes a flat road surface. The method in the paper instead learns to produce accurate BEV images without visual distortion, and it is trained on data from simulation software to avoid a huge annotation effort.
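
To see why the flat-road assumption matters, here is a minimal sketch of the image-to-ground mapping that a ground-plane homography encodes. It assumes an ideal pinhole camera with a horizontal optical axis, and all intrinsics, the camera height, and the sample points are made-up illustrative values, not Tesla parameters. The example projects a point that sits on a road raised by 0.5 m and then maps it back under the flat-road assumption.

```python
def project(lateral, down, forward, f, cx, cy):
    """Pinhole projection of a camera-frame point (x right, y down, z forward) to pixels."""
    return cx + f * lateral / forward, cy + f * down / forward

def pixel_to_ground(u, v, f, cx, cy, cam_height):
    """Map a pixel to ground coordinates, assuming the road is a flat plane
    cam_height metres below a camera whose optical axis is horizontal."""
    dv = v - cy
    if dv <= 0:
        raise ValueError("pixel is at or above the horizon; it never hits the ground plane")
    forward = cam_height * f / dv
    lateral = forward * (u - cx) / f
    return lateral, forward

# Hypothetical camera: focal length 1000 px, principal point (640, 360), mounted 1.5 m high.
F, CX, CY, H = 1000.0, 640.0, 360.0, 1.5

# A point on a truly flat road, 2 m to the right and 20 m ahead, is recovered correctly.
u, v = project(2.0, H, 20.0, F, CX, CY)
flat_estimate = pixel_to_ground(u, v, F, CX, CY, H)

# The same position on a road surface raised by 0.5 m (only 1.0 m below the camera).
u, v = project(2.0, H - 0.5, 20.0, F, CX, CY)
raised_estimate = pixel_to_ground(u, v, F, CX, CY, H)
```

With these numbers, `flat_estimate` comes back as 2 m lateral and 20 m forward, while `raised_estimate` lands at 3 m lateral and 30 m forward: a half-metre height change turns into a ten-metre range error, which is the kind of distortion a learned BEV transformation tries to avoid.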

After obtaining semantic segmentation results from a top-down view, the next step is to obtain perception data with structured attributes.

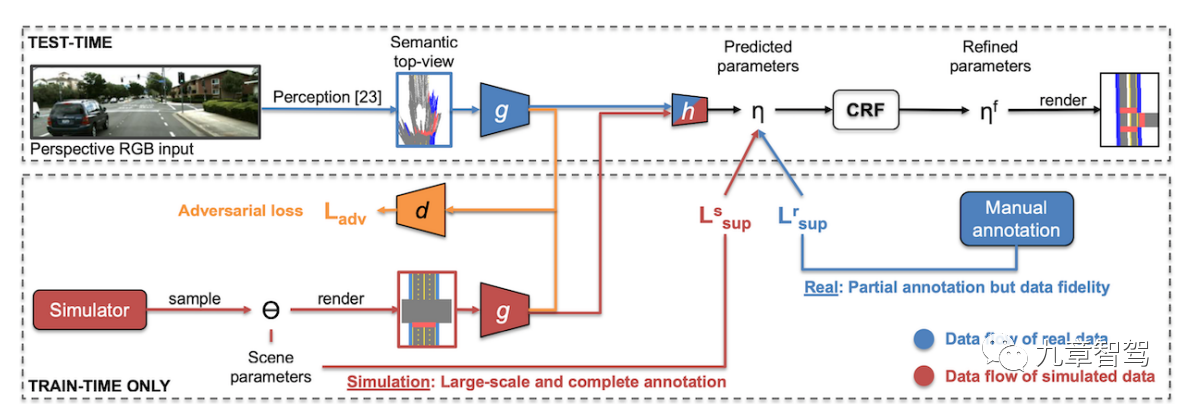

Based on the information currently available, this step may follow the method described in the paper “A Parametric Top-View Representation of Complex Road Scenes”.

With the BEV perception result as input, the crowdsourced map is imported into simulation software, where the real-environment input and the structured map output are simulated for preliminary pre-training. Then, using GAN-based domain transfer, real image data is converted to BEV and what was learned in simulation is transferred to real images to obtain a parameterized map output.
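
As a rough illustration of what a “parameterized map output” could look like, the sketch below describes a road scene with a handful of parameters and rasterizes them into a coarse top-view grid. The field names and the rendering rule are hypothetical and chosen only for illustration; they are not the parameter set from the cited paper or from Tesla.

```python
from dataclasses import dataclass

@dataclass
class RoadSceneParams:
    """Hypothetical parametric description of a road scene in the ego frame."""
    num_lanes: int
    lane_width_m: float
    has_crossing: bool
    distance_to_crossing_m: float

def render_top_view(params, depth_m=30.0, half_width_m=10.0, cell_m=1.0):
    """Rasterize the parameters into text rows: '#' is road, '.' is off-road.

    Row 0 is the strip nearest to the ego vehicle; columns run from left to right.
    """
    road_half = params.num_lanes * params.lane_width_m / 2
    rows = []
    for r in range(int(depth_m / cell_m)):
        forward = (r + 0.5) * cell_m
        # The crossing road is modelled as a band as wide as the main road.
        in_crossing = (params.has_crossing
                       and abs(forward - params.distance_to_crossing_m) <= road_half)
        row = ""
        for c in range(int(2 * half_width_m / cell_m)):
            lateral = (c + 0.5) * cell_m - half_width_m
            row += "#" if in_crossing or abs(lateral) <= road_half else "."
        rows.append(row)
    return rows

scene = RoadSceneParams(num_lanes=2, lane_width_m=3.5,
                        has_crossing=True, distance_to_crossing_m=20.0)
grid = render_top_view(scene)
```

The appeal of such a representation is that a few numbers are enough to regenerate a structured layout, which suits a rule-based planner better than a raw segmentation image.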

Judging from the latest research progress in the industry, Tesla very likely has the ability to use map data to train a perception output that approximates a map, rather than using pre-installed maps directly.

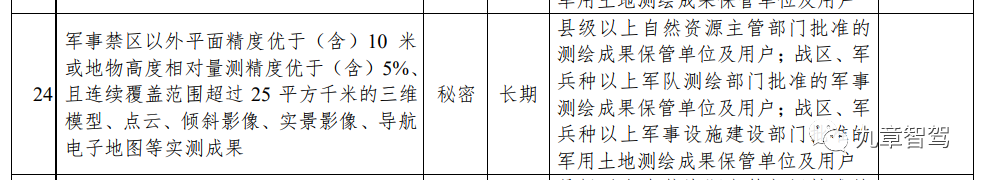

This type of output is not yet mature, but the direction is still highly significant. In China, the technology’s greatest contribution may be that it effectively avoids national security issues. The notice “Circular of the National Administration of Surveying and Mapping on the Provisions for the Scope of National Secrets of Surveying and Mapping Geographic Information Management Work” (Natural Resources DPF No. 95) clearly stipulates the relevant subjects for stationary surveying and mapping.

There are still certain policy risks in current autonomous driving technology. However, this “mapping” output method seems to avoid some of these problems because it does not involve absolute latitude and longitude. It also extends deep learning from the perception module to more downstream modules, taking one step closer to a fully differentiable autonomous driving system.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.