The WEY Mocha, Equipped with High-end Intelligent Cockpit Hardware from XENDO Intelligent

The WEY Mocha, which opened for pre-sale at this year’s Shanghai Auto Show, comes with top-of-the-line intelligent cockpit hardware. This includes a Qualcomm SA8155P cockpit SoC built on a 7nm process, 12GB of memory as standard, and a 5G communication module. The cockpit also connects to the vehicle’s key electronic control unit (ECU) systems and sensors, such as the millimeter-wave radar, infrared camera, fisheye camera, light sensors, large screen, and AR-HUD.

This is close to the highest hardware configuration currently achievable in the intelligent cockpit field. With such a solid hardware foundation, XENDO Intelligent has the opportunity to showcase its capabilities in the intelligent cockpit.

Founded in 2019, XENDO Intelligent is a smart cockpit technology company. Great Wall Motors is both its angel investor and its customer. XENDO Intelligent previously supplied some cockpit features for Great Wall’s Haval F5 and F7. The MO.Life 1.0 intelligent system in the Mocha is XENDO’s first cockpit product designed and developed from the ground up, and it showcases the company’s full-stack capabilities.

- Always Online

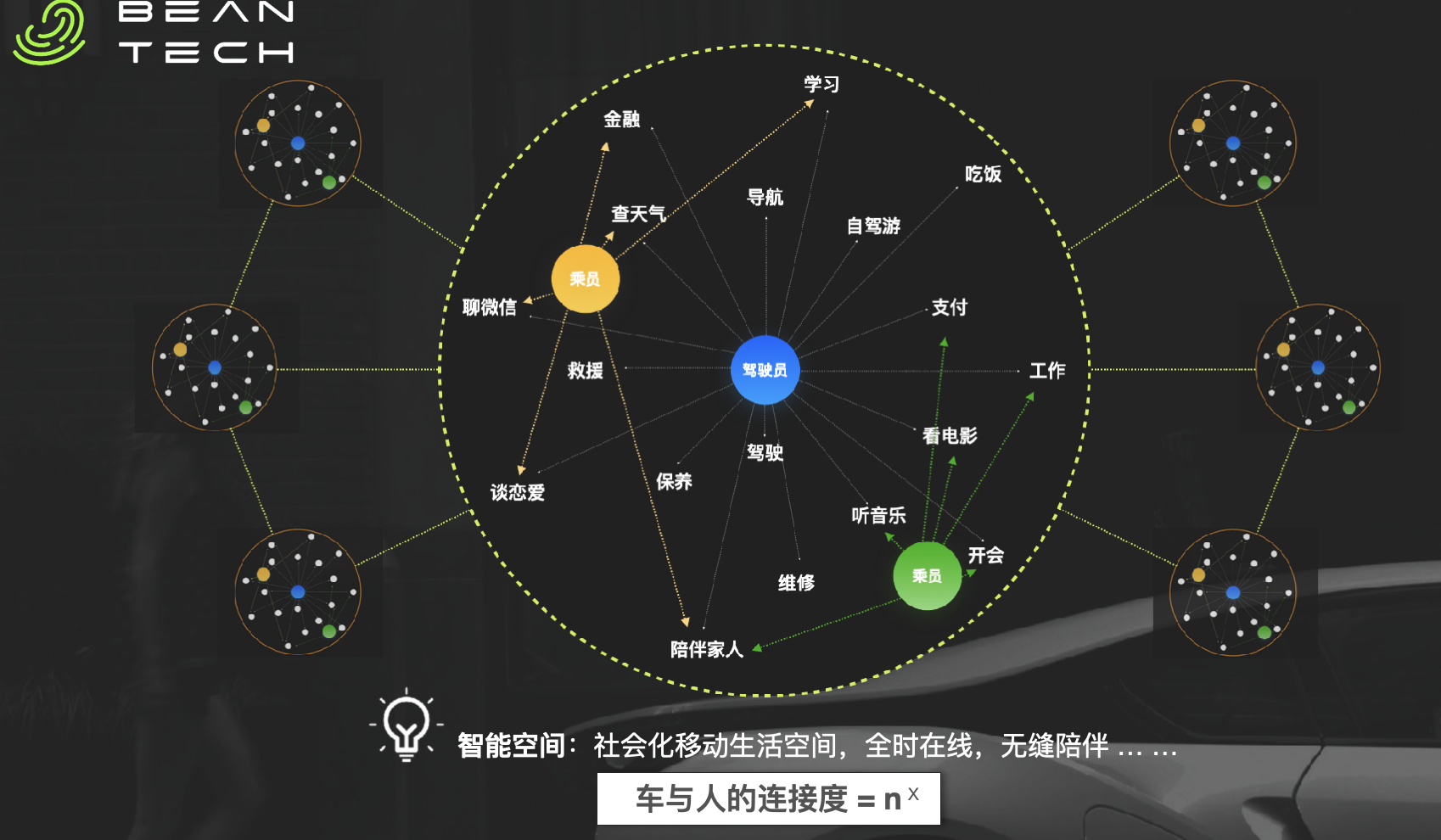

In the era of the Internet of Things, the car cockpit is the intelligent terminal with the most room for imagination. In traditional driving, the driver is temporarily “offline” from the information society. XENDO Intelligent hopes to create a secure connection between people and the information society that suits driving scenarios. This requires support for information apps such as the in-car version of WeChat and Zhihu. It also requires phone-to-car interconnection, so that what users do on their phones continues seamlessly in the car, and user-friendly voice control inside the vehicle.

Voice control is currently recognized as the most suitable human-computer interaction mode for driving. In this area, XENDO has integrated multiple microphones in the vehicle, enabling voice recognition and control for independent sound zones. By the end of this year, XENDO plans to roll out six sound zones, continuous dialogue, “what you see is what you can say” voice control, and lip-movement recognition to improve recognition accuracy.

Beyond keeping the driver “always online,” XENDO has also kept the whole vehicle connected. In the Mocha, XENDO Intelligent has connected most of the key ECU systems to the cockpit. As a result, these ECU systems can be networked, diagnosed remotely, and updated remotely, which significantly widens the scope of human-computer interaction. For example, on the WEY Mocha’s car control screen, users can precisely set how far the sunroof opens. In future products, XENDO Intelligent plans to connect every ECU system in the vehicle for even finer control.

- One ID Function + FACE ID Login

Through the car owner app, XENDO Intelligent has obtained authorization to bind the car owner ID to accounts for QQ Music, iQiyi, Maps, and other applications. When the owner gets in the car, FACE ID recognition through the cabin camera automatically logs in to the car owner ID. The car owner ID in turn logs in to the various ecosystem app accounts. Once these accounts are logged in, the phone’s ecosystem extends into the car, including music memberships and saved songs.

Individual IDs can also record each person’s driving preferences, such as seat angle, steering wheel height, and rearview mirror angle. As usage data accumulates, the system learns the owner’s preferences and offers more personalized settings for temperature, music, driving habits, and more.

The ID account belongs to the person, not the car. Even if users switch to another car running XENDO Intelligent’s system, they can still log in and retrieve their personalized preferences and ecosystem accounts. Users can also enable automatic login when entering the car and automatic logout when leaving it, which helps protect their privacy.

- Emotional interaction based on scenes

Users need different things from the cabin in different scenes. XENDO can identify the current scene from sensor data together with time, map, location, and speed information. Once a scene’s trigger conditions are met, XENDO proactively offers an appropriate, emotionally aware interaction.

For example, if a user has been working late, XENDO can recognize this and recommend food options. These recommendations are not commercially driven. They are meant to improve the user experience and meet users’ emotional and practical needs.

Another typical scenario is traffic congestion. Using map and navigation information together with facial recognition, the car can determine whether it is stuck in traffic. XENDO will then proactively check in on the owner and suggest playing music to ease the frustration of the traffic jam.

- Interaction with the car from outside the vehicle

In addition to in-car interaction, XENDO is also working on some interactions between people and the car from outside the vehicle. For example, a new feature on the Mocha works once the user is carrying the key and has unlocked the car. The user can then start the car and control its movement using preset gestures made within the camera’s field of view. XENDO will keep developing gesture control and vehicle responses while ensuring user safety.

Furthermore, the control line for the car’s external speaker has been integrated into the cockpit. As a result, different external sound prompts can be selected from inside the cabin while driving, communicating more clearly in different situations. For example, on narrow roads shared with pedestrians, the car can play a “Please be careful in front” prompt.

- Mini programs as the primary ecosystem strategy

Integrating a mini program framework is harder at this stage than connecting directly to the Android app store or adopting an Android app store solution. However, the resulting experience is much better. XENDO chose the more challenging mini program route.

XENDO Intelligent will define a mini program framework that integrates vehicle-wide status signals, control signals, and map information, so that mini programs can be tied into driving scenes. The framework will also integrate the voice assistant, enabling both voice control of mini programs and scene-based proactive emotional interaction.

This approach may be slower than building directly on an app store, and the ecosystem will take time to fill out, but the experience will be better. For example, on other smart cockpits, apps downloaded from an app store cannot be invoked by voice. Open mini programs, by contrast, can support voice control.

- The possibility of integrating HarmonyOS is not ruled out, but it is not mandatory

XENDO Intelligent stated that it has already contacted Huawei to explore possible cooperation, but HarmonyOS is currently not mandatory. Huawei’s smart cockpit offers a full range of software and hardware solutions and is also relatively open. Even without HarmonyOS, XENDO can still integrate with Huawei’s ecosystem, for example through Huawei’s HiCar solution.

However, if HarmonyOS is later open-sourced, many car companies may build in-vehicle systems on it, a low-cost arrangement that benefits both sides. Car companies can reduce development costs. Huawei, in turn, can expand its ecosystem, extend the reach of HarmonyOS, and improve the in-vehicle experience for HarmonyOS devices.

In the short term, intelligent driving remains the core product of vehicle intelligence, and both the public and car companies will pay more attention to it than to the smart cockpit. In the long run, however, intelligent driving will inevitably become homogenized. In the future, the intelligent driving capabilities of most vehicle models will approach or reach autonomous driving. At that point, the smart cockpit becomes the core product that sets the user experience of different vehicle models apart.

Developing smart cockpits requires long-term observation of, and reflection on, user needs, so early planning and building research and development capabilities are essential. We hope more smart cockpit technology companies like XENDO Intelligent will emerge to accelerate car companies’ digital transformation of the cockpit. After all, about 50% of the in-vehicle systems on the market still deliver a very poor experience.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.