Author: Tian Hui

It doesn’t matter whether the brakes are responsive or not.

What matters is who takes the blame.

This is not an official position of Tesla or Musk. It is the reality as seen from a third-party perspective.

When a female car owner climbed onto the roof of a Tesla at the Shanghai Auto Show, Tesla once again found itself at the center of public opinion. The woman was promptly dealt with by the authorities, and Tesla was just as quick to shift the blame onto her, because she had refused to have the vehicle inspected by a third-party testing agency designated by Tesla.

“We cannot compromise; this is an inevitable process in the development of a new product,” said Tao Lin, Tesla’s Vice President of External Affairs, in the company’s official response to the auto show incident.

If it weren’t for joint criticism from three major state media outlets, namely the People’s Daily, China Central Television, and Xinhua News Agency, and strong pressure from three official bodies, namely the Consumers Association, the market supervision authorities, and the supervisory commission, the auto show incident might well have ended with the words “we cannot compromise.”

After all, many incidents of brake malfunction, loss of steering control, and even spontaneous combustion have ended with Tesla refusing to compromise and consumers backing down.

Collective rights protection by Tesla owners has been a rare phenomenon in the automotive industry in recent years. Although individual owners’ demands differ, they share a common obstacle: they cannot access all the original vehicle data recorded by Tesla.

What consumers want is precisely what Tesla cannot give, and that is what drove them onto the roof of a car.

Two days after the auto show incident, the market supervision authority, which is responsible for recalls of defective vehicles, demanded that Tesla unconditionally provide all original driving data for the half hour before the reported brake failure of the protesting owner’s car.

Tesla could not shift the blame again and eventually took the blame itself.

Lack of Data Makes Rights Protection Difficult

Rights protection by consumers at auto shows is not about face, but about data.

At the end of 2020, Mrs. Wang, a Tesla owner, lost control of her Model 3 at the Chongwenmen intersection in Beijing. While driving, she felt a sudden increase in steering torque and could no longer control the steering wheel; the car rushed into a bus stop and injured a traffic volunteer (see below).

After the incident, Mr. Wang repeatedly asked Tesla for the vehicle’s driving data from the time of the incident. Tesla provided only steering wheel angle data, to show that the vehicle had been driving normally and was under the driver’s control. Mr. Wang was not satisfied and asked Tesla for steering wheel torque data as well, to determine whether there had been a steering anomaly during the incident. Tesla did not meet this further request, and most owners currently suing Tesla have likewise not obtained the data they want.

Even the traffic police, a law-enforcement agency, were unable to obtain further data from Tesla after Mrs. Wang’s accident, which hampered the accident investigation.

Tesla’s Model 3, with its advanced driver assistance capabilities, has repeatedly been praised by the tech industry and consumers for automatically taking evasive action in emergencies. From a technical perspective, however, the Model 3’s automatic emergency avoidance function is built on vision-based recognition algorithms.

If these vision algorithms can make calculation errors, such errors could cause brake failure or loss of steering control. Investigating such accidents therefore requires not only conventional accident investigation data but also all accident-related driving data from the automaker; only then can a complete conclusion be reached.

From a moral standpoint, companies that develop cars with driver assistance and autonomous driving functions have a natural obligation to provide the original vehicle data.

In other words, it is up to these companies to prove their own innocence.

However, Tesla has always kept firm control over driving data, providing anyone who asks with only basic data, never the complete original data.

A female Tesla owner who had experienced a similar loss-of-control incident once voiced her concerns on Weibo (see figure) and summarized Tesla’s handling of data requests as two standard tactics. First, Tesla does not provide complete data for comparison with third-party data. Second, Tesla disputes the original driving data provided by third parties.

Under this data hegemony, Tesla neither hands over its data nor cross-checks it with third parties. In such a situation, how can Tesla owners win in court?

Has the data been recorded?

Since makers of vehicles with driver assistance capabilities have a natural obligation to prove their innocence, is this technically feasible?

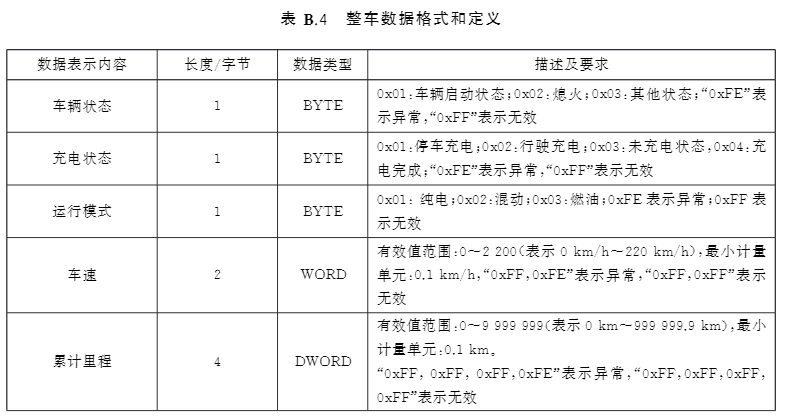

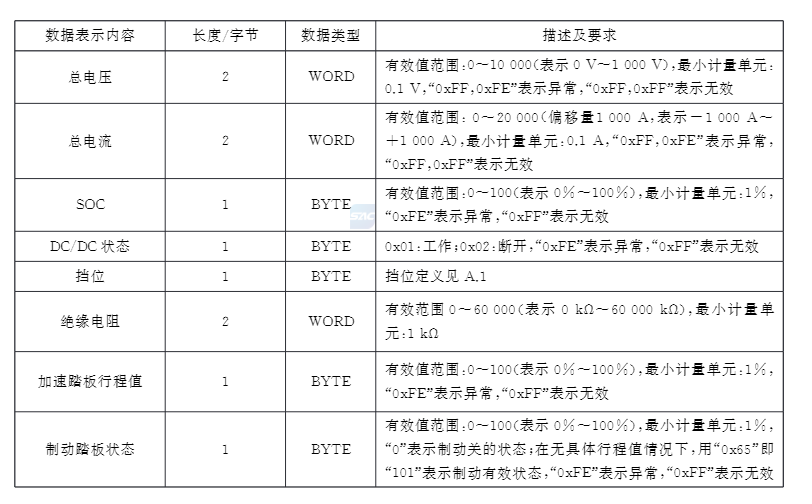

It is, and it must be. Under GB/T 32960-2016, Technical Specifications for Remote Service and Management System of Electric Vehicles, electric vehicles should collect and record data and upload it to public supervision platforms.

The national standard also sets out clearly which data electric vehicles must collect.

GB/T 32960-2016 explicitly requires electric vehicles to record key driving data such as driving mode, accelerator pedal position, and brake pedal status, at an interval of no more than 30 seconds.

However, the national standard does not cover other key data, such as steering wheel angle and torque. The data required by GB/T 32960-2016 is therefore only a minimum, and automakers should hold themselves to higher recording standards.

Tesla’s data standard is indeed higher than the national one, at least in time resolution, where it is far finer: data is recorded as often as once per second.

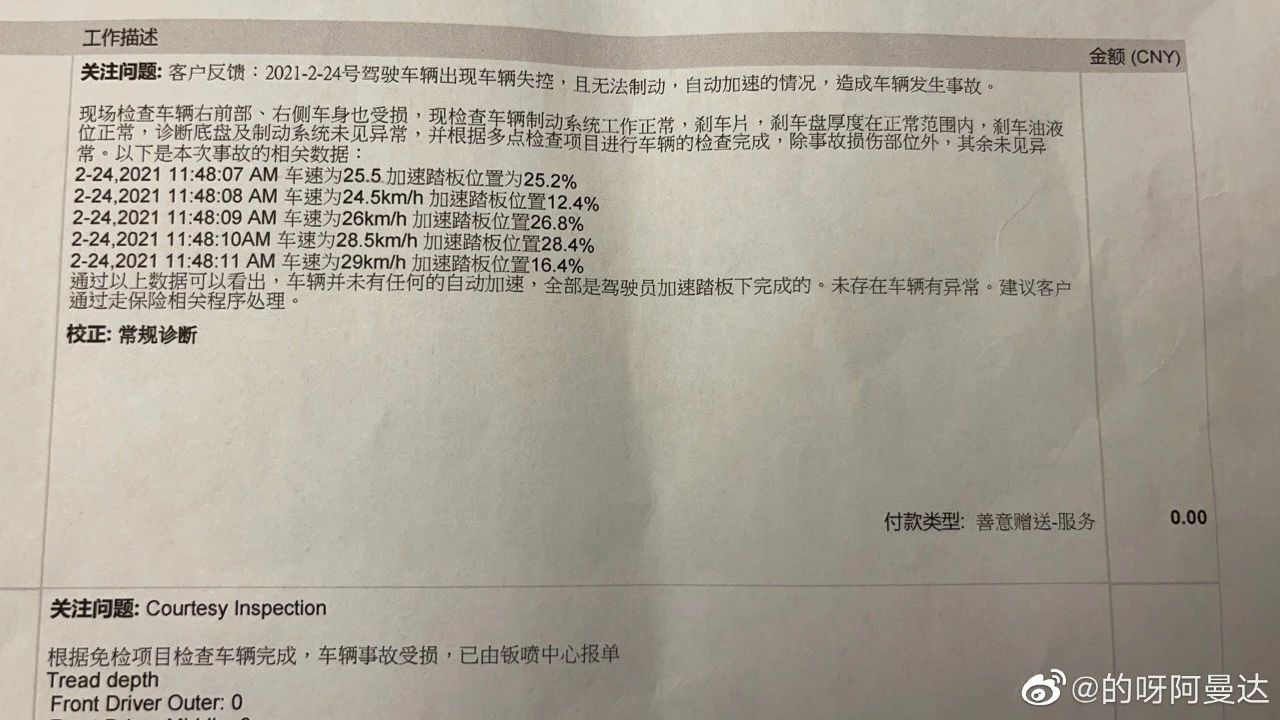

Weibo user “的呀阿曼达” posted a set of driving data provided by Tesla, which shows that Tesla records accelerator pedal position every second.
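To make the difference in time resolution concrete, here is a minimal sketch that counts how many samples a 30-minute window (the span regulators later asked Tesla for) contains at the 30-second maximum interval of GB/T 32960-2016 versus a 1-second interval, and checks whether a short event is captured at all. It is a simplified, hypothetical model: it assumes perfectly regular sampling starting at t = 0, and the 5-second brake press at t = 100 s is invented for illustration, not taken from any real case.

```python
# Hypothetical illustration: sampling interval vs. what a data log can show.
# Assumes perfectly regular sampling starting at t = 0 (a simplification).

WINDOW_S = 30 * 60  # 30-minute window before an accident, in seconds


def sample_times(window_s: int, interval_s: int) -> list[int]:
    """Timestamps (s) at which a value is recorded within the window."""
    return list(range(0, window_s, interval_s))


def samples_during(start_s: int, end_s: int, times: list[int]) -> list[int]:
    """Recorded timestamps that fall inside the event [start_s, end_s)."""
    return [t for t in times if start_s <= t < end_s]


gb_max = sample_times(WINDOW_S, 30)      # GB/T 32960-2016 upper bound: 30 s
per_second = sample_times(WINDOW_S, 1)   # roughly what the Tesla data shows

# Hypothetical 5-second brake press starting 100 s into the window
event = (100, 105)

print(len(gb_max), len(per_second))         # 60 1800
print(samples_during(*event, gb_max))       # []
print(samples_during(*event, per_second))   # [100, 101, 102, 103, 104]
```

At the 30-second bound, the hypothetical brake press leaves no trace in the log, while at one sample per second it appears in five consecutive records. This is why time resolution matters when reconstructing what the driver did in the seconds before an accident.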

After the protest at the Shanghai Auto Show, Tesla, at the request of the market supervision authorities, agreed to provide the vehicle’s driving data for the 30 minutes before the accident without conditions.

Government mandates may eventually unlock all the secrets in Tesla’s driving data recorder. But even before they do, we can at least confirm that Tesla is capable of recording, and does record, data on key operations such as acceleration and braking.

Strict Management of Assisted Driving Data

There is a paradox in autonomous driving accidents.

Who should be responsible for the traffic accident, and who can prove whether the responsibility allocation is correct?

In traffic accidents involving autonomous driving, drivers cannot effectively prove whether the car or the person caused the accident; only the automaker has the technical means to do so. Acting in their own interest, automakers will do everything they can to absolve themselves, and data hegemony is one of their tools.

When Tesla first launched its Autopilot (AP) function, it promoted it as an autonomous driving function. After multiple accidents caused by AP, however, Tesla changed AP’s Chinese name to “automatic assisted driving.”

Adding the word “assisted” gives Tesla room to maneuver under regulation.

According to the “Smart Networked Vehicle Road Test Management Specification (Trial),” issued in April 2018 by the Ministry of Industry and Information Technology, the Ministry of Public Security, and the Ministry of Transport, smart networked vehicles must record a prescribed set of data.

Because these regulations mainly target autonomous vehicles on urban roads, Tesla sidestepped them by repositioning Autopilot as an assisted driving feature, which left it free to decide what data to record without being strictly bound by the rules.

Yet Tesla kept pushing semi-autonomous driving features to ordinary consumers through continuous OTA updates and vague advertising.

And when accidents happened, Tesla handled them through data hegemony.

This issue is not limited to China; it exists in the United States as well.

According to a report by Caixin, police in the US also need a search warrant to obtain the driving data recorded by Tesla when investigating traffic accidents.

Data hegemony has always been a tradition for tech companies, particularly US-based ones.

In the age of personal computers and smartphones, this kind of data dominance rarely led to physical safety issues. In the age of intelligent electric vehicles, however, data hegemony has become a fig leaf that automakers use when dealing with autonomous driving accidents.

Before they have even enjoyed the convenience of autonomous cars, consumers may find themselves overpowered by automakers’ data hegemony and trapped in the struggle to defend their rights.

Therefore, before the age of fully autonomous driving arrives, the industry should establish standards for supervising assisted driving and autonomous driving data. The Electric Vehicle Observer believes that at least three principles should apply: data must be recorded, traceable, and verifiable.

Recorded:

Automakers whose cars offer advanced assisted driving functions must record both driving data and the control data of the assisted/autonomous driving system.

Traceable:

Regulatory agencies should introduce data recording standards for autonomous driving. The core requirement is to trace whether the action that caused an accident came from the driver or from the assisted/autonomous driving system, and, where needed, to go further back to that system’s perception and decision-making data.

Verifiable:

Regulatory agencies or independent third parties should be able to verify the assisted driving/autonomous driving data provided by automakers to determine the authenticity of the data and prevent automakers from tampering with the data.

Going further, should automakers take the radical step of backing up their data on servers held by regulators, so that it can be read out directly after an accident? Such a measure would naturally worry companies about their data assets. But if the industry does not discipline itself, the government will do it instead. Perhaps automakers could jointly establish an industry body responsible for storing the data and handling special access requests.

Only strict data supervision can prevent automakers from establishing data hegemony.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.