I do not fully agree with, and even oppose, the purely vision-based approach to autonomous driving. I believe this approach is not suitable for China, for the reasons below.

Shortcomings of Vision-Based Autonomous Driving Solutions

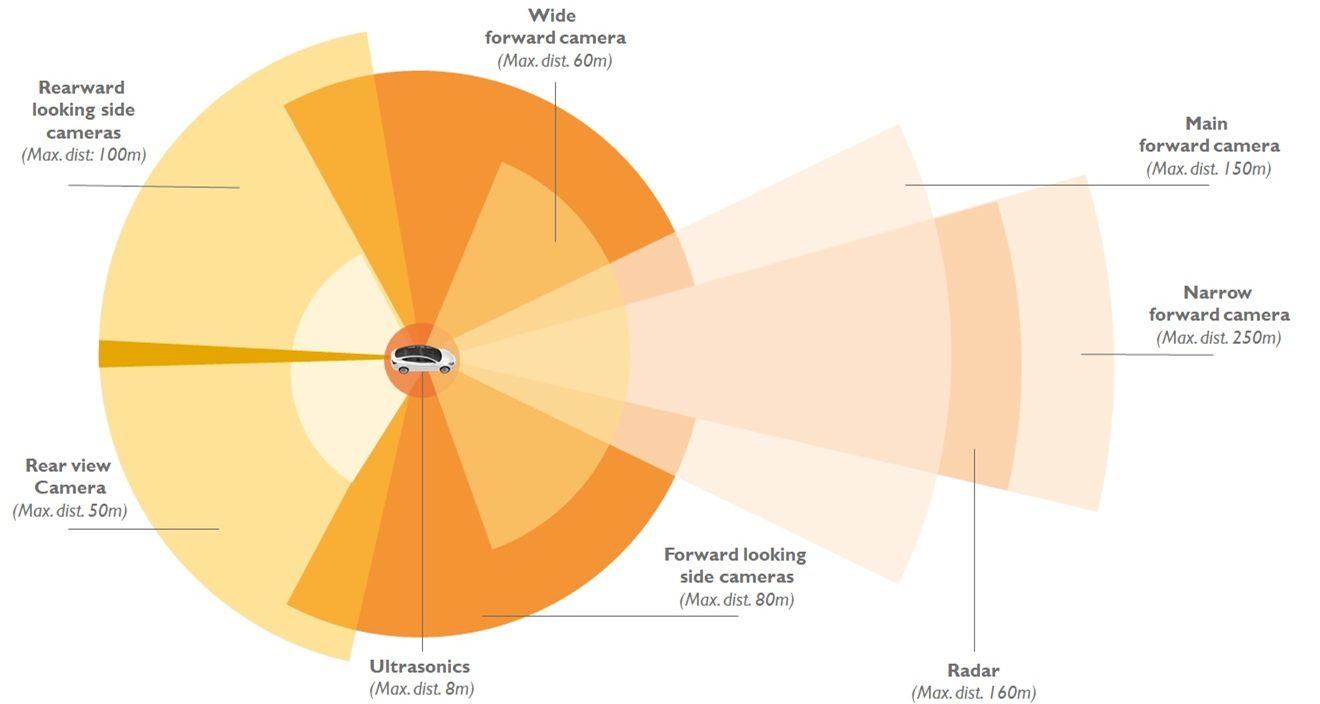

Practitioners of vision-based autonomous driving tend to approach technical issues from “first principles.” In their view, since humans can perceive the traffic environment using only their eyes to recognize lane lines, traffic lights, and signs, autonomous cars should likewise be able to sense their surroundings using only the eight cameras on the vehicle.

Vision-based autonomous driving solutions seem reasonable at first glance but do not withstand scrutiny, because this reasoning ignores the limits of present-day software algorithms. Besides eyes with extremely high “resolution,” humans also have a processor that plays the role of artificial intelligence: the brain. The perception algorithms of autonomous vehicles, however, are still at the stage of weak artificial intelligence and cannot reach the level this approach requires.

Vision perception algorithms at this weak artificial intelligence stage have three drawbacks.

Drawback 1: Heavy Reliance on Annotated Data

Mainstream vision perception algorithms require a large amount of annotated data (from thousands to tens of thousands of samples) for model training. Only when the model has seen enough samples of cars, pedestrians, cyclists, and other objects can it detect and distinguish targets in complex road scenarios. If an object has very few or no examples in the annotated sample set (such as a fully loaded express-delivery tricycle), the model has difficulty detecting it correctly.
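
To make this long-tail problem concrete, the minimal Python sketch below counts annotated examples per class and flags the classes a model is expected to detect but has barely (or never) seen. The function name `underrepresented_classes`, the class labels, the counts, and the threshold of 100 are all hypothetical illustrations, not figures from any real dataset.

```python
from collections import Counter


def underrepresented_classes(labels, required_classes, min_count):
    """Return required classes with fewer than min_count annotated examples.

    labels: one class name per annotated object in the training set.
    required_classes: classes the perception model is expected to detect.
    Classes absent from the labels are reported with a count of 0.
    """
    counts = Counter(labels)
    return {
        cls: counts[cls]  # Counter returns 0 for missing keys without inserting them
        for cls in required_classes
        if counts[cls] < min_count
    }


# Hypothetical annotation set: many cars and pedestrians, almost no loaded tricycles,
# and no overturned trucks at all.
labels = ["car"] * 5000 + ["pedestrian"] * 3000 + ["cyclist"] * 800 + ["loaded_tricycle"] * 3
required = ["car", "pedestrian", "cyclist", "loaded_tricycle", "overturned_truck"]
print(underrepresented_classes(labels, required, min_count=100))
# → {'loaded_tricycle': 3, 'overturned_truck': 0}
```

A class that shows up here with a count of zero is exactly the case described above: the model has no examples to learn from, so a detection on the road is unlikely.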

Drawback 2: Inadequate Generalization

Compared with the human brain, existing vision perception algorithms are poor at generalizing. A person can grasp the essence of an object from just a few pictures or videos. For example, once we know what a truck looks like, we can quickly recognize a brightly painted modified truck or one that has overturned on the road, even if we have never seen such a truck before. The vision perception algorithms widely used in autonomous driving still lack this ability, so when an obstacle that is not represented in the training data appears on the road, there is a high probability that it will be missed.

Drawback 3: Inadequate Perception Precision

While driving, the human brain processes information from the surrounding environment more comprehensively.

Through the gap between two cars, a human driver can spot a vehicle moving in an otherwise occluded area. Besides observing subtle changes in a car’s body and wheels, we can also read other drivers’ expressions and gestures through the windshield, cues that matter for driving decisions. Present-day vision perception algorithms cannot yet achieve this level of precision and still have a long way to go.

These are the three currently known drawbacks of vision perception algorithms.

A low-cost sensor suite built primarily around visual perception is sufficient for highway and parking-lot scenarios, because the traffic environment and its participants (such as vehicles and pedestrians) are relatively uniform there. In more complex urban environments and traffic conditions, however, such as a large vehicle lying in the middle of the road, the sensing capability of such a camera-centric suite may be insufficient.

Typical challenges for autonomous driving in urban areas include poorly lit construction zones at night, doors suddenly opening on parked cars, tricycles piled high with bulky cargo, and electric bikes suddenly darting across narrow roads. Because these cases are difficult to handle with vision-based techniques alone, technology routes built primarily around lidar have emerged.

Some European and American carmakers have abandoned lidar and are racing recklessly ahead, cheered on by countless enthusiastic fans who test the systems personally, even at the risk of their own safety. Domestic carmakers are not so fortunate: they must be prepared to answer for any accident and proceed as cautiously as if walking on thin ice, since one misstep could deal a heavy blow to their brand reputation. Therefore, even though lidar is expensive and no one knows when its cost will come down, technologically advanced domestic carmakers have already begun exploring lidar-based technology routes to ensure driving safety.

The Breakthrough of Chinese Automakers

Thanks to China’s strong research and development capabilities and supply chain, the cost of lidar has dropped from hundreds of thousands of yuan to tens of thousands of yuan, and automotive-grade solid-state lidar has been developed on the basis of mechanical lidar. Domestic carmakers leading in autonomous driving have announced plans to mass-produce lidar-equipped models within the year, aiming for Level 3 or higher autonomous driving functions.

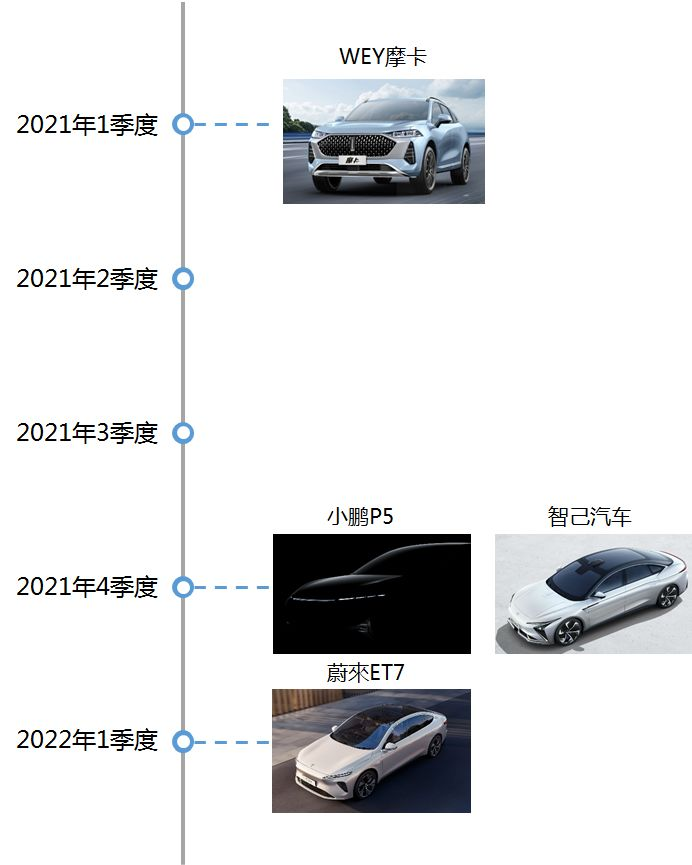

According to currently available public information, four domestic carmakers will officially launch mass-produced lidar-equipped models around 2021. The WEY Mocha, produced by Great Wall’s WEY brand and the first car in China equipped with solid-state lidar, will launch in the first quarter of 2021, while new entrants to the domestic automotive industry such as NIO and XPeng will launch three lidar-equipped models between the fourth quarter of 2021 and the first quarter of 2022. The table above lists these models.

It is worth noting that the WEY Mocha, although it is a mass-produced vehicle equipped with lidar, has not cut back on other sensors. In addition to three solid-state lidars, it carries eight millimeter-wave radars, eight cameras, and 12 ultrasonic sensors. Like NIO and XPeng, it relies on multiple redundant sensors: combined with high-precision maps and 5G+V2X, this suite provides 360-degree sensing coverage with no blind spots.
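
Why stacking redundant sensors helps can be shown with a back-of-the-envelope calculation. If the system flags an obstacle whenever at least one sensor detects it, and if sensor misses were statistically independent, the chance that every sensor misses the same obstacle is the product of the individual miss rates. The Python sketch below uses made-up miss rates purely for illustration; the function name and all numbers are hypothetical, and independence is an optimistic assumption, because fog, glare, or an unusual object can degrade several sensors at once.

```python
import math


def combined_miss_probability(miss_probs):
    """Probability that every sensor misses the same obstacle.

    Assumes an "any sensor detects it" rule and statistically independent misses,
    which is optimistic: shared conditions can degrade several sensors together.
    """
    return math.prod(miss_probs)  # math.prod requires Python 3.8+


# Hypothetical per-sensor miss rates for one hard-to-see obstacle (illustrative only).
camera_only = combined_miss_probability([0.05])
camera_radar = combined_miss_probability([0.05, 0.10])
camera_radar_lidar = combined_miss_probability([0.05, 0.10, 0.02])

for name, p in [
    ("camera", camera_only),
    ("camera+radar", camera_radar),
    ("camera+radar+lidar", camera_radar_lidar),
]:
    print(f"{name}: {p:.4f}")
```

The trade-off of a one-sensor-is-enough rule is that false alarms accumulate as well, so real fusion systems typically weigh the evidence from each sensor rather than simply OR-ing detections together.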

Highway navigation assistance is an important feature in autonomous driving: following the navigation route, the system can autonomously enter and exit the highway via ramps, cruise, change lanes, and overtake. Tesla’s NOA, NIO’s NOP, XPeng’s NGP, and WEY Mocha’s NOH go by different names but provide the same function.

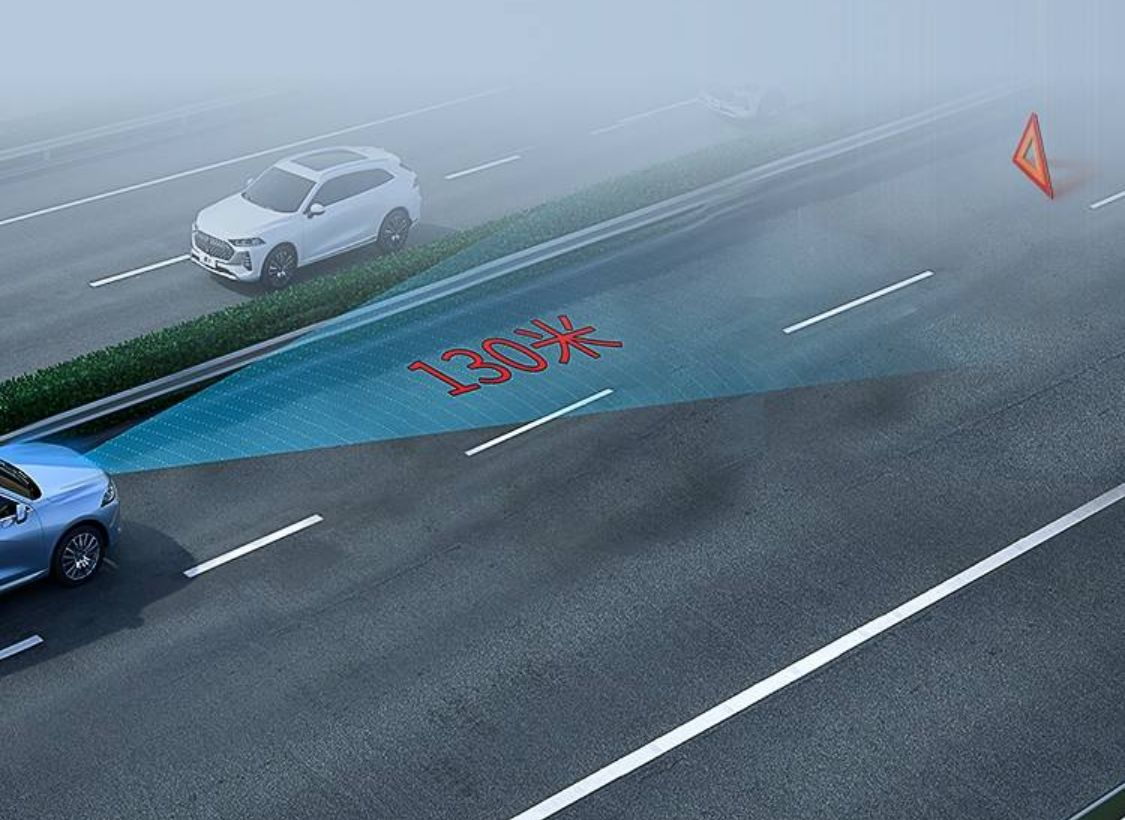

Tesla’s NOA, NIO’s NOP, and XPeng’s NGP all rely on two types of sensors, cameras and millimeter-wave radars, to provide navigation-assisted driving. WEY Mocha’s NOH differs in that it feeds lidar point-cloud data into its highway navigation assistance system. Lidar strengthens the perception of distant static targets, compensating for camera limitations such as unstable perception and degraded performance at night or under changing light.

On our daily commutes, we often encounter tires and other low obstacles lying on the road 100 meters ahead. These targets are small, so by the time a vision-based system detects them they may already be too close; if the vehicle cannot slow down in time, an accident may follow. With lidar point clouds, however, such targets can be detected at 100 meters or more, even in rain and fog, so hazards are identified in advance.
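
Whether a 100-meter detection range is enough comes down to stopping distance: the distance covered during the system’s reaction delay plus the braking distance v²/(2a). The Python sketch below checks this under assumed values (1.0 s total reaction time from detection to braking, 6 m/s² constant deceleration, and a hypothetical 60 m detection range for comparison); these numbers and the function names are illustrative assumptions, not figures from any carmaker.

```python
def stopping_distance_m(speed_kmh, reaction_time_s, decel_mps2):
    """Distance travelled from the moment of detection to standstill.

    reaction_time_s: total delay before braking starts (perception, planning, actuation).
    decel_mps2: assumed constant braking deceleration.
    """
    v = speed_kmh / 3.6  # convert km/h to m/s
    return v * reaction_time_s + v * v / (2 * decel_mps2)


def can_stop_before(obstacle_range_m, speed_kmh, reaction_time_s=1.0, decel_mps2=6.0):
    """True if braking from the moment of detection stops the car short of the obstacle."""
    return stopping_distance_m(speed_kmh, reaction_time_s, decel_mps2) <= obstacle_range_m


d = stopping_distance_m(100, 1.0, 6.0)
print(f"Stopping distance at 100 km/h: {d:.1f} m")  # 92.1 m under these assumptions
print(can_stop_before(100, 100))  # obstacle detected at 100 m -> True
print(can_stop_before(60, 100))   # obstacle detected at a hypothetical 60 m -> False
```

Under these assumptions a car at 100 km/h needs roughly 92 m to stop, so detecting a tire at 100 m leaves a small margin while a detection at 60 m does not. Because braking distance grows with the square of speed, every extra meter of detection range matters more at highway speeds.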

Identifying risks in advance is only one of lidar’s capabilities. Its precise point-cloud data also improves the accuracy of vehicle and pedestrian detection, enabling more accurate lateral and longitudinal control, which improves both safety and comfort.

Conclusion

This is the breakthrough path Chinese automakers have identified: abandon the illusion of achieving L4 autonomous driving with cameras and millimeter-wave radar alone, and instead rely on lidar plus redundancy across multiple sensors (high-precision maps and V2X can also be regarded as additional sensors). At this stage, that is the safe and reliable technology route, and the one best suited to Chinese road conditions.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.