Last night at Shenzhen’s DaYun Center, heavyweight industry leaders including NVIDIA founder and CEO Huang Renxun (Jensen Huang), Luminar founder and president Austin Russell, and ZF Group board member Dr. Holger Klein appeared on screen one after another.

In their video messages, all three stated explicitly that their products will power SAIC R-TECH.

R-TECH was the core theme of the conference.

You can think of R-TECH simply as the technology day for SAIC’s R brand. The appearances by Huang Renxun and Austin Russell set the tone for a hardcore event, one that gives us a close look at SAIC’s technology roadmap.

Autonomous driving is the trend of this era, and both autonomous driving companies and traditional car companies hope to have their own place in the field of autonomous driving.

The core of R-TECH is PP-CEM, a high-level autonomous driving solution developed in-house across the full stack, which is SAIC’s answer to the autonomous driving era.

The perception capability of PP-CEM rests on the following six components:

- one Luminar 1550 nm LiDAR
- two 4D imaging radars and six long-range point cloud radars
- 12 perception cameras
- 12 ultrasonic radars
- 5G-V2X
- a high-precision map

The upcoming new car ES33, to be officially launched in the second half of next year, will also become the first SAIC model equipped with the PP-CEM full-stack self-developed high-level autonomous driving solution.

With such rich perception hardware, PP-CEM has a single purpose: to make sure that no object on the road, however small, goes undetected.

Wave after wave of launch events last year took enthusiasts from novice to entry level on autonomous driving hardware, yet the 4D imaging radar that SAIC presented last night was still unfamiliar to many people.

What can the 4D millimeter-wave radar do?

The 4D millimeter-wave radar on the ES33 comes from ZF and is shown on the company’s official website. According to ZF’s description there, the advantages of this radar include:

- With 192 channels, it can achieve detailed object detection at a range of up to 300 meters.
- It supports fusion with other sensor technologies, including cameras and lidar.
- It can detect small objects such as bricks and bicycles within an 80-meter range.

A traditional ADAS forward millimeter-wave radar has 8 to 16 channels. With 192 channels, ZF’s 4D millimeter-wave radar has 12 times as many as a 16-channel conventional radar, meaning that it returns more points and has higher resolution.

For example, when a traditional radar detects something 200 meters ahead, it returns only one point, so the system can determine only that there is an obstacle 200 meters ahead. A 4D millimeter-wave radar can return 12 points at the same distance, and from the shape and arrangement of these points an algorithm can identify the obstacle more accurately.
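The channel arithmetic behind the "12 times" figure can be checked with a short sketch. The channel counts come from the article; the `points_returned` helper and its assumption that returned points scale linearly with channel count are purely illustrative and are not taken from ZF's documentation.

```python
# Channel counts quoted in the article. 16 is the upper end of the
# "8 to 16" range given for conventional forward radars.
CONVENTIONAL_CHANNELS = 16
FOUR_D_CHANNELS = 192


def channel_ratio(new_channels: int, old_channels: int) -> float:
    """How many times more channels the new radar has."""
    return new_channels / old_channels


def points_returned(points_from_conventional: int, ratio: float) -> int:
    """Hypothetical helper: assumes points scale linearly with channels."""
    return round(points_from_conventional * ratio)


ratio = channel_ratio(FOUR_D_CHANNELS, CONVENTIONAL_CHANNELS)
print(ratio)                      # 12.0
print(points_returned(1, ratio))  # 12
```

Against an 8-channel radar, the same calculation gives a ratio of 24, so the 12x figure corresponds to the top of the conventional range.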

The hardest task for traditional fusion algorithms that combine millimeter-wave radar and cameras is detecting stationary obstacles. Even a company as capable as Tesla may still collide with an overturned truck. In most cases, the solution is to discard the stationary obstacles detected by the millimeter-wave radar, which are mainly roadside trees and guardrails, in order to reduce the computational workload of the visual algorithms.

The high-resolution distance information obtained by 4D millimeter-wave radar helps the fusion algorithm distinguish between different obstacles at a finer level of detail.

Moreover, because of its higher resolution, the system can obtain more accurate information about the speed and posture of moving objects, and use algorithms to anticipate “ghost” scenarios, in which something appears unpredictably, so that the vehicle can plan a reasonable avoidance strategy.
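The stationary-obstacle filtering described above can be sketched as follows. This is a minimal illustration, not any automaker's actual fusion logic: the `RadarTarget` type, the 0.5 m/s tolerance, and the sign convention (negative radial speed means the target is closing) are all assumptions made for the example.

```python
import math
from dataclasses import dataclass


@dataclass
class RadarTarget:
    range_m: float
    azimuth_rad: float       # 0 means straight ahead
    radial_speed_mps: float  # negative means closing on the ego vehicle


def is_stationary(target: RadarTarget, ego_speed_mps: float,
                  tolerance_mps: float = 0.5) -> bool:
    """A world-fixed object appears to close at ego_speed * cos(azimuth)."""
    expected = -ego_speed_mps * math.cos(target.azimuth_rad)
    return abs(target.radial_speed_mps - expected) <= tolerance_mps


def drop_stationary(targets, ego_speed_mps: float):
    """Keep only the targets that are moving relative to the ground."""
    return [t for t in targets if not is_stationary(t, ego_speed_mps)]


ego_speed = 30.0  # m/s
guardrail = RadarTarget(40.0, 0.3, -ego_speed * math.cos(0.3))
lead_car = RadarTarget(60.0, 0.0, -5.0)           # driving ahead at 25 m/s
overturned_truck = RadarTarget(150.0, 0.0, -30.0)  # stopped in the lane

kept = drop_stationary([guardrail, lead_car, overturned_truck], ego_speed)
print(len(kept))  # 1
```

The guardrail is discarded as intended, but so is the stopped truck, because by velocity alone the two are indistinguishable. That is the weakness the higher resolution of a 4D radar is meant to address.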

The relationship between 4D millimeter-wave radar and visual algorithms can be illustrated with another example. Suppose you have a girlfriend who says only one word when she is angry. As a straight man, you need exceptional emotional intelligence and long-term training to understand what she really means and make the right decision.

But if your girlfriend matures and starts to speak in complete sentences when she is angry, the cost of communication falls sharply, and you no longer need as much emotional intelligence or training to reconcile with her.

4D millimeter-wave radar is like the girlfriend who communicates more efficiently: it reduces the demands on image-based deep learning and shortens the algorithm development cycle for automakers.

Compared with lidar, 4D millimeter-wave radar is easier to combine with cameras. This is partly because existing fusion algorithms for vision and millimeter-wave radar are already mature, and partly because 4D millimeter-wave radar complements the camera better.

Lidar is prone to failure in rain, snow, and fog, which is exactly where the camera is also weak. The 4D millimeter-wave radar inherits millimeter-wave radar’s ability to perceive in harsh weather while offering resolution closer to that of lidar. It is therefore a strong new member of a multi-sensor fusion scheme built mainly around vision.

With the 4D millimeter-wave radar, why do we still need Lidar?

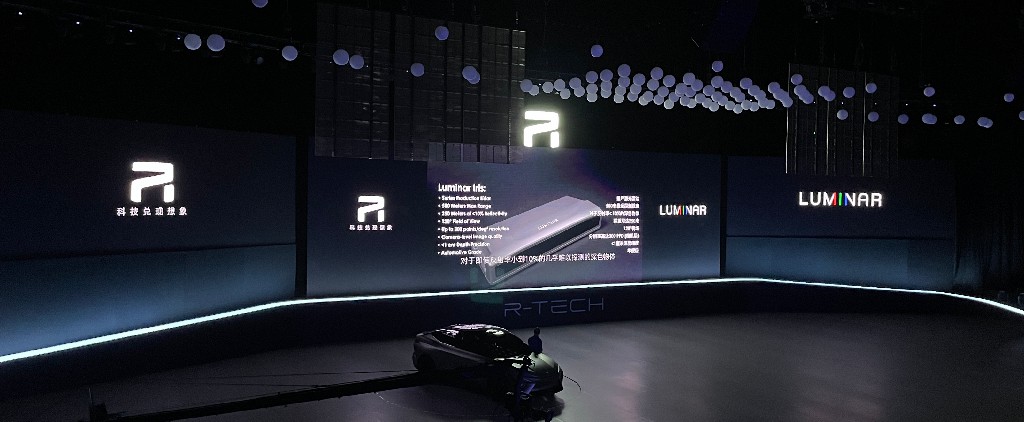

In addition to the 4D millimeter-wave radar, the ES33 also carries the Iris lidar supplied by Luminar.

This 1550-nanometer Lidar is mounted on the roof, which has many advantages over mounting it on the bumper.

Not only does it have a better detection field of view, but it is also less likely to get dirty (Lidar is very afraid of dirt). Pedestrian collision detection is also better, and it won’t be affected by minor collisions during daily driving.

The only problem with mounting it on the roof is that wind noise is more noticeable, but this Luminar Lidar is already very small.

Another advantage of this lidar is that it uses a 1550-nanometer laser, whereas 905-nanometer lidars can operate only at low power to protect the human eye. Because 1550-nanometer light is absorbed by the transparent part of the eye before it reaches the retina, it does not harm human eyes at these power levels and can thus operate at higher power.

Higher power can directly increase point cloud resolution, increase detection distance, and enhance the penetration ability of complex environments.

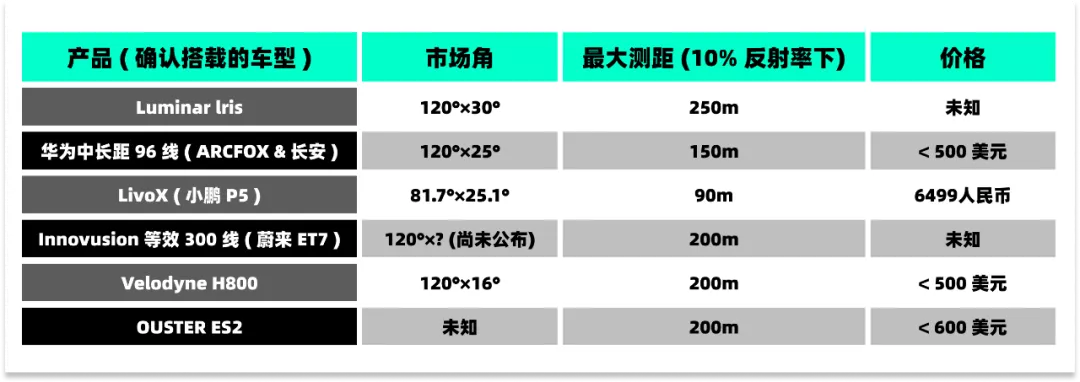

The official data shows that the Iris has a horizontal field of view of 120 degrees and a vertical field of view of 30 degrees, and that its maximum detection distance for targets with less than 10% reflectivity is 250 meters.

What is the level of this performance? Let’s make a comparison with similar products:

On paper, the Luminar Iris is the best among these products, but it is not yet in mass production and still has to go through vehicle-level validation jointly with SAIC Motor. The Livox product was developed earlier, so its parameters are slightly inferior. To ensure that the Iris makes it into the car smoothly, Luminar will establish an office in Shanghai to jointly promote product validation and provide software services.

According to Luminar’s press release, Iris may be produced in Shanghai, and the possibility of the two parties establishing a joint venture in the later period cannot be ruled out.

In addition, according to Luminar’s official press release, the core function of this lidar is to help achieve autonomous driving on highways, so we can roughly understand why the ES33 carries a lidar as well as a 4D millimeter-wave radar.

It started with NIO NOP and XPeng NGP, after which map-fused assisted driving functions kept appearing. Automakers such as BMW, Geely, and Chery StartRoad are also expected to release similar functions this year. These functions rely on high-precision maps drawn with lidar and on high-precision positioning based on ground-based augmentation systems in China.

Taking XPeng NGP as an example, a technical issue shows up in use: in very long tunnels and urban canyons, the system occasionally exits.

The core of this problem lies in high-precision positioning. Currently, everyone achieves high-precision positioning through RTK or RTX signals and feeds the positioning information back to the high-precision map. Once the signal is blocked and lost, the function cannot continue to be used. Sometimes the vertical positioning may also be wrong, so that the system thinks you are on the overpass when you are actually under it and turns on the assistance system.

With lidar, this problem can be solved. The system can compare the point cloud scanned by the lidar with the high-precision map. This not only verifies the high-precision positioning data but also, when positioning is lost, yields the vehicle’s absolute position dynamically through the comparison, making lidar a redundancy for high-precision positioning.

In open scenarios, breaking through from assisted driving to autonomous driving requires redundancy in both sensors and computing chips. For a model that will not launch until the second half of 2022, with a delivery date still uncertain, the lidar cannot be absent. Otherwise, when L3 autonomous driving arrives in highway scenarios, the R brand will not have a ticket to get in.
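A toy version of this map-matching idea is sketched below. It is a brute-force 2D grid search under strong simplifying assumptions (known heading, a handful of point landmarks, translation only); production systems use far more sophisticated scan matching. All names and the landmark coordinates are hypothetical.

```python
def alignment_cost(scan, landmarks, x, y):
    """Sum of squared distances from each scan point, placed at vehicle
    position (x, y), to its nearest landmark on the map."""
    total = 0.0
    for sx, sy in scan:
        px, py = sx + x, sy + y
        total += min((px - mx) ** 2 + (py - my) ** 2 for mx, my in landmarks)
    return total


def correct_position(scan, landmarks, prior_xy, search_m=2.0, step_m=0.5):
    """Grid-search positions around the prior for the best scan-to-map fit."""
    steps = int(round(search_m / step_m))
    best = None
    for i in range(-steps, steps + 1):
        for j in range(-steps, steps + 1):
            x = prior_xy[0] + i * step_m
            y = prior_xy[1] + j * step_m
            cost = alignment_cost(scan, landmarks, x, y)
            if best is None or cost < best[0]:
                best = (cost, x, y)
    return best[1], best[2]


# Hypothetical map landmarks in map coordinates (meters).
landmarks = [(10.0, 5.0), (12.0, -3.0), (20.0, 0.0), (15.0, 8.0)]
true_position = (1.0, -0.5)
# What the lidar would see in the vehicle frame from the true position.
scan = [(mx - true_position[0], my - true_position[1]) for mx, my in landmarks]

drifted_prior = (0.0, 0.0)  # e.g. a stale satellite fix inside a tunnel
print(correct_position(scan, landmarks, drifted_prior))  # (1.0, -0.5)
```

Here the scan alone recovers the true position from a drifted prior, which is the sense in which lidar can serve as a redundancy for satellite-based positioning.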

Now that we understand the roles of the 4D millimeter-wave radar and the lidar, let’s look at what Yang Xiaodong said on stage: “We won’t confuse the white cargo box of the truck ahead with the sky, nor will we be helpless in dark, narrow tunnels.”

Can you feel the tension? He just mocked Tesla, NIO, and XPeng one by one!

Orin has to be compared with the ET7

In 2020, Huang Renxun, the founder and CEO of NVIDIA, publicly stated that Li Auto would be the first manufacturer to use the Orin chip series, and that NVIDIA would bring Orin’s mass production forward by one year, from 2023 to 2022, to match Li Auto’s production cycle.

At the NIO Day in January 2021, Li Bin also said that NIO is the first car manufacturer in the world to use NVIDIA’s Orin chip.

At last night’s press conference, Yang Xiaodong announced that the R brand’s car will be the world’s first mass-produced model equipped with Orin. Given SAIC’s clout, NVIDIA founder Huang Renxun was even invited to appear at the event.

However, although the ET7 is planned to be delivered in Q1 2022, the ES33 will not be on the market until the second half of 2022, and the delivery time is still unknown.

Although it has been rumored that NVIDIA’s Orin cannot be delivered in Q1 2022, the ET7 is still ahead of the ES33, at least according to the officially announced schedules.

What puzzles me more is that the ES33 team may not yet have planned how to use Orin’s computing power. After all, the officially announced figure is still a loose, open-ended range of 500 to 1000+ TOPS.

Before introducing the computing power of the ET7 at NIO Day, Li Bin spent a long time introducing its 8-megapixel high-definition cameras and the massive amount of data they generate. That explains why the ET7 needs four Orin chips, each with 254 TOPS of computing power.
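The compute figures quoted here can be put side by side with a quick calculation. The per-chip figure, the ET7 chip count, and the ES33 lower bound come from the article; the number of chips in the ES33 is not stated, so the last line is only an inference from the lower bound.

```python
import math

ORIN_TOPS_PER_CHIP = 254  # per-chip figure quoted for Orin
ET7_ORIN_CHIPS = 4        # chip count quoted for the NIO ET7
ES33_MIN_TOPS = 500       # lower bound of the "500 to 1000+" range

et7_total_tops = ORIN_TOPS_PER_CHIP * ET7_ORIN_CHIPS
print(et7_total_tops)  # 1016

# Assumption: the ES33 reaches its minimum figure with whole Orin chips.
chips_for_es33_minimum = math.ceil(ES33_MIN_TOPS / ORIN_TOPS_PER_CHIP)
print(chips_for_es33_minimum)  # 2
```

The ET7’s total of 1016 TOPS sits at the top of the range announced for the ES33, while the bottom of that range corresponds to roughly two chips.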

Processing video data is what GPUs excel at, and the GPU is both NVIDIA’s specialty and the main component of Orin.

As for how it plans to use the computing power, the R brand said only that it is “to achieve perception and fusion with the super-environment model algorithm developed across the full stack.” I did not understand it, but it sounded very advanced.

Software Ability is the Real Bayonet

As the title says, the R brand has gathered the world’s strongest millimeter-wave radar, solid-state lidar, and computing chip. Everything looks great from the hardware perspective, but the real experience depends on SAIC’s software ability.

Currently, the core of autonomous driving is still visual perception technology, and visual recognition ability depends entirely on how the software algorithms are trained. Behind the extremely detailed recognition ability of the industry benchmark, Tesla FSD Beta, are top industry experts, more than five years of in-house development experience, and iteration on a large amount of data.

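In an interview after the press conference, SAIC revealed that the full-stack autonomous driving solution was developed by R Auto’s intelligent driving team, and that the cloud computing team is also in-house: it is Zero Beam (Z-ONE), SAIC Group’s software center.

Of course, the large number of millimeter-wave radars and the lidar can assist camera perception and handle many corner cases that cameras cannot recognize, and they do have advantages in extreme weather and in detecting extremely small objects. But R Auto has not yet shown its software strength publicly, so what it can make of the PP-CEM full-stack self-developed high-level autonomous driving solution remains unknown.

We hope that automakers that hold Tesla up as a cautionary example at their launch events also recognize Tesla’s current capabilities in North America. We also hope that when deliveries begin next year, the richer perception hardware will enable stronger autonomous driving capability and a better user experience than Tesla offers, surpassing Tesla across the board.

At that moment, we will greet the historic occasion with the warmest applause.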

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.