Author|Ammie

Why write this article? In practical terms, true autonomous driving cannot be achieved in a single step, and many obstacles remain. For example, how should unpredictable corner cases during driving be handled? How can the system give users meaningful warnings so that they take over in time and avoid safety hazards? And from an experience perspective, how can we develop apps that serve customers faster, more accurately, and more intelligently?

The human-machine interaction strategy of autonomous driving mainly concerns how driving authority passes between an initiator and a receiver in different scenarios. Either role can be filled by the system or by the driver. The functional operation of autonomous driving mainly includes activation, intervention, takeover, and the minimum risk strategy. When a failure occurs in a system related to the dynamic driving task (DDT), or the vehicle leaves its operational design domain (ODD), the system issues an intervention request. The user responds by taking over lateral and longitudinal control; this process is called takeover, and the emphasis is on the driver acting passively in response to the system. Alternatively, the driver may actively provide input to the lateral and longitudinal control systems while the system is still active, and the system judges against a threshold whether to exit the function or continue executing the remaining part of the DDT. This process is called intervention, and the emphasis is on the driver acting on their own initiative.
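The distinction between takeover and intervention can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `ControlEvent` fields, the normalised `driver_input` scale, and the `exit_threshold` value are all hypothetical and do not come from any production system.

```python
from dataclasses import dataclass
from enum import Enum


class TransferType(Enum):
    NONE = "none"
    TAKEOVER = "takeover"          # driver responds to a system request
    INTERVENTION = "intervention"  # driver acts on their own initiative


@dataclass
class ControlEvent:
    system_active: bool         # automated function is currently engaged
    intervention_request: bool  # system has asked the driver to take control
    driver_input: float         # hypothetical normalised steering/pedal input, 0.0 to 1.0


def classify_transfer(event: ControlEvent) -> TransferType:
    """Classify a driver input as takeover, intervention, or neither."""
    if not event.system_active or event.driver_input <= 0.0:
        return TransferType.NONE
    if event.intervention_request:
        return TransferType.TAKEOVER
    return TransferType.INTERVENTION


def should_exit_function(event: ControlEvent, exit_threshold: float = 0.3) -> bool:
    """For an intervention, exit the function only if the input reaches the threshold.

    The threshold value is an assumption chosen for illustration.
    """
    return (
        classify_transfer(event) is TransferType.INTERVENTION
        and event.driver_input >= exit_threshold
    )
```

A small input below the threshold leaves the function running, which matches the idea that the system may continue the remaining part of the DDT after a minor driver correction.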

As shown in the above table, transfers of driving authority can be divided by urgency into emergency and non-emergency transfers, and the response time requirements differ between the two. Automakers therefore need to carry out database development, data customization and collection, and test case development for each stage of the interaction function.

## Development and Research Directions of In-car Intelligent Interactive Technology

Currently, several production vehicles marketed as L3 autonomous driving have been introduced. However, for various reasons, none of them has truly reached L3 or been opened up to the public. In the context of artificial intelligence and “Internet +,” intelligent interaction will be one of the core technologies that bring significant change to the automotive industry, from both a product and an industry chain perspective. As demands for vehicle operational safety and comfort continue to rise, both automakers and autonomous driving system suppliers have been accelerating the commercialization of autonomous driving systems. Smart connected vehicles will remain in the stage of human-machine co-driving for a long time, so the evaluation and application of the various intelligent interaction functions need to be analyzed in complete and useful reports at the same time.

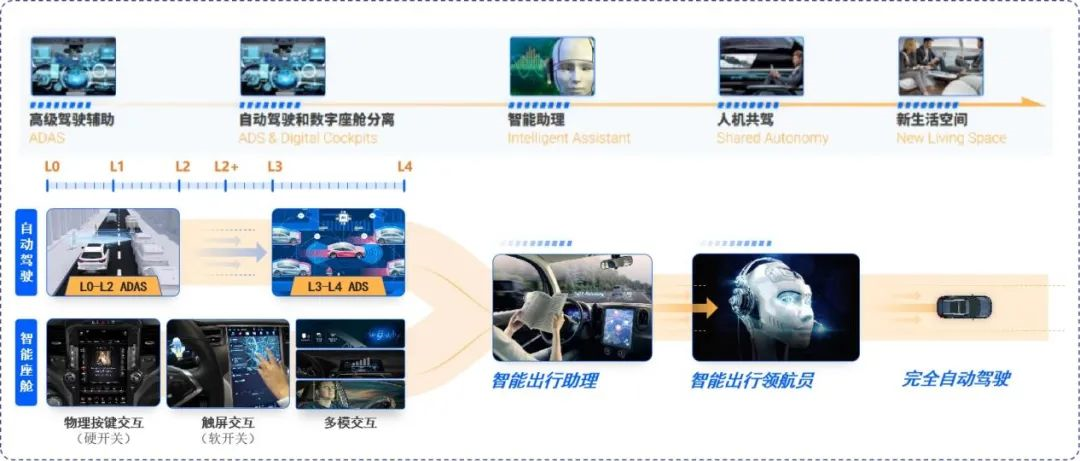

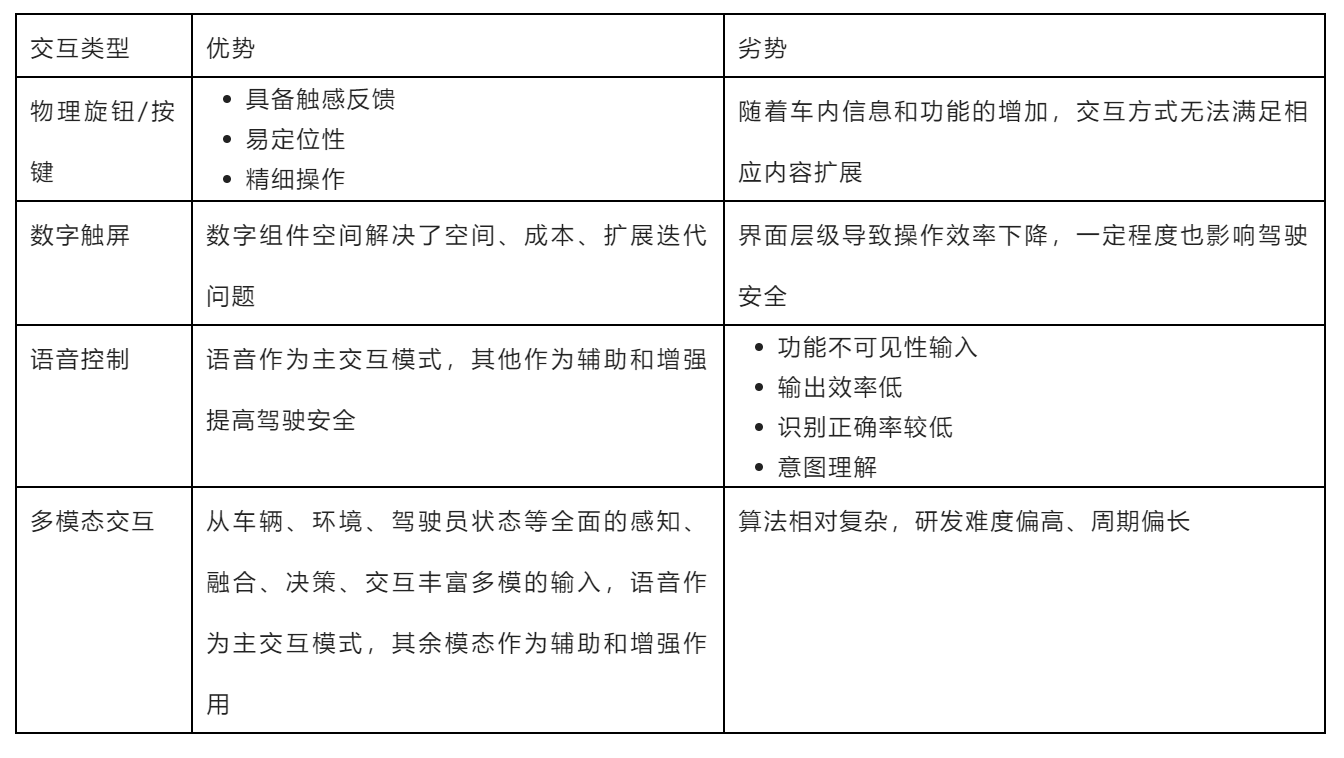

To comply with the development trend of intelligent interaction, it is necessary to pay attention to the impact of human-machine takeover and form a new intelligent driving interactive experience. The development of in-car intelligent interaction has gone through the entire control process from physical knobs/buttons to digital touch screens, voice control, and finally evolved into multimodal control (such as gesture control). Both inside and outside the cabin, it is moving towards a higher level of intelligence. Outside the cabin, people need to consider issues beyond L3, while inside the cabin, there is a need to consider multimodal interaction, and software iteration requires more data.

In different stages, different interaction modes have become the research and development topics for intelligent driving, each with its unique advantages.

## Current State and Research on Human-Machine Co-Driving in Intelligent Interactions of Automobiles

Currently, research on human-machine co-driving in the field of intelligent interactions in automobiles mainly focuses on the experience of driving functions, human-machine interaction, and the scenario system of human-machine takeover. All these aspects have become the key research questions and directions for government, OEM, Tier 1, autonomous driving companies, domestic and foreign universities, and other research institutions. Corresponding research has been conducted in the following aspects:

1) Research on Driving Function Experience

Research on the effects of driver function experience in vehicle interaction involving sound, vibration, and lighting;

Research on the impact of various vehicle information interaction, to ensure the optimal effect of interactive information with the driver;

Evaluation of the effects of various interactive methods;

2) Research on Human-Machine Interaction

Research on the transformation and application of human-machine interaction in practical use;

Development of multi-modal interaction functions such as touchscreen, speech recognition, gesture recognition, and face recognition;

Provide development support for human-machine interaction in autonomous driving vehicles;

3) Research on Human-Machine Takeover

Research on the correspondence between the system and the autonomous driving function;

Research on the multi-dimensional takeover scenario system from the driving scenario (emergency braking, pedestrian crossing), the driver (age, occupation, scenario, etc.), and the vehicle status (active takeover, ODD scenario takeover);

## Intelligent Interactive Business System

Human-machine interaction in intelligent driving is divided into two directions: pure human-machine interaction and human-machine takeover. Human-machine interaction covers speech data, face data, gesture recognition, and human factor data, and research runs from data collection and the establishment of sample libraries through algorithm development to final evaluation and application. Human-machine takeover is more closely tied to the control process of intelligent driving, and implementing it requires research on the driver, the environment, and the takeover mechanism. Specifically, it includes the following business systems.

Human-machine interaction solutions for autonomous driving involve high-security solutions for the advanced intelligent stage that are built on high-performance domestic AI chips. They also involve a joint cloud brain that integrates high-precision maps, data loops, intelligent vehicle operation, and other cloud intelligence services to build the vehicle’s core intelligent capabilities. Integrating roadside intelligence and supporting vehicle-road coordination can greatly enhance system security.

Organized this way, the intelligent interaction business can take database development, test case development, algorithm development, and evaluation applications as its business directions. Comprehensive monitoring of driver behavior then provides a more scientific basis for human-machine takeover and improves the driving experience.

## Intelligent Interaction Database Development: Speech Data

1) Speech Data Collection

The development of speech databases should be based on corpus design, speaker characteristics, noise types, and other dimensions. This means multi-dimensional instruction sets for telecommunications, navigation, and vehicle control, recorded across multiple types of speakers and with noise sources from various environmental scenes. The collection rules are organized by speaker, corpus, and noise source; they need to follow international standards and account for different speaking styles and special groups. Speakers should be aged 20 to 60, distributed evenly across 10-year age bands.
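The age requirement lends itself to a simple check during recruitment. The sketch below is one possible way to verify that speakers are spread evenly across 10-year bands; the band boundaries (60 is placed in the 50-60 band) and the 10% tolerance are assumptions made for illustration.

```python
from collections import Counter

AGE_BANDS = ("20-30", "30-40", "40-50", "50-60")


def age_band(age: int) -> str:
    """Map an age in [20, 60] to its 10-year band."""
    if not 20 <= age <= 60:
        raise ValueError(f"age {age} is outside the 20-60 collection range")
    start = min((age // 10) * 10, 50)  # assumption: age 60 joins the 50-60 band
    return f"{start}-{start + 10}"


def band_counts(ages: list[int]) -> dict[str, int]:
    """Count speakers per band, including empty bands."""
    counts = Counter(age_band(a) for a in ages)
    return {band: counts.get(band, 0) for band in AGE_BANDS}


def is_balanced(ages: list[int], tolerance: float = 0.1) -> bool:
    """True if every band is within `tolerance` of an equal share."""
    counts = band_counts(ages)
    target = len(ages) / len(AGE_BANDS)
    return all(abs(c - target) <= tolerance * target for c in counts.values())
```

Running `band_counts` on the recruited speaker list before recording starts shows which bands still need participants.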

The establishment of corpora mainly targets the following aspects:

Noise data comes mainly from the different noise sources in and around the vehicle, including multi-person background speech, sound source location, external noise, and multimedia interference. Collection covers noise from different vehicle types, tire noise at different vehicle speeds, noise in different environmental scenes, and different kinds of background noise. The collected database must also cover different window and multimedia switch states under different scenarios, including cold starts, idling above ground, and idling in underground parking lots.

2) Speech Algorithm Development

The core technologies of speech interaction include speech enhancement, speech recognition, semantic understanding, dialogue management, natural language generation, and voiceprint recognition. Current algorithm optimization and technical accumulation are aimed at these areas.

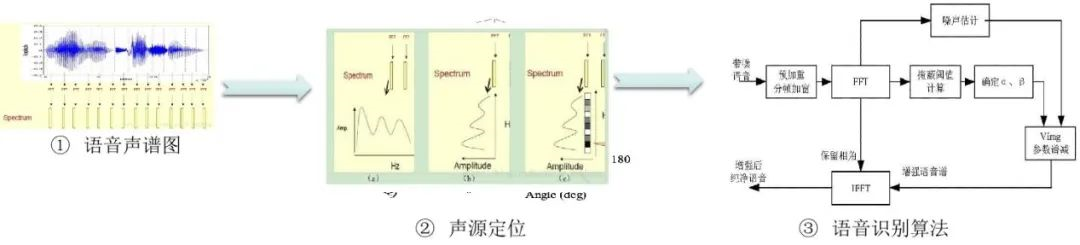

Speech enhancement involves beamforming, sound source localization, and noise reduction. It is an algorithm module that uses source localization to determine the direction of the target speech signal and suppresses noise and reverberation through methods such as deep learning and beamforming. As shown in the figure below, the input to speech beamforming is the superposition of multiple original sound sources and noise sources, so the captured signal is a mixture of all of them. After input, the main sound source of interest, which carries high energy, is extracted algorithmically. Then, through classic processing steps, including data cloud synchronization, multi-communication speech, and target masking, a spatial covariance matrix is formed, and the matrix is used together with the mask to achieve effective noise reduction. Combining beamforming with deep learning in this way improves noise reduction performance.
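One common way to combine a mask with spatial covariance matrices is a mask-based MVDR beamformer. The article does not say which beamformer is used, so the NumPy sketch below is an assumption chosen for illustration, using the reference-channel MVDR formulation. It assumes the multi-channel STFT and the time-frequency masks are already available; in practice the masks would typically come from a neural network. All function names are hypothetical.

```python
import numpy as np


def spatial_covariance(stft: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Mask-weighted spatial covariance per frequency bin.

    stft: complex array of shape (freq, frames, channels)
    mask: real array of shape (freq, frames) with values in [0, 1]
    returns: complex array of shape (freq, channels, channels)
    """
    weighted = stft * mask[..., None]
    # Sum over frames of mask * y * y^H for every frequency bin.
    cov = np.einsum("ftc,ftd->fcd", weighted, stft.conj())
    norm = np.maximum(mask.sum(axis=1), 1e-10)
    return cov / norm[:, None, None]


def mvdr_weights(cov_speech: np.ndarray, cov_noise: np.ndarray,
                 ref_channel: int = 0, diag_load: float = 1e-6) -> np.ndarray:
    """Reference-channel MVDR weights, shape (freq, channels)."""
    channels = cov_noise.shape[-1]
    # Diagonal loading keeps the noise covariance well conditioned.
    scale = np.trace(cov_noise, axis1=-2, axis2=-1).real[:, None, None]
    loaded = cov_noise + diag_load * scale * np.eye(channels)
    numerator = np.linalg.solve(loaded, cov_speech)  # inv(noise) @ speech
    trace = np.trace(numerator, axis1=-2, axis2=-1)[:, None]
    return numerator[..., ref_channel] / (trace + 1e-10)


def apply_beamformer(weights: np.ndarray, stft: np.ndarray) -> np.ndarray:
    """Apply w^H y per bin and frame; returns shape (freq, frames)."""
    return np.einsum("fc,ftc->ft", weights.conj(), stft)
```

The speech and noise covariances are estimated by calling `spatial_covariance` twice, once with the speech mask and once with its complement, and the resulting weights pass the target through undistorted at the reference microphone while attenuating the noise.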

Beyond the basic speech processing methods above, dedicated speech recognition algorithms are needed. This includes feature extraction from the speech database, acoustic model training, and acoustic model export. The language model and semantic dictionary trained from the text database are then fed into the speech decoding and search algorithm to produce the appropriate output.

3) Speech Testing and Evaluation

The algorithm is evaluated by automatically testing the car-mounted speech recognition module through multiple annotations of collected speech wake-up and command data. The evaluation process includes data segmentation, data annotation, and actual testing processes.

Data segmentation involves setting wake-up words, command words, and multiple interaction commands. Wake-up word test cases need to include basic wake-up words, similar wake-up words, and interfering wake-up words. Command word test cases include basic command words, gender interference, noise interference, similar command words, multimedia, background music, dialect interference, and location effects. Multiple interaction commands involve selection words, incorrect input, and content input.
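The wake-up word test cases above can be scored with two simple rates. The sketch below assumes that only basic wake-up words should wake the system and that any wake-up on a similar or interfering word counts as a false wake; that expectation, along with the `WakeTestCase` structure, is an assumption for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WakeTestCase:
    utterance: str
    category: str  # "basic", "similar", or "interfering"
    woke_up: bool  # whether the system woke up during the test


def wake_metrics(cases: list[WakeTestCase]) -> dict[str, float]:
    """Compute wake-up rate on basic words and false-wake rate on the rest."""
    basic = [c for c in cases if c.category == "basic"]
    others = [c for c in cases if c.category != "basic"]
    wake_rate = sum(c.woke_up for c in basic) / len(basic) if basic else 0.0
    false_wake_rate = sum(c.woke_up for c in others) / len(others) if others else 0.0
    return {"wake_rate": wake_rate, "false_wake_rate": false_wake_rate}
```

Keeping the category on each test case makes it easy to break the false-wake rate down further, for example to see whether similar words or interfering words cause more problems.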

Data annotation mainly targets different types of samples for text content, word slot annotation, intent annotation, and response time annotation. The main categories in the sample library include communication, maps, audio and video entertainment, systems, vehicle control, vehicle information retrieval, life information retrieval, and chat interaction.
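An annotated sample of this kind could be represented as a small record. The sketch below is a hypothetical schema: the field names, the category identifiers, and the millisecond unit are assumptions, while the category list itself follows the one given above.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from statistics import mean

CATEGORIES = {
    "communication", "maps", "audio_video_entertainment", "systems",
    "vehicle_control", "vehicle_information_retrieval",
    "life_information_retrieval", "chat_interaction",
}


@dataclass
class AnnotatedSample:
    text: str                                  # transcribed text content
    category: str                              # one of CATEGORIES
    intent: str                                # annotated intent label
    slots: dict = field(default_factory=dict)  # word slot annotations
    response_time_ms: float = 0.0              # annotated response time

    def __post_init__(self) -> None:
        if self.category not in CATEGORIES:
            raise ValueError(f"unknown category: {self.category}")


def mean_response_time_by_category(samples: list[AnnotatedSample]) -> dict[str, float]:
    """Average the annotated response time within each category."""
    grouped = defaultdict(list)
    for sample in samples:
        grouped[sample.category].append(sample.response_time_ms)
    return {category: mean(times) for category, times in grouped.items()}
```

Validating the category at construction time catches labeling mistakes early, before they reach the test stage.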

The testing process involves feeding data into the scenario module, then identifying whether the speech retrieved belongs to background sound, in-car noise, or outside noise through the “testing language science” feature. The testing content involves basic speech recognition, interaction success rate, speech wake-up, speech interruption, semantic understanding, response time, and voiceprint recognition.

## Intelligent Interaction Database Construction: Gesture Data

1) Visual Data Collection

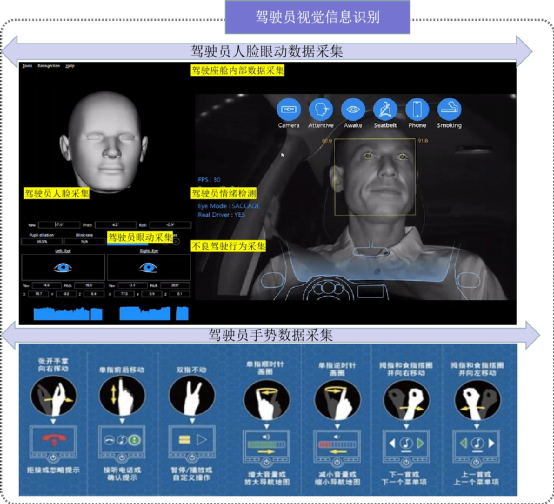

The visual data research here focuses on collecting data on the driver’s face, eyes, gestures, and unsafe driving behavior to build a driver visual interaction database that supports product development and competitive benchmarking. It covers the driver’s facial movement, eye movement, body posture, and gestures. For instance, eye movement data marks key points of the eye such as the iris, eyelid, and pupil, along with the direction of gaze. Body posture data marks the key bone points of the human body and connects them into a skeleton. Facial data involves annotating and tracking 26, 54, 96, or 206 facial key points.

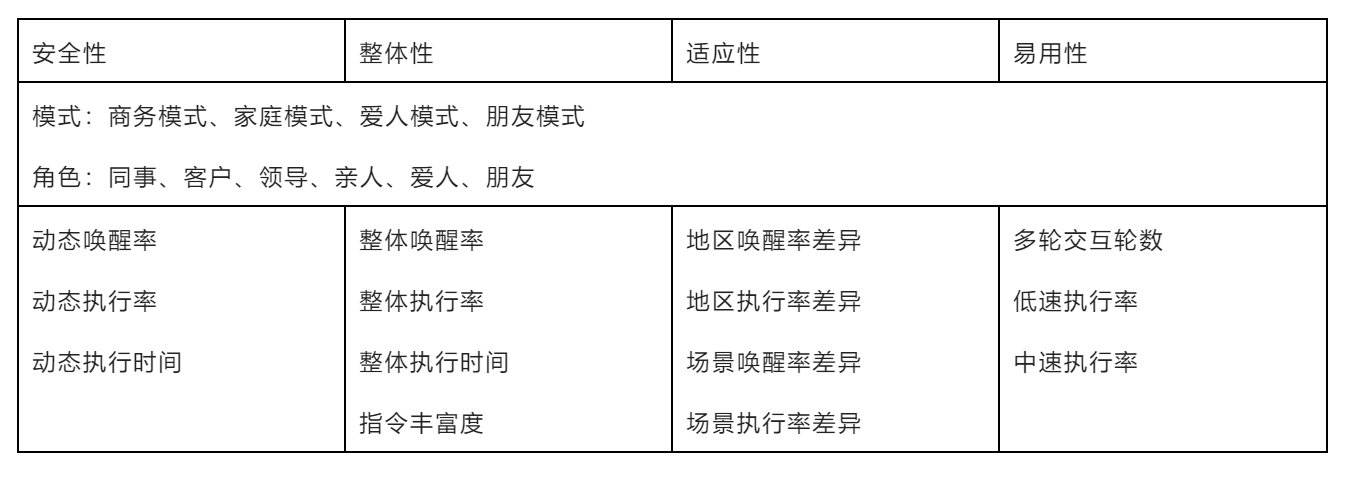

In addition, reasonable evaluation mechanisms should be applied to speech evaluation results. For the in-car voice recognition function, the work produces a functional benchmark report on mass-production versions, an organized interaction logic, a survey of drivers’ personal experience, and interaction rule designs for each category of interaction scenario. The evaluation dimensions map to subjective and objective indices for the corresponding themes in different scenarios (business mode, family mode, lover mode, friend mode). Objective indices usually cover four dimensions, namely safety, integration, adaptability, and ease of use, and each dimension is assigned a different weight. Subjective experience mainly emphasizes a high recognition rate, simple operating procedures, reasonable voice guidance, a smooth interaction process, quick response, no confusion in use, and the level of attention occupancy.
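The weighted objective index can be illustrated as a weighted average over the four dimensions. The weights below are placeholders invented for this sketch; the article does not give the actual proportions, and the 0-100 score scale is also an assumption.

```python
# Hypothetical weights in percent; the real proportions are not given in the article.
OBJECTIVE_WEIGHTS = {
    "safety": 40,
    "integration": 20,
    "adaptability": 20,
    "ease_of_use": 20,
}


def objective_score(scores: dict[str, float],
                    weights: dict[str, int] = OBJECTIVE_WEIGHTS) -> float:
    """Weighted average of per-dimension scores (assumed to be on a 0-100 scale)."""
    if set(scores) != set(weights):
        raise ValueError("scores must cover exactly the weighted dimensions")
    total_weight = sum(weights.values())
    return sum(scores[name] * weights[name] for name in weights) / total_weight
```

A different weight table could be supplied per scenario, for example one for business mode and another for family mode, if the proportions differ between them.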

From a more objective perspective, based on driver physiology, psychology, and behavior data, it is necessary to conduct research on vehicle driving switching by combining vehicle data with take-over scenario data.

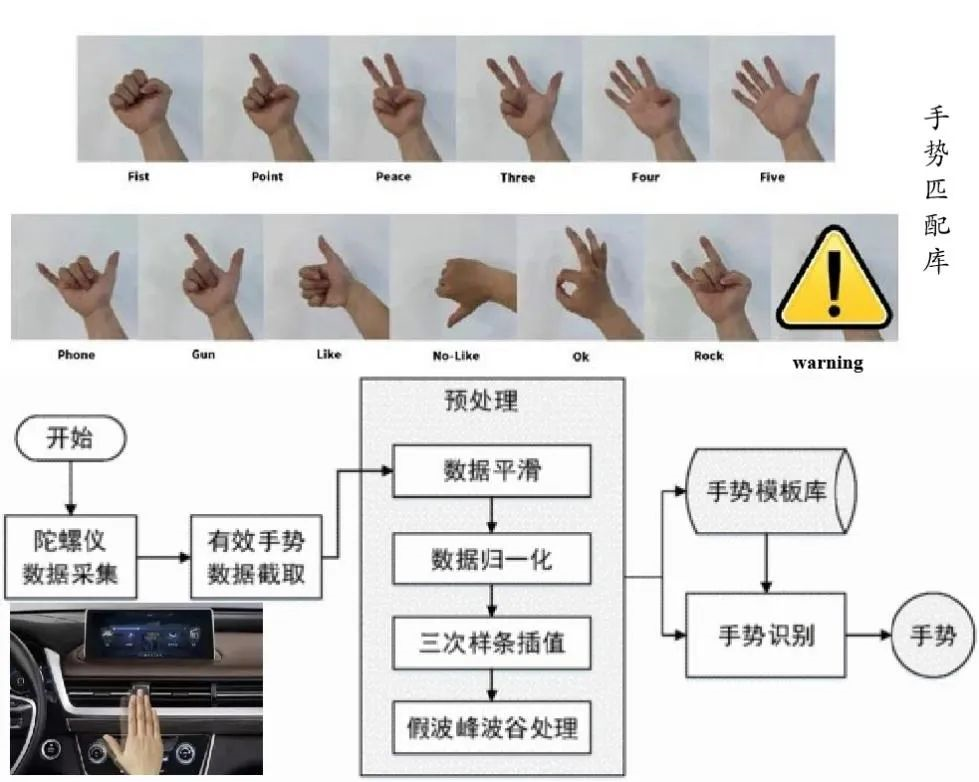

2) Development of Gesture Recognition Algorithm

Establishing a visual matching library is a prerequisite for all research and development. From the perspective of intelligent cars, what we are currently most concerned with is effective data collection in the data closed-loop submodule. Cabin data collection requires target recognition and classification through complex algorithms, and extracting the regions of interest is a prerequisite for subsequent visual recognition. Driver facial recognition was the focus of much research in the early stage of intelligent driving, so we will not dwell on it here. This article focuses instead on gesture recognition, the next focus of cockpit visual development.

An important part of algorithm development is the segmentation and labeling of gesture data. Gesture segmentation mainly means establishing usable recognition labels in the early stage through large-scale recognition across different lighting conditions, ages, genders, and gesture postures. These labels can be grouped into classes. For example, thumb postures fall into four categories: thumbs up, thumbs down, thumbs up and hooked, and thumbs hooked. Hand postures include pushing the hand inwards and outwards and rotating the hand clockwise and counterclockwise. Other gestures include sliding two fingers down, shrinking two fingers, speeding up all fingers, sliding left and right, rolling the hand forward and backward, and making a fist. Each of these gestures requires data augmentation to form a matching database. For gesture labeling, the 21 main bone nodes of the hand are labeled, including fingertips and bone connections.
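As one possible form of data augmentation on labeled hands, the 21 key points can be rotated and scaled slightly around their centroid. The sketch below assumes 2D key points given as `(x, y)` pairs; the rotation and scale ranges are illustrative values, not figures from the article.

```python
import math
import random

NUM_HAND_KEYPOINTS = 21


def augment_keypoints(keypoints, max_rotation_deg=15.0,
                      scale_range=(0.9, 1.1), rng=None):
    """Return a randomly rotated and scaled copy of 21 (x, y) hand key points.

    The transform is applied around the centroid so the hand stays in place.
    """
    if len(keypoints) != NUM_HAND_KEYPOINTS:
        raise ValueError(f"expected {NUM_HAND_KEYPOINTS} key points")
    rng = rng or random.Random()
    angle = math.radians(rng.uniform(-max_rotation_deg, max_rotation_deg))
    scale = rng.uniform(*scale_range)
    cx = sum(x for x, _ in keypoints) / len(keypoints)
    cy = sum(y for _, y in keypoints) / len(keypoints)
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    augmented = []
    for x, y in keypoints:
        dx, dy = x - cx, y - cy
        augmented.append((
            cx + scale * (cos_a * dx - sin_a * dy),
            cy + scale * (sin_a * dx + cos_a * dy),
        ))
    return augmented
```

The rotation range is kept small on purpose: a large rotation could turn one labeled posture into another, such as thumbs up into a sideways thumb, so the label would no longer match the augmented sample.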

3) Gesture Recognition Testing and Evaluation

Using visual data such as the driver’s gestures, facial expressions, eye movements, and actions, functional test cases are formed through effective segmentation, labeling, and clustering to validate functionality and evaluate the algorithm. For gesture recognition, the evaluation covers hardware platform computing power, product performance, load status, and performance under extreme conditions.

The functions comprise universal functions and specific functions. Universal functions mainly include selection, switching, and confirmation, while specific functions include telephone, multimedia, and the main interface. Response time, misrecognition rate, accuracy, and frame loss rate are the primary performance metrics, evaluated against influencing factors such as gesture direction, angle of rotation, environmental influences, operating range, and gesture duration. Performance must meet the requirements while the car is operating. This covers computing power and performance under normal driving and operating conditions, their change after long-term operation, and the impact of other vehicle functions such as navigation, multimedia, 3D rendering, animation, and video display on the remaining computing power and performance.
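The four primary metrics can be computed from per-trial test records. The sketch below shows one way to do this; the `GestureTrial` fields and the exact metric definitions (for example, counting a missed gesture as neither correct nor misrecognized) are assumptions made for illustration.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass(frozen=True)
class GestureTrial:
    expected: str             # gesture actually performed
    predicted: Optional[str]  # recognized gesture, or None if nothing was recognized
    response_time_ms: float   # time from gesture end to system response
    frames_sent: int          # camera frames delivered during the trial
    frames_processed: int     # frames the recognizer actually processed


def gesture_metrics(trials: list[GestureTrial]) -> dict[str, float]:
    """Aggregate accuracy, misrecognition rate, response time, and frame loss."""
    if not trials:
        raise ValueError("at least one trial is required")
    total = len(trials)
    correct = sum(t.predicted == t.expected for t in trials)
    wrong = sum(t.predicted is not None and t.predicted != t.expected for t in trials)
    sent = sum(t.frames_sent for t in trials)
    processed = sum(t.frames_processed for t in trials)
    return {
        "accuracy": correct / total,
        "misrecognition_rate": wrong / total,
        "mean_response_time_ms": mean(t.response_time_ms for t in trials),
        "frame_loss_rate": (sent - processed) / sent if sent else 0.0,
    }
```

Running the same trial set once at idle and once with navigation or 3D rendering active gives a direct comparison of how load affects these numbers.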

In addition, redundant testing can be conducted under extreme conditions. This includes testing multiple gestures in succession, non-standard gestures, interrupted or incomplete dynamic gestures, gestures from special groups, recognition performance while car functions run under high load, recognition performance when functions overlap, recognition performance when the driver and the front passenger gesture at the same time, and incorrect gestures.

## Summary

In human-computer interaction, various algorithms need to be optimized, including speech recognition, gesture recognition, and facial recognition. Speech enhancement, semantic understanding, and TTS are particularly important for further speech development. Gesture recognition algorithms require more effort on hardware platforms, model selection, computing optimization, and load evaluation. Facial recognition requires targeted optimization of eye movement recognition and expression algorithms. Finally, when integrating human factors, more attention needs to be paid to the comprehensive evaluation of multi-dimensional human factor data and related content.

Apart from algorithm optimization, overall human-computer interaction also depends on functional evaluation and application. This includes establishing a complete evaluation system, clarifying functional performance grading, benchmarking basic functions (a horizontal benchmarking process covering safety, accuracy, integrity, and experience), and effectively evaluating intelligent interaction performance (including accuracy, misidentification, response time, etc.) through the implementation of intelligent interaction landing sign levels. It also includes evaluating interaction functions with warnings under simulated load conditions and conducting longitudinal evaluations of intelligent interaction functions for individual mass-produced vehicles.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.