Autonomous Driving Simulation and Tesla’s AI Day

Author: Junchuan Zhang, an automatic driving simulation algorithm expert at Foresight Microelectronics

Autonomous driving simulation applies computer-based virtual simulation technology to the automotive field. It digitizes the real world and generates generalized variations of it, and a highly realistic, reliable simulation platform can accelerate the commercialization of autonomous driving. On real vehicles, designing and testing hardware such as lidar and cameras is a complex process, and every hardware upgrade incurs substantial labor and material costs. Training and testing autonomous driving models also requires a large volume of road data: according to an evaluation by the RAND Corporation, an autonomous driving system needs at least 11 billion miles (about 17.7 billion kilometers) of road validation before it meets the conditions for mass production. Many cities have now set up autonomous driving test zones, but their scenarios are limited, their road environments cannot cover all road conditions, and certain special collision scenarios cannot be obtained at all.

Simulation addresses part of the cost problem and the need for scenario diversity. For major automakers and autonomous driving companies worldwide, virtual simulation platforms are the primary testing method, and 90% of autonomous driving test scenarios are completed on simulation platforms. Real road sections are reproduced in a virtual environment through scenario modeling or digital twin methods, and various traffic flows are generated on top of them. This produces large volumes of mixed simulation data together with automatically generated ground-truth labels, which are used to train and test autonomous driving models and improve their generalization. In addition, simulation makes it easy to close the loop across data, labeling, training, and testing, which accelerates the iteration of autonomous driving algorithms.

Analysis of Autonomous Driving Simulation at Tesla AI Day

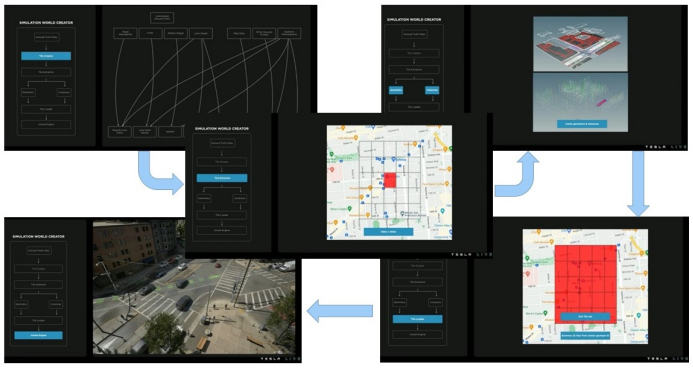

As the benchmark in autonomous driving, Tesla is also a leader in autonomous driving simulation. At last year’s AI Day, Tesla showed a highly realistic simulation platform with complete driving scenes: roads, vehicles, pedestrians, street lights, surrounding roads, grass, trees, irregular buildings, light sources, and more. At first glance it looks almost like real camera footage. At this year’s AI Day, Tesla again demonstrated its progress on the simulation platform and introduced the workflow and overall framework of its Simulation World Creator.

The simulation work presented this year mainly falls into the WorldSim category, meaning that all data used for simulation testing is virtual. LogSim, which models and replays real recorded data to test autonomous driving algorithms, was covered at last year’s AI Day. Note, however, that in the methods Tesla presented this year the boundary between WorldSim and LogSim is further blurred: World Creator also takes some real data as input, as described below.

Based on the presentation, the author summarizes the Tesla Simulation World Creator workflow as follows.

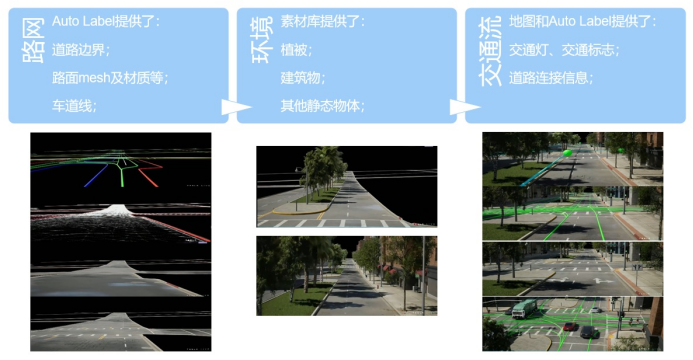

First, Tesla’s powerful Auto Labeling toolchain provides detailed road information for the location where the data was collected, including road boundaries, median boundaries, lane lines, and road connectivity. Tesla uses this HD-map-like information to build a road network model in Houdini: it generates road surface meshes from the road boundaries and renders the road surface and lane markings. Houdini is 3D modeling and visual effects software widely used in the film and game industries. The author speculates that Tesla has built an automated, Houdini-based pipeline for simulation scene modeling, which, as Tesla emphasized at the event, lets it generate simulation scenes efficiently.
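Tesla did not describe how its Houdini pipeline builds road surfaces. As a minimal sketch of the general idea only, assuming the auto-labeled left and right road boundaries have already been resampled to the same number of matching cross-section points, the surface between them can be triangulated as a strip. The function names are hypothetical and are not Tesla’s or Houdini’s API:

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]
Triangle = Tuple[int, int, int]


def road_strip_mesh(left: List[Point], right: List[Point]) -> Tuple[List[Point], List[Triangle]]:
    """Triangulate the road surface between two boundary polylines.

    Assumes left[i] and right[i] lie on the same cross-section of the road
    and that `left` is on the left-hand side of the travel direction.
    """
    if len(left) != len(right) or len(left) < 2:
        raise ValueError("boundaries need the same number of points (at least 2)")
    # Interleave vertices: left[i] -> index 2*i, right[i] -> index 2*i + 1
    vertices: List[Point] = []
    for l_pt, r_pt in zip(left, right):
        vertices.extend([l_pt, r_pt])
    triangles: List[Triangle] = []
    for i in range(len(left) - 1):
        l0, r0, l1, r1 = 2 * i, 2 * i + 1, 2 * i + 2, 2 * i + 3
        # Two triangles per segment, both counter-clockwise seen from above,
        # so their normals point up (+z)
        triangles.append((l0, r0, l1))
        triangles.append((r0, r1, l1))
    return vertices, triangles


def mesh_area(vertices: List[Point], triangles: List[Triangle]) -> float:
    """Sum of triangle areas (half the cross-product magnitude of two edges)."""
    total = 0.0
    for a, b, c in triangles:
        u = [vertices[b][k] - vertices[a][k] for k in range(3)]
        v = [vertices[c][k] - vertices[a][k] for k in range(3)]
        cross = (u[1] * v[2] - u[2] * v[1],
                 u[2] * v[0] - u[0] * v[2],
                 u[0] * v[1] - u[1] * v[0])
        total += 0.5 * math.sqrt(sum(x * x for x in cross))
    return total


# A straight 20 m road, 2 m wide, sampled every 10 m along both boundaries
left = [(0.0, 1.0, 0.0), (10.0, 1.0, 0.0), (20.0, 1.0, 0.0)]
right = [(0.0, -1.0, 0.0), (10.0, -1.0, 0.0), (20.0, -1.0, 0.0)]
verts, tris = road_strip_mesh(left, right)
print(len(verts), len(tris), mesh_area(verts, tris))  # prints: 6 4 40.0
```

Curved roads work the same way as long as the two boundaries are sampled at corresponding stations; a production pipeline would also generate UV coordinates and lane-marking geometry.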

After the road network model is generated, World Creator selects vegetation, buildings, and other static objects from Tesla’s large 3D model library to fill the median, complete the roadside scenery, and add richer visual detail, such as fallen leaves randomly covering the road edge. As Tesla said at last year’s AI Day, it has already built thousands of different 3D models, which can be combined according to certain rules into random yet plausible street scenes in many styles. This enriches the rendered visual information and serves the goal of producing large sensor datasets. Generating street scenery automatically also saves a great deal of technical-artist labor.

After the static scene is complete, World Creator adds traffic lights and signs to the road network based on map information, and takes road connectivity, such as driving direction, connections, and adjacency, from the Auto Labeling results as the basis for generating random traffic flow in the next step. Using this connectivity, AI-controlled traffic participants form random traffic flow in the simulated scene, placing the vehicle under test in a more realistic traffic environment.
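The rule-based combination of library assets described above can be illustrated with a minimal sketch. The asset names, spacing rules, and offsets below are hypothetical stand-ins, not Tesla’s library or rules; the point is only that a seeded random generator plus simple placement constraints yields varied but reproducible roadside scenes:

```python
import random
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical asset library: asset name -> minimum gap (m) before the next object
ASSET_LIBRARY: Dict[str, float] = {
    "oak_tree": 8.0,
    "pine_tree": 6.0,
    "bush": 3.0,
    "street_lamp": 25.0,
}


@dataclass(frozen=True)
class Placement:
    asset: str
    s: float       # distance along the road edge (m)
    offset: float  # lateral distance from the road edge (m)


def populate_roadside(length_m: float, seed: int) -> List[Placement]:
    """Place library assets along one road edge using simple spacing rules."""
    rng = random.Random(seed)  # same seed -> same scene variant
    placements: List[Placement] = []
    s = rng.uniform(0.0, 5.0)
    while s < length_m:
        asset = rng.choice(sorted(ASSET_LIBRARY))
        placements.append(Placement(asset, s, rng.uniform(1.0, 4.0)))
        # Rule: respect the asset's minimum gap, plus random jitter for variety
        s += ASSET_LIBRARY[asset] + rng.uniform(0.0, 4.0)
    return placements


# Same road geometry, two seeds -> two different street scenes
scene_a = populate_roadside(200.0, seed=1)
scene_b = populate_roadside(200.0, seed=2)
```

Changing only the seed while keeping the road geometry fixed produces a different street scene around an identical road structure, and storing the seed is enough to regenerate a scene exactly.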

Simulation matters for autonomous driving development because it can supply large amounts of data that real-world driving cannot. How, then, can a large number of rich scenes be generated? Tesla highlighted two approaches at the event:

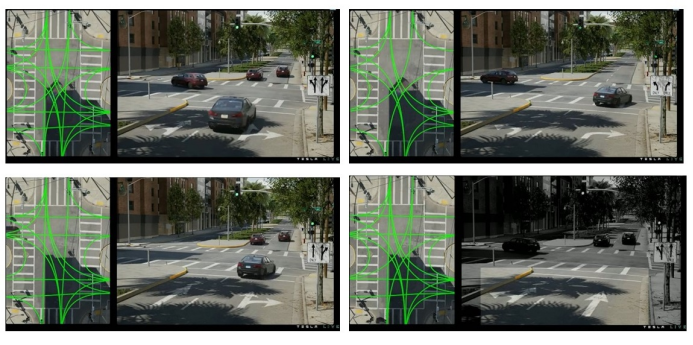

The first is random generation of roadside street scenes: as shown in Figure 3, six different street scenes are generated around junctions with the same structure.

The second is modifying road connectivity to suit algorithm testing needs. Figure 4 shows that changing the lane connections at a junction (together with the direction markings painted on the road) changes the direction of traffic flow, creating additional distinct simulation scenes.
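One way to picture this is to represent a junction’s connectivity as a lane graph in which each incoming lane lists the lanes it may continue onto. Random traffic flow then amounts to sampling routes over that graph, and editing one entry changes where the flow can go. The sketch below is a minimal illustration under that assumption; the lane names and helper functions are hypothetical, not taken from Tesla’s tooling:

```python
import random
from typing import Dict, List, Set

# Hypothetical lane graph: lane id -> lane ids a vehicle may continue onto
LaneGraph = Dict[str, Set[str]]


def sample_route(graph: LaneGraph, start: str, rng: random.Random, max_len: int = 10) -> List[str]:
    """Random walk over allowed connections: the route of one AI-controlled vehicle."""
    route = [start]
    while len(route) < max_len and graph.get(route[-1]):
        # sorted() makes the choice reproducible for a given seed
        route.append(rng.choice(sorted(graph[route[-1]])))
    return route


def with_connections(graph: LaneGraph, lane: str, successors: Set[str]) -> LaneGraph:
    """Copy of `graph` in which one lane's outgoing connections are replaced."""
    edited = {k: set(v) for k, v in graph.items()}
    edited[lane] = set(successors)
    return edited


# Four-way junction: the northern approach allows both turns and straight ahead
junction: LaneGraph = {
    "north_in": {"east_out", "south_out", "west_out"},
    "south_in": {"north_out", "east_out", "west_out"},
}

# Test variant: northern approach becomes straight-only (as if its arrows were repainted)
straight_only = with_connections(junction, "north_in", {"south_out"})

rng = random.Random(0)
exits_before = {sample_route(junction, "north_in", rng)[-1] for _ in range(200)}
exits_after = {sample_route(straight_only, "north_in", rng)[-1] for _ in range(200)}
```

Returning an edited copy instead of mutating the original keeps the base junction intact, so many test variants can be derived from one auto-labeled road network.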

Tesla then presented some of the engineering behind World Creator (Figure 5): a tile creator builds the 3D scenes, and a tile extractor splits the resulting geometry and instance assets into tiles, each covering one area. When needed, a tile loader loads multiple tiles at their corresponding map positions so that the simulation can run in Unreal Engine.
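Tesla gave no implementation details, but the idea of splitting a large world into tiles and loading only the tiles around the vehicle can be sketched with a uniform grid. The tile size, data layout, and function names below are assumptions for illustration; a real pipeline would store meshes and instance transforms per tile and stream them into Unreal Engine:

```python
import math
from collections import defaultdict
from typing import Dict, List, Set, Tuple

TileKey = Tuple[int, int]
TILE_SIZE_M = 100.0  # hypothetical tile edge length in meters


def tile_of(x: float, y: float, size: float = TILE_SIZE_M) -> TileKey:
    """Grid index of the tile containing world point (x, y)."""
    return math.floor(x / size), math.floor(y / size)


def extract_tiles(instances: List[Tuple[str, float, float]],
                  size: float = TILE_SIZE_M) -> Dict[TileKey, List[str]]:
    """'Tile extractor' step: bucket asset instances by the tile they fall in."""
    tiles: Dict[TileKey, List[str]] = defaultdict(list)
    for name, x, y in instances:
        tiles[tile_of(x, y, size)].append(name)
    return dict(tiles)


def tiles_to_load(x: float, y: float, radius: float, size: float = TILE_SIZE_M) -> Set[TileKey]:
    """'Tile loader' step: tiles touching the square of half-width `radius` around (x, y)."""
    i0, j0 = tile_of(x - radius, y - radius, size)
    i1, j1 = tile_of(x + radius, y + radius, size)
    return {(i, j) for i in range(i0, i1 + 1) for j in range(j0, j1 + 1)}


world = [("tree_01", 10.0, 10.0), ("house_07", 250.0, 30.0),
         ("sign_03", 120.0, -40.0), ("bridge_02", 900.0, 900.0)]
tiles = extract_tiles(world)
# Vehicle at (150, 50): only tiles within 60 m are loaded; the far bridge is skipped
needed = tiles_to_load(150.0, 50.0, radius=60.0)
loaded = {key: assets for key, assets in tiles.items() if key in needed}
```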

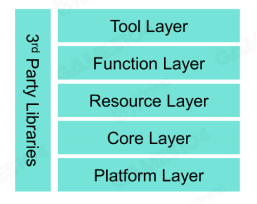

Architecture of Autonomous Driving Simulation Products

Drawing on the game engine architecture referenced in Tesla’s presentation, I will analyze the main architectural layers of an autonomous driving simulation product (WorldSim). Figure 6 shows the layered architecture of a modern game engine [1].

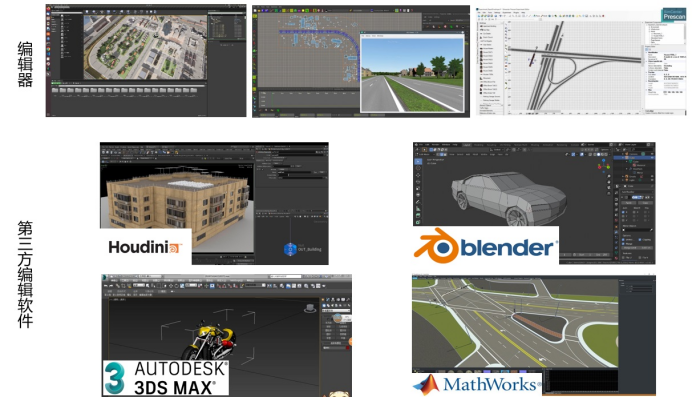

- The tool layer comprises the tools and capabilities needed to produce simulation scenes manually or automatically, as shown in Figure 7. It includes the simulation product’s own editor as well as the ability to import content produced by third-party tools, such as static maps, road models, building models, pedestrian models, and even dynamic scenes. A good tool layer lets people in different technical roles collaborate easily on building simulation scenes. As Tesla noted in its presentation, World Creator enables artists to create simulation scenes quickly.

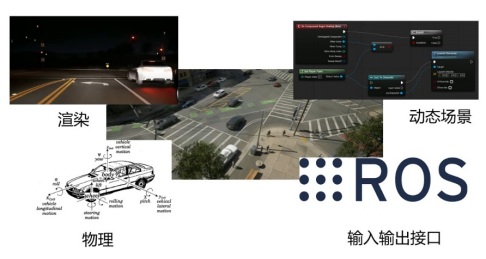

- The function layer provides the main capabilities the simulation product needs to achieve its testing goals, such as physics computation, rendering, animation, dynamic scenes, driving behavior simulation, ground-truth output, and interfaces to the system under test. As Tesla emphasized at last year’s AI Day, it combines real-time ray tracing with neural rendering for graphics.

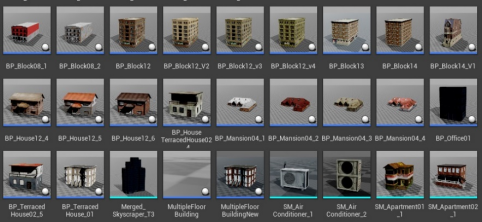

- The resource layer covers all data files needed for simulation testing and the ability to load them at simulation runtime. For example, a highly detailed vehicle model gives better visual results but may slow down loading. Figure 9 shows a set of static building models in the CARLA simulator.

- The core layer is the infrastructure of the simulation product, including math libraries, file parsers, and thread management tools. It determines many of the product’s basic behaviors, such as whether it can fully use parallel computing resources to simulate complex physical processes.

- The platform layer determines whether the simulation product can run on different computing platforms, such as Linux, Windows, cloud platforms, and specific real-time computing platforms.
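To make the resource layer’s loading trade-off concrete, here is a minimal sketch of a runtime asset cache that loads files lazily on first use and evicts the least recently used asset when a memory budget is exceeded. The loader callback, asset names, sizes, and budget are all hypothetical; real engines add asynchronous streaming and level-of-detail variants:

```python
from collections import OrderedDict
from typing import Callable, Tuple


class AssetCache:
    """Lazy-loading asset cache with least-recently-used (LRU) eviction."""

    def __init__(self, loader: Callable[[str], Tuple[object, int]], budget: int):
        self._loader = loader   # hypothetical: path -> (asset, size in MB)
        self._budget = budget   # memory budget, same unit as the sizes
        self._used = 0
        self._entries: "OrderedDict[str, Tuple[object, int]]" = OrderedDict()
        self.loads = 0          # how many times the loader actually ran

    def get(self, path: str) -> object:
        if path in self._entries:
            self._entries.move_to_end(path)  # cache hit: mark as most recently used
            return self._entries[path][0]
        asset, size = self._loader(path)     # cache miss: load from disk
        self.loads += 1
        self._entries[path] = (asset, size)
        self._used += size
        # Evict least recently used assets until back within budget,
        # but never evict the asset that was just requested
        while self._used > self._budget and len(self._entries) > 1:
            _, (_, evicted_size) = self._entries.popitem(last=False)
            self._used -= evicted_size
        return asset


sizes = {"car_high_detail": 60, "building": 50, "car_low_detail": 10}

# Detailed car model: it no longer fits next to the building and must be reloaded
cache = AssetCache(lambda p: (f"<mesh {p}>", sizes[p]), budget=100)
for path in ["car_high_detail", "building", "car_high_detail"]:
    cache.get(path)

# Low-detail car model: both assets fit, so the second request is a cache hit
cache2 = AssetCache(lambda p: (f"<mesh {p}>", sizes[p]), budget=100)
for path in ["car_low_detail", "building", "car_low_detail"]:
    cache2.get(path)
```

With the same budget, the detailed model causes three loads and the low-detail model only two, which is the visual quality versus loading cost trade-off in miniature.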

In addition, third-party libraries can fill some of these layers; for physics solving, for example, one can choose engines such as Bullet, ODE, or DART.
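A common way to keep a third-party physics engine swappable is to have the function layer depend on a small solver interface rather than on a specific engine. The sketch below assumes such an interface; `PhysicsSolver`, `Body`, and `ToyGravitySolver` are hypothetical names, and the toy solver only stands in for an adapter that would wrap Bullet, ODE, or DART:

```python
from dataclasses import dataclass
from typing import List, Protocol


@dataclass
class Body:
    z: float   # height above ground (m)
    vz: float  # vertical velocity (m/s)


class PhysicsSolver(Protocol):
    """Interface the function layer depends on; an engine adapter would implement it."""

    def step(self, bodies: List[Body], dt: float) -> None: ...


class ToyGravitySolver:
    """Stand-in solver: semi-implicit Euler under gravity, ground plane at z = 0."""

    g = -9.81

    def step(self, bodies: List[Body], dt: float) -> None:
        for b in bodies:
            b.vz += self.g * dt   # update velocity first (semi-implicit Euler)
            b.z += b.vz * dt      # then position, using the new velocity
            if b.z < 0.0:         # crude contact: stop on the ground plane
                b.z, b.vz = 0.0, 0.0


class Simulation:
    def __init__(self, solver: PhysicsSolver):
        self.solver = solver      # swapping engines means passing a different solver
        self.bodies: List[Body] = []

    def run(self, steps: int, dt: float) -> None:
        for _ in range(steps):
            self.solver.step(self.bodies, dt)


sim = Simulation(ToyGravitySolver())
sim.bodies.append(Body(z=10.0, vz=0.0))
sim.run(steps=1, dt=0.01)
```

Because `Simulation` only calls `step`, replacing the toy solver with a wrapper around a real engine would not require changes elsewhere in the function layer.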

Automatic Annotation in Autonomous Driving Simulation

The biggest advantage of an autonomous driving simulation platform is that it provides accurate ground truth. Sensor mounting positions and configurations can be adjusted freely, and automatic annotation tools regenerate the raw data and labels accordingly, so they keep matching the algorithm models as those models change.
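Because the simulator knows every object’s exact pose, labels can be recomputed for any sensor placement. As a minimal sketch, assuming an ideal pinhole camera with no lens distortion, a yaw-only mounting rotation, and a world frame with x forward, y left, and z up, a 3D box label can be turned into a 2D box label for a given camera as follows. Function names and conventions are illustrative assumptions, not any particular simulator’s API:

```python
import math
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


def box_corners(center: Vec3, size: Vec3, yaw: float) -> List[Vec3]:
    """Eight corners of a 3D box (length, width, height) rotated by `yaw` about the z axis."""
    cx, cy, cz = center
    length, width, height = size
    c, s = math.cos(yaw), math.sin(yaw)
    corners = []
    for dx in (-length / 2, length / 2):
        for dy in (-width / 2, width / 2):
            for dz in (-height / 2, height / 2):
                corners.append((cx + c * dx - s * dy, cy + s * dx + c * dy, cz + dz))
    return corners


def project_to_2d_box(corners: List[Vec3], cam_pos: Vec3, cam_yaw: float,
                      fx: float, fy: float, u0: float, v0: float
                      ) -> Optional[Tuple[float, float, float, float]]:
    """(u_min, v_min, u_max, v_max) of the projected corners, or None if a corner is behind the camera."""
    c, s = math.cos(cam_yaw), math.sin(cam_yaw)
    us, vs = [], []
    for x, y, z in corners:
        # World -> camera frame (x forward, y left, z up): translate, then undo the camera yaw
        rx, ry, rz = x - cam_pos[0], y - cam_pos[1], z - cam_pos[2]
        fwd = c * rx + s * ry
        left = -s * rx + c * ry
        if fwd <= 0.1:  # this sketch does no near-plane clipping
            return None
        # Pinhole model: image u grows to the right (-left), v grows downward (-up)
        us.append(u0 + fx * (-left) / fwd)
        vs.append(v0 + fy * (-rz) / fwd)
    return min(us), min(vs), max(us), max(vs)


car = box_corners(center=(20.0, 0.0, 1.0), size=(4.0, 2.0, 1.5), yaw=0.0)
# Same object, two hypothetical camera mounting heights -> two different 2D labels
low_mount = project_to_2d_box(car, (0.0, 0.0, 1.5), 0.0, 1000.0, 1000.0, 960.0, 540.0)
high_mount = project_to_2d_box(car, (0.0, 0.0, 2.0), 0.0, 1000.0, 1000.0, 960.0, 540.0)
```

The result is not clipped to the image bounds and ignores occlusion; a fuller labeling tool would handle both, but the key point stands: moving the camera only requires re-running the projection, not re-annotating.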

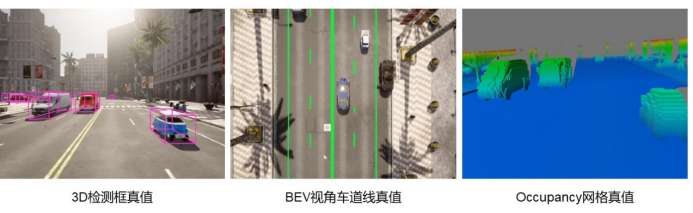

Autonomous driving perception is transitioning from 2D detection/segmentation networks to 3D detection/segmentation networks. Currently deployed algorithms mainly use 2D networks such as YOLO and U-Net, which are widely used for 2D object recognition. The ground truth needed to train them, such as 2D detection boxes, lane segmentation, and drivable-area segmentation, is easy to obtain from a simulation platform.
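Many simulators can render, alongside the RGB image, a per-pixel instance-ID image and a semantic-class image. Assuming such outputs are available (the array layout, instance IDs, and class codes below are hypothetical), 2D box labels and segmentation masks follow from a few lines of code:

```python
from typing import Dict, List, Set, Tuple

Box2D = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), inclusive pixel indices


def boxes_from_instance_map(instance_map: List[List[int]], background: int = 0) -> Dict[int, Box2D]:
    """Tight 2D box around the visible pixels of each instance id."""
    boxes: Dict[int, Box2D] = {}
    for y, row in enumerate(instance_map):
        for x, inst in enumerate(row):
            if inst == background:
                continue
            if inst in boxes:
                x0, y0, x1, y1 = boxes[inst]
                boxes[inst] = (min(x0, x), min(y0, y), max(x1, x), max(y1, y))
            else:
                boxes[inst] = (x, y, x, y)
    return boxes


def class_mask(semantic_map: List[List[int]], class_ids: Set[int]) -> List[List[int]]:
    """Binary mask (1 = pixel belongs to one of `class_ids`), e.g. for drivable-area labels."""
    return [[1 if c in class_ids else 0 for c in row] for row in semantic_map]


# Tiny 3x4 example image; ids and class codes are made up
instance_map = [
    [0, 0, 7, 7],
    [0, 3, 7, 7],
    [3, 3, 0, 0],
]
ROAD, LANE_MARKING, VEHICLE = 1, 2, 10  # hypothetical class codes
semantic_map = [
    [ROAD, ROAD, VEHICLE, VEHICLE],
    [ROAD, VEHICLE, VEHICLE, VEHICLE],
    [VEHICLE, VEHICLE, LANE_MARKING, ROAD],
]
boxes = boxes_from_instance_map(instance_map)
drivable = class_mask(semantic_map, {ROAD, LANE_MARKING})
```

Since the instance image only contains visible pixels, these boxes cover the visible extent of each object; amodal boxes (including occluded parts) would instead come from projecting the 3D labels.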

In recent years, with the rise of Transformer models, 3D detection/segmentation networks based on multi-sensor fusion and BEV (bird’s-eye-view) space have proliferated. Their ground-truth labels, such as 3D bounding boxes, vectorized lane lines, and occupancy grids, are difficult to annotate manually but can be generated automatically and accurately by code in a simulation platform, which is essential for training and testing these networks.
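As one example of such code, an occupancy-grid label can be rasterized directly from the simulator’s object list. The sketch assumes boxes are given in the ego frame as (x, y, length, width, yaw) and marks a cell occupied when its center falls inside a box; it ignores height and is a brute-force illustration, not an efficient or standard implementation:

```python
import math
from typing import List, Tuple

# (x, y, length, width, yaw) in the ego frame: x forward, y left, meters / radians
Box = Tuple[float, float, float, float, float]


def bev_occupancy(boxes: List[Box], range_m: float, cell_m: float) -> List[List[int]]:
    """Rasterize oriented boxes into an n x n BEV grid covering [-range_m, range_m) in x and y.

    grid[i][j] = 1 if the center of cell (i along x, j along y) lies inside any box.
    Brute force over all cells; fine for a sketch, slow for large grids.
    """
    n = int(round(2 * range_m / cell_m))
    grid = [[0] * n for _ in range(n)]
    for bx, by, length, width, yaw in boxes:
        c, s = math.cos(yaw), math.sin(yaw)
        for i in range(n):
            px = -range_m + (i + 0.5) * cell_m
            for j in range(n):
                py = -range_m + (j + 0.5) * cell_m
                dx, dy = px - bx, py - by
                # Express the cell center in the box's own frame
                lx = c * dx + s * dy
                ly = -s * dx + c * dy
                if abs(lx) <= length / 2 and abs(ly) <= width / 2:
                    grid[i][j] = 1
    return grid


# A 4 m x 2 m car at the ego origin, heading straight, then rotated by 90 degrees
straight = bev_occupancy([(0.0, 0.0, 4.0, 2.0, 0.0)], range_m=10.0, cell_m=1.0)
turned = bev_occupancy([(0.0, 0.0, 4.0, 2.0, math.pi / 2)], range_m=10.0, cell_m=1.0)
```

Both grids mark 8 cells; the heading only changes whether the footprint is long along x (4 rows) or along y (2 rows).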

As autonomous driving perception algorithms develop rapidly, massive perception datasets with matching ground-truth labels are becoming increasingly important. With more advanced computing hardware and improved modeling techniques, autonomous driving simulation toolchains will bring even greater benefits to the industry, accelerating algorithm development and product testing while saving time and money.

References

[1] GAMES104: Modern Game Engines: From Beginner to Practice. https://games104.boomingtech.com/

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.