Author: Zheng Senhong, Tian Xi

The Tesla AI Day has finally arrived.

This time, Musk brought three teams: the humanoid robot Optimus team, the Autopilot team, and the DOJO supercomputer team. The lineup was so large that it barely fit on the stage.

Firstly, Optimus was showcased. It can not only walk freely, but also grab and move some small objects.

In addition, because it runs on the same FSD computer used in Tesla cars, Optimus also has a certain degree of artificial intelligence. Musk said the robot will soon go into production, with volume eventually expected to reach millions of units and the price to fall below $20,000.

The Autopilot team brought something new to its autonomous driving presentation at Tesla AI Day. They introduced “occupancy” into autonomous driving, mapping the real world into vector space to achieve better vehicle planning.

At the same time, Tesla’s “data-driven” approach has reached new heights: the company has accumulated 30PB of data and trains one model roughly every 8 minutes.

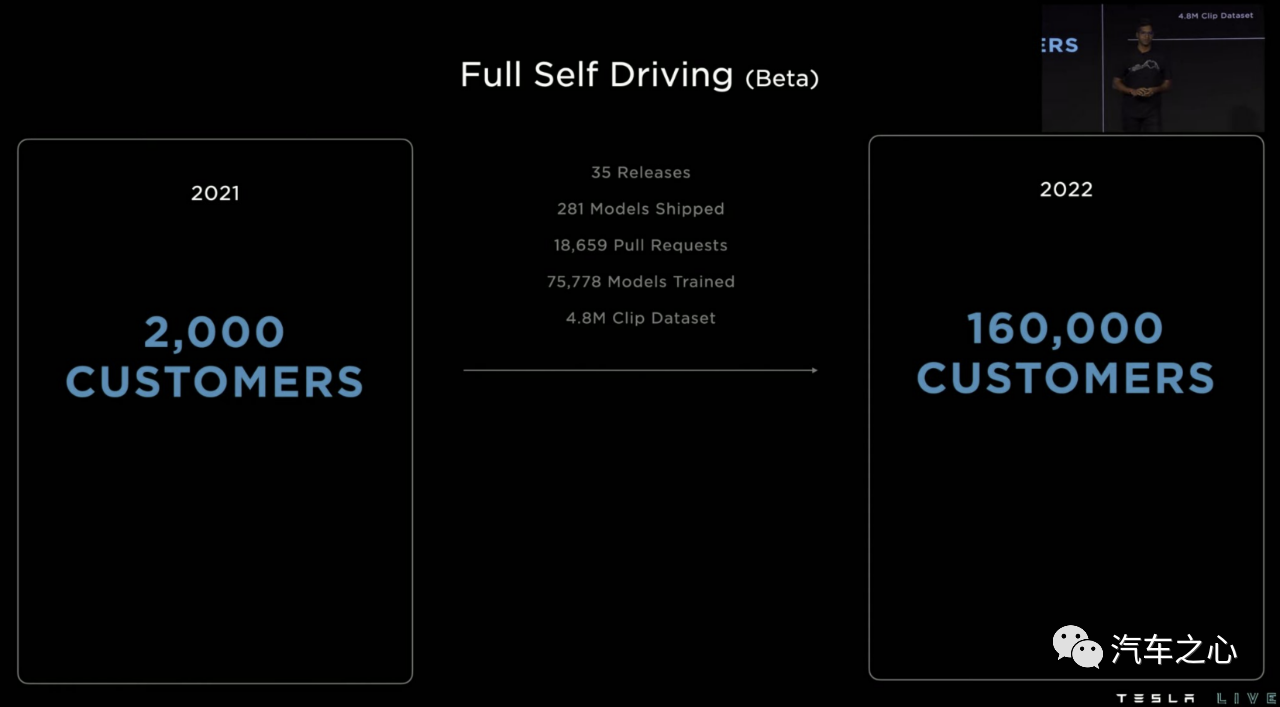

Now, the FSD Beta has iterated to version 10.69.2.2, and the number of test participants has reached 160,000.

According to Musk’s latest pledge, it will be opened to the world by the end of this year.

On the other side, the supercomputer DOJO also brought good news. It not only provides super-high computing power but also has advantages such as bandwidth comparable to Nvidia, reduced latency, and cost savings. It is reported that Tesla will complete the construction of seven EXA PODs in Palo Alto, USA in the first quarter of 2023.

It is worth mentioning that the AI Day has also become Tesla’s recruitment promotion event. Musk repeatedly expressed his desire to attract talented people to join, “Silicon Valley’s big companies may disappoint you, but Tesla won’t. Here, you will enjoy unprecedented freedom.”

“Occupancy” Introduced into Autonomous Driving, FSD Beta Reaches 160,000 Testers

At this year’s AI Day, the latest Tesla humanoid robot, Optimus, undoubtedly attracted a lot of attention.

Even Ashok, the person in charge of Autopilot, couldn’t help but sweat and jokingly said, “I’ll try my best not to be awkward,” during his speech after taking over the part.

Even so, judging by the time allotted and the number of speakers, autonomous driving was still the centerpiece of the event. Unlike previous AI Days, which focused on breakthroughs in perception technology, this year’s presentations focused more on planning.

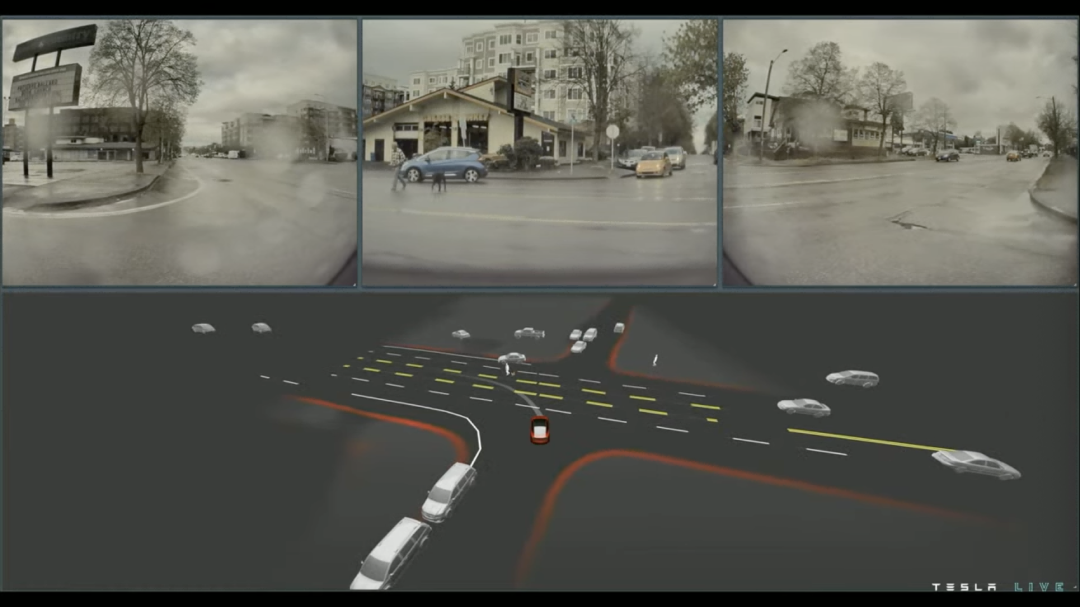

Ashok used the diagram above to summarize the autonomous driving content of this AI Day. As it shows:

The training infrastructure on the left, the AI compiler and inference engine on the right, and the training data below all feed the neural network, which predicts occupancy along with geometric elements such as lanes and objects. These outputs ultimately drive the path planning of the autonomous vehicle.

Take passing through traffic at an intersection as an example. The vehicle is waiting to turn left into an east-west lane, while pedestrians are crossing the road and a constant stream of traffic is flowing in the target lane. How should the autonomous vehicle proceed?

From a strategic point of view, letting the traffic flow first and waiting for the pedestrians to walk far away before proceeding is undoubtedly the best choice. However, for Tesla, which relies only on 8 cameras for perception, this seemingly ordinary operation is not so simple.

This involves judging the interrelationships between many objects (including people) and how intelligent vehicles should coordinate planning.

Tesla’s approach is to first form a “visible space” based on 3D positioning from the video streams of the 8 cameras, which is the region covered by the so-called occupancy network. The area scanned by the system is displayed as blocks that outline object edges, similar to how buildings are represented in the game “Minecraft,” and is rendered in vector space to represent the real world.
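To make the block-style representation concrete, here is a minimal sketch of an occupancy grid. It is not Tesla’s network, which predicts occupancy directly from camera video. It only illustrates the output format, under the assumption that 3D points are already available. The function name `voxelize`, the 0.5 m voxel size, and the grid bounds are all hypothetical.

```python
import math

def voxelize(points, voxel_size=0.5, grid_min=(-10.0, -10.0, 0.0),
             grid_shape=(40, 40, 8)):
    """Return the set of (i, j, k) voxel indices containing at least one point.

    All parameters are illustrative assumptions, not values from Tesla.
    """
    occupied = set()
    for p in points:
        # Convert metric coordinates to integer voxel indices.
        idx = tuple(math.floor((c - lo) / voxel_size)
                    for c, lo in zip(p, grid_min))
        # Ignore points that fall outside the grid bounds.
        if all(0 <= i < n for i, n in zip(idx, grid_shape)):
            occupied.add(idx)
    return occupied

# Two nearby points share a voxel, one lies elsewhere, one is out of range.
points = [(0.2, 0.3, 0.1), (0.4, 0.1, 0.2), (5.0, -3.0, 1.0), (100.0, 0.0, 0.0)]
occupied = voxelize(points)
print(sorted(occupied))
```

Each occupied index corresponds to one “block” in the Minecraft-like view. A planner can then treat those blocks as space to avoid without first having to classify what the object is.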

At the same time, there are some areas that the cameras cannot cover, such as regions occluded by obstacles. In these cases, Tesla FSD can predict likely road edges, road markings, and so on, through its AI compiler and inference engine.

It is worth mentioning that for vehicles and pedestrians, Tesla also considers their kinematic states, such as speed and acceleration, to make multimodal predictions. This is far more complex than anything traditional object detection networks can handle.
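As a rough illustration of how kinematic state can feed multimodal prediction, the sketch below rolls one agent forward under several hypothesized behaviors using simple constant-acceleration motion. The three modes, their acceleration values, and the function `rollout` are assumptions for illustration. Tesla’s actual predictions come from a learned network, not from this kind of hand-written model.

```python
def rollout(position, speed, accel, horizon=3.0, dt=1.0):
    """Predict 1D positions along a path under constant acceleration.

    Speed is clamped at zero so a braking agent stops instead of reversing.
    """
    trajectory = []
    pos, v = position, speed
    for _ in range(int(round(horizon / dt))):
        v_next = max(0.0, v + accel * dt)
        # Trapezoidal update: average of speed at start and end of the step.
        pos += 0.5 * (v + v_next) * dt
        v = v_next
        trajectory.append(pos)
    return trajectory

# Hypothetical behavior modes (accelerations in m/s^2).
modes = {"keep": 0.0, "brake": -3.0, "accelerate": 1.5}
predictions = {name: rollout(0.0, 10.0, a) for name, a in modes.items()}
for name, traj in predictions.items():
    print(name, traj)
```

A planner would weigh these candidate futures against its own intended path, for example by waiting if any plausible mode puts the other agent in the intersection at the same time.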

Autonomous driving cannot do without the “feeding” of data.

Corresponding to Tesla’s training data, it is divided into three parts: Auto Labeling, Simulation, and Data Engine.

According to members of the Autopilot team, Tesla has accumulated massive video clips, and the data is 30PB, which requires 100,000 GPUs to work simultaneously for 1 hour to process.
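For a sense of scale, the figures above imply a per-GPU throughput that can be worked out with simple arithmetic. This sketch assumes decimal units (1 PB = 10^15 bytes) and an even split of the data across GPUs. Both are simplifying assumptions, not details from the presentation.

```python
PB = 10**15  # decimal petabyte, an assumption
GB = 10**9

dataset_bytes = 30 * PB
gpu_hours = 100_000 * 1  # 100,000 GPUs working for 1 hour

gb_per_gpu_hour = dataset_bytes / gpu_hours / GB
mb_per_gpu_second = gb_per_gpu_hour * 1000 / 3600

print(f"{gb_per_gpu_hour:.0f} GB per GPU-hour")
print(f"{mb_per_gpu_second:.1f} MB per GPU-second")
```

Under these assumptions, each GPU would need to work through about 300 GB of video in its hour.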

Training on these clips requires a powerful annotation network. It not only needs to be efficient but also needs to match human annotation in quality, diversity, and scalability.

To this end, Tesla adopts a “human-machine collaboration” approach, which not only uses manual labeling but also employs automatic labeling.

Meanwhile, the Autopilot team is strengthening its automatic labeling capability. For example, by combining it with the occupancy network and kinematic information, FSD can become more intelligent and efficient.

“We may need 100,000 clips to train FSD, which is like a dedicated annotation factory that can make our technical foundations more solid.”

Real-world collected data is insufficient to cover all scenarios. To improve the functionality of FSD, simulation and modeling are also needed.

According to Autopilot team members, Tesla can currently generate a virtual scene that is very close to the real world in just 5 minutes. For example, in a San Francisco street scene, pavement markings, pedestrians, vehicles, traffic lights, and even trees and leaves can be changed at any time.

In addition, for different areas such as cities, suburbs, and rural areas, Autopilot can also establish lifelike road scenes. If designed manually, it may take one or two weeks, or even several months.

Data Engine is also a highlight of autonomous driving technology at this year’s AI Day.

According to Autopilot member Kate Park, the data engine increases determinism by inputting data into the neural network to better solve real-world prediction problems.

She described the problem of determining whether a vehicle has stopped while turning at an intersection: if a vehicle slows down in the turn, how should that be handled, and can it be judged as stopped?

To address this, Tesla built many neural networks for evaluation, collected 14,000 similar videos of this situation from the current fleet or from simulation, and added them to the training set to help autonomous vehicles make better predictions and judgments.

In the demonstration video, Tesla used different colors to mark the state of vehicles turning at different intersections. “Red may mean a stop state, and, as the results show, our current judgment is already pretty accurate.”

In fact, all of the features mentioned above have been pushed out in Tesla’s latest FSD Beta 10.69.2.2. The number of FSD Beta testers has reportedly expanded to 160,000, up from only 2,000 last year.

Ashok said that over more than a year of R&D, Tesla has trained 75,000 neural network models, which is equivalent to training roughly one model every 8 minutes.
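The quoted training rate can be sanity-checked with a short calculation. This sketch assumes the 75,000 models were trained over roughly one year. That window is an assumption made here because it is the one consistent with a rate of minutes per model.

```python
MINUTES_PER_DAY = 24 * 60

def minutes_per_model(num_models, days):
    """Average wall-clock minutes between finished training runs."""
    return days * MINUTES_PER_DAY / num_models

# Assumed window: 365 days.
interval = minutes_per_model(75_000, 365)
print(f"{interval:.2f} minutes per model")
```

Under that assumption the average works out to about 7 minutes per model, in the same ballpark as the quoted 8 minutes.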

At the same time, Musk also revealed that at this rate, Tesla can launch FSD globally by the end of 2022, not just in the US and Canada.

“We have already prepared technically and can adapt to different road conditions of any country.” As for regulatory approval issues, Tesla is also in close communication with local governments.

According to Musk’s previous description, the FSD test population will expand to 1 million people by the end of this year.

DOJO Ready to Decrease Costs and Increase Efficiency to Compete with NVIDIA

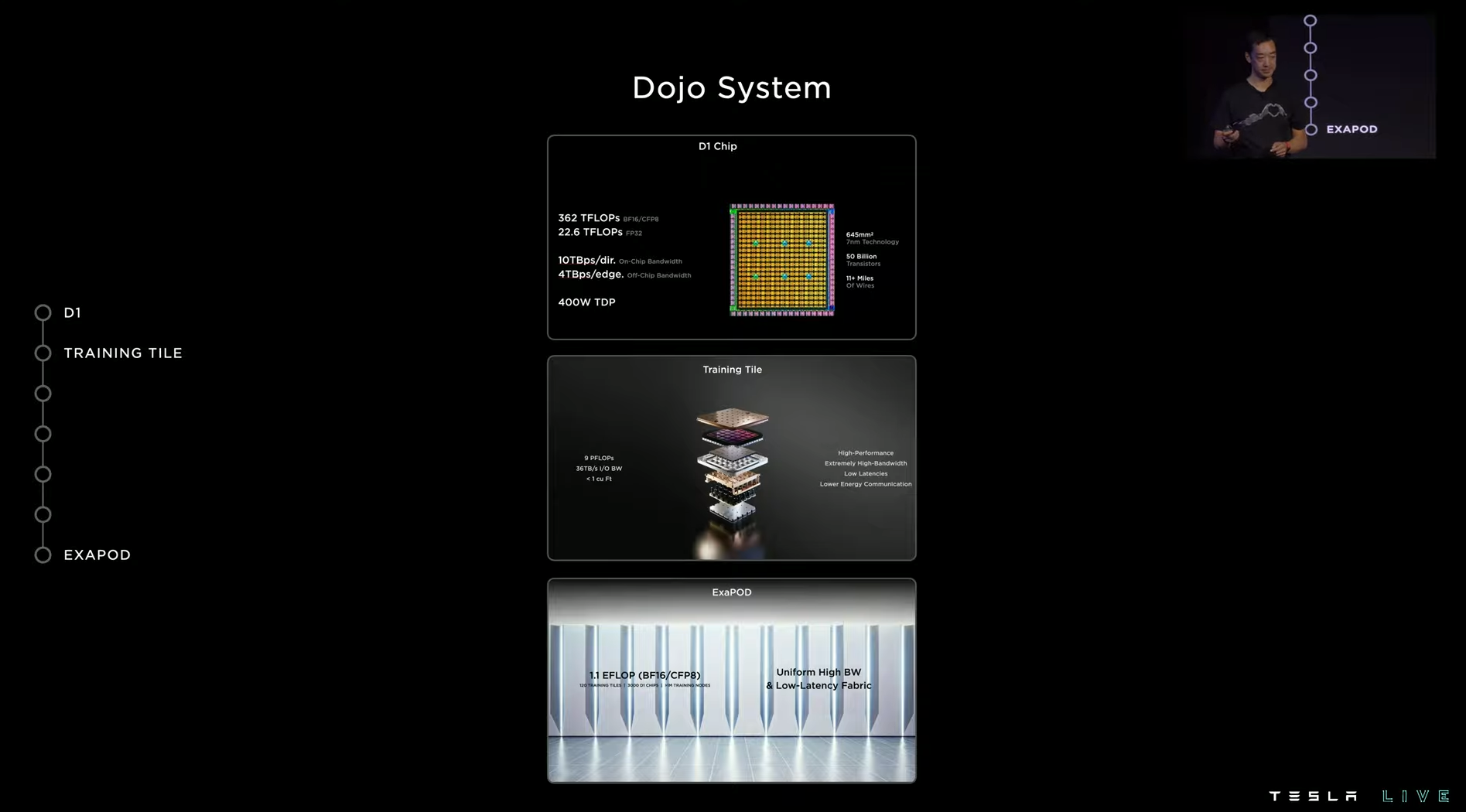

As a supercomputer for Tesla’s cloud training, DOJO is undoubtedly the highlight of Tesla AI Day.

Musk first mentioned the concept of DOJO in 2019, calling it a performance beast that can handle massive amounts of data for “unsupervised” annotation and training. The system can analyze a sample set on its own through the statistical patterns among samples and improve efficiency without manual annotation.

In other words, DOJO’s mission is to train Tesla’s pure vision autonomous driving with the highest efficiency.

At the Tesla AI Day and Hot Chips 34 conferences last year, Tesla disclosed the architecture and detailed parameters of DOJO, including the interface processor (DIP) that builds a bridge between the host CPU and training processing, and the D1 chip with AI computing power up to 362TFLOPs.

A year later, Tesla has not only brought more landmark research results but also plans to officially mass-produce the DOJO EXA POD in the first quarter of 2023.

Pete Bannon, Tesla’s Autopilot VP of Hardware Engineering, said that DOJO’s progress this year centers on the composition of the chipsets behind DOJO and on more efficient compilation compared with last year’s testing.

“Time-saving, labor-saving, cost-saving, and space-saving,” the appearance of EXA POD follows Musk’s emphasis on first principles.

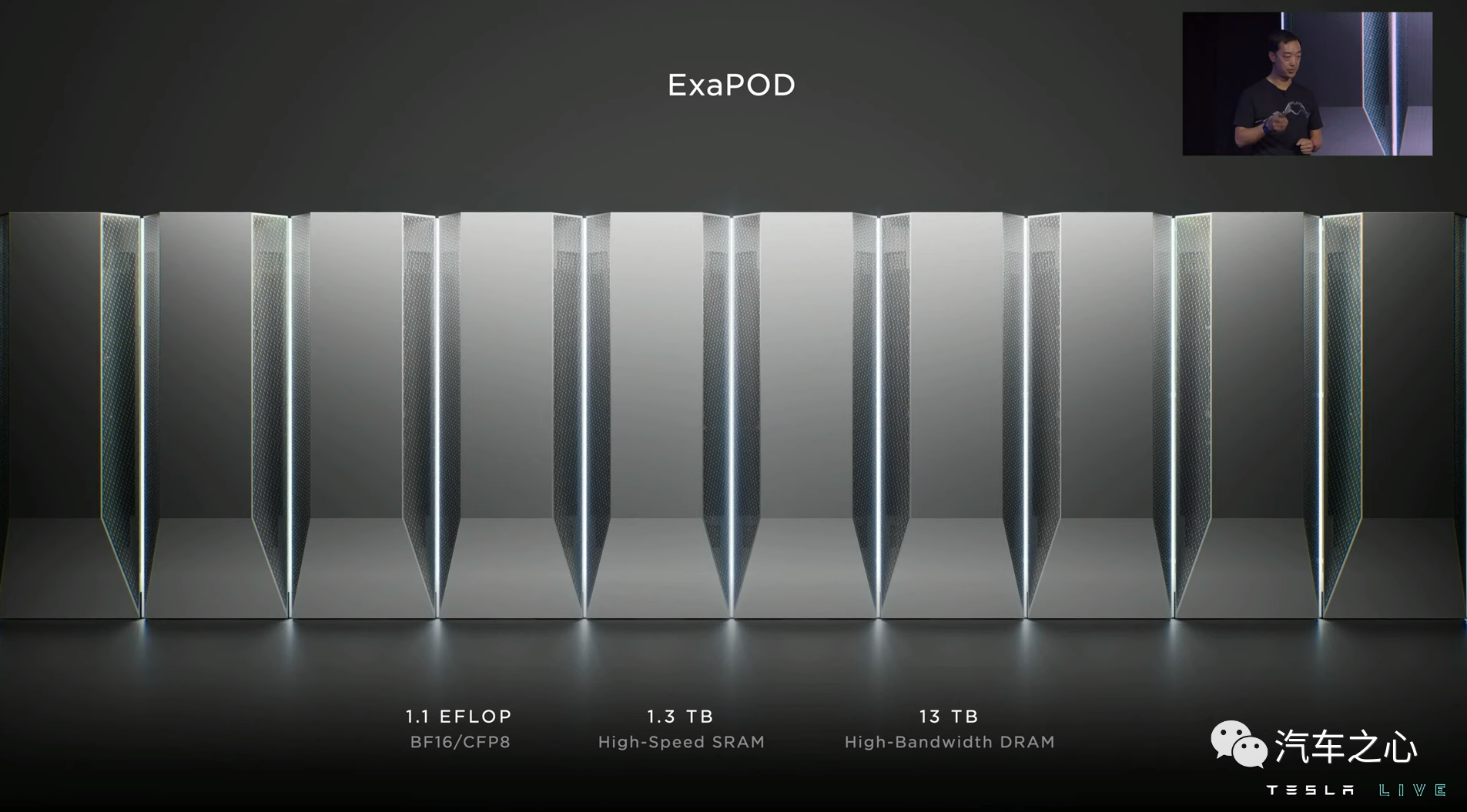

What is EXA POD?

Simply put, if DOJO is viewed as a supercomputer cluster, then EXA POD can be seen as a group member within this cluster.

An EXA POD will be composed of two layers of computing trays and storage systems, each tray including 6 D1 chips, 20 interface processors, 1.3TB high-speed SRAM, 13TB high-bandwidth DRAM, and 1.1 EFLOP computing power.

In addition, to solve the coefficient of thermal expansion (CTE) problem caused by the EXA POD’s high level of integration, Tesla iterated through 14 versions in 24 months and ultimately adopted a self-developed voltage regulation module (VRM), reducing CTE by more than 50% and improving performance indicators more than threefold.

Tesla engineers state that besides having ultra-high computing power for AI training, EXA POD also has advantages such as expanded bandwidth, reduced latency, and cost savings.

For example, in the Batch Norm test, EXA POD shows an order-of-magnitude latency advantage over the GPU.

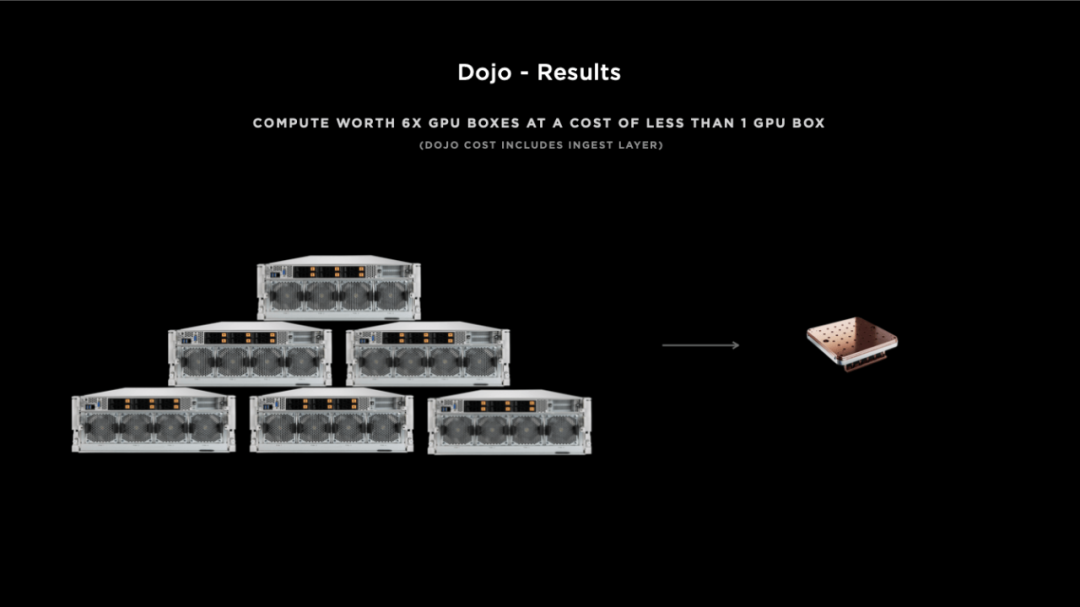

Secondly, when running the classic image model ResNet-50, EXA POD’s computing power surpasses that of the Nvidia A100.

Finally, in automatic annotation algorithm tests, EXA POD’s performance doubles compared to Nvidia A100.

One EXA POD costs about as much as six GPUs. Four EXA PODs can replace 72 GPU racks, which means that, at equal cost, EXA POD delivers 4 times the performance and 1.3 times the energy efficiency in one-fifth of the space.

In conclusion, Tesla’s DOJO project is almost at the end of its development. This also means that Musk has once again outlined the final form of FSD, as real-world visual AI is the only solution for countless edge cases. AI chips are merely an embellishment.

This fact was recognized by Musk himself: only by solving the problem of real-world AI can the problem of autonomous driving be solved – unless there is strong AI capability and supercomputing power, it is impossible to achieve.

This is also why Tesla initiated the DOJO project. Take AlphaGo, which specializes in Go (Weiqi), as an example: after training with human involvement, it was able to defeat the world’s top Go masters.

DOJO can be regarded as the AlphaGo of autonomous driving: it can automatically process and label data and find optimal solutions to problems by analyzing massive amounts of Tesla fleet data through deep learning.

According to the plan, Tesla will complete the construction of seven EXA PODs in Palo Alto, USA in Q1 2023.

This means that the DOJO supercomputer will become one of the most powerful supercomputers in the world, reducing the months of annotation work to one week.

“At least it can help you train models online with less money and faster,” Musk added. The DOJO supercomputer will be offered to other users as a paid cloud service, much like Amazon Web Services.

Tesla, a Byword for a New Era

In just a year, Tesla has turned last year’s humanoid robot Easter egg into reality, covering the engineering, the development logic, and the cost, and the team of engineers behind it is full of confidence.

It is worth mentioning that the perception and technology part of Tesla humanoid robot basically follows the Tesla FSD solution, including the Tesla D1 chip-integrated system as the “brain” and the eight Autopilot cameras on the face.

Tesla engineers said that Tesla humanoid robots collect perception data through the head camera, and then recognize and execute commands through FSD visual algorithms.

In other words, Tesla humanoid robots do not simply execute commands according to traditional programs, but learn autonomously through AI models.

As Musk said, through AI Day, “we hope that the outside world’s understanding of Tesla can go beyond electric vehicles, and we are the pioneers of the real-world AI field.”

At the event, Musk reiterated that Tesla holds AI Day and showcases the robot prototype to “persuade talented people like you around the world to join Tesla and help us make it a reality.”

Indeed, with firepower like this, Tesla may already be a byword for a new era.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.