Author: Zheng Senhong

The debate over which perception route autonomous driving should take has long been a focus of the industry.

Although Tesla’s pure-vision solution leads the industry, multi-sensor fusion is widely regarded as the key to safe, redundant autonomous driving.

Behind this dispute lies more than demanding requirements for perception hardware. The real test is the algorithm: how to get the most out of that hardware.

To preserve high-dimensional perception data and reduce data loss, the “pure vision” players use a single-pass front fusion algorithm.

The “multi-sensor fusion” players put safety first: each sensor outputs its own perception results, which are then aggregated and processed to reach a conclusion. This is the back fusion algorithm.

Each algorithm has its own strengths and weaknesses: the former demands more hardware computing power, while the latter cannot get the most out of any single sensor.

As autonomous driving moves closer to everyday driving scenarios, the requirements for external environment detection and perception will only become higher.

What are the front and back fusion algorithms?

Autonomous driving is a technology that integrates perception, decision-making, and control feedback.

Environmental perception, the first link in the autonomous driving chain, connects the vehicle to its environment.

The overall performance of the autonomous driving system largely depends on the quality of the perception system.

Nowadays, the multi-sensor fusion perception based on multiple sources of data has become the mainstream solution in the industry, making the final perception results more stable and reliable, and better utilizing the advantages of each sensor:

- Although cameras can perceive rich textures and color information, their ability to perceive distance from obstacles is weak, and they are easily affected by lighting conditions.
- Millimeter-wave radar can work around the clock, providing accurate distance and speed information, as well as a longer detection distance, but with lower resolution and unable to provide object height information.
- Although LiDAR can perceive distance information more accurately, it is unable to obtain rich texture and color information due to the sparse point cloud collection.

The fusion of multiple sensors can play to their strengths and avoid their weaknesses, improving the overall vehicle safety coefficient. For example, the fusion idea of millimeter-wave radar and camera can quickly eliminate a large number of invalid target areas, greatly improving recognition speed.

The fusion of cameras and LiDAR uses visual image information for target detection, from which the obstacle position can be obtained, and the obstacle type information can also be identified.

Because autonomous driving is highly complex and safety-critical, perception must rely on fusing data from multiple sensors. There are various ways to fuse multi-sensor information, such as front-end fusion and back-end fusion.

Front-end fusion means that a single set of algorithms uniformly processes the raw data from LiDAR, cameras, and millimeter-wave radar, producing one result as if a single “super eye” had seen the scene through a “super algorithm.”

Tesla is a representative player of front-end fusion.

Tesla unifies the different raw data from millimeter-wave radar, cameras, and other sensor devices and integrates them into a set of super sensors that surround the entire car 360°. The AI algorithm then completes the entire perception process.

In Tesla’s solution, all sensor devices are only responsible for providing raw data, while the algorithm is responsible for integrating the raw data.

Musk believes that when millimeter-wave radar and vision are inconsistent, vision has better accuracy, so pure vision is better than the existing sensor fusion method.

The advantage of this approach is that the result does not hinge on any single sensor’s signal, which is why Tesla removed the millimeter-wave radar outright. However, it places extremely high demands on data timeliness and hardware computing power, and it calls for greater safety redundancy: if the algorithm makes a misjudgment, the control layer downstream will still issue incorrect instructions.

Back-end fusion lets each sensor do its own job: ultrasonic radar, cameras, and millimeter-wave radar each perceive independently, using different algorithms.

Once every sensor has generated its target data (such as detected targets and predicted target speeds), these target lists are verified and compared against one another. In this process, the system filters out low-confidence or invalid data and then merges the objects that were recognized, completing the perception process.

This is also the mainstream fusion solution in the industry.

For example, Huawei’s ADS intelligent driving solution uses different algorithms for independent perception of sensors such as millimeter-wave radar and cameras and generates independent information.

For example, LiDAR sees a Corgi, millimeter-wave radar sees a dog, and the camera sees a small animal. After integrating and processing this information, the system makes comprehensive judgments.
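The back-end fusion flow described above can be sketched in a few lines of Python. This is only an illustrative sketch, not Huawei’s or any vendor’s implementation: the `Detection` type, the `late_fuse` function, the confidence threshold, and the 1.5 m distance gate are all assumptions made for the example.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Detection:
    sensor: str        # which sensor produced this target
    x: float           # longitudinal position in the vehicle frame, metres
    y: float           # lateral position in the vehicle frame, metres
    label: str         # the sensor's own class guess
    confidence: float  # 0.0 to 1.0


def late_fuse(detections, min_conf=0.3, gate_m=1.5):
    """Drop weak detections, then merge detections that lie close together."""
    # Step 1: filter out low-confidence targets (hypothetical threshold).
    kept = [d for d in detections if d.confidence >= min_conf]

    # Step 2: greedy clustering, seeded by the most confident detections.
    clusters = []
    for det in sorted(kept, key=lambda d: -d.confidence):
        for cluster in clusters:
            seed = cluster[0]  # highest-confidence member of the cluster
            if hypot(det.x - seed.x, det.y - seed.y) <= gate_m:
                cluster.append(det)
                break
        else:
            clusters.append([det])

    # Step 3: one fused object per cluster, position weighted by confidence.
    fused = []
    for cluster in clusters:
        total = sum(d.confidence for d in cluster)
        fused.append({
            "x": sum(d.x * d.confidence for d in cluster) / total,
            "y": sum(d.y * d.confidence for d in cluster) / total,
            "label": cluster[0].label,  # trust the most confident sensor
            "sensors": sorted(d.sensor for d in cluster),
        })
    return fused


detections = [
    Detection("lidar", 12.1, 0.4, "corgi", 0.9),
    Detection("radar", 12.4, 0.2, "dog", 0.6),
    Detection("camera", 11.8, 0.5, "small animal", 0.7),
    Detection("radar", 40.0, -3.0, "unknown", 0.1),  # below threshold
]
objects = late_fuse(detections)
```

Here the three nearby detections collapse into one object that carries the label of the most confident sensor, while the weak radar return is discarded before fusion. A production system would typically use tracking and probabilistic association rather than a fixed distance gate.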

Of course, the back-end fusion perception framework also has inherent shortcomings:

Single sensors may have limitations and may cause false detection and missed detection under specific conditions. For example, cameras are not good at judging distance and position, and radar is not good at judging color and texture. The system needs to verify their information with each other to achieve higher credibility.

DeepRoute.ai (Yuanrongqi) CEO Zhou Guang once gave an example of how the perception abilities of front and back fusion differ:

“Suppose you hold a mobile phone in your hand. The lidar can only see one corner of the phone, the camera can only see the second corner, and the millimeter-wave radar can see the third corner.

If you use the rear fusion algorithm, since each sensor can only see a part of it, the object is very likely not to be recognized and ultimately filtered out.

But in the front fusion, since it collects all the data, it is equivalent to being able to see the three corners of this phone. For the front fusion, it is very easy to recognize that it is a mobile phone.”
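Zhou Guang’s phone example can be turned into a toy program. The sketch below is a deliberate simplification: the `recognize` rule (an object counts as a phone only when at least three distinct corners are visible) and the corner coordinates are assumptions invented for illustration, not a real perception model.

```python
def recognize(corners, min_corners=3):
    """Toy recognizer: report a phone only if enough distinct corners are seen."""
    return "phone" if len(set(corners)) >= min_corners else None


# Each sensor sees exactly one corner of the phone (coordinates in metres).
raw = {
    "lidar": [(0.0, 0.0)],
    "camera": [(0.07, 0.0)],
    "radar": [(0.07, 0.15)],
}


def back_fusion(raw):
    """Each sensor recognizes on its own data; only the results are merged."""
    per_sensor = [recognize(points) for points in raw.values()]
    results = [r for r in per_sensor if r is not None]
    return results[0] if results else None


def front_fusion(raw):
    """All raw points are pooled first, then recognized once."""
    pooled = [p for points in raw.values() for p in points]
    return recognize(pooled)


print(back_fusion(raw))   # None: no single sensor sees enough of the object
print(front_fusion(raw))  # phone: the pooled data contains three corners
```

The point of the toy is only that evidence discarded at the per-sensor stage cannot be recovered later, whereas pooled raw data keeps it available to the recognizer.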

This difference naturally produces different results. Facing the same complex scene, for example, the probability of an extreme case occurring with back fusion is 1%, while front fusion can reduce it to 0.01%.

Both technical routes have their own advantages and disadvantages: front fusion excels at precision, while back fusion excels at efficiency. Behind these different schools of thought, players naturally hold different views on hardware and computing power.

What problem does the full fusion algorithm solve?

Some time ago, a post on Zhihu sparked a discussion about whether hardware or software is the key to autonomous driving.

The crux of the debate is whether car companies can get the most out of hardware once it is installed on the car.

In the discussion of the topic, Jin Jiemeng, the chief scientist of the RisingAuto autonomous driving team, responded:

Intelligent driving hardware determines the ceiling of the software, and software determines the user experience. However, relative to the groundbreaking performance improvement of hardware, software algorithms have reached a time when they must be reformed.

Indeed, if autonomous driving could always rely on stacking hardware to solve problems, that would seem to be a once-and-for-all solution.

However, in reality, if algorithm capabilities cannot keep up, the hardware becomes mere decoration.

So how is RisingAuto changing its thinking in the intelligent era, so that “hardware stacking” is no longer a commercial gimmick but a way to use hardware more efficiently, with the same hardware playing different roles in different scenarios?

Jin Jiemeng believes that through the control of software, hardware can present different states in different scenarios to solve different problems, which is equivalent to the hardware being a resource that software can call at any time.

For example, in terms of hardware quantity, the RisingAuto R7 is equipped with 33 perception hardware units, including China’s first mass-produced Premium 4D imaging radar, enhanced long-range point-cloud corner radars, and a centimeter-level high-precision positioning system.

In terms of hardware quality, the Luminar LiDAR has a maximum detection distance of over 500 meters and a field of view of 120° horizontal and 26° vertical.

Two 5th generation Premium high-performance mmWave radars from German supplier Continental are capable of detecting up to 350 meters, surpassing conventional radar’s range of 210 meters, even under extreme weather and lighting conditions.

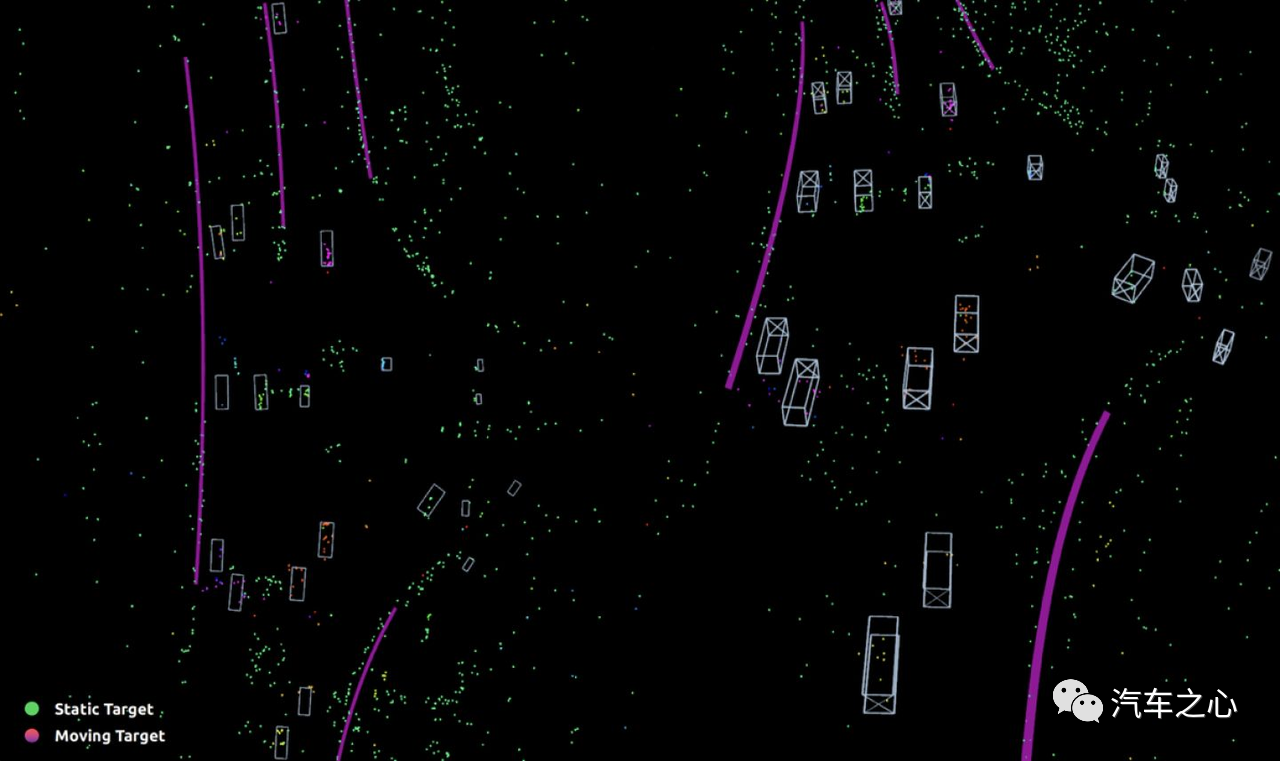

The Premium 4D imaging radar can measure four dimensions simultaneously (distance, velocity, horizontal azimuth, and vertical height), covering free space such as road edges and forks as well as stationary obstacles such as construction fences and traffic cones.

The vehicle carries twelve 8-megapixel cameras that provide 360° perception of dynamic traffic participants such as pedestrians and vehicles, as well as ground markings, traffic lights, and speed limit signs, with more accurate sensing signals.

Finally, there is the capability to run software algorithms. The RisingAuto R7 is equipped with an NVIDIA Orin chip, which provides 254 TOPS of computing power on a single chip, expandable to 500 to 1000+ TOPS. Compared with the previous-generation Xavier chip, computing performance has increased sevenfold, enabling road conditions to be converted rapidly into an actual picture of the scene and emergencies to be handled quickly.

This approach seems like hardware stacking, but what is the actual situation behind it? Jin Jiemeng mentioned the concept of “full fusion” in his answer on Zhihu.

The working process of “full fusion” is as follows. First, the perception results output by the front-fusion multi-task, multi-feature deep neural network are compared with the perception results output independently by back fusion, achieving a hybrid fusion. Second, relying on a high-bandwidth, high-compute chip platform, this triple fusion is deployed with millisecond-level latency, completing the whole chain of perception, fusion, prediction, decision-making, and execution.

In simple terms, the front fusion approach first produces one solution, the back fusion approach produces another, and the system then compares and cross-checks the two to obtain a result that wins on both perception and efficiency.
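The compare-and-check step can be sketched as follows. The article does not say how RisingAuto resolves disagreements between the two results, so the policy here (keep every object and tag which path confirmed it) is purely an assumption, as are the `full_fusion` name, the `(label, x, y)` tuple layout, and the 1 m matching gate.

```python
from math import hypot


def full_fusion(front_objs, back_objs, gate_m=1.0):
    """Cross-check two object lists; each object is a (label, x, y) tuple."""
    merged = []
    unmatched_back = list(back_objs)

    for label, x, y in front_objs:
        # Look for a back-fusion object at roughly the same position.
        match = next(
            (b for b in unmatched_back if hypot(b[1] - x, b[2] - y) <= gate_m),
            None,
        )
        if match is not None:
            unmatched_back.remove(match)
            source = "both"        # confirmed by both pipelines
        else:
            source = "front_only"  # seen only by front fusion
        merged.append({"label": label, "x": x, "y": y, "source": source})

    # Whatever is left was seen only by back fusion.
    for label, x, y in unmatched_back:
        merged.append({"label": label, "x": x, "y": y, "source": "back_only"})
    return merged


front = [("car", 30.0, 0.0), ("traffic cone", 18.0, 1.5)]
back = [("vehicle", 30.4, 0.1), ("pedestrian", 10.0, -2.0)]
result = full_fusion(front, back)
```

In this example the car is confirmed by both pipelines, the traffic cone is reported only by front fusion, and the pedestrian only by back fusion. A downstream planner could then treat unconfirmed objects more cautiously instead of dropping them.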

So how does the “full fusion algorithm,” which RisingAuto regards as a significant software transformation, perform?

Judging from the official videos released so far, the RISING PILOT system on RisingAuto cars mainly shows its strengths in extreme scenarios: enhanced recognition across the entire ramp area, ultra-sensitive perception of static obstacles, and long-range identification in rain, snow, and fog.

Take the triangular area of a ramp. At a multi-fork ramp, or where ramp entrances are numerous and complex, current systems cannot identify this area in advance, so they may miss the ramp outright or automatically exit.

Through comprehensive perception from a greater distance, RISING PILOT can identify the triangular area of the ramp in advance, leaving more time to change lanes and merge onto the ramp safely.

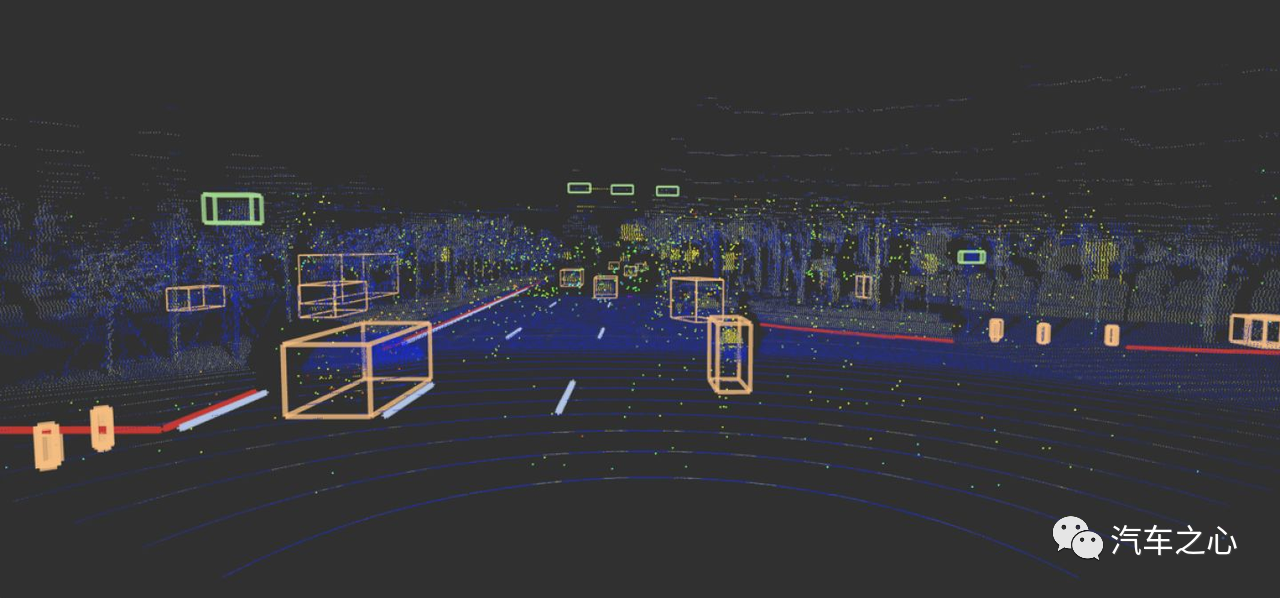

Or take a construction area, where collisions may occur because static barriers cannot be identified accurately, or because the construction area is not identified at all:

- RISING PILOT can accurately identify the three-dimensional geometric position, size, and type of static obstacles, and react early to avoid them.
- Through its prediction and decision-making models, it can achieve precise identification in advance even when there is only a single traffic cone or construction sign.

It can be foreseen that, however elaborately automakers stack on-board sensors and other autonomous driving hardware, standing out with their own intelligent driving technology will most likely still come down to software algorithms.

Hardware Needs to Be a Resource That Software Algorithms Can Dispatch at Any Time

If autonomous driving development is a long-term refinement process, then “technical iteration” is the touchstone for this process.

Cameras have been upgraded from single to multiple units and from 2 million to 8 million pixels; millimeter-wave radar has moved from the low-frequency 24 GHz band to 77 GHz and 79 GHz; and LiDAR is undergoing a transformation from mechanical rotating designs to micro-electromechanical systems (MEMS), driven by mass manufacturing and cost reduction.

When autonomous driving hardware gradually matures, algorithms naturally become an important basis for evaluating autonomous driving systems.

Although the advantages of front fusion are beyond doubt, almost 99% of automakers are still at the demo stage, and very few can really do front fusion algorithms well.

This is also why most automakers choose back fusion.

While the technology is still immature, front and back fusion have each exposed their shortcomings in extreme scenarios, and these problems can hardly be solved just by upgrading software algorithms or hardware.

Although full fusion is currently regarded as an algorithm that plays to the strengths of both and avoids their weaknesses, it still challenges the basic capabilities of both hardware and software, and demands seamless collaboration between the two and the vehicle as a whole.

In addition, participants in different segments hold different views. Zhou Guang believes that front fusion is in line with first principles: “The lack of algorithms cannot be compensated for by hardware alone. To ensure the safety of L4 Robotaxis, it is necessary to adopt multi-sensor front fusion instead of back fusion.” He Xiaopeng, for his part, emphasizes the importance of algorithms and data and advocates strong visual perception with LiDAR only as a supplement.

“Experience, cost, safety, and comprehensive range of use” are the four dimensions that He Xiaopeng uses to evaluate the advantages and disadvantages of autonomous driving technology, and he insists that a balance must be maintained across all four.

If hardware stacking only raises the level of the hardware, then software is the most powerful weapon on the battlefield. According to a report by Morgan Stanley, hardware accounts for 90% of a traditional vehicle’s value, while software accounts for only 10%. In the future, however, the share of software will rise to 40%, while that of hardware will fall to 40%.

Deloitte Consulting further pointed out that software and software update iteration will determine the differentiation of future automobiles. This is why full-stack development has become the route pursued by auto companies. Against the background of extensive hardware stacking, the software algorithm for autonomous driving has become the key to building differentiated intelligent driving capabilities.

Given sufficient hardware, the capability of the software algorithms directly determines the actual performance of autonomous driving.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.