Author: Mr.Yu

Recently, my opinions on smart voice assistants have changed drastically.

I realized that my Xiao Ai speaker at home doesn’t work as well as it used to. Sometimes it fails to wake up, and the speaker that responds to my call is not always the nearest one. When I asked her to turn on the living room light, the bedroom light above my head lit up instead.

Two months ago, I was lucky enough to experience a Mi Home server outage, and the experience was pretty sour. All the smart home devices went offline and disappeared from the Mi Home app. Some devices couldn’t even be turned on. It was a profound experience of the fragility of human technological civilization.

When I shared this outage story in the GeekCar industry community, many friends questioned why Xiaomi didn’t prepare a local redundant solution. The conclusion we reached was: there is no solution.

Then, I thought of the voice assistant in my car.

The driving and home scenarios are quite different. What are the similarities and differences in the problems they face? Can the full offline voice system proposed by Jidu Concept Car cover the controls in offline states? Is the advertised voice capability of the in-car AI a marketing-driven demand or a product strengthening point?

Moreover, since smart voice assistants are supposed to be good, why are there still so many people who don’t like them?

To understand the overall picture and essence of the problem more comprehensively and objectively, I invited friends from different chains in the auto industry to chat together, setting aside prejudice.

To present the conversation content as accurately and understandably as possible, this series will use a restoration approach rather than a formal interview, and there will also be some of my personal observations and thoughts during the exchange.

Because these conversations involve the work experiences and personal opinions of many people, anonymity is a strict requirement, so I will refer to each of them as Mr.K.

The first Mr.K is a very experienced senior intelligent cabin product manager who works for a leading automaker. We had a discussion about the feasibility of smart voice assistants.

Quoting Mr. Liang Wendao’s program “Eight Part” slogan: No guarantee of success, not necessarily useful. For practitioners, it is more important to never stop thinking.

Below is a record of our conversation, with me as Mr.Yu@GeekCar and Mr.K as the other speaker.

Mr.K:

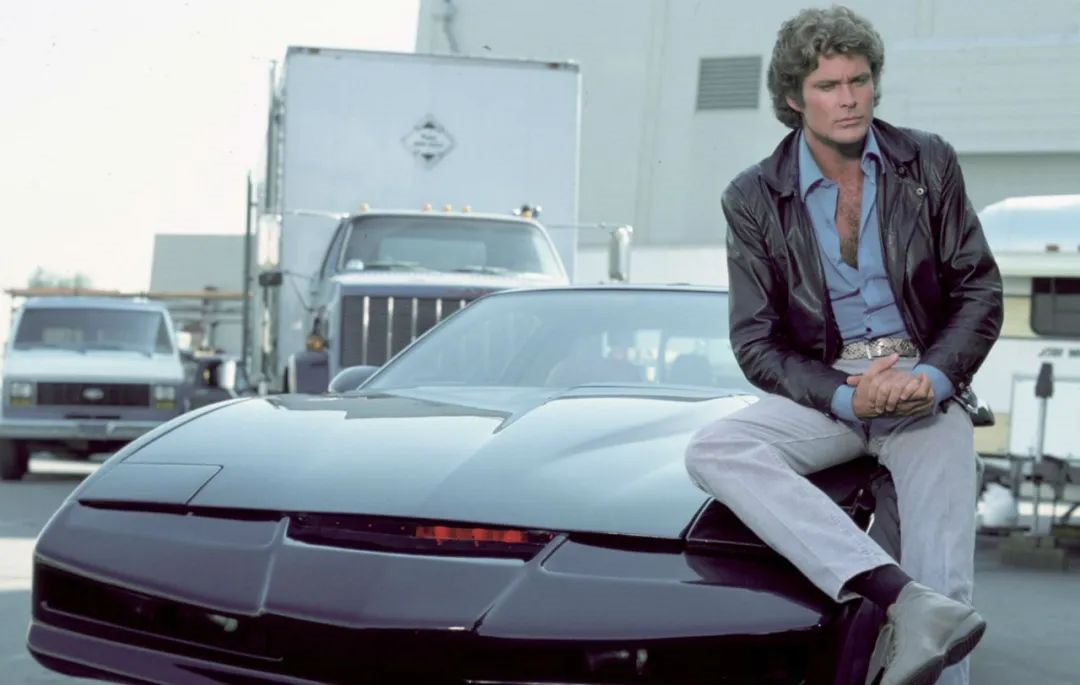

When you mentioned the topic of car voice assistants or smart voice assistants, instead of thinking of a certain car, I immediately thought of two familiar images. However, neither of them was the butler JARVIS that most people frequently mention.

The first one is from “Captain America: The Winter Soldier,” where the SHIELD director was chased and blocked by Hydra agents disguised as police on the road, and the AI voice assistant in the cockpit demonstrated many capabilities. It was very impressive, and you can check out that plot.

The second one is from an American TV series called “Knight Rider,” which is quite old. Have you seen it?

Mr. Yu@GeekCar:

I know, Mac.

Mr. K:

Yeah, I brought up these two works as a comparison, to show that the current “intelligent” voice assistants in cars are still far from what people expect, and the word “intelligent” belongs in quotation marks. When I was working on this type of product at a carmaker, we also called it an intelligent voice assistant, but in reality, it is not very intelligent.

Why do I say that? Because as media professionals or automotive professionals, we all feel that it is relatively stupid or not up to people’s expectations.

Mr. Yu@GeekCar:

Why is that? Or to put it simply, what do people expect from intelligent voice assistants?

Mr. K:

Simply put, it should really be like an assistant or a partner in the car, able to give me appropriate reminders and suggestions at the right time. It should be able to give different suggestions by recognizing and analyzing personal expressions and emotions.

In this way, you would feel that it is closer to human communication, rather than just a machine.

Therefore, if you were to categorize, as mentioned earlier, the episode in “Captain America: The Winter Soldier” demonstrated many capabilities, such as monitoring the driver’s physiological state and giving proactive recommendations, monitoring the car’s status, proactively seeking adjustable support, giving proactive recommendations in crisis situations, planning escape routes, and so on. These are closer to the stage that can be realized in reality.

In terms of the status of the car’s AI “KITT” in “Knight Rider,” it is a high-level artificial intelligence.

Mr. Yu@GeekCar:

So “Knight Rider” is science fiction, and JARVIS is also science fiction. But even so, people who haven’t been exposed to or have no concept of it can’t really distinguish it.

Let’s talk about something practical: what is the current level of the industry, or in other words, at what stage is everyone at?

Mr.K:

Well, I assume you have had experience with a lot of in-car voice systems, as you have mentioned that your previous work involved AI and voice recognition.

When we develop products, we often compare them with competing products. As we progress, we find that the ability of in-car voice systems is not greatly different from one another.

So what makes one more advanced? Primarily, it is accuracy in voice recognition, including better understanding and execution.

This process is a climb. As we all know, the voice recognition rate used to be not high, perhaps only 80%, then 85%. The industry and academia have put in more effort to improve this rate. I remember previously iFlytek mentioned that they could reach 99.9%, perhaps by using both voice and visual data together. By combining the movement of a person’s mouth and face with the voice, multi-modal recognition becomes possible and the accuracy can be increased. Nowadays, the industry can usually achieve 95% to 98% recognition accuracy, which is already very high.

However, we still face challenges. When people talk to each other, they often cannot accurately perceive each other’s intent. Therefore, we cannot place too high expectations on the machines, at least not for now.

Mr.Yu@GeekCar:

That makes sense. Speaking of which, how can we expand the coverage of voice-recognition?

Mr.K:

Actually, you will find that the so-called “artificial intelligence” here is putting the “artificial” first, and then the “intelligence”. I have always joked about it like this, but that’s the reality. We rely on people to accumulate and collect all the possible scenarios that might be encountered, including projects that I have done before, and even the ones I am currently working on.

When we work on a project, we try to cover all the possible scenarios and language environments that may be encountered. We have to guess what a person might say in different situations and do our best to make the system as complete and comprehensive as possible.

Most of the time, people will think the system is quite intelligent. But when someone tries to test or push the limits, say something unreasonable that is beyond the ability of AI, some issues may arise.

It is important to have some backup responses at that point, such as retrieving results from a search engine. This indicates that the system is still performing well and that the framework is still intact.

Mr.Yu@GeekCar:

I can relate to the artificial part. My colleagues from our partner companies used to work overtime on AI dialogues, and joked with me that “the smarter the AI, the more manpower required.”

What happens when the system doesn’t work properly?

Mr.K:

There is a very interesting example.

I sat in the car and woke up the AI voice assistant. I said “open”, and then the AI turned on the heated seats by itself.

In fact, I didn’t say specifically what to open, so this reaction is abnormal, right? You will find that actually, the current design of speech recognition systems used by various manufacturers will more or less have the same issues that I stated earlier. This can be attributed to the fact that the AI technology is not advanced enough, resulting in the lack of depth in its intelligence.

Let me say one more thing. In recent years, the frequently mentioned “visible and sayable” feature has been popular in the industry. The functional options displayed on the screen can now be controlled by voice. Here, I’d like to share my opinions, do you think it’s true?

I think the launch of “visible and sayable” feature needs further consideration.

What is the purpose of voice recognition technology? Is it to let us drive with peace of mind while improving the efficiency of interaction? Then, if you require me to look at the screen first before issuing voice commands, isn’t there a contradiction?

Mr.Yu@GeekCar:

I understand your point. If we can only occupy one human sensory channel, there’s no need to use two or more senses. The human brain has a limit to processing sensory information.

Mr.K:

Of course, I’m not saying that the relevant technology is not valuable.

You know, the interface is relatively fixed. There are still some advanced algorithms in the background that enable the AI to extract keywords from the interface, understand instructions, and execute them correctly to avoid embarrassing misrecognition. This requires advanced technological skills.

Of course, as a user myself, I hope that when driving, the AI can play the role of an assistant, reminding me at the right time or giving me suggestions, such as timely optimization of the route, or early warning of weather changes and traffic jams. This is what I really want.

In addition, one thing dissatisfies me: there are too many fixed-sentence voice commands, and I really can’t remember them. I don’t know if you have ever read the user manual when buying a car. Some in-car entertainment systems even have dedicated help documentation to guide you on how to use them.

Shouldn’t voice communication be the most natural way to interact with a car? Why do we still need so many special user manuals?

Mr.Yu@GeekCar:

Yes, what you said really touched me. Let me share my real experience with you.

There was a time when we had to return a test car, and we went to refuel. However, when we stopped at the gas station, the staff came up with the gas gun, and we found an embarrassing problem – we didn’t know how to open the fuel tank cap.

I tried pressing the fuel tank cap, but there was no response;

My colleague shouted at the car screen, but there was no response;

Neither the main interface nor the settings seemed to have the solution.

We were stuck there the whole time. Quite a few people were coming to refuel, and to be frank, we were quite embarrassed.

Later, I had no choice but to use voice to open the help document and search for keywords to find the way to open it.

I won’t go into the details of how it works. I just thought that this kind of design, which relies heavily on voice and doesn’t cover everything, is really counter-intuitive.

Mr.K:

Yes, this kind of seemingly intelligent but actually stupid approach is not close enough to our daily communication.

Mr.Yu@GeekCar:

Because human communication is based on the accumulation of common sense, decision-making based on IQ and EQ, which machines often do not have.

Mr.K:

So the function we expect is also the expectation of the manufacturer. Whether it is called intelligent voice or voice assistant, it is only appropriate if it has the features you mentioned.

Nowadays, these so-called names are actually similar to the manufacturers’ promotion of intelligent driving. What is intelligent driving? In fact, this matter itself is very interesting.

The current correct term should be “intelligent assisted driving”, with emphasis on the word “assisted”. Manufacturers are willing to call it intelligent, that’s fine, but in fact, it is assisted driving, and do not call it automatic driving.

So the promotion of voice is also very similar. You should not talk about the concept of artificial intelligence voice itself. In terms of product presentation, it is just an ordinary voice assistant.

Mr.Yu@GeekCar:

Yes, in fact, manufacturers have relatively converged in terms of promotion.

In my observation, from 2016 to 2018, there was an AI concept bubble in the whole society. Consumer electronics or technology companies were conveying an expectation to the society: I can completely rely on artificial intelligence.

A very common point is that everyone can have a smart speaker at home, which can not only control smart home appliances, but also search and play songs. Manufacturers even tell you that you can treat “it” as a family member, and you can have emotional investment and interaction.

But the fact is that this point is completely unrealistic. I also think that manufacturers cannot take some very atypical cases as a universal manifestation of voice communication ability.

Back in the car, it’s the same situation. You picked up a new car and are eager to try out the intelligent voice function advertised by the manufacturer. However, in reality, this is a process of mutual adaptation. For one to two months, you gradually become familiar with the marginal ability of AI as it tries to learn your usage habits. During this process, the expectation level drops, especially when you find that the actual ability of AI differs from your previous expectations. The frequency of your interaction with AI through voice may increase, but the overall interaction will still decrease.

After this stage passes, it can truly be called the beginning of the “user experience.”

I’m not sure if it’s correct, but I have such an observation, which is also supported by some industry research.

Mr. K:

You’re right. There is always a latent expectation level when users interact with voice, at any time. If it responds accurately, it’s still okay. If it doesn’t respond accurately or execute, it enters a typical logic: you think it’s not easy to use, or even very stupid.

Mr. Yu@GeekCar:

Yes. If the accuracy can reach 100%, you think it’s very okay. If it can reach 80%, you will think “well, it’s okay.” If it’s below 80%, it has nothing to do with “convenient.” The industry has been working hard in this regard for so many years and has only developed into what we see today.

I can give you an example. Once, I asked the smart speaker in my bedroom to turn on the lights while I was going to make milk powder. Normally, it would turn on the living room’s main light, but sometimes it would turn on the bedroom’s light, and it wasn’t even the preset low brightness. At that moment, our human baby was sleeping soundly in the crib and could be awakened at any time.

Then I panicked, and I quickly asked it to turn off the light, but this process was very slow. Maybe I spoke too fast and the intelligent speaker didn’t recognize it. Thinking back, I should have just gone and turned off the light manually.

There is a logical bug here. When we issue commands or interact with intelligent voice, the ideal state is a relatively stable, smooth, and balanced normal state, and we hope to get normal feedback.

Once you leave that ideal environment and enter a more urgent state that affects your speaking speed and tone, and the AI fails to execute the command, the impression and experience will be poor, and can even drive people crazy.

Mr. K:

You’re right. The experience will indeed be affected.

Actually, the most common voice interaction scenes in the car are navigation, playback, and air conditioning, which are the most commonly used and supported by actual data. Although the scenarios seem simple, there may still be some bugs.

Like the most commonly used navigation function, I don’t know if you have encountered this situation. I ask the navigation to plan a route, and there is only one route. Then the voice will ask me, “Which one?”

Big brother, you only planned one route for me and still ask me which one I want to choose? Can’t the interaction be less complicated? Don’t just go through the motions?
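The complaint above comes down to a missing branch in the dialogue logic. A minimal sketch of that branch follows; the `Route` type, the `route_prompt` function, and the spoken phrases are all hypothetical names made up for illustration, not any vendor's actual navigation API.

```python
from dataclasses import dataclass


@dataclass
class Route:
    name: str
    minutes: int


def route_prompt(routes: list[Route]) -> str:
    """Return what the assistant says after route planning (illustrative only)."""
    if not routes:
        return "Sorry, I could not find a route."
    if len(routes) == 1:
        # Only one candidate: there is nothing to choose, so start guidance
        # instead of asking "Which one?".
        only = routes[0]
        return f"Starting navigation via {only.name}, about {only.minutes} minutes."
    # Several candidates: now the question is meaningful.
    options = ", ".join(
        f"{i}. {r.name} ({r.minutes} min)" for i, r in enumerate(routes, 1)
    )
    return f"I found {len(routes)} routes: {options}. Which one?"
```

With a single planned route, the prompt skips the question entirely; the question only appears when there are at least two routes to choose from.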

Mr.Yu@GeekCar:

At least it shows that their framework is complete.

Mr.K:

Yes, it’s a reflection of the framework design. Then, extending to the driving process, if the system finds a better route based on real-time traffic conditions, shouldn’t it inform the user in a timely manner that there is a better route that can save 5 minutes, 10 minutes, do you want to take it?

At this point, the user will naturally make a judgment, right?

Including what I am saying now, if you don’t say it, it will only stay on the navigation interface. During the driving process, the voice did not actively remind me whether there is a better route. Actually, this is not difficult to achieve.

It is not an exaggeration to say that if this kind of proactive and benign reminder can be achieved, the current voice experience of the industry can be improved by at least 50% or more. This is the state that is closer to the concept of “intelligence”, and the sensory experience will be better as well.
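The proactive reminder Mr.K describes can be sketched as a simple threshold check. This is only an illustration under stated assumptions: the function name `reroute_suggestion`, the 5-minute default threshold, and the wording of the prompt are invented here, and a real system would take its arrival estimates from a live traffic service.

```python
from typing import Optional


def reroute_suggestion(
    current_eta_min: float,
    best_alternative_eta_min: float,
    min_saving_min: float = 5.0,  # assumed threshold, not from the interview
) -> Optional[str]:
    """Return a spoken suggestion if the alternative saves enough time, else None."""
    saving = current_eta_min - best_alternative_eta_min
    if saving < min_saving_min:
        # Small savings are not worth interrupting the driver.
        return None
    return (
        f"There is a faster route that saves about {round(saving)} minutes. "
        "Do you want to take it?"
    )
```

The threshold keeps the assistant quiet for trivial savings, and the final decision stays with the driver, which matches the point that the user will naturally make a judgment.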

Mr.Yu@GeekCar:

Understood. Just like in 2016 when Honor launched a phone that integrated many AI capabilities and scenarios, there was one impressive feature: after you book a movie ticket on a ticketing platform, when you walk near the cinema just before the movie starts, the phone pops up your ticket code and QR code, so you can walk up to the ticket machine and take out your phone to get your ticket. Of course, I only experienced this ability later, on a Huawei smartphone.

Is this feature extraordinary? To be honest, it’s just okay.

But by accumulating these seemingly insignificant conveniences and satisfaction one by one, it really is quantitative accumulation to a qualitative change, which will make people have a sense of recognition of a product, a brand. Just like what can bring happiness to daily life would not be expensive large items, but many convenient small appliances that can satisfy some of your less core needs.

Maybe it’s a bit far-fetched, this is my understanding of the experience.

Mr.K:

It’s okay, your ideas are quite detailed.

Returning to our problem, we can introduce a method similar to Maslow’s hierarchy of needs to divide it according to the response effect of the instructions.

We have also discussed before that some voices or other experiences, in terms of the framework, have not even been completed. Mainly reflected in, after you say the command, the system has no response, nor does it give you feedback, and then this round of interaction is gone.

A better one may be that the system understands, but cannot execute, so it intervenes through search engines. This is also considered to be understood, but unable to provide feedback in the way you want. At least it is better than before and can be considered as a last resort. It makes people feel that their psychological expectations have not been met, but at least the system is trying to help solve this problem.

Then something even better happens when the AI tells you directly that it may not be able to help you implement it right now, but it will try to improve. This is the best and most common thing I have seen in my daily work. Have you seen something better than what I just said?
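The tiers described above (silence, search fallback, honest decline) can be sketched as a dispatcher in which every path returns some feedback. Everything here is hypothetical: the `respond` function, the `handlers` mapping, and the `web_search` callback are made-up names, and the exact-match lookup stands in for real intent recognition.

```python
from typing import Callable, Optional


def respond(
    command: str,
    handlers: dict[str, Callable[[], str]],
    web_search: Optional[Callable[[str], str]] = None,
) -> str:
    """Always answer: execute, fall back to search, or decline honestly."""
    handler = handlers.get(command.strip().lower())
    if handler is not None:
        # Understood and executable.
        return handler()
    if web_search is not None:
        # Cannot execute: say so, then offer search results as a last resort.
        return "I can't do that directly, but here is what I found: " + web_search(command)
    # No fallback available: an honest answer still beats silence.
    return "Sorry, I can't help with that yet. I'll try to improve."
```

The one outcome the sketch rules out is the lowest tier, where the system says nothing and the round of interaction simply disappears.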

Mr. Yu @ GeekCar:

There may not be anything better, but I can share something more bizarre.

Some AIs cannot respond to requests, but they do not directly say “I cannot do it” but instead tell you “I’m not going to do it” and then begin to maliciously act cute.

To be honest, this “cyber-coquettish” attitude is very annoying. Originally, it was a simple back and forth, you tell me you can’t do it, at least this is sincere. As a tool-type product, if you are neither smart nor sincere, then people will really not like you.

So, do you have any cases that you can recognize when it comes to implementing voice commands?

Mr. K:

I previously experienced a function in Beijing Auto’s Arcfox. When you open the air conditioner again, it defaults to the temperature and air flow from the last time, which is very common. However, if the air conditioner is already on and you say “open the air conditioner” again, the AI will tell you that the air conditioner is already on, and then ask if you feel cold or hot.

It will make a judgment based on the reasonableness of your command, and then judge your intention or consider your feelings. This is something I think is good because the granularity of the experience is improved to a finer degree.
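The behavior described here amounts to checking the current state before executing the command. A minimal sketch, assuming a hypothetical `handle_ac_on` function and made-up phrasing; it is not the actual Arcfox implementation.

```python
def handle_ac_on(ac_is_on: bool, last_temp_c: float, last_fan_level: int) -> str:
    """Reply to "open the air conditioner" based on the current AC state."""
    if ac_is_on:
        # The command is redundant, so infer the likely intent: discomfort.
        return "The air conditioner is already on. Are you feeling too cold or too hot?"
    # Normal case: restore the settings from the last session.
    return f"Air conditioner on at {last_temp_c:g} degrees, fan level {last_fan_level}."
```

The redundant command is treated as a signal about how the occupant feels rather than as an error, which is the finer granularity Mr.K is praising.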

Mr. Yu @ GeekCar:

I really understand.

It’s like when you’re at a spa: the staff serving you will ask, “Is the pressure right? Is it too heavy or too light?” In most cases, customers will answer “OK” or “It’s fine.”

In fact, the meaning of this sentence itself is not significant, because if it is not suitable, you just tell the other party to adjust it. But being asked will make you feel okay, the service is in place, and your experience is valued.

So do you think relying entirely on voice is feasible? Now the proportion of voice interaction in cars is increasing, and we can also see a trend of physical button elimination, and distributing control and interaction to voice, touch and other methods.

Mr. K:

This is worth discussing. Let me hold off on the conclusion and list some points first.

First, I want to adjust the volume or turn on the wiper. It can be solved in a few tenths of a second with the lever or buttons on the steering wheel. Why do we still have to go through such a long process of “wake up-respond-send instructions-execute-inform”?

This is my first point, at least for now, physical buttons are still irreplaceable.

Secondly, physical buttons and virtual buttons are essentially buttons. In general, it is only a difference between one that can be touched and one that cannot be touched. As you discussed in a previous article, physical buttons have strong feedback and are easy to use.

Mr. Yu@GeekCar:

It can also be operated blindly.

Mr. K:

Yes, it can also be operated blindly to achieve non-visualized operation.

In recent years, the industry has also been exploring, such as adding a vibrator in the screen to increase vibration feedback for touch and simulate the feeling of physical operation. For example, Mercedes-Benz, and the unreleased NIUTRON SELF-DRIVING NV. Of course, due to the cost increase or the complexity of technology, it has not been widely promoted yet.

Therefore, I believe that the most worthwhile expectation is still the combination of voice and other methods to form multi-dimensional interaction methods and achieve a balance between safety, efficiency, and convenience.

Mr. Yu@GeekCar:

Then why has voice gradually gained momentum in recent years? And even there are designs that completely eliminate physical buttons, and this trend is still on the rise.

Mr. K:

Firstly, I think it is to cater to the tastes of the public, which is undeniable.

Why? Because new energy vehicles and intelligent cabins themselves represent the concretization of a new technology, or give people the feeling that they are a general term for many new technologies. So there must be some technologies in the cabin that were not available before to match the positioning of the car itself.

Secondly, this is a trend. One company does, two companies do, and many companies do. This is how the industry develops, and manufacturers influence each other.

Thirdly, as mentioned earlier, physical buttons and voice operations each have their own advantages and disadvantages. People can judge for themselves during the driving process, which interaction method is more in line with the premise of safe driving and has higher execution efficiency.

Mr. Yu@GeekCar:

Why is that?

Mr. K:

Because most car manufacturers are non-self-developed. The so-called non-self-developed, extended to the Tier 1 – Yes, Tier 1 is mentioned again. Macroscopically, many manufacturers do not have the ability to do deeper development in the field of voice technology. What if you can’t do it? Then you can only follow the trend.

So you asked, do car manufacturers really want to do a good job in voice? I think they have the heart, but reality is often powerless. So more often, everyone stays at a similar level. It’s not about good or bad, but represents an industry reality.

You can analyze the planning and marketing of current OEM manufacturers, and everyone’s ideas are similar at a high level. Because planning determines what this car should have; marketing determines how to sell this car to users and how to convey expectations to users.

So let’s talk about the decision-making mechanism. In fact, R&D in the middle is very difficult. If you have some say, R&D can still have some space; if you don’t have any say and are under KPI pressure, whether you can make good things becomes uncertain all at once. Therefore, self-development becomes very important.

Mr.Yu@GeekCar:

So self-development has become very important.

Mr.K:

Yes, we can refer to XPeng Motors.

If someone says that XPeng’s in-car voice is at the top of the industry, I believe that anyone with objective perception would not object.

Mr.Yu@GeekCar:

What do you think about showing off through voice skills? Is there anything worth mentioning?

Mr.K:

Good question, I can give a simple example.

First, as mentioned earlier, I think visible speech is also a kind of showing off. Maybe I haven’t really realized the true value of this ability, so I’ll put a question mark here.

Second, I repeatedly talk to the in-car voice assistant, turn on the air conditioner, open the window, open the skylight, turn on the seat heating… This is showing off, and normal users don’t interact like this. Of course, we still need to distinguish between true continuous dialogue and showing off.

Therefore, if some functions have little practical value and are highlighted as the focus of promotion, I think it is inappropriate.

Mr. Yu@GeekCar:

I roughly know what you are talking about.

So, I would think that this is a very clever psychological scam. Because the demos shown to everyone must be the best performing. Or it can be said that it is the best performing through design.

When users see these exaggerated advertisements on media or social networks, they will feel impressed and imagine themselves using the products. However, can they actually achieve similar effects after purchasing them? This raises a question mark.

If a brand conveys unrealistic expectations, is it inappropriate?

Mr.K:

You can view marketing and promotion as both inevitably containing exaggeration, which fundamentally conforms to people’s basic understanding of marketing, right?

If the energy conveyed by the promotion is less than the actual effect, the marketing is too conservative and needs to be penalized.

It is challenging to convey reasonable expectations to the public without appearing ostentatious. Of course, this is undoubtedly the most appropriate method.

However, the discussion of “stunning techniques” is not within the scope of this topic. The primary purpose of these techniques is to create a topic and promote publicity. The short video of a car dancing on a social media platform is an example of this.

Mr.Yu@GeekCar:

When it comes to excessive promotion, I think of the so-called “emotional interaction.” Many AI giants were hotly discussing this concept a few years ago.

Mr.K:

The precondition for emotional emphasis is that you must “be like a person.” If AI can interact with me in a human-like manner, I may develop dependence. However, this is more related to the trust in a tool-oriented product rather than emotion.

So when you bring up this topic, the first thing that comes to my mind is whether the voice tone, speaking style, and other factors are human-like, rather than whether I really need to open up to the algorithm.

Mr.Yu@GeekCar:

Yes. I think of a term often used in the English-speaking world: “trustworthy.”

As mentioned earlier, dialogue between humans is the result of a comprehensive influence of intelligence quotient, emotional quotient, expression ability, decision-making ability, emotions, common sense, and game theory.

In other words, at this stage, most of the forms of conversation supported by AI voice are turn-taking, such as you say one thing, and I will reply with another. Based on this alone, the so-called “emotional interaction” is basically doomed to failure.

Therefore, I believe that, for now, conversation with AI voice assistants can only be regarded as an inlet and outlet for interactions with explicit purposes.

Mr.K:

Yes, as you said, the voice is merely an inlet and outlet.

However, behind it is a brain-like mechanism that supports it. How to design and strategize it is crucial and complex. Perhaps its complexity is no less than that of the algorithms used for assisted driving.

As products continue to improve, there will definitely be more advanced technologies integrated in the future. However, that’s something for the future.

Mr.Yu@GeekCar:

You just mentioned the word “support”, which I think is very appropriate and a satisfying conclusion to today’s conversation.

Whether it’s voice recognition or other interactive intelligent technologies, they fundamentally support experience and safety, rather than being the main focus.

Mr.K:

That’s right, it may be possible in the future, but it’s not enough right now.

As you media professionals often say, let’s wait and see.

In conclusion

Thanks to everyone who read to the end; this piece is not short.

Voice recognition is a simple yet complex issue.

Simple, because the vast majority of users only care about whether it is easy to use and whether they are willing to continue using it, without delving into more details.

Complex, because even tiny improvements, such as speed improvements of a few hundred milliseconds or recognition rates for specific voices, are not to be ignored by professionals in the industry. The complexity is reflected in the constant trial and error and exploration of the boundaries of user experience throughout the industry.

I didn’t get a definitive answer on these issues from Mr.K; rather, his conclusions represent the thoughts and experience of one industry professional.

I look forward to hearing different perspectives from the next person who discusses these topics, perhaps another Mr.K.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.