Title: The Association Between Laser Pens and Autonomous Driving – A Retrospective Analysis

Author: French Fries Fish

Twenty years ago, when I first got my hands on a “laser pen” toy, I got the worst beating of my childhood for ignorantly shining it at pedestrians.

Back then, I could never have imagined that 20 years later it would be so closely tied to autonomous driving.

Just as every naughty child who owned a laser pen must have shone it at passers-by, every new Chinese automaker dreaming of L4 autonomous driving has chosen LiDAR.

The former happens because naughty children are “naughty.” So what explains the latter?

I think NIO is well placed to answer this question, since the NIO ET7, which has begun deliveries, currently sets an unmatched “ceiling” for autonomous driving hardware.

Bai Jian, NIO’s Vice President of Intelligent Hardware, spent an hour explaining NIO’s thinking on LiDAR.

Singing a Different Tune?

Currently, there are two schools of thought in the field of intelligent driving: one is pure vision and the other is multi-sensor fusion.

With NIO’s Aquila sensing system, which equips the ET7 with 33 high-performance sensing units, NIO clearly belongs to the latter camp.

Musk has said, “Humans rely on their eyes and intelligence to drive cars, and so do autonomous cars,” and among the solutions delivered so far, Tesla’s pure-vision FSD may well be the best.

However, Bai Jian thinks this statement rests on a misunderstanding.

First of all, “human eyes are much more precise than current cameras.”

Take a plastic bag floating above an elevated road. For a human, recognizing it as a harmless plastic bag is an almost instinctive judgment.

For a pure-vision camera system, though, it is a bit “difficult.” Especially when the bag is far away, it may show up in the camera’s field of view as nothing more than a 2D patch of color a dozen pixels across. Whether it is a plastic bag, a stone, or something else, we will leave the pure-vision system to “burn its brain” over that for a while and move on.

Secondly, “the human eye, the camera, and LiDAR each see the world differently.”

I once interviewed an engineer at a Chinese start-up focused on pure-vision intelligent driving solutions. Their cameras struggled in high-contrast environments, such as driving into a tunnel on a sunny day.

NIO used exactly this scenario, driving from bright sunshine into a tunnel, to help us understand the issue. The tunnel is dark compared with the bright outdoors, and in such extreme contrast the human eye briefly loses its sight, which is why experienced drivers remind new drivers to slow down when entering a tunnel.

And since “cameras are far less precise than human eyes,” in this kind of rapidly changing, high-dynamic-range scene the camera image may simply be washed out into a white mess.

LiDAR, by contrast, perceives the world through the laser point cloud reflected back by objects ahead, so this problem does not arise. This different way of perceiving also gives LiDAR the ability to capture depth information. With it, LiDAR can clearly determine that the plastic bag floating above the elevated road is a thin, lightweight object that poses no threat to driving safety.
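For a pulsed LiDAR, depth comes from timing: the sensor measures how long a pulse takes to come back, and the range is half of the round-trip distance travelled at the speed of light. The Python sketch below illustrates only that time-of-flight relation; the function names and the 200m example are illustrative assumptions, not NIO’s implementation.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance to the reflecting object, in meters.

    The pulse travels out and back, so the one-way range is half of the
    total path covered during the measured round-trip time.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_for_range(range_m: float) -> float:
    """Inverse relation: round-trip time for an object at range_m meters."""
    return 2.0 * range_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # An object 200 m ahead sends the pulse back after roughly 1.33 microseconds.
    t = round_trip_for_range(200.0)
    print(f"{t * 1e6:.3f} us -> {range_from_round_trip(t):.1f} m")
```

Because every return carries its own range, each point in the cloud is a 3D measurement rather than just a colored pixel, which is what lets LiDAR judge an object’s shape and distance directly.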

Therefore, based on the above, NIO believes that “multi-sensor fusion technology is the key technology route for future advanced driving assistance and autonomous driving”.

Three Standards

After discussing the route, let’s talk about the technology.

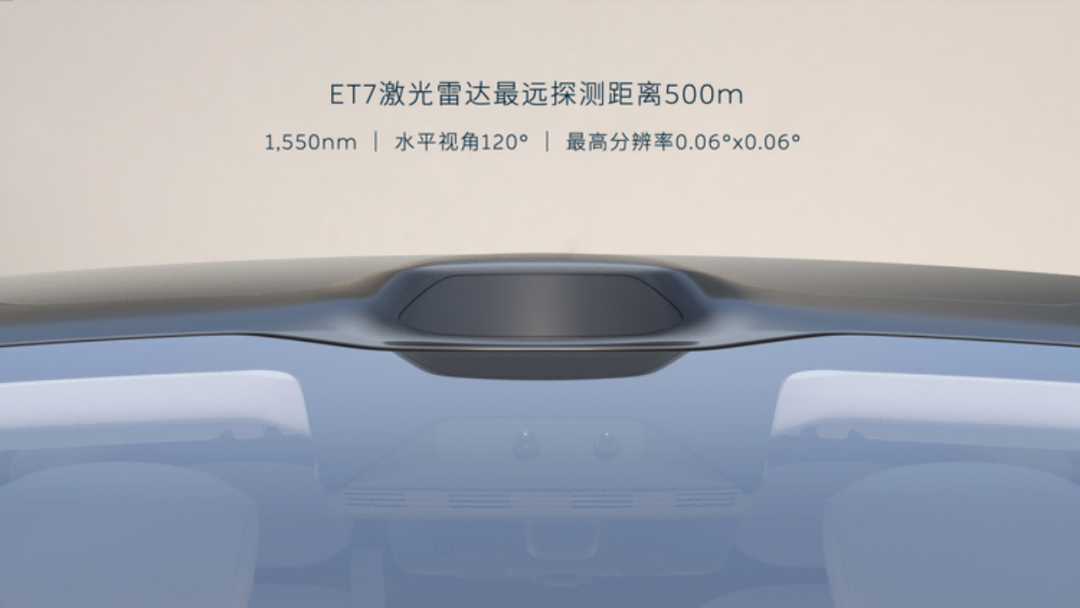

The NIO ET7 uses a 1550nm LiDAR, the world’s first mass-produced 1550nm LiDAR. Its horizontal field of view is 120°, its maximum detection range is 500m, and its highest resolution is 0.06° × 0.06°.

NIO sums up this LiDAR with the slogan “see far, see clearly, and see steadily.”

Firstly, “seeing far” is mainly due to the 1550nm wavelength.

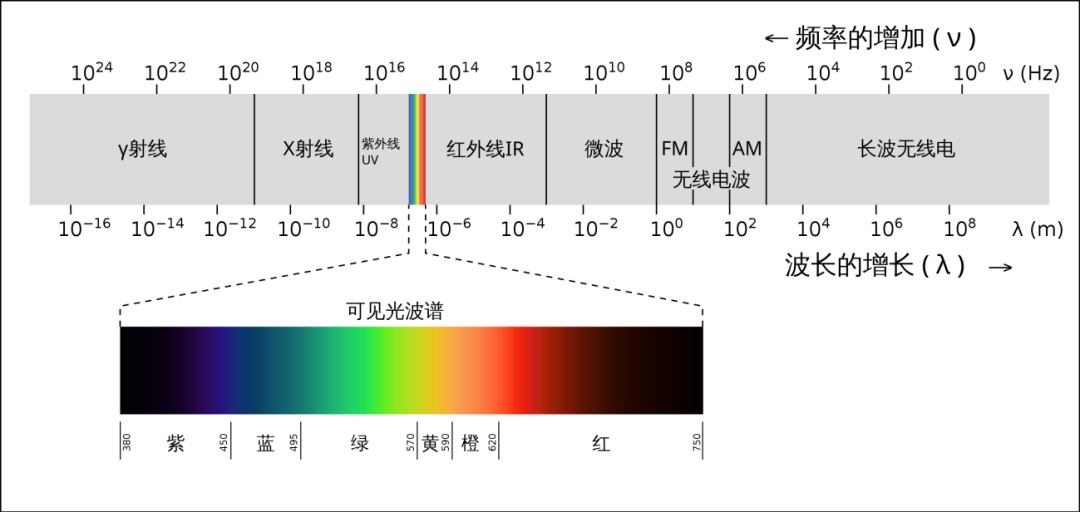

NIO says that “the 1550nm wavelength laser can support high-power emission.” Time for a bit of hardcore physics. In middle school physics class, we learned that “light is an electromagnetic wave.” Since it is a wave, different types of light can be distinguished by wavelength. The colors we can see, “red, orange, yellow, green, blue, and purple,” have wavelengths ranging from roughly 380nm to 750nm, and we can see them because our eyes absorb light at these wavelengths.

Today’s LiDARs mainly use one of two wavelengths, 905nm or 1550nm, and 1550nm lies much farther from the visible range. This means the human eye is less sensitive to 1550nm laser radiation, and 1550nm lasers are also relatively less harmful to the eye.

Therefore, NIO can emit laser beams with higher power and energy while staying eye-safe, and higher power means the LiDAR can “see farther.”

Even in everyday scenarios with targets of only 10% reflectivity, the ET7’s LiDAR can still detect up to 250m, which NIO presents as an advantage over other brands’ LiDARs.
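Why does more power mean more range, and why is range quoted together with reflectivity? Here is a rough sketch under a deliberately simplified link-budget assumption that is not from the article. It assumes an extended target that fills the beam, so received power grows with emitted power and target reflectivity and falls off as 1/R². With a fixed detection threshold, maximum range then scales with the square root of power times reflectivity. The model ignores atmospheric absorption, optics, and noise, and the function name is hypothetical.

```python
import math


def scaled_max_range(ref_range_m: float,
                     power_ratio: float = 1.0,
                     reflectivity_ratio: float = 1.0) -> float:
    """Scale a reference maximum range under a simplified LiDAR link budget.

    Assumption: received power ~ emitted power * reflectivity / R^2
    (extended target filling the beam). Holding the detection threshold
    fixed, the maximum range scales with sqrt(power * reflectivity).
    """
    if ref_range_m < 0:
        raise ValueError("reference range must be non-negative")
    if power_ratio <= 0 or reflectivity_ratio <= 0:
        raise ValueError("ratios must be positive")
    return ref_range_m * math.sqrt(power_ratio * reflectivity_ratio)


if __name__ == "__main__":
    # Quadrupling emitted power doubles range under this model.
    print(scaled_max_range(100.0, power_ratio=4.0))
    # A target reflecting a quarter as much light halves the range.
    print(scaled_max_range(100.0, reflectivity_ratio=0.25))
```

The square-root relationship is why a range figure means little without a stated reflectivity: a dark, 10%-reflective target is much harder to see at distance than a bright one.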

Next is “seeing clearly.” The ET7’s LiDAR has a feature called “Focus.” To understand it, note that the 3D point cloud output by the LiDAR can also be treated as an image in which every point carries depth information. Since it is an image, the concept of resolution applies to the point cloud as well: the higher the resolution, the clearer the image.
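To make the “point cloud as an image” idea concrete, here is a Python sketch of a range image. It bins returns into a 2D grid by azimuth and elevation and stores depth in each cell. The data layout, the angle convention (angles measured from the center of the field of view), and the function name are assumptions for illustration, not how NIO’s LiDAR formats its output.

```python
from typing import List, Optional, Tuple

# One return: (range in meters, azimuth in degrees, elevation in degrees).
# Assumed convention: angles are measured from the center of the field of view.
Return = Tuple[float, float, float]


def build_range_image(
    returns: List[Return],
    h_fov_deg: float,
    v_fov_deg: float,
    h_res_deg: float,
    v_res_deg: float,
) -> List[List[Optional[float]]]:
    """Arrange LiDAR returns on a 2D grid, like pixels in an image.

    Rows are elevation bins (row 0 = highest elevation); columns are
    azimuth bins (column 0 = most negative azimuth). Each cell stores the
    depth of the nearest return that falls in it, or None if nothing
    landed there. Finer angular resolution gives more rows and columns.
    """
    cols = int(round(h_fov_deg / h_res_deg))
    rows = int(round(v_fov_deg / v_res_deg))
    image: List[List[Optional[float]]] = [[None] * cols for _ in range(rows)]
    for range_m, az_deg, el_deg in returns:
        col = int((az_deg + h_fov_deg / 2) // h_res_deg)
        row = int((v_fov_deg / 2 - el_deg) // v_res_deg)
        if 0 <= row < rows and 0 <= col < cols:
            current = image[row][col]
            # Keep the closest return when several share a cell.
            if current is None or range_m < current:
                image[row][col] = range_m
    return image


if __name__ == "__main__":
    # A toy 4 deg x 2 deg window at 1 deg resolution: 2 rows x 4 columns.
    toy = [(50.0, -1.5, 0.5), (30.0, 1.2, -0.7), (40.0, -1.4, 0.6)]
    for grid_row in build_range_image(toy, 4.0, 2.0, 1.0, 1.0):
        print(grid_row)
```

Halving the angular step in both directions quadruples the number of cells, which is the sense in which a finer-resolution LiDAR produces a “clearer” image.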

To illustrate, NIO compared its LiDAR with a fairly mainstream 125-line LiDAR, whose angular resolution is 0.1° (H) × 0.2° (V), where H is the horizontal direction and V the vertical direction. In other words, the 125-line LiDAR produces a point every 0.1° horizontally and every 0.2° vertically.

The ET7 LiDAR’s Focus feature works much like the focusing of the human eye: within the region of interest (ROI), its angular resolution reaches 0.06° (H) × 0.06° (V).
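One way to compare the two resolutions is an idealized sampling density, where each H × V angular cell gets one point. The Python sketch below computes that density from the figures quoted above. It assumes a uniform grid and no missed returns, and the function name is hypothetical. It also applies the ET7 figure only inside the Focus ROI, since that is where the text says 0.06° × 0.06° holds.

```python
def points_per_square_degree(h_res_deg: float, v_res_deg: float) -> float:
    """Idealized sampling density: one point per h_res x v_res angular cell."""
    if h_res_deg <= 0 or v_res_deg <= 0:
        raise ValueError("resolutions must be positive")
    return 1.0 / (h_res_deg * v_res_deg)


if __name__ == "__main__":
    mainstream = points_per_square_degree(0.1, 0.2)   # 125-line LiDAR, per the text
    focus_roi = points_per_square_degree(0.06, 0.06)  # ET7 Focus ROI, per the text
    print(f"125-line LiDAR: {mainstream:.1f} points per square degree")
    print(f"ET7 Focus ROI:  {focus_roi:.1f} points per square degree")
    print(f"density ratio:  {focus_roi / mainstream:.2f}x")
```

This gives about 50 versus about 278 points per square degree, a ratio of roughly 5.6 inside the ROI under these idealized assumptions.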

Raw numbers may not be intuitive, so NIO set up a test scene: both LiDARs scanned a 1.8m-tall pedestrian standing 200m away. The comparison LiDAR produced about 12 points on the pedestrian, while the ET7’s LiDAR produced about 54.
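A quick geometric check shows why distant objects get so few points. The Python sketch below computes the angle a 1.8m pedestrian subtends at 200m and how many vertical scan lines at each resolution would cross it. It is an idealized estimate that assumes a uniform grid and a target seen face-on, and the helper names are hypothetical.

```python
import math


def angular_size_deg(size_m: float, distance_m: float) -> float:
    """Angle, in degrees, subtended by an object of size_m seen face-on at distance_m."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return math.degrees(2.0 * math.atan(size_m / (2.0 * distance_m)))


def samples_across(size_m: float, distance_m: float, resolution_deg: float) -> float:
    """Idealized number of scan lines (or columns) that cross the object."""
    return angular_size_deg(size_m, distance_m) / resolution_deg


if __name__ == "__main__":
    height_m, distance_m = 1.8, 200.0  # pedestrian scenario from the text
    print(f"angular height: {angular_size_deg(height_m, distance_m):.3f} deg")
    print(f"125-line rows (0.2 deg V):  {samples_across(height_m, distance_m, 0.2):.1f}")
    print(f"ET7 ROI rows (0.06 deg V):  {samples_across(height_m, distance_m, 0.06):.1f}")
```

The pedestrian spans only about 0.52°, so roughly 2.6 vertical lines cross the body at 0.2° versus about 8.6 at 0.06°. NIO’s reported totals also depend on the pedestrian’s width and on how the scan grid lines up with the body, so this sketch is not expected to reproduce the 12 and 54 figures exactly.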

More points mean higher image resolution, and higher resolution means a clearer image, making it easier for higher-level systems to recognize objects.

Finally, there is “seeing steadily,” which NIO ties to a concept called Probability of Detection (POD).

Dead pixels on a screen make a good analogy. Under China’s warranty rules, an LCD with no more than three dead pixels counts as a qualified product, because dead pixels are unavoidable in the engineering of ordinary consumer electronics. LiDAR scan results show a similar phenomenon.

For example, if a LiDAR is designed to emit 100,000 points, factors such as laser energy, detection sensitivity, and system stability mean that in practice fewer than 100,000 points are usually received accurately. The proportion of points that are accurately received is the probability of detection.

NIO claims that its LiDAR’s POD can reach 90%, higher than that of many other LiDARs. A higher POD means more reliable detection results.
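The POD definition above is a simple ratio, and it also bears on how many points actually land on a small, distant object. The Python sketch below computes POD and, under the simplifying assumption that each point is detected independently with that probability, the chance that at least k points return from an object. The independence assumption and the function names are illustrative and do not come from NIO.

```python
import math


def probability_of_detection(points_received: int, points_emitted: int) -> float:
    """POD as the fraction of emitted points that produce a valid return."""
    if points_emitted <= 0:
        raise ValueError("points_emitted must be positive")
    return points_received / points_emitted


def prob_at_least_k_returns(n_points: int, pod: float, k: int) -> float:
    """Chance that at least k of n points on an object return.

    Assumes each point is detected independently with probability pod
    (a binomial model), which is a simplification of real sensor behavior.
    """
    if not 0.0 <= pod <= 1.0:
        raise ValueError("pod must be between 0 and 1")
    return sum(
        math.comb(n_points, i) * pod**i * (1.0 - pod) ** (n_points - i)
        for i in range(max(k, 0), n_points + 1)
    )


if __name__ == "__main__":
    pod = probability_of_detection(90_000, 100_000)  # the 90% figure from the text
    print(f"POD = {pod:.0%}")
    # Hypothetical: how likely is it that at least 10 of 12 points on a target return?
    print(f"P(>=10 of 12) = {prob_at_least_k_returns(12, pod, 10):.3f}")
```

Under this model, a higher POD raises the odds that enough points come back from a distant object for the perception system to recognize it.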

Beyond Normal Standards

Beyond the performance of the LiDAR itself, NIO also highlighted a few other points.

One is lead time. This LiDAR went from project kickoff to installation in the car in less than two years. Two years may sound long, but in the automotive industry the process involves development by the parts supplier, integration by the vehicle manufacturer, and validation, and it often takes three to four years or even longer.

Another is the drag coefficient. The ET7 mounts its LiDAR in a protruding roof “watchtower,” yet the car still achieves an ultra-low drag coefficient of 0.208. This is noteworthy, since many models without LiDAR or an external sensor tower have a much higher drag coefficient than the ET7.

This was possible because the LiDAR was developed jointly by NIO and Innovusion.

A LiDAR can be roughly divided into parts such as the optics, the circuitry, and the exterior. NIO is stronger in circuitry and exterior design, so it led development in those areas. As a result, where to mount the LiDAR and how best to integrate it with the vehicle body were considered from the very beginning of the design.

With all this, NIO wants to convey that this is an advanced and stable LiDAR and, most importantly, one that can be trusted.

My biggest regret about the ET7 is the wait. At present, the ET7’s LiDAR serves ACC and LCC but has yet to be applied in other anticipated scenarios such as NOP+, so we may have to wait a little longer. NIO expects to start delivering NOP+ in Q3.

I am also particularly concerned about interference.

Many Tesla owners may have experienced this: when following another Tesla, the distance alert on the screen may be triggered by interference between the two cars’ radars, which operate in similar bands.

Fortunately, such close following usually happens at low speeds, so it poses little danger beyond being an annoying distraction.

LiDAR is different, however. It works over much greater distances and is closely tied to the operation of autonomous driving systems. Will interference occur once there are many LiDAR-equipped cars on the road?

Bai Jian’s answer is no.

“Our LiDAR has a certain encoding capability. Every pulse sent out and received back carries a unique ID that we can recognize.”
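To illustrate how per-pulse IDs could reject another car’s light, here is a hypothetical Python sketch. Real LiDARs encode pulses physically, for example through pulse timing patterns, and NIO has not published its scheme. The sketch abstracts that encoding into an integer tag. Each emitted pulse gets a random tag, and an echo is turned into a range only if its tag matches a pulse this sensor actually sent. The class and method names are invented for illustration.

```python
import random
from dataclasses import dataclass, field
from typing import Dict, Optional

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


@dataclass
class CodedPulseTracker:
    """Hypothetical sketch of ID-matched pulses.

    Each outgoing pulse is tagged with a random 32-bit ID (standing in for a
    physical pulse code). An echo is accepted only if its ID matches a pulse
    this sensor emitted and has not yet matched.
    """
    rng: random.Random = field(default_factory=random.Random)
    pending: Dict[int, float] = field(default_factory=dict)  # pulse ID -> emit time (s)

    def emit(self, t_emit_s: float) -> int:
        pulse_id = self.rng.getrandbits(32)
        while pulse_id in self.pending:  # avoid reusing an ID still in flight
            pulse_id = self.rng.getrandbits(32)
        self.pending[pulse_id] = t_emit_s
        return pulse_id

    def receive(self, pulse_id: int, t_receive_s: float) -> Optional[float]:
        """Return the range in meters for a matching echo, or None if the
        pulse is unknown (for example, light from another car's LiDAR)."""
        t_emit = self.pending.pop(pulse_id, None)
        if t_emit is None:
            return None
        return SPEED_OF_LIGHT_M_PER_S * (t_receive_s - t_emit) / 2.0


if __name__ == "__main__":
    ours = CodedPulseTracker(rng=random.Random(0))
    theirs = CodedPulseTracker(rng=random.Random(1))
    round_trip_s = 2 * 100.0 / SPEED_OF_LIGHT_M_PER_S  # echo from 100 m away
    own_id = ours.emit(0.0)
    foreign_id = theirs.emit(0.0)
    print(ours.receive(foreign_id, round_trip_s))  # rejected: not our pulse
    print(ours.receive(own_id, round_trip_s))      # accepted: about 100 m
```

With 32-bit random tags, an unrelated echo matching a pulse still in flight is very unlikely, which mirrors the idea that a recognizable code lets each car ignore everyone else’s lasers.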

In other words, NIO says you can use LiDAR on the road with confidence.

The naughty kids who played with laser pens have grown up, and their pens have been replaced by LiDAR mounted on the car roof. Today, I can shine a laser at the people around me while driving down the road, and no one will give me a beating for it.

Thanks to technology, thanks to NIO.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.