Author: Michelin

Not long ago, when the metaverse concept was all the rage, the folks in the GeekCar office sighed, “When will we ever get to attend a car company’s metaverse press conference, with virtual avatars of the media sitting in the audience…”

We didn’t have to wait long for such a press conference in the metaverse.

On June 8, at ROBODAY, Jidu’s first brand launch event, the company’s first concept car, the ROBO-01, made its debut. This metaverse press conference was thorough: a virtual setting, virtual speakers, a virtual audience, and a virtual concept car. Even setting aside the fact that the format was a necessity under current circumstances, a metaverse press conference fits well with Jidu’s constant emphasis on the “car robot” concept.

As the first concept car by Jidu, what sets the ROBO-01 car robot apart from the rest?

What is a car robot?

How long does it take to go from establishing a new brand to debuting a concept car? According to Jidu, 463 days. Every milestone cited at the press conference was counted in days: Jidu was registered on March 2, 2021; the first media communications meeting was held 100 days later; the clay model was unveiled after 110 days; and intelligent driving development on the simulated car began after 216 days.
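
The day counts above can be turned back into calendar dates with a few lines of Python. This is only a sketch: the article does not state the year of the June 8 launch, so `ROBODAY` below assumes 2022, and the milestone labels are paraphrased rather than official names.

```python
from datetime import date, timedelta

# Registration date as stated in the article.
REGISTERED = date(2021, 3, 2)
# Assumption: the June 8 launch took place in 2022 (the article gives no year).
ROBODAY = date(2022, 6, 8)

# Day offsets quoted at the press conference (labels are paraphrased).
MILESTONES = {
    "first media communications meeting": 100,
    "clay model unveiled": 110,
    "intelligent driving development on the simulated car": 216,
}


def milestone_date(offset_days: int) -> date:
    """Return the calendar date that falls offset_days after registration."""
    return REGISTERED + timedelta(days=offset_days)


def days_to_debut() -> int:
    """Return the number of days from registration to the concept-car debut."""
    return (ROBODAY - REGISTERED).days


if __name__ == "__main__":
    for label, offset in MILESTONES.items():
        print(f"day {offset:>3}: {milestone_date(offset).isoformat()}  {label}")
    print(f"day {days_to_debut()}: {ROBODAY.isoformat()}  ROBO-01 debut")
```

Under that assumption, the span from registration to debut comes out to 463 days, matching the figure Jidu quoted.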

Is 2021 too late to start building cars? Not too late, but time is definitely tight. That is why the ROBO-01 is not a concept car in the traditional sense. It is closer to a preview that locks in the design of Jidu’s upcoming production car. Jidu CEO Xia Yiping also announced that the production car will be up to 90% similar to the concept car and will debut in the fall, just a few months from now.

In the ROBO-01, we can see not only Jidu’s design philosophy but also the production car that is soon to come.

As Jidu’s first concept car, the ROBO-01 carries a label that sets it apart from its peers: the car robot. According to Jidu, once a vehicle has an AI brain, it becomes a robot and takes on attributes beyond those of a car, such as futuristic design, robot-like AI perception, and the empathy to communicate with people.

These three concepts may seem abstract, so let’s take a closer look at what they really mean.

In terms of futuristic aesthetics, the ROBO-01 brings to life the way science fiction films portray future vehicles: minimalist, with nothing superfluous. It not only eliminates the door handles and adopts seamless side windows, but also features a completely buttonless interior. Buttonless design is hardly unusual in smart car interiors today, with touchscreens and voice recognition replacing physical buttons; it is practically standard practice for intelligent cars. Even so, removing all physical controls, including door handles, gear levers, and steering wheel knobs, is still a daring move. Whether such a minimalist design survives into production will only be known once the production car is released.

When it comes to robots, the first thing that comes to mind is Transformers, which is probably everyone’s first impression of a robot. Simply put, the “robotization” of the ROBO-01 lies in its ability to transform actively. Take the dual-lidar layout that recently sparked a debate between He Xiaopeng and Li Xiang: Jidu mounts its two lidars on the front hood in a retractable, pop-up-headlight style to balance dual-lidar perception performance, the overall look of the vehicle, and the safety requirements of mass production. When the vehicle detects an imminent collision, the lidars retract automatically. Similarly, the wing-like ROBOWing can transform on its own, rising and lowering according to vehicle speed and wind direction. In autonomous driving mode, the steering wheel can fold away and hide automatically, and steer-by-wire technology lets the U-shaped steering wheel vary its steering ratio.

The vehicle’s empathy is expressed through an AI “light language” on the exterior. Based on its perception of the surroundings, the system conveys the results of its “thinking” through this light language. Users inside the car, however, can neither see nor touch the feature. Is it meant to open up a new social dimension for cars?

(Introverted people, and introverted cars, would like to state that they do not want to say hello on the road.)

Backed by Apollo, “Plug and Play” Intelligent Driving

Since Jidu is the brand Baidu itself set up to get into car manufacturing, what people expect most from it is probably intelligent driving.

A technology like autonomous driving needs real-road testing across many scenarios to drive algorithm upgrades and iterations, and large volumes of test data to keep improving the algorithms.

Among domestic players in autonomous driving, few have accumulated as much as Baidu, with 27 million kilometers of autonomous driving test mileage and a large Robotaxi fleet testing on real roads. Jidu is the only brand in the industry that fully applies Apollo’s “unmanned” autonomous driving capabilities and safety systems, so the scenarios and data Baidu has accumulated with L4 Robotaxis, along with its experience working with automakers on L2 intelligent driving, will all be applied to Jidu, its “offspring.”

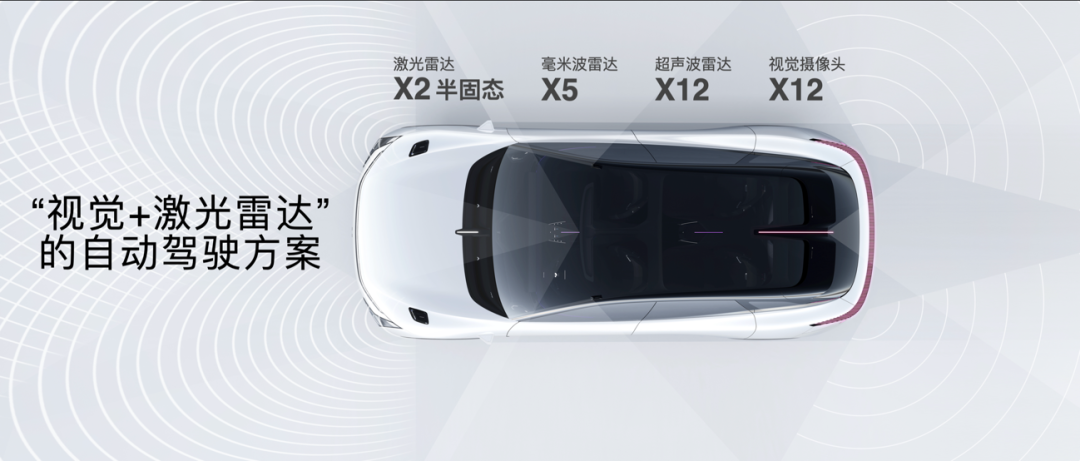

To work with Apollo’s algorithms, Jidu’s intelligent driving system is equipped with dual Nvidia Orin X chips, and the vehicle carries 31 external sensors: 2 lidars, 5 millimeter-wave radars, 12 ultrasonic radars, and 12 cameras.
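
As a quick sanity check on the sensor list, the per-type counts can be tallied in Python. The counts come straight from the article; the dictionary keys and the helper name `total_sensors` are made up for this illustration.

```python
# External sensor counts as listed in the article (key names are illustrative).
EXTERNAL_SENSORS = {
    "lidar": 2,
    "millimeter_wave_radar": 5,
    "ultrasonic_radar": 12,
    "camera": 12,
}


def total_sensors(counts: dict[str, int]) -> int:
    """Sum the per-type sensor counts into a vehicle-wide total."""
    return sum(counts.values())


if __name__ == "__main__":
    print(total_sensors(EXTERNAL_SENSORS))
```

The four categories add up to the 31 sensors Jidu quoted.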

With this combination of hardware and software, Jidu’s autonomous driving system can achieve “three-domain integration” across highway, urban, and parking scenarios, enabling point-to-point advanced autonomous driving. According to the release, Jidu has already implemented functions such as unprotected left turns, traffic light recognition, obstacle avoidance, and autonomous entry to and exit from ramps. Once the product is launched and delivered, users are promised a “plug and play” experience.

However, applying L4 autonomous driving technology to L2+ production intelligent driving is not as simple as a “dimension-reduction strike,” that is, an effortless win from a higher level. When scenarios and data gathered from dozens or hundreds of test vehicles under limited conditions are carried over to production vehicles, whose operating conditions are dozens or even hundreds of times more complex and less controllable, will the system really be as easy to use as envisaged?

Left Hand 8295, Right Hand Nvidia

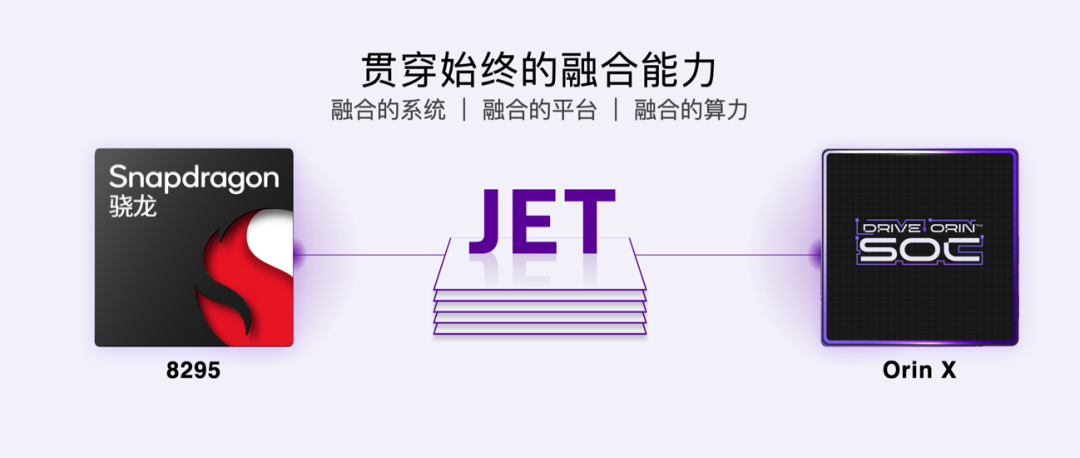

For intelligent driving, algorithms, data, and software capability are undoubtedly important, but the underlying architecture, chips, and computing power are just as indispensable. Over the past year, chips and computing power have been hot topics in the intelligent vehicle field, and Qualcomm’s 8155 and Nvidia’s Orin X have become selling points for new cars.

On the ROBO-01, Jidu adopts a “dual top-level chip” architecture that integrates the Orin X and the Qualcomm SA8295 into JET, its high-level intelligent driving architecture, bringing the vehicle’s AI computing power to 538 TOPS. This is also the first appearance of the 5nm Qualcomm SA8295 SoC in a domestic model.
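
The article does not break down the 538 TOPS figure. One plausible reading, sketched below, is two Orin X chips plus one SA8295. The per-chip ratings used here (254 TOPS and 30 TOPS) are commonly cited vendor figures, not numbers from the article, so treat them as assumptions.

```python
# Assumption: commonly cited per-chip AI ratings, NOT figures from the article.
ORIN_X_TOPS = 254   # Nvidia Orin X, per chip
SA8295_TOPS = 30    # Qualcomm SA8295

# The article mentions dual Orin X chips; one SA8295 is assumed here.
ORIN_X_COUNT = 2
SA8295_COUNT = 1


def vehicle_ai_tops() -> int:
    """Add up the assumed per-chip ratings across all chips in the vehicle."""
    return ORIN_X_COUNT * ORIN_X_TOPS + SA8295_COUNT * SA8295_TOPS


if __name__ == "__main__":
    print(f"{vehicle_ai_tops()} TOPS")
```

With these assumed ratings the total matches the 538 TOPS quoted at the launch, which suggests the headline number sums the cockpit and driving chips together.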

Backed by the dual top-level chips, the JET architecture can fuse the cockpit and intelligent driving domains so that they share computing power, perception, and services. The dual-chip, high-compute architecture can also support stronger dual-system perception: the retractable dual lidars with their 180° FOV and the perception cameras at the front of the vehicle form independent lidar and vision perception schemes, giving the intelligent driving system redundancy.

Beyond meeting the needs of the intelligent driving perception system, the computing power of the dual top-level chips shows most clearly in the cabin, where it is enough to drive a variety of dynamic 3D interaction modes. For example, while intelligent driving is active, the intelligent driving domain controller can support AI interaction with the cabin’s 3D human-machine co-driving map, which merges static navigation map data with dynamic perception data.

On the subject of human-machine co-driving maps: navigation itself depends on accurate coordinate data, and whether the display is 2D or 3D mostly affects how immersive the navigation feels to the driver. So how the interaction with a 3D human-machine co-driving map is actually achieved has been a puzzle for me. Judging from what was shown at the press conference, it looks more like AR 3D navigation from a third-person perspective, or a navigation effect achieved in combination with the HUD.

The intelligent cabin with “human-like sensing”

Since Tesla ushered in the era of the minimalist cabin, wide continuous screens and button-free designs have become more and more popular in intelligent cabins. Yet even though minimalist cabins are now commonplace, the ROBO-01’s cabin is still surprisingly “minimal.” It removes not only the physical buttons but also the directional control keys, levers, and door handles, and its steering wheel can fold away on its own.

With the physical controls gone, preserving the user experience naturally puts the car’s voice interaction and proactive interaction to the test.

The Jidu smart cockpit features an integrated wide-screen design, with basic functions including fully offline voice, millisecond-level response, the 3D human-machine co-driving map, and full-scenario interaction inside and outside the car. The intelligent voice system responds within milliseconds and covers scenarios both inside and outside the car, while its fully offline operation removes any dependence on network signal. It also offers “human-like” interaction through multi-modal fusion of visual perception, voiceprint recognition, and lip reading, and hand gestures stand in for the physical control keys. There seems to be hope that voice, gaze, voiceprint, and even lip reading can make up for the missing buttons.

My colleague at GeekCar, Mr. Yu, previously discussed whether physical buttons are necessary in the cockpit in the article “Are Curve Innovations or Overcorrection?”. For those of us who actually use the cockpit, the key to removing physical buttons entirely is whether the other forms of interaction are good enough that we stop reaching for them. For Jidu, if it can make everyone forget that “there are no physical buttons,” that counts as a success.

Finally, what stage has the race for intelligent cars reached? Some say it has just begun, while others say it is past the halfway mark. According to Jidu CEO Xia Yiping, intelligent cars have now entered the “Automotive 3.0” stage, in which “the starting point of the era of change is the transfer of ‘driving rights’ from human to AI, and AI drives the evolution of cars.” This means vehicles must be sufficiently intelligent: intelligent driving capabilities, proactive interaction capabilities… Intelligence is clearly the ROBO-01’s headline feature, with upgraded intelligent hardware and architecture as well as bold experiments in interaction. It remains to be seen whether the concept car Jidu unveiled in the metaverse can stand out once it goes into mass production.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.